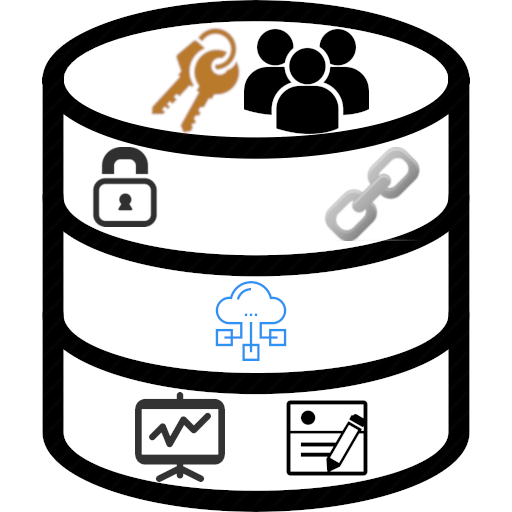

DB is not only data storage

Using the database only for data storage is the same as calling the Unix interface for working with files. Therefore, I want to remind you about the well-known and not-so-functioning database functions that I would like to see more often in combat web applications.

Using the database only for data storage is the same as calling the Unix interface for working with files. Therefore, I want to remind you about the well-known and not-so-functioning database functions that I would like to see more often in combat web applications.

tl; dr

Below will be about authentication, users, access rights, data integrity, FDW, logging and statistics. Nothing new.

Notes

- I will keep in mind Ruby on Rails and Postgres, but most of the mentions are well portable to other languages and DBMS.

- I will not say anything new, all this has long been described in the documentation and articles. I just want to remind once again about the tools and where they can be applied to make life a little better.

Peer / ident authentication

Absolutely health thing, which almost no one uses. She maps user Unix user DB. In the first case, it maps the local user, and in the second, the remote user. The profit is that you can throw out the host, username and password from the config (and the database name can be thrown out), but everything will work as before. Plus, it will be more convenient to go to the console for direct debug (just psql from the terminal instead of all these -h -U -W -d, etc.).

PG documentation about peer and ident .

Nuances: suitable, if you have not only root and superuser on the server; in the case of ident, you control the network, the hardware and you are sure that there are no masters and saboteurs there.

Examples of using

Security. You can not drag off the password from the database and connect to it from the local environment or from somewhere else. There is no password and there is nothing to drag away.

Access control. If there are several access roles on a production or another server and they are already divided at the unix level, then it is convenient to attach database users to them. In this case, the same code base will connect under different database users. For example, tech support and developers climb into the same rails console, but for some it is readonly, and for the second it is full-fledged.

Access rights

In Unix, everyone thinks about them and to work as root or 'chmod 777'look at it very awkwardly. But in the database everything is somehow different. Superuser and go. Although there is no less (and maybe even more) cool.

There is a hierarchy of role inheritance (a bit like a group in Unix), there are different levels of access: to specific objects (like file access rights), to specific operators (like the rules in sudoers), even to specific strings . In short, everything is there. Use.

Application areas

In the minimal version, together with the above-mentioned peer / ident, it is possible to separate the user for migrations / deployments and the user for the daily work of the application. It, at least, will save from a call DDL in. Of course, there are many cases of modifying the database structure "to hot". This and zero-downtime deploy, and different hotfixes, and rebildy indexes with concurency (and sometimes without). But, in general, the DDL application should not do.

Another option: if you have “microservices”, but, for some reason, they use the same database, then explicitly sharing access to the database objects is a very good idea. After all, interaction interfaces should be as localized as possible, and anarchic access to all data contributes to the erosion of logic and responsibility.

Integrity constraint

Rails 5 has at least started working with reference and data integrity. But, in general, many developers believe that validation in the model or its surroundings is sufficient to maintain a consistent state of the data. Alas, this is not the case.

Validations can be skipped, you can go directly to the database and use sql-i, you can nakosyachit during migration. In general, a lot of things can be done. Therefore, everything that business logic relies on must be nailed by the constraints in the database. This is the only way to preserve the integrity of the data and not get "surprises" at the next Deploy.

Foreign data wrapper

This is about connecting one database to another database in order to access the remote tables as if they were their own. The main profit is that the web application is not involved here at all, but there are a lot of optimizations, when two identical databases work (in general, there are pushdowns for different adapters, but everything is difficult, therefore it is easier to assume that the PG-PG bundle works well, and everything else - as it will).

Using FDW

Instead of configs with the coordinates of several databases in a web application, it is incomparably easier to leave one connection to the database and resolve everything at the level of the database itself. In the same place, by itself, the question of access rights and choice of objects to which access is needed will be resolved.

Plus, in the future, you can replace the external table with a materialized view or just a table, but do not change anything in the web application.

And yet, you can connect to the exotic type of MS Access and the problems with restrictions on the use of reports in the models disappear. After all, if you have 2+ connections, then you will not make a join of two databases at the web application level, although ORM (in particular, ActiveRecord) will honestly try to do it ... and fall off. And at the database level this can be done, in some cases, almost without an overhead projector.

Logging

About him, almost everyone knows and everyone uses. But just in case, let me remind you: do not hesitate to log long requests. Out of the box, in PG, it is off. Need to poke log_min_duration_statement. With regards to its value, there are many holivars and, perhaps, they stumble me, but first, put a couple of seconds. Since if you have a large application, you hardly read it and you know what to do, and if it is small, then you have no time to deal with small brakes and only fatal things bother you.

Just remember about N + 1. The DB will not tell you anything about it. Use third-party tools. For example, bullet and common sense.

Statistics

We must remember that she is and that she can die. At first, everything is fine. But over time, usually, the following is obtained: the rate of change of data is approximately the same, and the size of the table is increasing. Consequently, the vacuum / analyze table starts to happen less and less, and at some point the scheduler starts to miss. At best, the request falls into the above mentioned logging, at worst - you just suffer and do not understand why. In general, look at pg_stat_user_tablesand correlate the vacuum / analysis dates with the load on the tables.

And sometimes you can use statistics for approximate count. It rarely comes in handy, but it’s pretty accurate, because PG is not Oracle and countis not executed for O (1) for the whole table, although I really want to.

the end

Thanks for reading. If not difficult, answer the question below. In the light of a recent article about GQL instead of SQL, he began to worry me especially strongly.

Only registered users can participate in the survey. Sign in , please.