GAN taught to create faces with realistic texture and geometry

Hi, Habr! Here is a translation of the article "Facial Surface and Texture Synthesis via GAN".

When researchers have a lack of real data, they often resort to augmentation of data as a way to expand the existing data. The idea is to modify the existing training data in such a way as to leave the semantic properties intact. Not such a trivial task when it comes to human faces.

The method of generating faces should take into account such complex data transformations as

while creating realistic images that correlate with the statistics of real data.

Consider how state-of-art methods attempt to solve this problem.

Generative-competitive neural networks (GAN) show their effectiveness in making synthetic data more realistic. Taking the input to the synthesized data, the GAN produces patterns that are more like real data . However, the semantic properties can be changed, and even the loss function, which penalizes changing the parameters, does not completely solve the problem.

3D Morphable Model (3DMM) is the most common method for representing and synthesizing geometry and textures and was originally introduced in the context of generating three-dimensional human faces. According to this model, the geometric structure and textures of a human face can be linearly approximated, as a combination of root vectors.

Recently,The 3DMM model was combined with convolutional neural networks for data augmentation. However, the samples obtained are too smooth and unrealistic, as can be seen in the picture below:

Persons obtained using 3DMM

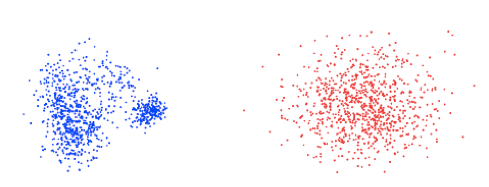

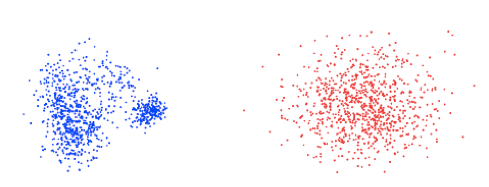

Moreover, 3DMM generates data based on Gaussian distribution, which rarely reflects the actual distribution of data. For example, the following shows two PCA (principal component analysis) coefficients constructed by real persons and synthesized using 3DMM. The difference between synthetic and real distribution can easily lead to the generation of incorrect data.

The first two PCA coefficients for real (left) and 3DMM generated (right) faces

Slossberg, Shamay and Kimmel from the Technion - Israel Institute of Technology offer a new approach to the synthesis of realistic human faces using a combination of 3DMM and GAN.

In particular, researchers use GAN to simulate the space of parametrized human textures and create corresponding face geometries, calculating the best 3DMM coefficients for each texture. The generated textures are mapped to the corresponding geometries for new high resolution 3D faces.

This architecture generates realistic images, with:

Let's take a closer look at the data generation process.

Data preparation

Pipeline data generation consists of four main steps:

Flat aligned facial textures

The next step is to train the GAN to create imitations of aligned textures. For this task, the researchers used a progressive GAN with a generator and a discriminator, organized as a symmetrical neural network. In such an implementation, the generator progressively increases the size of the feature map until it reaches the size of the output image, while the discriminator gradually reduces the size back to a single output.

Face textures obtained by GAN

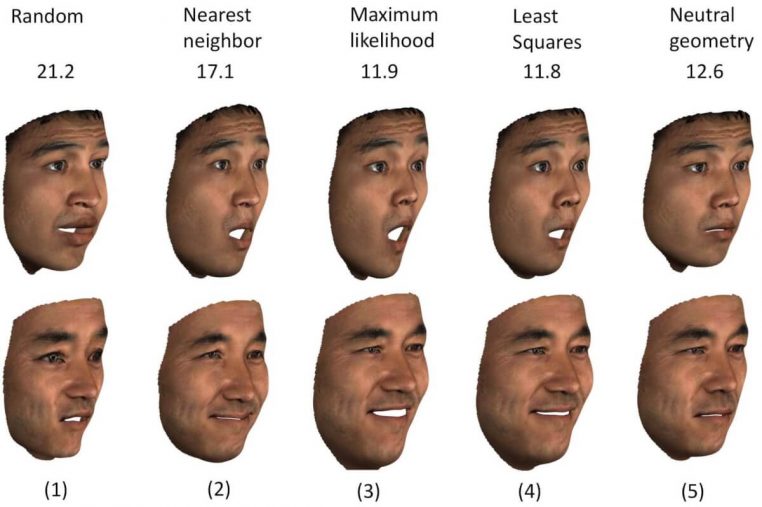

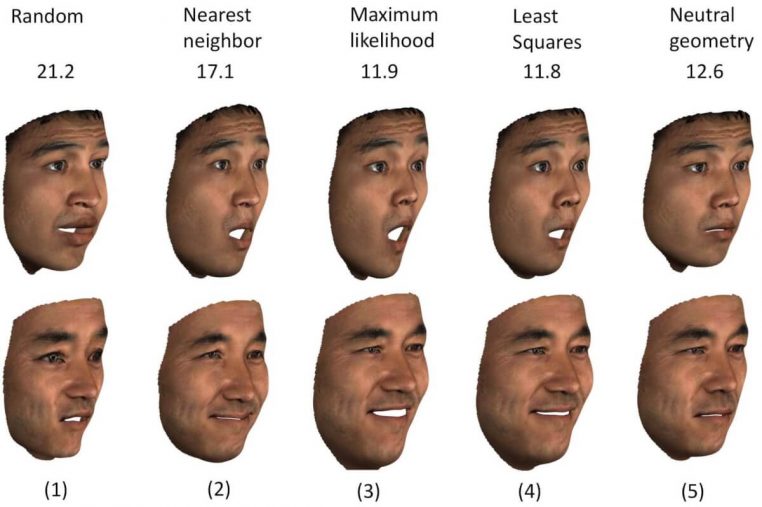

The last step is to create face geometry. The researchers tried different approaches to find the correct geometry coefficients for the texture. Qualitative and quantitative comparison of different methods below (L2 geometric error):

Two synthesized textures superimposed on different geometries.

Unexpectedly, the smallest squares method shows the best results. Considering the simplicity of the method, it was chosen for all experiments.

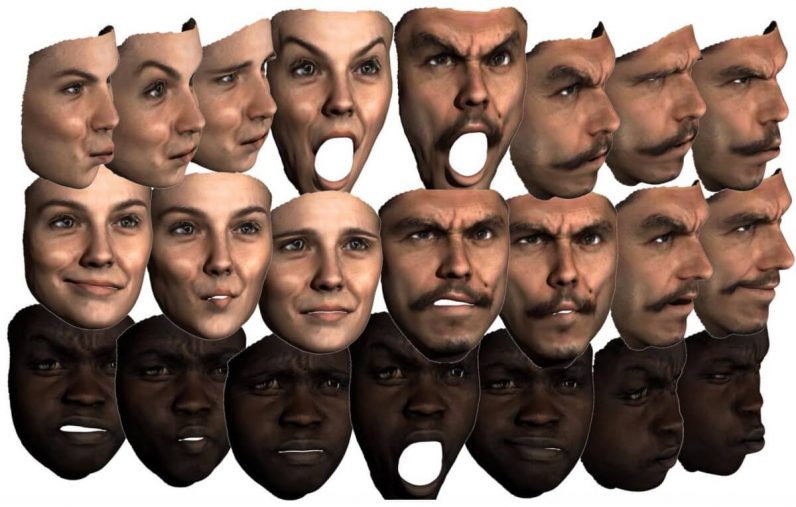

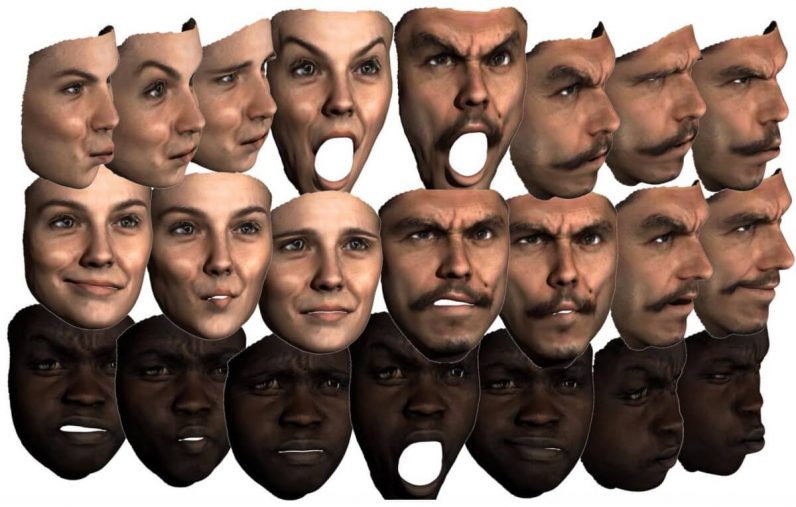

The proposed method can generate many new faces, and each of them can be represented in various poses, with different expressions and lighting. Various facial expressions are added to neutral geometry using the Blend Shape model. The resulting images are shown below:

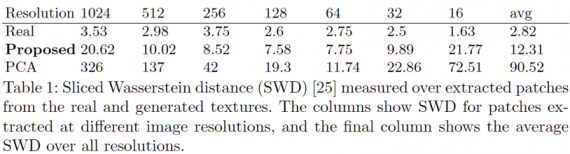

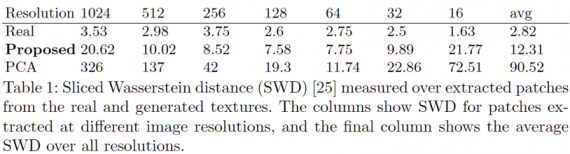

For quantitative assessments, researchers used a truncated Wasserstein metric (SWD) to measure the distance between the training and generated image distributions.

The table shows that the resulting textures are statistically closer to real data than those obtained using 3DMM.

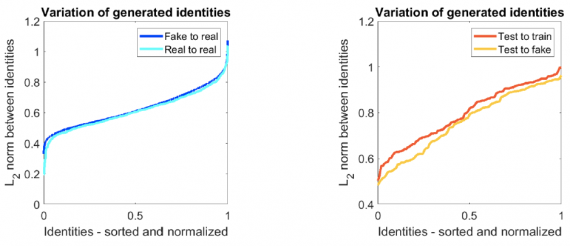

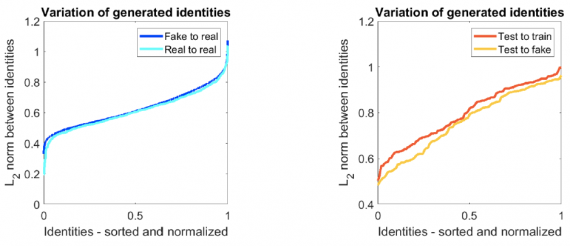

The following experiment evaluates the ability to synthesize images, which are significantly different from the training dataset, and to obtain previously unseen images. Thus, 5% of individuals were not included in the assessment. The researchers measured L2 distance between each real person from the training data and the most similar of the generated ones, and similarly for the real one from the training dataset.

The distance between the synthesized and real persons

As can be seen from the graphs, the test data is closer to the generated images than to the training. Moreover, the “Test to fake” distance is not too different from “Fake to real”. From this it follows that the samples obtained are not just synthesized faces, similar to a training sample, but completely new faces.

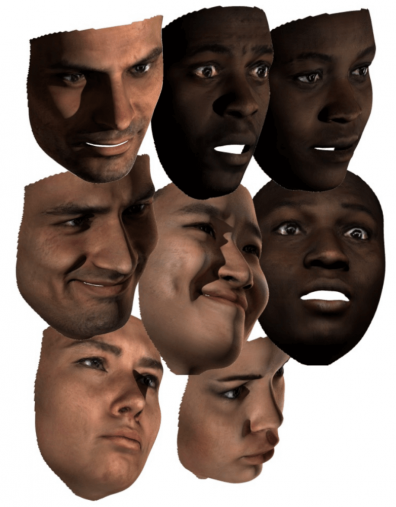

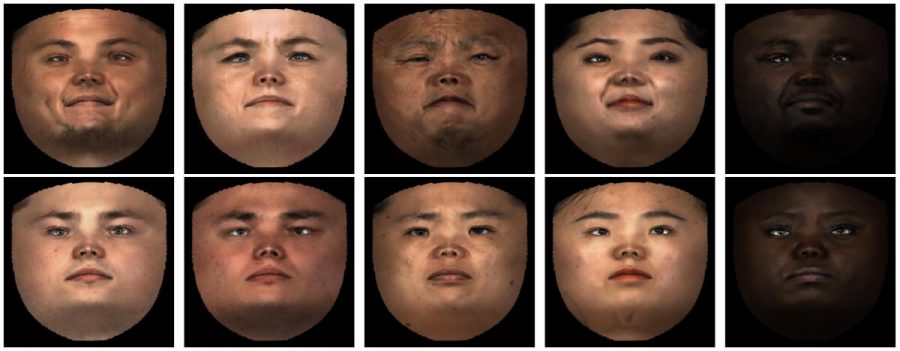

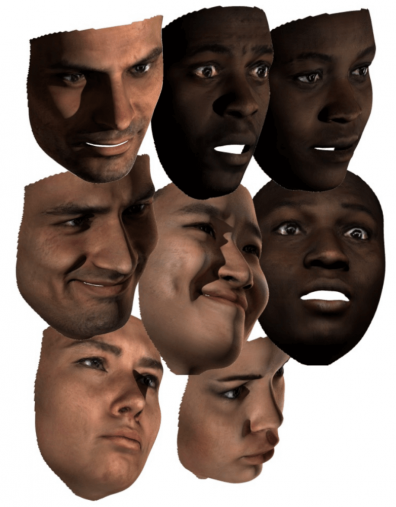

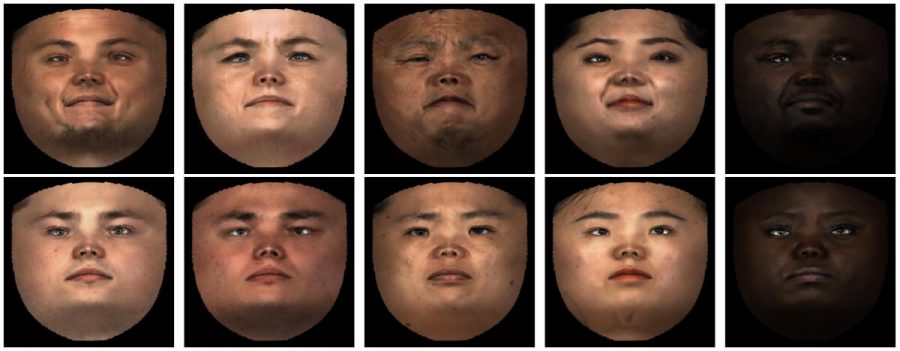

Finally, to verify the possibility of generating the initial dataset, a qualitative assessment was made: the face textures obtained by this model were compared with their closest neighbor in the L2 metric.

The synthesized textures (above) versus the nearest real “neighbors” (below)

As you can see, the nearest real textures are quite different from the original ones, which allows us to conclude about the ability to generate new faces.

The proposed model is probably the first one that can realistically synthesize both the texture and the geometry of human faces. This can be useful for the detection and recognition of faces or models of facial reconstruction. In addition, it can be used in cases where many different realistic faces are required, for example, in the film industry or computer games. Moreover, this structure is not limited to the synthesis of human faces, but can actually be used for other classes of objects where augmentation of data is possible.

Original

Translated - Stanislav Litvinov.

When researchers lack real data, they often resort to data augmentation to expand the dataset they already have. The idea is to modify the existing training data while leaving its semantic properties intact. That is no trivial task when it comes to human faces.

A face generation method has to account for complex data transformations such as

- pose,

- lighting,

- non-rigid deformations,

while producing realistic images that match the statistics of real data.

Let's look at how state-of-the-art methods approach this problem.

Modern approaches to face generation

Generative adversarial networks (GANs) have proven effective at making synthetic data more realistic. Given synthesized data as input, a GAN produces samples that look more like real data. However, the semantic properties can change in the process, and even a loss function that penalizes changes to the parameters does not completely solve the problem.

The 3D Morphable Model (3DMM) is the most common method for representing and synthesizing geometry and texture, and it was originally introduced in the context of generating three-dimensional human faces. According to this model, the geometry and the texture of a human face can each be approximated linearly, as a combination of basis vectors.
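To make the linear approximation concrete, here is a minimal sketch of how a shape (or a texture) is assembled from a mean and a set of basis vectors. The function name `synthesize`, the toy four-number "face", and the two basis vectors are all made up for illustration; a real model uses thousands of vertices and a basis learned from scans.

```python
from typing import List

Vector = List[float]


def synthesize(mean: Vector, basis: List[Vector], coeffs: Vector) -> Vector:
    """Return mean + sum_i coeffs[i] * basis[i], the linear 3DMM-style combination."""
    if len(basis) != len(coeffs):
        raise ValueError("need exactly one coefficient per basis vector")
    result = list(mean)
    for weight, direction in zip(coeffs, basis):
        for k, value in enumerate(direction):
            result[k] += weight * value
    return result


# Toy "face": two 2D vertices flattened into [x0, y0, x1, y1] (hypothetical data).
mean_shape = [0.0, 0.0, 1.0, 0.0]
shape_basis = [
    [1.0, 0.0, 1.0, 0.0],  # hypothetical mode 1: shifts both vertices along x
    [0.0, 0.0, 0.0, 1.0],  # hypothetical mode 2: moves the second vertex along y
]

new_shape = synthesize(mean_shape, shape_basis, [0.5, -2.0])
print(new_shape)
```

Changing the two coefficients is the only way to get a new face out of such a model, which is why the choice of basis and of coefficient distribution matters so much.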

Recently, the 3DMM was combined with convolutional neural networks for data augmentation. However, the resulting samples are too smooth and unrealistic, as the picture below shows:

Persons obtained using 3DMM

Moreover, 3DMM generates data from a Gaussian distribution, which rarely reflects the actual distribution of the data. For example, the plot below shows the first two PCA (principal component analysis) coefficients computed for real faces and for faces synthesized using 3DMM. The gap between the synthetic and the real distribution can easily lead to implausible samples.
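As a rough illustration of what "generating from a Gaussian" means here, the sketch below draws PCA coefficients independently from zero-mean normal distributions. The standard deviations `3.0` and `1.0` are invented for the example, not taken from the article. Samples drawn this way always form a symmetric, axis-aligned blob, whatever shape the coefficients of real faces actually take.

```python
import math
import random


def sample_coefficients(stddevs, rng):
    """Draw one coefficient per PCA component from a zero-mean Gaussian."""
    return [rng.gauss(0.0, s) for s in stddevs]


def column_std(rows, index):
    """Empirical standard deviation of one coefficient across all samples."""
    values = [row[index] for row in rows]
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


rng = random.Random(0)   # fixed seed so the run is repeatable
stddevs = [3.0, 1.0]     # hypothetical spreads of the first two components
samples = [sample_coefficients(stddevs, rng) for _ in range(5000)]

# The empirical spreads land close to the values we asked for.
print(column_std(samples, 0), column_std(samples, 1))
```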

The first two PCA coefficients for real (left) and 3DMM generated (right) faces

State-of-the-art idea

Slossberg, Shamai and Kimmel from the Technion - Israel Institute of Technology propose a new approach to synthesizing realistic human faces that combines 3DMM and GAN.

In particular, the researchers use a GAN to model the space of parametrized human face textures, and they create the corresponding face geometry by computing the best 3DMM coefficients for each texture. The generated textures are then mapped onto the corresponding geometries to produce new high-resolution 3D faces.

This architecture generates realistic images, and it:

- does not suffer from a loss of control over attributes such as pose and lighting;

- is not limited in the number of new faces it can generate.

Let's take a closer look at the data generation process.

Data generation process

Data preparation

The data preparation pipeline consists of four main steps:

- Data collection: the researchers collected more than 5,000 face scans of people from different ethnic, gender, and age groups. Each participant was asked to make 5 different facial expressions, including a neutral one.

- Landmark annotation: 43 landmarks are added to the meshes semi-automatically, by rendering the face and running a pre-trained facial landmark detector.

- Mesh alignment: a template face mesh is deformed to fit the geometry of each scan, guided by the annotated landmarks.

- Texture transfer: the texture is transferred from the scan to the template using the ray casting technique built into the Blender toolbox. After that, the texture is mapped from the template onto a two-dimensional plane using a predefined universal transformation.

Flat aligned facial textures

The next step is to train the GAN to generate imitations of the aligned textures. For this task, the researchers used a progressive GAN whose generator and discriminator are built as mirror images of each other. In this design, the generator progressively increases the size of the feature map until it reaches the size of the output image, while the discriminator gradually reduces the size back down to a single output.
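The mirrored structure is easy to see if we just list the feature-map sizes. The sketch below assumes the map doubles at every stage, starting from 4x4 and ending at 1024x1024; these numbers come from the usual progressive GAN setup, and the article does not say which resolution the authors used. The helper name `generator_resolutions` is made up.

```python
def generator_resolutions(start: int, final: int) -> list:
    """Feature-map sizes visited when the resolution doubles until it reaches `final`."""
    if start <= 0 or final < start:
        raise ValueError("expected 0 < start <= final")
    sizes = [start]
    while sizes[-1] < final:
        sizes.append(sizes[-1] * 2)
    if sizes[-1] != final:
        raise ValueError("final must equal start times a power of two")
    return sizes


# Assumed resolutions: 4x4 up to 1024x1024.
generator_sizes = generator_resolutions(4, 1024)

# The discriminator walks the same sizes in reverse; after the smallest map,
# a final layer would collapse the features into a single real/fake score.
discriminator_sizes = list(reversed(generator_sizes))

print(generator_sizes)
print(discriminator_sizes)
```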

Face textures obtained by GAN

The last step is to create the face geometry. The researchers tried different approaches to finding the right geometry coefficients for a given texture. A qualitative and quantitative comparison of the methods (L2 geometric error) is shown below:

Two synthesized textures superimposed on different geometries.

Unexpectedly, the smallest squares method shows the best results. Considering the simplicity of the method, it was chosen for all experiments.
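The article does not spell out how the least squares fit is set up. One plausible reading, and it is only an assumption, is a linear map from texture coefficients to geometry coefficients, fitted on faces for which both are known. The sketch below solves the normal equations in plain Python on invented data; the helper names and the toy numbers are hypothetical.

```python
def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]


def solve(a, b):
    """Solve a @ x = b for x by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [list(a[i]) + list(b[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("normal equations are singular; add more samples")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_linear_map(texture_coeffs, geometry_coeffs):
    """Least-squares W that minimizes ||texture_coeffs @ W - geometry_coeffs||^2."""
    xt = transpose(texture_coeffs)
    return solve(matmul(xt, texture_coeffs), matmul(xt, geometry_coeffs))


# Invented training pairs: 4 faces, 2 texture and 2 geometry coefficients each.
texture_coeffs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
true_map = [[2.0, -1.0], [0.5, 3.0]]
geometry_coeffs = matmul(texture_coeffs, true_map)

fitted_map = fit_linear_map(texture_coeffs, geometry_coeffs)

# Predict geometry coefficients for a new, GAN-generated texture (made-up values).
predicted = matmul([[3.0, 2.0]], fitted_map)[0]
print(fitted_map, predicted)
```

With real data the relationship is noisy rather than exact, so the fitted map only gives the best linear guess of the geometry for each texture.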

Results

The proposed method can generate many new faces, and each of them can be represented in various poses, with different expressions and lighting. Various facial expressions are added to neutral geometry using the Blend Shape model. The resulting images are shown below:
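A blend shape model, in its simplest form, stores each expression as per-vertex offsets from the neutral face and adds them with adjustable weights. The sketch below shows that idea on a toy mesh. The expression names, the offsets, and the weights are all invented, and the article does not describe the specific blend shape model the authors used.

```python
def apply_blend_shapes(neutral, deltas, weights):
    """Return neutral + sum over expressions of weight * offset, per coordinate."""
    result = list(neutral)
    for name, weight in weights.items():
        for i, offset in enumerate(deltas[name]):
            result[i] += weight * offset
    return result


# Toy neutral face: two 2D vertices flattened into [x0, y0, x1, y1].
neutral = [0.0, 0.0, 1.0, 0.0]

# Hypothetical expression offsets relative to the neutral face.
deltas = {
    "smile": [0.0, 0.25, 0.0, 0.25],
    "jaw_open": [0.0, -0.5, 0.0, 0.0],
}

# Half a smile combined with a fully open jaw.
face = apply_blend_shapes(neutral, deltas, {"smile": 0.5, "jaw_open": 1.0})
print(face)
```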

For quantitative evaluation, the researchers used the sliced Wasserstein distance (SWD) to measure the distance between the distributions of training and generated images.
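The core of the sliced Wasserstein distance fits in a few lines: project both sample sets onto random directions, sort the projections, and average the differences. The sketch below works on small 2D point sets with equal sizes and leaves out any image-specific preprocessing that a real evaluation would need. The function name, the number of projections, and the data are assumptions made for the example.

```python
import math
import random


def sliced_wasserstein(points_a, points_b, n_projections=64, seed=0):
    """Approximate the sliced Wasserstein-1 distance between two equally sized point sets."""
    if len(points_a) != len(points_b):
        raise ValueError("this sketch assumes equally sized sets")
    rng = random.Random(seed)
    dim = len(points_a[0])
    total = 0.0
    for _ in range(n_projections):
        # Random unit direction: a normalized Gaussian vector.
        direction = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(d * d for d in direction))
        direction = [d / norm for d in direction]
        # In 1D the optimal transport plan simply matches sorted samples.
        proj_a = sorted(sum(p * d for p, d in zip(pt, direction)) for pt in points_a)
        proj_b = sorted(sum(p * d for p, d in zip(pt, direction)) for pt in points_b)
        total += sum(abs(a - b) for a, b in zip(proj_a, proj_b)) / len(proj_a)
    return total / n_projections


rng = random.Random(1)
real = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(200)]
close = [[x + 0.1, y] for x, y in real]  # slightly shifted copy
far = [[x + 3.0, y] for x, y in real]    # strongly shifted copy

swd_same = sliced_wasserstein(real, real)
swd_close = sliced_wasserstein(real, close)
swd_far = sliced_wasserstein(real, far)
print(swd_same, swd_close, swd_far)
```

A lower value means the two sets of samples are distributed more alike, which is how the comparison with 3DMM should be read.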

The table shows that the resulting textures are statistically closer to real data than those obtained using 3DMM.

The next experiment evaluates the model's ability to synthesize images that differ significantly from the training dataset, that is, to produce previously unseen faces. To this end, 5% of the identities were held out of training and used as a test set. For each real face in the test set, the researchers measured the L2 distance to the most similar generated face, and likewise the distance to the most similar real face in the training dataset.

The distance between the synthesized and real faces

As can be seen from the graphs, the test data is closer to the generated images than to the training. Moreover, the “Test to fake” distance is not too different from “Fake to real”. From this it follows that the samples obtained are not just synthesized faces, similar to a training sample, but completely new faces.
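The nearest-neighbor distances discussed above are simple to compute once every face is represented as a vector. Below is a minimal sketch on invented 2D vectors. The variable names mirror the labels on the graphs, but the data and the helper functions are hypothetical.

```python
import math


def l2(a, b):
    """Euclidean (L2) distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_distances(queries, references):
    """For each query vector, the L2 distance to its closest reference vector."""
    return [min(l2(q, r) for r in references) for q in queries]


# Invented stand-ins for held-out real faces, generated faces, and training faces.
test_faces = [[0.0, 0.0], [4.0, 0.0]]
generated_faces = [[0.0, 1.0], [4.0, 3.0]]
train_faces = [[3.0, 4.0]]

test_to_fake = nearest_distances(test_faces, generated_faces)
test_to_train = nearest_distances(test_faces, train_faces)
print(test_to_fake, test_to_train)
```

In this toy setup every test face is closer to a generated face than to any training face, which is the pattern the graphs report for the real experiment.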

Finally, to check that the model does not simply reproduce the initial dataset, a qualitative evaluation was performed: the face textures generated by the model were compared with their nearest real neighbors in the L2 metric.

The synthesized textures (above) versus the nearest real “neighbors” (below)

As you can see, the nearest real textures are quite different from the synthesized ones, which supports the conclusion that the model can generate new faces.

Conclusion

The proposed model is probably the first that can realistically synthesize both the texture and the geometry of human faces. This can be useful for face detection, face recognition, or face reconstruction models. It can also be used wherever many different realistic faces are needed, for example in the film industry or in computer games. Moreover, the framework is not limited to synthesizing human faces; it can be applied to other classes of objects for which data augmentation is possible.

Original

Translated - Stanislav Litvinov.