Attention movement driven by continuously accumulated perceptual experience as the basis of a proposed approach to designing strong AI

Directed movement of attention as the main function of consciousness

In this article we will not go into the broad problems of strong artificial intelligence; we will only outline some of the foundations of our approach to designing it.

Consider what is perhaps one of the best-known illustrations of how vision examines visual objects.

We see that visual attention is mobile.

But what if the movement of attention is itself the key to understanding vision?

We hypothesized that consciousness directs the movement of attention, and sketched the following picture of an algorithm for conscious visual perception.

How could such an algorithm work?

Important concepts

We introduced two concepts for our algorithm: the feature and the generalization.

What a feature is can be illustrated most easily with the image of the lady with a cat shown above.

Take some region of the image and compute its integral characteristics: for example, the size of the region (for a circular region, its diameter), average color, brightness, contrast, and fractal dimension.
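
As an illustration, the integral characteristics of a circular region could be computed roughly as below. This is a minimal Python sketch, assuming a grayscale image stored as a list of rows with brightness values in [0, 1]. The name `region_characteristics` is hypothetical, contrast is taken as the standard deviation of brightness (one common choice), and average color and fractal dimension are left out for brevity.

```python
import math

def region_characteristics(image, cx, cy, radius):
    """Integral characteristics of a circular region of a grayscale image.

    Hypothetical helper: `image` is a list of rows of brightness values in [0, 1].
    Returns the region size (diameter), mean brightness and contrast
    (standard deviation of brightness). Color and fractal dimension are omitted.
    """
    values = []
    for y in range(max(0, cy - radius), min(len(image), cy + radius + 1)):
        row = image[y]
        for x in range(max(0, cx - radius), min(len(row), cx + radius + 1)):
            # keep only pixels inside the circle
            if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                values.append(row[x])
    if not values:
        raise ValueError("region lies outside the image")
    mean = sum(values) / len(values)
    contrast = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return {"size": 2 * radius, "brightness": mean, "contrast": contrast}

flat = [[0.5] * 8 for _ in range(8)]
print(region_characteristics(flat, 3, 3, 2))
# {'size': 4, 'brightness': 0.5, 'contrast': 0.0}
```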

Then we choose another region of the image, at some direction and distance from the first, and compute its integral characteristics.

Next we compute the relative changes in the transition from the first region to the second. This removes absolute values, so the result does not depend on changes in scale, rotation, shift, overall illumination, or tonality of the image. Conventional affine transformations also make it possible to approximately compensate for changes in the perspective of the image plane.

We call such a transition a feature.
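
A feature could be represented roughly as follows. This sketch assumes that regions are described by dictionaries with `size`, `brightness` and `contrast` (hypothetical field names), that relative changes are taken as quantized log-ratios, and that the step length is measured in units of the first region's size; the bin width of 0.25 is arbitrary. The absolute direction of the step is deliberately omitted because it is not rotation-invariant; storing it relative to the previous step of a chain would be one option.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Feature:
    """A transition between two regions, expressed only in relative terms."""
    distance: int          # step length in units of the first region's size, quantized
    size_ratio: int        # quantized log2(second size / first size)
    brightness_ratio: int  # quantized log2 ratio of mean brightness
    contrast_ratio: int    # quantized log2 ratio of contrast

def _qlog(a, b, step=0.25, eps=1e-6):
    # Quantized log2 ratio: unchanged when both values are scaled by the same factor.
    return round(math.log2((b + eps) / (a + eps)) / step)

def make_feature(first, second, dx, dy):
    """first/second: dicts with 'size', 'brightness', 'contrast'; (dx, dy) is the step."""
    dist = math.hypot(dx, dy) / first["size"]
    return Feature(
        distance=round(dist / 0.25),
        size_ratio=_qlog(first["size"], second["size"]),
        brightness_ratio=_qlog(first["brightness"], second["brightness"]),
        contrast_ratio=_qlog(first["contrast"], second["contrast"]),
    )

a = {"size": 4, "brightness": 0.4, "contrast": 0.1}
b = {"size": 8, "brightness": 0.8, "contrast": 0.1}
# The same pair of regions at twice the scale and half the illumination
a2 = {"size": 8, "brightness": 0.2, "contrast": 0.05}
b2 = {"size": 16, "brightness": 0.4, "contrast": 0.05}
print(make_feature(a, b, 6, 8) == make_feature(a2, b2, 12, 16))  # True
```

Because only ratios enter the feature, the rescaled and dimmed pair yields an equal `Feature`.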

By linking such features into chains, we obtain generalizations of features.
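
To make the notion of a generalization concrete, the sketch below enumerates chains of consecutive features along one attention trace and counts them. Features are assumed to be any hashable values (single letters in the example). The function name and the `max_len` cap are hypothetical. Chains start at two features, since a single feature is just one transition.

```python
from collections import Counter

def generalizations(trace, max_len=4):
    """Count all chains of 2..max_len consecutive features in one attention trace.

    A feature is any hashable value (e.g. a tuple of quantized relative changes).
    """
    chains = Counter()
    for start in range(len(trace)):
        for length in range(2, max_len + 1):
            chain = tuple(trace[start:start + length])
            if len(chain) < length:  # ran past the end of the trace
                break
            chains[chain] += 1
    return chains

counts = generalizations(list("ABCAB"), max_len=3)
print(counts[("A", "B")])  # 2
```

Enumerating every chain like this is exactly what makes the database grow without bound, which motivates the concepts introduced next.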

This is a somewhat loose, but working, definition of the key concepts of the proposed approach.

I want the picture to come to life!

Fine, but so far there is no directed movement of attention. If we form such chains of transitions at random, the database will grow enormous, and it will be impossible to tell anything useful from the junk in it.

Therefore, we define a few more concepts.

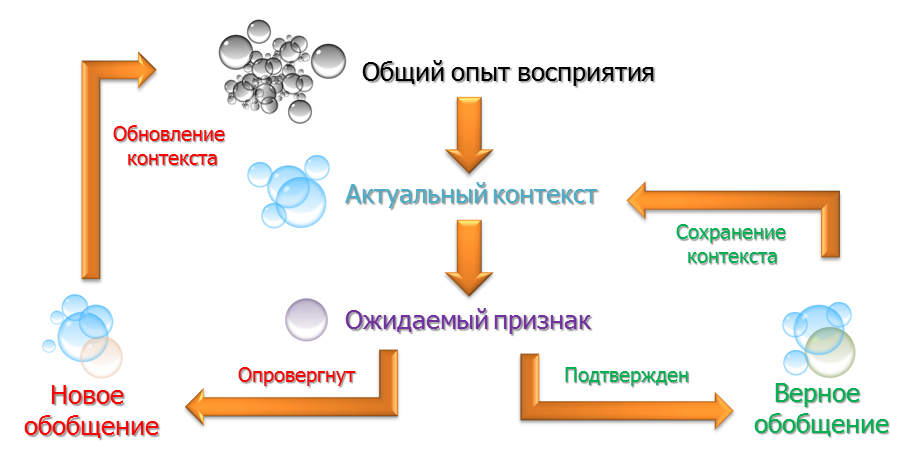

The key concept is experience: the totality of generalizations ranked by significance (frequency of confirmation).
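
Experience, as defined here, can be modeled as a counter of confirmations per generalization. The following is a minimal sketch using Python's `collections.Counter`; the class and method names are hypothetical.

```python
from collections import Counter

class Experience:
    """Generalizations (tuples of features) ranked by how often they were confirmed."""

    def __init__(self):
        self.confirmations = Counter()

    def confirm(self, generalization):
        # One more confirmation raises the generalization's significance.
        self.confirmations[tuple(generalization)] += 1

    def ranked(self, top=None):
        # most_common orders by count, highest first
        return self.confirmations.most_common(top)

exp = Experience()
exp.confirm(("A", "B"))
exp.confirm(("A", "B"))
exp.confirm(("B", "C"))
print(exp.ranked(1))  # [(('A', 'B'), 2)]
```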

In addition, at each step we determine, in the vicinity of the current focus, the current context of generalizations: those that have already been confirmed and have not yet been refuted.

This current context is in turn ranked by the probability of the features it expects next in the stream.
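
One way to read the current context and its ranking is sketched below, under these assumptions:

- Experience is a plain dict of chain to confirmation count.
- A generalization belongs to the context if its beginning matches the most recent features around the focus.
- Each expected next feature is scored by its share of the confirmations of the generalizations that predict it.

The function names and this weighting are hypothetical, not taken from the original.

```python
from collections import defaultdict

def current_context(experience, recent):
    """Generalizations consistent with the recently observed features.

    experience: dict mapping feature chains (tuples) to confirmation counts.
    recent: tuple of features observed so far around the current focus.
    A chain is kept if a suffix of `recent` matches its beginning and
    at least one feature of the chain is still left to predict.
    """
    context = {}
    for chain, count in experience.items():
        for k in range(min(len(recent), len(chain) - 1), 0, -1):
            if chain[:k] == recent[-k:]:
                context[chain] = (k, count)  # k features already confirmed
                break
    return context

def expected_features(context):
    """Rank next features by the share of confirmations that predict them."""
    votes = defaultdict(int)
    for chain, (matched, count) in context.items():
        votes[chain[matched]] += count
    total = sum(votes.values())
    return sorted(((f, v / total) for f, v in votes.items()),
                  key=lambda item: item[1], reverse=True)

experience = {("A", "B", "C"): 3, ("A", "B", "D"): 1, ("X", "Y"): 5}
ctx = current_context(experience, ("Q", "A", "B"))
print(expected_features(ctx))  # [('C', 0.75), ('D', 0.25)]
```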

The central element of the algorithm is the current expected feature, which we can check. The transition from the current region (the focus of attention) to the region specified by the expected transition directs the system to compute the characteristics of that region and compare them with the expected ones.

If the feature is confirmed, we take the next expected feature from the current context.

If the feature is not confirmed, we form a new generalization and remove the unconfirmed generalizations from the current context.
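
The expect-and-check cycle of the last three paragraphs can be sketched as a loop. It rests on these assumptions:

- The context maps each generalization to the number of its features already confirmed.
- `observe` is a hypothetical callback that performs the expected transition and returns the feature actually measured there.
- The "most anticipated" feature is approximated by the chain with the most confirmed features.
- On a mismatch, the new generalization is the confirmed prefix followed by the feature actually seen.

For simplicity the sketch ignores that each confirmed step also moves the focus.

```python
def run_attention(context, observe):
    """Expect-and-check loop (a sketch with hypothetical names).

    context: dict mapping each generalization (tuple of features) to how many
             of its features are already confirmed around the current focus.
    observe: callable taking the expected feature and returning the feature
             actually measured after the corresponding transition.
    Returns (fully confirmed chains, new generalizations formed on mismatches).
    """
    confirmed, new_generalizations = [], []
    active = dict(context)
    while active:
        # Stand-in for "most anticipated": the chain with the most confirmed features.
        chain = max(active, key=active.get)
        matched = active.pop(chain)
        if matched >= len(chain):  # nothing left to predict
            confirmed.append(chain)
            continue
        expected = chain[matched]
        actual = observe(expected)  # move attention and measure
        if actual == expected:
            if matched + 1 == len(chain):
                confirmed.append(chain)      # the whole chain is confirmed
            else:
                active[chain] = matched + 1  # take the next expected feature
        else:
            # Not confirmed: record what was actually seen as a new generalization...
            new_generalizations.append(chain[:matched] + (actual,))
            # ...and drop the generalizations that expected the refuted feature.
            active = {c: m for c, m in active.items()
                      if m >= len(c) or c[m] != expected}
    return confirmed, new_generalizations

world = {"B": "B", "C": "C", "X": "Y"}  # expected feature -> what is actually there
print(run_attention({("A", "B", "C"): 1, ("A", "X"): 1}, world.get))
# ([('A', 'B', 'C')], [('A', 'Y')])
```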

What if the context is empty or fully confirmed, and there are no new expected features?

We assume that in this situation natural consciousness explores the surroundings of what is already known but still little studied. That is, attention switches to what regularly appears in the current context but has a low level of generalization (i.e., its chain of features is still rather short).
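
The exploration rule can be sketched as a simple choice among candidate generalizations, under these assumptions:

- `occurrences` counts how often each generalization has appeared in the current context.
- "Rather short" means at most `max_len` features, an arbitrary threshold.
- Among those candidates, the most frequent wins, and ties go to the shorter chain.

All names are hypothetical.

```python
def pick_exploration_target(occurrences, max_len=3):
    """Choose where to look when the context offers no expected features.

    occurrences: dict mapping generalization (tuple of features) to how often
    it has appeared in the current context. Returns None if no chain is short enough.
    """
    # Low level of generalization: only short chains are candidates.
    candidates = [chain for chain in occurrences if len(chain) <= max_len]
    if not candidates:
        return None
    # Most frequent first; on equal frequency prefer the shorter chain.
    return max(candidates, key=lambda chain: (occurrences[chain], -len(chain)))

occ = {("A", "B"): 5, ("A", "B", "C", "D"): 9, ("B", "C", "D"): 5, ("C", "D"): 2}
print(pick_exploration_target(occ))  # ('A', 'B')
```

The long chain with nine occurrences is skipped because it is already well generalized. Attention goes to the frequent but short chain instead.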

So where are we now?

It is too early to draw conclusions about the practical usefulness of the proposed approach; the research is only beginning.

The first experiments showed that a naive, brute-force implementation of the algorithm in C#, without special optimizations and running in a single thread on one core, generates about 50 thousand generalizations per second. Of a million generalizations, fewer than 1% recur at least once, and about 0.2% are confirmed more than once.

Many computations are performed "just in case", and a significant part of the database is still occupied by unconfirmed generalizations consisting of the shortest chains, two transitions (features) long.

In the near future we plan to form attractors of the system's behavior (the AI's attention movement) on training samples, study their parameters, and publish the results.