Server optimization for Drupal, with measured results

Instructions on what to tune on a server so that Drupal runs faster can be found on the Internet in varying degrees of detail. However, all the articles I came across shared one small flaw: none of them backed the settings with real measurements. How much does page generation time actually change? What happens to memory usage? What happens when the number of concurrent requests grows? Let's run an experiment. Some of the recommendations here are general and may be useful for other CMSs as well.

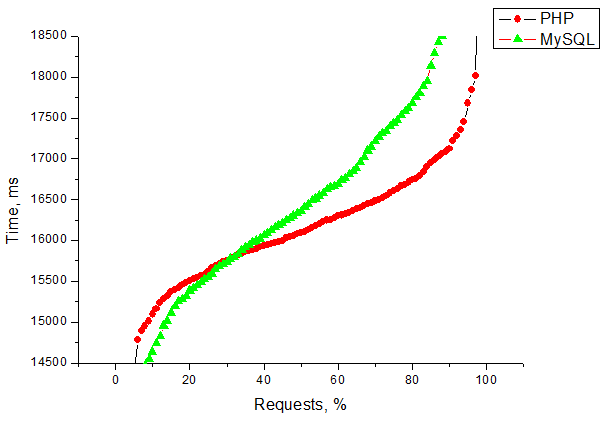

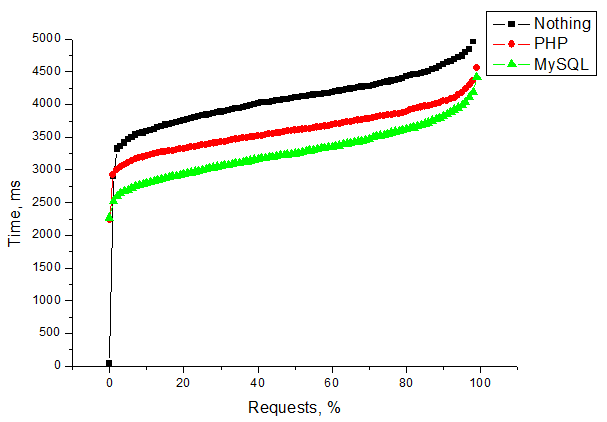

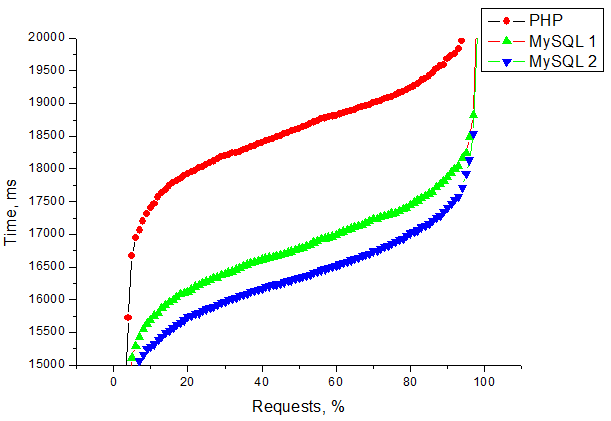

The first measurement was made before any optimization began. Actually, in these measurements I settled on 40 parallel queries. The results look something like this:

On this and subsequent similar graphs along the X axis, there will be a proportion of requests that were serviced for a time not exceeding the corresponding value along the Y axis.

In addition, for the sake of interest, I launched the free Loadimpact test, but there was no noticeable load he did not create.

The first thing to do on the server under Drupal is to set the memory_limit value to at least 256M in php.ini. As a rule, this is completely sufficient for most sites. But 128M is sometimes not enough. However, this can not be called optimization, it is rather a vital necessity.

To speed up the work of sites at the PHP level, developers recommend using various caching optimizers. In other sources, APC is most often mentioned, so I turned to him. You can read about how to put it in the instructions. Now we are interested in key settings. Actually, the main parameter is the size of the cache memory segment, apc.shm_size. The heavier the page, the more different files are included during execution, the greater the value should be. For example, 64M was enough for the test site. A test store with this value generated an error:

Raising the value to 256M instantly eliminated this problem. According to the Devel module, when visiting sites for one time, the inclusion of caching affected the following parameters:

How the inclusion of APC influenced the results of the bombing with ab will be discussed a little later. According to publicly available public information, the use of php-fpm instead of Apache + mod_php yields remarkable results. However, I have not tried to compare these two configurations yet.

One of the easiest ways to optimize MySQL is to replace my.cnf with my-huge.cnf. This file is designed for systems with sufficient (2 GB or more) RAM and large-scale use of MySQL. Among other things, it differs from the standard config in a significantly larger buffer size (key_buffer_size) and the inclusion of query caching (query_cache_size).

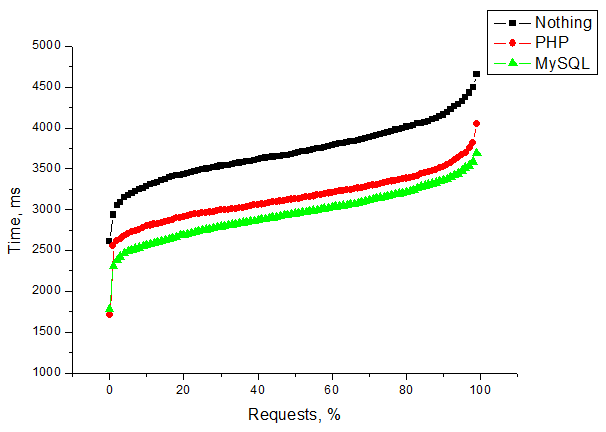

A general change in the recoil speed during sequential use looks something like this.

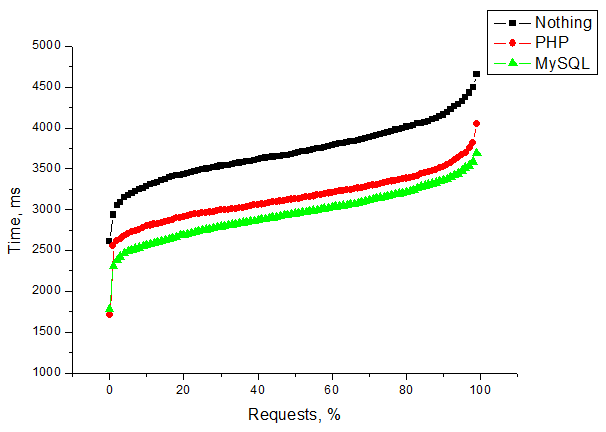

E3-1230, 10 parallel

VPS requests , 10 parallel

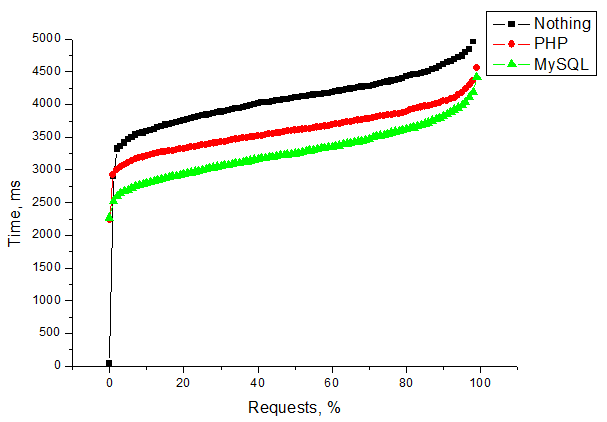

E3-1230 requests , 30 parallel

VPS requests , 30 parallel requests

As you can see, on VPS the gap between non-optimized MySQL and optimized is more noticeable than on E3-1230. I dare to suggest that this is due to the use of SSD drives on VPS. Nowadays, server configurations in which databases are placed on SSDs are becoming more and more popular. Considering how much they have fallen in price lately, this solution does not hit much and can in many cases significantly speed up the work of sites. An advantage in favor of SSDs, in my opinion, are the following two graphs.

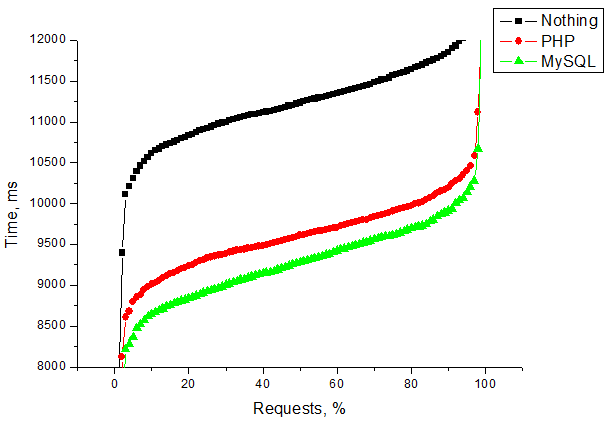

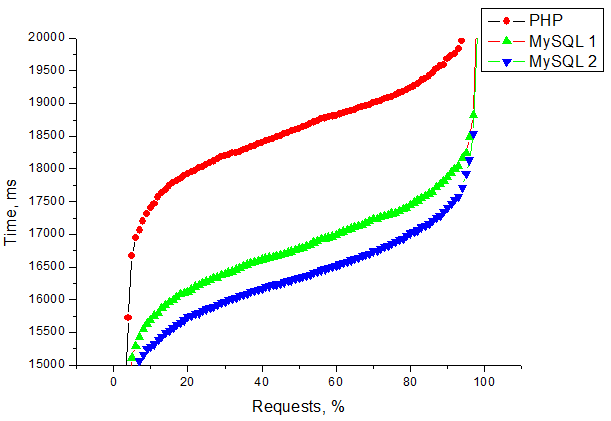

E3-1230, 50 concurrent

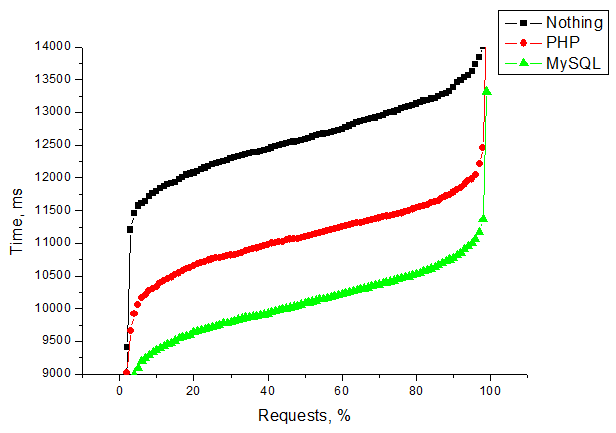

VPS requests , 50 concurrent requests

As you can see, on a server with SATA drives, buffering with caching very quickly begins to choke. At the same time, on the VPS with SSD drives, the general trend continues. On VPS, I tried to further increase the key_buffer_size and query_cache_size values, due to which I got a slight but stable performance gain (MySQL curve 2). However, at this stage, on both configurations, the processor is already choking.

The tests carried out using the ab utility do not show the actual loading of the site pages, but rather a qualitative change in performance. For some kind of closer to life data, I conducted a couple of tests using Loadimpact .

I examined the impact on the speed of the site with only two essential parameters, therefore I will be grateful to everyone who can give additional useful tips on the topic. In addition, about 5 days after publication, the test environments will still be alive, and if you have any other recommendations for optimization or suggestions for testing, I will try to implement them during this period and add them to the article. I urge everyone to share their own optimization experience and their tricks. And thanks for reading it patiently.

Instead of a foreword

This article is based on the "Server tuning considerations" guide available on the official Drupal website. From it, I selected the most universal recommendations, which can be applied to any server intended to run Drupal (in particular, the sections on configuring PHP and MySQL). Fine-tuning of the CMS itself is not covered here.

Experimental model

For load testing, I built a deliberately heavy reference site using Drupal 7 and several popular modules, including Views and Pathauto. An integer field taking values from 1 to 10 was added to one of the content types. Using the content generation feature of the Devel module, about 10,000 nodes of this type were created, each with 0 to 15 comments.

Next, I created a Views block on the front page that selects 100 random nodes whose field equals 5 (with uniformly distributed values, that is roughly 1,000 of the 10,000 nodes), together with their comments. The Global: Random sort criterion guarantees that the page is generated anew on every load. On my personal test server, generating this page took about 10 seconds. As part of the preparation I also set up a test online store based on Commerce Kickstart and generated about 5,000 products. However, it turned out that Global: Random does not work well with the Search API, and without randomization a page with 96 products loaded much faster than the main test page. For that reason, I did not benchmark the store. Test sites have been moved to ...
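For readers who want to reproduce the idea outside Drupal, here is a minimal stand-alone model of what that block asks the database to do. It is a sketch under stated assumptions: the `node` table and `rating` column are hypothetical stand-ins for Drupal 7's real node and field tables, SQLite is used only so the example runs anywhere, and its `ORDER BY RANDOM()` plays the part of the `ORDER BY RAND()` that a random sort typically becomes on MySQL.

```python
import random
import sqlite3

def build_demo_db(n_nodes=10_000, seed=1):
    """Create an in-memory table of nodes with an integer field in 1..10 (hypothetical schema)."""
    rng = random.Random(seed)
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE node (nid INTEGER PRIMARY KEY, rating INTEGER)")
    conn.executemany(
        "INSERT INTO node (nid, rating) VALUES (?, ?)",
        ((nid, rng.randint(1, 10)) for nid in range(1, n_nodes + 1)),
    )
    return conn

def random_block(conn, value=5, limit=100):
    """Mimic the Views block: filter on the field, sort randomly, keep `limit` rows."""
    # ORDER BY RANDOM() has to consider every matching row before LIMIT applies,
    # and the result differs on each call, so nothing can be reused between loads.
    return conn.execute(
        "SELECT nid, rating FROM node WHERE rating = ? ORDER BY RANDOM() LIMIT ?",
        (value, limit),
    ).fetchall()

conn = build_demo_db()
matching = conn.execute("SELECT COUNT(*) FROM node WHERE rating = 5").fetchone()[0]
rows = random_block(conn)
print(matching, len(rows))  # roughly 1000 matching nodes, exactly 100 rows returned
```

The random sort is what makes the test page useful as a benchmark: every request has to filter and shuffle the whole matching set again.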

Experimental equipment

For the experiments, I borrowed two freshly installed machines for a few days: a VPS (Intel Xeon E3-1230 3.2 GHz / 2-3 GB RAM / 30 GB SSD) and a dedicated server (Intel Xeon E3-1230 / 8 GB DDR3 / 4x1 TB SATA2 / 100 Mbps unmetered) in the Netherlands, referred to below as "VPS" and "E3-1230", respectively. Both ran a standard LAMP stack with Nginx. Most measurements were taken with the ab (ApacheBench) utility, using 1,000 requests in total and 10 to 50 concurrent requests. At the end, several LoadImpact tests were also run.
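The ab runs are easy to script. The sketch below only builds the command lines. The helper names, the target URL and the exact list of concurrency levels are assumptions, since the text only states 1,000 requests and 10 to 50 concurrent ones. `-n`, `-c` and `-e` are standard ab options. `-e` saves a CSV with the time needed to serve each percentage of requests, which is the same kind of data plotted in the graphs here.

```python
import shlex

def ab_command(url, requests=1000, concurrency=40, csv_path=None):
    """Build an ApacheBench (ab) command line for one benchmark run."""
    cmd = ["ab", "-n", str(requests), "-c", str(concurrency)]
    if csv_path:
        # -e: CSV with, for each percentage, the time it took to serve that share of requests
        cmd += ["-e", csv_path]
    cmd.append(url)
    return cmd

def concurrency_sweep(url, levels=(10, 20, 30, 40, 50), requests=1000):
    """One ab invocation per concurrency level (levels are an assumption), each with its own CSV."""
    return [ab_command(url, requests, c, f"ab_c{c}.csv") for c in levels]

for cmd in concurrency_sweep("http://example.com/"):  # hypothetical URL
    print(shlex.join(cmd))
    # To actually run it: subprocess.run(cmd, check=True)
```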

Raw Server Data

The first measurement was taken before any optimization. For this baseline I settled on 40 concurrent requests. The results looked roughly like this:

On this and the following similar graphs, the X axis shows the percentage of requests that were served within the time shown on the Y axis.

Out of curiosity, I also ran the free LoadImpact test, but it did not create any noticeable load.

PHP optimization

The first thing to do on a Drupal server is to set memory_limit in php.ini to at least 256M. This is usually enough for most sites, whereas 128M sometimes is not. Strictly speaking, this is not an optimization but a basic necessity.
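A quick way to audit this setting is to read it back out of php.ini. The sketch below is a hypothetical helper, not part of PHP or Drupal. It understands PHP's K/M/G shorthand and `-1` (no limit), ignores commented-out lines, and takes the last active `memory_limit` line, because later lines override earlier ones.

```python
import re

_UNITS = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3}

def php_size_to_bytes(value):
    """Convert PHP shorthand such as '256M' to bytes; '-1' (unlimited) -> None."""
    value = value.strip()
    if value == "-1":
        return None
    m = re.fullmatch(r"(\d+)\s*([KMGkmg]?)", value)
    if not m:
        raise ValueError(f"unrecognised size: {value!r}")
    return int(m.group(1)) * _UNITS[m.group(2).upper()]

def check_memory_limit(ini_text, minimum="256M"):
    """Return (configured value, meets minimum?) for the last active memory_limit line."""
    # Lines starting with ';' are comments in php.ini, so they never match here.
    found = re.findall(r"^\s*memory_limit\s*=\s*([^\s;]+)", ini_text, re.MULTILINE)
    if not found:
        return None, False  # not set in this file; PHP falls back to its default
    value = found[-1].strip('"')
    limit = php_size_to_bytes(value)
    return value, limit is None or limit >= php_size_to_bytes(minimum)

print(check_memory_limit("[PHP]\n;memory_limit = 512M\nmemory_limit = 128M\n"))  # ('128M', False)
```

The effective value can still be changed elsewhere, for example via `ini_set()` in Drupal's settings.php. `phpinfo()` remains the authoritative check.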

To speed sites up at the PHP level, developers recommend using an opcode cache. APC is the one mentioned most often, so that is what I used; installation is covered in its instructions. Here we are interested in the key settings. The main parameter is the size of the shared memory segment, apc.shm_size: the heavier the page and the more files it includes during execution, the larger this value should be. For example, 64M was enough for the test site, but with this value the test store produced an error:

[Mon Jan 13 21:41:46 2014] [error] [client 176.36.31.190] PHP Warning: Unknown: Unable to allocate memory for pool. in Unknown on line 0, referer: http://s2shop.1b1.info/products?page=2
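This warning is emitted per request and only on pages that push APC past its limit, so it is easy to miss. Here is a small sketch for scanning an Apache error log after a load test. It assumes the Apache 2.2-style `[timestamp]` prefix seen in the line above and matches only the message text quoted there. The function name and the second sample line are hypothetical.

```python
import re

APC_POOL_ERROR = "Unable to allocate memory for pool"
_TS = re.compile(r"^\[([^\]]+)\]")  # leading "[Mon Jan 13 21:41:46 2014]"

def apc_pool_errors(lines):
    """Yield (timestamp or None, line) for each log line reporting APC shared-memory exhaustion."""
    for line in lines:
        if APC_POOL_ERROR in line:
            m = _TS.match(line)
            yield (m.group(1) if m else None), line.rstrip("\n")

sample = [
    "[Mon Jan 13 21:41:46 2014] [error] [client 176.36.31.190] PHP Warning: "
    "Unknown: Unable to allocate memory for pool. in Unknown on line 0, "
    "referer: http://s2shop.1b1.info/products?page=2\n",
    "this line stands in for any unrelated log entry\n",  # hypothetical
]
for ts, _ in apc_pool_errors(sample):
    print("APC pool exhausted at", ts)
```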

Raising the value to 256M eliminated the problem immediately. According to the Devel module, for a single page visit, enabling APC changed the following:

- page generation time dropped by a factor of 1.5 to 2;

- peak memory consumption decreased from about 50-55 MB to 30-32 MB for the test site and from about 65-70 MB to 30-32 MB for the test store.
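To put these figures in perspective, here is the arithmetic behind them, plus a rough estimate of what lower peak memory means for concurrency. The memory budget is a hypothetical number. Peak memory per request is also only part of a PHP worker's real footprint, so treat the second result as an upper bound rather than a sizing rule.

```python
def reduction(before_mb, after_mb):
    """Relative drop in peak memory, as a fraction of the 'before' value."""
    return (before_mb - after_mb) / before_mb

# Ranges reported by Devel before/after enabling APC: (worst case, best case)
site = (reduction(50, 32), reduction(55, 30))
store = (reduction(65, 32), reduction(70, 30))
print(f"test site: {site[0]:.0%}-{site[1]:.0%}, test store: {store[0]:.0%}-{store[1]:.0%}")
# test site: 36%-45%, test store: 51%-57%

def processes_that_fit(budget_mb, peak_mb_per_request):
    """Upper bound on simultaneous PHP requests a memory budget can hold."""
    return budget_mb // peak_mb_per_request

BUDGET_MB = 1024  # hypothetical RAM set aside for PHP
print(processes_that_fit(BUDGET_MB, 55), processes_that_fit(BUDGET_MB, 32))  # 18 32
```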

How enabling APC affected the ab results is discussed below. According to publicly available reports, using php-fpm instead of Apache + mod_php gives notable gains, but I have not compared these two configurations yet.

MySQL optimization

One of the easiest ways to optimize MySQL is to replace my.cnf with the sample my-huge.cnf. This file is intended for systems with plenty of RAM (2 GB or more) where MySQL is used heavily. Among other things, it differs from the default config in a much larger key buffer (key_buffer_size) and in enabling the query cache (query_cache_size).
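my-huge.cnf changes many values at once, so it can help to compare just the two settings discussed here across configs. Here is a minimal sketch using Python's configparser. The helper names are hypothetical, the values in the sample configs are illustrative rather than copied from any shipped file, and `!include`/`!includedir` directives are not followed.

```python
import configparser
import re

_UNITS = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3}

def to_bytes(value):
    m = re.fullmatch(r"(\d+)\s*([KMGkmg]?)", value.strip())
    if not m:
        raise ValueError(f"unrecognised size: {value!r}")
    return int(m.group(1)) * _UNITS[m.group(2).upper()]

def mysqld_buffers(cnf_text, keys=("key_buffer_size", "query_cache_size")):
    """Read selected [mysqld] sizes from my.cnf text, in bytes (None if unset)."""
    # allow_no_value: my.cnf has bare flags such as skip-external-locking;
    # strict=False: tolerate repeated options (the later value wins).
    parser = configparser.ConfigParser(allow_no_value=True, strict=False,
                                       interpolation=None,
                                       inline_comment_prefixes=("#", ";"))
    parser.read_string(cnf_text)
    section = parser["mysqld"] if parser.has_section("mysqld") else {}
    # MySQL treats '-' and '_' in option names as equivalent
    normalised = {k.replace("-", "_"): v for k, v in section.items()}
    return {k: (to_bytes(normalised[k]) if normalised.get(k) else None) for k in keys}

stock = "[mysqld]\nkey_buffer_size = 16M\nskip-external-locking\n"     # illustrative
tuned = "[mysqld]\nkey_buffer_size = 384M\nquery_cache_size = 32M\n"   # illustrative
print(mysqld_buffers(stock))
print(mysqld_buffers(tuned))
```

The query cache was removed in MySQL 8.0, so query_cache_size only applies to earlier versions.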

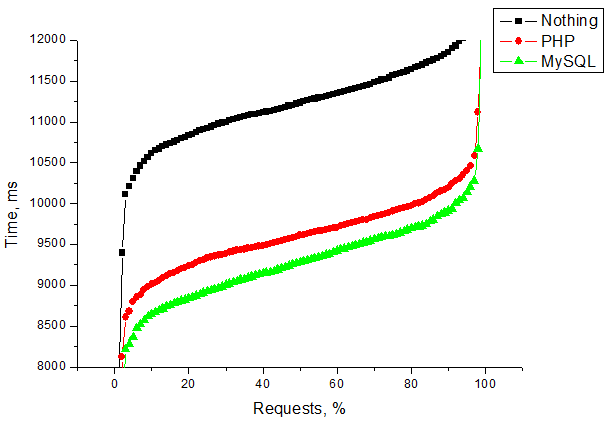

The overall change in response times as the optimizations are applied one after another looks roughly like this.

E3-1230, 10 parallel requests

VPS, 10 parallel requests

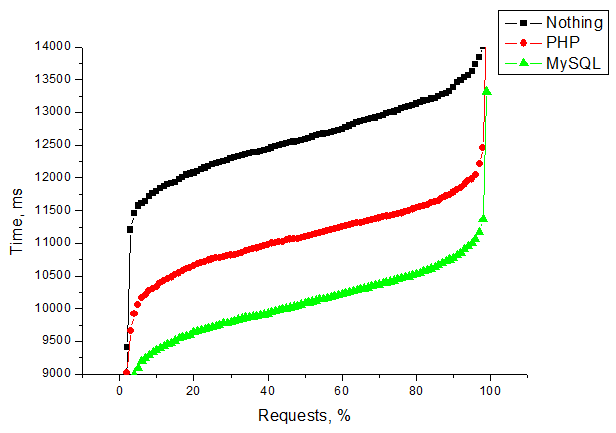

E3-1230, 30 parallel requests

VPS, 30 parallel requests

As you can see, the gap between unoptimized and optimized MySQL is larger on the VPS than on the E3-1230. I would venture that this is due to the SSD on the VPS. Server configurations that keep databases on SSDs are becoming increasingly popular. Given how much SSDs have dropped in price lately, this option is not expensive and can, in many cases, significantly speed up sites. In my opinion, the following two graphs also argue in favor of SSDs.
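Two ab CSV files can be compared percentile by percentile to put a number on such a gap. This sketch assumes that the files come from `ab -e` and that their first line is a header. The numbers in the example are made up, not the measurements from these graphs.

```python
import csv
import io

def read_ab_csv(text):
    """Parse the CSV written by `ab -e`: a header row, then 'percentage,time in ms' rows."""
    rows = csv.reader(io.StringIO(text))
    next(rows)  # skip the header line
    return {int(row[0]): float(row[1]) for row in rows if row}

def gap_by_percentile(baseline, optimized):
    """Ratio baseline/optimized at each shared percentage; >1 means the optimized run is faster."""
    shared = sorted(set(baseline) & set(optimized))
    return {p: baseline[p] / optimized[p] for p in shared}

# Hypothetical data, for illustration only
baseline_csv = "Percentage served,Time in ms\n50,4000\n90,6000\n"
optimized_csv = "Percentage served,Time in ms\n50,2000\n90,4000\n"
gaps = gap_by_percentile(read_ab_csv(baseline_csv), read_ab_csv(optimized_csv))
for p, r in gaps.items():
    print(f"{p}%: {r:.2f}x")  # 50%: 2.00x, 90%: 1.50x
```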

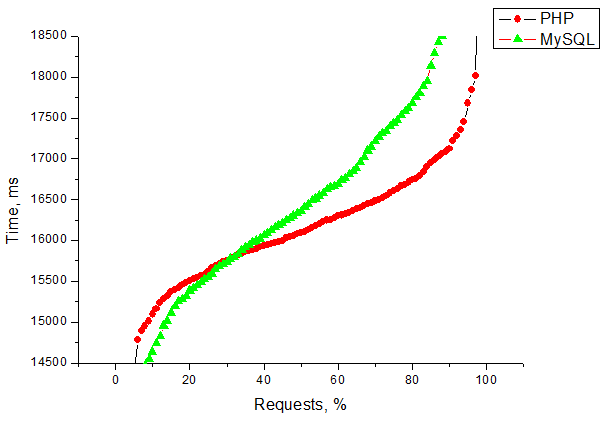

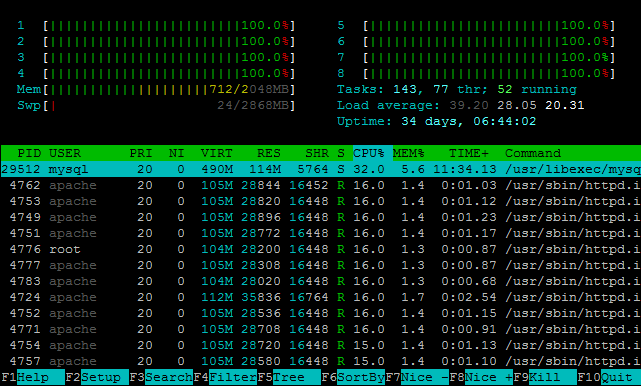

E3-1230, 50 concurrent requests

VPS, 50 concurrent requests

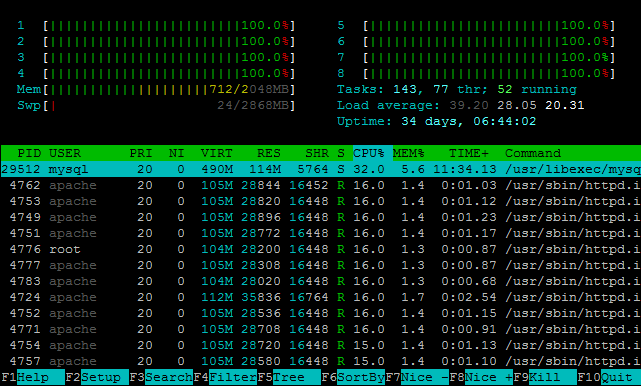

As you can see, on the server with SATA drives, buffering and caching quickly stop helping, while on the VPS with its SSD the overall trend holds. On the VPS I also tried increasing key_buffer_size and query_cache_size further, which gave a small but consistent performance gain (the "MySQL 2" curve). At this point, however, both configurations are already CPU-bound.
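A simple sanity check for this kind of CPU saturation is to divide the load average by the number of cores. This is a rough heuristic, not a precise metric. `os.getloadavg()` is Unix-only, and `os.cpu_count()` counts logical CPUs (hyper-threads included). On Linux the load average also includes processes blocked on disk I/O, so it should be read together with CPU utilization from a tool such as top.

```python
import os

def load_per_core(load_avg=None, cores=None):
    """1-minute load average divided by the number of logical CPUs.

    A value that stays above roughly 1.0 during a test suggests the run queue
    is longer than the machine can serve (CPU-bound, or waiting on I/O on Linux).
    """
    if load_avg is None:
        load_avg = os.getloadavg()[0]  # Unix only
    if cores is None:
        cores = os.cpu_count() or 1
    return load_avg / cores

if hasattr(os, "getloadavg"):
    print(f"load per core right now: {load_per_core():.2f}")
```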

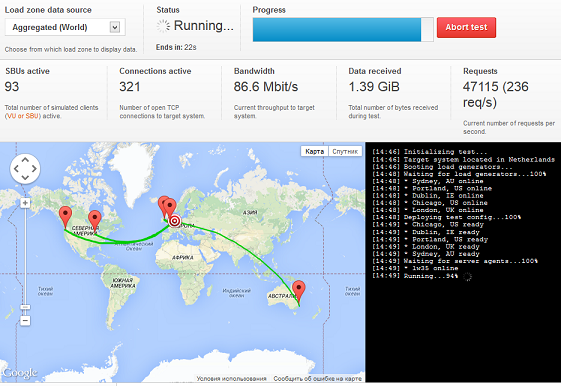

LoadImpact Tests

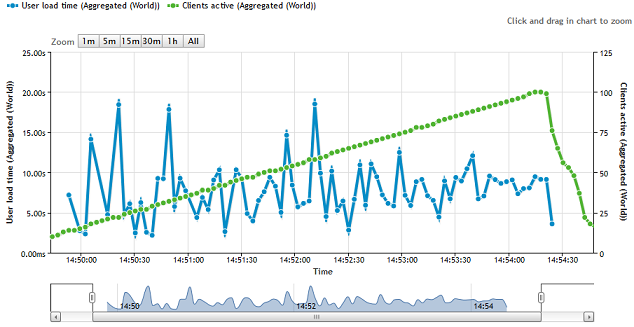

Tests with ab do not reflect how site pages actually load for visitors; they show a qualitative change in performance. To get data closer to real life, I ran a couple of LoadImpact tests.

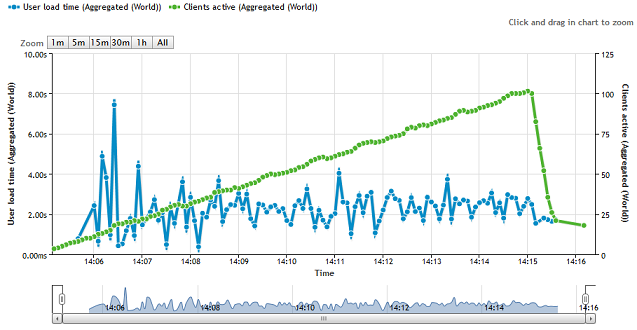

- Up to 100 virtual users (VUs) were directed at the front page of the test site, spread roughly evenly across 5 locations (2 in the USA, 2 in Europe, 1 in Australia). The combined graph does not show whether this load strained the server at all.

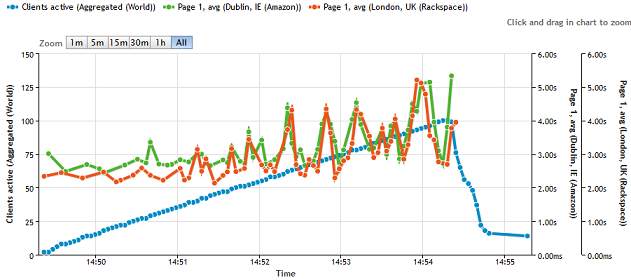

The growing load becomes more visible if you look only at the European locations, which have better connectivity to the server.

At that point, total traffic was close to 90 Mbps. I wanted to saturate the channel completely, but I had run out of credits.

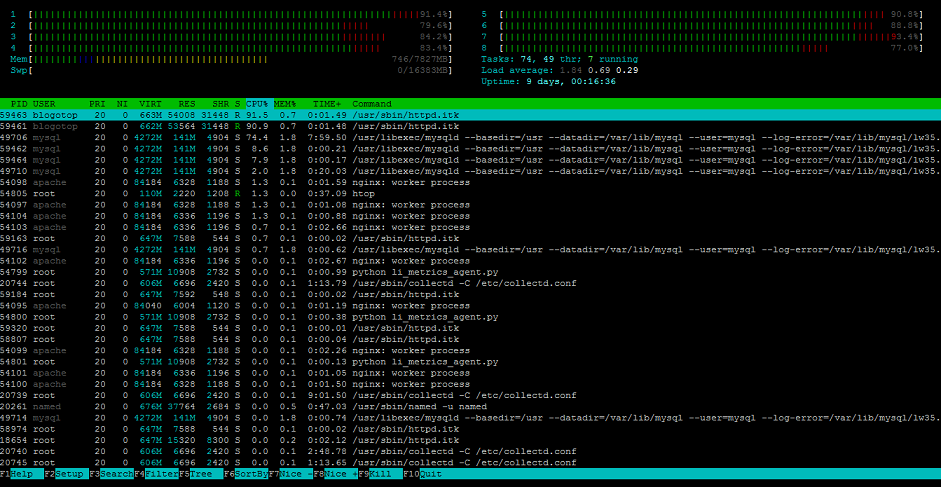

CPU load becomes noticeable, but the load average is nowhere near what it was during the ab tests.

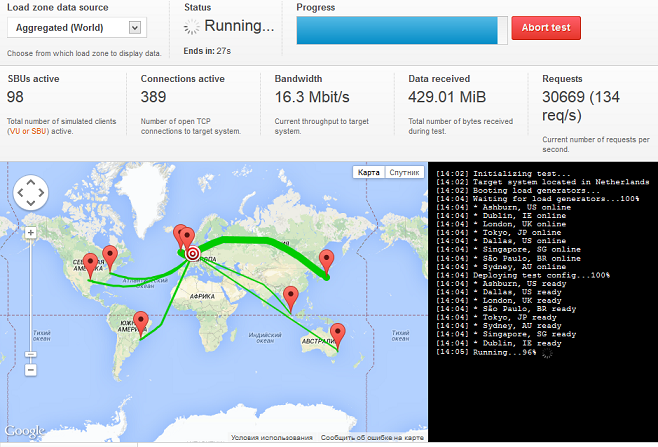

- Finally, I cloned an ordinary small informational site and pointed LoadImpact at it from 8 different locations, each requesting different pages of the site. Within 10 minutes the number of VUs again reached 100. Response times stayed very even, without any obvious slowdowns.

Meanwhile, the server barely noticed this test.

Instead of a conclusion

I examined how only two essential parameters affect site speed, so I will be grateful to anyone who can offer further useful tips on the topic. The test environments will also stay up for about 5 days after publication. If you have other optimization recommendations or testing suggestions, I will try to implement them during that period and add the results to the article. I encourage everyone to share their own optimization experience and tricks. And thank you for reading patiently.