Metaio SDK: Augmented Reality Gets Fuller

For 10 years, Metaio GmbH has been developing and improving augmented reality (AR) technologies for PCs and mobile devices. One result of this effort is the Metaio SDK, a set of tools for building AR elements into applications, optimized for Intel platforms with Intel's support and assistance. The SDK is, by the way, completely free! Let's take a closer look at this product and see how it works.

With the Metaio tools, complex and resource-intensive computer vision functions, such as three-dimensional tracking of real objects, run smoothly on Intel mobile platforms. This means, first of all, that applications using AR technologies will work accurately and stably. The SDK also supports many wearable gadgets and offers improved rendering quality and faster object processing. By the way, the Metaio SDK is the only SDK in the mobile segment that provides reliable markerless tracking of three-dimensional real-world objects as well as two-dimensional ones. The latest version also supports contour tracking, which follows the geometry of real objects and thereby largely solves the problem of inconsistent lighting.

Today, more than 60 thousand developers using the company's products are registered on the Metaio portal.

The Metaio SDK is at the heart of many mobile AR applications, such as the IKEA product catalog for 2012/13, McDonald's McMission, and many others. Consider, as an example, how AR is used in the Audi eKurzinfo application. A modern car is packed with functions, and figuring out how to operate them is not easy at first. Audi eKurzinfo gives you instant help on the components and controls of an Audi car: just point the camera at the object you are interested in, and the application will display reference information about it. The operation of the system is shown in this short video.

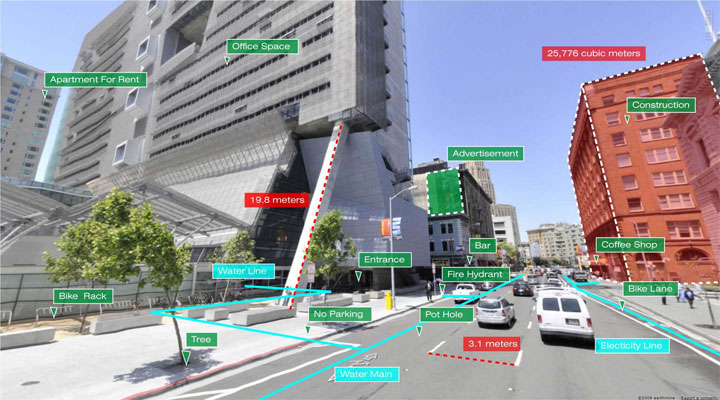

The most advanced AR browser, junaio, is also built on the Metaio SDK.

Key Features of the New Metaio SDK 5.0:

- Available for Android, iOS and PC platforms, supports 2D, 3D, ID and SLAM tracking;

- Supports wearable gadgets Google Glass, Epson Moverio BT-100 and Vuzix M-100;

- Supports 3D markerless tracking based on CAD data (contour tracking);

- In 2014, face tracking functionality will be added;

- Improved visualization quality through programmable shaders;

- Built-in powerful 3D engine, equipped with multi-threaded tracking and rendering pipelines;

- Support for complex 3D models (more than 32 thousand polygons);

- Simple management of 3D content and its debugging (BoundingBox, Normals, Wireframe).

Here is a short example showing the capabilities of the Metaio SDK. This and other sections of the tutorial can be found on the Metaio website.

Let's see how to set up different tracking configurations to build our own augmented reality.

By default, we will use a markerless configuration. To get started, load our 3D model.

    mMetaioMan = metaioSDK.createGeometry(metaioManModel);

Now add touch handlers for the buttons. For example, for the picture marker button, an Android handler will look like this:

    public void onPictureButtonClick(View v) {
        // switch to the picture marker tracking configuration
        trackingConfigFile = AssetsManager.getAssetPath("Assets3/TrackingData_PictureMarker.xml");
        boolean result = metaioSDK.setTrackingConfiguration(trackingConfigFile);
    }

For iOS, we used a UISegmentedControl element, so the code will look a little different. In AREL (Augmented Reality Experience Language), we use the jQuery .buttonset() function to create the buttons in the HTML code and then attach JavaScript click handlers to them.

As we can see, simply calling the setTrackingConfiguration(trackingConfigFile) method gives us a new tracking configuration.
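The button handling described above can be sketched in plain Java. This is only an illustration under stated assumptions, not Metaio code: `TrackingBackend` is a hypothetical stand-in for the SDK object, the button names are made up, and the markerless file name is a placeholder. Only the picture marker path comes from the handler shown earlier. The sketch keeps one configuration file per button and remembers the active button only when the switch reports success.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Sketch only: maps buttons to tracking configuration files. */
class TrackingSwitchSketch {

    /** Hypothetical stand-in for the single SDK call used in the article. */
    interface TrackingBackend {
        boolean setTrackingConfiguration(String configFile);
    }

    private final Map<String, String> configByButton = new LinkedHashMap<>();
    private final TrackingBackend backend;
    private String activeButton; // stays null until a configuration loads

    TrackingSwitchSketch(TrackingBackend backend) {
        this.backend = backend;
        // Path taken from the article's handler.
        configByButton.put("picture", "Assets3/TrackingData_PictureMarker.xml");
        // Placeholder name, assumed for illustration only.
        configByButton.put("markerless", "Assets3/TrackingData_Markerless_PLACEHOLDER.xml");
    }

    /** Returns true only if the button is known and the backend accepted the file. */
    boolean onButtonClick(String button) {
        String file = configByButton.get(button);
        if (file == null) {
            return false; // unknown button: nothing to switch to
        }
        boolean loaded = backend.setTrackingConfiguration(file);
        if (loaded) {
            activeButton = button; // keep the previous state on failure
        }
        return loaded;
    }

    String activeButton() {
        return activeButton;
    }

    public static void main(String[] args) {
        // Fake backend for the demo: accepts any file ending in ".xml".
        TrackingSwitchSketch sketch = new TrackingSwitchSketch(f -> f.endsWith(".xml"));
        System.out.println(sketch.onButtonClick("picture") + " -> " + sketch.activeButton());
    }
}
```

Checking the boolean result before updating the UI state means a missing or invalid configuration file leaves the previously selected button active instead of showing a selection that is not actually in effect.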

Please note that our picture marker configuration contains more than one marker. To make sure that the model appears on both images from the sample, we must add the following functionality by overriding the onDrawFrame() method on Android, drawFrame() on iOS, and by defining a tracking handler in AREL.

    @Override
    public void onDrawFrame() {
        super.onDrawFrame();
        if (metaioSDK != null) {
            // get all detected poses/targets
            TrackingValuesVector poses = metaioSDK.getTrackingValues();
            // if we have detected one, attach our metaio man to this coordinate system ID
            if (poses.size() != 0)
                mMetaioMan.setCoordinateSystemID(poses.get(0).getCoordinateSystemID());
        }
    }

The first call returns all detected targets; if at least one target is found, we attach our model to that target's coordinate system. In AREL, we skip the first step because we receive a callback with the tracking event.
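The per-frame logic can be tried out without the SDK. The following is a minimal sketch, assuming that the list of poses contains only the targets detected in the current frame. `Pose` and `Model` are hypothetical stand-ins for the SDK's tracking values and geometry, not real Metaio classes. It simulates a few frames and shows that the model follows the first detected target and keeps its last coordinate system when nothing is detected.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.OptionalInt;

/** Sketch only: binds a model to the first target detected in a frame. */
class PoseBindingSketch {

    /** Hypothetical stand-in for one detected target. */
    static final class Pose {
        final int coordinateSystemId;

        Pose(int coordinateSystemId) {
            this.coordinateSystemId = coordinateSystemId;
        }
    }

    /** Hypothetical stand-in for the 3D model. */
    static final class Model {
        int coordinateSystemId = 0; // 0 here means "not bound yet" (assumption)
    }

    /** Returns the coordinate system ID of the first detected target, if any. */
    static OptionalInt firstDetected(List<Pose> poses) {
        return poses.isEmpty()
                ? OptionalInt.empty()
                : OptionalInt.of(poses.get(0).coordinateSystemId);
    }

    /** Mirrors the body of the per-frame callback: rebind only when a target is seen. */
    static void onFrame(List<Pose> poses, Model model) {
        OptionalInt id = firstDetected(poses);
        if (id.isPresent()) {
            model.coordinateSystemId = id.getAsInt();
        }
    }

    public static void main(String[] args) {
        Model model = new Model();
        List<List<Pose>> frames = Arrays.asList(
                Collections.<Pose>emptyList(),          // nothing detected
                Arrays.asList(new Pose(2)),             // second image detected
                Arrays.asList(new Pose(1), new Pose(2)) // both images detected
        );
        for (List<Pose> frame : frames) {
            onFrame(frame, model);
            System.out.println("model bound to coordinate system " + model.coordinateSystemId);
        }
    }
}
```

Taking the first entry is the simplest policy and matches the handler above; when several targets are visible at once, the model ends up on whichever one is listed first.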

And finally, a fun video from IKEA about augmented home reality.