Under the hood of Dictionary and ConcurrentDictionary

Some time ago, I decided that I wanted to know more details about the work of multithreading in .NET and that I paid undeservedly little attention to this in the past. There is a great deal of information on this subject (I chose this section of the book “C # in a nutshell” as a starting point ), but as it turned out, only a small part of the resources try to explain something in detail.

Each master should know his tools, and what can be used more often than collections? Therefore, I decided to make a small overview of multithreaded collections and start with ConcurrentDictionary (a cursory review has already been seen here , but there are very few of them). In general, I was somewhat surprised that there was no such article for .NET yet (but enough for Java ).

So let's go.

If you are already familiar with Dictionary itself, then you can skip the next section.

What is a Dictionary

Dictionary is an implementation of the standard hashtable .

The following functions are interesting here:

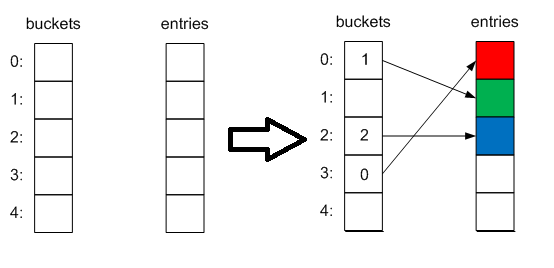

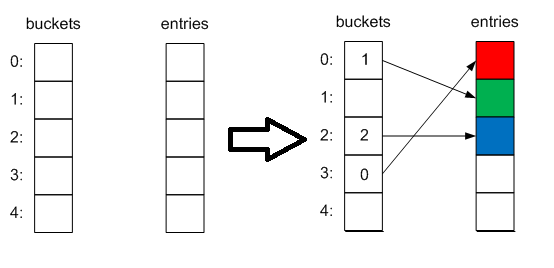

Initialization occurs either during creation (if the initial size of the collection is transferred) or when the first element is added, and the nearest prime number will be selected as the size (3). This creates 2 internal collections - int [] buckets and Entry [] entries . The first will contain the indices of the elements in the second collection, and it, in turn, will contain the elements themselves in this form:

When adding an element, the hash code of its key is calculated and then the basket index into which it will be added modulo the collection size:

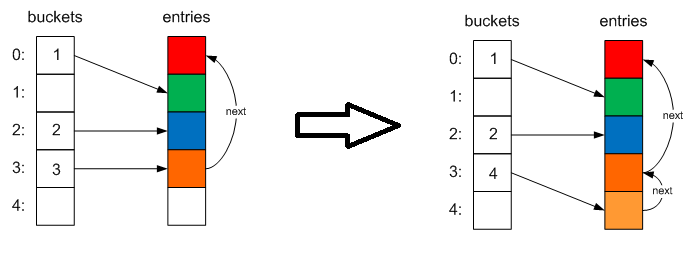

It will look something like this:

Then it checks if there is already such a key in the collection, if so, the Add operation will throw an exception, and assignment by index will simply replace the element with a new one. If the maximum size of the dictionary is reached, then expansion occurs (a new size is selected by the nearest prime number).

The complexity of the operation, respectively, is O (n).

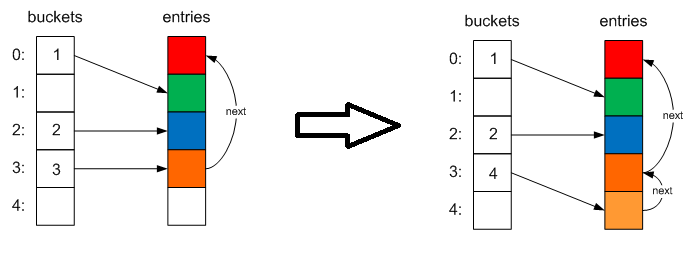

If a collision occurs (that is, there is already an element in the basket with bucketNum indices), then the new element is added to the collection, its index is stored in the basket, and the index of the old element is in its next field.

Thus, we obtain a unidirectional linked list . This collision resolution mechanism is called chaining.. If when adding an element the number of collisions is large (more than 100 in the current version), then when the collection is expanded , a re-hash operation occurs , before which a new hash code generator is randomly selected.

The difficulty of adding O (1) or O (n) in the event of a collision.

When deleting elements, we overwrite its contents with default values, change the next pointers of other elements, if necessary, and store the index of this element in the freeList internal field, and the old value in the next field. Thus, when adding a new element, we can reuse such free cells:

The complexity is again O (1) or O (n) in the event of a collision.

It is also worth noting 2 points:

1) When cleaning the dictionary, its internal size does not change. That is, potentially, you are simply wasting space.

2) GetEnumerator simply returns an iterator over the entires collection (O (1) complexity). If you only added elements, they will return in the same order. However, if you deleted elements and added new ones, the order will change accordingly, so you should not rely on it (especially since this may change in future versions of the framework).

It would seem that there are 2 solutions in the forehead to ensure thread safety - wrap all accesses to the dictionary in locks or wrap all its methods in them. However, for obvious reasons, this solution will be slow - delays added by lock, and the restriction on 1 thread that could work with the collection do not add performance.

Microsoft went a better way and Dictionary has undergone some changes. So, thanks to the internal structure of the dictionary and its baskets, blocking is performed on them, using the method

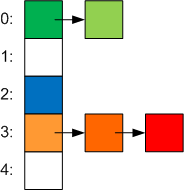

At the same time, a regular dictionary could not work with this scheme, because all the baskets use the same entries array, so the baskets have become a regular single linked list: volatile Entry [] m_buckets (the field is declared as volitale to provide non-blocking synchronization in a situation when one thread is trying to perform some operation, and the other at this moment changes the size of the collection).

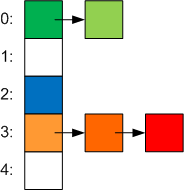

As a result, the baskets began to look like this:

lockNo is the index in a new array that contains synchronization objects - object [] m_locks . Its use allows different threads to change different baskets at the same time. The size of this collection depends on the ConcurrencyLevel parameterwhich can be set through the constructor. It defines the approximate number of threads that will work simultaneously with the collection (by default, this is the number of processors * 4). The higher this value, the easier it will be to write operations, but it will also become much more expensive operations that require complete blocking of the entire collection ( Resize, ToArray, Count, IsEmpty, Keys, Values, CopyTo, Clear ). Also, this parameter determines how many elements of the collection fall on one lock (as the ratio of the number of baskets to the size of this collection) and when there are more elements than necessary, the collection expands, because otherwise the search for the element does not require O (1), but O (n) by traversing linked lists. To slightly reduce the number of initial extensions of the collection, the initial dictionary size is no longer 3, but 31.

All operations took the form:

When adding a new element, you have to go around the entire linked list simply to determine if there is already such a key and if not, add it. Similarly, when deleting - first you need to find the node to be deleted.

However, for some operations, locking is not necessary in principle - these are TryGetValue, GetEnumerator, ContainsKey and indexing . Why? Because all changes in the size of the baskets are visible due to the fact that the field is volatile, any additions or changes to elements occur by creating a new element and replacing it with the old one, and deleting occurs simply by replacing the pointer with the next node.

1) Unlike a regular Dictionary, calling the Clear method resets the collection size to the default value.

2) GetEnumerator - can return old values if changes were made by another thread after a method call and how the iterator passed this element. In the article mentioned at the beginning, it was noted that

Thanks for attention.

The article used:

1. The .NET Dictionary

2. Inside the Concurrent Collections: ConcurrentDictionary

3. Concurrent Collections

4. .NET Reflector

Each master should know his tools, and what can be used more often than collections? Therefore, I decided to make a small overview of multithreaded collections and start with ConcurrentDictionary (a cursory review has already been seen here , but there are very few of them). In general, I was somewhat surprised that there was no such article for .NET yet (but enough for Java ).

So let's go.

If you are already familiar with Dictionary itself, then you can skip the next section.

What is a Dictionary?

Dictionary is an implementation of the standard hashtable .

The following functions are interesting here:

Initialization

Initialization occurs either during creation (if the initial size of the collection is transferred) or when the first element is added, and the nearest prime number will be selected as the size (3). This creates 2 internal collections - int [] buckets and Entry [] entries . The first will contain the indices of the elements in the second collection, and it, in turn, will contain the elements themselves in this form:

private struct Entry

{

public int hashCode;

public int next;

public TKey key;

public TValue value;

}

Adding Items

When adding an element, the hash code of its key is calculated and then the basket index into which it will be added modulo the collection size:

int bucketNum = (hashcode & 0x7fffffff) % capacity;

It will look something like this:

Then it checks if there is already such a key in the collection, if so, the Add operation will throw an exception, and assignment by index will simply replace the element with a new one. If the maximum size of the dictionary is reached, then expansion occurs (a new size is selected by the nearest prime number).

The complexity of the operation, respectively, is O (n).

If a collision occurs (that is, there is already an element in the basket with bucketNum indices), then the new element is added to the collection, its index is stored in the basket, and the index of the old element is in its next field.

Thus, we obtain a unidirectional linked list . This collision resolution mechanism is called chaining.. If when adding an element the number of collisions is large (more than 100 in the current version), then when the collection is expanded , a re-hash operation occurs , before which a new hash code generator is randomly selected.

The difficulty of adding O (1) or O (n) in the event of a collision.

Delete items

When deleting elements, we overwrite its contents with default values, change the next pointers of other elements, if necessary, and store the index of this element in the freeList internal field, and the old value in the next field. Thus, when adding a new element, we can reuse such free cells:

The complexity is again O (1) or O (n) in the event of a collision.

Other

It is also worth noting 2 points:

1) When cleaning the dictionary, its internal size does not change. That is, potentially, you are simply wasting space.

2) GetEnumerator simply returns an iterator over the entires collection (O (1) complexity). If you only added elements, they will return in the same order. However, if you deleted elements and added new ones, the order will change accordingly, so you should not rely on it (especially since this may change in future versions of the framework).

So what about ConcurrentDictionary?

It would seem that there are 2 solutions in the forehead to ensure thread safety - wrap all accesses to the dictionary in locks or wrap all its methods in them. However, for obvious reasons, this solution will be slow - delays added by lock, and the restriction on 1 thread that could work with the collection do not add performance.

Microsoft went a better way and Dictionary has undergone some changes. So, thanks to the internal structure of the dictionary and its baskets, blocking is performed on them, using the method

private void GetBucketAndLockNo(int hashcode, out int bucketNo, out int lockNo, int bucketCount, int lockCount)

{

bucketNo = (hashcode & 0x7fffffff) % bucketCount;

lockNo = bucketNo % lockCount;

}

At the same time, a regular dictionary could not work with this scheme, because all the baskets use the same entries array, so the baskets have become a regular single linked list: volatile Entry [] m_buckets (the field is declared as volitale to provide non-blocking synchronization in a situation when one thread is trying to perform some operation, and the other at this moment changes the size of the collection).

As a result, the baskets began to look like this:

lockNo is the index in a new array that contains synchronization objects - object [] m_locks . Its use allows different threads to change different baskets at the same time. The size of this collection depends on the ConcurrencyLevel parameterwhich can be set through the constructor. It defines the approximate number of threads that will work simultaneously with the collection (by default, this is the number of processors * 4). The higher this value, the easier it will be to write operations, but it will also become much more expensive operations that require complete blocking of the entire collection ( Resize, ToArray, Count, IsEmpty, Keys, Values, CopyTo, Clear ). Also, this parameter determines how many elements of the collection fall on one lock (as the ratio of the number of baskets to the size of this collection) and when there are more elements than necessary, the collection expands, because otherwise the search for the element does not require O (1), but O (n) by traversing linked lists. To slightly reduce the number of initial extensions of the collection, the initial dictionary size is no longer 3, but 31.

All operations took the form:

void ChangeBacket(TKey key)

{

while (true)

{

Node[] buckets = m_buckets;

int bucketNo, lockNo;

GetBucketAndLockNo(m_comparer.GetHashCode(key), out bucketNo, out lockNo, buckets.Length);

lock (m_locks[lockNo])

{

if (buckets != m_buckets)

{

// Race condition. Пока мы ждали блокировки, другой поток расширил коллекцию.

continue;

}

Node node = m_buckets[bucketNo];

// Вносим изменения в нод.

}

}

}

When adding a new element, you have to go around the entire linked list simply to determine if there is already such a key and if not, add it. Similarly, when deleting - first you need to find the node to be deleted.

However, for some operations, locking is not necessary in principle - these are TryGetValue, GetEnumerator, ContainsKey and indexing . Why? Because all changes in the size of the baskets are visible due to the fact that the field is volatile, any additions or changes to elements occur by creating a new element and replacing it with the old one, and deleting occurs simply by replacing the pointer with the next node.

Other

1) Unlike a regular Dictionary, calling the Clear method resets the collection size to the default value.

2) GetEnumerator - can return old values if changes were made by another thread after a method call and how the iterator passed this element. In the article mentioned at the beginning, it was noted that

better to use dictionary.Select (x => x.Value) .ToArray () than dictionary.Values.ToArray ()And this is not entirely true - instead of "better" there should be "faster" - due to the absence of locks, but we must take into account that these approaches can return different data.

Thanks for attention.

The article used:

1. The .NET Dictionary

2. Inside the Concurrent Collections: ConcurrentDictionary

3. Concurrent Collections

4. .NET Reflector