Rescue clouds

I don’t know about others, but I personally am wary of fashionable words and technologies such as virtualization, clouds, and Big Data. They worry me because people, without looking into their essence and applicability, start using them blindly, needed or not, because “everyone else does it!”, and end up with solutions that create more problems than they solve (for example, deciding that a few gigabytes is big data and deploying Hadoop instead of adding memory and using Excel). That is why I dig into fashionable technologies especially carefully, trying to understand their specifics and where they actually apply, so that I do not fall for the “hammer syndrome” myself. In this article I want to share some thoughts about cloud storage, especially since we got one of our own at Acronis several years ago.

Where does childhood go?

One day my brother, like any normal person, was about to go on vacation with his family. On the eve of his departure he showed up at our parents’ house with an external hard drive and said: “Let it stay here for a while.” Asked “What is it, and why?” (what if it held missile guidance codes or unpublished WikiLeaks materials), he replied that it was one of several backup copies of his children’s photos, and that he would not want to lose the pictures if his empty apartment burned down or was robbed. After all, money and equipment can be earned back. Lost pictures of children cannot be restored in any way, because of the vile second law of thermodynamics: time in our world flows away irreversibly. That is the main function of backup: to stop time from leaking away. That is the value of a backup: frozen time. But here an interesting point arises: being valuable, a backup itself needs a backup! This is the backup replication scenario. And as the saying goes, you should not put all your eggs in one basket, so backups must be spread out in space. You can take one to your parents, or you can send it to the cloud. My brother showed me the first example of offsite backup, and I have remembered it ever since.

Flesh from flesh, backup from backup

So, if we truly cherish our data, we need backup copies of its backups. However, as the number of copies grows, we run into the same problem that the inveterate copy-paste coder runs into: keeping the copies consistent. For example, a Western Digital external drive with my photo backup once died on me (since then I have fiercely distrusted WD). Ever since, I have been copying pictures to two disks, but that adds the chore of keeping the two copies in sync. Plugging and unplugging USB drives is not what I would like to do for the rest of my life. It would be cool if someone backed up my backups for me.

In Acronis Backup & Recovery, the product itself does this: it can replicate the backup chains it creates to additional storage. This is how, for example, the D2D2T (disk to disk to tape) and D2D2C (disk to disk to cloud) scenarios are handled.

In Acronis True Image, backup redundancy is achieved by backing up to the cloud. In the Acronis cloud, two fellows are already busy with replication, Reed and Solomon (that is, Reed-Solomon coding): they spread the data across several storage nodes, so that nothing is lost if one node fails. The implementation of the cloud will be described in more detail in another post.
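To illustrate how spreading data across nodes lets a failed node be rebuilt, here is a minimal sketch. It deliberately uses a single XOR parity block, which is a much simpler scheme than Reed-Solomon coding and survives the loss of only one block; it is not how the Acronis cloud is implemented. The function names `encode` and `recover` are made up for this example.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

def encode(data_blocks):
    """Return the data blocks plus one parity block (one block per node)."""
    return list(data_blocks) + [xor_blocks(data_blocks)]

def recover(stored, lost_index):
    """Rebuild the block at lost_index from all surviving blocks."""
    survivors = [b for i, b in enumerate(stored) if i != lost_index]
    return xor_blocks(survivors)

# Three data nodes and one parity node; node 1 fails and is rebuilt.
nodes = encode([b"phot", b"os_o", b"f_ki"])
rebuilt = recover(nodes, 1)
```

Reed-Solomon coding generalizes this idea with several parity blocks, so that more than one node can fail at the same time.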

Copy to the cloud

So, backing up to the cloud is the easiest solution for those who need redundancy. Let the data center take care of multiplying the data, and our task becomes minimal: deliver the data to the data center. But, as it turns out, this task in itself is not so trivial. The following (often unexpected) factors seriously affect how we can deliver data to the cloud:

- Geography

- Legislation

- Volume of data to be copied

- Frequency of data changes

- Channel width

- Computer uptime without rebooting

- Importance of data consistency

Geography and legislation

Given the global availability of the cloud, moving data into it raises a question: does the state object to you doing this? For example, if the data center is located in Syria, US users will not feel as sure of their data’s safety as they would like. In the Old World, the rules for transferring personal data are rooted in Ancient Rome, so carrying tapes under guard is often the only legal way to move data from bank to bank, not to mention storing it who knows where.

Technical factors

While the previous factors mostly affect the business logic of cloud backup, by allowing or forbidding users to select certain data centers, the following five factors directly affect the technologies involved. Each factor creates its own bottlenecks, and one technology or another is designed to fight them; we will look at those technologies after a brief review of the factors.

Volume of data to be copied

The larger the volume, the harder it is to deliver anywhere. The copy itself takes longer, which amplifies the effects of how often the data changes, of data consistency, and of how long the computer runs without rebooting. So, for freedom of maneuver, one should try to get by with small volumes. If, of course, that is possible.

Frequency of data changes

If data did not change, it would not particularly need backups (provided we count the destruction of data as one form of change). Depending on how often the data changes, another question arises: does the user need to track every change, or can some changes be ignored? For example, when editing a DOC file, the user may want to track every saved version of the document (a kind of permanent high-level undo stack), while few people care about tracking the modification of each block of a database file. The frequency of data changes directly affects how hard it is to keep the data consistent, which is especially painful on busy production systems with an active snapshot, because the snapshot doubles write I/O operations.

Channel width

If the channel is wide (such as a processor bus or a fast local area network), the bottleneck is reading from the disk itself. The optimization is to minimize disk I/O operations, which is achieved by copying the disk in a single pass, or imaging. However, if the bottleneck is the communication channel, disk I/O operations stop playing a key role and other factors come to the fore.

Runtime without reboots

With large amounts of data and a slow channel, backups can take quite a while. One acquaintance who deals with truly big data said that backing up a quarter’s worth of analytic data to tape took a month and a half. When uploading to the cloud, twelve-hour processes are by no means uncommon, and a home user may well want to turn off the computer during that time. So, unless special measures are taken, copying the entire amount of data is doomed never to finish.

Data consistency

When backing up a system and its applications, the consistency of the files that make up that system or application is especially important. Let’s say the copying process lasts an hour. If we simply copy the files one after another, the first file will be captured in its state at one moment and the last file in its state an hour later. If the last file managed to change during that hour (which is quite normal for loaded systems), the backup will contain a state that never existed in nature and is therefore not guaranteed to work. For example, the registry may contain links to files that no longer exist, along with other horrors of an out-of-sync system. However, there are backup scenarios in which data consistency is not important. For instance,

Cloud Scenarios

Having considered the factors that affect the technologies and scenarios used, let us see how they combine and what that leads to.

Synchronization

If data consistency is not important, the most convenient way to protect the data is synchronization. The high-level synchronization scheme is as follows:

- An agent is installed on all nodes to be synchronized.

- The agent is assigned a certain area for tracking (folder, set of folders, whole volume)

- The agent monitors changes and immediately uploads them to the cloud if it is available, or postpones the upload until a connection appears

- The agent subscribes to events in the cloud and replays them locally

- File versioning, by means of backup, happens in the cloud itself.
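The agent side of this scheme can be sketched roughly as follows. This is a simplified illustration, not the Acronis agent: it polls a folder instead of subscribing to file system events, it omits replaying cloud events locally, and the names `Agent`, `snapshot`, `diff`, and the `send` callback are all hypothetical.

```python
import hashlib
from pathlib import Path

def snapshot(root):
    """Map each file under root (by relative path) to a SHA-256 of its content."""
    root = Path(root)
    return {
        str(p.relative_to(root)): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*")) if p.is_file()
    }

def diff(old, new):
    """Return the events that turn state `old` into state `new`."""
    events = [("upload", path) for path, digest in new.items()
              if old.get(path) != digest]
    events += [("delete", path) for path in old if path not in new]
    return events

class Agent:
    def __init__(self, root, send):
        self.root = root      # tracked area (a single folder in this sketch)
        self.send = send      # send(event) -> True if the cloud accepted it
        self.known = {}       # last state the agent has seen
        self.pending = []     # events postponed until a connection appears

    def poll(self):
        """Detect changes, then push as many queued events as the cloud accepts."""
        current = snapshot(self.root)
        self.pending += diff(self.known, current)
        self.known = current
        while self.pending and self.send(self.pending[0]):
            self.pending.pop(0)
```

Calling `poll()` while the cloud is unreachable only grows the `pending` queue; the next successful call drains it in order, which mirrors the "postpone until a connection" step.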

Synchronization suits most home users, since they deal mainly with independent data: documents and photos. On top of that, these files are not large, so they manage to upload to the cloud, or download from it, within a single user session. That is why, in the consumer segment, the market for protecting individual files is gradually giving way to synchronization.

System backup

Users who have spent more than an hour setting up their system and installing their favorite applications understand that time is money. Even if all those applications can, in principle, be reinstalled from distribution disks, the time spent on configuration cannot be recovered. Hence the need to protect the system, that is, to back up your own efforts. From this angle, configuring a system is like writing a document, only much more complex and much larger. This scenario is especially pronounced in the business segment, where recovery time directly affects financial losses. So we arrive at the disaster recovery scenario: the ability to quickly get a system image in a healthy state. In this scenario data consistency plays an important role, and the volumes are larger than in the case of synchronization. If the channel were wide, as with backup to a local disk, taking a sector-by-sector image from a snapshot would be the simplest and cheapest solution. However, the channel to the cloud is neither wide nor reliable.

With such a channel, uploading hundreds of gigabytes takes hours, or even days. Large volumes can be fought with incremental copies, but there is no escaping the large initial volume. Corporate clients may still put up with such times, but a home user will turn off the computer on the very first day. Even patient corporate clients will not be particularly pleased to keep an active snapshot (oh my God, someone please give Russian a word for snapshot!) on a system for several days, since it doubles I/O operations. There are two solutions to this problem:

- Initial seeding (initial upload)

- Resume

Initial seeding

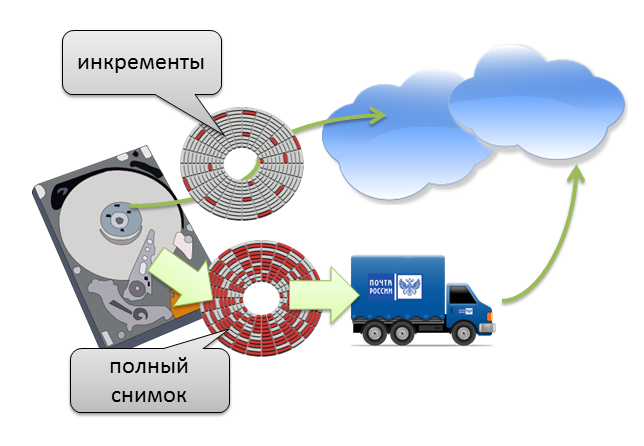

The essence of this solution is to transfer the initial volume of data to the data center not over a slow and unreliable HTTP channel, but over a channel that is fast (for large volumes) and reliable, such as real physical mail (reliable as long as it is not Russian Post). That is, the user copies the backup to a real disk and sends it to the data center with a courier service such as DHL. There the disk is unpacked, and after that the user incrementally copies small changes in the data to the cloud over the usual WAN. That a physical channel can be quite fast is confirmed by the well-known joke about the crazy bandwidth you get by loading a KamAZ truck with hard drives and accelerating it to 60 km/h. By the way, when my brother brought the disk to our parents’ home, he was essentially doing this initial seeding. Since sending and tracking disks is rather labor-intensive, and there are many home users (Acronis has more than five million), this solution did not suit Acronis in the consumer segment for purely organizational reasons. In the corporate segment it fit, and it has been applied there successfully.
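A rough back-of-the-envelope comparison shows why shipping a disk can win. The figures below (a 500 GB backup, a 10 Mbit/s uplink, a 48-hour courier delivery) are assumptions chosen for illustration, not numbers from Acronis, and the function names are made up for this sketch.

```python
def transfer_hours(size_gb, mbit_per_s):
    """Hours needed to push size_gb gigabytes through a link of mbit_per_s."""
    bits = size_gb * 8e9                      # 1 GB taken as 10**9 bytes
    return bits / (mbit_per_s * 1e6) / 3600

def courier_mbit_per_s(size_gb, delivery_hours):
    """Effective bandwidth of shipping size_gb of data in delivery_hours."""
    return size_gb * 8e9 / (delivery_hours * 3600) / 1e6

# Hypothetical numbers: 500 GB backup, 10 Mbit/s uplink, 48-hour courier.
print(round(transfer_hours(500, 10), 1))      # prints 111.1 (hours of upload)
print(round(courier_mbit_per_s(500, 48), 1))  # prints 23.1 (Mbit/s by courier)
```

Under these assumptions the upload takes more than four and a half days of uninterrupted connection, while the courier behaves like a link more than twice as fast, and its effective bandwidth grows with every extra disk in the box.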

Resume

Resume is the ability to interrupt a backup halfway through and continue later without serious additional overhead. This is where sector-by-sector copying fails. After all, a half-uploaded disk is almost the same as being half pregnant: the disk is either uploaded completely or not uploaded at all. The snapshots used to get a consistent state of the copied disk do not survive a computer restart, so we need a mechanism that copies data at a finer granularity without losing the ability to restore the machine’s image to bare metal.

Hybrid Image Capture

In True Image 2014, for the first time at Acronis (and in the world!), hybrid imaging was implemented. The algorithm is as follows:

- A snapshot of the file system is created

- The imaging technology copies an empty volume containing only the basic boot information (this volume does not exceed 200 KB)

- Files from the image are incrementally copied to the cloud.

If the connection is interrupted in the middle, the backup is considered incomplete, but the files already uploaded to the cloud are available for download and recovery. On the next resume, files that have not changed (their hashes tell us so) are not uploaded again and are taken into account when building the incremental backup. Resume achieved!
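The hash-based resume described above can be sketched as follows. This is a toy model under stated assumptions, not the True Image implementation: the cloud is a plain dictionary, an interrupted connection is imitated by a hypothetical `budget` of uploads per run, and the names `backup` and `digest` are invented for the example.

```python
import hashlib

def digest(data):
    """Content hash used to decide whether a file has changed."""
    return hashlib.sha256(data).hexdigest()

def backup(files, cloud, budget):
    """Upload changed files into `cloud` (path -> (hash, data)).

    `files` maps path -> bytes. `budget` caps the uploads in one run to
    imitate a dropped connection. Returns True if the backup is complete.
    """
    uploaded = 0
    for path, data in sorted(files.items()):
        h = digest(data)
        if path in cloud and cloud[path][0] == h:
            continue              # already in the cloud and unchanged: skip
        if uploaded == budget:
            return False          # connection lost: backup is incomplete
        cloud[path] = (h, data)   # this file is now individually restorable
        uploaded += 1
    return True

files = {"a.doc": b"report", "b.jpg": b"photo", "c.db": b"records"}
cloud = {}
first = backup(files, cloud, budget=2)    # interrupted after two files
second = backup(files, cloud, budget=2)   # resumes, uploads only the rest
```

The second run skips the two files whose hashes already match and spends its budget only on what is missing, so an interruption costs no repeated uploads.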

By the way, because we have an image of the volume with the boot information, it is still possible to restore a backup to bare metal from boot media, in addition to restoring individual files. Acronis in its element!

Russia and the cloud

Given all the above, the question arises: are these goodies available to Russian home users? For those who have read the marketing materials, the answer is no. Let’s understand why, before we look for ways to try it in Russia anyway. The fact is that today our cloud storage services are deployed in the USA (St. Louis and Boston) and in Europe (Strasbourg), and we do not yet have a data center in Russia.

Deliberately copying Russian users’ data outside the Russian Federation runs into legal acts and restrictions, so before offering this option our newly emerging cyber laws need to be studied thoroughly. One way or another, rumor has it that nothing illegal has been found in our legislation, and that in one of the next updates the European cloud will also become available to Russian home users. And then, if there are a lot of users, perhaps their data will be migrated to the homeland!

As for home users of True Image 2014 who still want to try the cloud while in Russia: there are no technical restrictions, so they can set some other European country (for example, Poland) in their online account and use the cloud. Corporate users of Acronis Backup & Recovery 11.5 do not need any such contortions: everything works out of the box there.

Conclusion

- The cloud is the primary storage for offsite backup

- The cloud itself provides redundancy

- The cloud is reliable but not always readily accessible storage, which is normal for backup storage

- Delivering data to the cloud is a difficult task; depending on the needs and the scenario, it can be solved using one of the following methods:

- Synchronization

- Initial seeding

- Hybrid Image Capture