In simple words about the Fourier transform

I believe that, in general terms, they know about the existence of such a wonderful mathematical tool as the Fourier transform. However, in universities, for some reason, it is taught so poorly that they understand how this transformation works and how relatively few people should use it correctly. Meanwhile, the mathematics of this transformation is surprisingly beautiful, simple and elegant. I invite everyone to learn a little more about the Fourier transform and the related topic of how analog signals can be effectively converted for digital processing to digital.

(c) xkcd

(c) xkcd

Without using complex formulas and matlab, I will try to answer the following questions:

I will proceed from the assumption that the reader understands what the integral is , the complex number (as well as its module and argument ), the convolution of functions , plus at least “on the fingers” imagine what the Dirac delta function is . Do not know - it does not matter, read the above links. By “product of functions” in this text I will everywhere mean “pointwise multiplication”.

We must probably start with the fact that the ordinary Fourier transform is some kind of thing that, as you might guess from the name, converts one function to another, that is, puts it in the correspondence of each function of the real variable x (t) is its spectrum or the Fourier transform of y (w):

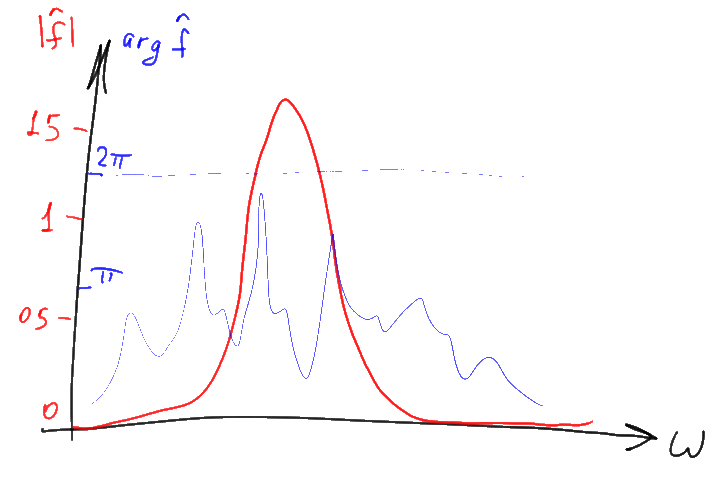

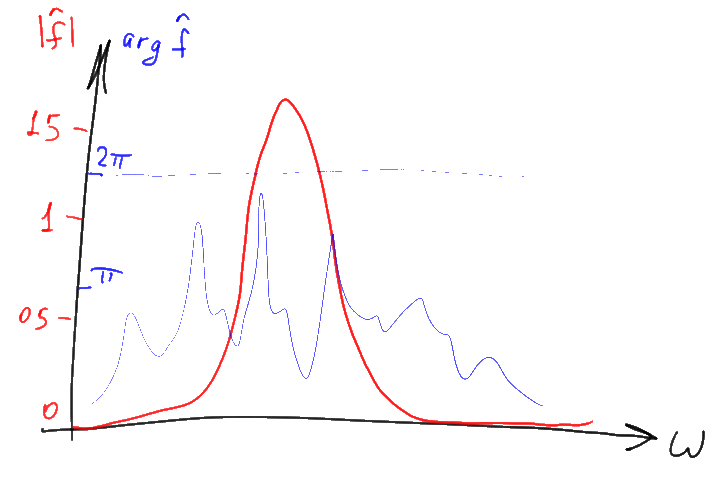

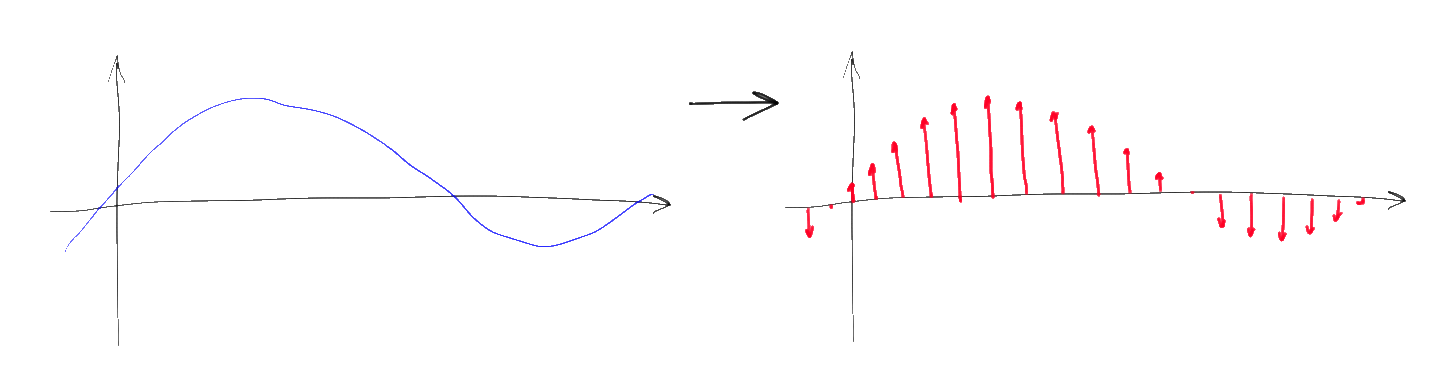

If we give analogies, then an example of a similar transformation in meaning can serve as differentiation, for example, turning a function into its derivative. That is, the Fourier transform is the same operation, in essence, as the derivative, and it is often denoted in a similar way, drawing a triangular “cap” over the function. Only in contrast to the differentiation that can be determined for real numbers, the Fourier transform always “works” with more general complex numbers. Because of this, problems constantly arise with the display of the results of this transformation, since complex numbers are determined not by one, but by two coordinates on the graph operating with real numbers. The most convenient way, as a rule, is to represent complex numbers in the form of a module and an argument and draw them separately as two separate graphs:

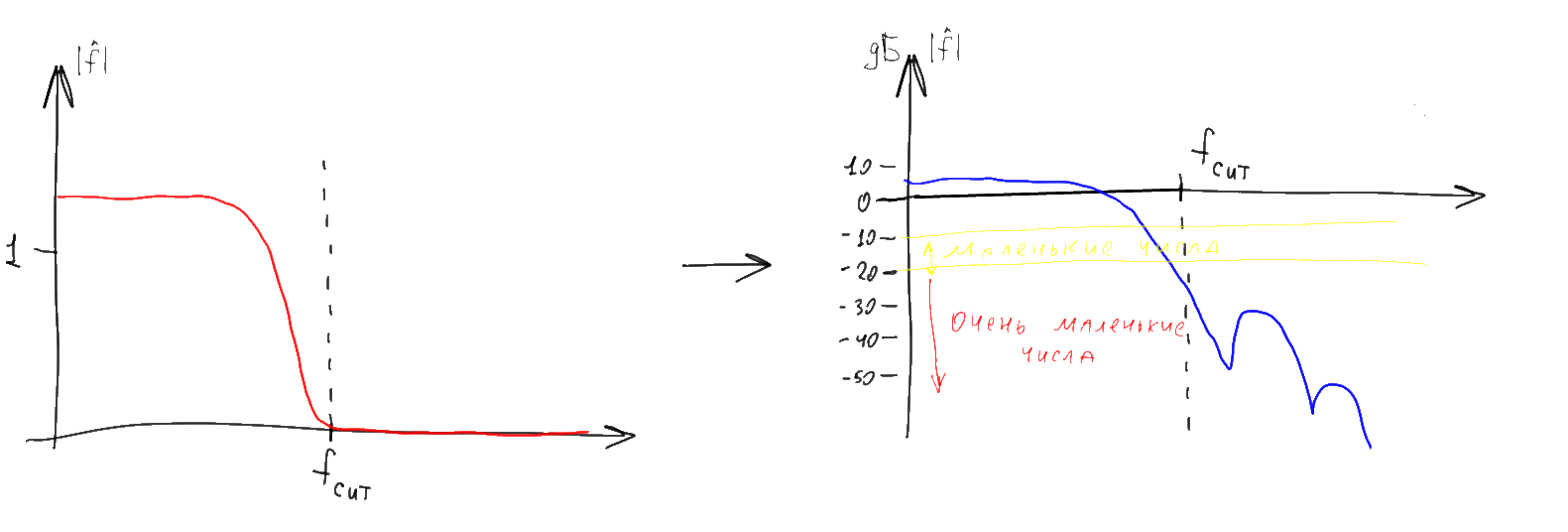

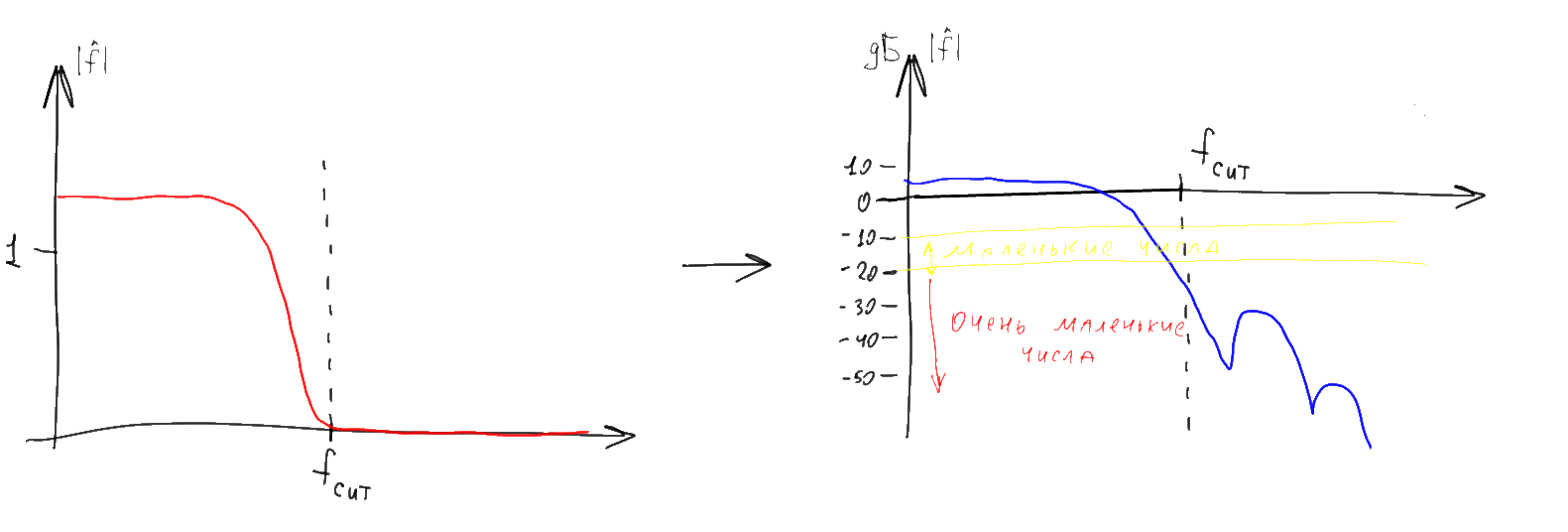

The plot of the argument of the complex value is often called in this case the “phase spectrum”, and the graph of the module is called the “amplitude spectrum”. The amplitude spectrum, as a rule, is of much greater interest, and therefore, the “phase" part of the spectrum is often skipped. In this article, we will also focus on “amplitude” things, but we should not forget about the existence of the missing phase part of the graph. In addition, instead of the usual module of the complex value, its decimal logarithm times 10 is often drawn. The result is a logarithmic graph, the values on which are displayed in decibels (dB).

Please note that not very negative numbers in the logarithmic graph (-20 dB or less) correspond to almost zero numbers on the “normal” graph. Therefore, the long and wide “tails” of various spectra on such graphs, when displayed in “ordinary” coordinates, as a rule practically disappear. The convenience of such a strange at first glance representation arises from the fact that the Fourier images of various functions often need to be multiplied among themselves. With a similar pointwise multiplication of complex-valued Fourier images, their phase spectra add up, and the amplitude ones multiply. The first is easy, and the second is relatively difficult. However, the logarithms of the amplitude when multiplying the amplitudes add up, so the logarithmic amplitude graphs, like phase graphs, can simply be added pointwise. Moreover, in practical problems it is often more convenient to operate not with the "amplitude" of the signal, but with its "power" (squared amplitude). On the logarithmic scale, both graphs (both amplitudes and powers) look identical and differ only in coefficient - all values on the power graph are exactly twice as large as on the amplitudes scale. Accordingly, to plot the power distribution by frequency (in decibels), you can not square anything, but calculate the decimal logarithm and multiply it by 20.

Are you bored? Wait a bit, with the tedious part of the article explaining how to interpret the graphs, we will finish soon :). But before this, one extremely important thing should be understood: although all the above graphs of the spectra were drawn for some limited ranges of values (in particular, positive numbers), all these graphs actually continue in plus and minus infinity. The graphs simply depict some “most meaningful” part of the graph, which is usually mirrored for negative parameter values and is often periodically repeated with some step, if we consider it on a larger scale.

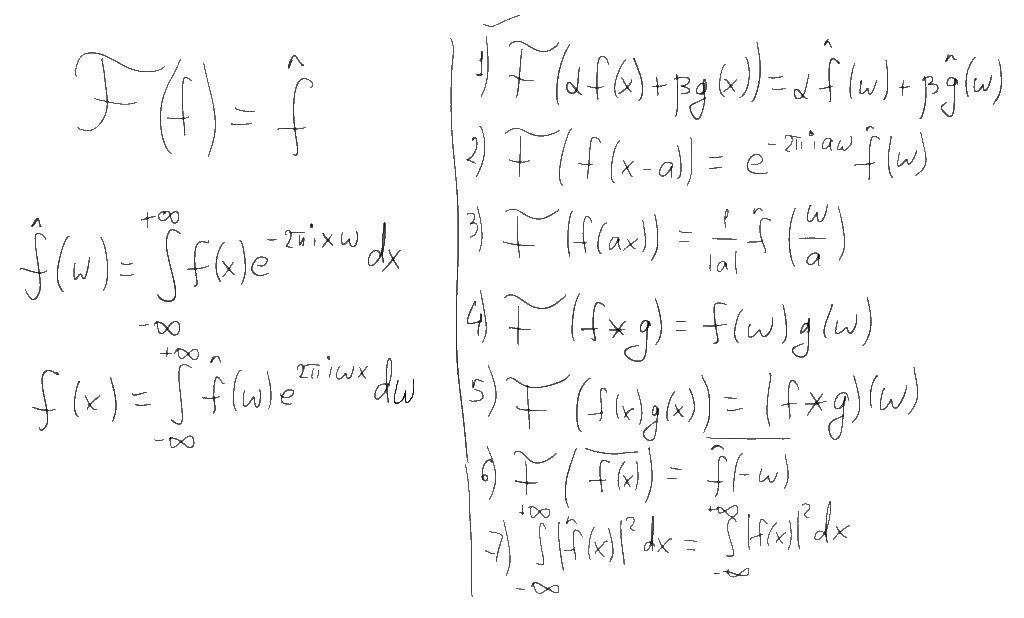

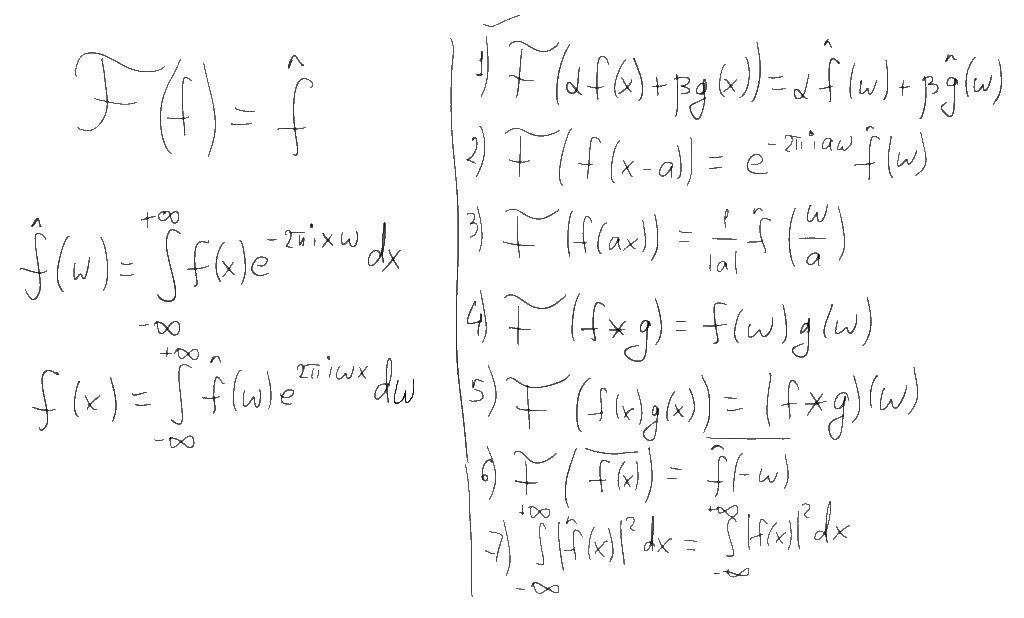

Having decided what is drawn on the graphs, let's get back to the Fourier transform itself and its properties. There are several different ways to determine this transformation, which differ in small details (different normalizations). For example, in our universities, for some reason, they often use the normalization of the Fourier transform that defines the spectrum in terms of the angular frequency (radians per second). I will use a more convenient western formulation defining the spectrum in terms of the usual frequency (hertz). The direct and inverse Fourier transforms in this case are determined by the formulas on the left, and some properties of this transformation that we need are determined by the list of seven items on the right:

The first of these properties is linearity. If we take some linear combination of functions, then the Fourier transform of this combination will be the same linear combination of the Fourier images of these functions. This property allows us to reduce complex functions and their Fourier transforms to simpler ones. For example, the Fourier transform of a sinusoidal function with frequency f and amplitude a is a combination of two delta functions located at points f and -f and with coefficient a / 2:

If we take a function consisting of the sum of the set of sinusoids with different frequencies, then according to the linearity property, the Fourier image of this function will consist of the corresponding set of delta functions. This allows us to give a naive, but visual interpretation of the spectrum according to the principle “if the amplitude a corresponds to the frequency f in the spectrum of the function, then the original function can be represented as the sum of sinusoids, one of which will be a sinusoid with frequency f and amplitude 2a”. Strictly speaking, this interpretation is incorrect, since the delta function and the point on the graph are completely different things, but as we will see later, for discrete Fourier transforms it will not be so far from the truth.

The second property of the Fourier transform is the independence of the amplitude spectrum from the time shift of the signal. If we move the function left or right along the x axis, then only its phase spectrum will change.

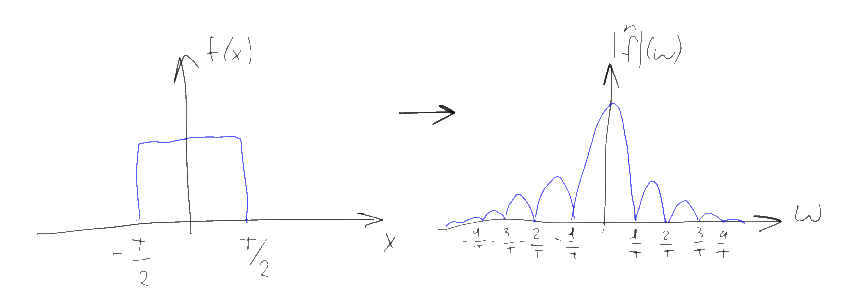

The third property is the extension (compression) of the original function along the time axis (x) proportionally compresses (stretches) its Fourier image on the frequency scale (w). In particular, the spectrum of a signal of finite duration is always infinitely wide, and vice versa, the spectrum of a finite width always corresponds to a signal of unlimited duration.

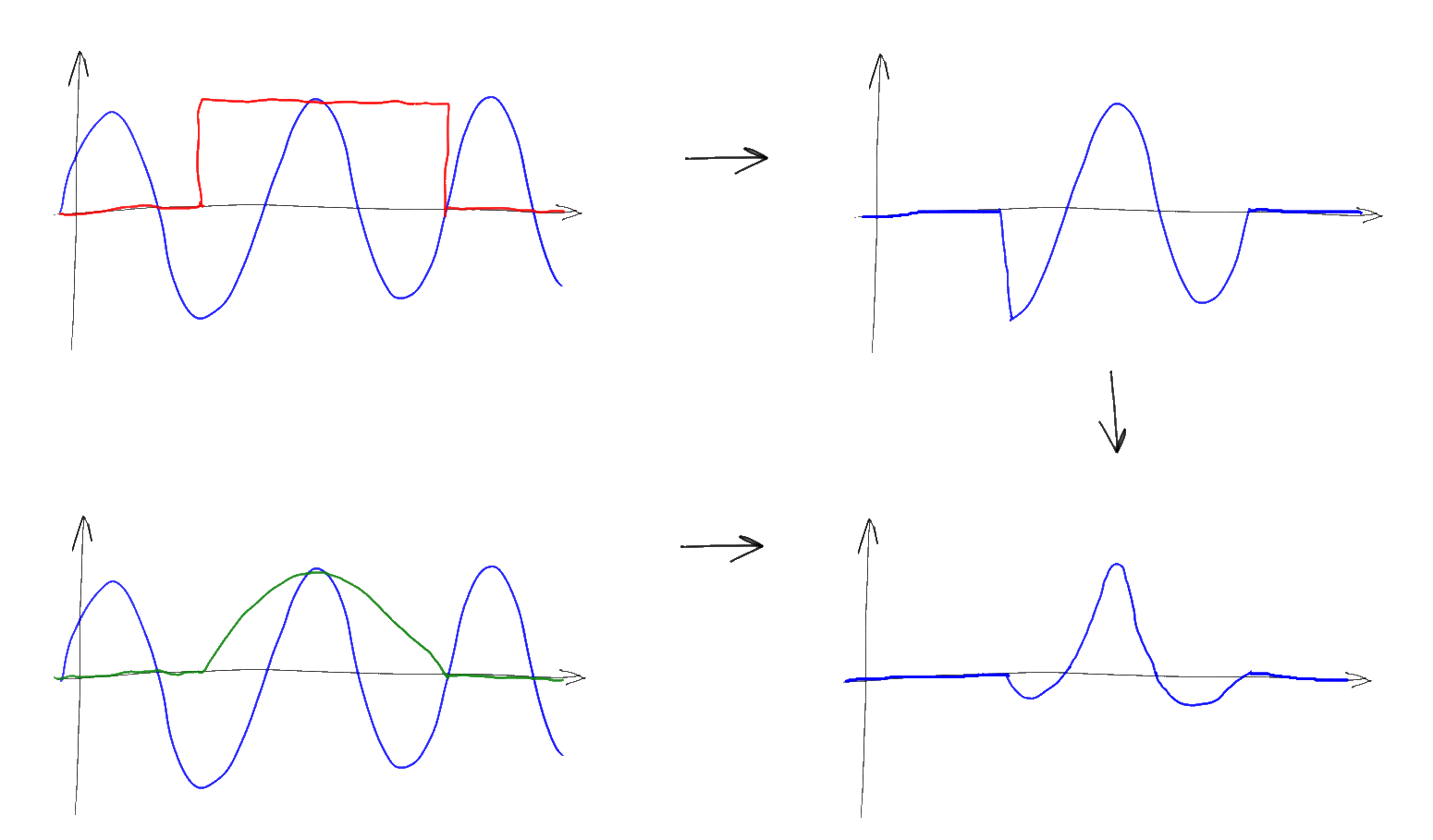

The fourth and fifth properties are perhaps the most useful of all. They allow us to reduce the convolution of functions to the pointwise multiplication of their Fourier images and vice versa - pointwise multiplication of functions to the convolution of their Fourier images. A little further I will show how convenient it is.

The sixth property speaks of the symmetry of Fourier images. In particular, it follows from this property that in the Fourier transform of a real-valued function (that is, any “real” signal), the amplitude spectrum is always an even function, and the phase spectrum (if it is brought to the -pi ... pi range) is odd . For this reason, the negative part of the spectrum is almost never plotted on the spectral graphs - for real-valued signals, it does not provide any new information (but, again, it is not zero).

Finally, the last, seventh property, suggests that the Fourier transform conserves the “energy” of the signal. It is meaningful only for signals of finite duration, the energy of which is finite, and suggests that the spectrum of such signals at infinity quickly approaches zero. Due to this property, as a rule, only the “main” part of the signal, which carries the lion's share of the energy, is usually depicted on the spectral graphs - the rest of the graph simply tends to zero (but, again, is not zero).

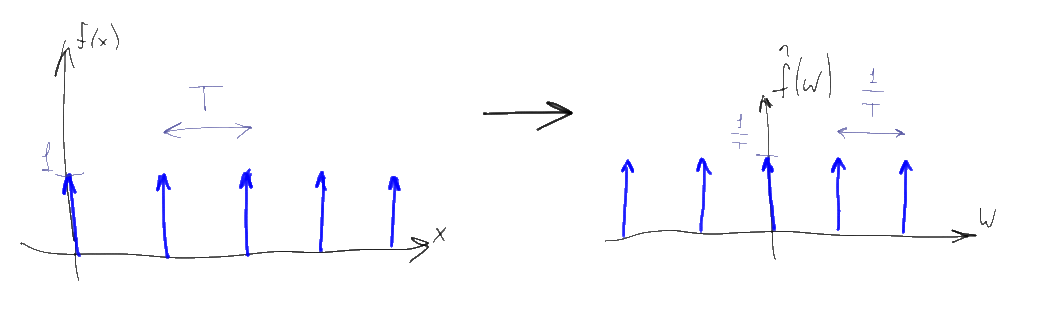

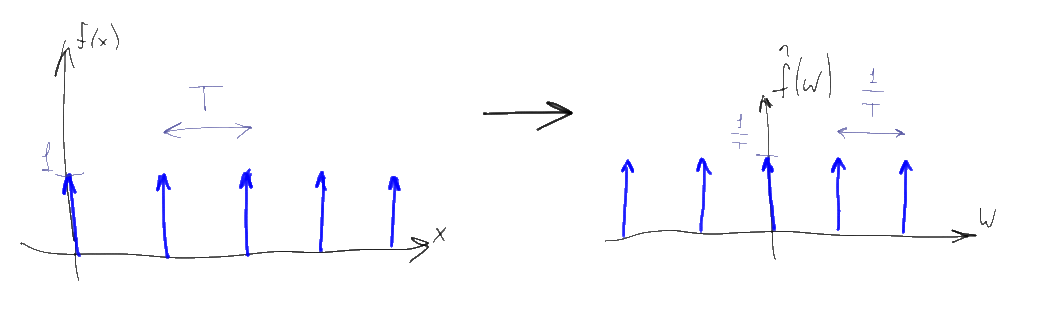

Armed with these 7 properties, let's look at the mathematics of the “digitization” of the signal, which allows you to translate a continuous signal into a sequence of numbers. To do this, we need to take the function known as the “Dirac comb”:

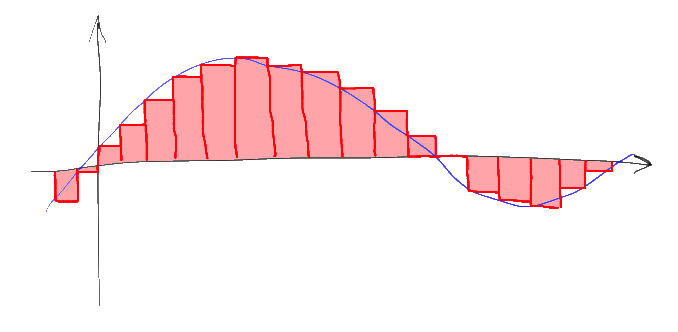

A Dirac comb is simply a periodic sequence of delta functions with a unit coefficient, starting at zero and going with step T. To digitize the signals, T is chosen as small as possible, T << 1. The Fourier image of this function is also a Dirac comb, only with a much larger 1 / T step and a slightly lower coefficient (1 / T). From a mathematical point of view, the discretization of a signal by time is simply a pointwise multiplication of the original signal by the Dirac comb. The value 1 / T is called the sampling frequency:

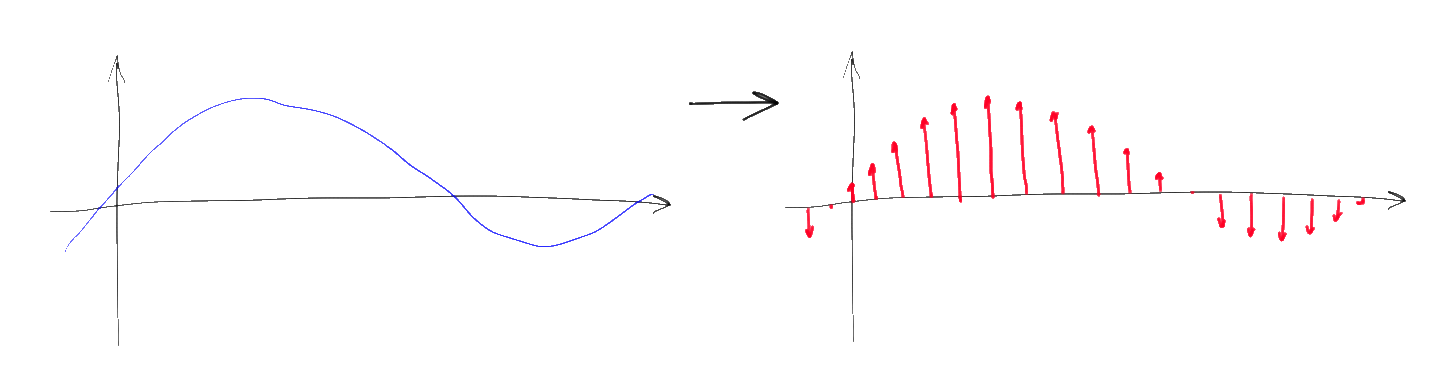

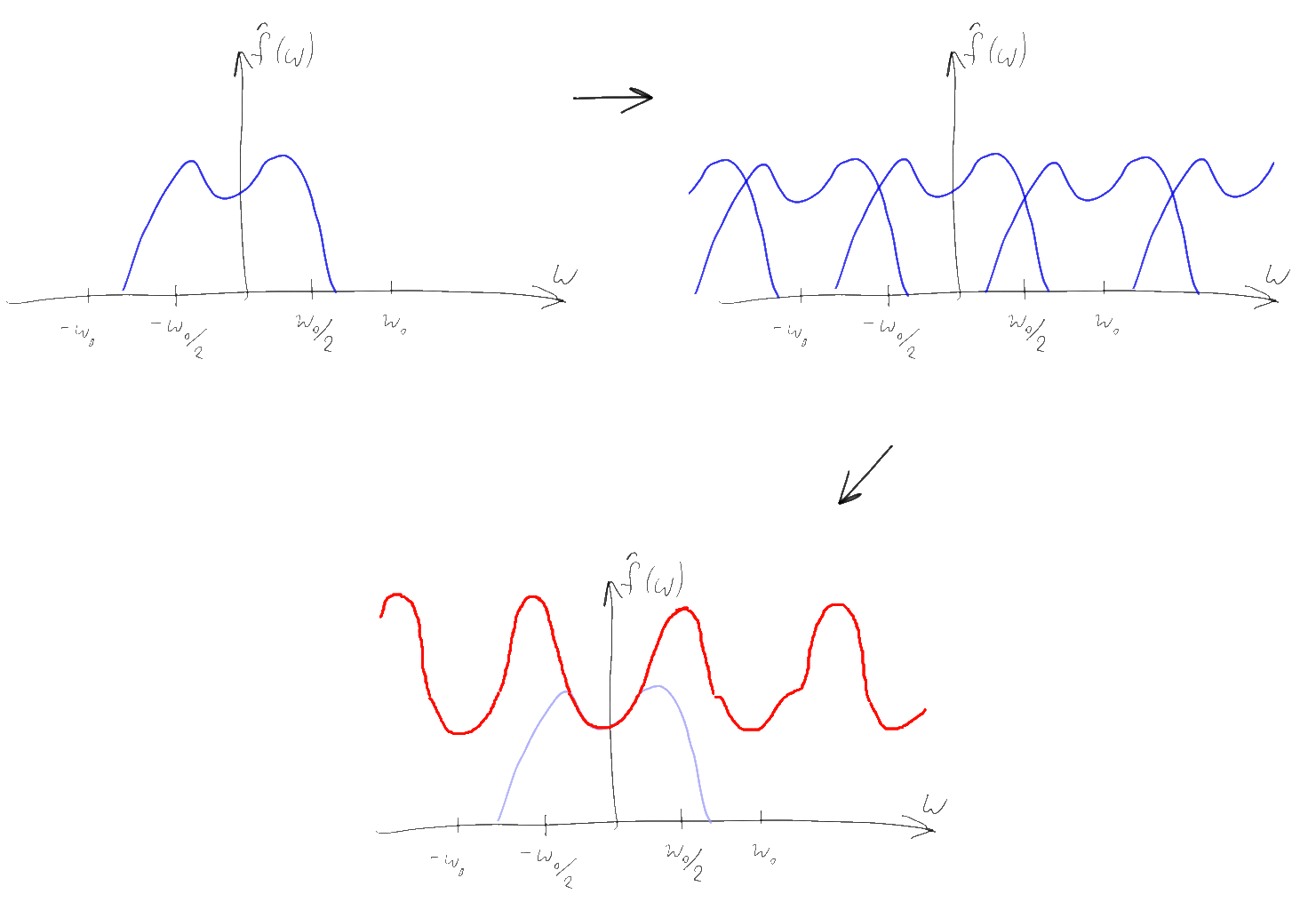

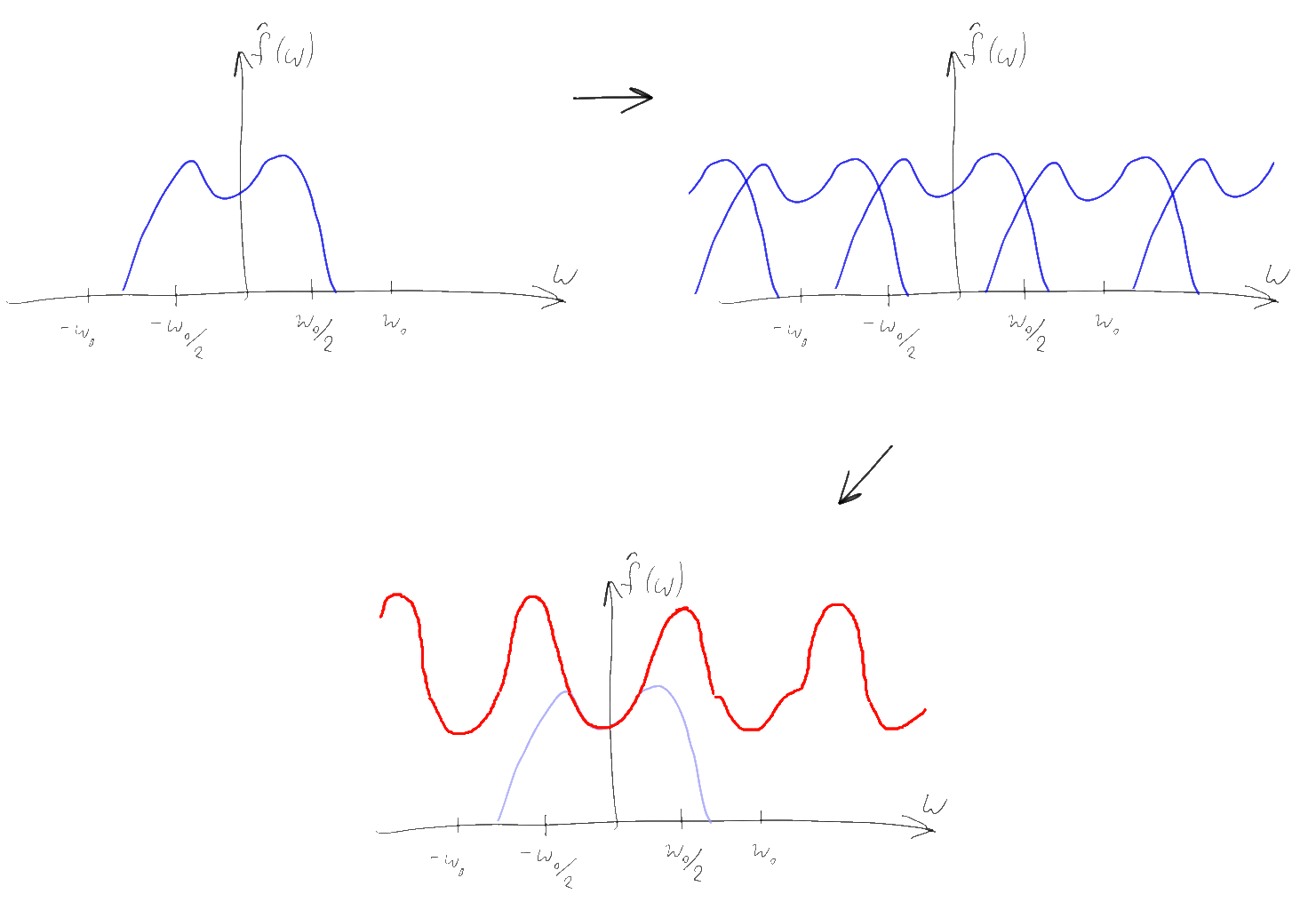

Instead of a continuous function, after such a multiplication, a sequence of delta pulses of a certain height is obtained. Moreover, according to property 5 of the Fourier transform, the spectrum of the resulting discrete signal is a convolution of the original spectrum with the corresponding Dirac comb. It is easy to understand that based on the properties of convolution, the spectrum of the original signal is thus “copied” an infinite number of times along the frequency axis in increments of 1 / T, and then summed.

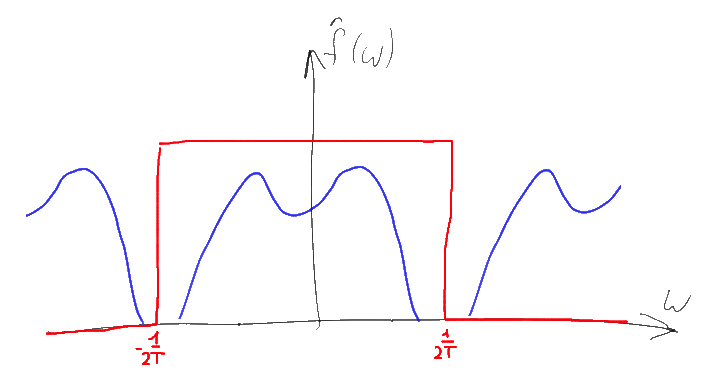

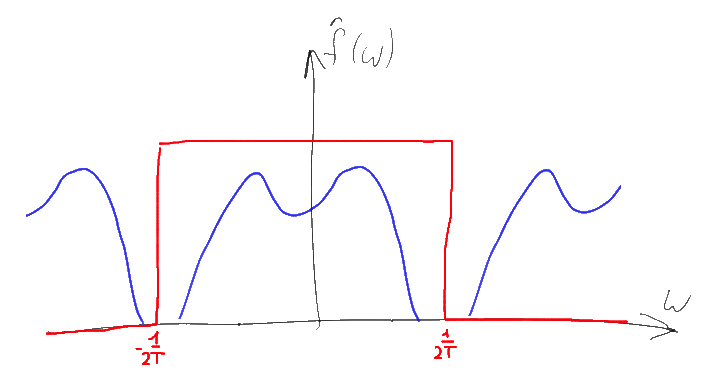

Note that if the original spectrum had a finite width and we used a sufficiently large sampling frequency, then copies of the original spectrum will not overlap, and therefore will not be added together. It is easy to understand that it will be easy to restore the original spectrum from such a “collapsed” spectrum - it will be enough just to take the spectrum component in the region of zero, “cutting off” the excess copies going to infinity. The simplest way to do this is to multiply the spectrum by a rectangular function equal to T in the range -1 / 2T ... 1 / 2T and zero outside this range. A similar Fourier transform corresponds to the function sinc (Tx) and, according to property 4, such multiplication is equivalent to the convolution of the original sequence of delta functions with the function sinc (Tx)

That is, using the Fourier transform, we got a way to easily restore the original signal from a time-discretized one, working under the condition that we use a sampling frequency of at least twice (due to the presence of negative frequencies in the spectrum) exceeding the maximum frequency present in the original signal. This result is widely known and is called the “Kotelnikov / Shannon-Nyquist theorem” . However, as it is now simple (understanding the proof) to notice, this result, contrary to widespread misconception, determines sufficient , but not necessarycondition for restoring the original signal. All we need to do is to ensure that the part of the spectrum that we are interested in does not overlap after sampling the signal and if the signal is sufficiently narrow-band (has a small “width” of the non-zero part of the spectrum), this result can often be achieved even at a sampling frequency much lower than twice the maximum frequency of the signal. This technique is called “undersampling” (sub-sampling, band sampling).) and is quite widely used in the processing of all kinds of radio signals. For example, if we take an FM radio operating in the frequency band from 88 to 108 MHz, then we can use an ADC with a frequency of only 43.5 MHz to digitize it instead of the 216 MHz assumed by Kotelnikov’s theorem. In this case, however, you need a high-quality ADC and a good filter.

I note that “duplication” of high frequencies with frequencies of lower orders (aliasing) is a direct property of signal sampling, irreversibly “spoiling” the result. Therefore, if, in principle, high-order frequencies can be present (that is, almost always), an analog filter is placed in front of the ADC, which “cuts off” everything superfluous directly in the original signal (since it will be too late to do this after sampling). The characteristics of these filters, as analog devices, are imperfect, so some “spoiling” of the signal still occurs, and in practice it follows that the highest frequencies in the spectrum are, as a rule, unreliable. To reduce this problem, the signal is often sampled at an oversample rate,

Another common misconception, by the way, is when the signal at the output of the DAC is drawn “steps”. The “steps” correspond to the convolution of a discretized sequence of signals with a rectangular function of width T and height 1:

The signal spectrum in this transformation is multiplied by the Fourier transform of this rectangular function, and for a similar rectangular function it is again sinc (w), the “extended” the stronger, the less width of the corresponding rectangle. The spectrum of a sampled signal with a similar “DAC” is pointwise multiplied by this spectrum. In this case, unnecessary high frequencies with “extra copies” of the spectrum are not completely cut off, and the upper part of the “useful” part of the spectrum, on the contrary, is weakened.

In practice, of course, no one does. There are many different approaches to the construction of DACs, but even in the weighing type DACs that are closest in meaning, the rectangular pulses in the DACs, on the contrary, are selected as short as possible (approaching the real sequence of delta functions) to avoid unnecessarily suppressing the useful part of the spectrum. The "excess" frequencies in the resulting broadband signal are almost always suppressed by passing the signal through an analog low-pass filter, so that there are no "digital steps" neither "inside" the converter, nor, moreover, at its output.

But back to the Fourier transform. The Fourier transform described above applied to a pre-sampled signal sequence is called the discrete time Fourier transform (DTFT). The spectrum obtained by such a transformation is always 1 / T-periodic; therefore, the DTFT spectrum is completely determined by its values on the interval [0 ... 1 / T), therefore, the DTFT spectrum is often limited to this interval. Moreover, the result of DTFT, despite the fact that it is a spectrum of a sampled signal, is still an “analog” function. In addition, for “ordinary” real-valued signals, the second half of this spectrum, by virtue of property 6, mirrors the left half reflected relative to the Nyquist frequency 1 / 2T.

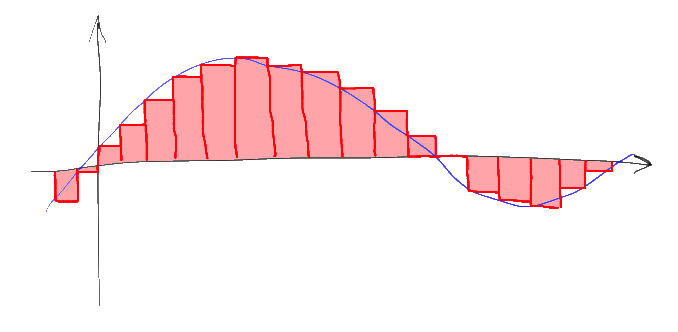

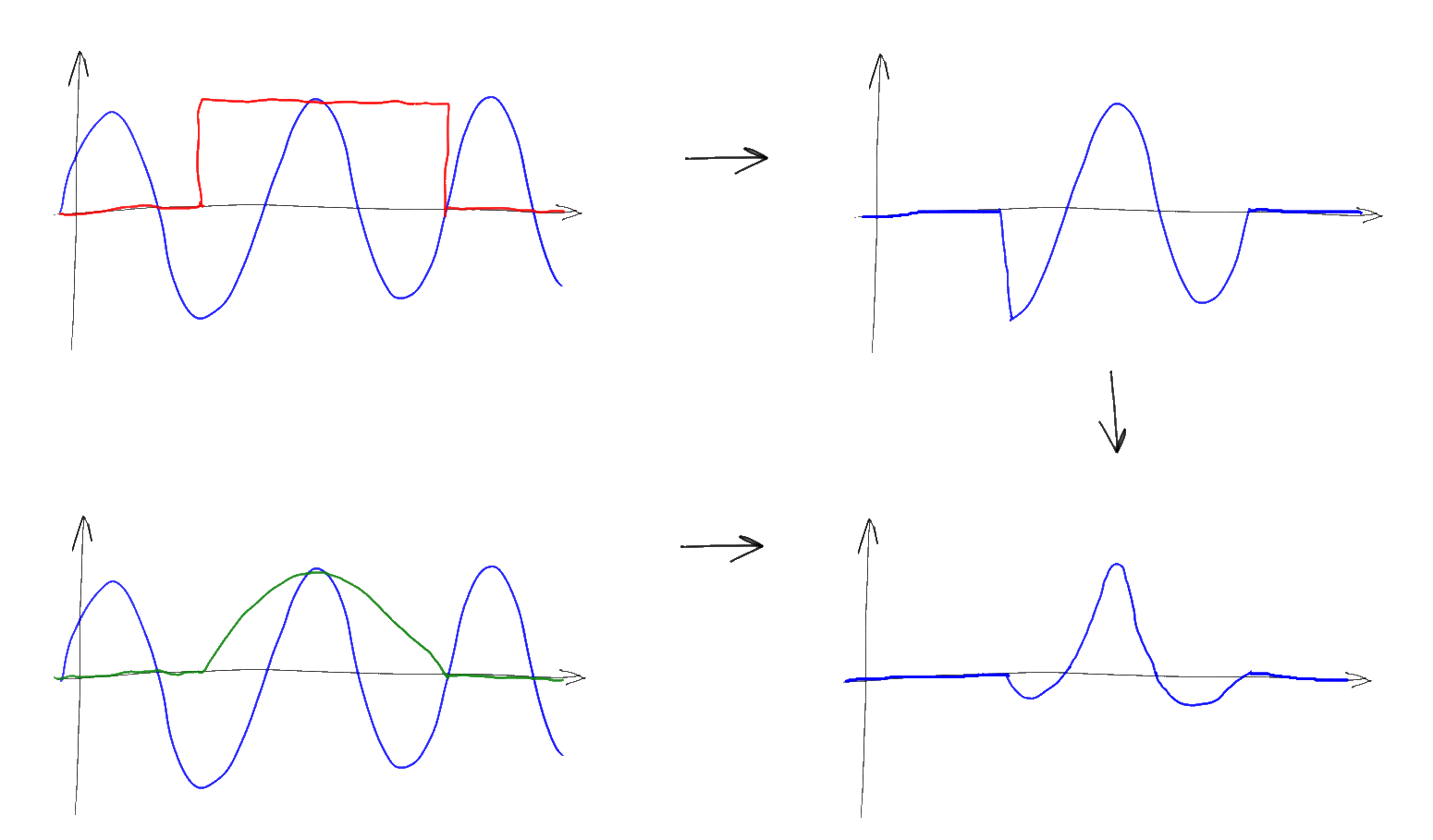

So far, we have assumed that a signal defined from minus to plus infinity arrives at the input of our transformations. However, the real signals available to us always have a finite length - what should I do? To solve this problem in FT and DTFT, the final signal is simply padded with zeros to the left and right to infinity. If the initial signal was initially finite (say, this is a separate impulse) and it fell completely into the Fourier transform, then this approach directly gives the desired result. However, often the “final” signal used for the Fourier transform is actually part of a longer, possibly infinite, signal, such as, for example, a sine wave. In this case, the addition of the final segment by zeros is interpreted as follows: it is believed that the original signal has an infinitely large length, but then it is multiplied by some weighting function - the “window”, which vanishes outside the segment available to us for measurement. In the simplest case, the role of the “window” is played by just a rectangular function, corresponding to the fact that we simply supplement the final signal on the left and on the right with an infinite number of zeros. In more complex ones, the initial sequence is multiplied by weights determined by the “window” function and then, again, supplemented with zeros.

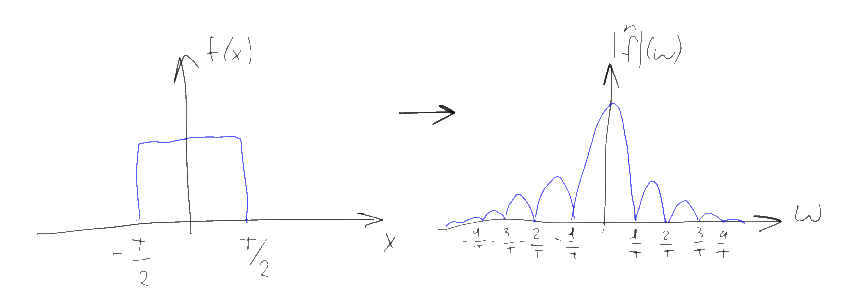

Using property 5 already familiar to us, it is easy to figure out that with such a multiplication, the initial signal is simply collapsed with the spectrum of the window function. For example, if we try to measure the spectrum of a sinusoid (delta function), but limit the measurement interval to a rectangular window, then in the resulting spectrum in place of the delta function we will see the spectrum of the window - i.e. Tsinc (T (xf)):

In this case, T is the length of the interval to which we limited our signal, so the longer the input signal is, the “narrower” the closer to the true delta function the spectrum we observe will be. The final “width” of the main lobe makes it impossible to reliably distinguish between the presence of sinusoids in the source signal that are close to each other in frequency, and the presence of “side lobes” introduces small distortions into far-spaced frequencies, making it difficult to accurately measure the amplitude of individual frequencies, especially if you need to measure the spectrum in regions of small amplitude in the presence of an order of magnitude more powerful components in the spectrum. This effect is called “spectral leakage” and it is impossible to completely defeat it for infinite signals, but the longer the interval at which the signal is measured, the smaller the influence of this leakage. By choosing the window function, one can control the “width” of this leak, either by concentrating it around the main frequency (strongly “blurring” the spectrum, but not interfering with the neighboring frequencies), or by spreading it everywhere (the blurring of the peaks decreases but the “noise” increases and as a result - measurement error of the amplitude of individual frequencies). Note that the selected sampling frequency in the spectral leakage plays almost no role - a short signal length can be sampled at least 10 GHz, but this will only increase the number of measurable frequencies, while the accuracy of determining each individual frequency will still remain low. or smearing it everywhere (the blurring of the peaks decreases but the “noise” grows strongly and, as a result, the error in measuring the amplitude of individual frequencies). Note that the selected sampling frequency in the spectral leakage plays almost no role - a short signal length can be sampled at least 10 GHz, but this will only increase the number of measurable frequencies, while the accuracy of determining each individual frequency will still remain low. or smearing it everywhere (the blurring of the peaks decreases but the “noise” grows strongly and, as a result, the error in measuring the amplitude of individual frequencies). Note that the selected sampling frequency in the spectral leakage plays almost no role - a short signal length can be sampled at least 10 GHz, but this will only increase the number of measurable frequencies, while the accuracy of determining each individual frequency will still remain low.

An interesting special case is the situation in which a signal with a set of discrete frequencies nF is sampled at a frequency mF, where m, n are integers. In this case, the zeros of the “window” and the location of the delta functions in the spectrum are exactly the same, and although the frequencies are still “smeared”, their amplitude at the points mF coincides with the true one — the “noise” is zero. This property allows us to prove an analogue of the Kotelnikov theorem for the discrete Fourier transform, but in practice such signals, unfortunately, do not actually occur.

So, we figured out the “input" - from a continuous function of infinite length, we got a finite number of discrete samples that we can work with and instead got limitations on the width of the spectrum and frequency leakage. However, the “exit” DTFT is still a continuous function, which is difficult for the computer to work with. In practice, this problem is solved very simply - the entire segment [0,1 / T) is divided into k equal parts and DTFT is considered at the points fi = i / kT, where i = 0,1, ... k-1. The resulting design is called the “Discrete Fourier Transform” (DFT).

It is convenient to normalize the last transformation by removing T from it and questions related to the choice of the “window”. This normalized notation is often used as the definition of DFT as a sequence conversion of N complex numbers:

The beauty of the Fourier transform written in this form is that while preserving all the advantages of DTFT, a similar DTF for “smooth” k (for example, powers of two) can be calculated extremely quickly over a time of the order of k log (k). The corresponding algorithms are called the “fast Fourier transform” (FFT), and there are several of them, generally speaking. From a practical point of view, however, they can all be considered as “black boxes”, receiving a sequence of complex numbers at the input and issuing a sequence of complex numbers at the output. Thus, working with a sampled signal of finite length reduces to the fact that this signal is first multiplied by a suitable weighting function, then supplemented with the required number of zeros on the right and passed to the FFT algorithm.

How to interpret the result? In view of the foregoing,

Well, that's all. I hope the Fourier transform and FFT algorithms will now be simple, clear and pleasant to use tools for you.

(c) xkcd

(c) xkcd Without using complex formulas and matlab, I will try to answer the following questions:

- FT, DTF, DTFT - what are the differences and how do completely different seemingly formulas give such conceptually similar results?

- How to correctly interpret the results of the fast Fourier transform (FFT)

- What to do if a signal of 179 samples is given and the FFT requires a sequence of length equal to two to the input

- Why, when trying to get the Fourier spectrum of the sinusoid, instead of the expected single “stick”, a strange squiggle comes out on the graph and what can be done about it

- Why put analog filters in front of the ADC and after the DAC

- Is it possible to digitize an ADC signal with a frequency higher than half the sampling frequency (the school answer is incorrect, the correct answer is possible)

- How to restore the original signal from a digital sequence

I will proceed from the assumption that the reader understands what the integral is , the complex number (as well as its module and argument ), the convolution of functions , plus at least “on the fingers” imagine what the Dirac delta function is . Do not know - it does not matter, read the above links. By “product of functions” in this text I will everywhere mean “pointwise multiplication”.

We must probably start with the fact that the ordinary Fourier transform is some kind of thing that, as you might guess from the name, converts one function to another, that is, puts it in the correspondence of each function of the real variable x (t) is its spectrum or the Fourier transform of y (w):

If we give analogies, then an example of a similar transformation in meaning can serve as differentiation, for example, turning a function into its derivative. That is, the Fourier transform is the same operation, in essence, as the derivative, and it is often denoted in a similar way, drawing a triangular “cap” over the function. Only in contrast to the differentiation that can be determined for real numbers, the Fourier transform always “works” with more general complex numbers. Because of this, problems constantly arise with the display of the results of this transformation, since complex numbers are determined not by one, but by two coordinates on the graph operating with real numbers. The most convenient way, as a rule, is to represent complex numbers in the form of a module and an argument and draw them separately as two separate graphs:

The plot of the argument of the complex value is often called in this case the “phase spectrum”, and the graph of the module is called the “amplitude spectrum”. The amplitude spectrum, as a rule, is of much greater interest, and therefore, the “phase" part of the spectrum is often skipped. In this article, we will also focus on “amplitude” things, but we should not forget about the existence of the missing phase part of the graph. In addition, instead of the usual module of the complex value, its decimal logarithm times 10 is often drawn. The result is a logarithmic graph, the values on which are displayed in decibels (dB).

Please note that not very negative numbers in the logarithmic graph (-20 dB or less) correspond to almost zero numbers on the “normal” graph. Therefore, the long and wide “tails” of various spectra on such graphs, when displayed in “ordinary” coordinates, as a rule practically disappear. The convenience of such a strange at first glance representation arises from the fact that the Fourier images of various functions often need to be multiplied among themselves. With a similar pointwise multiplication of complex-valued Fourier images, their phase spectra add up, and the amplitude ones multiply. The first is easy, and the second is relatively difficult. However, the logarithms of the amplitude when multiplying the amplitudes add up, so the logarithmic amplitude graphs, like phase graphs, can simply be added pointwise. Moreover, in practical problems it is often more convenient to operate not with the "amplitude" of the signal, but with its "power" (squared amplitude). On the logarithmic scale, both graphs (both amplitudes and powers) look identical and differ only in coefficient - all values on the power graph are exactly twice as large as on the amplitudes scale. Accordingly, to plot the power distribution by frequency (in decibels), you can not square anything, but calculate the decimal logarithm and multiply it by 20.

Are you bored? Wait a bit, with the tedious part of the article explaining how to interpret the graphs, we will finish soon :). But before this, one extremely important thing should be understood: although all the above graphs of the spectra were drawn for some limited ranges of values (in particular, positive numbers), all these graphs actually continue in plus and minus infinity. The graphs simply depict some “most meaningful” part of the graph, which is usually mirrored for negative parameter values and is often periodically repeated with some step, if we consider it on a larger scale.

Having decided what is drawn on the graphs, let's get back to the Fourier transform itself and its properties. There are several different ways to determine this transformation, which differ in small details (different normalizations). For example, in our universities, for some reason, they often use the normalization of the Fourier transform that defines the spectrum in terms of the angular frequency (radians per second). I will use a more convenient western formulation defining the spectrum in terms of the usual frequency (hertz). The direct and inverse Fourier transforms in this case are determined by the formulas on the left, and some properties of this transformation that we need are determined by the list of seven items on the right:

The first of these properties is linearity. If we take some linear combination of functions, then the Fourier transform of this combination will be the same linear combination of the Fourier images of these functions. This property allows us to reduce complex functions and their Fourier transforms to simpler ones. For example, the Fourier transform of a sinusoidal function with frequency f and amplitude a is a combination of two delta functions located at points f and -f and with coefficient a / 2:

If we take a function consisting of the sum of the set of sinusoids with different frequencies, then according to the linearity property, the Fourier image of this function will consist of the corresponding set of delta functions. This allows us to give a naive, but visual interpretation of the spectrum according to the principle “if the amplitude a corresponds to the frequency f in the spectrum of the function, then the original function can be represented as the sum of sinusoids, one of which will be a sinusoid with frequency f and amplitude 2a”. Strictly speaking, this interpretation is incorrect, since the delta function and the point on the graph are completely different things, but as we will see later, for discrete Fourier transforms it will not be so far from the truth.

The second property of the Fourier transform is the independence of the amplitude spectrum from the time shift of the signal. If we move the function left or right along the x axis, then only its phase spectrum will change.

The third property is the extension (compression) of the original function along the time axis (x) proportionally compresses (stretches) its Fourier image on the frequency scale (w). In particular, the spectrum of a signal of finite duration is always infinitely wide, and vice versa, the spectrum of a finite width always corresponds to a signal of unlimited duration.

The fourth and fifth properties are perhaps the most useful of all. They allow us to reduce the convolution of functions to the pointwise multiplication of their Fourier images and vice versa - pointwise multiplication of functions to the convolution of their Fourier images. A little further I will show how convenient it is.

The sixth property speaks of the symmetry of Fourier images. In particular, it follows from this property that in the Fourier transform of a real-valued function (that is, any “real” signal), the amplitude spectrum is always an even function, and the phase spectrum (if it is brought to the -pi ... pi range) is odd . For this reason, the negative part of the spectrum is almost never plotted on the spectral graphs - for real-valued signals, it does not provide any new information (but, again, it is not zero).

Finally, the last, seventh property, suggests that the Fourier transform conserves the “energy” of the signal. It is meaningful only for signals of finite duration, the energy of which is finite, and suggests that the spectrum of such signals at infinity quickly approaches zero. Due to this property, as a rule, only the “main” part of the signal, which carries the lion's share of the energy, is usually depicted on the spectral graphs - the rest of the graph simply tends to zero (but, again, is not zero).

Armed with these 7 properties, let's look at the mathematics of the “digitization” of the signal, which allows you to translate a continuous signal into a sequence of numbers. To do this, we need to take the function known as the “Dirac comb”:

A Dirac comb is simply a periodic sequence of delta functions with a unit coefficient, starting at zero and going with step T. To digitize the signals, T is chosen as small as possible, T << 1. The Fourier image of this function is also a Dirac comb, only with a much larger 1 / T step and a slightly lower coefficient (1 / T). From a mathematical point of view, the discretization of a signal by time is simply a pointwise multiplication of the original signal by the Dirac comb. The value 1 / T is called the sampling frequency:

Instead of a continuous function, after such a multiplication, a sequence of delta pulses of a certain height is obtained. Moreover, according to property 5 of the Fourier transform, the spectrum of the resulting discrete signal is a convolution of the original spectrum with the corresponding Dirac comb. It is easy to understand that based on the properties of convolution, the spectrum of the original signal is thus “copied” an infinite number of times along the frequency axis in increments of 1 / T, and then summed.

Note that if the original spectrum had a finite width and we used a sufficiently large sampling frequency, then copies of the original spectrum will not overlap, and therefore will not be added together. It is easy to understand that it will be easy to restore the original spectrum from such a “collapsed” spectrum - it will be enough just to take the spectrum component in the region of zero, “cutting off” the excess copies going to infinity. The simplest way to do this is to multiply the spectrum by a rectangular function equal to T in the range -1 / 2T ... 1 / 2T and zero outside this range. A similar Fourier transform corresponds to the function sinc (Tx) and, according to property 4, such multiplication is equivalent to the convolution of the original sequence of delta functions with the function sinc (Tx)

That is, using the Fourier transform, we got a way to easily restore the original signal from a time-discretized one, working under the condition that we use a sampling frequency of at least twice (due to the presence of negative frequencies in the spectrum) exceeding the maximum frequency present in the original signal. This result is widely known and is called the “Kotelnikov / Shannon-Nyquist theorem” . However, as it is now simple (understanding the proof) to notice, this result, contrary to widespread misconception, determines sufficient , but not necessarycondition for restoring the original signal. All we need to do is to ensure that the part of the spectrum that we are interested in does not overlap after sampling the signal and if the signal is sufficiently narrow-band (has a small “width” of the non-zero part of the spectrum), this result can often be achieved even at a sampling frequency much lower than twice the maximum frequency of the signal. This technique is called “undersampling” (sub-sampling, band sampling).) and is quite widely used in the processing of all kinds of radio signals. For example, if we take an FM radio operating in the frequency band from 88 to 108 MHz, then we can use an ADC with a frequency of only 43.5 MHz to digitize it instead of the 216 MHz assumed by Kotelnikov’s theorem. In this case, however, you need a high-quality ADC and a good filter.

I note that “duplication” of high frequencies with frequencies of lower orders (aliasing) is a direct property of signal sampling, irreversibly “spoiling” the result. Therefore, if, in principle, high-order frequencies can be present (that is, almost always), an analog filter is placed in front of the ADC, which “cuts off” everything superfluous directly in the original signal (since it will be too late to do this after sampling). The characteristics of these filters, as analog devices, are imperfect, so some “spoiling” of the signal still occurs, and in practice it follows that the highest frequencies in the spectrum are, as a rule, unreliable. To reduce this problem, the signal is often sampled at an oversample rate,

Another common misconception, by the way, is when the signal at the output of the DAC is drawn “steps”. The “steps” correspond to the convolution of a discretized sequence of signals with a rectangular function of width T and height 1:

The signal spectrum in this transformation is multiplied by the Fourier transform of this rectangular function, and for a similar rectangular function it is again sinc (w), the “extended” the stronger, the less width of the corresponding rectangle. The spectrum of a sampled signal with a similar “DAC” is pointwise multiplied by this spectrum. In this case, unnecessary high frequencies with “extra copies” of the spectrum are not completely cut off, and the upper part of the “useful” part of the spectrum, on the contrary, is weakened.

In practice, of course, no one does. There are many different approaches to the construction of DACs, but even in the weighing type DACs that are closest in meaning, the rectangular pulses in the DACs, on the contrary, are selected as short as possible (approaching the real sequence of delta functions) to avoid unnecessarily suppressing the useful part of the spectrum. The "excess" frequencies in the resulting broadband signal are almost always suppressed by passing the signal through an analog low-pass filter, so that there are no "digital steps" neither "inside" the converter, nor, moreover, at its output.

But back to the Fourier transform. The Fourier transform described above applied to a pre-sampled signal sequence is called the discrete time Fourier transform (DTFT). The spectrum obtained by such a transformation is always 1 / T-periodic; therefore, the DTFT spectrum is completely determined by its values on the interval [0 ... 1 / T), therefore, the DTFT spectrum is often limited to this interval. Moreover, the result of DTFT, despite the fact that it is a spectrum of a sampled signal, is still an “analog” function. In addition, for “ordinary” real-valued signals, the second half of this spectrum, by virtue of property 6, mirrors the left half reflected relative to the Nyquist frequency 1 / 2T.

So far, we have assumed that a signal defined from minus to plus infinity arrives at the input of our transformations. However, the real signals available to us always have a finite length - what should I do? To solve this problem in FT and DTFT, the final signal is simply padded with zeros to the left and right to infinity. If the initial signal was initially finite (say, this is a separate impulse) and it fell completely into the Fourier transform, then this approach directly gives the desired result. However, often the “final” signal used for the Fourier transform is actually part of a longer, possibly infinite, signal, such as, for example, a sine wave. In this case, the addition of the final segment by zeros is interpreted as follows: it is believed that the original signal has an infinitely large length, but then it is multiplied by some weighting function - the “window”, which vanishes outside the segment available to us for measurement. In the simplest case, the role of the “window” is played by just a rectangular function, corresponding to the fact that we simply supplement the final signal on the left and on the right with an infinite number of zeros. In more complex ones, the initial sequence is multiplied by weights determined by the “window” function and then, again, supplemented with zeros.

Using property 5 already familiar to us, it is easy to figure out that with such a multiplication, the initial signal is simply collapsed with the spectrum of the window function. For example, if we try to measure the spectrum of a sinusoid (delta function), but limit the measurement interval to a rectangular window, then in the resulting spectrum in place of the delta function we will see the spectrum of the window - i.e. Tsinc (T (xf)):

In this case, T is the length of the interval to which we limited our signal, so the longer the input signal is, the “narrower” the closer to the true delta function the spectrum we observe will be. The final “width” of the main lobe makes it impossible to reliably distinguish between the presence of sinusoids in the source signal that are close to each other in frequency, and the presence of “side lobes” introduces small distortions into far-spaced frequencies, making it difficult to accurately measure the amplitude of individual frequencies, especially if you need to measure the spectrum in regions of small amplitude in the presence of an order of magnitude more powerful components in the spectrum. This effect is called “spectral leakage” and it is impossible to completely defeat it for infinite signals, but the longer the interval at which the signal is measured, the smaller the influence of this leakage. By choosing the window function, one can control the “width” of this leak, either by concentrating it around the main frequency (strongly “blurring” the spectrum, but not interfering with the neighboring frequencies), or by spreading it everywhere (the blurring of the peaks decreases but the “noise” increases and as a result - measurement error of the amplitude of individual frequencies). Note that the selected sampling frequency in the spectral leakage plays almost no role - a short signal length can be sampled at least 10 GHz, but this will only increase the number of measurable frequencies, while the accuracy of determining each individual frequency will still remain low. or smearing it everywhere (the blurring of the peaks decreases but the “noise” grows strongly and, as a result, the error in measuring the amplitude of individual frequencies). Note that the selected sampling frequency in the spectral leakage plays almost no role - a short signal length can be sampled at least 10 GHz, but this will only increase the number of measurable frequencies, while the accuracy of determining each individual frequency will still remain low. or smearing it everywhere (the blurring of the peaks decreases but the “noise” grows strongly and, as a result, the error in measuring the amplitude of individual frequencies). Note that the selected sampling frequency in the spectral leakage plays almost no role - a short signal length can be sampled at least 10 GHz, but this will only increase the number of measurable frequencies, while the accuracy of determining each individual frequency will still remain low.

An interesting special case is the situation in which a signal with a set of discrete frequencies nF is sampled at a frequency mF, where m, n are integers. In this case, the zeros of the “window” and the location of the delta functions in the spectrum are exactly the same, and although the frequencies are still “smeared”, their amplitude at the points mF coincides with the true one — the “noise” is zero. This property allows us to prove an analogue of the Kotelnikov theorem for the discrete Fourier transform, but in practice such signals, unfortunately, do not actually occur.

So, we figured out the “input" - from a continuous function of infinite length, we got a finite number of discrete samples that we can work with and instead got limitations on the width of the spectrum and frequency leakage. However, the “exit” DTFT is still a continuous function, which is difficult for the computer to work with. In practice, this problem is solved very simply - the entire segment [0,1 / T) is divided into k equal parts and DTFT is considered at the points fi = i / kT, where i = 0,1, ... k-1. The resulting design is called the “Discrete Fourier Transform” (DFT).

It is convenient to normalize the last transformation by removing T from it and questions related to the choice of the “window”. This normalized notation is often used as the definition of DFT as a sequence conversion of N complex numbers:

The beauty of the Fourier transform written in this form is that while preserving all the advantages of DTFT, a similar DTF for “smooth” k (for example, powers of two) can be calculated extremely quickly over a time of the order of k log (k). The corresponding algorithms are called the “fast Fourier transform” (FFT), and there are several of them, generally speaking. From a practical point of view, however, they can all be considered as “black boxes”, receiving a sequence of complex numbers at the input and issuing a sequence of complex numbers at the output. Thus, working with a sampled signal of finite length reduces to the fact that this signal is first multiplied by a suitable weighting function, then supplemented with the required number of zeros on the right and passed to the FFT algorithm.

How to interpret the result? In view of the foregoing,

- The resulting values are a uniform grid of samples on the DTFT spectrum. The more readings, the finer the grid, the more visible the spectrum. Appending the desired number of zeros to a known sequence, we can calculate an arbitrarily close approximation to the continuous spectrum

- The DTFT spectrum is specified on a frequency interval from 0 to 1 / T (where 1 / T is the sampling frequency) and is periodically repeated to infinity outside this interval

- This spectrum is given by complex numbers (pairs of real). Amplitude is defined as the modulus of a complex number, phase as an argument.

- For a valid input signal, a spectrum in the 1 / 2T ... 1 / T range simply mirrors the 0 ... 1 / 2T spectrum and does not carry a payload accordingly (you can simply cut it off to visualize the spectrum)

- If the original signal contained frequencies above half the sampling frequency, then they will be mapped to lower frequencies (possibly overlapping an existing signal of this frequency) - aliasing

- There is always a “spectral leak” in the spectrum, determined by the selected weighing “window function”. The longer the original signal (before adding zeros!) - the less this leak.

- Spectral leakage limits the usefulness of calculating an FFT with a large complement of zeros. However, the addition is still often useful, because, for example, it allows one to more accurately determine the maximum of a narrow-band sinusoidal signal if it does not fall exactly into one of the frequencies of the form k / T.

- The sinusoid of amplitude A in the amplitude spectrum (for the normalization of the Fourier transform I have chosen) corresponds to the value A * N / 2, with the exception of the zero frequency, which does not decompose into the “plus” and “minus” frequencies and therefore has the amplitude A * N and also the frequencies Nyquist 1 / 2T in which in the extreme case separate copies of the spectrum touch each other (there will also be A * N, but, unlike zero, this value does not get into the FFT output and is never reliable in real circuits anyway). Here N = T1 / T0, where T1 is the length of the initial signal (it determines the coefficient in front of the “window” spectrum), and T0 is the length of one sampling period (it determines the coefficient of the Dirac comb) and, as it is easy to see, this is simply the number of samples in the original signal (before it is supplemented with zeros)

Well, that's all. I hope the Fourier transform and FFT algorithms will now be simple, clear and pleasant to use tools for you.