OpenAI demonstrates the transfer of complex manipulations from simulations to the real world.

- Transfer

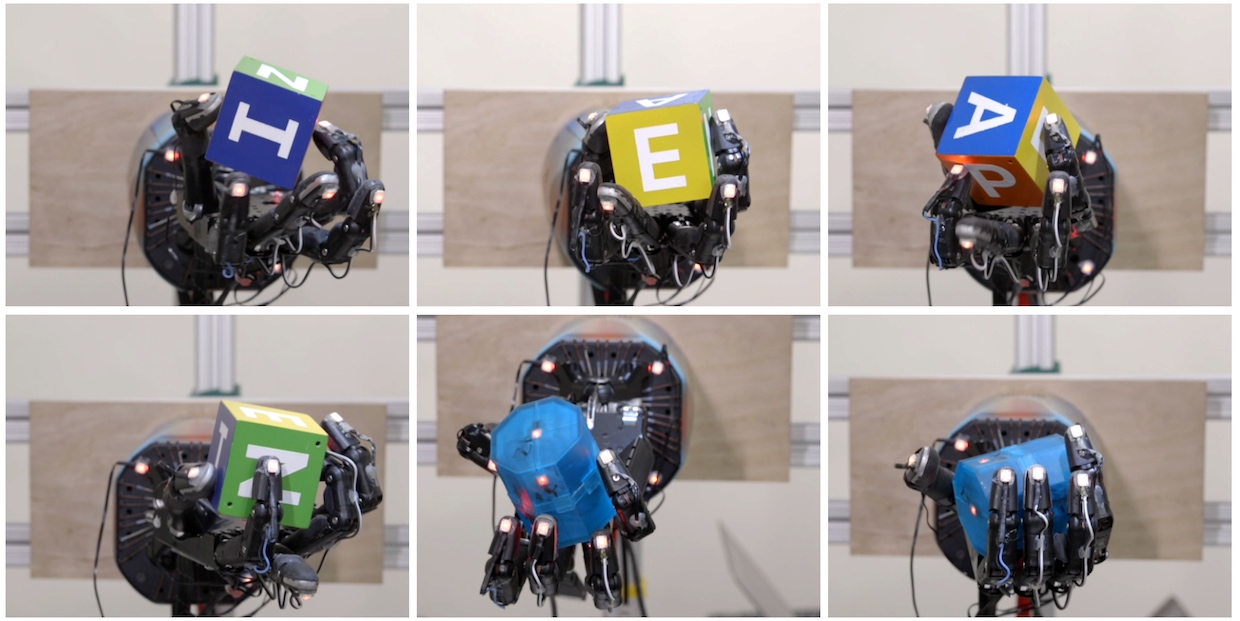

After adding random factors to a relatively simple simulation, a robot from OpenAI learned how to perform complex handheld operations.

Handheld operations are one of those actions that are at the top of the list of "skills that do not require efforts from people and are extremely difficult for robots." Without thinking, we are able to adaptively control the fingers, opposing them with the thumb and palm, taking into account friction and gravity, manipulating objects with one hand, without using the other - during this day you must have done this trick many times, even with your phone.

It takes years of training for people to learn how to work reliably with their fingers, but robots do not have that much training time. Such complex tasks are still solved through practical training and experience, and the task is to find a way to train the robot faster and more efficiently than to just give the robotic arm something that can be manipulated again and again until it realizes that it works, and what does not; it may take a hundred years.

Instead of waiting a hundred years, researchers from OpenAI used reinforcement training to train the convolutional neutron network to control the five-fingered hand of the Shadow robot to manipulate objects — and in just 50 hours. They managed to do this through a simulation, a technique that is notorious as “doomed to success” —but they neatly introduced random factors into it in order to bring it closer to the mutability of the real world. The real Shadow hand was able to successfully carry out handheld manipulations with real objects without any re-training.

Ideally, all robots need to be trained in simulations, because simulations can be scaled without creating a lot of real robots. Do you want to train dofigillion robots dofigillion hours in one dofigilionnuyu fraction of a second? This can be done - if you get enough computing power. But try to do this in the real world - and the problem that no one knows exactly how much it will be, dofigillion, will be the least of your problems.

The problem with training robots in simulations is that the real world cannot be simulated for sure - and it is even more difficult to accurately simulate such minor annoyances as friction, compliance and the interaction of several objects. Therefore, it is generally accepted that simulation is fine, but there is a big and terrible gap between the success of simulation and success in the real world, which in a certain way diminishes the value of simulations. The fact that the very things that it would be nice to simulate (for example, handheld manipulations) at the same time prove to be the most difficult for accurate simulations, because of how physically sophisticated they are, does not improve the situation.

A common approach to this problem is to try to make the simulation as accurate as possible, and hope that it will be close enough to the real world so that you can extract some useful behavior from it. Instead, OpenAI puts in the first place not accuracy, but variability, supplying its moderately realistic simulations with a multitude of small tweaks so that the resulting behavior is reliable enough to work outside the simulation.

The randomization process is the key to what makes the system (called Dactyl) able to effectively move from simulation to the real world. OpenAI understands perfectly well that the simulations they use are not complex enough to simulate the whole mountain of the most important things, from friction to wear on the tips of the fingers of a real roboruki. In order for the robot to summarize what it learns, OpenAI contributes random variables to all possible aspects of the simulation in order to try to cover all the variability of the world that cannot be modeled well. This includes the mass, all measurements of the object, the friction of its surface and the fingers of the robot, damping of the fingers of the robot, the force of the impact of the motors, limitation of joints, play and noise of the motor, and so on. Small random effects are attached to the object in order for the simulation to cope with dynamics that cannot be modeled.

Rows show pictures from the same camera. Columns correspond to images with random changes - all of them are simultaneously fed to the neural networks.

OpenAI calls this “environment randomization,” and in the case of handheld manipulations, they “wanted to see if the scale up of environment randomization could solve the problem that is not available in today's robotics techniques.” And so, what happened as a result of two independently trained systems (one visual, the second for manipulation), which visually recognize the position of the cube and rotate it into different positions.

All these turns of the cube (and the system is capable of at least 50 successful successive manipulations) were made possible thanks to 6144 processors and 8 GPUs, which gained 100 years of simulated robot experience in just 50 hours. The only feedback available to the system (both in simulation and in reality) is the location of the cube and fingers, while the system started without having any specific concepts about how to hold or rotate the cube. She had to independently deal with all of this - including rotating her fingers, simultaneously coordinating several fingers, using gravity, coordinating the application of forces. The robot invented the same techniques that people use, however, with small (and interesting) modifications:

To accurately capture an object, a robot usually uses a little finger instead of the index or middle fingers. This may be due to the presence of the Shadow Dexterous Hand in the little finger of an additional degree of freedom compared to the index, middle and ring fingers, which makes it more mobile. People more mobile usually have an index and middle finger. This means that our system is able to independently invent the grasping technique that people have, but it is better to adapt it to their own limitations and capabilities.

Different types of cleats that the system has learned. From left to right and from top to bottom: grabbing with your fingertips, grabbing with your palm, grabbing with three fingers, four, five-finger grabbing, and powerful gripping.

We observed another interesting parallel in the work of people's fingers and our robot. According to this strategy, the hand holds the object with two fingers and rotates around this axis. It turned out that young children do not have time to develop similar motility, so they usually rotate objects with the help of proximal or middle phalanges of the fingers . And only later in life do they switch to the distal phalanges, as most adults do. Interestingly, our robot usually relies on the distal phalanxes to rotate an object.

Plus, the technology is that, as it turned out, robots can still be trained on complex physical actions in simulations, and then immediately use the accumulated skills in reality - and this is really a great achievement, because training in simulations goes much faster than in reality .

We contacted Jonas Schneider, a member of the OpenAI technical team, to ask more about this project.

Edition : Why is handheld manipulation in robotics so difficult?

Jonas schneider: The manipulations take place in a very limited space, and a large number of degrees of freedom is available to the robot. Successful manipulation strategies require proper coordination in all these degrees of freedom, and this reduces the size of the error in comparison with ordinary interactions with objects — such as simple capture, for example. During handheld manipulations, a lot of contact with the object is recorded. Modeling these contacts is a difficult task, prone to errors. Runtime errors have to be controlled while the arm is working, which causes problems in the traditional approach, based on the planning of movements in advance. For example, a problem may arise when you have linear feedback that does not register the non-linear dynamics of what is happening.

Apparently, random variables are the key to ensuring that the skills obtained in the simulation can be reliably applied in reality. How do you decide which parameters to make random, and how?

During calibration, we roughly estimate which parameters may change, and then decide which ones will most importantly be reproduced in the simulation. Then we set the values of these parameters equal to the calibration ones, and add random variations in the region of the mean value. The amplitude of variations depends on our confidence - for example, we did not vary the size of the object very much, since we can measure it accurately.

Some random variations were based on empirical observations. For example, we observed how our robot sometimes dropped an object, dropping a brush, and not having time to pick it up until the object rolled off it. We found that due to problems with a low-level controller, our actions could sometimes be delayed by several hundred milliseconds. And we could, of course, spend the effort to make the controller more reliable, but instead we simply added randomization to the response time of each controller. It seems to us that at a higher level this may be an interesting approach to the development of future robots; for some tasks, the development of very accurate equipment may be unacceptably expensive, and we have demonstrated

How do you think your results would improve if you waited not 100 years of simulated time, but, for example, 1000?

On the example of a specific task, it is difficult to assess, since we have never performed tests more than 50 turns. It is not yet clear how exactly the asymptotic characteristics curve looks, but we consider our project to be complete, since even one successful turn is far beyond the limits of the possibilities of the best teaching methods existing today. In fact, we chose a figure of 50 turns, because we decided that 25 turns would uniquely demonstrate that the problem was solved, and then added another 25, for 100% of the stock. If your task is to optimize for very long sequences of actions and high reliability, then an increase in training will probably help. But at some point, as we think, the robot will begin to adapt more to the simulation, and worse work in the real world,

How well are your results summarized? For example, how much effort would you have to spend on re-training to rotate a smaller cube, or a cube that would be soft or slippery? What about a different camera arrangement?

We, by the way, for the sake of interest triedto carry out manipulations with soft cubes, and cubes of smaller size, and it turned out that the quality of work is not greatly reduced compared with the rotation of a solid cube. In the simulation, we also experimented with cubes of different sizes, and this also worked well (although we didn’t try this with a real robot). In the simulation, we also used random variations of the size of the cube. We did not try to do this, but I think that if we simply increase the spread of random variations in the size of the cube in the simulation, the hand will be able to manipulate the cubes of various sizes.

As for the cameras, the visual model was trained separately, and while we make only small random variations in the camera position, therefore, with each change in the camera position, we start the exercise again. One of our interns, Xiao-Yu Fish Tan, is just working to make the visual model completely independent of the camera location, using the same basic technique of random variation of the position and orientation of the camera within large limits.

How does training in simulation differ from the “ brute force ” approach , where a bunch of real robots are used?

Interestingly, our project began with the fact that we questioned the idea of using simulations to advance robotics. We have been watching for many yearshow robotics achieves impressive results in simulations using reinforced training. However, in conversations with researchers engaged in classical robotics, we are constantly confronted with the distrust that such methods can work in the real world. The main problem is that simulators are not entirely accurate from a physical point of view (even if they look good to the human eye). Adds problems and the fact that more accurate simulations require large computing power. Therefore, we have decided to establish a new standard that requires working with a very complex in terms of equipment platform on which to face all the limitations of simulations.

As for the “wrist farm” approach, the main limitation in the training of physical robots is the low scalability of the acquired skills for more complex tasks. This can be done by arranging everything so that you have a lot of objects in a self-stabilizing environment that does not have different states (for example, a basket of balls). But it will be very difficult to do it in the same way for the task of assembling something, when after each run your system is in a new state. Again, instead of setting up the entire system once, you will have to set it up N times, and keep it in working condition after it, for example, how the robot moved and broke something. All this is much simpler and easier to do in simulations with elastic computing power.

As a result, our work supports the idea of training in simulations, since we have shown how to solve the transfer problem even in the case of very complex robots. However, this does not negate the idea of learning a real robot; It would be very difficult to circumvent the limitations of simulations when working with deformable objects and liquids.

Where is your system the thinnest place?

At the moment - this is a random variation, developed by hand and sharpened for a specific task. In the future, it may be possible to try learning these variations by adding another layer of optimization, which is the process that we are doing manually today (“try several randomizations and see if they help”). You can also go even further, and use the game between the learning agent and his opponent, who are trying to hinder (but not strongly) his progress. This dynamic can lead to the emergence of very reliable sets of rules for the work of robots, since the better an agent has, the more cunning an opponent must have to interfere with him, which improves the agent's work even more, and so on. This idea has already been studied by other researchers.

You say that your main goal is to create robots for the real world. What else needs to be done before it becomes possible?

We are trying to expand the capabilities of robots to work in an environment without strict restrictions. In such environments, it is impossible to foresee everything in advance and to prepare a model for each object. It is also inconvenient to put some tags on objects outside the laboratory. It turns out that our robots will have to learn how to act in a variety of situations, how to make a reasonable choice in a situation that they have never encountered before.

What will you work on next?

We will continue to create robots with more and more complicated behavior. It is too early to say exactly how. In the long run, we hope to give robots general manipulation skills with objects, so that they can learn to interact with their environment in the way a baby does — playing with existing objects, not necessarily under adult supervision. We think that intelligence is tied to interaction with the real world, and in order to accomplish our task of creating safe general-purpose artificial intelligence, we need to be able to learn from sensory data from the real world, and from simulations.