Researchers: not all level 2 autopilots work equally well, but progress is evident

A large number of companies are currently developing their own self-driving cars or autopilot systems for other companies' cars. Unfortunately, there is no uniform development standard yet, which causes some disagreement among developers and among regulators, at least in the USA.

To find out how effectively the level 2 autopilots of various automakers work, the non-profit Insurance Institute for Highway Safety (IIHS) conducted a large-scale study involving five autopilot-equipped models from different companies.

Before going into the details of the report, it is worth recalling how many levels of autopilot autonomy there are and how they are defined.

There are six of them, numbered from zero to five:

- Zero level. The driver controls everything: brakes, multimedia, heading, speed and so on. The car cannot do anything on its own, because the design simply does not provide for it.

- First level. Some automation is already present. The driver drives the car, but the car can take over a minimal set of functions, for example maintaining a set speed or holding its course.

- Second level. Here the autopilot has more freedom. The car can both hold its course and accelerate, using information about the situation around it. This, of course, requires sensors and, in some cases, cameras. The driver must keep his hands on the wheel and not remove them. The car can brake if necessary. Tesla's Autopilot belongs to the second level. Elon Musk's company is now doing everything it can to move from level 2 to levels 3 and 4.

- Third level. A driver is still needed in the cabin, but the trip itself takes place almost autonomously. While moving, the car collects a huge amount of data about its surroundings. Incidentally, the difference between the second and third levels is not that big, whereas the difference between the third and fourth is very large.

- Fourth level. This is a fully autonomous car. Almost all functions are duplicated by the autopilot, and a car with a fourth-level system can travel quickly and over long distances without requiring the driver to take part in driving. The presence of an operator is still recommended, but he can do whatever he likes: read books, listen to music, sleep and so on.

- Fifth level. Cars with a fifth-level autopilot drive no worse than human drivers, and most likely better. Difficult external conditions (dirt or snow covering the road markings), heavy traffic and the like do not affect the quality of driving of a fifth-level system.
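The six levels listed above can be sketched as a small enumeration. This is only an illustration of the list as this article describes it: the member names and the `hands_on_wheel_required` helper are assumptions made for the example, not part of the IIHS report or of any official standard.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """Autopilot levels as described in the list above (names are illustrative)."""

    NO_AUTOMATION = 0      # the driver controls everything
    DRIVER_ASSISTANCE = 1  # the car can hold a set speed or its course
    PARTIAL = 2            # the car steers, accelerates and brakes; hands stay on the wheel
    CONDITIONAL = 3        # the trip is almost autonomous, a driver is still needed
    HIGH = 4               # the driver need not participate, presence is recommended
    FULL = 5               # drives at least as well as a human in any conditions


def hands_on_wheel_required(level: AutomationLevel) -> bool:
    """Hypothetical helper: per the descriptions above, levels 0-2 keep the driver hands-on."""
    return level <= AutomationLevel.PARTIAL


for level in AutomationLevel:
    print(level.value, level.name, hands_on_wheel_required(level))
```

Using `IntEnum` keeps the levels ordered, so a comparison such as `level <= AutomationLevel.PARTIAL` reads the same way as the prose ("level 2 and below").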

As for the IIHS report, the organization's staff tested only level 2 cars (levels 3 and 4, let alone 5, do not currently exist, or at least such cars do not drive on public roads). IIHS staff evaluated the situations that cars with automatic control systems from various manufacturers encountered on public roads and compared how the autopilots reacted.

The “second level” as IIHS understands it coincides with the definition given above. That is, a car with such a system can slow down, accelerate and change course when necessary without the driver's involvement. The driver, of course, can take over full control whenever he wishes.

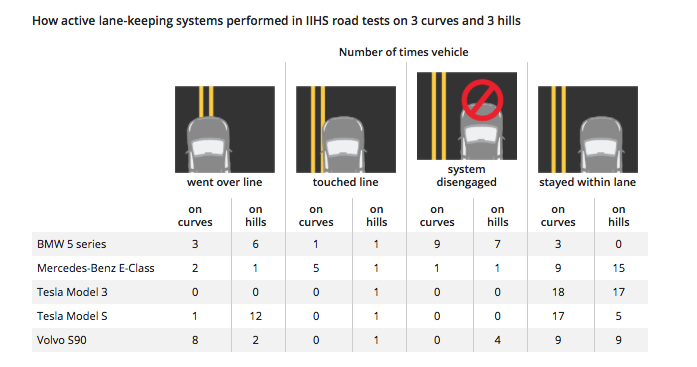

The tests involved the 2017 BMW 5 Series (Driving Assistant Plus), 2017 Mercedes-Benz E-Class (Drive Pilot), 2018 Tesla Model 3 (Autopilot 8.1), 2016 Tesla Model S (Autopilot 7.1) and 2018 Volvo S90 (Pilot Assist). All of these cars received high marks in the automatic emergency braking (AEB) test. In addition, the researchers ran several tests on a test track to check how the systems behave when there are various obstacles on the road. After that, similar tests were conducted on specially selected sections of public roads.

Adaptive cruise control and braking

In the initial tests all the cars performed well: they managed to avoid a collision with a stationary car. The test vehicles were moving at about 50 km/h. The Volvo braked the most aggressively, so to speak. It did not stop at every opportunity, but when it did brake, it braked abruptly, which caused some discomfort for the driver and passengers.

The cars also came through unscathed in a scenario where another car was driving ahead of the test vehicle. In these tests the car in front abruptly changed lanes, revealing an obstacle in the lane ahead. All the autopilots were able to recognize the situation: they detected the obstacle and stopped or steered away in time. This scenario is not just theoretical. In 2016 there was an accident involving a Tesla electric car in which the problem was precisely that Autopilot did not recognize an obstacle that appeared on the road after the car in front changed lanes.

In these tests the Tesla Model 3 performed very well and avoided the obstacles. The autopilots of the other cars, however, failed some tests on a regular road: from time to time they could not recognize a stationary car that suddenly appeared ahead after the car in front moved to the right or left. That is why manufacturers of cars with automatic control systems recommend that drivers keep their hands on the steering wheel.

The Model 3, for its part, “failed” in its own way: the car often braked or slowed down after the car in front moved to another lane even though there was no obstacle ahead. In some cases this happened because of a shadow cast on the road by a roadside tree. Of the 12 false positives recorded for the Tesla Model 3's Autopilot, seven had this cause. The situation itself cannot be called dangerous, but the driver would probably not be too pleased with the car braking because of shadows. On the other hand, in such a situation a false positive, where the car reacts to an imaginary obstacle, is better than a false negative, where there is an obstacle but the car does not see it.
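The distinction between the two kinds of error, and the share of shadow-related braking events, can be written out as a short sketch. The function name and its boolean parameters are hypothetical and chosen only for this illustration; the figures 7 and 12 are the ones quoted above.

```python
def classify_detection(obstacle_present: bool, car_reacted: bool) -> str:
    """Label one braking decision with the usual detection terminology."""
    if obstacle_present and car_reacted:
        return "true positive"    # real obstacle, the car braked
    if not obstacle_present and car_reacted:
        return "false positive"   # e.g. braking for a tree's shadow
    if obstacle_present and not car_reacted:
        return "false negative"   # the dangerous case: a missed obstacle
    return "true negative"        # nothing there, the car drove on


# Figures from the article: 7 of the 12 false positives were caused by shadows.
shadow_false_positives = 7
total_false_positives = 12
shadow_share = shadow_false_positives / total_false_positives

print(classify_detection(obstacle_present=False, car_reacted=True))
print(f"Share caused by shadows: {shadow_share:.0%}")
```

In other words, a little over half of the Model 3's unnecessary braking events in this test were triggered by shadows.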

The five models were also tested on their ability to stay in the chosen lane in different situations (for example, in curves of varying radius). In this test series the Model 3 performed better than the rest. BMW, Volvo and Mercedes-Benz did not always cope with the task.

The table above shows the results of the tests on public roads. As it turned out, no autopilot-equipped car except the Tesla Model 3 was able to pass all the tests. Practically every participant had problems of one kind or another. At the same time, IIHS representatives said it is too early to talk about any consumer rating of autopilots, since that would require many more tests.

Nevertheless, there are more and more manufacturers of autopilots and of cars equipped with them, so it seems likely that ratings of this kind will be compiled and published in the near future. On the other hand, autopilots are not very good yet, so some companies will have to bring theirs up to scratch.

Level 3, 4 and 5 autopilots

All of them will appear soon. Some manufacturers advertise their systems as if they were already third- or fourth-level autopilots, but in fact this is far from true. Even at the second level, as we have seen, there are problems, so what can be said about the subsequent “stages of evolution”?

Problems arise when the driver or operator of a car with an automatic control system begins to think of his digital assistant as an independent driver. Everyone has heard about Uber's self-driving cars, one of which, in March of this year, struck at full speed a cyclist who was crossing the road in the wrong place. An investigation by the US National Transportation Safety Board showed that the autopilot system was at fault: it did not recognize the person in time, and the car kept moving.

In some cases accidents happen not because of the autopilot but simply because a collision cannot be avoided in the particular situation. This is what happened with a Google self-driving car that collided with a bus a couple of years ago. The bus performed an incorrect maneuver, and the circumstances did not allow the autopilot to avoid the collision.

Self-driving buses also get into accidents, and likewise not through the fault of the autopilot. One such bus, a Navya Arma, collided with another vehicle, to the surprise of its passengers, but only because the driver of that vehicle was not paying attention to the road.

Finally, in January of this year a Tesla electric car collided with a fire engine. “The driver said that his Tesla was in autopilot mode. Surprisingly, the man was not injured,” a US fire department's tweet says. The investigation then went on for a long time, and it seems it was never finally established who was right and who was to blame.

In any case, there are more and more reports of accidents involving autopilots, not because autopilots are getting dumber but because there are more and more such vehicles. Almost all experts say that drivers' “digital assistants”, even at the current stage of development, can make driving safer. And the evolution of such systems does not stand still. Most likely, in five years we will see level 2 and 3 systems appear on public roads en masse.