Logical organization of the processor cache

The other day, I decided to systematize knowledge regarding the principles of mapping RAM to the processor cache memory. As a result, this article was born.

Processor cache memory is used to reduce processor downtime when accessing RAM.

The basic idea of caching is based on the property of locality of data and instructions: if access to a certain address occurs, then it is likely that memory will be accessed at the same address or to neighboring addresses in the near future.

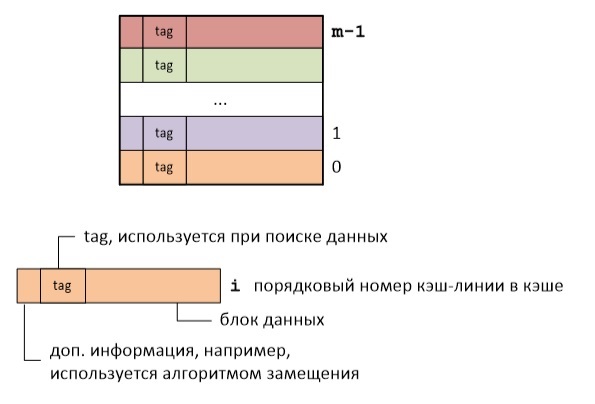

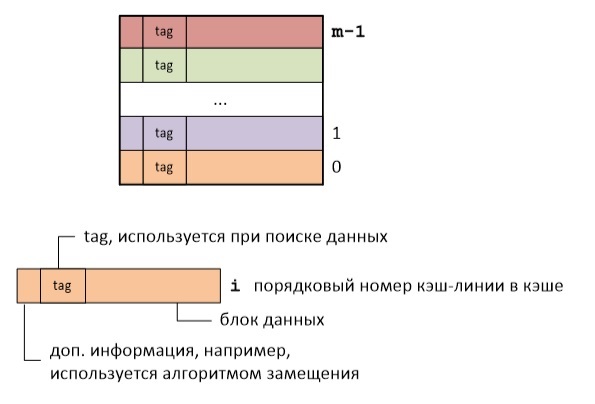

Logically, a cache is a collection of cache lines. Each cache line stores a block of data of a certain size and additional information. The size of the cache line is usually understood as the size of the data block that is stored in it. For x86 architecture, the line cache size is 64 bytes.

So the essence of caching is to split the RAM into cache lines and map them to the cache line cache. Several options for this display are possible.

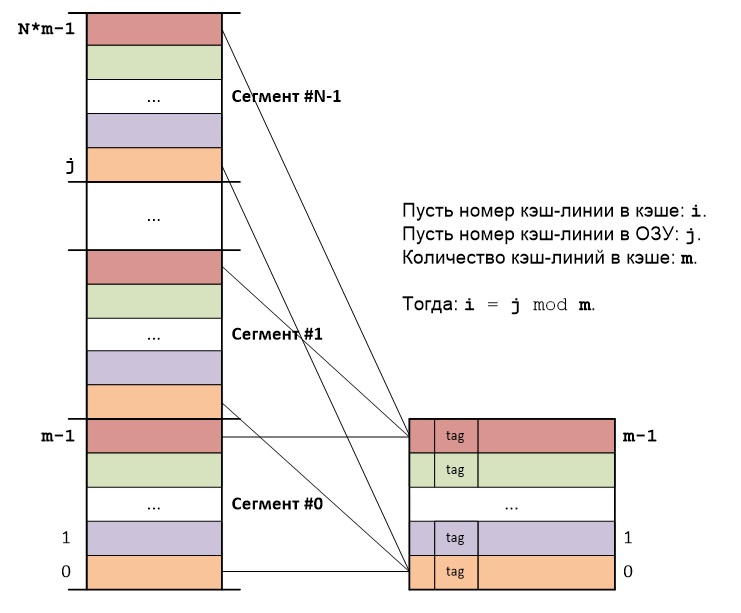

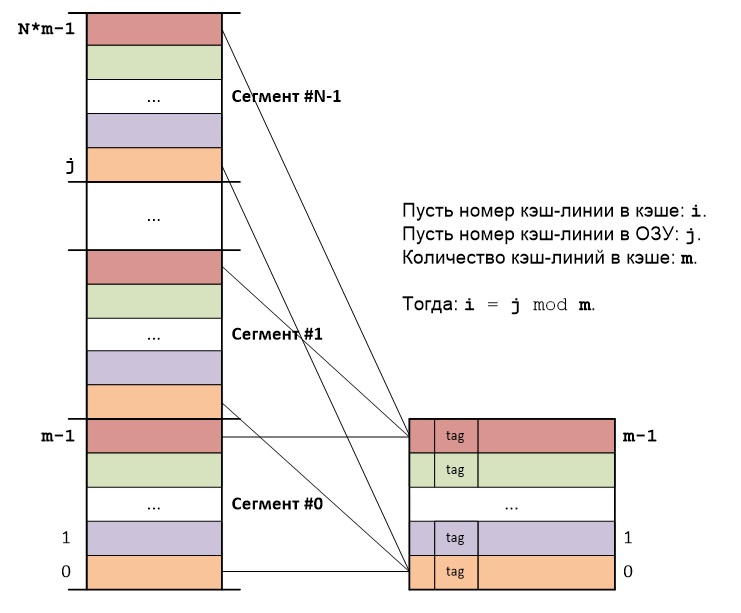

The main idea of direct mapping of RAM to the cache is as follows: RAM is divided into segments, with the size of each segment equal to the size of the cache, and each segment in turn divided into blocks, the size of each block equal to the size of the cache line.

RAM blocks from different segments, but with the same numbers in these segments, will always be mapped to the same cache cache line: The

address of each byte is the sum of the sequence number of the segment, the sequence number of the cache line inside the segment and the sequence number of the byte inside the cache lines. It follows that the byte addresses differ only in the older parts, which are the sequence numbers of the segments, and the sequence numbers of the cache lines inside the segments and the sequence numbers of the bytes inside the cache lines are repeated.

Thus, there is no need to store the full cache line address, it is enough to save only the most important part of the address. The tag of each cache line just stores the most significant part of the address of the first byte in this cache line.

b is the size of the cache line.

m is the number of cache lines in the cache.

To address b bytes within each cache line, you will need: log2b bits.

To address m cache lines within each segment, you will need: log2m bits.

m = Cache Capacity / Line Cache Size.

To address N RAM segments: log2N bits.

N = RAM Capacity / Segment Size.

To address the byte you will need: log2N + log2m + log2b bit.

Stages of search in the cache:

1. The middle part of the address (log2m), which determines the cache line number in the cache, is retrieved.

2. The cache line tag with this number is compared with the highest part of the address (log2N).

If there was a match on one of the tags, then a cache hit occurred.

If there was no match on any of the tags, then a cache miss occurred.

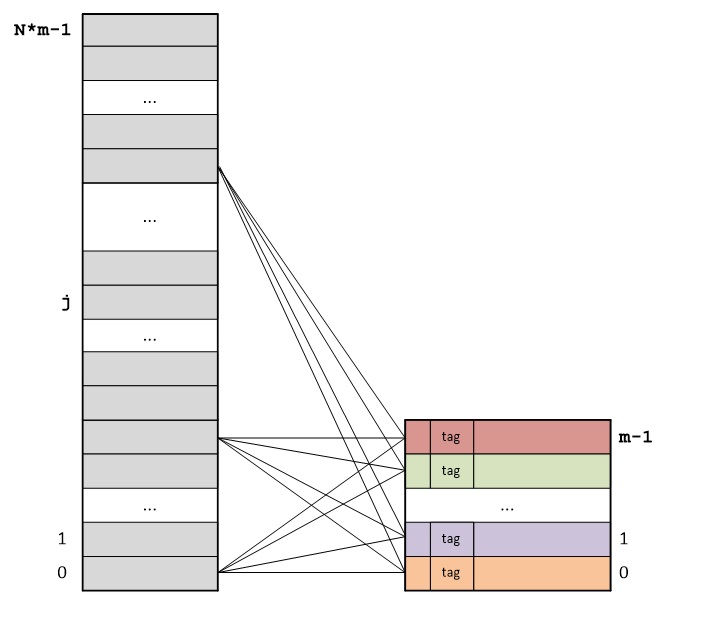

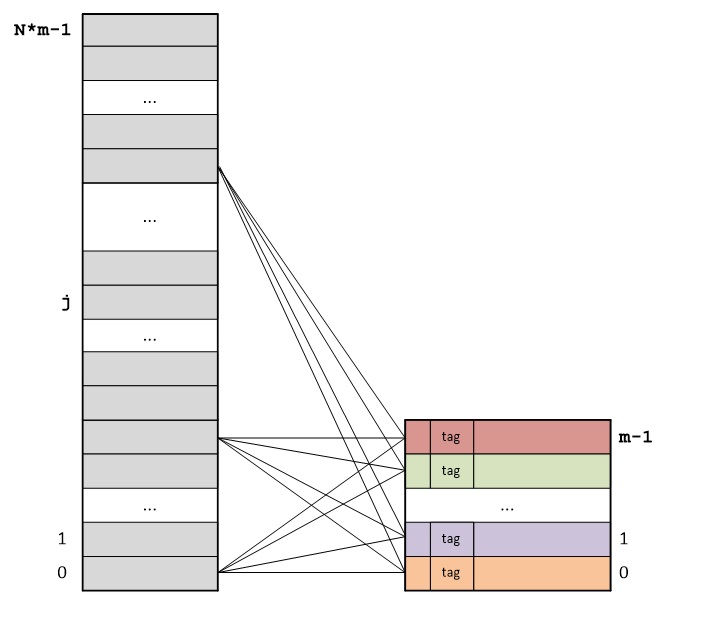

The main idea of a fully associative mapping RAM to cache is as follows: RAM is divided into blocks whose size is equal to the size of the cache lines, and each RAM block can be stored in any cache cache line: The

address of each byte is the sum of the cache line sequence number and the byte sequence number inside the cache line. It follows that byte addresses differ only in the older parts, which are cache line numbers. The byte numbers within the cache lines are repeated.

The tag of each cache line stores the most significant part of the address of the first byte in a given cache line.

b is the size of the cache line.

m is the number of cache lines that fit in RAM.

To address b bytes within each cache line, you will need: log2b bits.

To address m cache lines: log2m bits.

m = RAM size / cache line size.

To address the byte you will need: log2m + log2b bit.

Stages of search in the cache:

1. Tags of all cache lines are compared with the older part of the address at the same time.

If there was a match on one of the tags, then a cache hit occurred.

If there was no match on any of the tags, then a cache miss occurred.

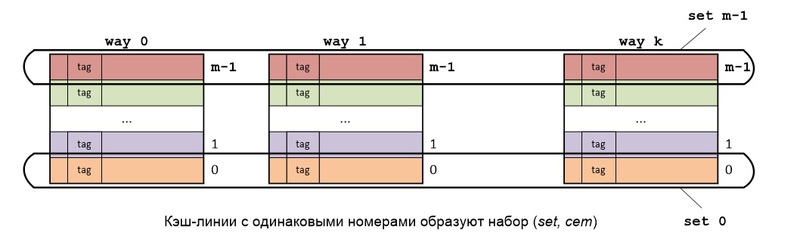

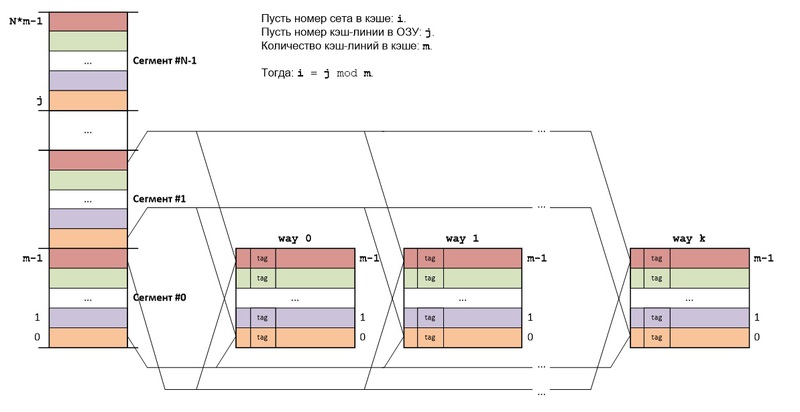

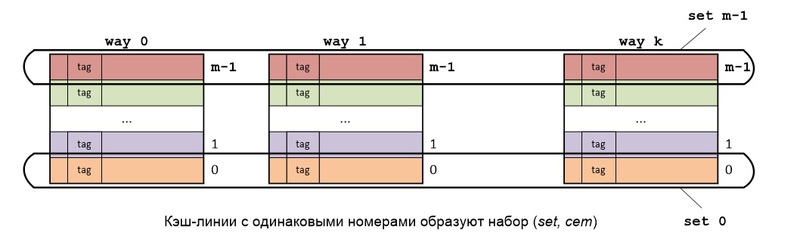

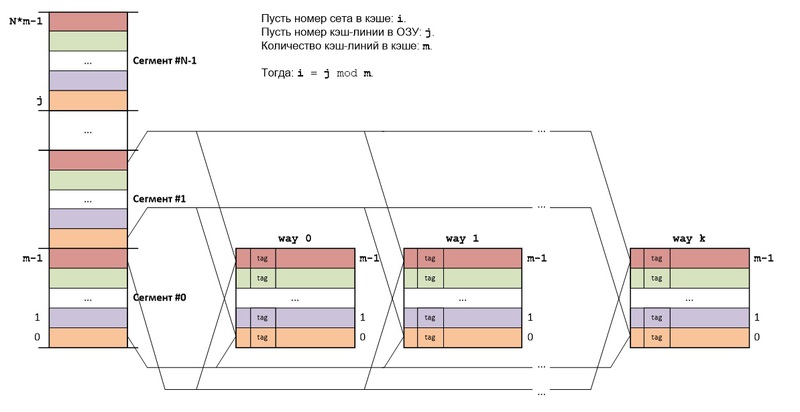

The main idea of set associative mapping RAM to the cache is as follows: RAM is shared as in direct mapping, and the cache itself consists of k caches (k channels) using direct mapping.

Cache lines with the same numbers in all channels form a set (set, set). Each set is a cache that uses a fully associative mapping.

RAM blocks from different segments, but with the same numbers in these segments, will always be mapped to the same cache set. If there are free cache lines in this set, then the block read from RAM will be saved in a free cache line, if all the set cache lines are busy, then the cache line is selected according to the replacement algorithm used.

The structure of the byte address is exactly the same as in the direct mapping: log2N + log2m + log2b bits, but since set is k different cache lines, the search in the cache is a little different.

Stages of search in the cache:

1. The middle part of the address (log2m), which determines the number of the set in the cache, is retrieved.

2. Tags of all cache lines of a given set are compared with the highest part of the address (log2N) at the same time.

If there was a match on one of the tags, then a cache hit occurred.

If there was no match on any of the tags, then a cache miss occurred.

Thus, the number of cache channels determines the number of simultaneously compared tags.

Processor cache memory is used to reduce processor downtime when accessing RAM.

The basic idea of caching is based on the property of locality of data and instructions: if access to a certain address occurs, then it is likely that memory will be accessed at the same address or to neighboring addresses in the near future.

Logically, a cache is a collection of cache lines. Each cache line stores a block of data of a certain size and additional information. The size of the cache line is usually understood as the size of the data block that is stored in it. For x86 architecture, the line cache size is 64 bytes.

So the essence of caching is to split the RAM into cache lines and map them to the cache line cache. Several options for this display are possible.

DIRECT MAPPING

The main idea of direct mapping of RAM to the cache is as follows: RAM is divided into segments, with the size of each segment equal to the size of the cache, and each segment in turn divided into blocks, the size of each block equal to the size of the cache line.

RAM blocks from different segments, but with the same numbers in these segments, will always be mapped to the same cache cache line: The

address of each byte is the sum of the sequence number of the segment, the sequence number of the cache line inside the segment and the sequence number of the byte inside the cache lines. It follows that the byte addresses differ only in the older parts, which are the sequence numbers of the segments, and the sequence numbers of the cache lines inside the segments and the sequence numbers of the bytes inside the cache lines are repeated.

Thus, there is no need to store the full cache line address, it is enough to save only the most important part of the address. The tag of each cache line just stores the most significant part of the address of the first byte in this cache line.

b is the size of the cache line.

m is the number of cache lines in the cache.

To address b bytes within each cache line, you will need: log2b bits.

To address m cache lines within each segment, you will need: log2m bits.

m = Cache Capacity / Line Cache Size.

To address N RAM segments: log2N bits.

N = RAM Capacity / Segment Size.

To address the byte you will need: log2N + log2m + log2b bit.

Stages of search in the cache:

1. The middle part of the address (log2m), which determines the cache line number in the cache, is retrieved.

2. The cache line tag with this number is compared with the highest part of the address (log2N).

If there was a match on one of the tags, then a cache hit occurred.

If there was no match on any of the tags, then a cache miss occurred.

FULLY ASSOCIATIVE MAPPING

The main idea of a fully associative mapping RAM to cache is as follows: RAM is divided into blocks whose size is equal to the size of the cache lines, and each RAM block can be stored in any cache cache line: The

address of each byte is the sum of the cache line sequence number and the byte sequence number inside the cache line. It follows that byte addresses differ only in the older parts, which are cache line numbers. The byte numbers within the cache lines are repeated.

The tag of each cache line stores the most significant part of the address of the first byte in a given cache line.

b is the size of the cache line.

m is the number of cache lines that fit in RAM.

To address b bytes within each cache line, you will need: log2b bits.

To address m cache lines: log2m bits.

m = RAM size / cache line size.

To address the byte you will need: log2m + log2b bit.

Stages of search in the cache:

1. Tags of all cache lines are compared with the older part of the address at the same time.

If there was a match on one of the tags, then a cache hit occurred.

If there was no match on any of the tags, then a cache miss occurred.

SET ASSOCIATIVE MAPPING

The main idea of set associative mapping RAM to the cache is as follows: RAM is shared as in direct mapping, and the cache itself consists of k caches (k channels) using direct mapping.

Cache lines with the same numbers in all channels form a set (set, set). Each set is a cache that uses a fully associative mapping.

RAM blocks from different segments, but with the same numbers in these segments, will always be mapped to the same cache set. If there are free cache lines in this set, then the block read from RAM will be saved in a free cache line, if all the set cache lines are busy, then the cache line is selected according to the replacement algorithm used.

The structure of the byte address is exactly the same as in the direct mapping: log2N + log2m + log2b bits, but since set is k different cache lines, the search in the cache is a little different.

Stages of search in the cache:

1. The middle part of the address (log2m), which determines the number of the set in the cache, is retrieved.

2. Tags of all cache lines of a given set are compared with the highest part of the address (log2N) at the same time.

If there was a match on one of the tags, then a cache hit occurred.

If there was no match on any of the tags, then a cache miss occurred.

Thus, the number of cache channels determines the number of simultaneously compared tags.