Recommender system: useful tasks of text mining

I continue the series of articles on the use of text mining methods to solve various problems that arise in the recommendation system of web pages. Today I’ll talk about two tasks: automatically determining categories for pages from RSS feeds and finding duplicates and plagiarism among web pages. So, in order.

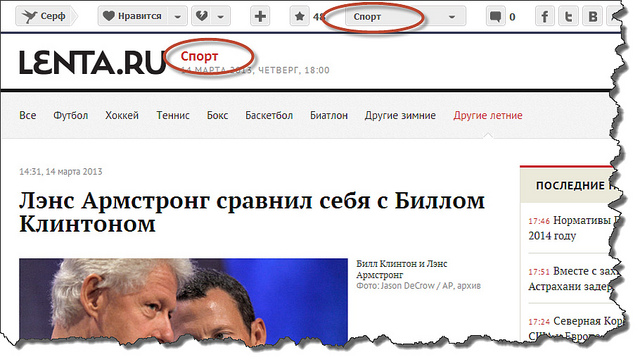

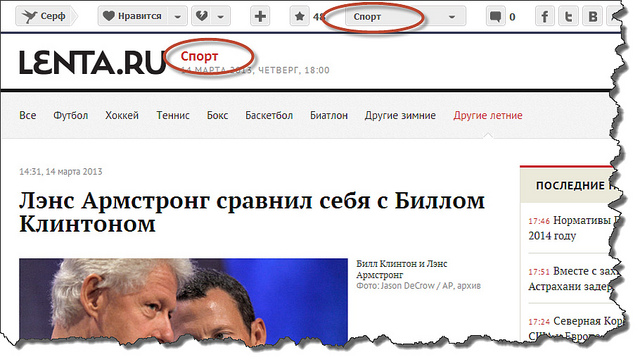

The usual scheme for adding web pages (or rather, links to them) in Surfingbird is as follows: when adding a new link, the user must specify up to three categories to which this link belongs. It is clear that in such a situation, the task of automatically determining categories is not worth it. However, in addition to manual addition, links get to the database from RSS feeds, which are provided by many popular sites. Since there are a lot of links coming through RSS feeds, often moderators (and in this case they are forced to put down categories) simply can not cope with such a volume. The problem arises of creating an intelligent system of automatic classification into categories. For a number of sites (for example, lenta.ru or sueta.ru), categories can be pulled directly from rss-xml and manually linked to our internal categories:

Things are worse for RSS feeds that don’t have fixed categories and instead use custom tags. Tags entered arbitrarily (a typical example are tags in LJ posts) cannot be manually associated with our categories. And here, more subtle mathematics are already included. Text content (title, tags, useful text) is extracted from the page and the LDA model is applied , a brief description of which can be found in my previous article . As a result, the probability vector of the web page belonging to thematic LDA topics is calculated. The resulting vector of LDA site topics is used as a feature vector to solve the classification problem.by category. Moreover, the objects of classification are sites, classes are categories. Logistic regression was used as a classification method , although any other method, such as the naive Bayesian classifier , can be used .

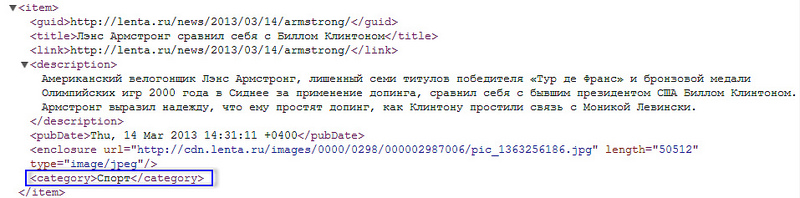

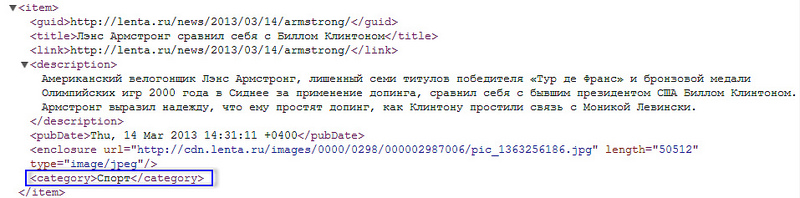

To test the method, model training took place on 5 thousand sites classified by the moderator from RSS feeds, for which the distribution by LDA topics was also known. The results of training on a test sample are presented in the table:

As a result, an acceptable classification quality (according to the share of false detections and the share of false passes) was obtained only in the following categories: Pictures, Erotic, Humour, Music, Travel, Sport, Nature. Too general categories, for example Photo, Video, News, errors are big.

In conclusion, we can say that if, in the case of tight binding to external categories, classification is in principle possible in a fully automatic mode, then classification by text content inevitably has to be done in semi-automatic mode, when the most likely categories are then offered to the moderator. Our goal here is to simplify the work of the moderator as much as possible.

Another task that text mining can solve is finding and filtering links with duplicate content. Repeat content is possible for one of the following reasons:

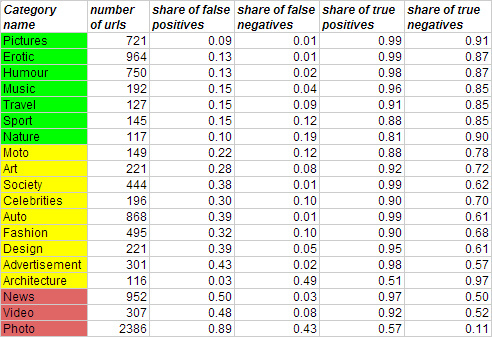

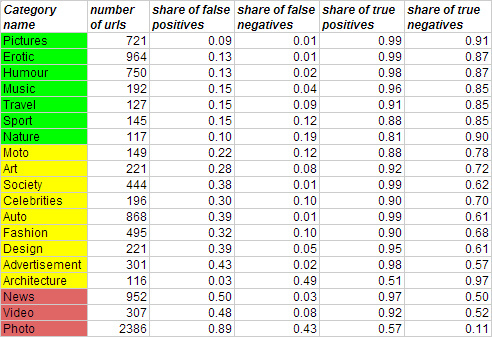

The first and second points are technical and are solved by obtaining end links and literally comparing useful content for all pages in the database. However, even with full copy-paste, a formal comparison of useful text may not work for obvious reasons (for example, random characters or words from the site menu have got), and the text can be slightly modified. We will consider this third option in more detail. A typical double might look like this:

The task of determining borrowings in the broad sense of the word is quite complicated. She interests us in a narrower statement: we don’t need to search for plagiarism in each phrase of the text, but we just need to compare the documents on the entire content.

To do this, you can effectively use the existing model of the "bag of words» ( bag of Words) and the calculated TF-IDF weights of all site terms. There are several different techniques.

To begin with, we introduce the notation:

- a dictionary of all the different words;

- a dictionary of all the different words;

- body of texts (content of web pages);

- body of texts (content of web pages);

- a lot of words in the document.

- a lot of words in the document.

To compare two documents , you

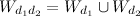

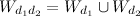

, you  first need to build a combined vector of words

first need to build a combined vector of words  from the words that are found in both documents:

from the words that are found in both documents:

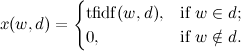

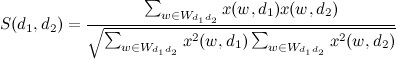

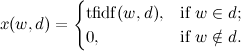

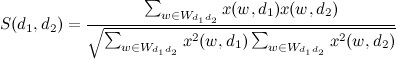

Text similarity is calculated as a scalar product with normalization:

If (threshold of similarity), then pages are considered doubles.

(threshold of similarity), then pages are considered doubles.

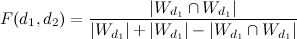

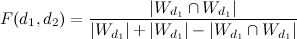

Another approach does not take into account the weight of TF-IDF, but builds similarity using binary data (is there a word in the document or not). To evaluate page differences, useJaccard coefficient :

,

,

wherein - a plurality of common words in the documents.

- a plurality of common words in the documents.

As a result of the application of these methods, several thousand twins were identified. For a formal quality assessment, it is necessary to expertly mark the doubles on the training sample.

That's all for now, I hope the article will be useful to you. And we will be happy with new ideas on how to apply text mining to improve recommendations!

Automatically categorize web pages from RSS feeds

The usual scheme for adding web pages (or rather, links to them) in Surfingbird is as follows: when adding a new link, the user must specify up to three categories to which this link belongs. It is clear that in such a situation, the task of automatically determining categories is not worth it. However, in addition to manual addition, links get to the database from RSS feeds, which are provided by many popular sites. Since there are a lot of links coming through RSS feeds, often moderators (and in this case they are forced to put down categories) simply can not cope with such a volume. The problem arises of creating an intelligent system of automatic classification into categories. For a number of sites (for example, lenta.ru or sueta.ru), categories can be pulled directly from rss-xml and manually linked to our internal categories:

Things are worse for RSS feeds that don’t have fixed categories and instead use custom tags. Tags entered arbitrarily (a typical example are tags in LJ posts) cannot be manually associated with our categories. And here, more subtle mathematics are already included. Text content (title, tags, useful text) is extracted from the page and the LDA model is applied , a brief description of which can be found in my previous article . As a result, the probability vector of the web page belonging to thematic LDA topics is calculated. The resulting vector of LDA site topics is used as a feature vector to solve the classification problem.by category. Moreover, the objects of classification are sites, classes are categories. Logistic regression was used as a classification method , although any other method, such as the naive Bayesian classifier , can be used .

To test the method, model training took place on 5 thousand sites classified by the moderator from RSS feeds, for which the distribution by LDA topics was also known. The results of training on a test sample are presented in the table:

As a result, an acceptable classification quality (according to the share of false detections and the share of false passes) was obtained only in the following categories: Pictures, Erotic, Humour, Music, Travel, Sport, Nature. Too general categories, for example Photo, Video, News, errors are big.

In conclusion, we can say that if, in the case of tight binding to external categories, classification is in principle possible in a fully automatic mode, then classification by text content inevitably has to be done in semi-automatic mode, when the most likely categories are then offered to the moderator. Our goal here is to simplify the work of the moderator as much as possible.

Search for duplicates and plagiarism among web pages

Another task that text mining can solve is finding and filtering links with duplicate content. Repeat content is possible for one of the following reasons:

- for several different links, a redirect to the same end link occurs;

- content is fully copied on various web pages;

- content copied partially or slightly modified.

The first and second points are technical and are solved by obtaining end links and literally comparing useful content for all pages in the database. However, even with full copy-paste, a formal comparison of useful text may not work for obvious reasons (for example, random characters or words from the site menu have got), and the text can be slightly modified. We will consider this third option in more detail. A typical double might look like this:

The task of determining borrowings in the broad sense of the word is quite complicated. She interests us in a narrower statement: we don’t need to search for plagiarism in each phrase of the text, but we just need to compare the documents on the entire content.

To do this, you can effectively use the existing model of the "bag of words» ( bag of Words) and the calculated TF-IDF weights of all site terms. There are several different techniques.

To begin with, we introduce the notation:

- a dictionary of all the different words;

- a dictionary of all the different words;  - body of texts (content of web pages);

- body of texts (content of web pages);  - a lot of words in the document.

- a lot of words in the document. To compare two documents

, you

, you  first need to build a combined vector of words

first need to build a combined vector of words  from the words that are found in both documents:

from the words that are found in both documents:

Text similarity is calculated as a scalar product with normalization:

If

(threshold of similarity), then pages are considered doubles.

(threshold of similarity), then pages are considered doubles. Another approach does not take into account the weight of TF-IDF, but builds similarity using binary data (is there a word in the document or not). To evaluate page differences, useJaccard coefficient :

,

, wherein

- a plurality of common words in the documents.

- a plurality of common words in the documents. As a result of the application of these methods, several thousand twins were identified. For a formal quality assessment, it is necessary to expertly mark the doubles on the training sample.

That's all for now, I hope the article will be useful to you. And we will be happy with new ideas on how to apply text mining to improve recommendations!