Review of the Lenovo DS6200 storage system

Hi Habr! Here is a detailed review of the Lenovo DS6200 storage system, prepared by THG.


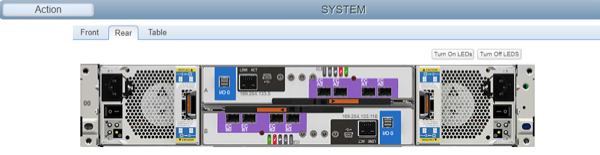


The new firmware significantly improves asynchronous replication. In addition to replication over Fibre Channel, it supports simultaneous replication to several target nodes, up to four.

User access rights to the storage management interface can now be configured flexibly on a per-group basis. System logs can now be downloaded via Secure File Transfer Protocol (SFTP).

The new firmware delivers up to 600,000 IOPS at a latency below 1 ms on DS6200 systems and up to 325,000 IOPS on the DS4200. Our performance testing, described below, shows that these figures are realistic. Such a high performance ceiling gives the Lenovo DS6200 a significant advantage over competitors such as the Dell SC4020 (300,000 IOPS) and the HP MSA2052 (200,000 IOPS).

With the rapid growth of stored data, the standard form factor, which fits 12 3.5-inch disks into a 2U enclosure, is no longer enough. Lenovo also offers high-density disk shelves, in which disks are installed vertically and the enclosure space is used more efficiently. The D3284 disk shelf holds 84 disks in a 5U enclosure, almost three times as many disks per rack unit.
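As a quick check of the "almost three times" figure, the disk density per rack unit can be computed directly from the numbers in the text. This is only illustrative arithmetic; the function name is hypothetical.

```python
def disks_per_rack_unit(disks: int, rack_units: int) -> float:
    """Return how many disks fit into one rack unit (1U) of enclosure height."""
    return disks / rack_units


# Figures from the text: 12 LFF disks in 2U versus 84 disks in the 5U D3284.
standard_density = disks_per_rack_unit(12, 2)
dense_density = disks_per_rack_unit(84, 5)
density_ratio = dense_density / standard_density

print(f"standard 2U shelf: {standard_density:.1f} disks per U")
print(f"D3284 5U shelf:    {dense_density:.1f} disks per U")
print(f"ratio:             {density_ratio:.1f}x")
```

The ratio works out to 2.8, which matches the "almost three times" wording.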

Until recently, D3284 disk shelves were supported only as expansion options for Lenovo ThinkSystem servers, but now they can also be connected to DS storage systems. The new firmware allows you to connect up to three D3284 shelves to the DS4200 and DS6200 models.

The management interface of Lenovo Storage DS systems was already quite simple and convenient, but with the addition of the EZ-Start function, the user can configure the system in about a minute. EZ-Start is a simplified version of the corresponding tabs of the main interface. As a result, the whole process, from creating disk groups to mapping volumes to hosts, takes just a few clicks.

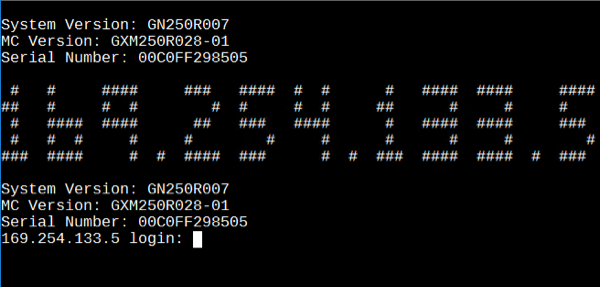

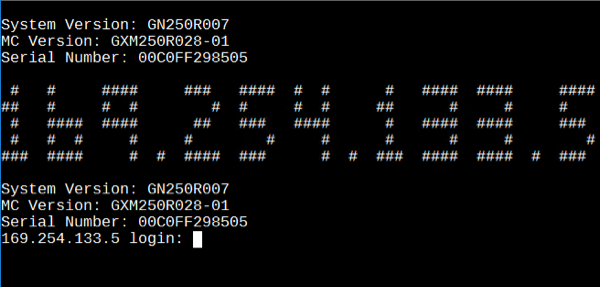

The primary tool for managing Lenovo DS series storage systems is the Lenovo Storage Manager, an embedded application with a web interface. By default, the network management interfaces are configured to use DHCP. If there is no DHCP server on your network and you need to configure the interfaces before connecting, the serial console comes to the rescue. Connect a mini-USB cable to the corresponding port of the first controller. The port is covered with a sticker warning you to install the driver before connecting the cable. The driver is needed only for Windows Server 2012 R2 and can be downloaded from the support page. Windows Server 2016 does not require a driver; on Linux, you need to load the standard usbserial module with the additional parameters specified in the ThinkSystem DS6200 / DS4200 / DS2200 / DS EXP Hardware Installation and Maintenance Guide.

After launching Tera Term with the parameters specified in the manual, press Enter to display the console interface. The default login and password are manage / !manage.

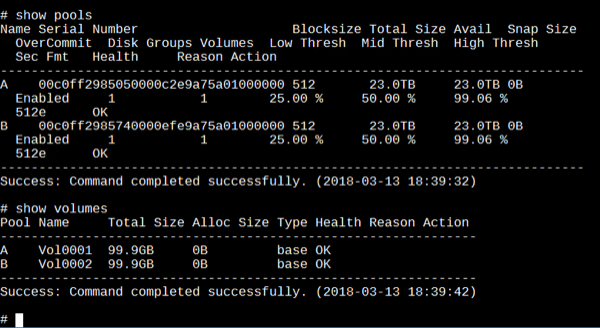

In addition to configuring the management interfaces, the serial console is needed to switch the operating mode of the host ports on converged FC/iSCSI controllers. The command-line interface supports any storage configuration and monitoring task. It is also available over the network via Telnet and SSH, which makes it suitable for automation.
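Since the CLI is reachable over SSH, a script can run a command and capture its output. The sketch below is a minimal illustration, not a supported tool: it assumes the system `ssh` client is installed and that non-interactive (key-based) login works for the account, and the helper names are hypothetical. Any CLI command passed to it, such as `show volumes`, is also an assumption here and should be checked against the CLI reference for your firmware.

```python
import subprocess
from typing import List


def build_ssh_command(host: str, user: str, cli_command: str) -> List[str]:
    """Build the argument list that runs one storage CLI command over SSH."""
    return ["ssh", f"{user}@{host}", cli_command]


def run_cli(host: str, user: str, cli_command: str, timeout: float = 30.0) -> str:
    """Run a single CLI command on the controller and return its text output.

    Raises subprocess.CalledProcessError if ssh exits with a non-zero status
    and subprocess.TimeoutExpired if the command does not finish in time.
    """
    result = subprocess.run(
        build_ssh_command(host, user, cli_command),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,
    )
    return result.stdout
```

A call such as `run_cli("192.0.2.10", "manage", "show volumes")` (with a placeholder address) would return the command output as a string that can then be parsed or logged.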

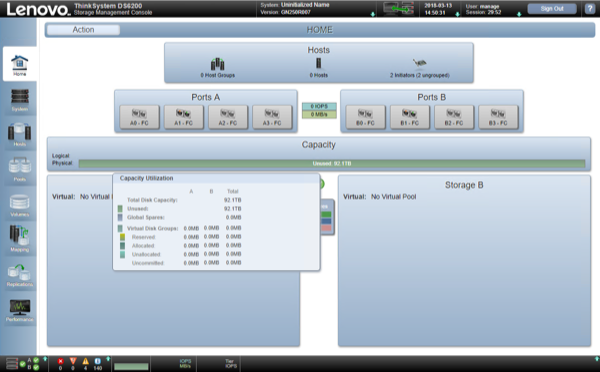

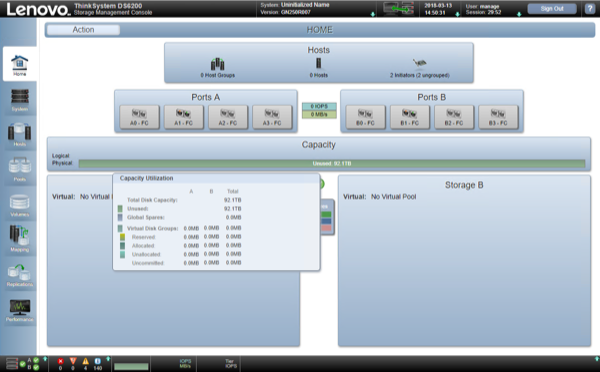

After configuring the network ports, you can connect to the web interface, the Lenovo Storage Management Console (hereinafter SMC). The main screen of the SMC will be clear to any user who has worked with similar block storage systems.

At the top of the main screen, the Hosts window shows the number of configured hosts and the corresponding initiators. In this case, the storage system automatically detected two connections to the FC ports and reports two initiators.

The Ports A and Ports B windows display the status of the host ports of the first and second controllers. The Capacity window shows the state of disk space: the number of disk groups in pools A and B and the capacity allocated to them. An unconfigured system shows the total raw disk capacity.

At the bottom of the screen are component health icons, counters of various notifications, and load indicators in IOPS and MB/s. On the left side of the screen are several tabs that correspond to the logical components of the system.

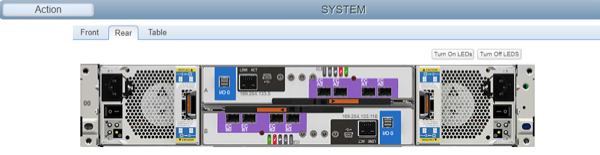

Now we proceed to configure the system. First, make sure there are no hardware errors. The System tab shows the status of all system components, both visually, as a replica of the physical LED indicators, and as a table (the Table tab). The Turn On LEDs button lights the identification LED on the storage system, expansion shelf, or disk so that the desired component can be found in the rack.

The Hosts tab is used to configure initiators and hosts. Initiators can be grouped into hosts, and several hosts can be combined into a host group; if logical volumes exist, volume mapping can be configured for each of these objects. In this case, two initiators are displayed, corresponding to the two FC HBA ports. There are no volumes yet, so we will return to this tab later.

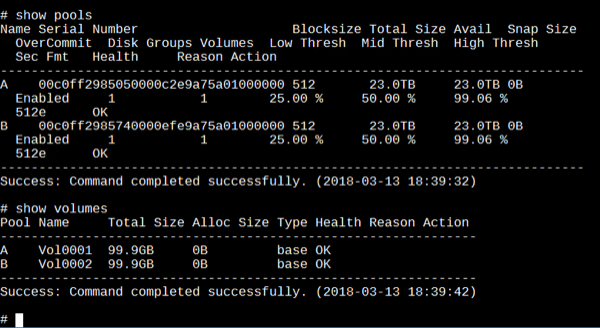

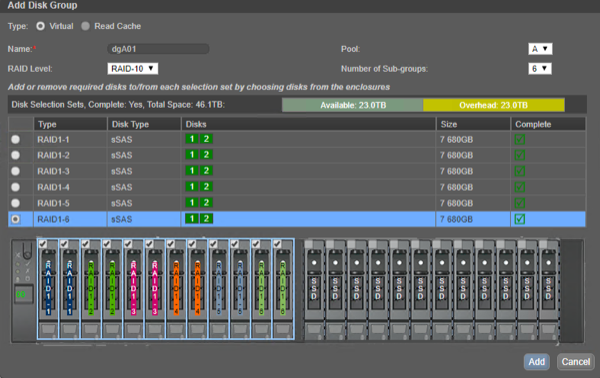

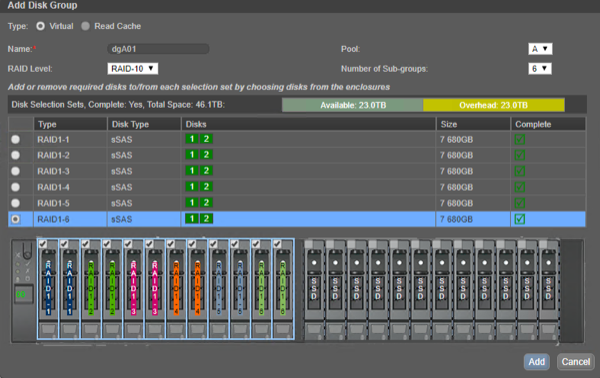

On the Pools tab, create disk groups. The screenshot shows an example of creating a RAID-10 disk group from 12 disks in pool A. Then a similar group from the remaining disks was created in pool B.
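To see what such a disk group yields in capacity, the usual RAID arithmetic can be applied. The sketch below is a simplified estimate: it ignores metadata and formatting overhead, treats RAID-1 as a two-disk mirror, and uses generic minimum disk counts that are assumptions rather than values taken from the DS6200 documentation. The function name is hypothetical; the 16-disk limit is the one quoted in this review.

```python
MAX_DISKS_PER_RAID_GROUP = 16  # limit quoted in the review for standard RAID groups


def usable_capacity_tb(level: str, disks: int, disk_tb: float) -> float:
    """Approximate usable capacity of one disk group, ignoring metadata overhead."""
    if disks > MAX_DISKS_PER_RAID_GROUP:
        raise ValueError("a standard RAID group holds at most 16 disks")
    if level == "RAID-1":
        if disks != 2:
            raise ValueError("RAID-1 is modelled here as a two-disk mirror")
        data_disks = 1
    elif level == "RAID-10":
        if disks < 4 or disks % 2:
            raise ValueError("RAID-10 needs an even number of disks, at least 4")
        data_disks = disks // 2   # half of the disks hold mirror copies
    elif level == "RAID-5":
        if disks < 3:
            raise ValueError("RAID-5 needs at least 3 disks")
        data_disks = disks - 1    # one disk's worth of parity
    elif level == "RAID-6":
        if disks < 4:
            raise ValueError("RAID-6 needs at least 4 disks")
        data_disks = disks - 2    # two disks' worth of parity
    else:
        raise ValueError(f"unsupported RAID level: {level}")
    return data_disks * disk_tb


# The group from the screenshot: RAID-10 across 12 SSDs of 3.84 TB each.
raid10_tb = usable_capacity_tb("RAID-10", 12, 3.84)
print(f"RAID-10, 12 x 3.84 TB: {raid10_tb:.2f} TB usable")
```

For the tested configuration this gives 23.04 TB per pool, half of the 46.08 TB raw capacity of the twelve drives.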

Instead of the usual disk group, you can add a group of one or two SSDs for read caching (the Read Cache item).

On the next tab, Virtual Volumes, volumes are created from the free space in the disk pools. If the system contains different types of disks (7200 rpm HDDs, 10/15 thousand rpm HDDs, SSDs), you can use tiering and assign volumes an affinity to the archive tier (Archive Tier) or the performance tier (Performance Tier). This property sets the preferred placement of the volume's data on disks of the corresponding performance level. For example, a volume with archive tier affinity will, whenever space is available, always be placed on 7200 rpm disks regardless of the load on the volume. Such a volume can be used to store backups, and even under significant load (for example, when backups are periodically tested for recoverability) the system will not waste resources moving blocks from this volume to faster disks. For systems with tiered storage, such a setting is essential: it avoids unnecessary redistribution of blocks between tiers and ensures a guaranteed level of performance for priority volumes.

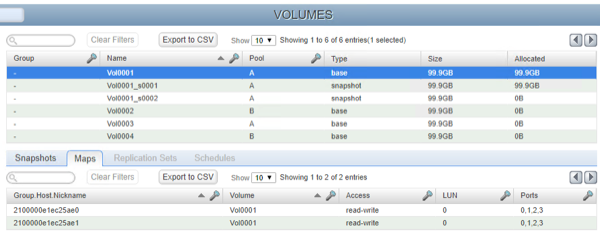

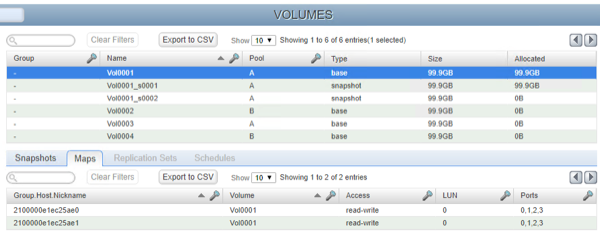

The screenshot shows the creation of a 100 GB volume in pool A; a similar volume was then created in pool B. On the same tab, you can manage volume snapshots.

Snapshots can be created manually or on a schedule, from base volumes or from other snapshots. There is also a snapshot refresh function: Reset Snapshot updates the selected snapshot to the current state of the volume.

After the volumes are created, what remains is mapping, that is, defining which initiators have access to which volumes. In this configuration, one host with two initiators is connected to the storage system: the first initiator is connected to controller A and the second to controller B. Each of the two volumes can therefore be mapped through both initiators, and the operating system will see two disks with two paths to each. Snapshots can also be mapped to hosts, with read-only or read-write access.

For multipath I/O to work properly in Windows Server, the MPIO feature must be installed. The connected storage is displayed on the Discover Multi-Paths tab; select it, click the Add button to enable MPIO support, and restart the OS.

The presence of an “optimal” and a “non-optimal” path to each volume is due to the ALUA architecture. Both controllers in such a storage system can accept host requests for a given volume, but at any moment only one controller is the “owner” of the volume and services its I/O directly; the second controller merely forwards data to it over the internal bus. Access through the owning controller is faster than through the “foreign” one, so this path is considered optimal. I/O is performed only along optimal paths and switches to non-optimal ones only when no optimal path is available (for example, when a cable is disconnected or a transceiver fails). On Lenovo DS storage systems, all volumes of pool A are served by the first controller (A), and volumes of pool B are served by controller B. Compared with Active-Passive, the ALUA architecture reduces path switching time in the event of a failure.

The Performance tab allows you to monitor system performance. The load is displayed at the level of ports, disks, disk groups and pools.
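The ALUA path preference described above can be illustrated with a small model. This is a conceptual sketch only, not the logic of any real multipath driver: the `Path` class, its fields, and the function names are hypothetical, and the only rule taken from the review is that pool A belongs to controller A and pool B to controller B.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Path:
    """One host-to-storage path; the fields are illustrative, not a real API."""
    name: str
    controller: str      # controller the path terminates on: "A" or "B"
    online: bool = True


def owning_controller(pool: str) -> str:
    """Per the review, pool A is served by controller A and pool B by controller B."""
    if pool not in ("A", "B"):
        raise ValueError(f"unknown pool: {pool}")
    return pool


def select_paths(paths: List[Path], pool: str) -> List[Path]:
    """Prefer online paths to the owning controller; otherwise use any online path."""
    owner = owning_controller(pool)
    online = [p for p in paths if p.online]
    optimal = [p for p in online if p.controller == owner]
    return optimal if optimal else online


paths = [Path("hba-port-0", "A"), Path("hba-port-1", "B")]
print([p.name for p in select_paths(paths, "A")])   # optimal path is in use

paths[0].online = False                              # e.g. cable pulled
print([p.name for p in select_paths(paths, "A")])   # falls back to the other path
```

With both links up, I/O for a pool A volume goes only through the port connected to controller A; once that path goes offline, the non-optimal path through controller B takes over.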

Test server characteristics:

The stated performance limit of the FC HBA used is 500,000 IOPS and 3200 MiB/s per port. A volume from one pool was tested, and during the tests both FC HBA ports were connected to the first controller. The tested disk group was a RAID-10 of 12 Lenovo 01KP065 SSDs (Samsung PM1633a). Testing was carried out after initialization of the RAID group had completed.

The following test was used to evaluate performance:

The results were averaged over 4 rounds from the selected steady-state window (the linear approximation within the window stays within 0.9 to 1.1 of the average). The performance of one controller was tested, connected through two 16 Gbps FC links. The graphs show doubled IOPS values. Before each series of tests, the volume was filled with random data.
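One way to read the steady-state criterion above is: fit a straight line through the per-round results in the window and require that the fitted line stays between 0.9 and 1.1 of the window average. The sketch below implements that reading; it is an interpretation rather than the exact procedure used in the tests, and the function names and sample numbers are made up for illustration.

```python
from statistics import mean
from typing import Sequence, Tuple


def linear_fit(values: Sequence[float]) -> Tuple[float, float]:
    """Least-squares line through (0, v0), (1, v1), ...; returns (slope, intercept)."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = mean(values)
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def is_steady(window: Sequence[float], low: float = 0.9, high: float = 1.1) -> bool:
    """True if the fitted line stays within [low, high] x average over the window."""
    if len(window) < 2:
        raise ValueError("need at least two rounds to fit a line")
    slope, intercept = linear_fit(window)
    average = mean(window)
    fit_start = intercept
    fit_end = intercept + slope * (len(window) - 1)
    return all(low * average <= v <= high * average for v in (fit_start, fit_end))


# Hypothetical IOPS results for four consecutive rounds.
stable_rounds = [100_000, 101_000, 99_500, 100_500]
ramping_rounds = [60_000, 80_000, 100_000, 120_000]

print(is_steady(stable_rounds), mean(stable_rounds))
print(is_steady(ramping_rounds))
```

Only a window that passes the check would be averaged into a reported data point; a window that is still ramping up or degrading is rejected.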

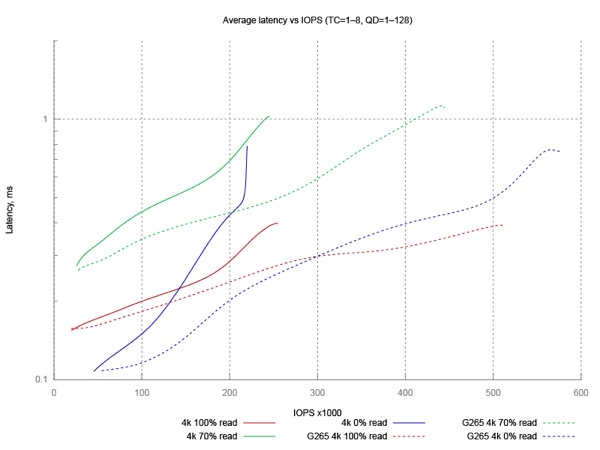

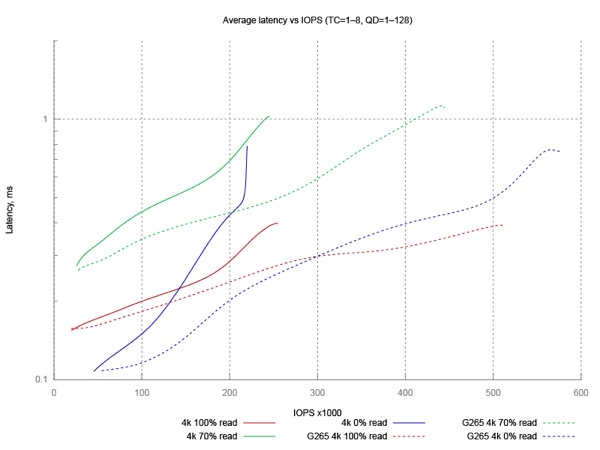

The DS6200 performs well. With the new G265 firmware, the average latency does not exceed 1 ms across almost the entire load range. 100,000 IOPS under a mixed load with a latency below 0.3 ms is an excellent result for modern storage systems with SSDs.

We decided to check the manufacturer's claim of 600 thousand IOPS at a latency below 1 ms with random access. We obtained about 560 thousand IOPS before the average latency reached 1 ms, which is quite close to the stated figure. The shortfall can be attributed to high processor load (it exceeded 65%) and to the FC interface (a SAS interface can provide lower latency). Additional performance with an FC/iSCSI connection could be obtained by following the advice in the system manual: when two of the four ports are used, spread the connections across different port groups, since each group is served by its own chip, or use all available ports.

The DS6200 shows fairly stable latency values. The average latency at low loads does not exceed 300 µs, which is an excellent result. Even the 99.99th-percentile latency stays within 3 ms and 20 ms under 100% and 70% read loads, respectively. Peak values begin to grow only with write access under high loads with deep queues, due to the characteristics of the installed SSDs.
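For readers less familiar with tail-latency metrics, the 99.99th percentile is the value that 99.99% of all requests stay at or below. The sketch below uses the nearest-rank definition, which is one of several common conventions and not necessarily the one used by the benchmark tool; the sample data is synthetic and the function name is hypothetical.

```python
import math
from fractions import Fraction
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    # Exact rational arithmetic avoids float rounding when computing the rank.
    rank = math.ceil(Fraction(str(pct)) * len(ordered) / 100)
    return ordered[rank - 1]


# Synthetic latencies in ms: 9,999 fast requests and one slow outlier.
latencies_ms = [0.2] * 9999 + [15.0]

print("p99.99:", percentile(latencies_ms, 99.99))
print("max:   ", percentile(latencies_ms, 100))
```

In this synthetic set a single slow request out of ten thousand does not move the 99.99th percentile, while the maximum jumps to the outlier; this is why percentile figures are a fairer measure of stability than peak values.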

Lenovo Storage DS is a modern line of dual-controller storage systems for small and medium businesses. The top-end DS6200 model provides a high level of performance for this class. Lenovo has every reason to recommend the DS6200 for use with modern solid-state drives. An intuitive interface allows you to put the system into operation in minutes. Three-tier storage and the ADAPT distributed array technology significantly increase the efficiency of drive utilization.

The well-thought-out organization of the entire Lenovo Storage DS product line is also worth noting. The two controllers provide a wide range of connectivity options: SAS, iSCSI over 1 or 10 Gbps interfaces, and 8 or 16 Gbps Fibre Channel. Additional functionality (tiered storage with SSDs, replication, a larger maximum number of snapshots) is offered as additional licenses, which keeps the initial cost of the system very attractive compared with competitors.

The Lenovo Storage SAN Portfolio is divided into three product lines:

- DS Series. Dual-controller storage with block access. The line consists of three models that differ in performance and scalability. The entry-level DS2200 supports up to 96 drives and delivers up to 100,000 IOPS, while the mid-range DS4200 and top-end DS6200 scale to 276 drives and deliver up to 325,000 and 600,000 IOPS, respectively. All storage systems in this line support various types of disks and drives (2.5" or 3.5") and controllers (SAS and converged FC/iSCSI). Lenovo DS series storage systems share the same storage virtualization model based on disk pools. Modern functionality is provided: snapshots, asynchronous replication, thin provisioning, tiered storage, and SSD read cache.

- V Series. This line includes the entry-level V3700 V2 and V3700 V2 XP, as well as the more powerful V5030 and V7000. Like the DS Series, it uses virtualization based on disk pools, but on a different software platform. The Lenovo V3700 V2 XP was studied in detail in our lab a little over a year ago. Storage systems of this family are of particular interest to companies running previous-generation systems from IBM. In addition to a familiar management interface, they offer users a convenient way to migrate data to the new storage system.

- DX Series. Software-defined storage systems built on standard Lenovo ThinkSystem rack servers and partner software. Systems of this class provide block, object, or unified data access, and one of them, the DX8200D, can virtualize external storage systems from other manufacturers. The DX Series currently includes three models: the DX8200N with NexentaStor software, the DX8200C with Cloudian HyperStore software, and the DX8200D with DataCore SANsymphony software. All necessary software components are pre-installed and pre-tested at the Lenovo factory, which ensures their full compatibility and performance. Lenovo also provides comprehensive service support for all of these systems.

It is also worth noting the Lenovo DSS-G solution, based on IBM Spectrum Scale software, which can also be counted among the software-defined storage systems in the company's product portfolio.

Storage Overview Lenovo DS6200 | Main technical characteristics

We had at our disposal the top model of the DS line, the DS6200, with converged controllers, 16 Gbps FC transceivers and 24 solid-state drives of 3.84 TB each. Detailed specifications are given in the table.

| Parameter | Value |
| --- | --- |
| Form factor | 2U, rack mount. |
| Controllers | DS6200 SAS controller or DS6200 FC/iSCSI controller. The storage system comes only in a dual-controller configuration. Both controllers in the system must be identical. |
| RAID types supported | RAID 1, 5, 6, 10; Rapid Data Protection Technology (ADAPT). |
| Memory | 32 GB per system (16 GB per controller). Cache protection (flash + supercapacitors). High-speed cache mirroring between controllers. |
| Disk bays | Up to 240 SFF (2.5") disks: 24 SFF disks in the storage system plus 24 SFF disks in each DS Series SFF expansion shelf, a maximum of 9 shelves. Up to 132 disks (24 SFF and 108 LFF): 24 SFF disks in the storage system plus 12 LFF disks in each DS Series LFF expansion shelf, a maximum of 9 shelves. Up to 276 disks (24 SFF and 252 LFF): 24 SFF disks in the storage system plus 84 LFF disks in each D3284 expansion shelf, a maximum of 3 shelves. Mixed configurations of DS expansion shelves for SFF and LFF disks are supported. Mixed configurations of DS expansion shelves and D3284 expansion shelves are not supported. |
| Connecting drives | Dual-port SAS3 (12 Gbps) disks are used. Storage system with controllers (ports on each controller): 24 internal SAS3 ports (12 Gbps); one 12 Gbps SAS x4 expansion port (Mini-SAS HD, SFF-8644) for connecting disk shelves. DS expansion shelves with two expander modules (ports on each module): 24 internal SAS3 ports (12 Gbps) in the SFF shelf; 12 internal SAS3 ports (12 Gbps) in the LFF shelf; three 12 Gbps SAS x4 expansion ports (Mini-SAS HD, SFF-8644). Two ports (A and C) are used for cascading other disk shelves; port B is not used. |
| Disks and drives | SFF (2.5") drives for DS enclosures: 300 GB, 600 GB, and 900 GB 15 thousand rpm 12 Gbps SAS HDDs; 600 GB, 900 GB, 1.2 TB, 1.8 TB, and 2.4 TB 10 thousand rpm 12 Gbps SAS HDDs; 1 TB and 2 TB 7200 rpm 12 Gbps nearline SAS HDDs; 3.84 TB, 7.68 TB, and 15.36 TB 12 Gbps SAS SSDs (1 DWPD endurance); 400 GB, 800 GB, and 1.6 TB 12 Gbps SAS SSDs (3 and 10 DWPD endurance). LFF (3.5") drives for DS enclosures: 900 GB 10 thousand rpm 12 Gbps SAS HDDs; 2 TB, 4 TB, 6 TB, 8 TB, 10 TB, and 12 TB 7200 rpm 12 Gbps nearline SAS HDDs; 400 GB 12 Gbps SAS SSDs (3 and 10 DWPD endurance). Drives for D3284 expansion shelves: 4 TB, 6 TB, 8 TB, 10 TB, and 12 TB 7200 rpm 12 Gbps nearline SAS HDDs; 400 GB 12 Gbps SAS SSDs (3 and 10 DWPD endurance); 3.84 TB, 7.68 TB, and 15.36 TB 12 Gbps SAS SSDs (1 DWPD endurance). |
| Disk space | Up to 2 PB. |
| Connection | DS6200 SAS controller: four 12 Gbps SAS ports (Mini-SAS HD, SFF-8644). FC/iSCSI controller: four SFP/SFP+ ports in two converged groups (both ports in a group must have the same connection type; the groups may have different connection types). Converged connectivity options: 1 Gbps iSCSI SFP (1 Gbps, UTP, RJ-45 twisted pair); 10 Gbps iSCSI SFP+ (1/10 Gbps, SW fiber, LC); 8 Gbps FC SFP+ (4/8 Gbps, SW fiber, LC); 16 Gbps FC SFP+ (4/8/16 Gbps, SW fiber, LC); 10 Gbps iSCSI SFP+ direct-attach cables (DAC). |
| Operating system support | Microsoft Windows Server 2012 R2 and 2016; Red Hat Enterprise Linux (RHEL) 6 and 7; SUSE Linux Enterprise Server (SLES) 11 and 12; VMware vSphere 5.5, 6.0, and 6.5. |
| Configuration limits | Maximum number of storage pools: 2 (1 per controller). Maximum storage pool size: 1 PiB. Maximum number of logical volumes: 1024. Maximum logical volume size: 128 TiB. Maximum number of disks in a RAID group: 16. Maximum number of RAID groups: 32. Maximum number of disks in an ADAPT disk group: 128 (minimum 12 disks). Maximum number of ADAPT disk groups: 2 (1 per pool). Maximum number of spare disks: 16. Maximum number of initiators: 8192 (1024 per controller host port). Maximum number of initiators per host: 128. Maximum number of host groups: 32. Maximum number of hosts in a host group: 256. Maximum number of disks in an SSD read cache disk group: 2 (RAID-0). Maximum number of SSD read cache disk groups: 2 (1 per pool). Maximum SSD read cache size: 4 TB. Maximum number of snapshots: 1024 (with an additional license). Maximum number of replication nodes: 4 (with an additional license). Maximum number of volumes with replication: 32 (with an additional license). |
| Cooling | Redundant cooling, two fans in the combined power/cooling units. |
| Power | Redundant power, two 580 W power supplies. |
| Hot-swappable components | Controller modules, expansion modules, SFP/SFP+ transceivers, disks and drives, power supplies. |
| Management | Gigabit Ethernet port (UTP, RJ-45) and serial port (Mini-USB) in the controller modules. Web-based interface, command-line interface (Telnet, SSH or serial console), SNMP, e-mail notifications; optionally, Lenovo XClarity. |
| Security | SSL, SSH, sFTP. |
| Warranty | Three-year warranty. Components can be replaced by the user or by a Lenovo technician (9x5, next business day). Optional warranty upgrades: replacement of any component by a Lenovo technician on a 24x7 basis with a 2- or 4-hour response time, 6- or 24-hour committed recovery time, warranty extension by 1 or 2 years, YourDrive YourData service, installation and configuration services. |
| Dimensions | Height: 88 mm; width: 443 mm; depth: 630 mm. |
| Weight | DS6200 SFF storage system (fully loaded): 30 kg. DS Series SFF expansion shelf (fully loaded): 25 kg. DS Series LFF expansion shelf (fully loaded): 25 kg. |
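The maximum drive counts in the table follow from simple arithmetic: the 24 internal SFF bays plus the bays of the largest allowed number of expansion shelves of one type. The short sketch below reproduces the three figures; the function and constant names are hypothetical, and the numbers are taken from the table.

```python
INTERNAL_SFF_BAYS = 24  # drive bays in the DS6200 enclosure itself


def max_drives(bays_per_shelf: int, max_shelves: int) -> int:
    """Internal bays plus the bays of the maximum number of expansion shelves."""
    return INTERNAL_SFF_BAYS + bays_per_shelf * max_shelves


with_sff_shelves = max_drives(24, 9)   # DS Series SFF expansion shelves
with_lff_shelves = max_drives(12, 9)   # DS Series LFF expansion shelves
with_d3284 = max_drives(84, 3)         # high-density D3284 shelves

print(with_sff_shelves, with_lff_shelves, with_d3284)
```

The results are 240, 132 and 276 drives, matching the limits listed in the table.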

The DS6200 comes in two versions: with SAS controllers and with converged FC/iSCSI controllers. The enclosure is 2U with 24 SFF (2.5") disk bays; LFF (3.5") disks can be used only in the corresponding expansion shelves. The package includes telescopic mounting rails (635–889 mm), a quick start guide, a mini-USB cable for connecting to the serial console, and power cables.

Storage Overview Lenovo DS6200 | Components

At the rear, two power supply units are located at the sides; their fans cool both the power supplies themselves and the other components of the storage system. The controller modules are in the center, one above the other.

The power cables are secured in the power supply connectors with plastic clips to prevent accidental disconnection. The power supply itself cannot be pulled out accidentally either: its metal handle is held in the closed position by a plastic latch. Four LED indicators on the back of the power supply unit show the status of the PSU, the status of the fan, and the input and output voltages.

The controller module is a typical SBB form factor unit. Its panel carries a SAS3 x4 SFF-8644 connector for expansion shelves, an RJ-45 connector for network management, a mini-USB connector for the serial console (sealed with a label warning you to install the driver before connecting the cable), several service connectors, and the host interface ports.

This configuration uses converged controllers with four SFP+ connectors, two of which are fitted with 16 Gbps FC transceivers. The lower-end DS2200 controller has only two SFP+ or SAS connectors, depending on the controller type.

Above the host ports are five LEDs, including cache status, identification, and controller ready-for-removal indicators. The expansion shelf port and all host ports have link indicators.

The disk tray is a robust metal and plastic construction. Its length is explained by the fact that Lenovo DS series storage systems were designed in accordance with the SBB (Storage Bridge Bay) specification, which describes the form factor of the controller modules and how they connect to the backplane. The architecture of such storage systems requires dual-port SAS disks so that both controllers have access to each disk. When the specification was developed, the choice of SAS drives was limited, so it provided for connecting SATA drives through an additional multiplexing board (interposer) that gave SATA drives a dual-port connection. To accommodate such a board, the specification increased the length of the disk trays. For the DS6200, as for other modern storage systems, only SAS disks are offered.

The tested system came with 24 Lenovo Storage 3.84TB 1DWD 2.5" SAS SSD (PM1633a) solid-state drives, part number 01KP065: 3.84 TB of capacity and an endurance of 1 drive write per day. Lenovo does not hide the OEM model name, since many customers want to know in advance the model of the drives used and their detailed specifications; in this case it is the PM1633a, a Samsung drive based on 3D TLC NAND.

Storage Overview Lenovo DS6200 | Functionality

Storage Virtualization Stack

The storage virtualization stack on Lenovo Storage DS systems is based on the concept of storage pools. At the lowest level are the physical disks and drives, combined into disk groups. Several disk groups are combined into a single storage pool (one pool per controller), and space is allocated from the pool to create volumes. Many functions of the storage system are built on virtual pools: snapshots, tiered storage, caching, performance scaling, and thin provisioning.

A disk pool is not just a RAID-0 across several disk groups. The distribution of data in the pool is dynamic. When disk groups are added to the pool, data blocks are redistributed to balance the load. The reverse operation is also possible: a disk group can be released (for example, to change its settings or to move it to another system) if there is enough free space in the pool.

RAID Disk Groups

To increase fault tolerance and performance, physical disks and drives are combined into disk groups using traditional RAID levels: 1, 10, 5 and 6. The maximum number of disks in a standard RAID group is 16; to further increase capacity and performance, several disk groups are combined into a common pool. The new firmware version adds another type of disk group, ADAPT.

Tiered data storage

Tiering is a technology that optimizes the use of drives with different performance levels by dynamically redistributing data blocks, depending on the load, between so-called tiers, that is, arrays with different performance levels. This storage system has three tiers: Archive for 7200 rpm disks, Standard for 10,000/15,000 rpm disks, and Performance for SSDs, which requires an additional license. A priority can be set at the volume level by assigning the volume an affinity to the Archive or Performance tier.

The DS6200 guide recommends, where possible, using two tiers instead of three to optimize performance: SSDs combined with either 10,000/15,000 rpm or 7200 rpm disks.

SSD cache

An alternative way to optimize performance is caching. For this purpose, you can use one or two SSDs for each of the two storage pools. The DS6200 SSD cache is read-only, but it has several advantages over tiered storage:

- The caching mechanism is simpler and puts no additional load on the storage system, because data does not have to be moved.

- The read cache does not require fault tolerance.

Storage Overview Lenovo DS6200 | G265 firmware enhancements

Lenovo recently released firmware version G265, which significantly expands the functionality of all DS series storage systems.

Rapid Data Protection

In addition to standard RAID groups (0, 1, 10, 5, 6), you can now create ADAPT disk groups (Rapid Data Protection technology). In terms of fault tolerance, an ADAPT array corresponds to RAID-6: up to two disks can be lost. Unlike traditional RAID, however, block allocation among the disks in ADAPT is not strictly fixed. The system can redistribute data blocks, checksum blocks and spare space across all disks in a group, which makes it possible, for example, to use disks of different sizes efficiently in one group, or to add disks to a group and then rebalance data placement. The main advantage of ADAPT disk groups is a significant reduction in rebuild time after a disk failure. The estimated rebuild time for a group of 96 4 TB disks after a single disk failure is only 70 minutes, versus 11 hours or more for RAID-6.
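To put the quoted rebuild times in perspective, the following Python sketch converts them into an implied rebuild rate. It assumes, purely for illustration, that a full 4 TB (decimal) has to be reconstructed in both cases; the function name is hypothetical and the figures are the estimates from the text, not measurements.

```python
def implied_rebuild_rate_mb_s(disk_tb: float, rebuild_minutes: float) -> float:
    """Average rate needed to reconstruct one full disk in the given time."""
    megabytes = disk_tb * 1_000_000          # 1 TB = 1,000,000 MB (decimal)
    return megabytes / (rebuild_minutes * 60)


ADAPT_MINUTES = 70        # estimate quoted for a 96-disk ADAPT group
RAID6_MINUTES = 11 * 60   # "11 or more hours" quoted for RAID-6

speedup = RAID6_MINUTES / ADAPT_MINUTES
print(f"speedup: {speedup:.1f}x")
print(f"RAID-6: {implied_rebuild_rate_mb_s(4, RAID6_MINUTES):.0f} MB/s")
print(f"ADAPT:  {implied_rebuild_rate_mb_s(4, ADAPT_MINUTES):.0f} MB/s")
```

The RAID-6 figure is in the range of what a single hard disk can sustain, which fits a rebuild that funnels all writes into one spare disk. The ADAPT figure is well beyond a single hard disk, which is consistent with the rebuild being spread over spare space on many disks at once.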

Replication

Asynchronous replication has been significantly improved in the new firmware. In addition to support for replication over Fibre Channel, simultaneous replication to several target nodes (up to four) is now supported.

Security

The new firmware adds flexible, group-based configuration of user access rights to the storage interface. System logs can now be downloaded via Secure File Transfer Protocol (SFTP).

Performance improvement

With the new firmware, DS6200 systems can reach up to 600,000 IOPS at a latency below 1 ms, and DS4200 systems up to 325,000 IOPS. The performance testing we conducted, described below, shows that these figures are realistic. Such a high performance ceiling gives the Lenovo DS6200 a significant competitive advantage over the Dell SC4020 (300,000 IOPS) and the HP MSA2052 (200,000 IOPS).

Storage Overview Lenovo DS6200 | Disk Shelf Support D3284

With the rapid growth of stored data volumes, the standard form factor, which fits 12 3.5-inch disks in a 2U enclosure, is no longer enough. Lenovo also offers high-density disk shelves, in which the disks are installed vertically and the enclosure space is used more efficiently. The D3284 disk shelf holds 84 disks in a 5U enclosure, almost three times as many disks per rack unit.

Until recently, D3284 disk shelves were supported only as expansion options for Lenovo ThinkSystem servers, but now they can also be connected to DS storage systems. The new firmware allows up to three D3284 shelves to be connected to the DS4200 and DS6200 models.
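The density comparison can be checked with a few lines of Python. This is plain arithmetic on the figures given in the text; the total bay count is simply the sum of the 24 bays in the DS6200 enclosure and three 84-disk shelves, and is not presented here as a verified support limit.

```python
def disks_per_rack_unit(disks: int, rack_units: int) -> float:
    """Disk bays per 1U of rack space."""
    return disks / rack_units


standard = disks_per_rack_unit(12, 2)   # classic 2U shelf with 12 LFF disks
dense = disks_per_rack_unit(84, 5)      # D3284: 84 disks in 5U
print(f"{standard:.1f} vs {dense:.1f} disks per U, ratio {dense / standard:.1f}")

BASE_BAYS = 24          # SFF bays in the DS6200 enclosure itself
MAX_D3284_SHELVES = 3   # limit quoted for the new firmware
total_bays = BASE_BAYS + MAX_D3284_SHELVES * 84
print(f"total bays with three D3284 shelves: {total_bays}")
```

The ratio works out to 2.8, which matches the "almost three times" claim.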

Storage Overview Lenovo DS6200 | EZ-Start

The management interface of Lenovo Storage DS systems is already quite simple and convenient, but with the addition of the EZ-Start function, the user can configure the system in just a minute. EZ-Start is a simplified version of the corresponding tabs of the main interface. As a result, the whole process from creating disk groups to connecting volumes to hosts can be done with a few clicks.

Storage Overview Lenovo DS6200 | Management

Command line

The primary management tool for Lenovo DS series storage systems is the Lenovo Storage Manager, an embedded application with a web interface. By default, the network management interfaces of the storage system are configured to use DHCP. If there is no DHCP server on your network and you need to configure the interfaces before connecting, the serial console comes to the rescue. Connect a mini-USB cable to the corresponding port of the first controller. The port is covered with a sticker warning you to install the driver before connecting the cable. The driver is needed only for Windows Server 2012 R2 and can be downloaded from the support page. Windows Server 2016 does not require a driver; on Linux, you need to load the standard usbserial module with the additional parameters specified in the ThinkSystem DS6200 / DS4200 / DS2200 / DS EXP Hardware Installation and Maintenance Guide.

After launching Tera Term with the parameters specified in the manual, press Enter to display the console interface. The default login and password are manage / !manage.

In addition to configuring service interfaces, a serial console is needed to switch the mode of operation of host ports in converged FC / iSCSI controllers. Through the command line interface, you can perform any actions to configure and monitor storage. It is also available on the network, via Telnet and SSH, which allows it to be used for automation.

Lenovo Storage Management Console

After configuring the network ports, you can connect to the web interface - Lenovo Storage Management Console (hereinafter - SMC). The interface of the main screen of the SMC will be clear to any user who has worked with similar block storage systems.

At the top of the main screen (Hosts window) you can see the number of configured hosts and the corresponding initiators. In this case, the storage system automatically identified two connections to the FC ports and indicates the presence of two initiators.

The Ports A and Ports B windows display the status of the host ports of the first and second controllers. The Capacity window shows the status of disk space: the number of disk groups in pools A and B, the amount allocated to them. The unconfigured system shows the total raw disk capacity.

At the bottom of the screen there are icons of indicators of the state of components, counters of various notifications and load indicators in IOPS and MB / s. On the left side of the screen there are several tabs that correspond to the logical components of the system.

We proceed to configure the system. First, make sure there are no hardware errors. The System tab shows the status of all system components both graphically, mirroring the physical LED indicators, and as a table (Table tab). The Turn On LEDs button lights the identification LED on the storage system, expansion shelf, or disk so that the desired component can be found in the rack.

The Hosts tab is responsible for configuring initiators and hosts. Initiators can be grouped into hosts, and several hosts can be grouped together; if logical volumes exist, each of these objects can be given its own volume mapping. In this case, two initiators are displayed, corresponding to the two FC HBA ports. There are no volumes yet; we will return to this tab later.

On the Pools tab, create disk groups. The screenshot shows an example of creating a RAID-10 disk group from 12 disks in pool A. Then a similar group from the remaining disks was created in pool B.

Instead of the usual disk group, you can add a group of one or two SSDs for read caching (the Read Cache item).

On the next tab (Virtual Volumes), volumes are created from the free space in the disk pools. If the system contains different types of drives (7200 rpm HDD, 10,000/15,000 rpm HDD, SSD), you can use tiering by assigning volumes an affinity to the Archive tier or the Performance tier. This property controls the preferred placement of a volume's data on drives of the corresponding performance level. For example, a volume with Archive tier affinity will always be placed on 7200 rpm disks whenever space is available, regardless of the load on the volume. Such a volume can then be used to store backups, and even under significant load (for example, during periodic restore tests of the backups) the system will not waste resources moving blocks from this volume to the faster drives. For systems with tiered storage, this setting is essential: it avoids unnecessary redistribution of blocks between tiers and ensures a guaranteed level of performance for priority volumes.
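The effect of tier affinity can be illustrated with a toy placement rule in Python. This is only a sketch of the behavior described above, not the algorithm the DS6200 firmware uses: the names `Tier` and `choose_tier`, the "hot block" flag and the free-page counters are all hypothetical.

```python
from enum import Enum
from typing import Dict, Optional


class Tier(Enum):
    ARCHIVE = "7200 rpm HDD"
    STANDARD = "10/15k rpm HDD"
    PERFORMANCE = "SSD"


FASTEST_FIRST = [Tier.PERFORMANCE, Tier.STANDARD, Tier.ARCHIVE]
SLOWEST_FIRST = list(reversed(FASTEST_FIRST))


def choose_tier(affinity: Optional[Tier], is_hot: bool,
                free_pages: Dict[Tier, int]) -> Optional[Tier]:
    """Pick a tier for one block; affinity=None means 'no affinity'."""
    if affinity is Tier.ARCHIVE:
        order = SLOWEST_FIRST          # stay on slow disks regardless of load
    elif affinity is Tier.PERFORMANCE:
        order = FASTEST_FIRST          # prefer SSD regardless of load
    else:
        order = FASTEST_FIRST if is_hot else SLOWEST_FIRST
    for tier in order:
        if free_pages.get(tier, 0) > 0:
            return tier                # first preferred tier with free space
    return None                        # pool is full


free = {Tier.ARCHIVE: 100, Tier.STANDARD: 100, Tier.PERFORMANCE: 100}
# A heavily read backup volume with Archive affinity still stays on 7200 rpm disks.
print(choose_tier(Tier.ARCHIVE, is_hot=True, free_pages=free))
```

In this model the affinity overrides the load-based decision, and the fallback to another tier happens only when the preferred tier has no free space, which mirrors the "whenever space is available" wording above.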

The screenshot shows the creation of a 100 GB volume from pool A; a similar volume was then created from pool B. On the same tab, you can manage volume snapshots.

Snapshots can be created manually or on a schedule, from base volumes or from other snapshots. There is also a snapshot refresh function: Reset Snapshot updates the selected snapshot to the current state of the volume.

After the volumes are created, what remains is mapping: defining which initiators have access to which volumes. In this configuration, one host with two initiators is connected to the storage system; the first initiator is connected to controller A and the second to controller B. Each of the two volumes can therefore be presented through both initiators, and the operating system will see two disks with two paths to each. Snapshots can also be mapped to hosts, with read-only or read-write access.

For multipath I/O to work properly in Windows Server, the MPIO feature must be installed. The connected storage system is displayed on the Discover Multi-Paths tab; select it, click the Add button to enable MPIO support, and restart the OS.

The presence of an “optimal” and a “non-optimal” path to each volume is due to the ALUA architecture. Both controllers in such a storage system can accept host requests for a given volume, but at any moment only one controller is the owner of the volume and services its I/O directly; the second controller merely forwards data to it over the internal bus. Access through the owning controller is faster than through the other one, so this path is considered optimal. I/O is performed only along the optimal paths, and the host switches to non-optimal paths only when no optimal path is available (for example, when a cable is disconnected or a transceiver fails). On Lenovo DS storage systems, all volumes of pool A are served by the first controller (A), and volumes of pool B are served by controller B. Compared with Active-Passive, the ALUA architecture reduces path switching time in the event of a failure.

The Performance tab allows you to monitor system performance. The load is displayed at the level of ports, disks, disk groups and pools.
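The ALUA path selection rule described above can be sketched in a few lines of Python. This is a simplified host-side model with hypothetical names (`Path`, `usable_paths`); a real multipath driver such as Windows MPIO also handles load balancing policies, timeouts and path state reporting.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Path:
    name: str
    controller: str      # controller the path terminates on: "A" or "B"
    online: bool = True


def usable_paths(paths: List[Path], owner: str) -> List[Path]:
    """Return optimal paths if any are online, otherwise the non-optimal ones."""
    optimal = [p for p in paths if p.online and p.controller == owner]
    if optimal:
        return optimal
    return [p for p in paths if p.online]   # fall back through the other controller


# A volume from pool A is owned by controller A.
paths = [Path("hba0 -> A", "A"), Path("hba1 -> B", "B")]
print([p.name for p in usable_paths(paths, owner="A")])

# The cable to controller A is pulled: I/O moves to the non-optimal path.
degraded = [Path("hba0 -> A", "A", online=False), Path("hba1 -> B", "B")]
print([p.name for p in usable_paths(degraded, owner="A")])
```

Because the non-optimal path is already known and usable, the host only has to change which path it prefers, which is why failover is quicker than in an Active-Passive design.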

Storage Overview Lenovo DS6200 | Performance

Configuration

Test server characteristics:

- Processor: Intel Xeon E5-2620 V4

- Motherboard: Supermicro X10SRi-F, BIOS 3.0a

- Memory: 4x8 GB DDR4-2400 ECC RDIMM

- System disk: Intel S3700 100 GB

- Operating system: Microsoft Windows Server 2016

- Load generator: FIO 3.2

- Fibre Channel HBA: Dual Port 16 Gbps Lenovo 00Y3343 (QLogic QLE2662)

The stated performance limit of the FC HBA is 500,000 IOPS and 3200 MiB/s per port. A volume from one pool was tested, and during the tests both FC HBA ports were connected to the first controller. The tests measured the performance of a RAID-10 disk group of 12 Lenovo 01KP065 SSDs (Samsung PM1633a). Testing was conducted after initialization of the RAID group had completed.

Testing

The following test was used to evaluate performance:

- Block size: 4096 bytes

- Access: random (old firmware, firmware G265), sequential (firmware G265)

- Read / write ratio: 100/0, 70/30, 0/100

- Queue depth: 1, 2, 4, 8, 16, 32, 64, 128

- Number of threads: 1, 2, 4, 8

- Number of rounds: 15

- Duration of the round: 30 s

- Warm-up interval of the round: 5 s
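The random-access part of the test matrix listed above could be generated along the following lines. This is a sketch under assumptions, not the job files actually used in the review: the target device path and the job name are hypothetical, the `windowsaio` engine is assumed because the test server runs Windows, and each command would have to be repeated for the 15 rounds.

```python
from itertools import product

READ_PERCENTAGES = (100, 70, 0)
QUEUE_DEPTHS = (1, 2, 4, 8, 16, 32, 64, 128)
THREAD_COUNTS = (1, 2, 4, 8)
TARGET = r"\\.\PhysicalDrive1"   # hypothetical path of the tested volume


def fio_args(read_pct: int, iodepth: int, numjobs: int) -> list:
    """Build one fio command line for a 4 KiB random-access round."""
    if read_pct == 100:
        mode = ["--rw=randread"]
    elif read_pct == 0:
        mode = ["--rw=randwrite"]
    else:
        mode = ["--rw=randrw", f"--rwmixread={read_pct}"]
    return [
        "fio", "--name=ds6200", f"--filename={TARGET}",
        "--ioengine=windowsaio", "--thread", "--direct=1", "--bs=4096",
        *mode, f"--iodepth={iodepth}", f"--numjobs={numjobs}",
        "--time_based", "--runtime=30", "--ramp_time=5", "--group_reporting",
    ]


def job_matrix():
    """Yield one command per combination of read ratio, queue depth and threads."""
    for read_pct, iodepth, numjobs in product(
            READ_PERCENTAGES, QUEUE_DEPTHS, THREAD_COUNTS):
        yield fio_args(read_pct, iodepth, numjobs)


print(len(list(job_matrix())), "combinations")
print(" ".join(fio_args(70, 32, 4)))
```

Three read/write ratios, eight queue depths and four thread counts give 96 load points per access pattern, which explains why the latency graphs cover such a wide load range.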

The results were averaged over 4 rounds from the selected steady-state window (the linear fit within the window stays within 0.9 to 1.1 of the average). The performance of one controller was tested over two 16 Gbps FC links, and the graphs show the IOPS values doubled. Before each series of tests, the volume was filled with random data.
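One possible reading of the steady-state criterion is sketched below in Python: a window of 4 consecutive rounds counts as steady if the least-squares line fitted through it stays within 0.9 to 1.1 of the window average at both ends. The exact rule used in the review is not spelled out, so the function names, the choice of the first qualifying window and the sample data are assumptions.

```python
from statistics import mean
from typing import List, Optional, Tuple


def linear_fit(ys: List[float]) -> Tuple[float, float]:
    """Least-squares slope and intercept for points (0, y0), (1, y1), ..."""
    n = len(ys)
    x_bar = (n - 1) / 2
    y_bar = mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in range(n))
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def is_steady(window: List[float], tolerance: float = 0.10) -> bool:
    """True if the fitted line stays within +/- tolerance of the average."""
    avg = mean(window)
    slope, intercept = linear_fit(window)
    ends = (intercept, intercept + slope * (len(window) - 1))
    return all((1 - tolerance) * avg <= v <= (1 + tolerance) * avg for v in ends)


def steady_state_average(rounds: List[float], size: int = 4) -> Optional[float]:
    """Average of the first steady window of `size` rounds, or None."""
    for start in range(len(rounds) - size + 1):
        window = rounds[start:start + size]
        if is_steady(window):
            return mean(window)
    return None


# Made-up IOPS per round (in thousands): ramp-up followed by a plateau.
print(steady_state_average([10, 50, 90, 100, 100, 100, 100]))
```

In the made-up series, the windows that still contain the ramp-up are rejected because their fitted line leaves the 10% band, and the reported value comes from the first window that has flattened out.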

Average latency

The DS6200 performs well. With the new G265 firmware, the average latency stays below 1 ms across almost the entire load range. 100,000 IOPS under a mixed load with a latency below 0.3 ms is an excellent result for modern storage systems with SSDs.

We decided to check the manufacturer's claim of 600,000 IOPS at a latency below 1 ms with random access. We obtained about 560,000 IOPS before the average latency reached 1 ms, which is quite close to the stated figure. The shortfall can be attributed to high processor load (above 65%) and to the FC interface (a SAS interface can provide lower latency). Additional performance with an FC / iSCSI connection could be obtained by following the advice in the system manual: either spread the two connections across different port groups of the four ports, since each group is served by its own chip, or use all available ports.
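The measured figure can be related to bandwidth and queue depth with a short Python calculation. The conversions are standard (IOPS times block size, and Little's law for the number of I/Os in flight); the function names are hypothetical, and the halved value reflects the fact that one controller was tested and the graphs show doubled IOPS.

```python
BLOCK_BYTES = 4096   # block size used in the tests


def throughput_mib_s(iops: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Data rate in MiB/s for a given IOPS figure and block size."""
    return iops * block_bytes / 2**20


def outstanding_ios(iops: float, latency_s: float) -> float:
    """Little's law: mean I/Os in flight = completion rate x mean latency."""
    return iops * latency_s


measured = 560_000   # doubled figure reported for both controllers
print(throughput_mib_s(measured))        # both controllers
print(throughput_mib_s(measured / 2))    # the single controller actually tested
print(outstanding_ios(measured, 0.001))  # I/Os in flight at 1 ms average latency
print(128 * 8)                           # deepest test point: queue depth x threads
```

At 4 KiB blocks the doubled figure corresponds to 2187.5 MiB/s, and the tested controller carried half of that over two links, so link bandwidth was not the limiting factor for small-block I/O.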

99th and 99.99th latency percentiles

The DS6200 shows fairly stable latency values. The average latency at low loads does not exceed 300 µs, which is an excellent result. Even the 99.99th percentile latency stays within 3 ms and 20 ms for 100% and 70% read loads, respectively. Peak values begin to grow only with write access under high loads with large queues, owing to the characteristics of the installed SSDs.

Storage Overview Lenovo DS6200 | Conclusion

Lenovo Storage DS is a modern line of dual-controller storage systems for small and medium businesses. The top-of-the-line DS6200 provides a high level of performance for its class. Lenovo has every reason to recommend the DS6200 for use with modern solid state drives. An intuitive interface lets you put the system into operation in minutes. Three-tier storage and ADAPT distributed array technology significantly increase the efficiency of drive utilization.

The well-thought-out organization of the entire Lenovo Storage DS product line is also worth noting. Two types of controllers provide a wide range of connectivity interfaces: SAS, iSCSI over gigabit or 10-gigabit interfaces, and 8 or 16 Gbps Fibre Channel. Additional functionality (tiered storage using SSDs, replication, a larger maximum number of snapshots) is offered as additional licenses, which keeps the initial cost of the system very attractive compared with competitors.