News from the depths of CS188.1x Artificial Intelligence, or final impressions

Prologue

Hello again!

Since the first part was received favorably, I decided to write up all my impressions now that I have completed the course.

Previously: I decided to learn Python. After Lutz and Nick Parlante, I signed up for a fundamental CS course (unfortunately, not always in Python style) and for the easy course “Python for the smallest” (already completed). Somewhere in between I got involved in CS188.1x AI, reasoning that if I am practicing Python anyway, I might as well do it on serious things.

In the previous review I managed to cover the first 2 weeks of the course (about 30%). On November 19 the hard deadline for the final exam passed, and I want to sum up.

We continue to get acquainted with the intricacies of AI life

After

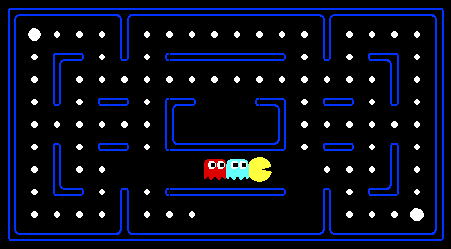

Next, Game Trees and Decision Theory awaited us. The professor introduced solving game problems in the face of opposition from an adversary (in Pacman's case, the ghosts). In principle, it is the same Search, only on a Game Tree that takes all possible moves of the opponent into account, and it is not optimal in terms of time either. Alpha-Beta Pruning stands apart as a way to significantly reduce the time it takes to traverse a large tree by cutting off individual branches that are guaranteed to be unpromising.
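To make the pruning idea concrete, here is a minimal sketch that runs plain minimax and Alpha-Beta on a tiny hand-made tree and counts how many leaves each one evaluates. The nested-list tree, the function names and the counter are my own illustration, not the Pacman project code.

```python
import math

def minimax(node, maximizing, counter):
    """Plain minimax over a nested-list tree; leaves are numbers."""
    if not isinstance(node, list):
        counter[0] += 1  # count every evaluated leaf
        return node
    values = [minimax(child, not maximizing, counter) for child in node]
    return max(values) if maximizing else min(values)

def alphabeta(node, maximizing, counter, alpha=-math.inf, beta=math.inf):
    """Same search, but stops exploring a branch once it cannot matter."""
    if not isinstance(node, list):
        counter[0] += 1
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, counter, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:  # the minimizer above will never allow this branch
                break
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, counter, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:  # the maximizer above already has something better
            break
    return best

# Hypothetical toy tree: the root is our move, each inner list is the opponent's reply.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]

plain_count, pruned_count = [0], [0]
plain_value = minimax(tree, True, plain_count)
pruned_value = alphabeta(tree, True, pruned_count)
print(plain_value, plain_count[0])
print(pruned_value, pruned_count[0])
```

Both searches return the same value for the root, but the pruned one skips two leaves in the middle branch: once the opponent can force a 2 there, it is already worse than the 3 guaranteed by the first branch.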

Project 2 said to me: "Hey man, bring your Python over here again." Once again it was a sharp, though no longer unexpected, transition from words to code. The same Pacman world remained, only enemy agents were added.

First we had to implement ReflexAgent, which does not plan anything and acts exclusively on the current game situation. Next came MinimaxAgent, which assumes optimal actions by the enemy and does not look far into the future (it works slowly). Then, with Alpha-Beta, you clearly see how the time needed to look a few moves ahead drops by an order of magnitude. ExpectimaxAgent acts on the assumption that the opponent may be stupid, which sometimes lets you emerge victorious from seemingly fatal game situations. And for dessert: "Your extreme ghost-hunting, pellet-nabbing, food-gobbling, unstoppable evaluation function." I got 0/6 for it, because I wrote the logic but did not manage to debug it properly. Conclusion: do not sit down to the project right before the deadline if the deadline is on Sunday night and you have to work on Monday.
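The difference between MinimaxAgent and ExpectimaxAgent described above can be sketched on a toy decision. I assume here that the opponent picks its reply uniformly at random in the expectimax case. The action names and payoffs are invented for illustration and have nothing to do with the real project code.

```python
def minimax_value(outcomes):
    """Value of an action if the opponent always picks the worst outcome for us."""
    return min(outcomes)

def expectimax_value(outcomes):
    """Value of an action if the opponent picks uniformly at random (assumption)."""
    return sum(outcomes) / len(outcomes)

def best_action(actions, value_fn):
    """Return the action name whose outcomes score highest under value_fn."""
    return max(actions, key=lambda name: value_fn(actions[name]))

# Hypothetical payoffs: each action leads to two possible opponent replies.
actions = {"safe": [3, 3], "risky": [0, 10]}

minimax_choice = best_action(actions, minimax_value)
expectimax_choice = best_action(actions, expectimax_value)
print(minimax_choice, expectimax_choice)
```

Minimax sticks with the safe move because it expects the worst reply, while expectimax takes the gamble because the average payoff is higher. Against a ghost that really does blunder sometimes, that gamble is what occasionally saves a seemingly lost game.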

Teaching AI to learn, or the inimitable "Claw"

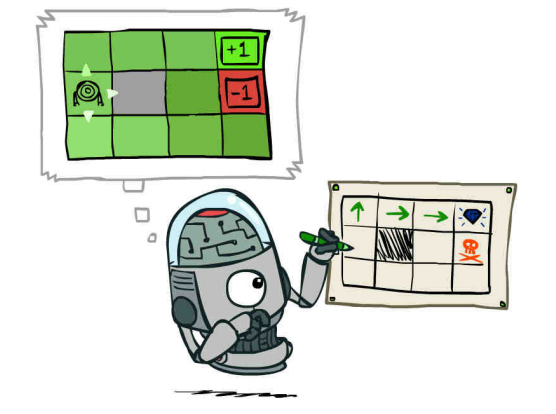

The last two weeks were devoted to Markov Decision Processes (MDP), a way of representing the world as an MDP, and to Reinforcement Learning (RL), where we know nothing about the conditions of the world around us and must somehow learn them. The key idea is rewards: positive or negative reinforcement for various actions.

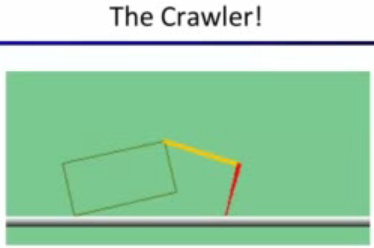

And then, of course, from the very first meeting the great and terrible Claw took possession of my mind.

Funny and scary at the same time, it waves its paw stupidly, but it needs to learn how to walk in order to go to college :) I am attaching a piece of the video from the lecture, which makes it clearer. Looking ahead, I will say that in the last project I managed to teach my own pet to walk.

Offtopic and dreams

Just at that time, an excellent article appeared on Habr with great DIY videos about a hexapod. Now the idea of building one like it haunts me (I have an STMF4DISCOVERY lying around with a gyroscope and accelerometer, and a wifi / bluetooth dongle attached), and of trying to teach it to walk. Just to watch the insect take on a pseudo-life. Eh, if only I could force myself ...

These two topics seemed rather complicated to me. Take, for example, the question of balancing Exploration vs Exploitation: when have we learned enough, and when is it time to act on the acquired knowledge?
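One common way to handle the Exploration vs Exploitation question is an epsilon-greedy rule: with a small probability try a random action, otherwise take the best one known so far. Below is a minimal sketch of it. The function name, the `q_values` dictionary and the numbers are my own assumptions for illustration.

```python
import random

def epsilon_greedy(q_values, epsilon, rng):
    """Pick an action from q_values (action -> estimated value).

    With probability epsilon explore (random action),
    otherwise exploit (action with the highest estimate).
    """
    if rng.random() < epsilon:
        return rng.choice(sorted(q_values))  # explore
    return max(q_values, key=q_values.get)   # exploit

# Hypothetical value estimates for one state.
q_values = {"north": 0.4, "east": 0.9, "south": -0.1}

rng = random.Random(0)
greedy_pick = epsilon_greedy(q_values, 0.0, rng)  # never explores
mixed_picks = [epsilon_greedy(q_values, 0.2, rng) for _ in range(10)]
print(greedy_pick, mixed_picks)
```

A typical design choice is to start with a large epsilon and shrink it over time, so the agent explores a lot while it knows nothing and acts on its knowledge later.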

In project 3 we took a short break from the maze with the yellow bun and solved problems in the so-called GridWorld.

How do we decide where it is best to go when we press “north” and, with some probability, step “east” instead? How should we behave while exploring this world? What is good, and what is bad? The implemented algorithms turned out to work for Claw as well. With a little tweaking, Pacman is also ready to learn. It turned out to be very interesting to go back and see how our Pacman behaves after training over several hundred games against the ghosts. Here lies the difference in approaches: write ExpectiMax Search to solve the game in the maze, or teach Pacman how to win.
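The learning step behind "teach Pacman how to win" can be sketched as a tabular Q-learning update, where the agent nudges its estimate for a state-action pair toward the reward it just observed plus the discounted best estimate for the next state. This is a minimal sketch under my own assumptions: the state names, the learning rate `alpha` and the discount `gamma` are illustrative and are not taken from the project.

```python
from collections import defaultdict

def q_update(q, state, action, reward, next_state, next_actions,
             alpha=0.5, gamma=0.9):
    """One Q-learning step:
    Q(s, a) <- (1 - alpha) * Q(s, a) + alpha * (r + gamma * max_a' Q(s', a'))
    """
    # Best known value of the next state; 0.0 if it has no actions (terminal).
    best_next = max((q[(next_state, a)] for a in next_actions), default=0.0)
    sample = reward + gamma * best_next
    q[(state, action)] = (1 - alpha) * q[(state, action)] + alpha * sample
    return q[(state, action)]

# Hypothetical experience: in cell "A" we pressed "north", got reward 1.0
# and ended up in cell "B" (wherever the noisy world actually moved us).
q = defaultdict(float)
first = q_update(q, "A", "north", 1.0, "B", ["north", "east"])
second = q_update(q, "A", "north", 1.0, "B", ["north", "east"])
print(first, second)
```

Note that the update never needs the probability of slipping “east”: the agent only uses where it actually landed, which is why the same code can be reused in worlds whose rules it does not know.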

Finish line

A week was given to finish project 3 and to practice on my own with the Final Exam Practice, whose grades did not count. Another week was allotted for the final exam itself: you could pick a 48-hour window and calmly answer during it. This was a plus, but also a minus, since it made it easy to relax. Another harsh discovery was that most questions (about 40 out of 54) allowed only a single attempt, while the others gave two. For True / False questions this is justified, but for some others with 4-6 checkboxes it was annoying. Questions with two attempts were the easiest to answer. The questions covered the entire course quite thoroughly. The lecturers estimated the exam at 2-5 hours; I spent about 1 + 4 hours over 2 days (I answered slowly and reviewed the lectures in places), and I still could not pass the exam without errors.

Instead of a conclusion, or summing up my impressions

Very interesting! There is no smell of Python in the lectures and tests, but the specialized Pacman projects smell of it very strongly. You get the full feeling of using the language as a tool for solving specific problems, which I think is very good for learning. The lecturer's presentation of the material is excellent, and I personally had enough information from the lectures to solve the problems, although some additional materials are listed in the course wiki. The forum was very useful several times. The technical side is top-notch: everything is convenient, the buttons, the checkboxes, the answers. It is worth noting that on Sunday evenings before deadlines the auto-grader is very slow (it takes up to 20-30 minutes to check the code). The forum is slow if you view it directly under a video / question section, but here I blame my old netbook.

Unfortunately, after playing with youtube-dl, I still have not figured out how to download the lectures with the edX subtitles rather than the YouTube auto-subtitles. If anyone has solved this, please write.

I spent 4 hours a week on lectures and homework, and 10 hours (maybe more, it is hard to count) on each project (one every 2 weeks). I did not keep my own notes, and now I regret it a little.

Well, the Easter eggs from the CS188x team were a special pleasure, such as:

    if 0 == 1:
        print 'We are in a world of arithmetic pain'

As a bonus, I collected several links (with timestamps) to videos in the lectures that cover various real-world applications of robo-AI: aibo soccer, google car, shirt-folding robot, terminator, aibo learns to walk, a humanoid robot learns to walk. For students of the course, the veil of secrecy over how all of this is programmed has lifted a little.

You should definitely sign up for the 2nd part of the course!

See you at Professor Klein's.