Five selfish reasons to work reproducibly


So, my fellow scientists, do not ask what you can do for reproducibility — ask what reproducibility can do for you!

Here I present five reasons why working reproducibly pays off in the long run and is in the personal interest of every ambitious, career-oriented scientist.

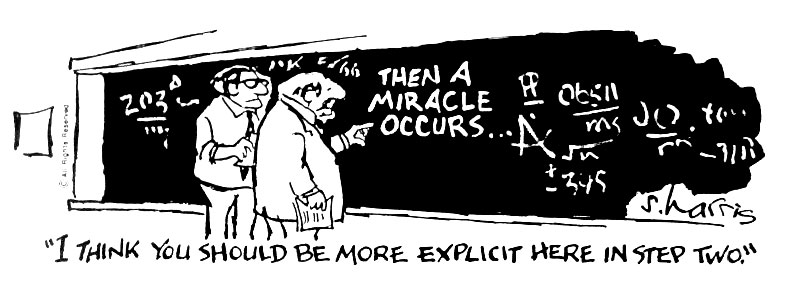

A complex equation on the left half of a blackboard, an even more complex one on the right. A short sentence links the two: “Then a miracle occurs.” Two mathematicians, deep in thought. “I think you should be more explicit here in step two,” says one to the other.

This is exactly what it feels like when you try to understand how an author got from a large and complex data set to a dense paper full of complex figures. Without access to the data and the analysis code, such a transition can only be explained by a miracle. And in science there should be no miracles.

Working transparently and reproducibly relies heavily on empathy: put yourself in the shoes of one of your colleagues and ask, “Could this person access my data and understand my analysis?” Mastering the tools for this (Box 1) requires commitment and a large investment of time and energy. A priori, it is not obvious why the benefits of working this way should outweigh the costs.

The reasons usually given in such cases are: “Because it is the right thing to do!”, “Because it is the foundation of science!”, “Because the world would be a better place if everyone worked transparently and reproducibly!” Do you know what these arguments sound like to me? “Blah blah blah...”

Not that I find these arguments unconvincing. I'm just not much of an idealist: I don't care what science should be. I am a realist: I try to do my best given how science actually works. And, like it or not, science is about building a career, raising your impact factor, and increasing your number of papers and your grant money. More, more, more... So how does reproducibility help me achieve more as a scientist?

Reproducibility: why should I?

In this article, I give five reasons why working reproducibly pays off in the long run and is in the interest of every ambitious, career-oriented scientist.

Reason 1: Reproducibility helps to avoid disaster.

“How bright promise in cancer testing fell apart” was the title of a New York Times article published in the summer of 2011 [1]. It highlighted the work of Keith Baggerly and Kevin Coombes, two biostatisticians at the M.D. Anderson Cancer Center, who uncovered flawed data analyses in a series of papers by scientists at Duke University that had a great influence on breast cancer research [2].

The problems Baggerly and Coombes found could have been spotted by any co-author before the papers were submitted. The data sets are not that large and can easily be checked on a standard laptop. You don't have to be a statistical genius to notice that patient numbers vary, that labels are swapped, or that samples appear several times with conflicting annotations in the same data set. Why did no one notice these problems before it was too late? Because the data and analysis were not transparent, and it took forensic bioinformatics to make sense of them [2].
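Checks of this kind take only a few lines of code. The sketch below is a hypothetical illustration, not the analysis Baggerly and Coombes actually performed: it assumes sample annotations are available as a list of (sample_id, label) pairs, compares the number of rows with the number of distinct samples, and reports samples listed more than once with conflicting labels. The sample IDs and labels are made up.

```python
from collections import defaultdict


def find_conflicting_duplicates(annotations):
    """Return {sample_id: sorted distinct labels} for every sample that
    appears more than once with different labels."""
    labels_by_sample = defaultdict(list)
    for sample_id, label in annotations:
        labels_by_sample[sample_id].append(label)
    return {
        sample_id: sorted(set(labels))
        for sample_id, labels in labels_by_sample.items()
        if len(set(labels)) > 1
    }


# Hypothetical toy annotations: S2 is listed twice with contradictory labels.
annotations = [
    ("S1", "sensitive"),
    ("S2", "resistant"),
    ("S2", "sensitive"),
    ("S3", "resistant"),
]
n_rows = len(annotations)
n_samples = len({sample_id for sample_id, _ in annotations})
print(f"{n_rows} rows, {n_samples} distinct samples")  # 4 rows, 3 distinct samples
print(find_conflicting_duplicates(annotations))  # {'S2': ['resistant', 'sensitive']}
```

A mismatch between the row count and the number of distinct samples is already a hint that something is duplicated; the second check shows where.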

This example motivates me to be more transparent and reproducible in my own work. But even much smaller incidents can be embarrassing.

Here is an example from my own research. Our experimental collaborators had validated a pathway model we had built. However, when we came to write the paper, we hit a serious obstacle: no matter how hard we tried, we could not reproduce our original pathway model. Perhaps the data had changed, perhaps the code was different, or maybe we simply could not remember the exact parameter settings of our method. Had we published this result, we would not have been able to show how we got from the original data to the validated hypothesis. We would have published a miracle.

This experience taught me two things. First, a project is more than a beautiful result. You need to record in detail how that result was obtained.

Second, thinking about reproducibility early on will save you time later. We wasted years of our own and our collaborators' time failing to reproduce our own results. All of this could have been avoided had we kept better track of how the data and analyses changed over time.
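One lightweight way to keep track of changing data and parameter settings is to save a small record next to every result. The sketch below illustrates that idea under stated assumptions; it is not the method used in the project described above. It stores a SHA-256 checksum of the input file together with the parameter settings in a JSON file. The file names and the parameter names `alpha` and `n_iter` are hypothetical. Version control with git (Box 1) would still be needed for the code itself.

```python
import hashlib
import json
import os
import tempfile
from datetime import datetime, timezone


def sha256_of_file(path):
    """Return the SHA-256 checksum of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_run_record(data_path, params, record_path):
    """Write the input checksum and parameter settings to a JSON file
    and return the record as a dict."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "data_file": os.path.basename(data_path),
        "data_sha256": sha256_of_file(data_path),
        "parameters": params,
    }
    with open(record_path, "w") as handle:
        json.dump(record, handle, indent=2, sort_keys=True)
    return record


# Example run with a throwaway data file; the file name and the parameter
# names (alpha, n_iter) are hypothetical.
with tempfile.TemporaryDirectory() as tmp:
    data_path = os.path.join(tmp, "expression.csv")
    with open(data_path, "wb") as handle:
        handle.write(b"gene,value\nTP53,1.2\n")
    record = write_run_record(
        data_path, {"alpha": 0.05, "n_iter": 1000}, os.path.join(tmp, "run_record.json")
    )
    print(record["data_sha256"])
```

Comparing two such records shows at a glance whether a different result came from changed data or from changed settings.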

Reason 2: Reproducibility makes it easier to write articles.

A transparent analysis makes writing papers much easier. For example, in a dynamic document (Box 1), all results are updated automatically when the data change. You can be confident that your numbers, figures and tables are always up to date. In addition, a transparent analysis is more engaging: more people can look through it, and errors become much easier to spot.
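Tools such as knitr and IPython achieve this by weaving code into the text. The Python sketch below only illustrates the underlying principle with a plain f-string; it is not how those tools work internally. The point is that the numbers in the sentence are computed from the data each time the report is rendered, never typed in by hand. The survival values are hypothetical.

```python
import statistics


def render_report(survival_months):
    """Build report text whose numbers are computed from the data at render
    time, so rerunning after a data change updates every number in the text."""
    n = len(survival_months)
    median = statistics.median(survival_months)
    return (
        f"The cohort comprised {n} patients, "
        f"with a median survival of {median:.1f} months."
    )


# Hypothetical data; in a real dynamic document this would be read from a file.
print(render_report([12.0, 30.5, 18.0, 24.0]))
# The cohort comprised 4 patients, with a median survival of 21.0 months.
```

Adding a fifth patient and rendering again changes both the patient count and the median in the text, with no manual editing.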

Here is another example from my work. In another project [3], a clinical collaborator and I were discussing why some survival results in a multicenter study did not match our expectations. Because all the data and analysis code were available to us in one easy-to-read file, we were able to investigate the question ourselves.

Simply by tabulating the tumor stage variable, we found the problem: we expected to see stages 1 to 4, but instead saw entries such as “XXX”, “Fred” and “999”. Whoever had provided the data had apparently mangled them. Looking at the data ourselves turned out to be much faster and easier than going to the postdoc on the project and saying, “Explain this to us.” My collaborator and I are too busy to spend time on low-level data cleaning, and without a well-documented analysis we could not have contributed. But because the data and code were so transparent, it took us only five minutes to find the error.
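A frequency table of one variable is often all it takes. The sketch below assumes, hypothetically, that stages are stored as the strings "1" to "4"; the column values are invented to mimic the entries described above.

```python
from collections import Counter

VALID_STAGES = {"1", "2", "3", "4"}  # assumption: stages coded as the strings "1" to "4"


def tabulate_stages(stages):
    """Count how often each distinct value of the tumor stage variable occurs."""
    return Counter(stages)


def invalid_stages(counts):
    """Return the values that are not valid stage codes, sorted for readability."""
    return sorted(value for value in counts if value not in VALID_STAGES)


# Hypothetical stage column containing the kind of entries described above.
stages = ["1", "3", "2", "XXX", "4", "Fred", "3", "999", "2"]
counts = tabulate_stages(stages)
print(counts)
print(invalid_stages(counts))  # ['999', 'Fred', 'XXX']
```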

Reason 3: Reproducibility helps reviewers see the data through your eyes.

Many of us love to complain about reviewing. Most often, I hear: "The reviewers did not even read the article and have no idea what we actually investigated."

This contrasts sharply with my experience of the review of a recent paper of ours [4], for which we made the data and documented code easily accessible to the reviewers. One reviewer suggested a small change to some of the analyses, and since he had access to all the data, he could test his ideas directly and see how the results changed. The reviewer was fully engaged, and the only thing left to discuss was which method of data analysis would be best. This is how constructive review should work. And it would not have been possible without a transparent and reproducible presentation of our analysis.

Reason 4: Reproducibility ensures the continuity of your work.

I would be surprised if you had never heard remarks like these (or even made them yourself): “I'm so busy that I can't remember all the details of all my projects,” or “I did this analysis six months ago; of course I can't remember all the details after such a long time,” or “My supervisor (PI) told me to continue the previous postdoc's project, but that postdoc is long gone and didn't save any scripts or data.”

Think about it: all of these problems can be solved by documenting your work and making data and code available. This is especially important for principal investigators running complex long-term projects. How can you ensure continuity in your lab if progress is not documented in a reproducible form? In my group, I don't even discuss results with students if they are poorly documented. No proof of reproducibility, no result!

Reason 5: Reproducibility helps your reputation.

For several papers, we have made our data, code and analyses available as a Bioconductor package [5]. When my contract came up for renewal, I listed all these packages as research outputs of my lab.

In general, presenting your analysis this way helps you build a reputation as an honest and careful researcher. Should there ever be a problem with one of your papers, it will be very easy to defend your name and show that you reported everything in good faith.

A recent paper in Science, “Scientific standards. Promoting an open research culture” [6], sets out eight standards, each with three levels, for transparency and reproducibility. Tools such as R and knitr (Box 1) make it easy to meet the highest of these standards, which, again, is good for your reputation.

What is holding you back?

Have I convinced you? Probably not. Here is a selection of reactions I often get when I push for reproducibility (and how I respond):

- “Only the result matters!” You're wrong.

- “I would prefer to do real science rather than tidy up my data.” If your results are not reproducible, you are not engaged in science at all [7].

- “Mind your own business! I document my data the way I want!” Sure! There are many ways to work reproducibly [8]; pick whichever you like.

- “Excel works fine. I don't need any newfangled R, Python or anything else.” Excel may work well if you need to make many manual edits. But for data analysis, fewer clicks and more scripting is the better solution. Imagine you have to do a simple analysis, say, draw a regression plot, 5 (or 10, or 20) times. Compare doing this by hand with writing a simple loop that does it for you. Now imagine you have to do it all again three weeks later because the data have changed slightly. In this case, R or Python is definitely worth it.

- “Reproducibility sounds good, but my code and data are scattered across so many hard drives and directories that it would take too much effort to put everything in one place.” Think about what you just said. This lack of organization puts you and your project in serious danger.

- “We can always tidy up the code and data after submitting the paper.” My pathway model example above shows how dangerous this strategy is. Moreover, preparing a manuscript can take a long time, so you may no longer remember all the details of your analysis by the time you need to present the results.

- “My field is highly competitive, and spending this much time is too big a risk.” That is exactly why you should start working reproducibly early: so you don't lose that time in the long run.
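Here is a minimal sketch of the loop from the Excel example above, using only the Python standard library: it fits an ordinary least-squares line to each of several data sets. The data-set names and values are hypothetical, and the plotting step (for example with matplotlib) is left out to keep the example self-contained.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical data sets; with 20 of them, the loop below stays exactly the same.
datasets = {
    "batch_A": ([1, 2, 3, 4], [2.0, 4.0, 6.0, 8.0]),
    "batch_B": ([1, 2, 3, 4], [1.0, 1.5, 2.0, 2.5]),
}

fits = {}
for name, (xs, ys) in datasets.items():
    fits[name] = fit_line(xs, ys)
    intercept, slope = fits[name]
    print(f"{name}: intercept={intercept:.2f}, slope={slope:.2f}")
```

When the data change three weeks later, rerunning the script refreshes every fit at once instead of repeating the clicks by hand.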

When should you worry about reproducibility?

Suppose I have convinced you that reproducibility and transparency are in your own interest. When should you start worrying about them?

Long answer:

- before the project starts - because you may have to learn tools such as R or git;

- while you are analyzing - because if you wait too long, you may lose a lot of time trying to remember what you did two months ago;

- when you are writing a paper - because you want your numbers, tables and figures to be up to date;

- when you co-author a paper - because you want to be sure that the analysis presented under your name is correct;

- when you review a paper - because you cannot judge the results if you don't know how the authors arrived at them.

The short answer is: always!

Building a culture of reproducibility

Who should care about reproducibility and transparency? Students and postdocs obviously play an important role, because they are usually the ones who actually do the work. My advice: learn the tools of reproducibility (Box 1) as early as possible and use them in every project.

The effort will bring you many advantages:

- you will make fewer mistakes, and the ones you do make will be easier to fix;

- you will be more efficient and will develop much faster in the long run;

- if you feel that your supervisor is not very engaged with your work, making your analysis easier to follow can help get them more involved.

Principal investigators, group and team leaders, professors: it is up to you to build a “culture of reproducibility” on top of the technical skills your students and postdocs bring. In my lab, I made reproducibility a key element of the guidelines I give to new members [9]. If you want to support your team, ask for the documentation of the analysis every time a team member shows you results. You do not need to go into detail; a quick look will show how well it was done. What really improved reproducibility in my own lab was the rule that, before a paper is submitted, a lab member who is not involved in the project tries to reproduce the results independently.
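Part of such an independent check can be made mechanical: compare the key numbers in the manuscript with those from the colleague's re-run. The sketch below is a hypothetical helper, not part of the lab guidelines cited above; the result names, values and tolerance are assumptions.

```python
import math


def compare_results(reported, reproduced, rel_tol=1e-6):
    """Return the names of reported results that the independent re-run
    is missing or did not reproduce within a relative tolerance."""
    mismatches = []
    for name, value in reported.items():
        if name not in reproduced:
            mismatches.append(name)
        elif not math.isclose(value, reproduced[name], rel_tol=rel_tol):
            mismatches.append(name)
    return sorted(mismatches)


# Hypothetical key numbers from a manuscript and from a colleague's re-run.
reported = {"hazard_ratio": 1.84, "n_patients": 243, "p_value": 0.012}
reproduced = {"hazard_ratio": 1.84, "n_patients": 241, "p_value": 0.012}
print(compare_results(reported, reproduced))  # ['n_patients']
```

An empty list means every reported number was reproduced; anything else is a question to settle before submission, not after.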

If you do not build a culture of reproducibility in your lab, you will miss out on its considerable long-term scientific benefits.

Science is becoming more transparent and reproducible every day. You can lead the way! Be a trendsetter! Come on, I know you want to.

Box 1

At the most basic level, working reproducibly simply means avoiding beginner's mistakes. Keep your project organized, give files and directories informative names, and keep data and code in one place with regular backups. Do not scatter data across different servers, laptops and hard drives.
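Setting up the same organized layout for every new project can itself be scripted. The sketch below uses a hypothetical directory layout and project name; any consistent, descriptive structure serves the same purpose, and backups still have to be handled separately.

```python
import tempfile
from pathlib import Path

# Hypothetical layout; any consistent, descriptive structure serves the same purpose.
PROJECT_DIRS = ["data/raw", "data/processed", "code", "results/figures", "docs"]


def create_project(root):
    """Create one directory tree for all data, code and results of a project
    and return the relative paths of the directories it contains."""
    root = Path(root)
    for sub in PROJECT_DIRS:
        (root / sub).mkdir(parents=True, exist_ok=True)
    readme = root / "README.txt"
    if not readme.exists():
        readme.write_text("What this project is, where the data come from, how to rerun it.\n")
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_dir())


# Example in a temporary directory; the project name is hypothetical.
with tempfile.TemporaryDirectory() as tmp:
    dirs = create_project(Path(tmp) / "ovarian_cancer_2015")
    print(dirs)
```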

To reach the next levels of reproducibility, you need to learn some tools for computational reproducibility [8]. In general, reproducibility improves with fewer clicks and copy-pastes and more scripting and coding. For example, do your analysis in R or Python and document it with knitr or IPython.

These tools combine descriptive text and analysis code in dynamic documents that are updated automatically whenever the data or code change.

Next, learn to use a version control system such as git together with a collaboration platform such as GitHub. Finally, if you want to become a pro, learn to use Docker, which makes your analysis self-contained and easily portable to different systems.

Acknowledgments

I developed the selfish approach to reproducibility for the Postdoctoral Master Class on Reproducibility, which I taught at the Gurdon Institute in Cambridge together with Gordon Brown (CRUK Cambridge Institute) and Stephen J. Eglen (DAMTP Cambridge). I thank them for their input.

All materials are available on GitHub, and my talk is posted on my blog.

- [1] Kolata G. How bright promise in cancer testing fell apart. The New York Times. 2011. http://www.nytimes.com/2011/07/08/health/research/08genes.html?_r=0.

- [2] Baggerly KA, Coombes KR. Deriving chemosensitivity from cell lines: forensic bioinformatics and reproducible research in high-throughput biology. Ann Appl Stat. 2009;3:1309–34. https://projecteuclid.org/euclid.aoas/1267453942.

- [3] Martins FC, Santiago I, Trinh A, Xian J, Guo A, Sayal K, et al. Combined image and genomic analysis of high-grade serous ovarian cancer reveals PTEN loss as a common driver event and prognostic classifier. Genome Biol. 2014;15:526. https://genomebiology.biomedcentral.com/articles/10.1186/s13059-014-0526-8.

- [4] Schwarz RF, Ng CKY, Cooke SL, Newman S, Temple J, Piskorz AM, et al. Spatial and temporal heterogeneity in high-grade serous ovarian cancer: a phylogenetic analysis. PLoS Med. 2015;12:e1001789. http://journals.plos.org/plosmedicine/article?id=10.1371/journal.pmed.1001789.

- [5] Castro MAA, Fletcher M, Markowetz F, Meyer K. Gene expression data from breast cancer cells under FGFR2 signalling perturbation. Bioconductor experiment data package. http://bioconductor.org/packages/release/data/experiment/html/Fletcher2013a.html. Accessed 27 Nov 2015.

- [6] Nosek BA, Alter G, Banks GC, Borsboom D, Bowman SD, Breckler SJ, et al. Scientific standards. Promoting an open research culture. Science. 2015;348:1422–5. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4550299.

- [7] Watson M. When will 'open science' become simply 'science'? Genome Biol. 2015;16:101.

- [8] Piccolo SR, Lee AB, Frampton MB. Tools and techniques for computational reproducibility. 2015. http://biorxiv.org/content/early/2015/07/17/022707. Accessed 27 Nov 2015.

- [9] Markowetz F. You are not working for me; I am working with you. PLoS Comput Biol. 2015;11:e1004387. http://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1004387.

Twitter and blog

Florian on Twitter @markowetzlab and on his blog: http://scientificbsides.wordpress.com/.