2 million points on the map? easy!

Not so long ago, to create a service (and put a module “in the buffer”), it was necessary to come up with a way to quickly select points located on the map from sql database.

The code will be small, so as not to distract from the understanding of the system as a whole.

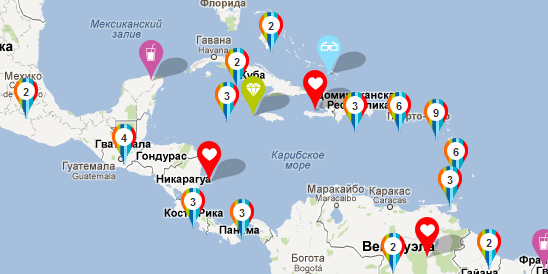

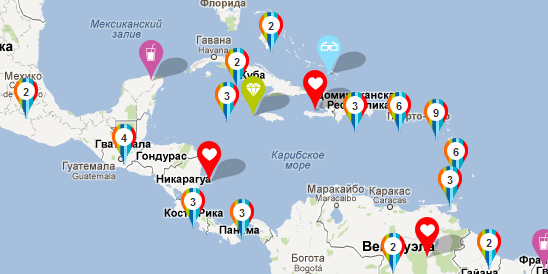

And so, at the "entrance" has a base of 2 million points (so far only a test one, since the real one is still small), a Google map (or Yandex, not fundamentally), each point has coordinates. The user must move around the map, see all the points, the server should not be the size of the floor rack, taking into account the "desired" attendance of 200 people per second.

There are many points. Lots of. Variants with checks for the entry of a point into the borders of the map are not suitable (like (x1 <x <x2) and (y1 <y

When searching for solutions, we came to the following points:

1. work with the

tile map (square, block) 2. store qtree tiles in the database at the point

3. when moving around the map without zooming, load only points from the “new” tiles

To understand qtree en .wikipedia.org / wiki / Quadtree

To understand what comes of it koti.mbnet.fi/ojalesa/quadtree/quadtree_intro.htm

A line with a "tree" for each point in the database looks like 01320232312222100231210323203 (the number of digits is equal to the maximum zoom level).

In fact, it means that if the world map is divided into 4 parts 2x2, then the point is in 1 block (we have omitted the numbering from scratch and the “main block” with number 0 to simplify), if this block is divided again by 2x2, then it will be in block 3 and so on. With each zoom level, we must look “deeper” into our “tree”.

What is the beauty of storing data in this form?

The beauty is that knowing the coordinates of the tile and scale, we get a qtree of the form 132023231, and in order to find all the points in this area on the map at a given level of scale, we need to make a request with the condition point_qtree LIKE '132023231%'(look for the occurrence of a substring in a string with the first character). With this point_qtree field we must remember to index that the sampling rate will raise to the maximum level. SQL will build a qtree tree, which will simplify the selection task as much as possible.

Hurrah. The selection of points began to take many times less time.

When moving around the map, we have to do a number of things:

To determine the visible tiles in any map API, you can request the coordinates of the map window and the zoom level. And proceeding from these data, get the tile of the upper left and lower right edges and list all the others located between them.

We get an array of the form 012, 013, 021, 020.

Save it in a variable, which we call oldTile.

Each time you move around the map, we calculate all the tiles and compare with the current oldTile array, and we should get a newTile array that lists all the tiles that are not in oldTile yet. Next, we must send an ajax request to the server to select points for each tile (you can send an array of new tiles in the request, but I will explain the purpose of splitting into separate requests below), let's say / point / 012, add new points to the map (without clearing the old ones) . Next, do a simple oldTile = oldTile + oldTile.

When zooming in on the map, we will have to clear oldTile (and points on the map, one of the options. The second option: do not clear the points, but then we need to not clear the oldTile array as well, but recount, for example, from 01 we should get 010, 011, 012, 013 ) And after that “according to the plan” calculate the visible tiles of the map, etc.

What does this method give us:

As the next stage of system optimization:

1. When adding a new point to the database, forcibly update all arrays of the entire tree branch to the memcache. Thus, we can ensure that requests further nginx + memcache will not go away and the data will always be relevant, which will allow us to switch from memcache to radish and not be afraid of “cold starts”.

The code will be small, so as not to distract from the understanding of the system as a whole.

TK

And so, at the "entrance" has a base of 2 million points (so far only a test one, since the real one is still small), a Google map (or Yandex, not fundamentally), each point has coordinates. The user must move around the map, see all the points, the server should not be the size of the floor rack, taking into account the "desired" attendance of 200 people per second.

Ideas?

There are many points. Lots of. Variants with checks for the entry of a point into the borders of the map are not suitable (like (x1 <x <x2) and (y1 <y

When searching for solutions, we came to the following points:

1. work with the

tile map (square, block) 2. store qtree tiles in the database at the point

3. when moving around the map without zooming, load only points from the “new” tiles

To understand qtree en .wikipedia.org / wiki / Quadtree

To understand what comes of it koti.mbnet.fi/ojalesa/quadtree/quadtree_intro.htm

A line with a "tree" for each point in the database looks like 01320232312222100231210323203 (the number of digits is equal to the maximum zoom level).

In fact, it means that if the world map is divided into 4 parts 2x2, then the point is in 1 block (we have omitted the numbering from scratch and the “main block” with number 0 to simplify), if this block is divided again by 2x2, then it will be in block 3 and so on. With each zoom level, we must look “deeper” into our “tree”.

What is the beauty of storing data in this form?

The beauty is that knowing the coordinates of the tile and scale, we get a qtree of the form 132023231, and in order to find all the points in this area on the map at a given level of scale, we need to make a request with the condition point_qtree LIKE '132023231%'(look for the occurrence of a substring in a string with the first character). With this point_qtree field we must remember to index that the sampling rate will raise to the maximum level. SQL will build a qtree tree, which will simplify the selection task as much as possible.

Hurrah. The selection of points began to take many times less time.

Let's deal with the map

When moving around the map, we have to do a number of things:

- determine which tiles we see

- determine for which tiles we have already loaded points

To determine the visible tiles in any map API, you can request the coordinates of the map window and the zoom level. And proceeding from these data, get the tile of the upper left and lower right edges and list all the others located between them.

We get an array of the form 012, 013, 021, 020.

Save it in a variable, which we call oldTile.

Each time you move around the map, we calculate all the tiles and compare with the current oldTile array, and we should get a newTile array that lists all the tiles that are not in oldTile yet. Next, we must send an ajax request to the server to select points for each tile (you can send an array of new tiles in the request, but I will explain the purpose of splitting into separate requests below), let's say / point / 012, add new points to the map (without clearing the old ones) . Next, do a simple oldTile = oldTile + oldTile.

When zooming in on the map, we will have to clear oldTile (and points on the map, one of the options. The second option: do not clear the points, but then we need to not clear the oldTile array as well, but recount, for example, from 01 we should get 010, 011, 012, 013 ) And after that “according to the plan” calculate the visible tiles of the map, etc.

What does this method give us:

- we request only new points from the new displayed places on the map

- we get a good opportunity to dump the server’s response from the memcache and, having configured Nginx to work with it, get points in the tiles already from it (this is why we stopped at a few requests for a specific tile, but don’t do bulk selections).

- reduce the number of queries to the database server

- reduce traffic

As the next stage of system optimization:

1. When adding a new point to the database, forcibly update all arrays of the entire tree branch to the memcache. Thus, we can ensure that requests further nginx + memcache will not go away and the data will always be relevant, which will allow us to switch from memcache to radish and not be afraid of “cold starts”.