PCIe bus: do only physical constraints affect transfer speed?

I'll start from afar. Last winter I had a chance to build a USB device with the core implemented in an FPGA. Naturally, I wanted to measure the real bandwidth of this bus. A ready-made controller hides too much: you can always argue that there is a delay here or there. With an FPGA, I can see the block that pumps the data signal that it holds a packet. I immediately answer that everything has been processed and I am ready to accept the next portion (meanwhile, data is already arriving in the second buffer of the same endpoint). So we assert readiness from the very first clock cycle and see what happens when USB can run without stopping.

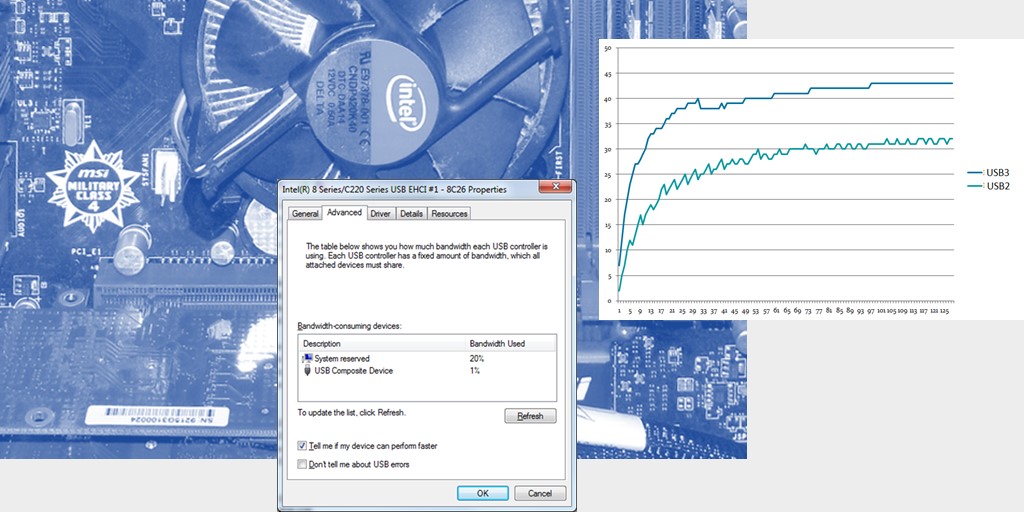

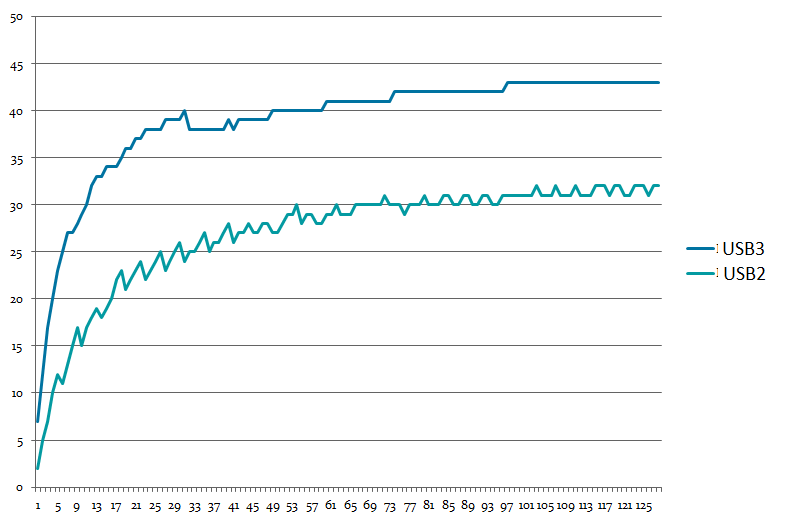

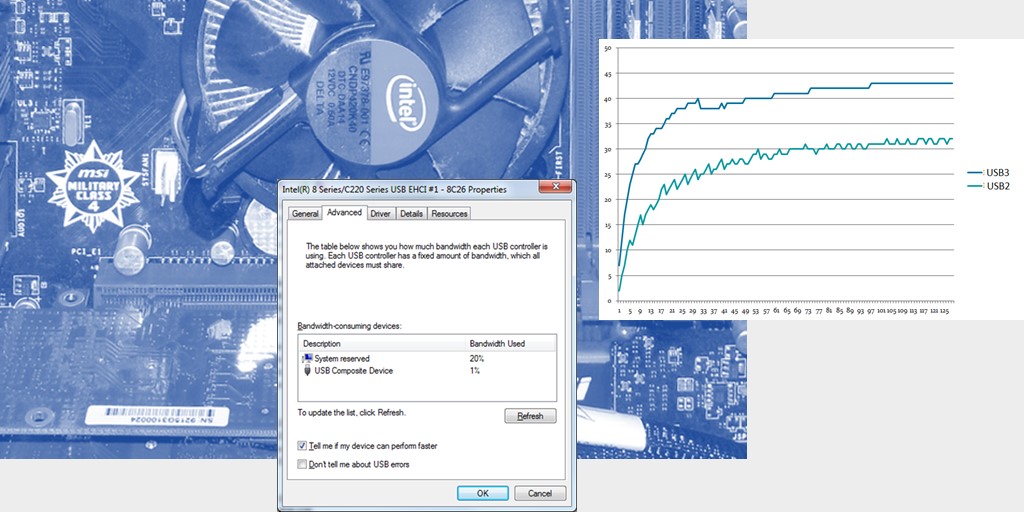

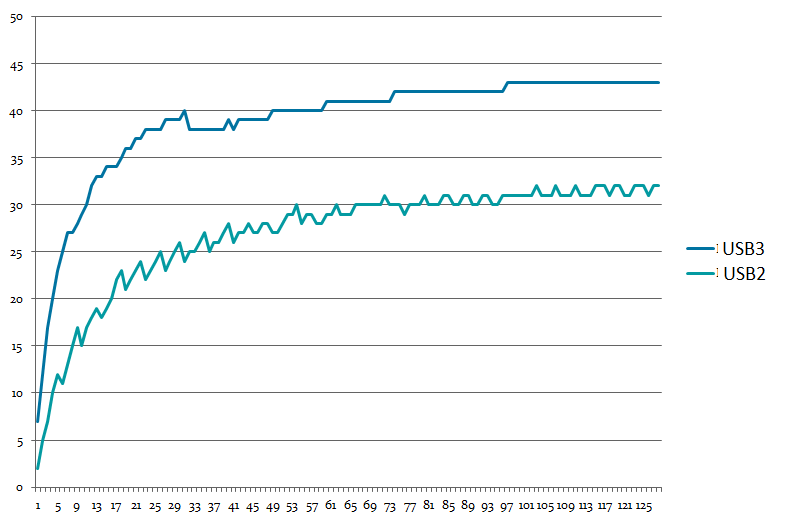


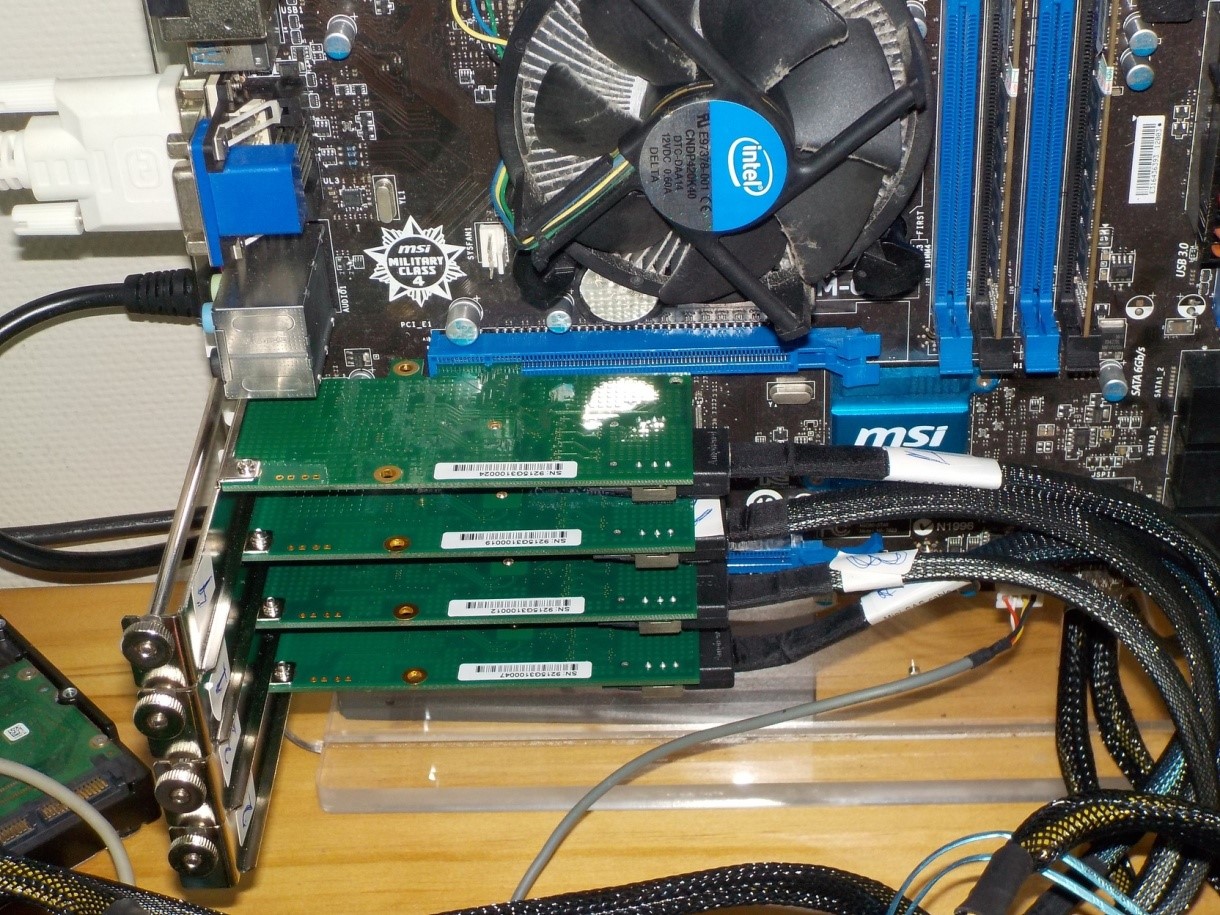

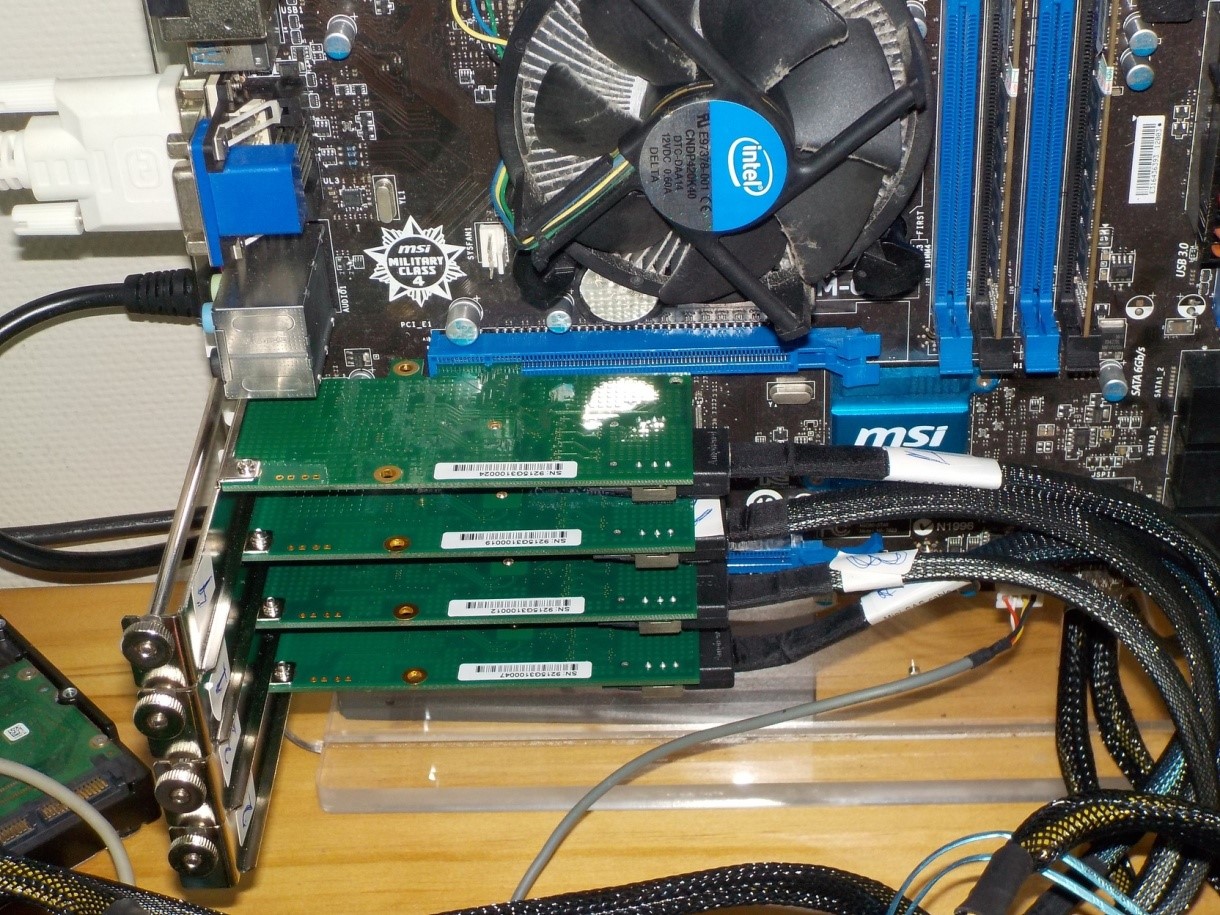


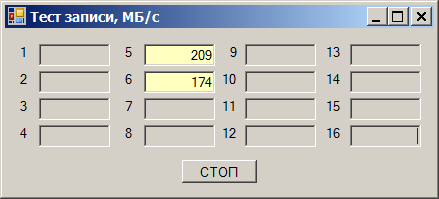

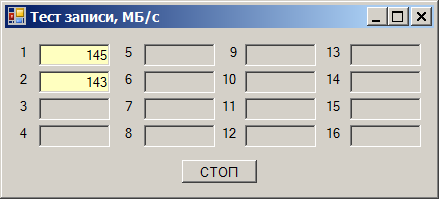


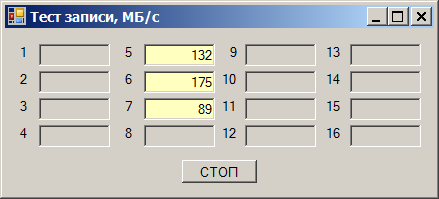


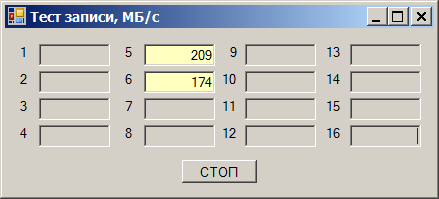


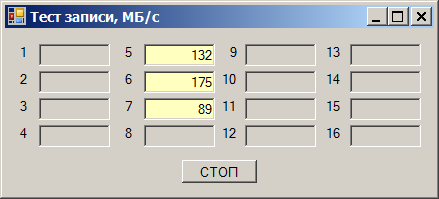


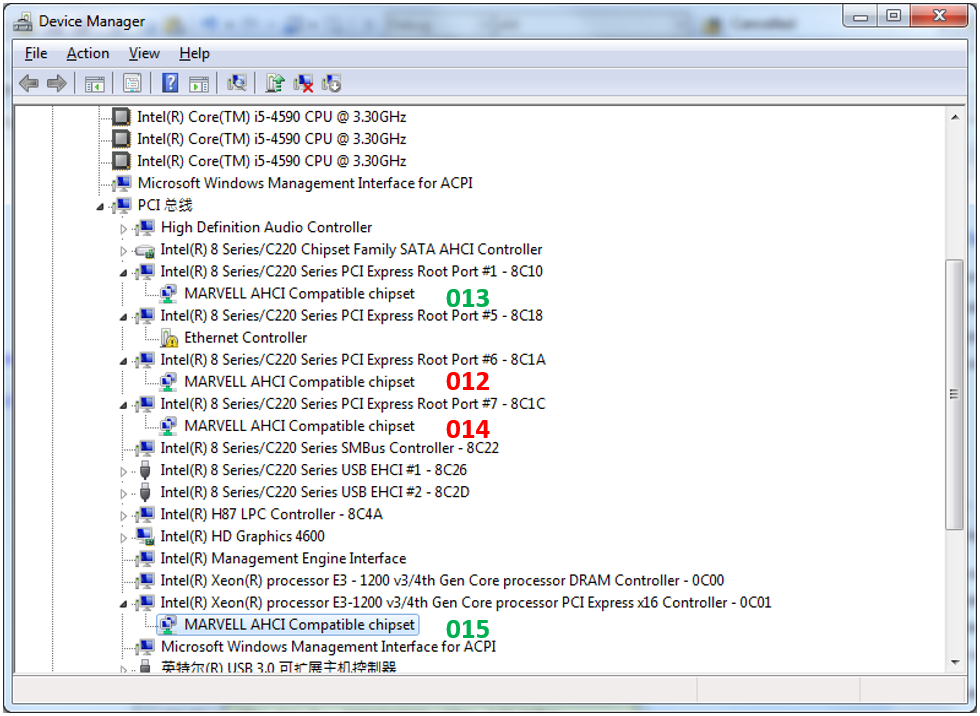

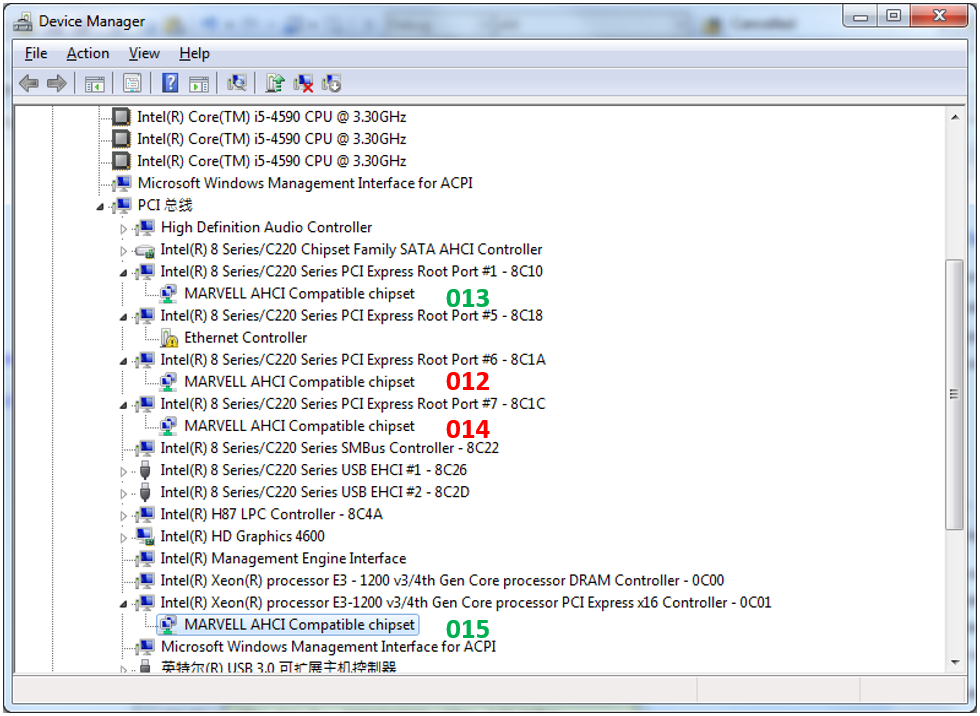


And an amazing thing turns up. If the USB 2.0 device is plugged into a "blue" connector (that is, a USB 3.0 one), the speed is one value; if into a "black" one, it is another. Here is my graph of USB write speed versus transfer length. "USB3" and "USB2" denote the connector type; the device is always USB 2.0 HS.

I tried it on different machines, with similar results. Nobody could explain this phenomenon to me. Later I found the most likely cause, and it is very simple. Here are the properties of the USB 2.0 controller:

The controllers that serve the "blue" connectors have nothing like this. And the difference is just about 20 percent.

From this we conclude that bandwidth limits are not always determined by the physical properties of the bus. Sometimes other factors are superimposed. Armed with this knowledge, we move to the present day.

Primary experiment

It all started quite casually. We were testing a program, specifically the process of writing data to several disks simultaneously. The hardware is simple: a motherboard with four PCIe slots. Every slot holds an identical card with an AHCI controller, and each controller supports only PCIe x1.

Each card serves 4 drives.

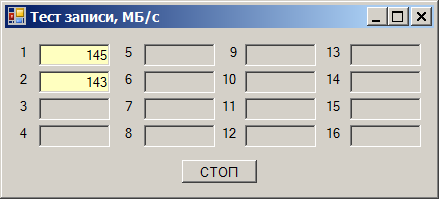

And we observe the following effect. We take one disk and start writing data to it. The speed is from 180 to 220 megabytes per second (here and below, a megabyte is 1024 * 1024 bytes):

We take a second drive. The write speed on it is from 170 to 190 MB/s:

We write to both at once and get a drop in speed:

The total comes to about 290 MB/s. The surprising part is that we had debugged this program (it just happened that way) on the same drives, but on other channels, and everything was fine there. We quickly switch to those channels (they go through a different card) and get excellent performance:

Buy a slot in a good area

I'll say right away that there are no other components to blame. Everything here was written by us, from the program itself down to the drivers, so the entire data path can be traced. The unknown begins only once the request has gone to the hardware.

Initial analysis showed that the speed is not limited in the "long" PCIe slots and is limited in the "short" ones. The long slots are those that accept x16 cards (although one of them works in a mode no higher than x4); the short ones accept only x1 cards.

That would be fine, except that the controllers on these cards cannot, in principle, work in any mode other than PCIe x1. So all controllers should be in absolutely identical conditions regardless of slot length! But no. Those in the "long" slots work fast, those in the "short" slots work slowly. Fine. And how fast is fast? We add a third drive and write to all three.

In the "short" slots the limit is still around 290 MB/s:

In the "long" slots it is around 400 MB/s:

I searched the entire Internet. First, after a while I started laughing at the articles stating that the bandwidth of PCIe gen 1 and gen 2 for x1 is 250 and 500 MB/s. Those are "raw" megabytes. Because of overhead (by which I mean the service traffic that travels over the same lanes as the payload data), gen 2 yields just about 400 megabytes per second of useful throughput. Second, I stubbornly failed to find anything about the magic number 290 (looking ahead: I still have not found it).
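The "about 400" figure can be roughly reproduced with a back-of-the-envelope estimate. The sketch below is only an illustration under stated assumptions: the function name is made up for this example, the per-packet cost of 24 bytes assumes a 64-bit-address header (2 bytes framing, 2 bytes sequence number, 16 bytes header, 4 bytes LCRC), and ACK and flow-control packets are ignored, so real numbers will be somewhat lower.

```python
MIB = 1024 * 1024  # the article's "megabyte"

def useful_rate_mib(gt_per_s, max_payload, tlp_overhead=24):
    """Estimate useful x1 throughput in MiB/s for an 8b/10b PCIe link.

    tlp_overhead is an ASSUMED per-packet cost in bytes:
    2 framing + 2 sequence number + 16 header + 4 LCRC.
    """
    # 8b/10b coding: every data byte costs 10 bits on the wire.
    raw_bytes_per_s = gt_per_s * 1e9 * 8 / 10 / 8
    efficiency = max_payload / (max_payload + tlp_overhead)
    return raw_bytes_per_s * efficiency / MIB

gen1 = useful_rate_mib(2.5, 128)
gen2 = useful_rate_mib(5.0, 128)
print(f"gen 1 x1: {gen1:.0f} MiB/s, gen 2 x1: {gen2:.0f} MiB/s")
# prints: gen 1 x1: 201 MiB/s, gen 2 x1: 402 MiB/s
```

Part of the gap between 500 and 400 is simply units: 500 million bytes per second is only about 477 megabytes of 1024 * 1024 bytes.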

Fine. Let's look at the topology in which our controllers are connected. Here it is (013-015 are the suffixes of the device names, which I used to tell them apart). Green is fast, red is slow.

We do not even consider controller "015": it lives in the privileged slot intended for a video card. But the 013th is connected to the same switch as the 012th and the 014th. What makes it different?

Some articles say that cards may differ in their Max Payload parameter. I examined the configuration space of all the cards: this parameter is the same everywhere, set to the minimum possible value. Moreover, the documentation for this motherboard's chipset says that no other value is possible.

In general, I went through everything in the configuration space, and everything is configured identically. Yet the speed is different! I re-read the chipset documentation many times: no bandwidth settings. Priorities, yes, something is written about them, but the tests are run with no load on the other channels! So that is not the point.

Just in case, I even switched the program off interrupts. The CPU load rose to insane levels, because now it constantly and mindlessly polls the ready bit, but the speed readings did not change. So this subsystem is not to blame either.
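For readers who want to repeat the Max Payload check, here is a minimal sketch of decoding it from a raw dump of a device's configuration space. It is not the tooling used in this project; the function name and the synthetic dump are made up for illustration. The register offsets are the standard ones: the capability list starts at 0x34, the PCI Express capability has ID 0x10, and Max Payload lives in bits 2:0 of Device Capabilities and bits 7:5 of Device Control. On Linux, such a dump can be read from `/sys/bus/pci/devices/<address>/config`.

```python
import struct

PCI_STATUS = 0x06       # status register; bit 4 = capability list present
PCI_CAP_PTR = 0x34      # offset of the first capability
PCI_CAP_ID_EXP = 0x10   # PCI Express capability ID
EXP_DEVCAP = 0x04       # Device Capabilities, relative to the capability
EXP_DEVCTL = 0x08       # Device Control, relative to the capability

def max_payload(cfg: bytes):
    """Return (supported, configured) Max Payload Size in bytes, or None."""
    status, = struct.unpack_from("<H", cfg, PCI_STATUS)
    if not status & 0x10:
        return None
    ptr = cfg[PCI_CAP_PTR] & 0xFC
    seen = set()  # guards against a looping capability list
    while ptr and ptr not in seen and ptr + 2 <= len(cfg):
        seen.add(ptr)
        if cfg[ptr] == PCI_CAP_ID_EXP and ptr + EXP_DEVCTL + 2 <= len(cfg):
            devcap, = struct.unpack_from("<I", cfg, ptr + EXP_DEVCAP)
            devctl, = struct.unpack_from("<H", cfg, ptr + EXP_DEVCTL)
            # Both fields encode the size as 128 << value.
            return 128 << (devcap & 0x7), 128 << ((devctl >> 5) & 0x7)
        ptr = cfg[ptr + 1] & 0xFC
    return None

# Synthetic 256-byte dump (hypothetical device): supports 256, configured for 128.
cfg = bytearray(256)
struct.pack_into("<H", cfg, PCI_STATUS, 0x0010)
cfg[PCI_CAP_PTR] = 0x40
cfg[0x40:0x42] = bytes([0x01, 0x50])             # some other capability, next at 0x50
cfg[0x50:0x52] = bytes([PCI_CAP_ID_EXP, 0x00])   # PCI Express capability, end of list
struct.pack_into("<I", cfg, 0x50 + EXP_DEVCAP, 0x1)
struct.pack_into("<H", cfg, 0x50 + EXP_DEVCTL, 0x0 << 5)
print(max_payload(bytes(cfg)))   # prints: (256, 128)
```

The configured value is what matters for throughput: a device that supports 256 bytes still sends 128-byte packets if that is what Device Control says.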

And what about the other boards?

We tried swapping the motherboard for an identical one. No change. We tried replacing the processor (there was reason to believe it was faulty). Again no change in speed (although the old processor really was faulty). We installed a motherboard of a newer generation, and everything simply flies in all slots. And the maximum speed is no longer 400 but 418 megabytes per second, in the "long" and "short" slots alike:

But there are no miracles here. With a practiced motion (well rehearsed over these days), we read the configuration space and see that the Max Payload parameter is set not to 128 but to 256 bytes.

A larger packet size means fewer packets. Less overhead is spent on sending them, so more useful data gets through in the same time. Everything adds up.
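The effect of packet size can be illustrated by counting packets for a fixed amount of data. This is a crude sketch, not a model of the actual hardware: the function name is invented for the example, and the 24 bytes of per-packet overhead are an assumption (framing, sequence number, a 16-byte header and LCRC).

```python
def wire_cost(data_bytes, max_payload, tlp_overhead=24):
    """Return (packets, total bytes on the link) for one transfer.

    tlp_overhead is an ASSUMED per-packet cost in bytes.
    """
    packets = -(-data_bytes // max_payload)   # ceiling division
    return packets, data_bytes + packets * tlp_overhead

for mps in (128, 256):
    packets, total = wire_cost(1024 * 1024, mps)
    share = 100 * 1024 * 1024 / total
    print(f"Max Payload {mps}: {packets} packets, {share:.1f}% of link bytes are data")
# prints:
# Max Payload 128: 8192 packets, 84.2% of link bytes are data
# Max Payload 256: 4096 packets, 91.4% of link bytes are data
```

The measured gain (from 400 to 418) is smaller than this simple count suggests, so the sketch should be read only as showing the direction of the effect, not its size.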

So who is to blame?

I will not give an exact, document-backed answer to the question in the title. But my reasoning went as follows: suppose a throughput limit is set inside the chipset. It cannot be programmed; it is hard-wired, but it exists. Say it equals 290 megabytes per second per differential pair. Anything above that is cut somewhere inside the chipset by its internal mechanisms. Then in the "long" slot (which accepts cards up to x4) nothing is cut inside the chipset for our card, and we hit the physical limit of the x1 bus. In the "short" slot, we hit this restriction.

Checking this turns out to be not just easy but very easy. Into the 013th slot we put not the AHCI controller but a SAS controller, which serves 8 drives at once and can work in PCIe modes up to x4. We connect 4 fast SSD drives to it. We look at the write speed, and the soul rejoices:

Now we add the 4 disks that featured in the first tests. The SSD speed predictably dropped:

We add up the total speed passing through the SAS controller and get 1175 megabytes per second. We divide by 4 (that is how many lanes go to the "long" slot) and get... drum roll... about 293 megabytes per second. I have seen this number somewhere before!

So, within this project it was shown that the problem lies not in our program or driver but in strange limitations of the chipset, which are evidently hard-wired. A procedure for selecting motherboards suitable for the project was worked out. More generally, we draw the following conclusions.
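The hypothesis can be written down as a tiny model and compared with the measurements. This is only a sketch of the guess described above, not documented chipset behaviour: the function name is invented, the cap of 290 and the x1 link limit of 400 are the rounded figures observed in the tests, and the assumption that the cap scales with the number of lanes wired to the slot is exactly what the SAS experiment is meant to check.

```python
PER_LANE_CAP = 290     # MB/s, the hypothetical hard-wired chipset limit
LINK_USEFUL_X1 = 400   # MB/s, useful gen 2 x1 throughput seen in the "long" slot

def expected_rate(card_lanes, slot_lanes):
    """Throughput ceiling under the hypothesis: the limit of the negotiated
    link or the chipset cap for the whole slot, whichever is lower."""
    link_limit = LINK_USEFUL_X1 * min(card_lanes, slot_lanes)
    chipset_cap = PER_LANE_CAP * slot_lanes
    return min(link_limit, chipset_cap)

print(expected_rate(1, 1))   # AHCI card in a "short" slot: 290
print(expected_rate(1, 4))   # AHCI card in a "long" slot:  400
print(expected_rate(4, 4))   # SAS card in a "long" slot:   1160
print(1175 / 4)              # measured per-lane share:     293.75
```

All three predictions land close to the measured 290, 400 and 1175 MB/s, which is consistent with the guess but does not prove where the limit actually sits.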

Conclusion

- In real life, hardware often performs below the theoretical maximum. Restrictions can even be imposed by drivers, as the USB case showed. Sometimes it is possible to choose hardware that (or whose drivers) does not have such limitations.

- Restrictions may be undocumented yet clearly pronounced.

- The many articles claiming that one differential pair of PCIe gen 1 and gen 2 gives about 250 and 500 megabytes per second are wrong. They copy the same mistake from one another: those are megabytes of raw data per second. Overhead accumulates at several layers of the interface. With a Max Payload of 128 bytes, PCIe gen 2 really delivers about 400 megabytes per second. In newer PCIe generations things should be somewhat better, since the physical layer there uses not 8b/10b but a more economical encoding, but so far we have not found a single disk controller on which this could be tested in practice.
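To put a number on the "more economical" encoding from the last point, here is a small sketch comparing line coding per generation. The gen 3 row (8 GT/s with 128b/130b coding) is not from the tests above; it is added from the published PCIe parameters purely for comparison, and the function name is made up for this example.

```python
# (generation, transfer rate in GT/s, data bits, line bits)
GENERATIONS = [
    ("gen 1", 2.5, 8, 10),
    ("gen 2", 5.0, 8, 10),
    ("gen 3", 8.0, 128, 130),   # added for comparison, not tested here
]

def raw_mb_per_s(gt_per_s, data_bits, line_bits):
    """Per-lane rate in decimal MB/s after line coding, before packet overhead."""
    return gt_per_s * 1e9 * data_bits / line_bits / 8 / 1e6

for name, rate, data_bits, line_bits in GENERATIONS:
    mb = raw_mb_per_s(rate, data_bits, line_bits)
    print(f"{name}: {mb:.0f} MB/s, coding efficiency {100 * data_bits / line_bits:.1f}%")
# prints:
# gen 1: 250 MB/s, coding efficiency 80.0%
# gen 2: 500 MB/s, coding efficiency 80.0%
# gen 3: 985 MB/s, coding efficiency 98.5%
```

Packet overhead still applies on top of these figures, so the useful rate stays below them in every generation.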