gcc vs Intel C++ Compiler: building FineReader Engine for Linux

This article grew out of a perfectly natural desire: to improve FineReader Engine performance.

Intel's compiler is widely believed to produce much faster code than gcc, and it would be nice to speed up recognition without any real effort, simply by building FR Engine with a different compiler.

At the moment, FineReader Engine is built with a far-from-new compiler, gcc 4.2.4, and it is time to move to something more modern. We considered two alternatives: a newer gcc release, 4.4.4, and Intel's own compiler, the Intel C++ Compiler (icc).

Porting a large project to a new compiler is rarely trivial, so we started by testing the compilers on a benchmark (povray).

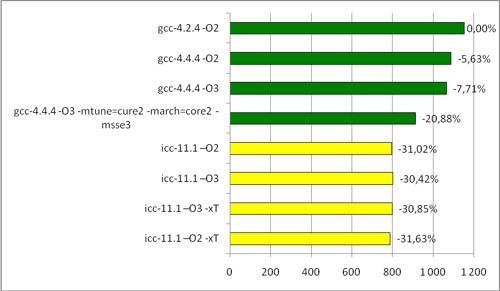

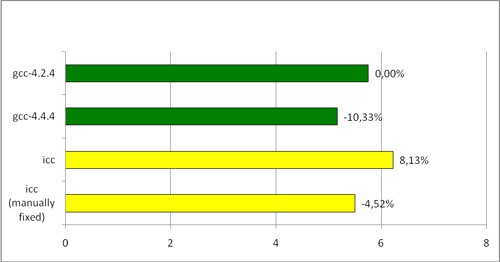

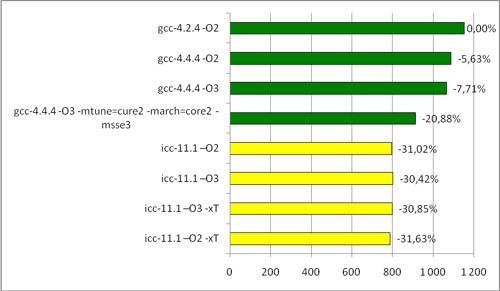

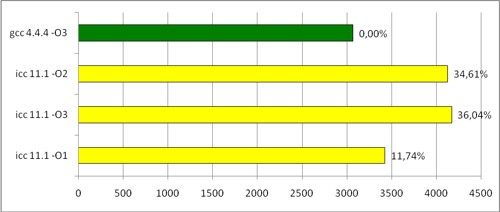

Here are brief results for an Intel Core2 Duo processor:

The povray test time on the Intel processor (sec)

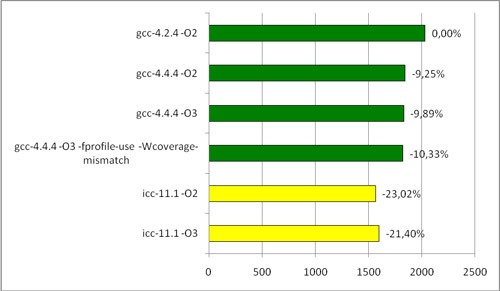

It was also interesting to see how things look on AMD:

The povray test time on the AMD processor (sec)

A brief note on the flags:

- -O1, -O2 and -O3 are the standard optimization levels.
- For gcc, -O3 is considered the best option, and it is used almost everywhere in the FineReader Engine build.
- For icc, -O2 is considered the optimal level; -O3 adds some extra loop optimizations, which, however, do not always pay off.
- -mtune=core2 -march=core2 -msse3 optimize for a specific processor, in this case the Core2 Duo.
- -xT is the equivalent flag for the Intel compiler.
- -fprofile-use enables profile-guided optimization (PGO).

The processor-specific results are included just for fun: a binary optimized for one processor may not even start on another, and since FineReader Engine must not be tied to a particular CPU, we cannot use such optimizations.
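One way to see why processor-specific builds are not portable: GCC defines feature-test macros such as __SSE3__ and __SSSE3__ when flags like -msse3 or -march=core2 are given, and from then on it is free to emit those instructions anywhere in the generated code. The sketch below (the helper name is hypothetical) simply reports which of these macros were active when its translation unit was compiled; a binary built with them enabled can die with an illegal-instruction error on a CPU that lacks those extensions.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: lists the SSE-family extensions the compiler was
// allowed to target when this translation unit was built.
std::string EnabledSseExtensions()
{
    std::string isa = "baseline";
#if defined(__SSE2__)
    isa += " sse2";   // on by default for x86-64 targets
#endif
#if defined(__SSE3__)
    isa += " sse3";   // set by -msse3, or implied by -march=core2
#endif
#if defined(__SSSE3__)
    isa += " ssse3";  // implied by -march=core2
#endif
    return isa;
}
```

Built with plain -O2 for a generic x86-64 target this typically reports only SSE2; adding -msse3 or -march=core2 adds the later extensions, and with them the risk of running on a CPU that does not support them.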

So a performance gain does seem possible: on the Intel processor, the icc build is noticeably faster. On AMD the gain is more modest, but still substantial compared to gcc.

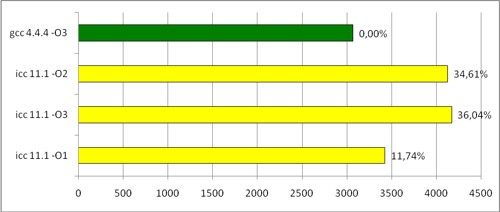

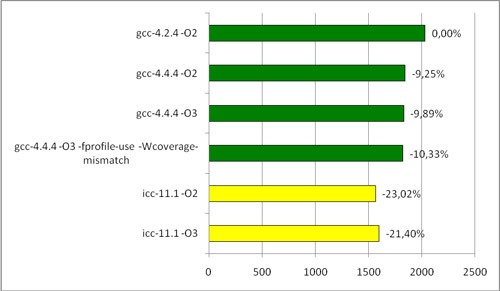

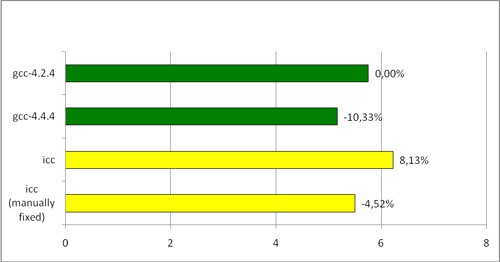

Now on to what we started all this for: building FineReader Engine. We compiled FineReader Engine with each compiler and ran recognition on a set of images. Here are the results:

Running time of FREngine compiled by various compilers (seconds per page)

An unexpected result. The gcc-4.4.4 / gcc-4.2.4 ratio agrees well with the benchmark measurements and even slightly exceeds expectations. But what about icc? It loses not only to the new gcc, but also to the two-year-old one!

We turned to oprofile for answers, and here is what we found: in some (fairly rare) cases, icc has trouble optimizing loops. Based on the profiling results, I put together the following code:

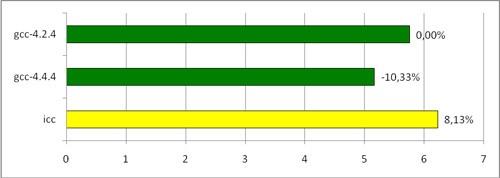

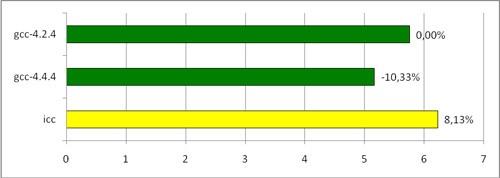


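```cpp
static const int aim = ..;   // value elided in the original
static const int range = ..; // value elided in the original

// Scores a line of values: +2 for an exact match with aim,
// +1 for a value inside [aim - range, aim + range], -1 otherwise.
int process( int* line, int size )
{
    int result = 0;
    for( int i = 0; i < size; i++ ) {
        if( line[i] == aim ) {
            result += 2;
        } else {
            if( line[i] < aim - range || line[i] > aim + range ) {
                result--;
            } else {
                result++;
            }
        }
    }
    return result;
}
```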

Running the function on different input data, we got roughly the following:

Cycle execution time (ms)

Adding other optimization flags made no tangible difference, so only the main ones are shown.

Unfortunately, Intel does not explicitly document what -O1, -O2 and -O3 include, so we could not find out which optimization slowed the code down. In fact, icc optimizes loops better in most cases; it is only in certain special cases, like the one above, that it runs into difficulties.
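For readers who want to reproduce this kind of measurement, here is a self-contained harness around the loop from the sample. The values aim = 100 and range = 10 are hypothetical (the article elides the real constants), and so are the helper names constant_line and time_process_ms. The running checksum keeps the optimizer from discarding the calls; feeding inputs that hit different branches is one way to probe how a compiler handles the loop.

```cpp
#include <cassert>
#include <chrono>
#include <vector>

// Hypothetical values: the real aim and range are elided in the article.
static const int aim = 100;
static const int range = 10;

// Same scoring loop as the profiling sample.
int process( int* line, int size )
{
    int result = 0;
    for( int i = 0; i < size; i++ ) {
        if( line[i] == aim ) {
            result += 2;
        } else if( line[i] < aim - range || line[i] > aim + range ) {
            result--;
        } else {
            result++;
        }
    }
    return result;
}

// Hypothetical input builder: a line where every element equals `value`.
std::vector<int> constant_line( int size, int value )
{
    return std::vector<int>( size, value );
}

// Times `repeats` calls of process() over `data`, in milliseconds.
// The summed results go to `checksum` so the calls cannot be optimized away.
double time_process_ms( std::vector<int>& data, int repeats, int& checksum )
{
    checksum = 0;
    auto start = std::chrono::steady_clock::now();
    for( int r = 0; r < repeats; r++ ) {
        checksum += process( data.data(), static_cast<int>( data.size() ) );
    }
    auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>( stop - start ).count();
}
```

For example, time_process_ms on constant_line( 1000000, aim ) exercises only the exact-match branch, while a line filled with aim + range + 1 exercises only the out-of-range branch.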

Profiling also revealed another, more serious problem. In the profiler report for the icc-built FineReader Engine, a line like this ranked rather high:

Namespace::A::GetValue() const

(Just in case, all names have been replaced with meaningless ones.) In the gcc build, by contrast, this function did not appear at all.

A::GetValue() returns a simple structure with several fields. Most often, this method is called on a global constant object in a construct of the form

GlobalConstObject.GetValue().GetField()

which has type int. All of these Get...() methods are trivial: they simply return a field of the object (by value or by reference). So GetValue() returns an object with several fields, and GetField() then extracts one of them. Instead of copying the whole structure every time just to extract one field, the chain could easily be collapsed into a single call that returns the needed number. Moreover, all fields of GlobalConstObject are known (or can be computed) at compile time, so the whole chain can be replaced by a constant.

gcc does exactly that (at the very least, it avoids the unnecessary construction), whereas icc at -O2 or -O3 leaves everything as is. Given how many such calls there are, this spot becomes critical, even though it does no useful work.
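To make the pattern concrete, here is a minimal sketch of the kind of code described above. The types Value and A, their fields and the values 42, 7, 13 are hypothetical stand-ins for the anonymized originals, and FieldViaChain is an illustrative name. The sketch shows only the shape of the code; whether a particular compiler actually folds the chain into a constant depends on the compiler and its flags.

```cpp
#include <cassert>

// Hypothetical stand-in for the simple structure returned by A::GetValue().
struct Value {
    int field;
    int other1;
    int other2;
    // Trivial getter: returns one field by value.
    int GetField() const { return field; }
};

// Hypothetical stand-in for Namespace::A.
class A {
public:
    explicit A( const Value& v ) : value( v ) {}
    // Trivial getter that returns the whole structure by value (a copy).
    Value GetValue() const { return value; }
private:
    Value value;
};

// Global constant object: all of its fields are known when compiling.
const Value kInitial = { 42, 7, 13 };
const A GlobalConstObject( kInitial );

// The call chain from the article: copy the whole Value, keep one int.
// If the compiler inlines both getters, this can reduce to "return 42;".
int FieldViaChain()
{
    return GlobalConstObject.GetValue().GetField();
}
```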

Now, about the remedies:

1) Automatic. icc has a wonderful -ipo flag, which tells the compiler to perform interprocedural optimization, including across files. Here is what Intel's optimization manual says:

> IPO allows the compiler to analyze your code to determine where you can benefit from a variety of optimizations:
>
> • Inline function expansion of calls, jumps, branches and loops.
>
> • Interprocedural constant propagation for arguments, global variables and return values.
>
> • Monitoring module-level static variables to identify further optimizations and loop invariant code.
>
> • Dead code elimination to reduce code size.
>
> • Propagation of function characteristics to identify call deletion and call movement.
>
> • Identification of loop-invariant code for further optimizations to loop invariant code.

This looks like exactly what we need. It would all be fine, except that FineReader Engine contains a huge amount of code. When we tried to build it with -ipo, the linker (which is what actually performs IPO) consumed all the memory and all the swap, and the page recognition module alone (admittedly the largest one) was on track to take several hours. Hopeless.

2) Manual. If you replace the call chain with a constant by hand throughout the code, the Intel compiler shows a good performance gain over its earlier results.

The last line is the icc build in which the call chain was replaced by a constant in the most critical places. As you can see, this made icc faster than gcc-4.2.4, but it is still slower than gcc-4.4.4.
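If the manual fix is applied in newer code, a compile-time check can keep the hand-written constant from drifting away from the object it replaces. The following is a C++11 sketch, not what the article did (constexpr is not available in the gcc versions discussed here): the hot loop reads a named constant, and a static_assert verifies that it still equals GlobalConstObject.GetValue().GetField(). The types Value and A, the values 42 and 7, kGlobalField and CountMatches are all hypothetical.

```cpp
#include <cassert>

// Hypothetical stand-ins for the anonymized types in the article.
struct Value {
    int field;
    int other;
    constexpr int GetField() const { return field; }
};

class A {
public:
    constexpr explicit A( Value v ) : value( v ) {}
    constexpr Value GetValue() const { return value; }
private:
    Value value;
};

constexpr A GlobalConstObject( Value{ 42, 7 } );

// Manual fix: hot code uses a plain constant instead of the call chain.
constexpr int kGlobalField = 42;

// Compile-time guard: the build fails if the constant and the object diverge.
static_assert( kGlobalField == GlobalConstObject.GetValue().GetField(),
               "kGlobalField is out of sync with GlobalConstObject" );

// Hypothetical hot loop: counts elements equal to the global field value.
int CountMatches( const int* data, int size )
{
    int count = 0;
    for( int i = 0; i < size; i++ ) {
        if( data[i] == kGlobalField ) {
            count++;
        }
    }
    return count;
}
```

The design choice here is to keep the fast path free of any calls while letting the compiler, rather than a reviewer, catch a stale constant.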

One could try to track down all such critical spots and fix the code by hand. The drawback is obvious: it would take a huge amount of time and effort.

3) Combined. Instead of building the entire module with -ipo, you can build only some of its parts with it, which keeps compilation time acceptable. However, the files to compile with this flag would have to be chosen by hand, which again could mean a lot of work.

To sum up: the Intel C++ Compiler has potential. But given the issues described above and the sheer amount of code, we concluded that it does not deliver a speed gain large enough to justify the effort of porting the code and "sharpening" it for this compiler.