Cloud Resource Management

The words “cloud”, “cloud computing”, “cloud” are used for anything horrible. New buzzword, buzzword. We see “cloud antiviruses”, “cloud blade servers”. Even eminent network equipment vendors do not hesitate to display switches labeled “for cloud computing”. This causes an instinctive dislike, roughly, like "organic" food.

Any techie who tries to deal with the technologies underlying the “cloud” after several hours of struggle with the flow of advertising enthusiasm will find that these clouds are the same VDSs, side view. He will be right. The clouds, as they are now made, are ordinary virtual machines.

However, the clouds are not only marketing and renamed VDS. The word “cloud” (or, more precisely, the phrase “cloud computing”) has its own technical truth. It’s not as pathetic and delightfully innovative as marketers say, but it does exist. It was invented many decades ago, but only now the infrastructure (primarily the Internet and x86 virtualization technologies) has grown to a level that allows it to be implemented in droves.

So, first of all, about the reason that generally created the need for clouds:

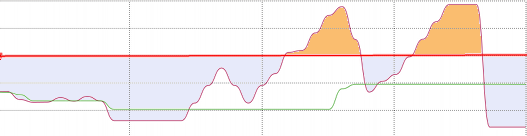

Here is what the provided service looks like for ordinary VDS (there can be any resource in place of this graph: processor, memory, disk):

Please note: this is a weekly schedule. Existing technologies for temporarily increasing the resource consumption limit (burst, grace period) are not able to solve this problem at such long intervals. Those. a machine lacks resources when it most needs it.

The second problem - look at the huge intervals (blue color), when the resources, in fact, had to be paid, but could not be used. A virtual machine at night just does not need so many resources. She is idle. And despite this, the owner pays in full.

A problem arises: a person is forced to order more resources than is necessary on average in order to survive peaks without problems. The rest of the time, resources are idle. The provider sees that the server is not loaded, begins to sell more resources than it is (this is called "oversell"). At some point, for example, due to the peak load on several customers, the provider violates its obligations. He promised 70 people 1 GHz each - but he only has 40 (2.5 * 16 cores). Not good.

Selling the strip honestly (without oversell) is unprofitable (and non-market prices are obtained). Override - reduce the quality of service, violate the terms of the contract.

This problem is not related to VDS or virtualization, it is a general question: how to honestly sell idle resources?

The answer to this problem was the idea of "cloud computing". Words, although fashionable, go back to the days of large mainframes, when machine time was sold.

Cloud computing does the same thing - instead of limits and quotas, which are paid regardless of real consumption, users are given the opportunity to use resources without restriction, with payment for real consumption (and only what was actually used). This is the essence of the "cloud."

Instead of selling “500 MHz 500 MB memory of a 3 GB disk”, the user (or rather, his virtual machine) is given everything she needs. As much as needs.

Do you have a peak in resource consumption before March 8, and then a dead season? Or you just can’t predict when you will have these peaks? Instead of looking for a compromise between lags and money down the drain, a different payment model is proposed: what is consumed is paid.

Machine time is taken into account (it can be counted in ticks, but it’s ideally correct to do it in the old style: count seconds of machine time), of course, if you had 3 cores threshed with 50% load, this is one and a half seconds of machine time per second. If you have a 3% load, then in a minute you will spend only 2s of machine time.

Memory is allocated "on demand" - and is limited only by the capabilities of the hoster and your greed. It is also paid on a time basis - a megabyte of memory costs so much per second. (in fact, it was like that on old VDS, you paid “megabytes per month”, but they were idle most of the time).

Disk space is also allocated on demand - payment per megabyte per second. If you needed a place for a temporary terabyte dump, you do not need to pay a terabyte for a whole month - enough for an hour and a half (the main thing is not to forget to erase the excess later).

Disk space is a complex resource: it is not only “storage”, but also “access”. Payment is taken both for the consumed place (megabyte-seconds), and for iops (pieces), and for the amount of recorded-read. Each of the components costs money. Since money is taken three times for one resource, the price, in fact, is divided into three.

And here the fun begins: the person who put the files, but does not touch them, pays less than the one who actively uses them to give back to the client. And the use can be different - I read it in megabytes ten times and “read it in kilobytes ten times” - there is a difference. Or, on the contrary, I read a 10MB file for 10 requests per megabyte, or for a long and painful read in kilobyte in any order, yes also mixed up with the record ...

The pay-as-you-go model is the most honest, for both the consumer and the service provider: the consumer pays only for what he has consumed, while the supplier no longer experiences annoyance from “fat” customers who consume all resources. Consumed resource - sold resource. Moreover, the reverse principle also applies: an unused resource is not paid. If the hoster has not managed to provide everyone with a processor “on demand” (a traffic jam has occurred), then it will lose money automatically, without trial and abuse, without a long correspondence, because it sold less than they wanted to buy. Clients, though they feel a nuisance due to congestion, will receive at least satisfaction - they will pay less for this time ... This congestion problem has an even more beautiful, cloudy solution, but about it next time.

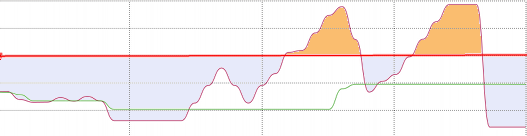

For example, a graph of memory usage. Pay attention - orange areas are areas that will not just “slow down”, but areas in which oom_killer will work. With all the consequences. If the payment was for consumption, then these peaks would not be so expensive, but they would solve the “peak” situation without any problems.

And look again, again huge idle resources that I absolutely do not want to pay for. After all, this memory is not used? Not. Why, then, pay for it?

Clouds allow you to take memory when you need it. And give back when the memory is no longer needed. And unlike other resources (memory / disk performance), the lack of which only leads to some slowdown, memory is a vital resource. They didn’t give a memory - someone saw the page of happiness from nginx'a “Bad gateway” or simply did not see anything, because the connection was closed ...

By the way, this principle applies to the network. We are all used to home at anlim (unlimited access at a fixed maximum speed). But work is not home. And it’s a pity to pay for a tight band of 30 MB, for 100 MB it’s expensive (see the first chart). And perhaps there are further single peaks under 500MB, but rarely? How to be in this situation? Cheap traffic is cheaper and provides a better quality of service than a band (anlim) sandwiched in a narrow vice with the same actual volume of traffic per month. Most people are used to the fact that “paid traffic is expensive” (thanks to the opsos). And if not? And if a gigabyte of traffic costs 80 cents? (three orders of magnitude cheaper?)

Why do you have to pay hundreds (thousands?) rubles for traffic if your virtual machine eats less than a hundred rubles?

By the way, the same applies to the local network. Yes, there a gigabyte of traffic will cost a penny. But he is? It consumes resources, for its sake fast switches are installed ... So, it must be paid.

Sometimes, for "ordinary VDS" provide the ability for the user to set limits on resource consumption. Like, there is not enough 500MHz, put 600, here you have a smooth slider, set the limits for yourself. However, this is not a solution to the problem. Well, yes, instead of 500 we bet 600 - and what do we get? The same VDS, with the same (or even large) areas of downtime for which we pay - but which we do not receive. We cannot know exactly how many resources are needed for a server that serves clients (the number of which we cannot predict). Thus, payment "upon consumption" is more fair.

You can call this analogy: there are cars that have a carburetor. And the suction handle. Which need to regulate the fuel supply. Overfilled? Unfilled? It’s going badly. And there are cars with injection engines that themselves take as much gas as they need.

This is the difference between a cloud and a pedal-powered VDS. You do not need so much gasoline - they pour and pour into the engine (at your expense!). You are crawling uphill and you would have to add - not give. More precisely, they give, but with hands, hands ... Looking up from the analogy: in a car you, at least, are driving. And in VDS - do you monitor resource consumption around the clock?

It may seem that everything written concerns hosters. This is not true. The difference between the position of the host and the administrator of his own fleet of enterprise servers is that the provider operates with money, and the administrator operates with available resources. All considerations regarding shared use of resources apply to servers in a “private” cloud. Instead of backing up the memory using the square-nested method, using a shared pool gives a much higher density of virtual machines, solves the problems "oh, sql fell, I set the memory limit incorrectly."

I'll try to write out what resources should be in an ideal cloud:

Any techie who tries to deal with the technologies underlying the “cloud” after several hours of struggle with the flow of advertising enthusiasm will find that these clouds are the same VDSs, side view. He will be right. The clouds, as they are now made, are ordinary virtual machines.

However, the clouds are not only marketing and renamed VDS. The word “cloud” (or, more precisely, the phrase “cloud computing”) has its own technical truth. It’s not as pathetic and delightfully innovative as marketers say, but it does exist. It was invented many decades ago, but only now the infrastructure (primarily the Internet and x86 virtualization technologies) has grown to a level that allows it to be implemented in droves.

So, first of all, about the reason that generally created the need for clouds:

Here is what the provided service looks like for ordinary VDS (there can be any resource in place of this graph: processor, memory, disk):

Please note: this is a weekly schedule. Existing technologies for temporarily increasing the resource consumption limit (burst, grace period) are not able to solve this problem at such long intervals. Those. a machine lacks resources when it most needs it.

The second problem - look at the huge intervals (blue color), when the resources, in fact, had to be paid, but could not be used. A virtual machine at night just does not need so many resources. She is idle. And despite this, the owner pays in full.

A problem arises: a person is forced to order more resources than is necessary on average in order to survive peaks without problems. The rest of the time, resources are idle. The provider sees that the server is not loaded, begins to sell more resources than it is (this is called "oversell"). At some point, for example, due to the peak load on several customers, the provider violates its obligations. He promised 70 people 1 GHz each - but he only has 40 (2.5 * 16 cores). Not good.

Selling the strip honestly (without oversell) is unprofitable (and non-market prices are obtained). Override - reduce the quality of service, violate the terms of the contract.

This problem is not related to VDS or virtualization, it is a general question: how to honestly sell idle resources?

The answer to this problem was the idea of "cloud computing". Words, although fashionable, go back to the days of large mainframes, when machine time was sold.

Cloud computing does the same thing - instead of limits and quotas, which are paid regardless of real consumption, users are given the opportunity to use resources without restriction, with payment for real consumption (and only what was actually used). This is the essence of the "cloud."

Instead of selling “500 MHz 500 MB memory of a 3 GB disk”, the user (or rather, his virtual machine) is given everything she needs. As much as needs.

Do you have a peak in resource consumption before March 8, and then a dead season? Or you just can’t predict when you will have these peaks? Instead of looking for a compromise between lags and money down the drain, a different payment model is proposed: what is consumed is paid.

Resource accounting

Machine time is taken into account (it can be counted in ticks, but it’s ideally correct to do it in the old style: count seconds of machine time), of course, if you had 3 cores threshed with 50% load, this is one and a half seconds of machine time per second. If you have a 3% load, then in a minute you will spend only 2s of machine time.

Memory is allocated "on demand" - and is limited only by the capabilities of the hoster and your greed. It is also paid on a time basis - a megabyte of memory costs so much per second. (in fact, it was like that on old VDS, you paid “megabytes per month”, but they were idle most of the time).

Disk space is also allocated on demand - payment per megabyte per second. If you needed a place for a temporary terabyte dump, you do not need to pay a terabyte for a whole month - enough for an hour and a half (the main thing is not to forget to erase the excess later).

Disk space

Disk space is a complex resource: it is not only “storage”, but also “access”. Payment is taken both for the consumed place (megabyte-seconds), and for iops (pieces), and for the amount of recorded-read. Each of the components costs money. Since money is taken three times for one resource, the price, in fact, is divided into three.

And here the fun begins: the person who put the files, but does not touch them, pays less than the one who actively uses them to give back to the client. And the use can be different - I read it in megabytes ten times and “read it in kilobytes ten times” - there is a difference. Or, on the contrary, I read a 10MB file for 10 requests per megabyte, or for a long and painful read in kilobyte in any order, yes also mixed up with the record ...

The pay-as-you-go model is the most honest, for both the consumer and the service provider: the consumer pays only for what he has consumed, while the supplier no longer experiences annoyance from “fat” customers who consume all resources. Consumed resource - sold resource. Moreover, the reverse principle also applies: an unused resource is not paid. If the hoster has not managed to provide everyone with a processor “on demand” (a traffic jam has occurred), then it will lose money automatically, without trial and abuse, without a long correspondence, because it sold less than they wanted to buy. Clients, though they feel a nuisance due to congestion, will receive at least satisfaction - they will pay less for this time ... This congestion problem has an even more beautiful, cloudy solution, but about it next time.

RAM

For example, a graph of memory usage. Pay attention - orange areas are areas that will not just “slow down”, but areas in which oom_killer will work. With all the consequences. If the payment was for consumption, then these peaks would not be so expensive, but they would solve the “peak” situation without any problems.

And look again, again huge idle resources that I absolutely do not want to pay for. After all, this memory is not used? Not. Why, then, pay for it?

Clouds allow you to take memory when you need it. And give back when the memory is no longer needed. And unlike other resources (memory / disk performance), the lack of which only leads to some slowdown, memory is a vital resource. They didn’t give a memory - someone saw the page of happiness from nginx'a “Bad gateway” or simply did not see anything, because the connection was closed ...

Network

By the way, this principle applies to the network. We are all used to home at anlim (unlimited access at a fixed maximum speed). But work is not home. And it’s a pity to pay for a tight band of 30 MB, for 100 MB it’s expensive (see the first chart). And perhaps there are further single peaks under 500MB, but rarely? How to be in this situation? Cheap traffic is cheaper and provides a better quality of service than a band (anlim) sandwiched in a narrow vice with the same actual volume of traffic per month. Most people are used to the fact that “paid traffic is expensive” (thanks to the opsos). And if not? And if a gigabyte of traffic costs 80 cents? (three orders of magnitude cheaper?)

Why do you have to pay hundreds (thousands?) rubles for traffic if your virtual machine eats less than a hundred rubles?

By the way, the same applies to the local network. Yes, there a gigabyte of traffic will cost a penny. But he is? It consumes resources, for its sake fast switches are installed ... So, it must be paid.

Fake clouds

Sometimes, for "ordinary VDS" provide the ability for the user to set limits on resource consumption. Like, there is not enough 500MHz, put 600, here you have a smooth slider, set the limits for yourself. However, this is not a solution to the problem. Well, yes, instead of 500 we bet 600 - and what do we get? The same VDS, with the same (or even large) areas of downtime for which we pay - but which we do not receive. We cannot know exactly how many resources are needed for a server that serves clients (the number of which we cannot predict). Thus, payment "upon consumption" is more fair.

You can call this analogy: there are cars that have a carburetor. And the suction handle. Which need to regulate the fuel supply. Overfilled? Unfilled? It’s going badly. And there are cars with injection engines that themselves take as much gas as they need.

This is the difference between a cloud and a pedal-powered VDS. You do not need so much gasoline - they pour and pour into the engine (at your expense!). You are crawling uphill and you would have to add - not give. More precisely, they give, but with hands, hands ... Looking up from the analogy: in a car you, at least, are driving. And in VDS - do you monitor resource consumption around the clock?

Enterprise and Hosters

It may seem that everything written concerns hosters. This is not true. The difference between the position of the host and the administrator of his own fleet of enterprise servers is that the provider operates with money, and the administrator operates with available resources. All considerations regarding shared use of resources apply to servers in a “private” cloud. Instead of backing up the memory using the square-nested method, using a shared pool gives a much higher density of virtual machines, solves the problems "oh, sql fell, I set the memory limit incorrectly."

Perfect cloud

I'll try to write out what resources should be in an ideal cloud:

- CPU resources on request. Perhaps with the dynamic connection of additional cores, automatic migration of the virtual machine to a more free / faster server if the resources of the current server begin to be insufficient. Payment of processor time for ticks (or seconds of busy processor).

- Unlimited memory that is paid upon consumption-release (accounting in kilobyte-seconds or multiple units).

- Disk space, taken into account according to the same principles: payment in gigabyte-hours. I put a terabyte archive for two hours - I paid 2000 gigabyte-hours. (The main thing is not to forget to erase too much) Put 2GB for a day - paid 48 gigabyte-hours.

- Disk operations - individually, disk traffic - per gigabyte

- The network is unlimited, the speed is in gigabits, and then in the top ten. Payment for traffic.

- Full legal right to turn off the virtual machine and pay only for the “dead weight” contents of its disks

- Ability to export / import your virtual machines

- The ability to have multiple virtual machines on one account

- Charts of resource consumption in real or near time