Nature asks: are modern scientific experiments reproducible?

In my news feed I happened to come across an article in Nature Scientific Reports. It presents data from a survey of 1,500 scientists on the reproducibility of research results. Previously, this problem was raised mainly for biological and medical research, where it is understandable. On the one hand, there are false correlations and the overall complexity of the systems under study, and sometimes even scientific software is blamed. On the other hand, some of the variability is phenomenological: for example, mice behave differently depending on sex (1 and 2).

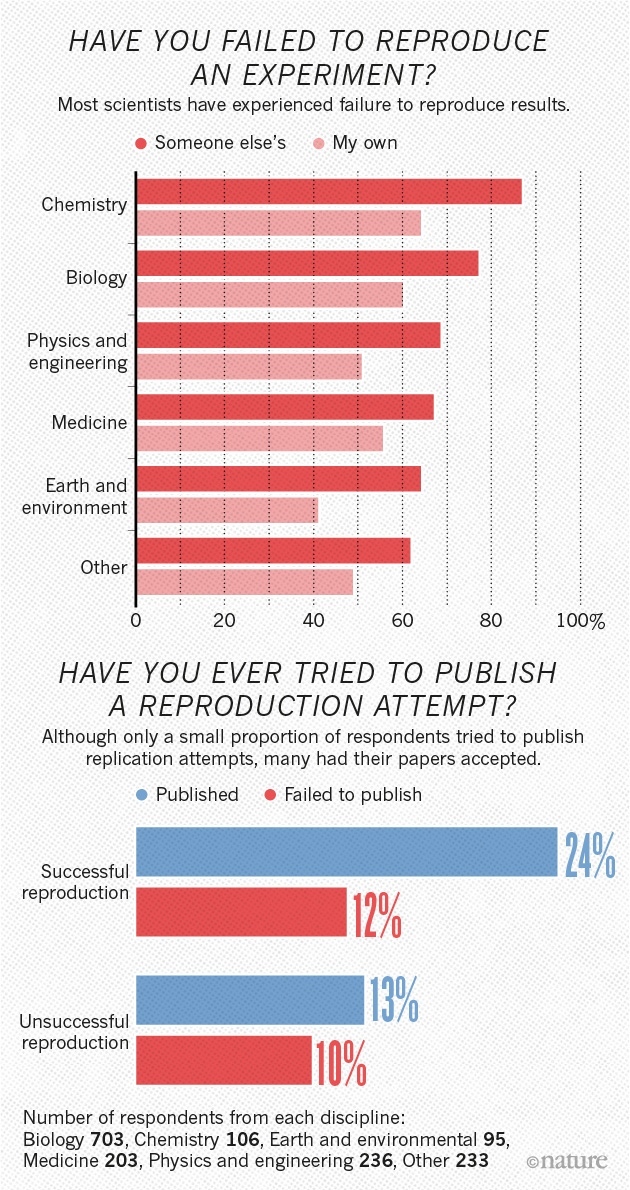

However, things do not go smoothly in the more "natural" sciences either, such as physics, engineering, chemistry and ecology. One would think these disciplines rest on "absolutely" reproducible experiments conducted under the most controlled conditions. Alas, the result of the survey is terrifying in every sense of the word: up to 70% of researchers have encountered experiments and results that could not be reproduced, and not only results obtained by other groups of scientists, BUT also those obtained by the authors or co-authors of published work themselves!

Every sandpiper praises its own swamp?

Although 52% of respondents believe there is a reproducibility crisis in science, fewer than 31% consider published data to be fundamentally wrong, and most said they still trust the published work.

Question: Is there a reproducibility crisis?

Of course, one should not act rashly and condemn all of science on the basis of this survey alone: half of the respondents were scientists connected in one way or another with the biological disciplines. As the authors note, in physics and chemistry the level of reproducibility and of confidence in the results is much higher (see the diagram below), though still not 100%. Medicine, however, fares very badly compared to the rest.

A joke comes to mind:

- What is the probability of meeting a dinosaur on the street?

- 50:50. Either you meet one or you don't.

Marcus Munafo, a biological psychologist at the University of Bristol (England), has a long-standing interest in the reproducibility of scientific data. Recalling his student years, he says:

Once I tried to reproduce an experiment from the literature that seemed simple to me, but I just could not do it. I had a crisis of confidence, but then I realized that my experience was not that rare.

Question: How many of the already published works in your field are reproducible?

The breadth and depth of the problem

Imagine that you are a scientist. You come across an interesting article, but its results or experiments cannot be reproduced in your laboratory. The logical next step is to write to the authors of the original article, ask for advice and put clarifying questions to them. According to the survey, fewer than 20% of respondents have ever done so in their scientific careers!

The authors of the study suggest that such contacts and conversations may be too difficult for scientists, because they can expose their own incompetence or inconsistency on certain issues, or reveal too many details of their current project.

Moreover, only a small minority of scientists have attempted to publish a refutation of irreproducible results, and those who did met opposition from editors and reviewers who demanded that they downplay the comparison with the original research. Is it any wonder, then, that the chance of getting a report of irreproducible results published is only about 50%?

First question: Have you tried to reproduce the results of an experiment?

Second question: Have you tried to publish your attempt to reproduce the results?

Perhaps, then, it is worth checking reproducibility at least within the laboratory? The saddest thing is that a third of respondents have NEVER even thought of creating methods for checking their data for reproducibility. Only 40% said they use such techniques regularly.

Question: Have you ever developed special techniques or technical processes to improve the reproducibility of results?

Another example: a biochemist from the United Kingdom, who did not want to disclose her name, says that trying to repeat and reproduce work for her laboratory's projects simply doubles the time and material costs while yielding nothing new. Additional checks are carried out only for innovative projects and unusual results.

And, of course, the eternal Russian questions have begun to torment our foreign colleagues too: who is to blame, and what is to be done?

Who is to blame?

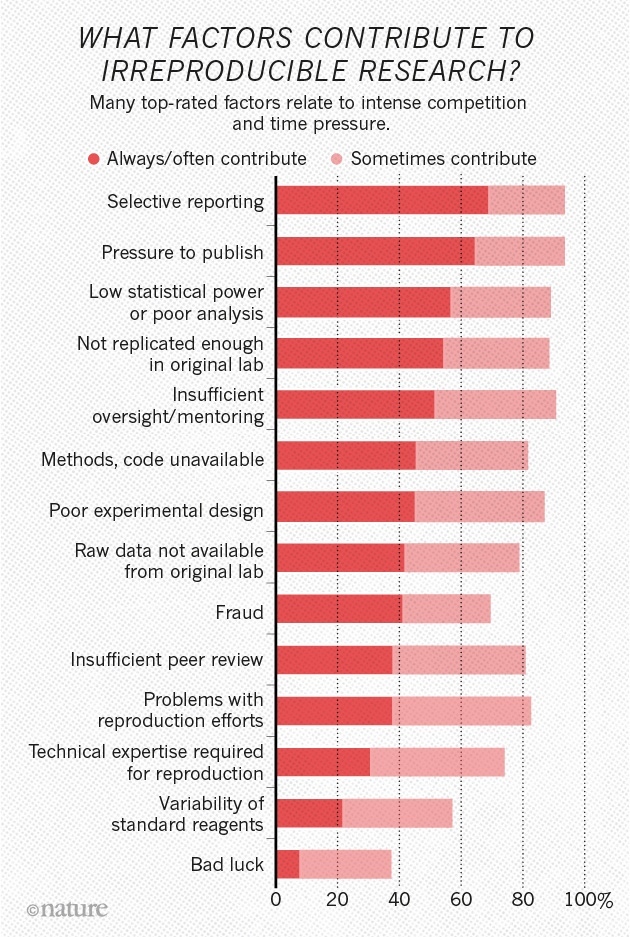

The authors identified three main causes of irreproducible results:

- Pressure from superiors to get the work published on time

- Selective reporting (presumably meaning the omission of data that "spoil" the overall picture)

- Insufficient data analysis (including statistical)

Question: Which factors contribute to irreproducible research results?

Answers (top to bottom):

- Selective reporting
- Pressure to publish
- Poor analysis / statistics
- Insufficient replication within the original laboratory
- Insufficient supervision
- Methods or code unavailable
- Poor experimental design
- Raw data unavailable from the original laboratory
- Fraud
- Insufficient peer review
- Problems with the replication attempts themselves
- Specialized technical expertise needed to reproduce
- Variability of standard reagents
- Bad luck

What to do?

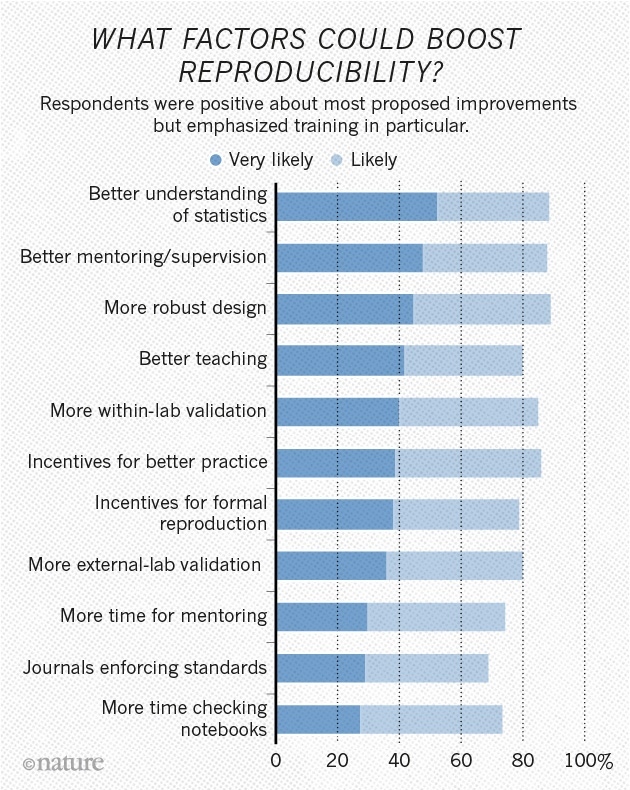

Of the 1,500 respondents, more than 1,000 spoke in favor of a better understanding of statistics in collecting and processing data, better supervision by senior staff, and more rigorous experimental design.

Question: Which factors would help improve reproducibility?

Answers (top to bottom):

- Better understanding of statistics
- Stricter supervision
- Improved experimental design
- Training
- Within-lab verification
- Improved practical skills
- Incentives for formal cross-checking of data
- Verification by other laboratories
- More time allocated to project supervision
- Higher scientific standards
- More time allocated to working with lab notebooks

Conclusion and some personal experience

First, even for me as a scientist the results are staggering, although I have long grown used to some degree of irreproducibility. It is especially pronounced in work done by Chinese and Indian groups without an external "audit" in the form of American or European professors. It is good that the problem has been acknowledged and that people are thinking about solutions. I will tactfully keep silent about Russian science in light of the recent scandal, although many people there do their work honestly.

Second, the article ignores (or rather, does not consider) the role of scientific metrics and peer-reviewed journals in the emergence and growth of the irreproducibility problem. In the race for speed and frequency of publication (read: higher citation indices), quality drops sharply and no time is left for additional verification of the results.

As they say, all characters are fictional, but based on real events. A certain student once got to review an article, because not every professor has the time and energy to read articles thoughtfully, so the opinions of two to four students and postdocs are collected and combined into a review. The review was written, and it pointed out that the results could not be reproduced using the method described in the article. This was clearly demonstrated to the professor. But in order not to spoil relations with the "colleagues" (after all, everything works out for them), the review was "adjusted". Two or three such articles were published.

The result is a vicious circle. A scientist sends an article to the journal editor and lists "preferred" and, most importantly, "non-preferred" reviewers, in effect leaving only reviewers who are favorably disposed toward the authors. They do review the work, but they cannot bring themselves to tear it apart in their comments, so they choose the lesser of two evils: here is a list of questions that need to be answered, and then we will publish the article.

Another example, which a Nature editor talked about a month ago, is Grätzel solar cells. Because of the huge interest in this topic in the scientific community (after all, everyone still wants a paper in Nature!), the editors had to create a special questionnaire in which authors must specify many parameters and provide equipment calibrations, certificates and so on, to confirm that the method used to measure the cells' efficiency conforms to common principles and standards.

And third, when you once again hear about a miraculous vaccine that defeats everything and everyone, a new story about a "Jobs in a skirt", new batteries, or the dangers or benefits of GMOs or smartphone radiation, especially if it was written by yellow journalists, treat it with understanding and do not jump to conclusions. Wait for the results to be confirmed by other groups of scientists and for data and samples to accumulate.

And what do you, dear Habr/GT users, think about the reproducibility of scientific data? Share your opinion in the comments!

PS: This article was translated and written in haste; please report any errors and inaccuracies you notice via private message.

Sometimes briefly, and sometimes at greater length, I write about science and technology news on my Telegram channel. Welcome ;)