Cisco ACI Data Center Network Fabric: Help for the Admin

With this magic fragment of a Cisco ACI script, you can quickly set up a network.

Cisco's ACI network fabric for the data center has been around for five years, but almost nothing has been written about it on Habr, so I decided to fix that a little. I’ll tell you from my own experience what it is, what it is good for, and where its pitfalls are.

What is it and where did it come from?

By the time ACI (Application Centric Infrastructure) was announced in 2013, competitors were attacking the traditional approach to data center networks from three sides at once.

On one side, “first-generation” SDN solutions based on OpenFlow promised to make networks more flexible and cheaper at the same time. The idea was to move decision-making, traditionally performed by the switches' proprietary software, to a central controller.

This controller would have a single view of everything that was happening and, based on it, would program the hardware of all switches at the level of rules for processing specific flows.

On another side, overlay network solutions made it possible to implement the required connectivity and security policies without any changes to the physical network, by building software tunnels between virtualized hosts. The best-known example of this approach was the solution from Nicira, which by then had already been acquired by VMware for $1.26 billion and gave rise to today's VMware NSX. To add some piquancy to the situation, Nicira's co-founders were the same people who had earlier stood at the origins of OpenFlow and who now said that OpenFlow was not suitable for building a data center fabric.

And finally, switching chips available on the open market (so-called merchant silicon) had reached a degree of maturity at which they became a real threat to traditional switch manufacturers. Previously, each vendor developed the chips for its own switches, but over time chips from third-party manufacturers, primarily Broadcom, began to close the gap with vendor chips in features and surpassed them in price/performance. Many therefore believed that the days of switches built on in-house chips were numbered.

ACI was Cisco's “asymmetric response” (or rather, that of Insieme, a company founded by former Cisco employees) to all of the above.

How does it differ from OpenFlow?

In terms of distribution of functions, ACI is actually the opposite of OpenFlow.

In the OpenFlow architecture, the controller is responsible for writing detailed rules (flows) into the hardware of all switches. In a large network, it may therefore be responsible for maintaining and, most importantly, changing tens of millions of entries at hundreds of points in the network, so in a large deployment its performance and reliability become a bottleneck.

ACI takes the opposite approach: there is a controller, of course, but the switches receive high-level declarative policies from it, and each switch renders them itself into the details of specific hardware settings. The controller can be rebooted or switched off altogether, and nothing bad will happen to the network, except, of course, that it cannot be managed during that time. Interestingly, there are situations in ACI where OpenFlow is still used, but locally within a host, to program Open vSwitch.

ACI is built entirely on overlay transport based on VXLAN, but it also includes the underlying IP transport as part of a single solution. Cisco calls this an "integrated overlay." In most cases the fabric switches themselves serve as the termination points for the overlays (they do this at line rate). Hosts are not required to know anything about the fabric, encapsulations, and so on; however, in some cases (say, for connecting OpenStack hosts) VXLAN traffic can be extended to them as well.

Overlays are used in ACI not only to provide flexible connectivity through the transport network, but also to transmit meta-information (it is used, for example, to apply security policies).

Cisco had previously used Broadcom chips in the Nexus 3000 series of switches. The Nexus 9000 family, released specifically to support ACI, initially implemented a hybrid model called Merchant+. A switch used both the then-new Broadcom Trident 2 chip and a complementary Cisco-designed chip that implemented all the ACI magic. Apparently, this made it possible to speed up the product's release and bring the switch's price tag down close to that of models based on Trident 2 alone. This approach sufficed for the first two to three years of ACI shipments. During that time, Cisco developed and launched the next generation of the Nexus 9000 on its own chips, with more performance and features but at the same price level. The external specifications for interoperating within the fabric were fully preserved.

How Cisco ACI Architecture Works

In the simplest case, ACI is built on a Clos network topology, or, as it is often called, Spine-Leaf. There can be from two (or one, if we do not care about fault tolerance) to six Spine-level switches. The more of them there are, the higher the fault tolerance (a smaller loss of bandwidth and reliability when one Spine fails or is under maintenance) and the overall performance. All external connections go to Leaf-level switches: servers, connections to external networks via L2 or L3, and the APIC controllers. In general, with ACI not only configuration but also statistics collection, failure monitoring, and so on are all done through the interface of the controllers, of which there are three in standard-size deployments.
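The fault-tolerance argument is simple arithmetic. Here is a minimal sketch, assuming every Leaf has one equal-speed uplink to every Spine and traffic is spread evenly across them (the function name is made up for illustration):

```python
def remaining_capacity(spines: int, failed: int = 1) -> float:
    """Fraction of Leaf uplink bandwidth left after `failed` Spines go down.

    Assumes each Leaf has one equal-speed uplink to every Spine.
    """
    if spines < 1 or not 0 <= failed <= spines:
        raise ValueError("need spines >= 1 and 0 <= failed <= spines")
    return (spines - failed) / spines


for n in (2, 4, 6):
    share = remaining_capacity(n)
    print(f"{n} Spines: {share:.0%} of uplink bandwidth left after one failure")
```

With two Spines, losing one halves the uplink bandwidth; with six, the loss is one sixth.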

You never have to connect to the switches with a console, even to bring the network up: the controller itself discovers the switches and assembles the fabric from them, including the settings of all service protocols. For this reason, by the way, it is very important to record the serial numbers of the equipment during installation, so that you do not have to guess later which switch sits in which rack. For troubleshooting, you can connect to the switches via SSH if necessary: the usual Cisco show commands are reproduced on them quite faithfully.

Inside, the fabric uses IP transport, so there is no Spanning Tree or other horrors of the past: all links are in use, and convergence after failures is very fast. Traffic in the fabric is carried through VXLAN tunnels. More precisely, Cisco itself calls the encapsulation iVXLAN; it differs from regular VXLAN in that reserved fields in the header are used to carry service information, primarily the EPG that the traffic belongs to. This makes it possible to implement the rules of interaction between groups in hardware, using group numbers the same way addresses are used in ordinary access lists.
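To make the idea of a group number riding in the reserved header bits more concrete, here is a rough sketch. The exact iVXLAN bit layout is not described in this article, so the layout below is an assumption borrowed from the public VXLAN Group Based Policy draft (a 16-bit group ID placed in bits that plain VXLAN, RFC 7348, leaves reserved). The function names and values are made up.

```python
import struct
from typing import Tuple

FLAG_VNI_VALID = 0x08      # "I" bit of standard VXLAN (RFC 7348)
FLAG_GROUP_PRESENT = 0x80  # "G" bit of the VXLAN-GBP draft (assumed layout)


def pack_header(vni: int, group_id: int) -> bytes:
    """Build an 8-byte VXLAN-style header that also carries a 16-bit group ID."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    if not 0 <= group_id < 2**16:
        raise ValueError("group ID must fit in 16 bits")
    flags = FLAG_VNI_VALID | FLAG_GROUP_PRESENT
    # Layout: flags (8) | reserved (8) | group ID (16) | VNI (24) | reserved (8)
    return struct.pack("!BBHI", flags, 0, group_id, vni << 8)


def unpack_header(header: bytes) -> Tuple[int, int]:
    """Return (vni, group_id) from a header built by pack_header()."""
    flags, _reserved, group_id, vni_field = struct.unpack("!BBHI", header)
    if not flags & FLAG_VNI_VALID:
        raise ValueError("VNI flag is not set")
    return vni_field >> 8, group_id


header = pack_header(vni=10100, group_id=32770)
print(header.hex(), unpack_header(header))
```

The receiving switch can then look up a rule by source and destination group numbers instead of by IP addresses.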

Tunnels make it possible to stretch both L2 segments and L3 segments (i.e., VRFs) across the internal IP transport. The default gateway is distributed, which means that each switch routes the traffic entering the fabric. In terms of traffic forwarding logic, ACI is similar to a fabric based on VXLAN/EVPN.

If so, what are the differences? Everything else!

The first difference you encounter in ACI is how servers are connected to the network. In traditional networks, both physical servers and virtual machines are placed into VLANs, and everything else follows from them: connectivity, security, and so on. ACI uses a construct that Cisco calls an EPG (End-point Group), and there is no getting away from it. Can it be equated to a VLAN? Yes, but then you are likely to lose most of what ACI offers.

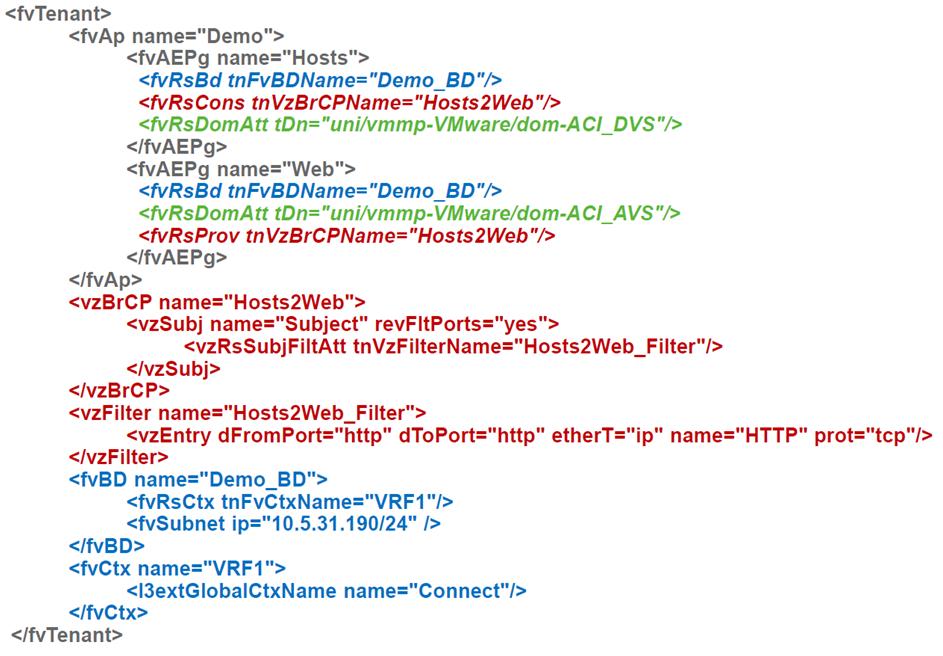

All access rules are formulated in terms of EPGs, and ACI follows a “whitelist” principle by default: only traffic that is explicitly permitted is allowed. For example, we can create the EPGs “Web” and “MySQL” and define a rule that permits communication between them only on port 3306. This works without reference to network addresses, and even within a single subnet!
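The whitelist logic for the “Web” and “MySQL” example can be modeled in a few lines. This is only an illustration of the principle, not how the switches implement it: the `Rule` class and `is_allowed` function are invented here, return traffic is not modeled, and I assume that traffic inside a single EPG is not filtered.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    consumer: str  # EPG that initiates the connection
    provider: str  # EPG that offers the service
    protocol: str
    port: int


# The only permitted flow: Web -> MySQL on TCP 3306.
ALLOWED = {Rule("Web", "MySQL", "tcp", 3306)}


def is_allowed(src_epg: str, dst_epg: str, protocol: str, port: int) -> bool:
    """Whitelist check: traffic between EPGs passes only if a rule matches."""
    if src_epg == dst_epg:
        return True  # assumption: traffic inside one EPG is not filtered
    return Rule(src_epg, dst_epg, protocol, port) in ALLOWED


print(is_allowed("Web", "MySQL", "tcp", 3306))  # explicitly permitted
print(is_allowed("Web", "MySQL", "tcp", 22))    # no rule, so dropped
```

Note that no IP address appears anywhere in the check: group membership is the only key.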

We have customers who chose ACI precisely because of this feature, since it lets you restrict access between servers (virtual or physical, it does not matter) without moving them between subnets, and therefore without touching the addressing. Yes, yes, we know that nobody hard-codes IP addresses into application configurations by hand, right?

Traffic rules in ACI are called contracts. In a contract, one or more groups or tiers of a multi-tier application act as the service provider (say, of a database service), and others act as consumers. A contract can simply pass traffic, or it can do something trickier, for example redirect it to a firewall or a load balancer, or change the QoS value.

How do servers get into these groups? If these are physical servers, or something attached to an existing network to which we have set up a VLAN trunk, then to place them in an EPG you need to specify the switch port and the VLAN used on it. As you can see, VLANs do appear where you cannot do without them.
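In API terms, “specify the switch port and the VLAN” boils down to one child object under the EPG. The sketch below only builds the JSON body and sends nothing. The class and attribute names (`fvAEPg`, `fvRsPathAtt`, `tDn`, `encap`) are given as I understand the APIC object model, so check them against the API reference for your release; the tenant, application, node, and port values are made up.

```python
import json


def static_binding(tenant, app, epg, node, port, vlan, pod=1):
    """Build a payload that statically binds one Leaf port and VLAN to an EPG."""
    path = f"topology/pod-{pod}/paths-{node}/pathep-[{port}]"
    return {
        "fvAEPg": {
            "attributes": {"dn": f"uni/tn-{tenant}/ap-{app}/epg-{epg}"},
            "children": [
                # One static path: the physical port plus the VLAN used on it.
                {"fvRsPathAtt": {"attributes": {"tDn": path, "encap": f"vlan-{vlan}"}}}
            ],
        }
    }


payload = static_binding("Demo", "Shop", "Web", node=101, port="eth1/10", vlan=100)
print(json.dumps(payload, indent=2))
```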

If the servers are virtual machines, it is enough to refer to the connected virtualization environment, and everything else happens by itself: a port group (in VMware terms) is created for connecting the VMs, the necessary VLANs or VXLANs are assigned, they are configured on the required switch ports, and so on. So although ACI is built around the physical network, connecting virtual servers looks much simpler than connecting physical ones. ACI has built-in integration with VMware and MS Hyper-V, as well as support for OpenStack and Red Hat Virtualization. At some point, built-in support for container platforms also appeared: Kubernetes, OpenShift, and Cloud Foundry. It covers both policy enforcement and monitoring, so the network administrator can immediately see which pods run on which hosts and which groups they belong to.

Besides membership in a particular port group, virtual servers have additional properties (name, attributes, and so on) that can be used as criteria for moving them to another group, for example when a VM is renamed or gets an additional tag. Cisco calls these micro-segmentation groups, although, by and large, the design itself, with its ability to create many security segments as EPGs within one subnet, is also micro-segmentation. Well, the vendor knows best.
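The idea of moving a VM to another group based on its name or a tag can be sketched like this. The rule format, attribute names, and group names are all hypothetical; this only shows first-match classification by attributes and says nothing about how ACI stores or evaluates such criteria.

```python
from fnmatch import fnmatchcase

# Hypothetical rules, checked in order: (attribute, glob pattern, target group).
USEG_RULES = [
    ("tag", "quarantine", "Quarantine"),
    ("name", "web-*", "Web"),
]


def classify(vm: dict, base_epg: str) -> str:
    """Return the first group whose rule matches the VM, else its base EPG."""
    for attribute, pattern, group in USEG_RULES:
        if fnmatchcase(str(vm.get(attribute, "")), pattern):
            return group
    return base_epg


print(classify({"name": "web-01"}, base_epg="Servers"))
print(classify({"name": "web-01", "tag": "quarantine"}, base_epg="Servers"))
```

Adding the tag is enough to move the same VM from one security segment to another, with no change to its port group or IP address.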

EPGs themselves are purely logical constructs, not tied to specific switches, servers, and so on, so with them and with the constructs built on them (applications and tenants) you can do things that are hard to do in ordinary networks, such as cloning. As a result, it is very easy, say, to clone a production environment and get a test environment that is guaranteed to be identical to production. This can be done manually, but it is better (and easier) to do it through the API.
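Because the configuration is just a tree of objects, cloning amounts to copying that tree under a new name. Below is a simplified sketch on a hand-written dictionary. The class names `fvTenant`, `fvAp`, and `fvAEPg` are given as I understand the APIC object model, and the tenant contents are invented. A tree exported from a real controller may carry references (for example, to physical ports) that need review before it is posted back.

```python
import copy


def clone_tenant(tenant: dict, new_name: str) -> dict:
    """Return a deep copy of a tenant tree under a new tenant name."""
    clone = copy.deepcopy(tenant)
    attrs = clone["fvTenant"]["attributes"]
    attrs["name"] = new_name
    attrs.pop("dn", None)  # drop the old distinguished name, it embeds the old tenant name
    return clone


prod = {
    "fvTenant": {
        "attributes": {"name": "Prod", "dn": "uni/tn-Prod"},
        "children": [
            {"fvAp": {"attributes": {"name": "Shop"}, "children": [
                {"fvAEPg": {"attributes": {"name": "Web"}}},
                {"fvAEPg": {"attributes": {"name": "MySQL"}}},
            ]}},
        ],
    }
}

test_env = clone_tenant(prod, "Test")
print(test_env["fvTenant"]["attributes"])
```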

In general, the management logic in ACI is nothing like what you usually encounter in traditional networks from the same Cisco: the programmatic interface is primary, and the GUI and CLI are secondary, because they work through the same API. As a result, almost everyone who works with ACI eventually starts to find their way around the object model used for management and to automate things for their own needs. The easiest way to do this is from Python: convenient ready-made tools exist for it.
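As a taste of what working with the API from Python looks like, here is a sketch that prepares the login call using only the standard library rather than any ready-made toolkit. The request is built but not sent. The `aaaLogin` endpoint and the `aaaUser` payload are given as I understand the APIC REST API; the host name and credentials are placeholders.

```python
import json
import urllib.request


def login_request(apic_host: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) the login call to the APIC REST API."""
    body = {"aaaUser": {"attributes": {"name": user, "pwd": password}}}
    return urllib.request.Request(
        url=f"https://{apic_host}/api/aaaLogin.json",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = login_request("apic.example.com", "admin", "secret")
print(req.get_method(), req.full_url)
```

Sending it with `urllib.request.urlopen(req)` should return a session token that later calls reuse; the GUI and CLI go through the same API.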

The Promised Pitfalls

The main problem is that many things in ACI are done differently. To work with it properly, you have to retrain. This is especially true for network operations teams at large customers, where engineers have spent years “provisioning VLANs” for applications. The fact that VLANs are no longer quite VLANs, and that you do not need to create VLANs by hand at all to bring new networks to virtualized hosts, completely blows the minds of traditional network engineers and makes them cling to familiar approaches. It should be noted that Cisco tried to sweeten the pill a little and added an “NXOS-like” CLI to the controller, which lets you configure things from an interface resembling that of traditional switches. Still, to start using ACI properly, you will have to understand how it works.

In terms of price, large and medium-sized ACI networks hardly differ from traditional networks on Cisco equipment, because the same switches are used to build them (the Nexus 9000 can work both in ACI mode and in traditional mode, and it is now the main "workhorse" for new data center projects). But for a data center of two switches, the need for controllers and a Spine-Leaf architecture certainly makes itself felt. A Mini ACI fabric has recently appeared, in which two of the three controllers are replaced by virtual machines. This narrows the cost gap, but the gap remains. So for the customer, the choice is dictated by how much they care about security features, integration with virtualization, a single point of management, and so on.