OpenStack LBaaS UI Implementation

When I was implementing the user interface for a load balancer in a virtual private cloud, I ran into significant difficulties. They led me to reflect on the role of the frontend. I want to share those reflections first, and then back them up with the example of a specific task.

In my opinion, the solution turned out to be fairly creative, and I had to find it within very tight constraints, so I think it may be of interest.

Frontend role

Let me say right away that I do not claim to have the final truth, and the question I raise is controversial. It somewhat depresses me that the frontend, and the web in particular, is treated ironically, as something insignificant. It is even more depressing that at times this is deserved. The hype has died down by now, but there was a time when everyone was rushing around with frameworks, paradigms and other entities, loudly declaring all of it super-important and super-necessary, and in return they got ironic remarks that the frontend just renders forms and handles button clicks, which can be hacked together in no time.

Now things seem to have more or less settled down. Nobody really wants to discuss every minor release of the latest framework. Fewer people are searching for the perfect tool or approach, as awareness grows that these are merely utilitarian. Even so, this does not stop people from scolding Electron and the applications built on it, often with little reason. I think this comes from a lack of understanding of the problem the frontend solves.

The frontend is not just a means of displaying data provided by the backend, nor just a means of handling user actions. It is something more, something abstract, and any simple, clear-cut definition of it will inevitably lose the meaning.

The frontend sits within certain boundaries. In technical terms, for example, it lies between the API provided by the backend and the API provided by the I/O facilities. In terms of tasks, it lies between the user-interface problems that UX solves and the problems the backend solves. The result is a rather narrow specialization: the specialization of a layer. This does not mean that frontend developers cannot influence areas outside their specialization, but the true frontend task arises precisely when such influence is impossible.

This problem can be expressed as a contradiction. The user interface is not required to conform to the backend's data models and behavior, and the backend's data models and behavior are not required to fit the tasks of the user interface. The frontend's task is to resolve this contradiction. The greater the mismatch between the tasks of the backend and those of the user interface, the more important the role of the frontend. To make clear what I mean, I will give an example where, for several reasons, this mismatch turned out to be significant.

Formulation of the problem

OpenStack LBaaS is, as I see it, a hardware and software toolset for balancing load between servers. What matters to me is that its implementation depends on objective factors, on how it maps onto physical infrastructure. Because of this, the API and the ways of interacting with it have some peculiarities.

When developing a user interface, what matters is not the backend's technical details but its fundamental capabilities. The interface is built for the user, and the user needs a way to manage balancing parameters without diving into the internals of the backend implementation.

The backend is developed mostly by the community, and we can influence its development only to a very limited extent. One of its key features, for me, is that the backend developers are willing to sacrifice convenience and simplicity of control for the sake of performance, which is entirely justified when it comes to load balancing.

There is one more subtle point I want to address right away, to head off some questions. Obviously, OpenStack and its API are not the only option. You can always develop your own toolset or a “layer” that works with the OpenStack API and exposes its own API tailored to user tasks. The only question is whether it is worth it. If the tools already available let you implement the user interface as intended, does it make sense to multiply entities?

The answer is multifaceted, and for a business it comes down to the developers: their workload, their expertise, questions of responsibility, support, and so on. In our case, it was most practical to solve some of the problems on the frontend.

Features of OpenStack LBaaS

I will focus only on the features that strongly influenced the frontend. Why these features exist and what they rest on is beyond the scope of this article.

I work with the documentation as it is and have to accept its peculiarities. Octavia is the name of the set of tools designed for load balancing in the OpenStack ecosystem; anyone interested in how it works internally can consult the official documentation.

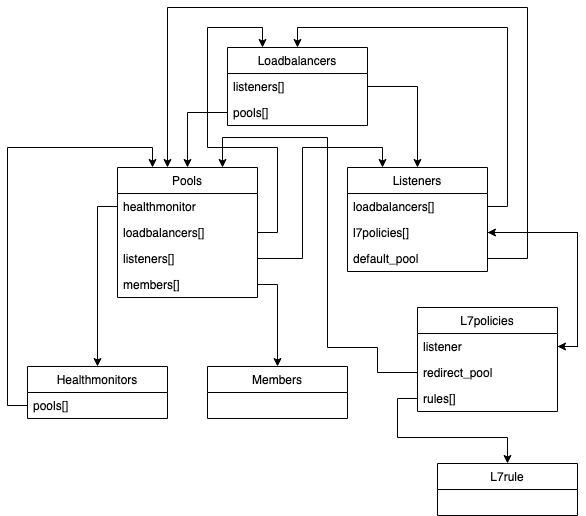

The first feature I ran into during development was the large number of models and relationships needed to display the state of a load balancer. The Octavia API describes 12 models, of which only 7 are needed on the client side. These models are interconnected, often in a denormalized way; the image above shows an approximate diagram.

“Seven” doesn’t sound very impressive, but in reality, to ensure the full operation of the interface, at the time of writing this text, I had to use 16 data models and about 30 relationships between them. Since Octavia is only a balancer, it requires other OpenStack modules to work. And all this is needed for only two pages in the user interface.

The second and third features are that Octavia is asynchronous and transactional. Data models have a status field that reflects the state of the operations performed on an object.

| Status | Description |
|---|---|
| ACTIVE | Object is in a good state |
| DELETED | Object has been deleted |
| ERROR | Object is in an error state |
| PENDING_CREATE | Object is being created |
| PENDING_UPDATE | Object is being updated |
| PENDING_DELETE | Object is being deleted |

After a create request is sent, the record appears and can be read, but until the create operation completes, no other operation can be performed on it; any such attempt results in an error. An update can be initiated only when the object is in the ACTIVE status; an object can be sent for deletion in the ACTIVE and ERROR statuses.
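These rules fit in a small lookup table. The following is a minimal TypeScript sketch, assuming status values exactly as listed in the table above; `canPerform` and `isPending` are hypothetical helper names, not part of Octavia or any client library.

```typescript
// Provisioning statuses as listed in the table above.
type ProvisioningStatus =
  | "ACTIVE"
  | "DELETED"
  | "ERROR"
  | "PENDING_CREATE"
  | "PENDING_UPDATE"
  | "PENDING_DELETE";

type Operation = "update" | "delete";

// Statuses in which each operation may be initiated (hypothetical helper table).
const ALLOWED_STATUSES: Record<Operation, ReadonlySet<ProvisioningStatus>> = {
  update: new Set<ProvisioningStatus>(["ACTIVE"]),
  delete: new Set<ProvisioningStatus>(["ACTIVE", "ERROR"]),
};

function canPerform(op: Operation, status: ProvisioningStatus): boolean {
  return ALLOWED_STATUSES[op].has(status);
}

// Any PENDING_* status means an operation on the object is still in progress.
function isPending(status: ProvisioningStatus): boolean {
  return status.startsWith("PENDING_");
}

console.log(canPerform("delete", "ERROR")); // true
console.log(canPerform("update", "PENDING_CREATE")); // false
```

A record in PENDING_CREATE is readable but fails both checks, which matches the behavior described above.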

These statuses can arrive via WebSockets, which makes processing them much easier, but transactions are a much bigger problem. When any object is changed, all related models also take part in the transaction. For example, when a Member is changed, the associated Pool, Listener, and Loadbalancer are locked. This is what it looks like in terms of the events received over WebSockets:

- the first four events move the objects to the PENDING_UPDATE status: the target field contains the model name of the object participating in the transaction;

- the fifth event is just a duplicate (I don’t know why it occurs);

- the last four are the return to the ACTIVE status. In this case it is a weight change, which takes less than a second, but sometimes operations take much longer.

The screenshot also shows that events do not necessarily arrive in a strict order. So to initiate any operation, you need to know not only the status of the object itself, but also the statuses of all its dependencies that will take part in the transaction.
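Because events can arrive in varying order and may be duplicated, one workable approach is to record the latest reported status per object and check the whole transaction chain before starting an operation. The sketch below assumes a hypothetical event shape with `target`, `id`, and `status` fields (only `target` is mentioned in the text), and assumes that the order varies only across objects, not for a single object; `StatusMap` is an illustrative name.

```typescript
type Status =
  | "ACTIVE"
  | "DELETED"
  | "ERROR"
  | "PENDING_CREATE"
  | "PENDING_UPDATE"
  | "PENDING_DELETE";

// Hypothetical WebSocket event shape; only `target` is described in the text.
interface StatusEvent {
  target: string; // model name, e.g. "member" or "pool"
  id: string;
  status: Status;
}

// An object taking part in a transaction.
interface Ref {
  target: string;
  id: string;
}

class StatusMap {
  private readonly statuses = new Map<string, Status>();

  private static key(ref: Ref): string {
    return `${ref.target}:${ref.id}`;
  }

  // Idempotent, so duplicated events are harmless.
  apply(event: StatusEvent): void {
    this.statuses.set(StatusMap.key(event), event.status);
  }

  get(ref: Ref): Status | undefined {
    return this.statuses.get(StatusMap.key(ref));
  }

  // An operation may start only if every object locked with it is ACTIVE.
  canStart(chain: Ref[]): boolean {
    return chain.every((ref) => this.get(ref) === "ACTIVE");
  }
}
```

For a Member update, the chain would be the member, its pool, the listener, and the load balancer. Objects would need to be seeded with their loaded statuses before events arrive, since an unknown object is treated as not ACTIVE.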

User Interface Features

Now put yourself in the place of a user who somehow has to know that, to balance load between two servers, they must:

- Create a listener, which defines the entry point (protocol and port).

- Create a pool, which defines the balancing algorithm.

- Assign the pool to the listener.

- Add references to the balanced ports to the pool.

After each step, they must wait for the operation to complete, and each operation depends on all previously created objects.
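To show how much waiting this involves, here is a sketch of what a script (or a very patient user) would have to do by hand: run the steps one after another and poll until the load balancer returns to ACTIVE each time. It assumes a hypothetical `LbClient` interface whose method names are illustrative, not the Octavia API. In Octavia the balancing algorithm is a pool attribute (`lb_algorithm`); in this sketch the pool is attached to the listener when it is created, which folds the third step into the second.

```typescript
type Status = "ACTIVE" | "ERROR" | "PENDING_CREATE" | "PENDING_UPDATE" | "PENDING_DELETE";

// Hypothetical client; method names are illustrative, not a real SDK.
interface LbClient {
  createListener(lbId: string, protocolPort: number): Promise<string>;
  createPool(listenerId: string, lbAlgorithm: string): Promise<string>;
  addMember(poolId: string, address: string, port: number): Promise<string>;
  loadBalancerStatus(lbId: string): Promise<Status>;
}

interface Backend {
  address: string;
  port: number;
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Polls until the load balancer leaves its PENDING_* state.
async function waitForActive(client: LbClient, lbId: string, intervalMs: number, maxAttempts = 60): Promise<void> {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const status = await client.loadBalancerStatus(lbId);
    if (status === "ACTIVE") return;
    if (status === "ERROR") throw new Error(`load balancer ${lbId} is in ERROR`);
    await sleep(intervalMs);
  }
  throw new Error(`timed out waiting for load balancer ${lbId}`);
}

// Each step is followed by a wait, because every operation locks the load balancer.
async function setUpBalancing(
  client: LbClient,
  lbId: string,
  listenerPort: number,
  backends: Backend[],
  intervalMs = 1000,
): Promise<void> {
  const listenerId = await client.createListener(lbId, listenerPort);
  await waitForActive(client, lbId, intervalMs);

  const poolId = await client.createPool(listenerId, "ROUND_ROBIN");
  await waitForActive(client, lbId, intervalMs);

  for (const backend of backends) {
    await client.addMember(poolId, backend.address, backend.port);
    await waitForActive(client, lbId, intervalMs);
  }
}
```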

An internal study showed that an ordinary user has only a rough idea that a balancer must have an entry point, exit points, and balancing parameters: algorithm, weight, and so on. The user does not have to know what OpenStack is.

I can hardly imagine how hard it would be to use an interface that required users to track all the backend technicalities described above. In a console this may be acceptable, since using one implies deep immersion in the technology, but on the web such an interface would be horrible.

On the web, users expect to fill in one clear, logical form, press one button, wait, and have everything work. This is debatable, but I suggest we concentrate on the features that affect the frontend implementation.

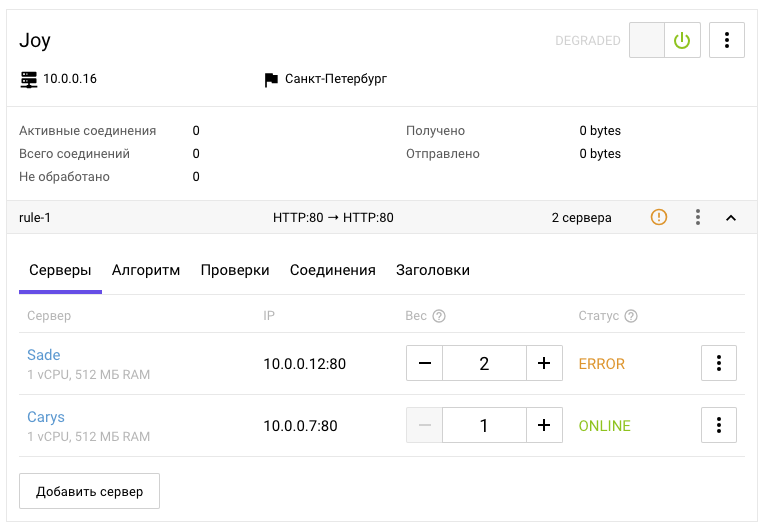

The interface was designed around cascading operations: a single action in the interface may involve several backend operations. The interface does not let users perform actions that are currently impossible, but it does assume that users should understand why they are impossible. The interface is a single whole, so its individual elements can use information from various dependent entities, including meta-information.

Some interface elements are not unique to the balancer, such as switches, accordions, tabs, and a context menu, and we can assume their operating principles are clear from the start. With that in mind, I think a user who knows what load balancing is will not find it very difficult to read most of the interface above and guess how to operate it. But working out which parts of the interface are backed by the load balancer, listener, pool, member, and other models is far from obvious.

Resolving Contradictions

I hope I have shown that the backend's features do not fit the interface well and cannot always be eliminated on the backend side. Likewise, the interface's features do not fit the backend well and cannot always be eliminated without complicating the interface. Each of these areas solves its own problems. The frontend's responsibility is to solve the problems that stand in the way of the required level of interaction between the interface and the backend.

In practice, I dove in headfirst without paying attention to, or rather without even trying to understand, the features described above, but I was lucky, or experience helped, and I chose the right direction. I have repeatedly noticed that when using a third-party API or library, it is very useful to study the documentation in advance, and the more detail the better. Documentation for different systems often looks alike, since people build on one another's experience, but the specifics of each individual system are described too, and they live in the details.

Had I spent a couple of extra hours studying the documentation at the start, rather than pulling out the information I needed by keyword, I would have thought about the problems I would face, and that knowledge could have shaped the project architecture from the earliest stages. Going back to fix mistakes made at the very beginning is very demoralizing, and without the full context you sometimes have to go back several times.

Alternatively, you can stubbornly press on, piling up more and more workaround code, but the bigger that pile grows, the more you will have to untangle in the end. When designing an architecture you should not, of course, dive too deep and try to account for every possible and impossible case, spending a huge amount of time on it; it is important to strike a balance. But a reasonably detailed study of the documentation often proves to be a very worthwhile investment of a modest amount of time.

Nevertheless, from the very start, seeing how many models were involved, I realized I would need to mirror the backend state on the client with all relationships preserved. Once I managed to display all the necessary information on the client, with all the relationships, I had to organize a task queue.

Data is updated asynchronously, the availability of operations depends on many conditions, and cascading operations are required; under these conditions, a queue is indispensable. In a nutshell, that is the whole architecture of my solution: a store that mirrors the backend state, and a task queue.

Solution Architecture

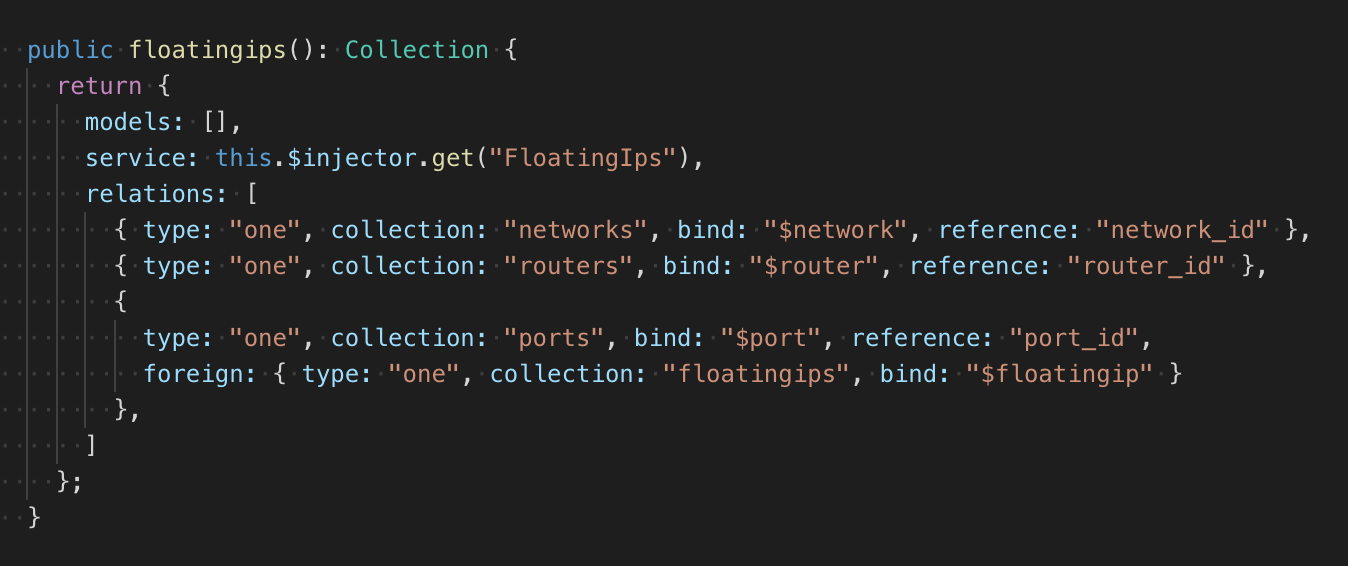

Because the number of models and relationships is open-ended, I built scalability into the structure of the store: a factory returns a declarative description of the store's collections. Each collection has a service, which is a simple model class with CRUD operations. Relationships could have been described in the model itself, as is done in RoR or the good old Backbone, for example, but that would have required changing a large amount of code. So the relationship descriptions live next to the model class.
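A minimal sketch of such a declarative description follows. It assumes simplified camelCase field names (`listenerIds`, `defaultPoolId`, `memberIds`, `healthMonitorId`) rather than the exact Octavia schema; `createCollections`, `ModelService`, and `RelationSpec` are hypothetical names, not the author's code.

```typescript
// Every stored object has an ID; other fields are model-specific.
interface Entity {
  id: string;
  [field: string]: unknown;
}

// The two relationship types: one-to-one and one-to-many.
interface RelationSpec {
  kind: "one" | "many";
  collection: string; // collection holding the related objects
  attachTo: string; // field that receives the related object(s)
  idField: string; // field with the related ID, or a list of IDs for "many"
}

// A simple model class with CRUD; a real service would also call the backend API.
class ModelService {
  private items = new Map<string, Entity>();
  create(item: Entity): Entity {
    this.items.set(item.id, item);
    return item;
  }
  read(id: string): Entity | undefined {
    return this.items.get(id);
  }
  update(item: Entity): Entity {
    this.items.set(item.id, item);
    return item;
  }
  delete(id: string): boolean {
    return this.items.delete(id);
  }
}

interface CollectionSpec {
  name: string;
  service: ModelService;
  relations: RelationSpec[]; // kept next to the model, not inside it
}

const collection = (name: string, relations: RelationSpec[] = []): CollectionSpec => ({
  name,
  service: new ModelService(),
  relations,
});

// Factory returning a declarative description of the store's collections.
function createCollections(): CollectionSpec[] {
  return [
    collection("loadbalancers", [
      { kind: "many", collection: "listeners", attachTo: "listeners", idField: "listenerIds" },
    ]),
    collection("listeners", [
      { kind: "one", collection: "pools", attachTo: "defaultPool", idField: "defaultPoolId" },
    ]),
    collection("pools", [
      { kind: "many", collection: "members", attachTo: "members", idField: "memberIds" },
      { kind: "one", collection: "healthmonitors", attachTo: "healthMonitor", idField: "healthMonitorId" },
    ]),
    collection("members"),
    collection("healthmonitors"),
  ];
}
```

With this layout, supporting one more model means adding one more `collection(...)` entry, without touching the model classes.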

In total, I ended up with two types of relationships: one-to-one and one-to-many. Inverse relationships can be described as well. Besides the type, a relationship specifies the dependency collection, the field to which the found dependency is attached, and the field from which the ID of the related object is read (for one-to-many relationships, a list of IDs is read). If the condition for a relationship is more complex than a simple reference, the factory can define a function that tests two objects, and its result determines whether they are related. It all looks a bit like reinventing the wheel, but it works without unnecessary dependencies and exactly as it should.
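Resolving a single relationship description might look like this. It is a sketch under the assumption that the store is a plain map of collections; the optional `test` predicate stands in for the custom comparison function mentioned above, and `link`, `isRelated`, and `Store` are hypothetical names.

```typescript
interface Entity {
  id: string;
  [field: string]: unknown;
}

interface RelationSpec {
  kind: "one" | "many";
  collection: string;
  attachTo: string;
  idField?: string; // related ID ("one") or list of IDs ("many") on the source object
  test?: (source: Entity, candidate: Entity) => boolean; // custom relationship check
}

// Collection name -> (ID -> object).
type Store = Map<string, Map<string, Entity>>;

function isRelated(source: Entity, candidate: Entity, spec: RelationSpec): boolean {
  if (spec.test) return spec.test(source, candidate);
  if (!spec.idField) return false;
  const ref = source[spec.idField];
  if (spec.kind === "many") return Array.isArray(ref) && ref.includes(candidate.id);
  return ref === candidate.id;
}

// Sets source[attachTo] to the related object(s), or clears it when none exist.
function link(source: Entity, spec: RelationSpec, store: Store): void {
  const items = store.get(spec.collection);
  const candidates: Entity[] = items ? Array.from(items.values()) : [];
  const related = candidates.filter((candidate) => isRelated(source, candidate, spec));
  source[spec.attachTo] = spec.kind === "many" ? related : related[0];
}
```

An inverse relationship, such as finding the pool that lists a given member, needs no ID field on the member: a `test` function that checks whether the pool's ID list contains the member's ID is enough.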

The store has a module for waiting on the addition and deletion of resources. In essence, it handles one-time events with a conditional check and a promise interface. When subscribing, you pass the event type (add or delete), a test function, and a handler. When a matching event occurs and the test passes, the handler runs and tracking stops. The event may also fire synchronously, at the moment of subscription.

This pattern made it possible to set up arbitrarily complex relationships between models automatically, and in a single place, which I called the tracker. When an object is added to the store, the tracker starts tracking its relationships. The wait module makes it possible to react to events and check whether the tracked object is related to an object in the store. If the related object is already in the store, the wait module calls the handler immediately.

This store design lets you describe any number of collections and the relationships between them. When objects are added or deleted, the store automatically sets or clears the properties holding dependent objects. The advantage of this approach is that all relationships are described explicitly and are tracked and updated by a single system; the downside is the complexity of implementation and debugging.

On the whole, such a store is fairly trivial, and I wrote it myself because integrating a ready-made solution into the existing codebase would have been much harder, and attaching a task queue to a ready-made solution harder still.
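A minimal sketch of such a wait module, assuming the store exposes its current items through a callback; `WaitModule`, `waitFor`, and `emit` are hypothetical names. Because a Promise executor runs synchronously, the handler is called during subscription when a matching object already exists; in every case it runs at most once.

```typescript
type WaitEvent = "add" | "delete";

interface Waiter<T> {
  event: WaitEvent;
  test: (item: T) => boolean;
  fire: (item: T) => void;
}

class WaitModule<T> {
  private waiters: Waiter<T>[] = [];
  private readonly current: () => Iterable<T>;

  // `current` returns the items already in the store.
  constructor(current: () => Iterable<T>) {
    this.current = current;
  }

  // One-time subscription: the handler runs on the first matching event, then tracking stops.
  waitFor<R>(event: WaitEvent, test: (item: T) => boolean, handler: (item: T) => R): Promise<R> {
    return new Promise<R>((resolve, reject) => {
      const fire = (item: T): void => {
        try {
          resolve(handler(item));
        } catch (err) {
          reject(err);
        }
      };
      // The object may already be present: fire synchronously in that case.
      if (event === "add") {
        for (const item of this.current()) {
          if (test(item)) {
            fire(item);
            return;
          }
        }
      }
      this.waiters.push({ event, test, fire });
    });
  }

  // Called by the store after an item is added or deleted.
  emit(event: WaitEvent, item: T): void {
    const waiting = this.waiters;
    this.waiters = []; // handlers may subscribe again while we iterate
    for (const waiter of waiting) {
      if (waiter.event === event && waiter.test(item)) waiter.fire(item);
      else this.waiters.push(waiter);
    }
  }
}
```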
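The observable behavior, setting and clearing dependent properties on add and delete, can be sketched with a deliberately simplified store. Note that this sketch swaps the event-driven tracker described above for a plain re-scan of the affected relationships after every change, which is easier to follow but does more work; `LinkedStore` and the field names are hypothetical.

```typescript
interface Entity {
  id: string;
  [field: string]: unknown;
}

interface Relation {
  from: string; // collection whose objects receive the link
  to: string; // collection holding the related objects
  kind: "one" | "many";
  attachTo: string;
  idField: string;
}

class LinkedStore {
  private readonly data = new Map<string, Map<string, Entity>>();
  private readonly relations: Relation[];

  constructor(relations: Relation[]) {
    this.relations = relations;
  }

  get(collection: string, id: string): Entity | undefined {
    return this.data.get(collection)?.get(id);
  }

  add(collection: string, entity: Entity): void {
    this.collection(collection).set(entity.id, entity);
    this.relink(collection);
  }

  delete(collection: string, id: string): void {
    this.collection(collection).delete(id);
    this.relink(collection);
  }

  private collection(name: string): Map<string, Entity> {
    let items = this.data.get(name);
    if (!items) {
      items = new Map<string, Entity>();
      this.data.set(name, items);
    }
    return items;
  }

  // Re-resolve every relationship that starts or ends in the changed collection,
  // setting the attached property or clearing it when the target is gone.
  private relink(changed: string): void {
    for (const rel of this.relations) {
      if (rel.from !== changed && rel.to !== changed) continue;
      const targets = this.collection(rel.to);
      for (const source of this.collection(rel.from).values()) {
        const ref = source[rel.idField];
        if (rel.kind === "many") {
          const ids: unknown[] = Array.isArray(ref) ? ref : [];
          source[rel.attachTo] = ids
            .map((id) => targets.get(String(id)))
            .filter((e): e is Entity => e !== undefined);
        } else {
          source[rel.attachTo] = typeof ref === "string" ? targets.get(ref) : undefined;
        }
      }
    }
  }
}
```

Adding a member before its pool leaves `pool` unset; once the pool arrives, both sides are linked, and deleting the pool clears the member's `pool` property again.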

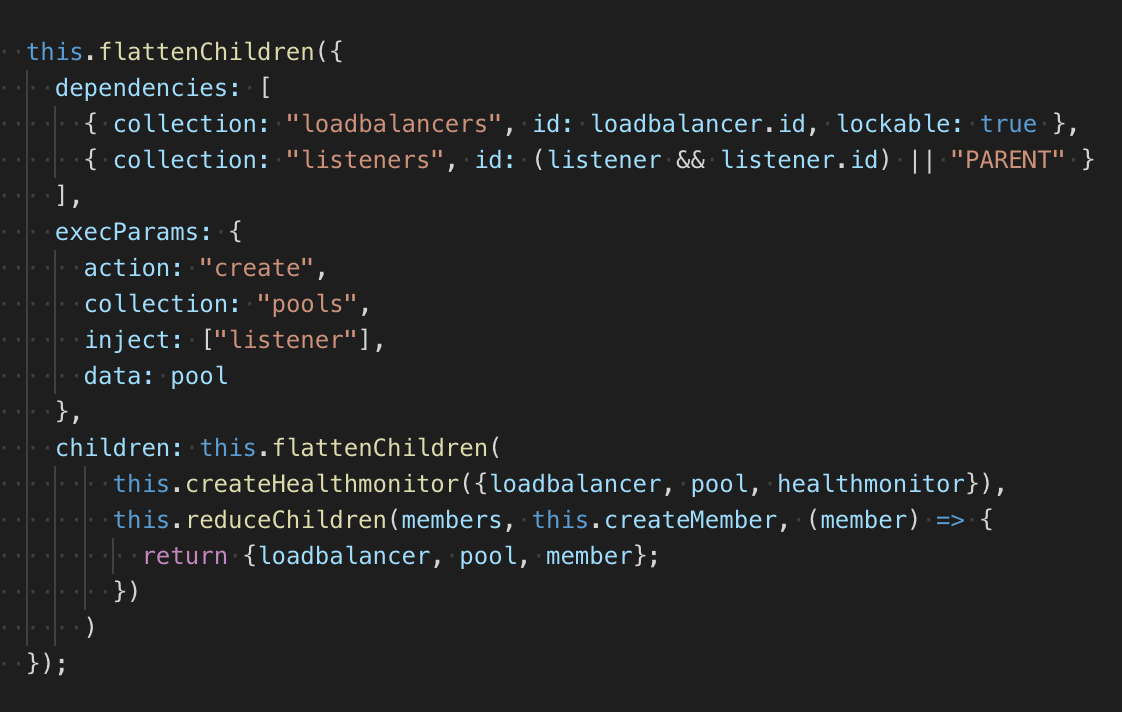

All tasks, like collections, have a declarative description and are created by a factory. A task description can include start conditions and a list of tasks to add to the queue once the current task completes.

The example above describes a task for creating a pool. The balancer and the listener are listed as dependencies, and by default they are checked for the ACTIVE status. The balancer object is locked: because tasks in the queue can be processed synchronously, locking avoids conflicts in the window after a request has been sent but before the status has changed, even though it is expected to change. If the pool is created as part of a task cascade, the ID is substituted automatically in place of PARENT.

After the pool is created, tasks to create a health monitor and all of the pool's members are added to the queue. The result is a structure that can be fully serialized to JSON, so the queue can be restored after a failure.

Based on the task descriptions, the queue independently monitors all changes in the store and checks the conditions required to start each task. As I mentioned, statuses arrive via WebSockets, which makes it very easy to generate the events the queue needs, but if necessary a timer-based data refresh mechanism can be attached without trouble (this was built into the architecture from the start, since WebSockets might not work very reliably for various reasons). After a task completes, the queue automatically notifies the store that the links in the specified objects need to be updated.
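A description along the lines of the pool-creation example might look like the sketch below. The field names, the `PARENT` substitution logic, the balancer ID `lb-1`, and the helpers `resolveParent`, `canRun`, and `lockDependencies` are assumptions for illustration, not the author's actual code.

```typescript
type Status = "ACTIVE" | "ERROR" | "PENDING_CREATE" | "PENDING_UPDATE" | "PENDING_DELETE";

// Placeholder for the ID produced by the parent task in a cascade.
const PARENT = "PARENT";

interface Dependency {
  collection: string;
  id: string; // a concrete ID or PARENT
  status?: Status; // required status; ACTIVE by default
  lock?: boolean; // mark as busy as soon as the request is sent
}

interface TaskSpec {
  type: string;
  payload: Record<string, string>;
  dependencies: Dependency[];
  next: TaskSpec[]; // queued after this task completes
}

// Hypothetical description of the pool-creation task.
const createPool: TaskSpec = {
  type: "pool.create",
  payload: { listenerId: PARENT, lbAlgorithm: "ROUND_ROBIN" },
  dependencies: [
    { collection: "loadbalancers", id: "lb-1", lock: true },
    { collection: "listeners", id: PARENT },
  ],
  next: [
    { type: "healthmonitor.create", payload: { poolId: PARENT }, dependencies: [{ collection: "pools", id: PARENT }], next: [] },
    { type: "member.create", payload: { poolId: PARENT, address: "10.0.0.1" }, dependencies: [{ collection: "pools", id: PARENT }], next: [] },
  ],
};

const key = (d: Dependency) => `${d.collection}:${d.id}`;

// Returns a copy with PARENT replaced by the ID created by the parent task.
function resolveParent(task: TaskSpec, parentId: string): TaskSpec {
  const payload: Record<string, string> = {};
  for (const [field, value] of Object.entries(task.payload)) {
    payload[field] = value === PARENT ? parentId : value;
  }
  const dependencies = task.dependencies.map((d) => (d.id === PARENT ? { ...d, id: parentId } : d));
  return { ...task, payload, dependencies };
}

// A task may start when every dependency is resolved, unlocked, and in the required status.
function canRun(
  task: TaskSpec,
  statusOf: (collection: string, id: string) => Status | undefined,
  locked: ReadonlySet<string>,
): boolean {
  return task.dependencies.every(
    (d) => d.id !== PARENT && !locked.has(key(d)) && statusOf(d.collection, d.id) === (d.status ?? "ACTIVE"),
  );
}

// Called right after the request is sent, before the backend status changes.
function lockDependencies(task: TaskSpec, locked: Set<string>): void {
  for (const d of task.dependencies) if (d.lock) locked.add(key(d));
}
```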
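Since such descriptions contain only plain data, saving and restoring the queue can be a straightforward JSON round trip. A minimal sketch, assuming a hypothetical versioned snapshot format; where the string is persisted is left out, and `saveQueue` and `restoreQueue` are illustrative names.

```typescript
interface TaskSpec {
  type: string;
  payload: Record<string, unknown>;
  dependencies: Array<{ collection: string; id: string; status?: string; lock?: boolean }>;
  next: TaskSpec[];
}

// Hypothetical versioned snapshot format.
interface QueueSnapshot {
  version: 1;
  pending: TaskSpec[];
}

// Shallow structural check, applied recursively to follow-up tasks.
function isTaskSpec(value: unknown): value is TaskSpec {
  if (typeof value !== "object" || value === null) return false;
  const task = value as Record<string, unknown>;
  return (
    typeof task.type === "string" &&
    typeof task.payload === "object" &&
    task.payload !== null &&
    Array.isArray(task.dependencies) &&
    Array.isArray(task.next) &&
    task.next.every(isTaskSpec)
  );
}

function saveQueue(pending: TaskSpec[]): string {
  const snapshot: QueueSnapshot = { version: 1, pending };
  return JSON.stringify(snapshot);
}

// Rejects snapshots that do not look like a queue instead of restoring garbage.
function restoreQueue(json: string): TaskSpec[] {
  const parsed: unknown = JSON.parse(json);
  if (typeof parsed !== "object" || parsed === null) throw new Error("invalid queue snapshot");
  const snapshot = parsed as Partial<QueueSnapshot>;
  const pending = snapshot.pending;
  if (snapshot.version !== 1 || !Array.isArray(pending) || !pending.every(isTaskSpec)) {
    throw new Error("invalid queue snapshot");
  }
  return pending;
}
```

Keeping functions out of task descriptions is what makes this round trip lossless: a closure would not survive `JSON.stringify`.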
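The queue's core loop can be sketched as follows, assuming the start condition, the request itself, and the store notification are injected as callbacks; `TaskQueue`, `check`, and `onDone` are hypothetical names. `check()` is meant to be called on every store change, whether it was triggered by a WebSocket event or by a timer-based refresh.

```typescript
class TaskQueue<T> {
  private pending: T[] = [];
  private running = new Set<T>();
  readonly failed: Array<{ task: T; error: unknown }> = [];

  private readonly canRun: (task: T) => boolean;
  private readonly execute: (task: T) => Promise<T[]>;
  private readonly onDone: (task: T) => void;

  constructor(
    canRun: (task: T) => boolean, // start conditions (statuses, locks)
    execute: (task: T) => Promise<T[]>, // sends the request, resolves with follow-up tasks
    onDone: (task: T) => void, // e.g. ask the store to update links
  ) {
    this.canRun = canRun;
    this.execute = execute;
    this.onDone = onDone;
  }

  get idle(): boolean {
    return this.pending.length === 0 && this.running.size === 0;
  }

  enqueue(...tasks: T[]): void {
    this.pending.push(...tasks);
    this.check();
  }

  // Call on every store change: starts each pending task whose conditions now hold.
  check(): void {
    const waiting = this.pending;
    this.pending = [];
    for (const task of waiting) {
      if (this.canRun(task)) this.start(task);
      else this.pending.push(task);
    }
  }

  private start(task: T): void {
    this.running.add(task);
    this.execute(task).then(
      (followUps) => {
        this.running.delete(task);
        this.onDone(task);
        this.enqueue(...followUps);
      },
      (error: unknown) => {
        this.running.delete(task);
        this.failed.push({ task, error });
      },
    );
  }
}
```

Because `execute` is called synchronously inside the loop, any lock it sets before sending the request is already visible to the `canRun` checks of the remaining tasks, which is what the locking described above relies on.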

Conclusion

The need for scalability has led to a declarative approach. The need to display models and the relationships between them has led to a single repository. The need for processing dependent objects has led to the queue.

Combining these needs may not be the easiest task to implement (but that is a separate topic). Architecturally, however, the solution is very simple: it resolves all the contradictions between the tasks of the backend and the user interface, establishes their interaction, and lays the foundation for other possible features on either side.

In the Selectel control panel, setting up balancing is simple and clear, so customers do not have to spend resources implementing a balancer themselves, while keeping the ability to manage traffic flexibly.

Try our balancer in action now and write your review in the comments.