How to waste your time and your SSD's resource for nothing? Simply and easily

“Testing cannot be diagnosed” - where would you put the comma in this sentence? We hope that after reading this material you will be able to answer that question without any trouble. Many users have run into data loss at some point, whether because of a software or hardware problem in the drive itself or because of an unusual physical impact on it, if you understand what we mean. Physical damage is not today's topic, though. We will talk about the things that do not depend on our own hands. Is it worth testing an SSD every day, week or month, or is that a waste of its resource? How do you test one at all? When you get certain results, do you interpret them correctly? And how can you quickly and simply make sure that the drive is in order, or find out that your data is at risk?

At first glance, diagnostics implies testing, if you think globally. But with drives, whether HDD or SSD, things are a little different. By testing, an ordinary user means checking the drive's characteristics and comparing the measured figures with the declared ones. Diagnostics, in turn, means studying SMART, which we will also talk about today, but a little later. The classic HDD also got into the photo, and that is no accident...

It so happens that the storage subsystem is one of the most vulnerable parts of a desktop system, since the service life of a drive is often shorter than that of the other components of a PC, all-in-one or laptop. Earlier this was down to mechanics (in a hard disk the platters rotate and the heads move), and some problems could be spotted without running any programs. Now everything is a bit more complicated: there is no crunching inside an SSD, and there cannot be. So what should SSD owners do?

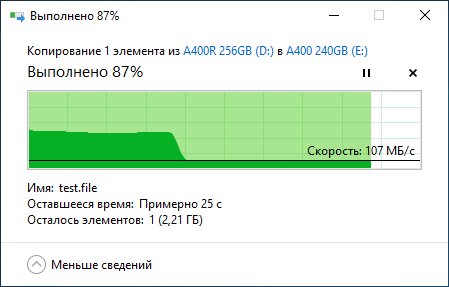

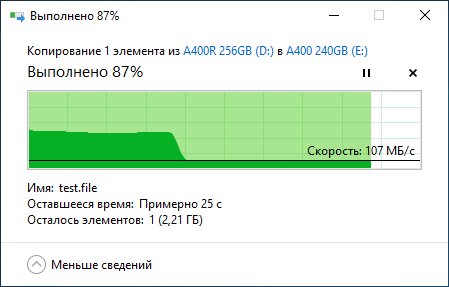

There are plenty of programs for testing SSDs. Some have become popular and are updated constantly, some were forgotten long ago, and some are so good that their developers have not touched them for years - there is simply no need. In severe cases you can run a full test according to the international Solid State Storage (SSS) Performance Test Specification (PTS), but we will not go to such extremes. Note right away that a manufacturer may declare one speed while in practice the speed turns out noticeably lower. If the drive is new and in working order, you are most likely dealing with SLC caching: the maximum speed is available only for the first few gigabytes (or tens of gigabytes if the drive capacity is more than 900 GB), and then the speed drops. This is completely normal. How do you find out the cache size and make sure the problem is not really a problem? Take a file of, say, 50 GB and copy it to the drive under test from a source that is known to be faster. The speed will start high, then drop and stay steady to the very end at around 50-150 MB/s, depending on the SSD model. If the file copies unevenly (for example, with pauses where the speed drops to 0 MB/s), then it is worth thinking about additional testing and checking the state of the SSD with the manufacturer's proprietary software.

A clear example of an SSD with SLC caching working correctly is shown in the screenshot:
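
The copy test above can also be read from a simple log of speeds. The following is only a sketch: it assumes you noted the copy speed at equal intervals (the sample lists are made up), and the "half of the peak" rule and the 1 MB/s stall threshold are our own illustrative choices, not values from any specification.

```python
from statistics import median


def analyze_copy_log(samples_mb_s, stall_threshold=1.0):
    """Split a copy-speed log into a fast (cached) phase and a steady phase.

    samples_mb_s: speeds in MB/s noted at equal intervals (hypothetical input).
    stall_threshold: speeds at or below this value count as a pause.
    """
    if len(samples_mb_s) < 4:
        raise ValueError("need at least 4 samples")

    peak = max(samples_mb_s)
    peak_index = samples_mb_s.index(peak)

    # The first non-stalled sample after the peak that falls below half of
    # the peak is treated as the point where the SLC cache ran out.
    drop_index = next(
        (
            i
            for i in range(peak_index, len(samples_mb_s))
            if stall_threshold < samples_mb_s[i] < peak / 2
        ),
        None,
    )

    # Pauses anywhere in the log are the warning sign described in the text.
    stalls = [i for i, s in enumerate(samples_mb_s) if s <= stall_threshold]

    steady = []
    if drop_index is not None:
        steady = [s for s in samples_mb_s[drop_index:] if s > stall_threshold]

    return {
        "cache_exhausted_at": drop_index,
        "steady_median_mb_s": median(steady) if steady else None,
        "stalls": stalls,
    }


healthy = [450, 460, 455, 450, 120, 110, 115, 112, 118]
suspicious = [450, 460, 0, 455, 120, 0, 0, 115, 110]
print(analyze_copy_log(healthy))
print(analyze_copy_log(suspicious))
```

An empty `stalls` list together with a steady median in the expected range matches the "normal" picture; a non-empty list is a reason to open the manufacturer's utility.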

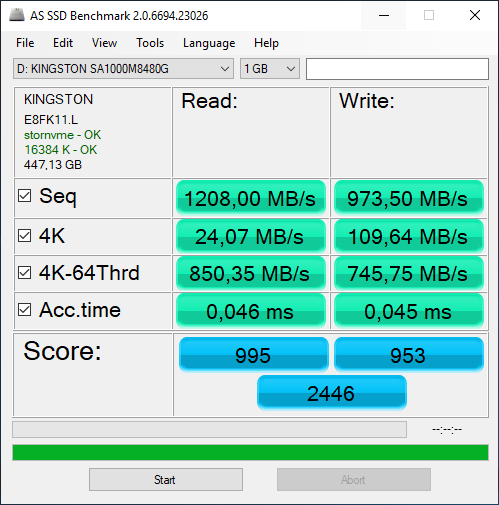

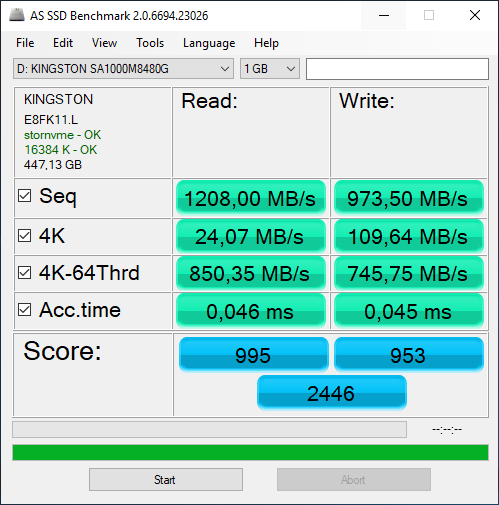

Windows 10 users can learn about problems without doing anything: as soon as the operating system sees negative changes in SMART, it warns about them and recommends backing up your data. But let's step back a little, to the so-called benchmarks. AS SSD Benchmark, CrystalDiskMark, Anvil's Storage Utilities, ATTO Disk Benchmark, TxBench and, finally, Iometer are familiar names, aren't they? No doubt each of you runs these benchmarks from time to time to check the speed of the installed SSD. If the drive is alive and well, we see, so to speak, beautiful results that please the eye and reassure us about the money spent. And what numbers do we see? Most often four things are measured: sequential read and write, 4K (4 KB) block operations, multithreaded 4K block operations, and the drive's response time. All of them are important, and each can differ greatly from drive to drive. For example, drives No. 1 and No. 2 may declare the same sequential read and write speeds, while their 4K speeds differ by an order of magnitude - it all depends on the memory, controller and firmware. So the results of different models cannot be compared directly to judge whether a drive is healthy; for that, only completely identical drives can be compared. There is also an indicator called IOPS, but it follows from the indicators listed above, so we will not discuss it separately.
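To illustrate why IOPS does not need separate treatment, here is a small sketch of the relationship between throughput, block size and IOPS. It assumes the benchmark reports MB/s as 10^6 bytes per second and that a "4K" block is 4096 bytes; check how your benchmark defines its units before relying on the numbers.

```python
def iops_from_throughput(mb_per_s, block_kib=4):
    """IOPS implied by a throughput figure at a fixed block size.

    Assumes 1 MB = 1,000,000 bytes and 1 KiB = 1024 bytes.
    """
    return mb_per_s * 1_000_000 / (block_kib * 1024)


def throughput_from_iops(iops, block_kib=4):
    """Inverse conversion: MB/s implied by an IOPS figure."""
    return iops * block_kib * 1024 / 1_000_000


# Two hypothetical drives with a tenfold difference in 4K speed.
print(iops_from_throughput(40))    # 9765.625
print(iops_from_throughput(400))   # 97656.25
```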

And, as you might guess, each program can show different figures - it all depends on the test parameters chosen by its developer. In some cases they can be changed to get different results. But if you test head-on with the defaults, the numbers can differ a lot. Here is another example where, with default settings, the sequential read and write results are noticeably different. Pay attention to the 4K block speeds as well - here all the programs show roughly the same result. In fact, this test is one of the key ones.

But, as we said, only one of the key ones. There is something else to keep in mind - the state of the drive. If you bring a drive home from the store and test it in one of the benchmarks listed above, you will almost always get the declared characteristics. But if you repeat the test some time later, when the drive is partially or almost completely full, or was full and you then deleted some data in the usual way, the results can be very different. The reason lies in how solid-state drives handle data: it is not erased immediately but only marked for deletion. So before new data (the same benchmark test files) can be written, the old data has to be erased first. We covered this in more detail in the previous article.

In fact, you need to choose the parameters yourself depending on the scenario. Home or office systems running Windows, Linux or macOS are one thing; server systems built for specific tasks are quite another. For example, servers working with databases may use NVMe drives that comfortably handle queue depths of 256 and more, and for which a depth of 32 or 64 is child's play. Using the classic benchmarks listed above in this case is, of course, a waste of time. Large companies use their own test scripts, for example based on the fio utility. Those who do not need to reproduce specific workloads can use the international SNIA methodology, which describes all the tests and suggests pseudo-scripts for them. Yes, they take some work, but you end up with fully automated testing that lets you understand the drive's behavior: identify its strengths and weaknesses, see how it behaves under prolonged load and get an idea of its performance with different block sizes.
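As a rough idea of what an fio-based script might start from, the sketch below only builds a command line for a read-only 4K random-read run at a deep queue; it does not launch anything. The device path `/dev/nvme0n1`, the job name and the 60-second runtime are placeholders, and the `libaio` engine assumes Linux. This is not the SNIA procedure or any vendor's script, only an illustration of choosing parameters yourself.

```python
def build_fio_randread_args(target, queue_depth=256, runtime_s=60):
    """Return an argv list for a read-only 4K random-read fio run.

    target: path to the device or file under test (placeholder value below).
    The run is read-only, so it does not spend the drive's write resource.
    """
    return [
        "fio",
        f"--name=randread-qd{queue_depth}",
        f"--filename={target}",
        "--readonly",            # refuse to issue any writes
        "--rw=randread",         # random reads
        "--bs=4k",               # 4K blocks
        f"--iodepth={queue_depth}",
        "--ioengine=libaio",     # asynchronous I/O on Linux (assumption)
        "--direct=1",            # bypass the page cache
        f"--runtime={runtime_s}",
        "--time_based",
    ]


args = build_fio_randread_args("/dev/nvme0n1")
print(" ".join(args))
```

Passing the list to `subprocess.run` would start the test; comparing runs at depths of 32, 64 and 256 shows whether the drive actually benefits from deeper queues.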

In any case, it must be said that each manufacturer has its own choice of test software. Most often the name, version and parameters of the chosen benchmark are given in small print somewhere at the bottom of the specification. The results are roughly comparable, of course, but differences are certainly possible. It follows, sad as it may sound, that the user needs to be careful when testing: if the result does not match the declared one, perhaps the test was run with different parameters, and a lot depends on them.

Theory is good, but let's get back to the real state of things. As we already said, it is important to find out which test parameters the manufacturer used for the particular drive you bought. Is that all? No, not quite. Much depends on the hardware platform - the test bench on which the testing is done. This information may also be given in the specification of a particular SSD, but not always. What does it affect? Say you read a few reviews before buying an SSD. In each of them the authors used the same standard benchmarks, yet the results were different. Whom should you believe? If the motherboards and software (including the operating system) were the same, the question is fair, and you will have to look for an additional independent source. But if the boards or operating systems differ, the differences in results are to be expected. A different driver, a different operating system, a different motherboard, as well as different drive temperatures during testing all affect the final results. For this reason it is almost impossible to reproduce exactly the numbers you see on manufacturers' websites or in reviews, and for the same reason there is no point in worrying about differences between your results and those of other users. For example, motherboards sometimes carry third-party SATA controllers (to increase the number of ports), and these are most often slower. The difference can reach 25-35%! In other words, to reproduce the claimed results you would have to follow every aspect of the test methodology strictly. So if the speeds you measured do not match the declared ones, you should not take the purchase back to the store the same day. Unless, of course, the situation is critical, with minimal speeds and failures when reading or writing data.

In addition, the speed of most SSDs degrades over time and then settles at a certain level known as steady-state performance. So the question is: do you need to test the SSD constantly? Or, to put it better: does it make sense to test an SSD constantly?

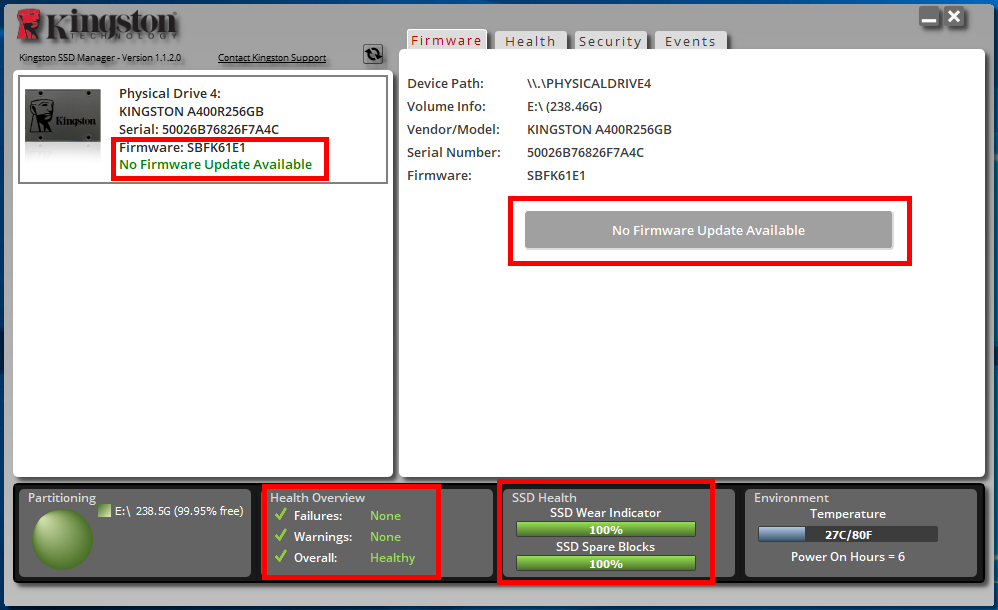

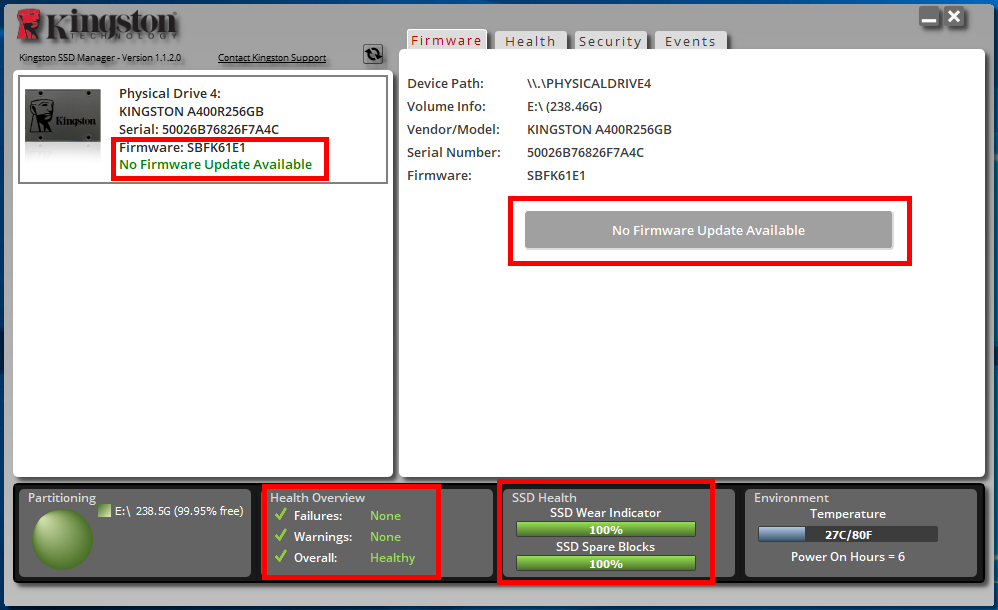

So, is it necessary to come home from work and run the benchmark once again? That is exactly what we do not recommend. Like it or not, every program of this type writes data to the drive. Some write more, some less, but they all write. Yes, compared with the SSD's resource the written volume is quite small, but it is there. And the TRIM / Deallocate functions will need time to process the deleted data. In short, there is no point in running tests regularly or out of boredom. But if in everyday work you start to notice the system or heavy software installed on the SSD slowing down, along with freezes, BSODs and errors when writing and reading files, then it is time to look for the cause. The problem may well lie with other components, but the drive is the easiest thing to check. For that you need the proprietary software from the SSD's manufacturer. For our drives it is Kingston SSD Manager. But first of all back up your important data, and only then move on to diagnostics and testing. First, look at the SSD Health area. It has two indicators in percent. The first is the so-called wear of the drive, the second is the use of the spare memory area. The lower the value, the more reason for concern. Of course, if the values drop by 1-3% per year under very intensive use, that is normal. It is another matter if the values drop unusually fast without any special load. Next to it is another area, Health Overview. It briefly reports whether errors of various kinds have been recorded and shows the overall status of the drive. We also check for new firmware; more precisely, the program does that itself. If there is one, and the drive behaves strangely (there are errors, the "health" level is falling, and other components have been ruled out), then it is safe to install it.
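
To judge whether a health indicator is dropping "unusually fast", it is enough to compare two readings taken some time apart. The sketch below uses invented dates and readings, and treats the upper end of the 1-3% per year range mentioned above as the threshold; that cutoff is only our illustration, not a rule from the utility.

```python
from datetime import date


def health_drop_per_year(first, second):
    """Yearly rate at which a health percentage is falling.

    first, second: (date, health_percent) pairs noted by hand from the
    health indicator of the manufacturer's utility.
    """
    (d1, h1), (d2, h2) = first, second
    days = (d2 - d1).days
    if days <= 0:
        raise ValueError("second reading must be later than the first")
    return (h1 - h2) / days * 365


def looks_unusual(rate_per_year, normal_max=3.0):
    """True if the drop is faster than the range treated as normal here."""
    return rate_per_year > normal_max


rate = health_drop_per_year((date(2019, 1, 1), 100), (date(2019, 7, 2), 99))
print(round(rate, 1), looks_unusual(rate))  # 2.0 False
```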

If the manufacturer of your SSD has not bothered to provide proprietary software, you can use a universal tool, for example CrystalDiskInfo. (No, Intel does have its own software; the screenshot below is just an example :) What should you look at? The health percentage (it is approximate, but it gives the general picture), the total operating time, the number of power-ons, and the volumes of data written and read. These values are not always displayed, and some attributes in the list will be shown as Vendor Specific. More on this later.

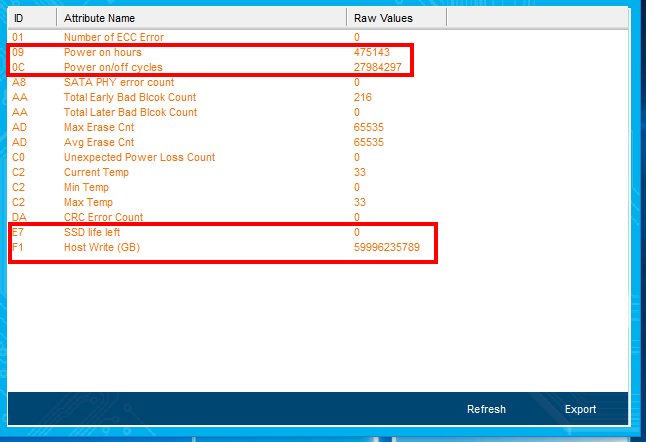

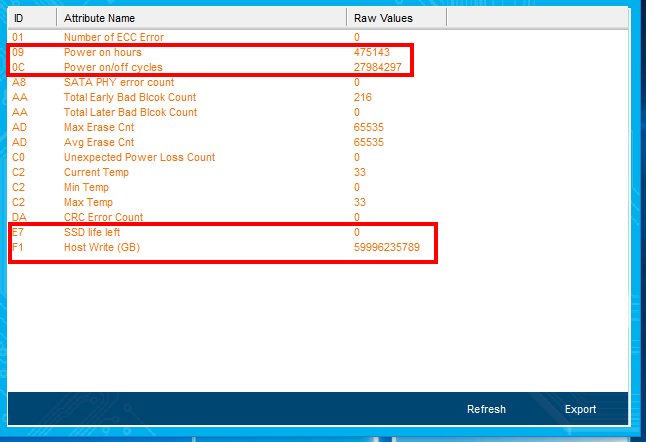

And here is a clear example of a failing drive: it worked for a relatively short time and then started working only every other time. At power-on the system did not see it, and after a reboot everything was fine. This repeated in random order. The main thing with a drive that behaves like this is to back up important data immediately, which, as it happens, we said just recently. But we will not tire of repeating it. The reported number of power-ons and the operating time are simply impossible: almost 20 thousand days of work, or about 54 years...

But that is not all - take a look at the values from the manufacturer's proprietary software! Incredible values, right? In such cases updating the firmware to the current version can help. If it does not, it is best to contact the manufacturer under warranty. And if there is new firmware, then after the update do not rush to put important data on the drive; work with it carefully and watch how stable it is. Perhaps the problem will be solved, perhaps not.

One more thing can be added. Some users, out of habit or ignorance, use the software they have long relied on to monitor classic hard drives. This is strongly discouraged, since HDDs and SSDs work very differently, and so do their controller command sets. This is especially true of NVMe SSDs. Some programs (Victoria, for example) have gained SSD support, but they are still being refined (and will they ever be finished?) when it comes to showing correct information about connected media. For example, only about a month has passed since the SMART readings for Kingston SSDs began to look at least somewhat correct, and even then not completely. All this applies not only to the program mentioned but to many others as well. That is why, to avoid misinterpreting the data, it is better to rely on the software from the drive's manufacturer.

Some manufacturers build into their software the ability to check every logical block address (LBA) for read errors. During such a test all the free space on the drive is filled with arbitrary data, which is then read back to verify its integrity. A scan like this can take more than an hour (it depends on the drive's capacity, the free space on it and its speed). The test can reveal bad cells, but there are nuances. First, to check as much memory as possible the SSD should ideally be empty. That creates another problem: you have to make backups and then copy the data back, which eats into the drive's resource. Second, the test itself uses up even more of the memory's resource, not to mention the time. And what do we learn from the results? There are two options, as you understand: either there are bad cells or there are not. In the first case we waste resource and time, and in the second we waste resource and time. Yes, that is exactly how it sounds. Bad cells will make themselves known without such testing when the time comes. So there is no point in checking every LBA.

Everyone has at some point seen a set of specific names (attributes) and their values, shown as a list in the corresponding section or right in the main program window, as in the screenshot above. But what do they mean, and how should you read them? Let's step back a bit to see what is what. In theory every manufacturer brings something of its own to a product in order to attract buyers with that uniqueness. With SMART it turned out a little differently.

The set of parameters can vary depending on the manufacturer and model of the drive, so universal programs may not know certain values and mark them as Vendor Specific. Many manufacturers publish documentation that explains the attributes of their drives (look for "SMART Attribute"). It can be found on the manufacturer's website.

That is why we recommend proprietary software, which knows all the subtleties of the drive models it supports. We also strongly recommend using the English interface to get reliable information about the state of the drive. The Russian translation is often not entirely accurate, which can lead to confusion. And the documentation mentioned above is most often provided in English as well.

Now we will go through the basic attributes using the Kingston UV500 drive as an example. If you are interested, read on; if not, press PageDown a couple of times and read the conclusion. But we hope you are still interested - the information is useful either way. The layout of the text may look unusual, but it is more convenient for everyone: there is no need for filler words, and it is easier to find the original names in the report for your own drive.

(ID 1) Read Error Rate - contains the frequency of errors during reading.

(ID 5) Reallocated Sector Count - the number of reallocated sectors. This is, in effect, the main attribute. If the SSD finds a bad sector during operation, it may consider it irreparably damaged. In that case the drive uses a sector from the spare area instead. The new sector receives the logical LBA number of the old one, and from then on any request for that number is redirected to the sector in the spare area. A single error is not a problem. But if such sectors appear regularly, the problem can be considered critical.

(ID 9) Power On Hours - the drive's operating time in hours, including idle time and all kinds of power-saving modes.

(ID 12) Power Cycle Count - the number of drive power on/off cycles, including sudden power losses (improper shutdowns).

(ID 170) Used Reserved Block Count - the number of used reserve blocks to replace damaged ones.

(ID 171) Program Fail Count - the number of failed writes to memory.

(ID 172) Erase Fail Count - the number of failed erases of memory cells.

(ID 174) Unexpected Power Off Count - the number of improper shutdowns (power failures) without flushing the cache and metadata.

(ID 175) Program Fail Count Worst Die - the number of failed writes in the worst memory chip.

(ID 176) Erase Fail Count Worst Die - the number of failed cell erases in the worst memory chip.

(ID 178) Used Reserved Block Count worst Die - the number of reserve blocks used to replace damaged ones in the worst memory chip.

(ID 180) Unused Reserved Block Count (SSD Total) - the number (or percentage, depending on the type of display) of the available backup memory blocks.

(ID 187) Reported Uncorrectable Errors - the number of uncorrected errors.

(ID 194) Temperature - temperature of the drive.

(ID 195) On-the-Fly ECC Uncorrectable Error Count - the total number of corrected and uncorrectable errors.

(ID 196) Reallocation Event Count - the number of reassignment operations.

(ID 197) Pending Sector Count - the number of sectors requiring reassignment.

(ID 199) UDMA CRC Error Count - counter of errors that occur during data transfer via SATA interface.

(ID 201) Uncorrectable Read Error Rate - the number of uncorrected errors for the current period of operation of the drive.

(ID 204) Soft ECC Correction Rate - the number of fixed errors for the current period of the drive.

(ID 231) SSD Life Left - an indication of the remaining life of the drive based on the number of write / erase cycles.

(ID 241) GB Written from Interface - the amount of data in GB written to the drive.

(ID 242) GB Read from Interface - the amount of data in GB read from the drive.

(ID 250) Total Number of NAND Read Retries - the number of repeated attempts to read from the memory.

That probably concludes the list. Other models may, of course, have more or fewer attributes, but within one manufacturer their meanings will be the same. Reading the values is quite simple for an ordinary user, and everything is logical: a growing number of errors is bad for the drive, and a shrinking number of reserve sectors is bad too. With temperature everything is clear as it is. Each of you could add something of your own, and that is to be expected, since the full list of attributes is very large and we have listed only the main ones.
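
The "errors up is bad, reserve down is bad" logic can be sketched as a comparison of two sets of readings. The attribute IDs and names below are the ones from the list above; the dictionaries of raw values are invented, and how you obtain real values (from the manufacturer's utility or a universal tool) is left out.

```python
# Counters from the list above that should ideally stay where they are.
ERROR_COUNTERS = {
    5: "Reallocated Sector Count",
    171: "Program Fail Count",
    172: "Erase Fail Count",
    187: "Reported Uncorrectable Errors",
    197: "Pending Sector Count",
    199: "UDMA CRC Error Count",
}

# Indicators from the list above that should not shrink.
RESERVE_INDICATORS = {
    180: "Unused Reserved Block Count (SSD Total)",
}


def compare_snapshots(old, new):
    """Return human-readable warnings for changes between two readings.

    old, new: dicts mapping attribute ID to its value (hypothetical data).
    """
    warnings = []
    for attr_id, name in ERROR_COUNTERS.items():
        before, after = old.get(attr_id, 0), new.get(attr_id, 0)
        if after > before:
            warnings.append(f"{name} (ID {attr_id}) grew: {before} -> {after}")
    for attr_id, name in RESERVE_INDICATORS.items():
        if attr_id in old and attr_id in new and new[attr_id] < old[attr_id]:
            warnings.append(
                f"{name} (ID {attr_id}) shrank: {old[attr_id]} -> {new[attr_id]}"
            )
    return warnings


last_month = {5: 0, 199: 0, 180: 100}
today = {5: 3, 199: 0, 180: 97}
for line in compare_snapshots(last_month, today):
    print(line)
```

A single change is not a verdict; as noted for ID 5, it is the regular appearance of new errors that should worry you.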

As practice shows, testing is only needed to confirm the claimed speed characteristics. Everything else is a waste of the drive's resource and of your time. There is no practical benefit in it, other than reassuring yourself after spending a certain amount of money on an SSD. If there are problems, they will make themselves known. If you want to keep an eye on your purchase, just open the proprietary software and look at the indicators we talked about today, which are clearly shown in the screenshots. That is the fastest and most correct way to diagnose a drive. Now a few words about the resource. Today we said that testing drives wastes their resource. On the one hand, that is true. But if you think about it, a couple of gigabytes written, or even a dozen, is not that much. Take, for example, the budget Kingston A400R with a capacity of 256 GB. Its TBW value is 80 TB (81920 GB), and the warranty period is 1 year. That means that to use up the drive's resource within this year you would have to write 224 GB of data to it every day. How would you do that on an office PC or laptop? In practice, you would not. Even if you write about 25 GB of data per day, the resource will be used up only after almost 9 years. And the A1000 series drives have a resource of 150 to 600 TB, which is much more! Given the 5-year warranty, on the flagship with a capacity of 960 GB you would need to write over 330 GB per day, which is unlikely even if you are an avid gamer who loves new games that easily take up a hundred gigabytes. What is all this for? The point is that using up a drive's resource is a rather difficult task. It is much more important to watch for errors, and that does not require the usual benchmarks. Use proprietary software, and everything will be under control. For Kingston and HyperX drives there is SSD Manager, which has all the functionality an ordinary user needs. Although it is unlikely that your Kingston or HyperX will fail...
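
The resource arithmetic above is easy to reproduce. The sketch below uses the same convention as the text (1 TB = 1024 GB, 365 days in a year) and the TBW and warranty figures quoted in it; the function names are ours.

```python
def gb_per_day_to_exhaust(tbw_tb, years):
    """Daily write volume (GB) needed to use up the TBW within `years`."""
    return tbw_tb * 1024 / (years * 365)


def years_to_exhaust(tbw_tb, gb_per_day):
    """Years until the TBW is used up at a given daily write volume."""
    return tbw_tb * 1024 / gb_per_day / 365


print(round(gb_per_day_to_exhaust(80, 1)))    # 224 (80 TB over 1 year)
print(round(years_to_exhaust(80, 25), 1))     # 9.0 (80 TB at 25 GB per day)
print(round(gb_per_day_to_exhaust(600, 5)))   # 337 (600 TB over 5 years)
```
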
That's all. Good luck with everything, and long life to your drives!

P.S. If your SSD has problems, a plantain leaf still will not help :(

For more information on Kingston products, please visit the company's website.

Testing or diagnostics? There are many programs, but the essence is one

At first glance, diagnostics implies testing, if you think globally. But in the case of drives, be it HDD or SSD, everything is a little different. By testing, an ordinary user means checking his characteristics and comparing the obtained indicators with the declared ones. And under the diagnostics is the study of SMART, which we will also talk about today, but a little later. The classic HDD also got into the photo, which, in fact, is not an accident ...

It just so happened that the data storage subsystem in desktop systems is one of the most vulnerable places, since the service life of drives is often less than that of the other components of a PC, all-in-one, or laptop. If earlier this was due to the mechanical component (plates rotate in the hard disks, heads move) and some problems could be identified without running any programs, now everything has become a bit more complicated - there is no crunch inside the SSD and cannot be. So what do SSD owners do?

There are a lot of programs for testing SSDs. Some of them have become popular and are constantly being updated, some of them have long been forgotten, and some are so good that developers have not updated them for years - there is simply no point. In severe cases, you can run a full test using the international Solid State Storage (SSS) Performance Test Specification (PTS), but we will not rush to the extreme. Immediately, we also note that some manufacturers claim the same speed of operation, but in fact the speed may be noticeably lower: if the drive is new and serviceable, then we have a solution with SLC caching, where the maximum speed is available only the first few gigabytes (or tens of gigabytes) if the disk capacity is more than 900 GB), and then the speed drops. This is a completely normal situation. How to understand cache size and make sure that the problem is not really the problem? Take a file, for example, with a capacity of 50 GB and copy it to the experimental drive from obviously faster media. The speed will be high, then it will decrease and remain uniform until the very end within 50-150 MB / s, depending on the SSD model. If the test file is not copied unevenly (for example, there are pauses with a drop in speed to 0 MB / s), then you should think about additional testing and studying the status of the SSD using proprietary software from the manufacturer.

A vivid example of the correct operation of SSDs with SLC caching technology is presented in the screenshot:

Those users who use Windows 10 can find out about the problems without further action - as soon as the operating system sees negative changes in SMART, it warns about this with a recommendation to backup data. But let's go back a little, namely to the so-called benchmarks. AS SSD Benchmark, CrystalDiskMark, Anvils Storage Utilities, ATTO Disk Benchmark, TxBench and, after all, Iometer are familiar names, aren't they? It is undeniable that each of you with any periodicity launches these very benchmarks in order to check the speed of the installed SSD. If the drive is alive and well, then we see, so to speak, beautiful results that are pleasing to the eye and ensure peace of mind for the money in the wallet. And what kind of numbers do we see? Most often, four indicators are measured - sequential read and write, 4K (KB) block operations, multithreaded 4K block operations, and drive response time. All of the above indicators are important. Yes, each of them can be completely different for different drives. For example, for drives No. 1 and No. 2, the same sequential read and write speeds are declared, but the speeds with 4K blocks can vary by an order of magnitude - it all depends on the memory, controller and firmware. Therefore, it is simply impossible to compare the results of different models. For a correct comparison, only completely identical drives are allowed. There is still such an indicator as IOPS, but it depends on the other indicators listed above, so we should not talk about this separately.

And, as you might guess, the results of each program can show different data - it all depends on the testing parameters that the developer sets. In some cases, they can be changed to get different results. But if you test "on the forehead", then the numbers can be very different. Here is another test example where, with the default settings, we see noticeably distinguishable results of sequential read and write. But you should also pay attention to the speed of working with 4K blocks - here all programs already show about the same result. Actually, this test is one of the key.

But, as we noticed, only one of the key. Yes, and something else to keep in mind - the state of the drive. If you brought a disc from a store and tested it in one of the benchmarks listed above, you will almost always get the declared characteristics. But if you repeat the test after a while, when the disk is partially or almost completely full or was full, but you deleted a certain amount of data in the most usual way, then the results can be very different. This is due precisely to the principle of operation of solid-state drives with data, when they are not deleted immediately, but only marked for deletion. In this case, before writing new data (the same test files from benchmarks), the old data is deleted first. We talked about this in more detail in the previous article..

In fact, depending on the scenarios, you need to choose the parameters yourself. It's one thing - home or office systems that use Windows / Linux / MacOS, and quite another - server systems designed to perform certain tasks. For example, in servers working with databases, NVMe drives can be installed that perfectly digest queue depths of at least 256 and for which such 32 or 64 is a baby talk. Of course, using the classic benchmarks listed above, in this case, is a waste of time. Large companies use proprietary test scripts, for example, based on the fio utility. Those who do not need to reproduce certain tasks can use the international SNIA methodology., which describes all the tests and proposed pseudo-scripts. Yes, it will take a little work on them, but you can get fully automated testing, according to which you can understand the drive’s behavior - identify its strengths and weaknesses, see how it behaves under prolonged loads and get an idea of performance when working with different data blocks .

In any case, it must be said that each manufacturer has its own test software. Most often, the name, version and parameters of the benchmark chosen by him are added in the specification in small print somewhere below. Of course, the results are roughly comparable, but differences in results can certainly be. From this it follows, no matter how sad it may sound, that the user needs to be careful when testing: if the result does not match the declared, then perhaps other test parameters are set, on which a lot depends.

Theory is good, but let's get back to the real state of things. As we have already said, it is important to find out which test parameters the manufacturer used for the particular drive you purchased. Think that's all? Not quite. Much also depends on the hardware platform, that is, the test bench on which the testing is carried out. This data may be given in the specification for a particular SSD, but not always. Why does it matter? Suppose that before buying an SSD you read a few reviews. In each of them the authors used the same standard benchmarks, yet got different results. Whom to believe? If the motherboards and software (including the operating system) were the same, the question is fair, and you will have to look for an additional independent source of information. But if the boards or operating systems differ, differences in the results are to be expected. A different driver, operating system, or motherboard, as well as a different drive temperature during testing, all affect the final results. For this reason it is almost impossible to reproduce the exact numbers you see on manufacturers' websites or in reviews, and it makes no sense to worry about differences between your results and those of other users. For example, motherboards sometimes carry third-party SATA controllers (to increase the number of ports), and these are most often slower. The difference can be as much as 25-35%! In other words, to reproduce the claimed results you would have to follow every aspect of the testing methodology strictly. So if the speeds you measured do not match the declared ones, you should not carry the purchase back to the store the same day. Unless, of course, it is a critical situation with minimal speeds and failures when reading or writing data.

In addition, the speed of most SSDs declines over time and then settles at a certain level called steady-state performance. So the question is: is it necessary to test the SSD constantly after all? Actually, that is not quite the right question. This is better: does it make sense to test SSDs constantly?

Regular testing or monitoring behavior?

So, is it necessary to come home from work and run the benchmark yet again? That is exactly what we do not recommend. Like it or not, every existing program of this type writes data to the drive. Some more, some less, but they all write. Yes, compared with the SSD's resource the written volume is quite small, but it is there. And the TRIM / Deallocate functions will need time to process the deleted data. In general, it makes no sense to run tests regularly or just for something to do. But if in everyday work you begin to notice that the system or heavy software installed on the SSD has slowed down, or you see freezes, BSODs, and errors when writing and reading files, then it is time to look for the cause. The problem may well lie with other components, but the drive is the easiest to check. This requires proprietary software from the SSD manufacturer; for our drives it is Kingston SSD Manager. But first of all back up important data, and only then proceed to diagnostics and testing.

First, look at the SSD Health area. It shows two indicators in percent. The first reflects the wear of the drive, the second the use of the spare memory area. The lower the value, the more reason for concern. Of course, if the values decrease by 1-3% per year under very intensive use, that is normal. It is another matter if the values drop unusually quickly without any special load. Next to it is another area, Health Overview. It briefly reports whether errors of various kinds have been recorded and shows the general status of the drive. We also check for new firmware; more precisely, the program does this itself. If a new version exists and the disk behaves strangely (there are errors, the "health" level is dropping, and other components have been ruled out), then it can safely be installed.
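
The difference between a normal and an unusually fast drop in the health percentage can be put into numbers. Below is a minimal sketch, assuming you have noted two health readings by hand on different dates. The function names and the 3-points-per-year limit are illustrative assumptions taken from the rule of thumb above, not a threshold from any vendor tool.

```python
def yearly_decline(first_pct, last_pct, days_between):
    """Return how many percentage points of health are lost per year,
    extrapolated from two readings taken `days_between` days apart."""
    if days_between <= 0:
        raise ValueError("days_between must be positive")
    return (first_pct - last_pct) * 365.0 / days_between


def is_unusual(first_pct, last_pct, days_between, normal_limit=3.0):
    """True if the extrapolated yearly decline exceeds `normal_limit`.
    The default of 3.0 is an assumption based on the 1-3% per year
    figure mentioned in the text."""
    return yearly_decline(first_pct, last_pct, days_between) > normal_limit


# 100% -> 98% over a full year: within the normal range.
print(is_unusual(100, 98, 365))
# 100% -> 90% over half a year: worth a closer look.
print(is_unusual(100, 90, 180))
```

Two readings a few days apart will give a very noisy estimate, so a sketch like this only makes sense for readings taken weeks or months apart.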

If the manufacturer of your SSD has not bothered to provide proprietary software, you can use a universal tool such as CrystalDiskInfo. (Intel does have its own software; the screenshot below is just an example :) What should you look at? The health status percentage (approximate, but it makes the situation clear), the total operating time, the number of power-ons, and the volumes of data written and read. These values are not always displayed, and some attributes in the list will appear as Vendor Specific. More about this later.

And here is a vivid example of a failed drive that worked for a relatively short time and then began to work only every other time. At power-on the system would not see it, and after a reboot everything was fine. This repeated at random. The main thing with such behavior is to back up important data immediately, which we said only recently, but we will not tire of repeating it. The reported number of power-ons and the operating time are completely implausible: almost 20 thousand days of work, or about 54 years ...
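
The "54 years" figure is simple arithmetic, and the same arithmetic gives a quick plausibility check for the Power On Hours value. This is a small sketch with hypothetical function names; the only assumption is that a drive cannot have been powered on for longer than you have owned it.

```python
def hours_to_years(power_on_hours):
    """Convert a Power On Hours reading to years of continuous operation."""
    return power_on_hours / 24 / 365


def is_plausible(power_on_hours, years_since_purchase):
    """A drive cannot have been running longer than it has been owned."""
    return hours_to_years(power_on_hours) <= years_since_purchase


# "Almost 20 thousand days of work" expressed in hours.
reported_hours = 20_000 * 24
print(round(hours_to_years(reported_hours), 1))  # 54.8
print(is_plausible(reported_hours, years_since_purchase=3))  # False
```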

But that is not all: take a look at the values from the manufacturer's proprietary software! Incredible values, right? In such cases, updating the firmware to the current version can help. If not, it is best to contact the manufacturer under warranty. And if there is new firmware, then after the update do not put important data on the disk right away; work with it carefully and watch whether it is stable. Perhaps the problem will be solved, but maybe not.

One more thing can be added. Some users, out of habit or ignorance, monitor SSDs with the long-familiar software they use to monitor classic hard drives. This is strongly discouraged, since HDD and SSD operating algorithms differ strikingly, as do their controller command sets. This is especially true for NVMe SSDs. Some programs (for example, Victoria) have received SSD support, but they are still being refined (and will they ever be finished?) in terms of correctly displaying information about connected media. For example, only about a month has passed since the SMART readings for Kingston SSDs began to look at least somewhat correct, and even then not completely. All this applies not only to the aforementioned program, but to many others as well. That is why, to avoid misinterpreting data, it is better to rely on the manufacturer's proprietary software.

Keeping an eye on each cell is bold. Stupid but brave

Some manufacturers build into their software the ability to check every logical block address (LBA) for read errors. During such a test, all the free space on the drive is used to write arbitrary data and read it back to verify integrity. A scan like this can take more than an hour (depending on the capacity of the drive, the free space on it, and its speed). The test makes it possible to identify bad cells, but it has its nuances. First, ideally the SSD should be empty so that as much memory as possible is checked. This creates another problem: you need to make backups and then restore them, which eats into the drive's resource. Second, even more of the memory resource is spent on the test itself, not to mention the time. And what do we learn from the results? There are two options, as you understand: either there are bad cells or there are not. In the first case we waste resource and time, and in the second we waste resource and time. Yes, that is exactly how it sounds. Bad cells will make themselves felt without such testing when the time comes. So there is no point in checking every LBA.
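
To make it clear why such a scan costs write resource, here is a rough sketch of the write-then-read-back idea at file level. It is not how any vendor utility is implemented: the function name is hypothetical, it works on an ordinary file rather than on raw LBAs, and the operating system cache may serve the read-back without touching the flash at all. The example run writes only a few megabytes to a temporary directory.

```python
import hashlib
import os
import tempfile


def write_and_verify(path, total_bytes, chunk_size=1024 * 1024):
    """Write random chunks to `path`, read them back, and compare checksums.
    Returns (bytes_written, offsets_of_mismatching_chunks)."""
    digests = []
    written = 0
    with open(path, "wb") as f:
        while written < total_bytes:
            size = min(chunk_size, total_bytes - written)
            chunk = os.urandom(size)
            # Remember where the chunk went and what it should contain.
            digests.append((written, size, hashlib.sha256(chunk).digest()))
            f.write(chunk)
            written += size
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push the data to the device

    bad_offsets = []
    with open(path, "rb") as f:
        for offset, size, digest in digests:
            data = f.read(size)
            if hashlib.sha256(data).digest() != digest:
                bad_offsets.append(offset)
    return written, bad_offsets


with tempfile.TemporaryDirectory() as tmp:
    written, bad = write_and_verify(os.path.join(tmp, "scan.bin"), 4 * 1024 * 1024)
    print(f"written: {written} bytes, mismatching chunks: {len(bad)}")
```

Note the first return value: every byte checked is a byte written, which is exactly the cost the text above warns about when the scan covers the whole drive.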

Could you give more details about SMART?

Everyone has at some point seen a set of specific names (attributes) and their values, displayed as a list in the corresponding section or directly in the main program window, as in the screenshot above. But what do they mean, and how should they be read? Let's step back a bit to understand what's what. In theory, each manufacturer adds something of its own to a product to attract potential buyers with that uniqueness. With SMART, though, things turned out a little differently.

Depending on the manufacturer and model of the drive, the set of parameters may vary, so universal programs may not recognize certain attributes and mark them as Vendor Specific. Many manufacturers publish documentation describing the attributes of their drives (SMART Attribute). It can be found on the manufacturer’s website.

That is why it is recommended to use proprietary software, which knows all the intricacies of the drive models it supports. In addition, we strongly recommend using the English interface to get reliable information about the status of the drive. The translation into Russian is often not entirely correct, which can lead to confusion. And the documentation mentioned above is most often provided in English as well.

Now let's look at the basic attributes using the Kingston UV500 drive as an example. If you are interested, read on; if not, press PageDown a couple of times and read the conclusion. But we hope you are interested, since the information is useful either way. The layout of the text may look unusual, but it is more convenient for everyone: no filler words are needed, and it will be easier to find the original names in the report for your own drive.

(ID 1) Read Error Rate - the rate of errors during reading.

(ID 5) Reallocated Sector Count - the number of reassigned sectors. This is, in fact, the main attribute. If the SSD finds a bad sector during operation, it may consider it irreparably damaged. In that case the drive uses a sector from the spare area instead. The new sector receives the logical block address (LBA) of the old one, and any later request to that address is redirected to the sector in the spare area. A single such event is not a problem. But if such sectors appear regularly, the problem can be considered critical.

(ID 9) Power On Hours - the drive’s operating time in hours, including idle time and all power-saving modes.

(ID 12) Power Cycle Count - the number of drive on/off cycles, including sudden power losses (improper shutdowns).

(ID 170) Used Reserved Block Count - the number of reserve blocks used to replace damaged ones.

(ID 171) Program Fail Count - the number of failed writes to memory.

(ID 172) Erase Fail Count - the number of failed erases of memory cells.

(ID 174) Unexpected Power Off Count - the number of improper shutdowns (power failures) without flushing the cache and metadata.

(ID 175) Program Fail Count Worst Die - the number of write failures on the worst memory chip.

(ID 176) Erase Fail Count Worst Die - the number of erase failures on the worst memory chip.

(ID 178) Used Reserved Block Count worst Die - the number of reserve blocks used to replace damaged ones on the worst memory chip.

(ID 180) Unused Reserved Block Count (SSD Total) - the number (or percentage, depending on the type of display) of available reserve memory blocks.

(ID 187) Reported Uncorrectable Errors - the number of uncorrectable errors.

(ID 194) Temperature - the temperature of the drive.

(ID 195) On-the-Fly ECC Uncorrectable Error Count - the total number of corrected and uncorrectable errors.

(ID 196) Reallocation Event Count - the number of reassignment operations.

(ID 197) Pending Sector Count - the number of sectors awaiting reassignment.

(ID 199) UDMA CRC Error Count - the number of errors that occur during data transfer over the SATA interface.

(ID 201) Uncorrectable Read Error Rate - the number of uncorrectable errors for the current period of operation of the drive.

(ID 204) Soft ECC Correction Rate - the number of corrected errors for the current period of operation of the drive.

(ID 231) SSD Life Left - an indication of the remaining life of the drive based on the number of write / erase cycles.

(ID 241) GB Written from Interface - the amount of data in GB written to the drive.

(ID 242) GB Read from Interface - the amount of data in GB read from the drive.

(ID 250) Total Number of NAND Read Retries - the number of repeated attempts to read from the drive.

Perhaps this concludes the list. Of course, other models may have more or fewer attributes, but within one manufacturer their meanings will be identical. Interpreting the values is quite simple for the average user, and everything is logical: a growing number of errors means the disk is getting worse, and a shrinking number of reserve sectors is also bad. As for temperature, everything is clear as it is. Each of you could add something of your own, which is to be expected: the full list of attributes is very large, and we have listed only the main ones.
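
The logic of "growing errors are bad, shrinking reserves are bad" can be sketched as a comparison of two reports. The attribute IDs and names below come from the list above; the report format (a plain dictionary of ID to raw value, filled in by hand from the utility's window) and the function name are assumptions made for illustration only.

```python
# Counters where any growth between two reports is a warning sign.
ERROR_COUNTERS = {
    5: "Reallocated Sector Count",
    171: "Program Fail Count",
    172: "Erase Fail Count",
    187: "Reported Uncorrectable Errors",
    196: "Reallocation Event Count",
    197: "Pending Sector Count",
}

# Values where a decrease between two reports is a warning sign.
SHRINKING_IS_BAD = {
    180: "Unused Reserved Block Count (SSD Total)",
    231: "SSD Life Left",
}


def compare_reports(previous, current):
    """Compare two {attribute_id: raw_value} reports and list the changes
    that deserve attention. Attributes missing from either report are skipped."""
    warnings = []
    for attr_id, name in ERROR_COUNTERS.items():
        if attr_id in previous and attr_id in current:
            if current[attr_id] > previous[attr_id]:
                warnings.append(f"{name} grew: {previous[attr_id]} -> {current[attr_id]}")
    for attr_id, name in SHRINKING_IS_BAD.items():
        if attr_id in previous and attr_id in current:
            if current[attr_id] < previous[attr_id]:
                warnings.append(f"{name} dropped: {previous[attr_id]} -> {current[attr_id]}")
    return warnings


last_month = {5: 0, 180: 100, 231: 99}
today = {5: 4, 180: 96, 231: 99}
for line in compare_reports(last_month, today):
    print(line)
```

A single changed value is not a verdict on its own; as noted for ID 5, it is regular growth over several reports that points to a real problem.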

Paranoia or a sober view of data security?

As practice shows, testing is only needed to confirm the claimed speed characteristics. The rest is a waste of the drive's resource and your time. There is no practical benefit in it, other than reassuring yourself after investing a certain amount of money in an SSD. If there are problems, they will make themselves felt. If you want to monitor the state of your purchase, just open the proprietary software and look at the indicators we discussed today, which are clearly shown in the screenshots. This is the fastest and most correct way to diagnose.

Let us add a few words about the resource. Today we said that testing drives wastes their resource. On the one hand, it does. But if you think about it, a couple of written gigabytes, or even a dozen, is not that much. Take, for example, the budget Kingston A400R with a capacity of 256 GB. Its TBW value is 80 TB (81920 GB), and the warranty period is 1 year. That is, to use up the drive’s resource completely within that year, about 224 GB of data must be written to it every day. How would you do that on an office PC or laptop? In practice, you can't. Even if you write about 25 GB of data per day, the resource will be used up only after almost 9 years. And in the A1000 series drives the resource ranges from 150 to 600 TB, which is much more! Given the 5-year warranty, on the flagship with a capacity of 960 GB you would need to write over 330 GB per day, which is unlikely even if you are an avid gamer who loves new games that easily take up a hundred gigabytes. So why all this? To show that killing a drive’s resource is a rather difficult task. It is much more important to watch for errors, which does not require the familiar benchmarks. Use proprietary software, and everything will be under control. For Kingston and HyperX drives there is SSD Manager, which has all the functionality the average user needs. Although it is unlikely that your Kingston or HyperX will fail ...
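
The resource arithmetic above is easy to repeat for any drive. Here is a short sketch; the function names are made up for illustration, and it assumes 1 TB = 1024 GB, as the 81920 GB figure in the text does.

```python
GB_PER_TB = 1024  # same convention as "80 TB (81920 GB)" in the text


def gb_per_day_to_exhaust(tbw_tb, years):
    """Daily write volume in GB needed to use up `tbw_tb` within `years`."""
    return tbw_tb * GB_PER_TB / (years * 365)


def years_to_exhaust(tbw_tb, gb_per_day):
    """Years needed to use up `tbw_tb` when writing `gb_per_day` every day."""
    return tbw_tb * GB_PER_TB / gb_per_day / 365


print(round(gb_per_day_to_exhaust(80, 1)))    # 224 GB per day within the 1-year warranty
print(round(years_to_exhaust(80, 25), 1))     # 9.0 years at 25 GB per day
print(round(gb_per_day_to_exhaust(600, 5)))   # 337 GB per day for 600 TB over 5 years
```

Substitute the TBW and warranty period from your own drive's specification to see how far your daily write volume is from the limit.
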
That's all. Good luck with everything, and long life to your drives!

PS In case of problems with SSD, plantain still does not help :(

For more information on Kingston products, please visit the company's website.