Building a Service Oriented Architecture on Rails + Kafka

Hello, Habr! I present to you the post, which is a text adaptation of the performance of Stella Cotton at RailsConf 2018 and a translation of the article "Building a Service-oriented Architecture with Rails and Kafka" by Stella Cotton.

Recently, the transition from monolithic architecture to microservices is clearly visible. In this guide, we will learn the basics of Kafka and how an event-driven approach can improve your Rails application. We will also talk about the problems of monitoring and scalability of services that work through an event-oriented approach.

I’m sure that you would like to have information about how your users came to your platform or what pages they visit, which buttons they click, etc. A truly popular application can generate billions of events and send a huge amount of data to analytics services, which can be a serious challenge for your application.

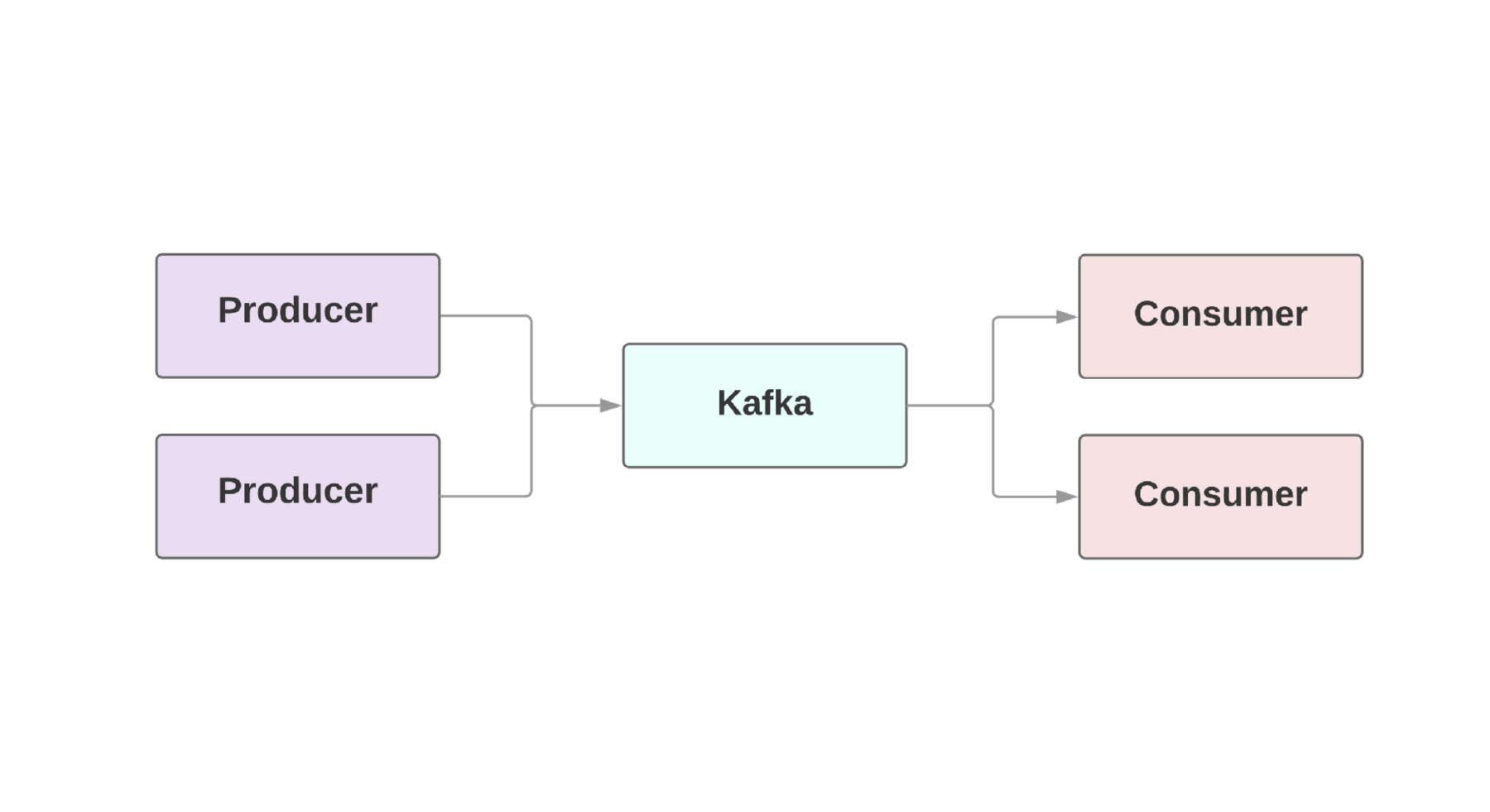

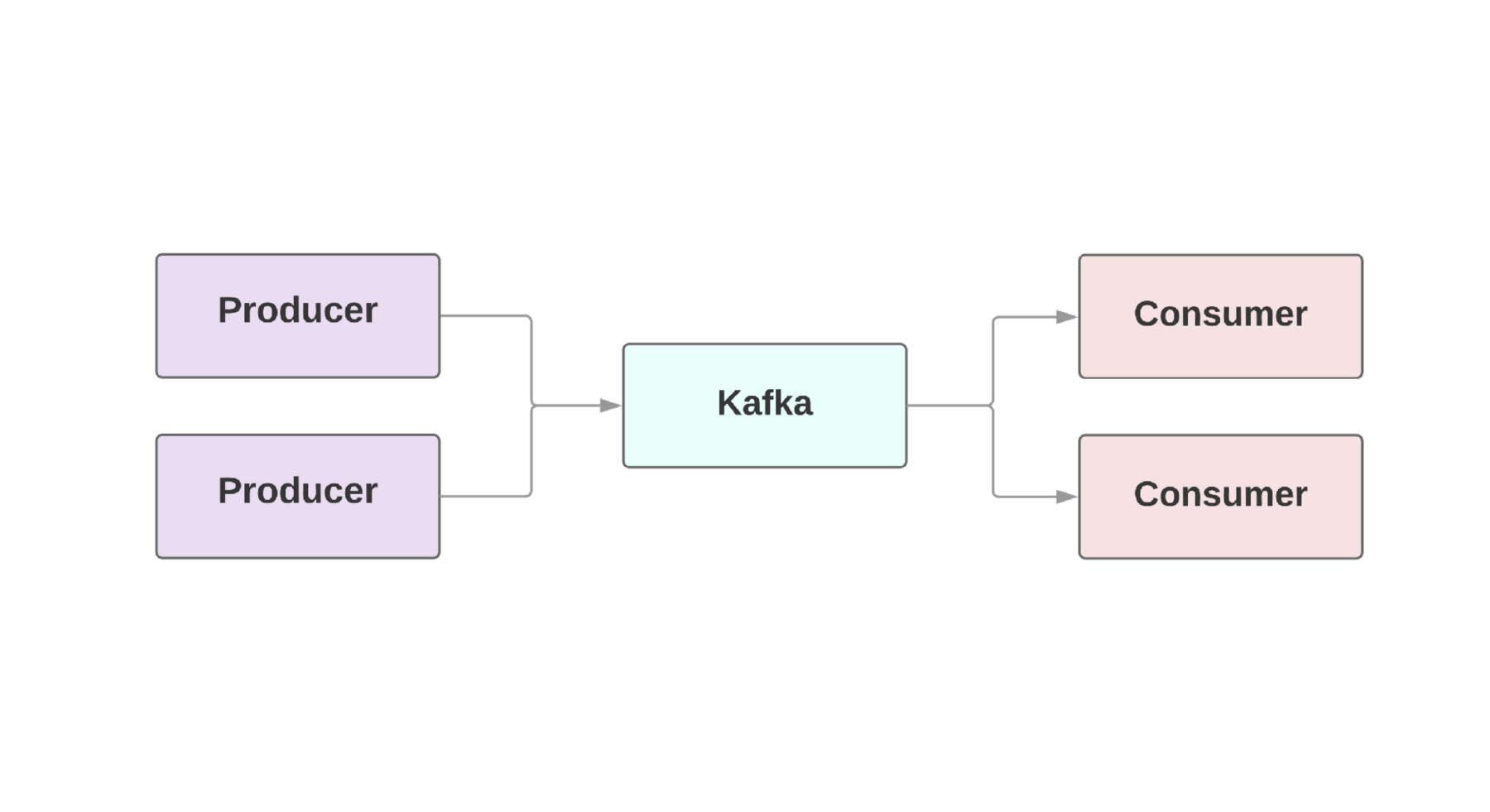

As a rule, an integral part of web applications requires the so-called real-time data flow . Kafka provides a failsafe connection between producers , event generators and consumers, those who receive these events. There may even be several producers and consumers in one application. In Kafka, every event exists for a given time, so several consumers can read the same event again and again. The Kafka cluster includes several brokers who are Kafka instances.

A key feature of Kafka is the high speed of event processing. Traditional queuing systems, such as AMQP, have an infrastructure that monitors the processed events for each consumer. When the number of consumers grows to a decent level, the system hardly begins to cope with the load, because it has to monitor an increasing number of conditions. Also, there are big issues with consistency between consumer and event processing. For example, is it worth immediately marking a message as sent as soon as it is processed by the system? And if a consumer falls on the other end without receiving a message?

Kafka also has a fail-safe architecture. The system runs as a cluster on one or more servers, which can be scaled horizontally by adding new machines. All data is written to disk and copied to several brokers. In order to understand the possibilities of scalability, it is worth taking a look at such companies as Netflix, LinkedIn, Microsoft. All of them send trillions of messages per day through their Kafka clusters!

Heroku provides a Kafka cluster add-on that can be used for any environment. For ruby applications, we recommend using the ruby-kafka gem . The minimal implementation looks something like this:

After configuring the config, you can use the gem to send messages. Thanks to the asynchronous sending of events, we can send messages from anywhere:

We’ll talk about serialization formats below, but for now we’ll use the good old JSON. The argument

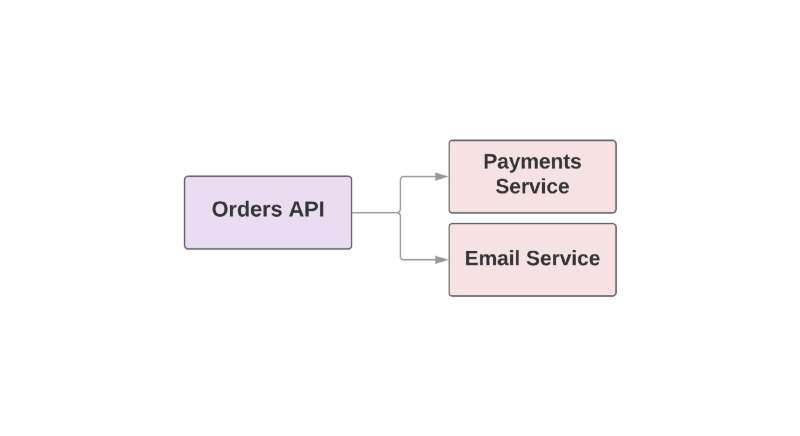

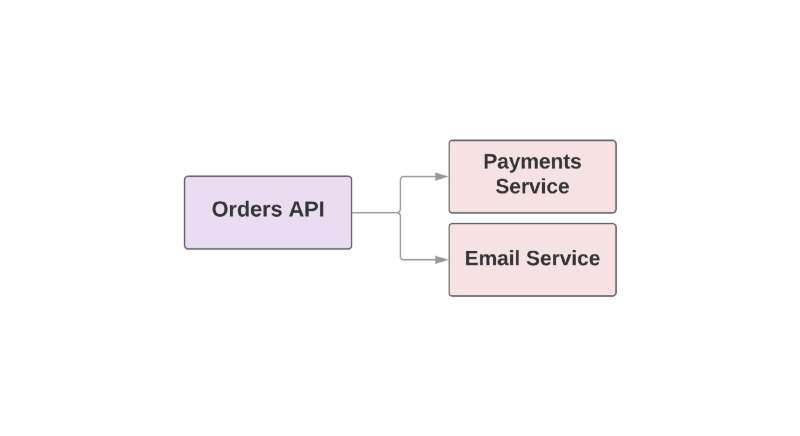

Some of the features that make Kafka a useful tool also make it a failover RPC between services. Take a look at an example of an e-commerce application:

When the user places an order, the function is called

One of the problems with this approach is that the superior service in the hierarchy is responsible for monitoring the availability of the downstream service. If the service for sending letters turned out to be a bad day, the higher service needs to know about it. And if the sending service is unavailable, then you need to repeat a certain set of actions. How can Kafka help in this situation?

For example:

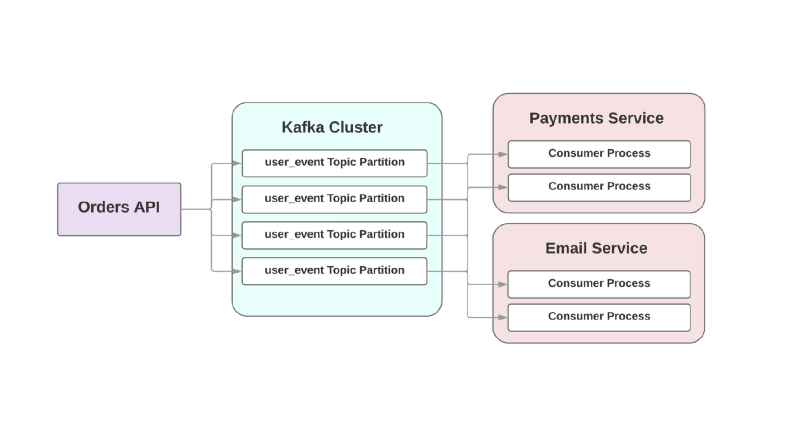

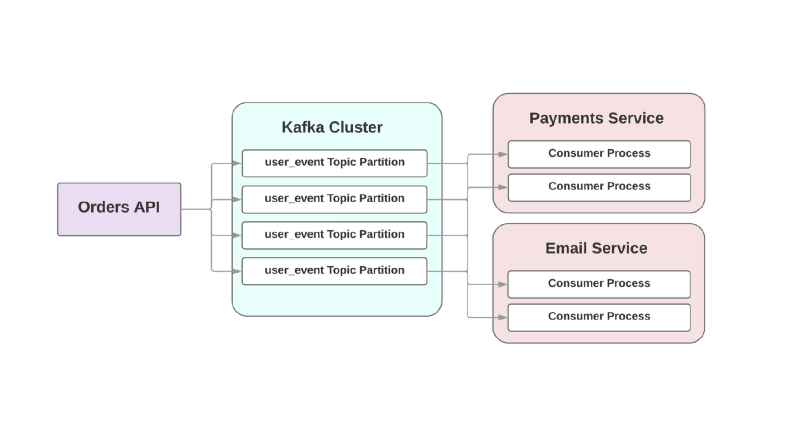

In this event-oriented approach, a superior service can record an event in Kafka that an order has been created. Because of the so-called at least onceapproach, the event will be recorded in Kafka at least once and will be available to downstream consumers for reading. If the service of sending letters lies, the event will wait on disk until the consumer rises and reads it.

Another problem with RPC-oriented architecture is in fast-growing systems: adding a new downstream service entails changes in the upstream. For example, you would like to add one more step after creating an order. In an event-driven world, you will need to add another consumer to handle a new type of event.

A post titled “ What do you mean by“ Event-Driven ”by Martin Fowler discusses confusion around event-driven applications. When developers discuss such systems, they are actually talking about a huge number of different applications. In order to give a general understanding of the nature of such systems, Fowler defined several architectural patterns.

Let's take a look at what these patterns are. If you want to know more, I advise you to read his report at GOTO Chicago 2017.

The first Fowler pattern is called Event Notification . In this scenario, the producer service notifies consumers of the event with a minimum amount of information:

If consumers need more information about the event, they make a request to producer and get more data.

The second template is called Event-Carried State Transfer . In this scenario, producer provides additional information about the event and consumer can store a copy of this data without making additional calls:

Fowler called the third template Event-Sourced and it is rather architectural. The release of the template involves not only communication between your services, but also the preservation of the presentation of the event. This ensures that even if you lose the database, you can still restore the state of the application by simply running the saved event stream. In other words, each event saves a certain state of the application at a certain moment.

The big problem with this approach is that the application code always changes, and with it the format or amount of data that producer gives can change. This makes restoring the state of the application problematic.

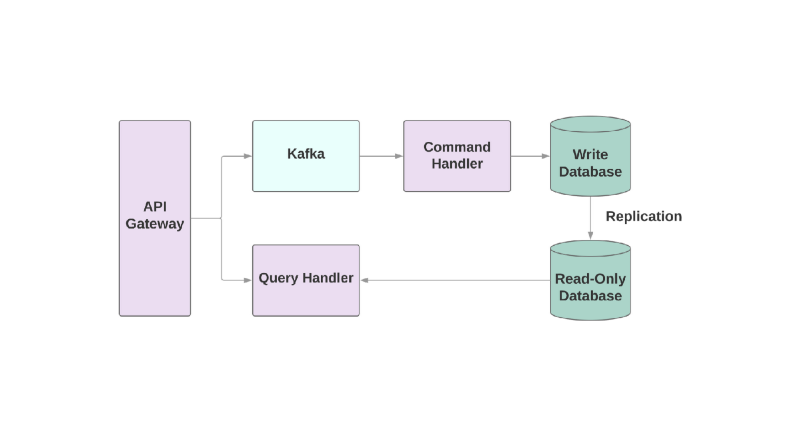

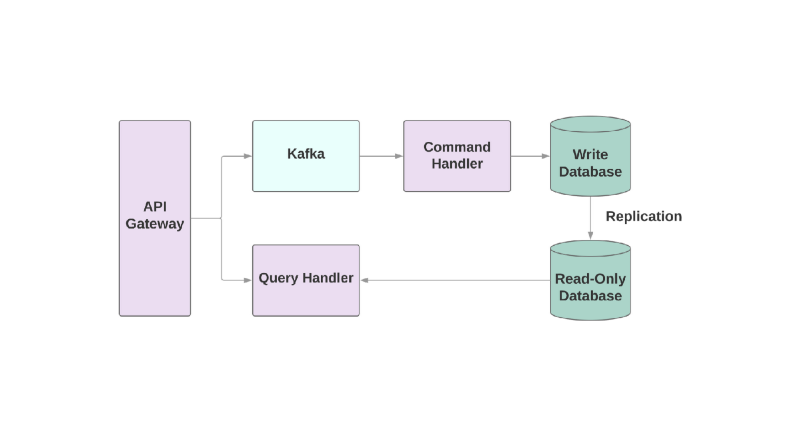

And the last template is Command Query Responsibility Segregation , or CQRS. The idea is that the actions that you apply to the object, for example: create, read, update, should be divided into different domains. This means that one service should be responsible for the creation, another for the update, etc. In object-oriented systems, everything is often stored in one service.

A service that writes to the database will read the flow of events and process commands. But any requests occur only in the read-only database. Dividing the read and write logic into two different services increases complexity, but allows you to optimize performance separately for these systems.

Let's talk about some of the problems you might encounter when integrating Kafka into your service-oriented application.

The first problem might be slow consumer ones. In an event-oriented system, your services should be able to process events instantly when they are received from a superior service. Otherwise, they will simply hang without any alerts about the problem or timeouts. The only place where you can define timeouts is a socket connection with Kafka brokers. If the service does not handle the event quickly enough, the connection can be interrupted by timeout, but restoring the service requires additional time, because creating such sockets is expensive.

If the consumer is slow, how can you increase the speed of event processing? In Kafka, you can increase the number of consumers in a group, so more events can be processed in parallel. But at least 2 consumers will be required for one service: in case one falls, damaged sections can be reassigned.

It is also very important to have metrics and alerts to monitor the speed of event processing. ruby-kafka can work with ActiveSupport alerts, it also has StatsD and Datadog modules, which are enabled by default. In addition, the gem provides a list of recommended metrics for monitoring.

Another important aspect of building systems with Kafka is the design of consumers with the ability to handle failures. Kafka is guaranteed to send an event at least once; excluded the case when the message was not sent at all. But it is important that consumers are prepared to handle recurring events. One way to do this is to always use

One of the surprises when working with Kafka may be its simple attitude to the data format. You can send anything in bytes and the data will be sent to the consumer without any verification. On the one hand, it gives flexibility and allows you not to care about the data format. On the other hand, if producer decides to change the data being sent, there is a chance that some consumer will eventually break.

Before building an event-oriented architecture, select a data format and analyze how it will help in the future to register and develop schemes.

One of the formats recommended for use, of course, is JSON. This format is human-readable and supported by all known programming languages. But there are pitfalls. For example, the size of the final data in JSON can become frighteningly large. The format is required to store key-value pairs, which is flexible enough, but the data is duplicated in each event. Changing the schema is also a difficult task because there is no built-in support for overlaying one key on another if you need to rename the field.

Kafka team advises Avro as a serialization system. Data is sent in binary form, and this is not the most human-readable format, but inside there is more reliable support for circuits. The final entity in Avro includes both schema and data. Avro also supports both simple types, such as numbers, and complex types: dates, arrays, etc. In addition, it allows you to include documentation inside the scheme, which allows you to understand the purpose of a specific field in the system and contains many other built-in tools for working with the scheme.

avro-builder is a gem created by Salsify that offers a ruby-like DSL for creating schemas. You can read more about Avro in this article .

If you are interested in how to host Kafka or how it is used in Heroku, there are several reports that may be of interest to you.

Jeff Chao's at DataEngConf SF '17 “ Beyond 50,000 Partitions: How Heroku Operates and Pushes the Limits of Kafka at Scale ”

Pavel Pravosud at Dreamforce '16 “ Dogfooding Kafka: How We Built Heroku's Real-Time Platform Event Stream ”

Enjoy!

Recently, the transition from monolithic architecture to microservices is clearly visible. In this guide, we will learn the basics of Kafka and how an event-driven approach can improve your Rails application. We will also talk about the problems of monitoring and scalability of services that work through an event-oriented approach.

What is Kafka?

I’m sure that you would like to have information about how your users came to your platform or what pages they visit, which buttons they click, etc. A truly popular application can generate billions of events and send a huge amount of data to analytics services, which can be a serious challenge for your application.

As a rule, an integral part of web applications requires the so-called real-time data flow . Kafka provides a failsafe connection between producers , event generators and consumers, those who receive these events. There may even be several producers and consumers in one application. In Kafka, every event exists for a given time, so several consumers can read the same event again and again. The Kafka cluster includes several brokers who are Kafka instances.

A key feature of Kafka is the high speed of event processing. Traditional queuing systems, such as AMQP, have an infrastructure that monitors the processed events for each consumer. When the number of consumers grows to a decent level, the system hardly begins to cope with the load, because it has to monitor an increasing number of conditions. Also, there are big issues with consistency between consumer and event processing. For example, is it worth immediately marking a message as sent as soon as it is processed by the system? And if a consumer falls on the other end without receiving a message?

Kafka also has a fail-safe architecture. The system runs as a cluster on one or more servers, which can be scaled horizontally by adding new machines. All data is written to disk and copied to several brokers. In order to understand the possibilities of scalability, it is worth taking a look at such companies as Netflix, LinkedIn, Microsoft. All of them send trillions of messages per day through their Kafka clusters!

Setting up Kafka in Rails

Heroku provides a Kafka cluster add-on that can be used for any environment. For ruby applications, we recommend using the ruby-kafka gem . The minimal implementation looks something like this:

# config/initializers/kafka_producer.rb

require "kafka"

# Configure the Kafka client with the broker hosts and the Rails

# logger.

$kafka = Kafka.new(["kafka1:9092", "kafka2:9092"], logger: Rails.logger)

# Set up an asynchronous producer that delivers its buffered messages

# every ten seconds:

$kafka_producer = $kafka.async_producer(

delivery_interval: 10,

)

# Make sure to shut down the producer when exiting.

at_exit { $kafka_producer.shutdown }After configuring the config, you can use the gem to send messages. Thanks to the asynchronous sending of events, we can send messages from anywhere:

class OrdersController < ApplicationController

def create

@comment = Order.create!(params)

$kafka_producer.produce(order.to_json, topic: "user_event", partition_key: user.id)

end

endWe’ll talk about serialization formats below, but for now we’ll use the good old JSON. The argument

topicrefers to the log to which Kafka writes this event. Topics are spread out in different sections, which allow you to split the data for a particular topic into different brokers for better scalability and reliability. And it’s really a good idea to have two or more sections for each topic, because if one of the sections falls, your events will be recorded and processed anyway. Kafka ensures that events are delivered in the order of the queue within the section, but not within the whole topic. If the order of events is important, then sending partition_key ensures that all events of a particular type are stored on the same partition.Kafka for your services

Some of the features that make Kafka a useful tool also make it a failover RPC between services. Take a look at an example of an e-commerce application:

def create_order

create_order_record

charge_credit_card # call to Payments Service

send_confirmation_email # call to Email Service

endWhen the user places an order, the function is called

create_order. This creates an order in the system, deducts money from the card and sends an email with confirmation. As you can see, the last two steps are taken out in separate services.

One of the problems with this approach is that the superior service in the hierarchy is responsible for monitoring the availability of the downstream service. If the service for sending letters turned out to be a bad day, the higher service needs to know about it. And if the sending service is unavailable, then you need to repeat a certain set of actions. How can Kafka help in this situation?

For example:

In this event-oriented approach, a superior service can record an event in Kafka that an order has been created. Because of the so-called at least onceapproach, the event will be recorded in Kafka at least once and will be available to downstream consumers for reading. If the service of sending letters lies, the event will wait on disk until the consumer rises and reads it.

Another problem with RPC-oriented architecture is in fast-growing systems: adding a new downstream service entails changes in the upstream. For example, you would like to add one more step after creating an order. In an event-driven world, you will need to add another consumer to handle a new type of event.

Integrating Events into Service Oriented Architecture

A post titled “ What do you mean by“ Event-Driven ”by Martin Fowler discusses confusion around event-driven applications. When developers discuss such systems, they are actually talking about a huge number of different applications. In order to give a general understanding of the nature of such systems, Fowler defined several architectural patterns.

Let's take a look at what these patterns are. If you want to know more, I advise you to read his report at GOTO Chicago 2017.

Event notification

The first Fowler pattern is called Event Notification . In this scenario, the producer service notifies consumers of the event with a minimum amount of information:

{

"event": "order_created",

"published_at": "2016-03-15T16:35:04Z"

}If consumers need more information about the event, they make a request to producer and get more data.

Event-carried state transfer

The second template is called Event-Carried State Transfer . In this scenario, producer provides additional information about the event and consumer can store a copy of this data without making additional calls:

{

"event": "order_created",

"order": {

"order_id": 98765,

"size": "medium",

"color": "blue"

},

"published_at": "2016-03-15T16:35:04Z"

}Event-sourced

Fowler called the third template Event-Sourced and it is rather architectural. The release of the template involves not only communication between your services, but also the preservation of the presentation of the event. This ensures that even if you lose the database, you can still restore the state of the application by simply running the saved event stream. In other words, each event saves a certain state of the application at a certain moment.

The big problem with this approach is that the application code always changes, and with it the format or amount of data that producer gives can change. This makes restoring the state of the application problematic.

Command Query Responsibility Segregation

And the last template is Command Query Responsibility Segregation , or CQRS. The idea is that the actions that you apply to the object, for example: create, read, update, should be divided into different domains. This means that one service should be responsible for the creation, another for the update, etc. In object-oriented systems, everything is often stored in one service.

A service that writes to the database will read the flow of events and process commands. But any requests occur only in the read-only database. Dividing the read and write logic into two different services increases complexity, but allows you to optimize performance separately for these systems.

Problems

Let's talk about some of the problems you might encounter when integrating Kafka into your service-oriented application.

The first problem might be slow consumer ones. In an event-oriented system, your services should be able to process events instantly when they are received from a superior service. Otherwise, they will simply hang without any alerts about the problem or timeouts. The only place where you can define timeouts is a socket connection with Kafka brokers. If the service does not handle the event quickly enough, the connection can be interrupted by timeout, but restoring the service requires additional time, because creating such sockets is expensive.

If the consumer is slow, how can you increase the speed of event processing? In Kafka, you can increase the number of consumers in a group, so more events can be processed in parallel. But at least 2 consumers will be required for one service: in case one falls, damaged sections can be reassigned.

It is also very important to have metrics and alerts to monitor the speed of event processing. ruby-kafka can work with ActiveSupport alerts, it also has StatsD and Datadog modules, which are enabled by default. In addition, the gem provides a list of recommended metrics for monitoring.

Another important aspect of building systems with Kafka is the design of consumers with the ability to handle failures. Kafka is guaranteed to send an event at least once; excluded the case when the message was not sent at all. But it is important that consumers are prepared to handle recurring events. One way to do this is to always use

UPSERTto add new records to the database. If the record already exists with the same attributes, the call will essentially be inactive. In addition, you can add a unique identifier to each event and simply skip events that have already been processed previously.Data formats

One of the surprises when working with Kafka may be its simple attitude to the data format. You can send anything in bytes and the data will be sent to the consumer without any verification. On the one hand, it gives flexibility and allows you not to care about the data format. On the other hand, if producer decides to change the data being sent, there is a chance that some consumer will eventually break.

Before building an event-oriented architecture, select a data format and analyze how it will help in the future to register and develop schemes.

One of the formats recommended for use, of course, is JSON. This format is human-readable and supported by all known programming languages. But there are pitfalls. For example, the size of the final data in JSON can become frighteningly large. The format is required to store key-value pairs, which is flexible enough, but the data is duplicated in each event. Changing the schema is also a difficult task because there is no built-in support for overlaying one key on another if you need to rename the field.

Kafka team advises Avro as a serialization system. Data is sent in binary form, and this is not the most human-readable format, but inside there is more reliable support for circuits. The final entity in Avro includes both schema and data. Avro also supports both simple types, such as numbers, and complex types: dates, arrays, etc. In addition, it allows you to include documentation inside the scheme, which allows you to understand the purpose of a specific field in the system and contains many other built-in tools for working with the scheme.

avro-builder is a gem created by Salsify that offers a ruby-like DSL for creating schemas. You can read more about Avro in this article .

Additional Information

If you are interested in how to host Kafka or how it is used in Heroku, there are several reports that may be of interest to you.

Jeff Chao's at DataEngConf SF '17 “ Beyond 50,000 Partitions: How Heroku Operates and Pushes the Limits of Kafka at Scale ”

Pavel Pravosud at Dreamforce '16 “ Dogfooding Kafka: How We Built Heroku's Real-Time Platform Event Stream ”

Enjoy!