Dockerization: what every .NET developer needs to know

Now that DevOps has won, developers simply need to know what Docker containers are, why they are needed, and how to work with them. It makes work a lot easier. Even those who develop for .NET Core in Visual Studio 2017 can feel the power of containerization. Paul Skiba, head of server-side development, talked about the Docker tools and settings available in VS at the Panda-Meetup C# .NET meetup.

What should a developer know? "How to program," you will answer, and you will be right. But where the list of required knowledge used to end there, in the DevOps age it is only the beginning. When we write code, we need to know the structure of the network: what interacts with what. We have to support several programming languages at once, and different pieces of code in a project can be written in anything.

We need to know how to roll back the software if an error is found. We have to manage configurations for the different environments used in the company: at least several dev environments, plus test and production. Oh, and you also need to understand scripting on different servers and operating systems, because not everything can be done in code; sometimes you have to write scripts.

We need to know the security requirements, which keep getting stricter and eat up a lot of the developer's time. Do not forget about supporting and maintaining related software: Git, Jenkins, and so on. As a result, the developer may simply have no time left for pure development.

What to do? There is a way out: Docker containers and a system for managing them. Deploy this whole complex colossus once, and you will, as in the good old days, write only code again. Everything else will be controlled by other people or by the system itself.

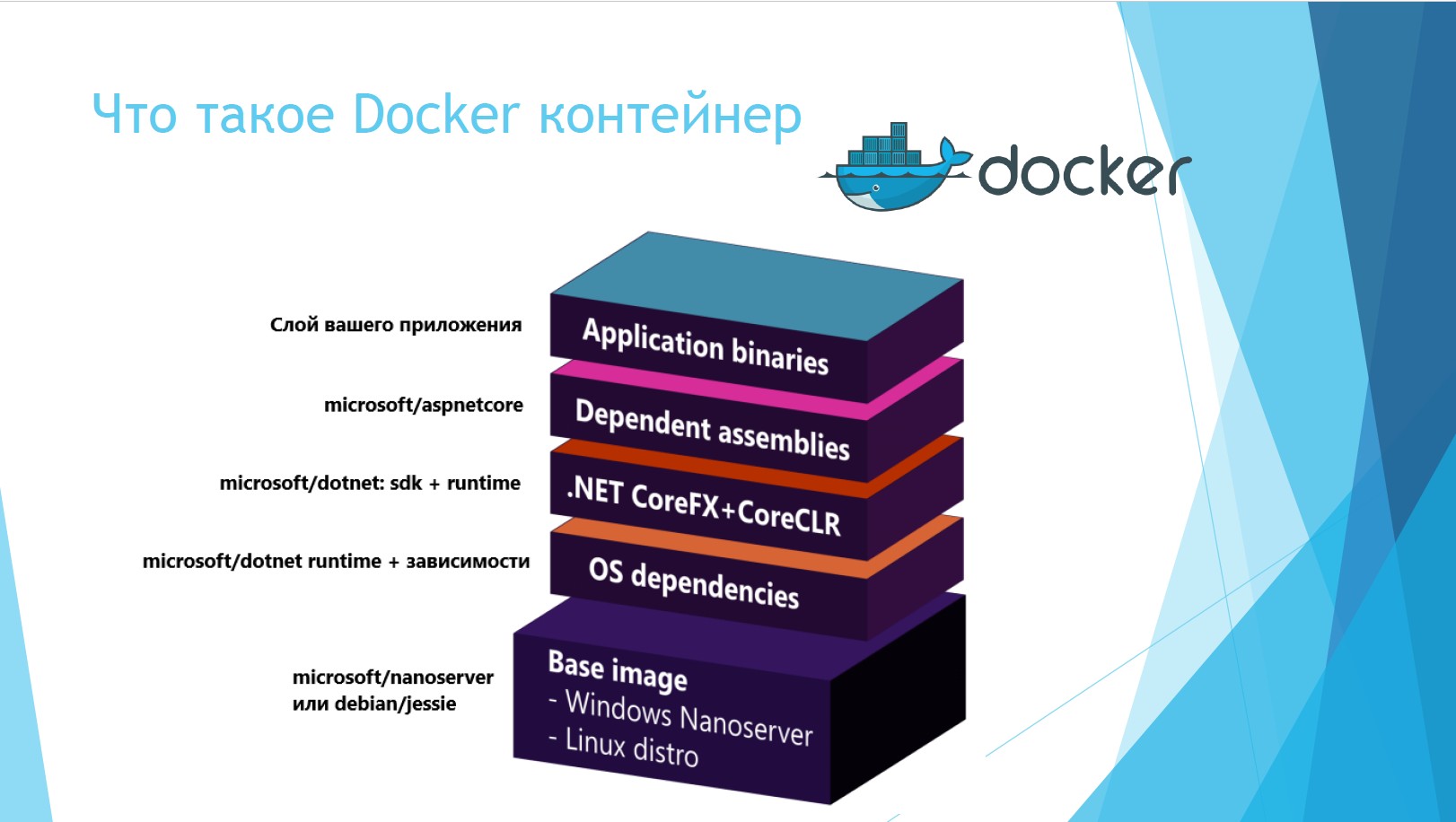

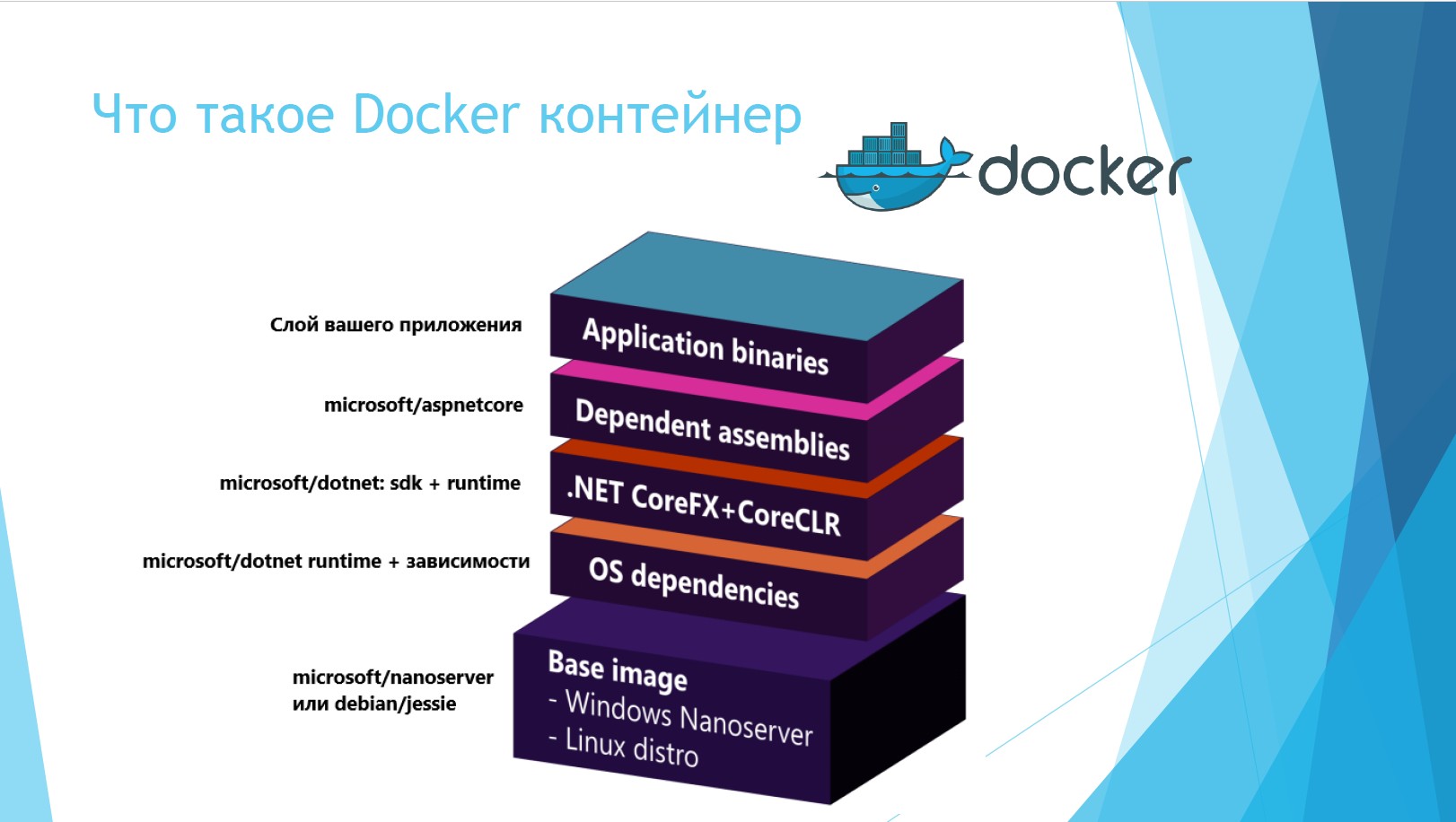

Understanding Containers

What is a Docker container? It is a structure made up of several layers. The top layer is the binary layer of your application. The second and third layers are now merged in .NET Core; the container already ships with the SDK. The next layer depends on the operating system on which the container is deployed. And the bottommost layer is the operating system itself.

At the bottom level, Windows Nano Server is deployed. It is a heavily stripped-down cut of Windows Server that can do nothing except keep a deployed service running. But it is 12 times smaller.

If we compare physical servers, virtual servers, and containers, the benefits of the latter are obvious.

When everything ran on physical servers, we faced a bunch of problems. Libraries were not isolated, so applications could interfere with each other. For example, one application ran on .NET 1.1 and another on .NET 2.0. Most often this ended in tragedy. Later, virtual servers appeared and solved the isolation problem: there were no more shared libraries. True, this turned out to be very expensive in resources and labor-intensive: you had to keep track of how many virtual machines were running on each host, on Hyper-V, and on each piece of hardware.

Containers were designed as an inexpensive and convenient solution that depends minimally on the OS. Let's see how they differ. Virtual servers are arranged in a system roughly like this.

The bottom layer is the host server, which can be either physical or virtual. The next layer is any operating system that supports virtualization, with a hypervisor above it. On top are the virtual servers, each of which splits into a guest OS and applications. That is, a guest OS is deployed for every virtual server on top of the host OS, and that is a waste of resources.

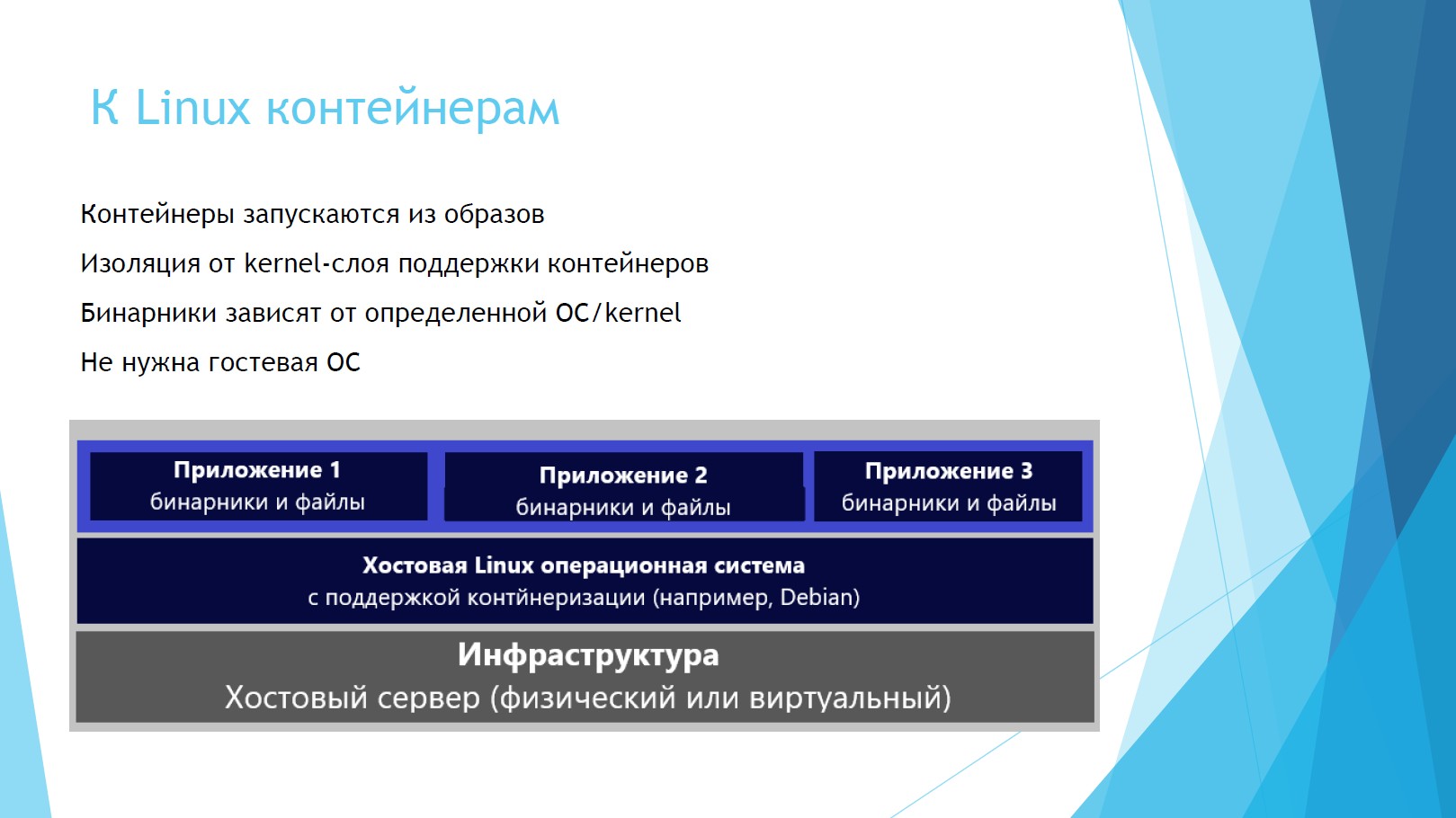

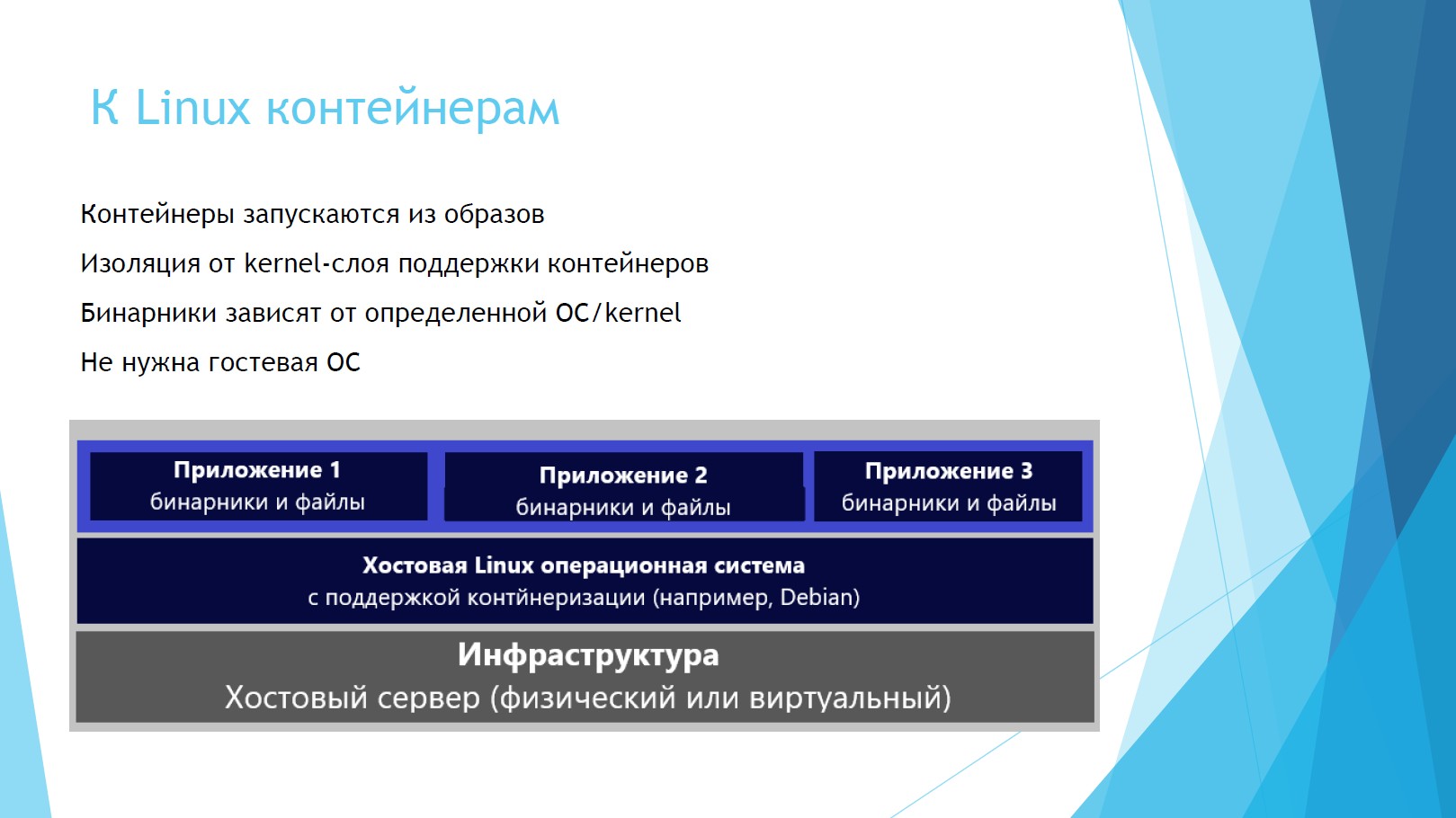

Let's see how Linux containers are arranged in the system.

As you can see, the application binaries sit directly above the host server and OS. No guest OS is needed, so resources are freed up and no guest OS licenses are required.

Windows containers are slightly different from Linux ones.

The base layers are the same: infrastructure and host OS (but now Windows). Beyond that, containers can either work directly with the OS or be deployed on top of the hypervisor. In the first case processes and namespaces are isolated, but the containers share the same kernel, which is not great from a security standpoint. If you run containers through Hyper-V, everything is isolated.

Exploring Docker under VS

Let's get to Docker itself. Suppose you have Visual Studio and are installing the Docker client for Windows for the first time. Docker will deploy the Docker daemon (the server), a REST interface for accessing it, and the client itself, the Docker command line. It lets us manage everything related to containers: networks, images, containers, layers.

The slide shows the simplest commands: pull a Docker image, run it, build, commit, and push it back.
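
As a rough illustration of the commands on the slide, the sketch below assembles the five basic invocations as argument lists and prints them without executing anything. The image and container names are hypothetical placeholders, not taken from the talk.

```python
import shlex


def basic_workflow(base_image, my_image, container):
    """Return the five basic Docker CLI calls as argv lists (nothing is executed)."""
    return [
        ["docker", "pull", base_image],                            # download an image from a registry
        ["docker", "run", "-d", "--name", container, base_image],  # start a container in the background
        ["docker", "build", "-t", my_image, "."],                  # build an image from ./Dockerfile
        ["docker", "commit", container, my_image],                 # save the container's state as an image
        ["docker", "push", my_image],                              # upload the image to a registry
    ]


# Hypothetical names, for illustration only.
for argv in basic_workflow("microsoft/aspnetcore:1.1", "example/webapi:dev", "webapi-test"):
    print(shlex.join(argv))
```

Keeping the commands as lists rather than strings makes it straightforward to hand them to `subprocess.run` later if you do want to execute them.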

Docker integrates very naturally with Visual Studio. The screenshot shows the menu from Visual Studio 2017. Docker Compose files get IntelliSense support, Dockerfiles are supported, and all the artifacts also work from the command line.

Interestingly, we can use Docker to debug containers in real time. And if your containers are linked to each other, they are all debugged together at once, so you do not need to run several environments.

How are containers built? The main element is the Dockerfile, which contains the instructions for building an image. Each project gets its own Dockerfile. It specifies where the base image comes from, which arguments are passed, the name of the working directory with the files, and the ports.

The source argument has two parts. The second part is the path in the project where the build output is placed; it is the default value. In my opinion, this is not a very good choice. That folder often collects a lot of garbage and has to be cleaned periodically, and we can wipe out the build while cleaning it. So you can change it if you want: it is set by the DOCKER_BUILD_SOURCE environment variable, which can also be set by hand.
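
The "two parts" can be read as the shell-style form `${name:-default}`: the name of the build argument, followed by the fallback path. The sketch below imitates that substitution in Python. It assumes the Dockerfile line looks like `COPY ${source:-obj/Docker/publish} .`, which is an assumption about the generated file rather than something shown in the talk.

```python
import re

# Matches the shell-style form ${NAME:-default}.
_DEFAULT_FORM = re.compile(r"\$\{(\w+):-([^}]*)\}")


def expand_default(line, args):
    """Replace ${NAME:-default} with args[NAME], or with the default if NAME is unset or empty."""
    def pick(match):
        name, default = match.group(1), match.group(2)
        return args.get(name) or default
    return _DEFAULT_FORM.sub(pick, line)


copy_line = "COPY ${source:-obj/Docker/publish} ."  # assumed form of the generated line
print(expand_default(copy_line, {}))                              # falls back to the default path
print(expand_default(copy_line, {"source": "obj/Docker/empty"}))  # an explicit value wins
```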

The ENTRYPOINT instruction lets you configure the container to run as an executable. For .NET Core this line is needed so that, once the container has started successfully, it reports "Your application is running" on the command line.
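
To make the pieces of a Dockerfile concrete, here is a minimal sketch that renders one as text: base image, build argument, working directory, port, and entry point. The image name, assembly name, and `/app` directory are hypothetical; the layout follows what Visual Studio 2017 templates commonly produce, which is an assumption and not the speaker's exact file.

```python
def render_dockerfile(base_image, port, assembly, default_source="obj/Docker/publish"):
    """Render a small Dockerfile for a published .NET Core app as text."""
    lines = [
        f"FROM {base_image}",                     # where the base image comes from
        "ARG source",                             # build argument passed in from outside
        "WORKDIR /app",                           # working directory inside the image
        f"EXPOSE {port}",                         # port the application listens on
        f"COPY ${{source:-{default_source}}} .",  # copy the build output, with a default path
        f'ENTRYPOINT ["dotnet", "{assembly}"]',   # run the container as an executable
    ]
    return "\n".join(lines) + "\n"


# Hypothetical image and assembly names.
print(render_dockerfile("microsoft/aspnetcore:1.1", 80, "WebApi.dll"), end="")
```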

Now about debugging containers. Everything looks just like ordinary .NET; you hardly notice the difference. Most often I run .NET Core self-hosted under dotnet.exe. It uses the CLRDBG debugger, the NuGet package cache, and the source code.

ASP.NET 4.5+ is hosted in IIS or IIS Express; it uses the Microsoft Visual Studio Debugger and the site root sources in IIS.

There are two configurations for debugging: Debug and Release. The Debug image is tagged dev, and the Release image is tagged latest. For Debug, the source argument is best set to obj/Docker/empty, to avoid confusion, and for Release to obj/Docker/publish. Here you can use all the same binaries, views, the wwwroot folder, and all existing dependencies.
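
The mapping between configuration, image tag, and source path can be written down as a small lookup. This is only a sketch of the convention described above; the service name `webapi` and the shape of the returned dictionary are illustrative choices.

```python
# Settings per build configuration, as described in the text above.
CONFIGURATIONS = {
    "Debug":   {"tag": "dev",    "source": "obj/Docker/empty"},
    "Release": {"tag": "latest", "source": "obj/Docker/publish"},
}


def image_settings(service, configuration):
    """Return the image name and the source build argument for a configuration."""
    settings = CONFIGURATIONS[configuration]
    return {
        "image": f"{service}:{settings['tag']}",
        "build_args": {"source": settings["source"]},
    }


print(image_settings("webapi", "Debug"))
print(image_settings("webapi", "Release"))
```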

Learning Docker Compose

Let's move on to the most interesting part: the Docker Compose orchestration tool. Consider an example: you have a business service that involves 5-6 containers, and you need to pin down how they should be built and in what order. This is where Docker Compose comes in handy: it handles building, launching, and scaling the containers. It is simple to operate; everything is built with a single command.

Docker Compose uses YAML files that store the configuration: how exactly the containers should be built. They describe the settings for the images themselves, builds, services, volumes, networks, and environments. The syntax is identical for publishing to clusters. That is, you write such a file once, and if you later need to scale the business service out to a cluster, you will not have to add anything.

Consider the structure of a YAML file. image is the Docker image. An image is a container without the application layer; it is immutable.

build specifies how to build, where to build, and where to deploy.

depends_on lists the services that this service depends on.

environment is where we set the environment variables.

ports is the port mapping: the port on which your container will be reachable.
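
The five keys above can be seen together in one service definition. The sketch below models a compose file as a Python dictionary and prints it as JSON, which YAML parsers can also read; real compose files are normally written by hand in YAML. Every value here (paths, ports, the environment variable, the `example/sql` image) is a hypothetical placeholder.

```python
import json

# One service using the five keys described above. All values are hypothetical.
compose = {
    "version": "3",
    "services": {
        "webapi": {
            "image": "webapi:dev",                                         # image name and tag
            "build": {"context": "./WebApi", "dockerfile": "Dockerfile"},  # how and where to build
            "depends_on": ["sql.data"],                                    # start after this service
            "environment": {"ASPNETCORE_ENVIRONMENT": "Development"},      # environment variables
            "ports": ["8080:80"],                                          # host port 8080 -> container port 80
        },
        "sql.data": {"image": "example/sql:latest"},
    },
}

# JSON is a subset of YAML, so this output is also a valid YAML document.
print(json.dumps(compose, indent=2))
```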

Consider an example. We have just an API without a service; in effect there are 3 containers: SQL.data on Linux, the application itself, which depends on webapi, and webapi, which depends on SQL.data.

The order in which the components are listed in the file does not matter. If everything is described correctly, Compose works out the right order automatically from the dependencies in the project. This one file is enough to build all the containers at once; the output is a finished release.
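
Why the listing order does not matter can be illustrated with a topological sort over the dependencies. This is a stand-in for the idea, not a description of how Compose is implemented; the service names follow the example above.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def start_order(depends_on):
    """Order services so that each one comes after everything it depends on."""
    return list(TopologicalSorter(depends_on).static_order())


# Services listed deliberately "out of order".
depends_on = {
    "app": ["webapi"],
    "webapi": ["sql.data"],
    "sql.data": [],
}
print(start_order(depends_on))  # ['sql.data', 'webapi', 'app']
```

A circular dependency would make `static_order()` raise `graphlib.CycleError`, which matches the intuition that such a composition cannot be started in any order.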

There is also a kind of "container of containers": a special file, docker-compose.ci.build.yml, which describes the entire composition. From the Visual Studio command line you can run this special container, and it can perform the whole build on a build server, for example in Jenkins.

Let's look inside the file. The example contains the working directory and where it comes from. It restores the project from Git, publishes the solution in the Release configuration, and stores the result. That is the entire build command; nothing else needs to be written. Define it once, and from then on launch publishing with one button.
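
A build service of this kind can be sketched as follows. The sketch assumes the container mounts already checked-out sources and runs `dotnet restore` followed by `dotnet publish`; fetching from Git is left out, and the solution name, image name, and `/src` mount point are hypothetical.

```python
def ci_build_service(solution, build_image, output="./obj/Docker/publish"):
    """Describe a build container that restores and publishes a solution in Release."""
    command = (
        f"dotnet restore {solution} && "
        f"dotnet publish {solution} -c Release -o {output}"
    )
    return {
        "image": build_image,     # image that contains the .NET Core SDK
        "volumes": [".:/src"],    # mount the project directory into the container
        "working_dir": "/src",    # run the command from the mounted sources
        "command": f'/bin/bash -c "{command}"',
    }


# Hypothetical solution and image names.
service = ci_build_service("./WebApi.sln", "microsoft/aspnetcore-build:1.1")
print(service["command"])
```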

What else is worth noting? Docker Compose builds images for each environment, with a separate file for each configuration. For each configuration, Visual Studio has a file with the settings needed for that environment.
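
On the command line, per-configuration files are layered over the base file with repeated `-f` options. The sketch below only builds the argument list. The file naming pattern `docker-compose.vs.<configuration>.yml` is an assumption about what Visual Studio 2017 generates, so adjust it to the files in your own project.

```python
def compose_command(configuration, action=("up", "-d")):
    """Build a docker-compose call that layers a per-configuration file over the base file."""
    files = ["docker-compose.yml", f"docker-compose.vs.{configuration.lower()}.yml"]
    argv = ["docker-compose"]
    for name in files:
        argv += ["-f", name]  # later files override settings from earlier ones
    return argv + list(action)


print(" ".join(compose_command("Debug")))
print(" ".join(compose_command("Release", action=("build",))))
```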

Directly from VS you can remotely start debugging the entire composition.

Cluster Orchestrators

Finally, let's touch on cluster orchestrators. We should not have to think about how containers live on afterwards or which people or systems manage them. For this there are the 4 most popular container management systems: Google Kubernetes, Mesos DC/OS, Docker Swarm, and Azure Service Fabric. They let you manage clustering and the composition of containers.

These systems can cope with a huge layer of microservices, providing them with everything they need. The developer only has to configure this layer once.

The full recording of the talk at Panda Meetup is available below.

For those who want to dive deeper into the topic, I advise you to study the following materials:

http://dot.net

http://docs.docker.com

http://hub.docker.com/microsoft

http://docs.microsoft.com

http://visualstudio.com

And finally, an important piece of practical advice: the hardest part is remembering where everything is.

Documenting your Docker containers will fall on your shoulders. Without documentation you will forget which container holds what, what is connected to what, and what works with what. The more services there are, the larger the overall web of connections.
