Fear and Loathing DevSecOps

We had 2 code analyzers, 4 tools for dynamic testing, our own homegrown tools and 250 scripts. Not that all of this was necessary in the current process, but once you start implementing DevSecOps, you have to go all the way.

Source. Character authors: Justin Roiland and Dan Harmon.

What is SecDevOps? What about DevSecOps? What are the differences? Application Security: what is it about? Why doesn't the classic approach work anymore? Yuri Shabalin from Swordfish Security knows the answers to all these questions. Yuri will answer them in detail and analyze the problems of moving from the classic Application Security model to the DevSecOps process: how to integrate secure development into the DevOps process without breaking anything, how to get through the main stages of security testing, which tools can be used, how they differ, and how to configure them correctly to avoid pitfalls.

About the speaker: Yuri Shabalin is Chief Security Architect at Swordfish Security. He is responsible for implementing SSDL and for integrating application analysis tools into a single development and testing ecosystem. He has 7 years of experience in information security. He has worked at Alfa Bank, Sberbank and Positive Technologies, which develops software and provides services. He has spoken at the international conferences ZeroNights, PHDays, RISSPA and OWASP.

Application Security is the area of security responsible for the security of applications. It does not cover infrastructure or network security. It covers what we write and what developers work on: the flaws and vulnerabilities of the application itself.

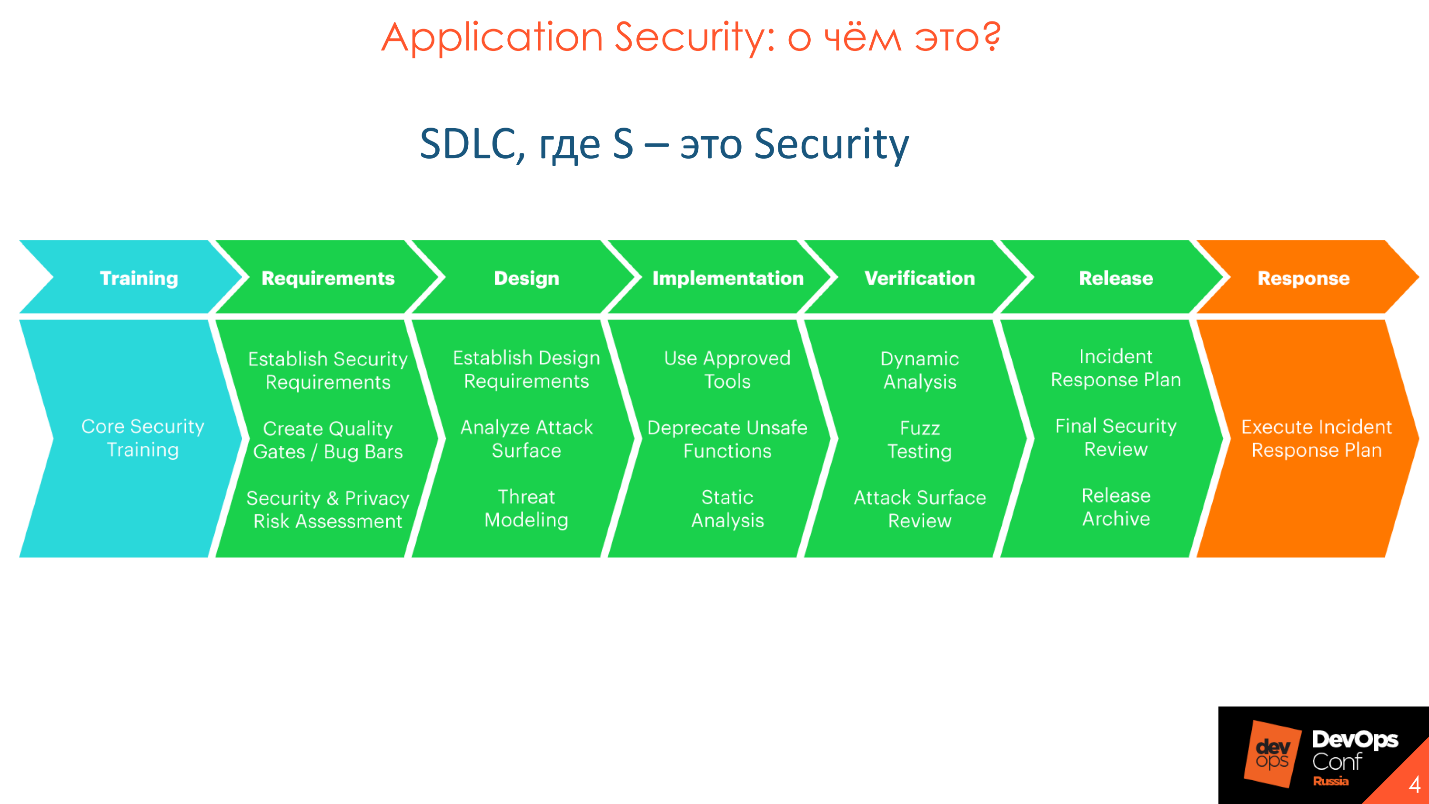

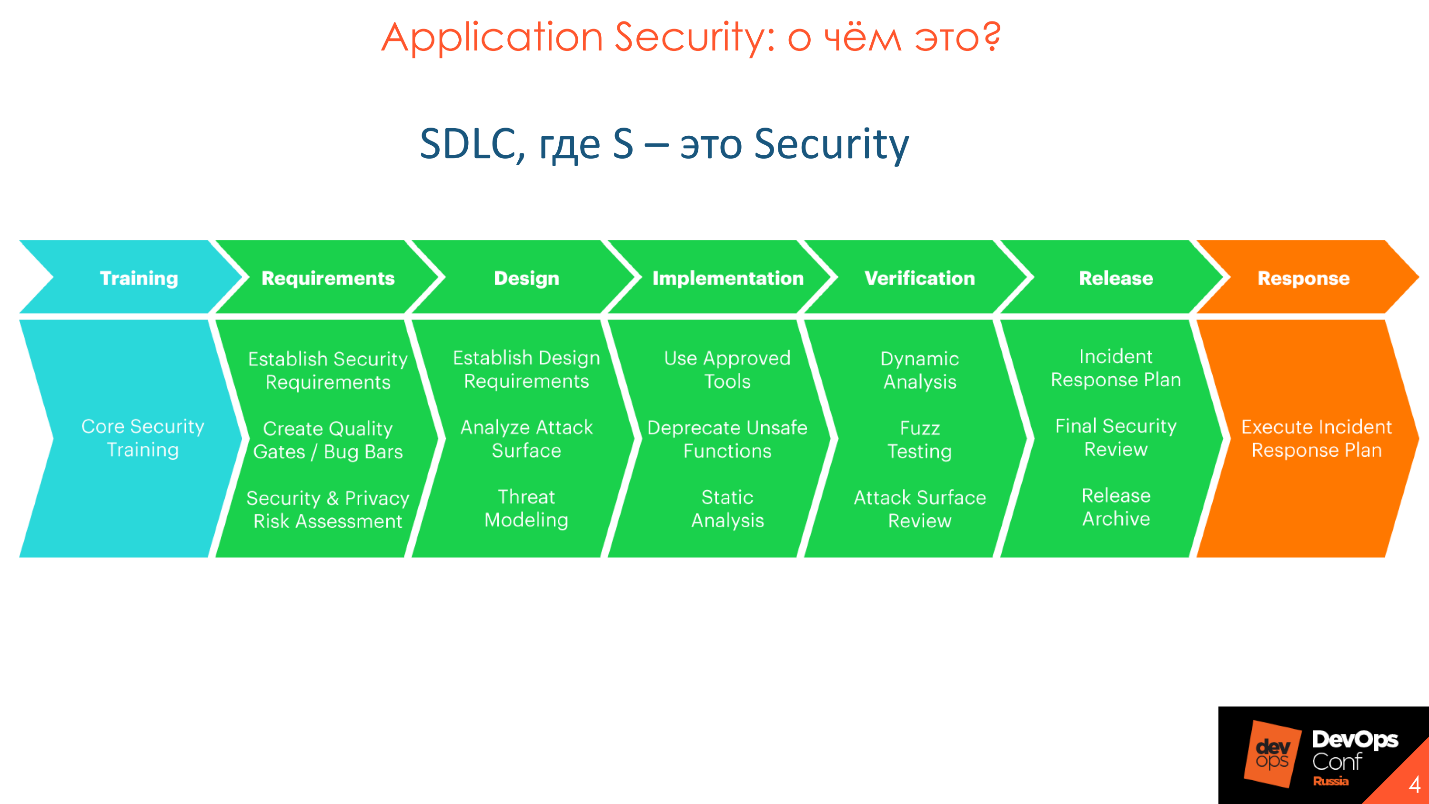

The SDL, or SDLC (Security Development Lifecycle), approach was developed by Microsoft. The diagram shows the canonical SDLC model, whose main goal is to involve security at every stage of development, from requirements to release into production. Microsoft realized that there were too many bugs in production, that their number kept growing and that something had to be done, so they proposed this approach, which became canonical.

Application Security and SSDL are aimed not at detecting vulnerabilities, as is commonly believed, but at preventing them. Over time, the canonical Microsoft approach was improved, developed and worked out in much greater detail.

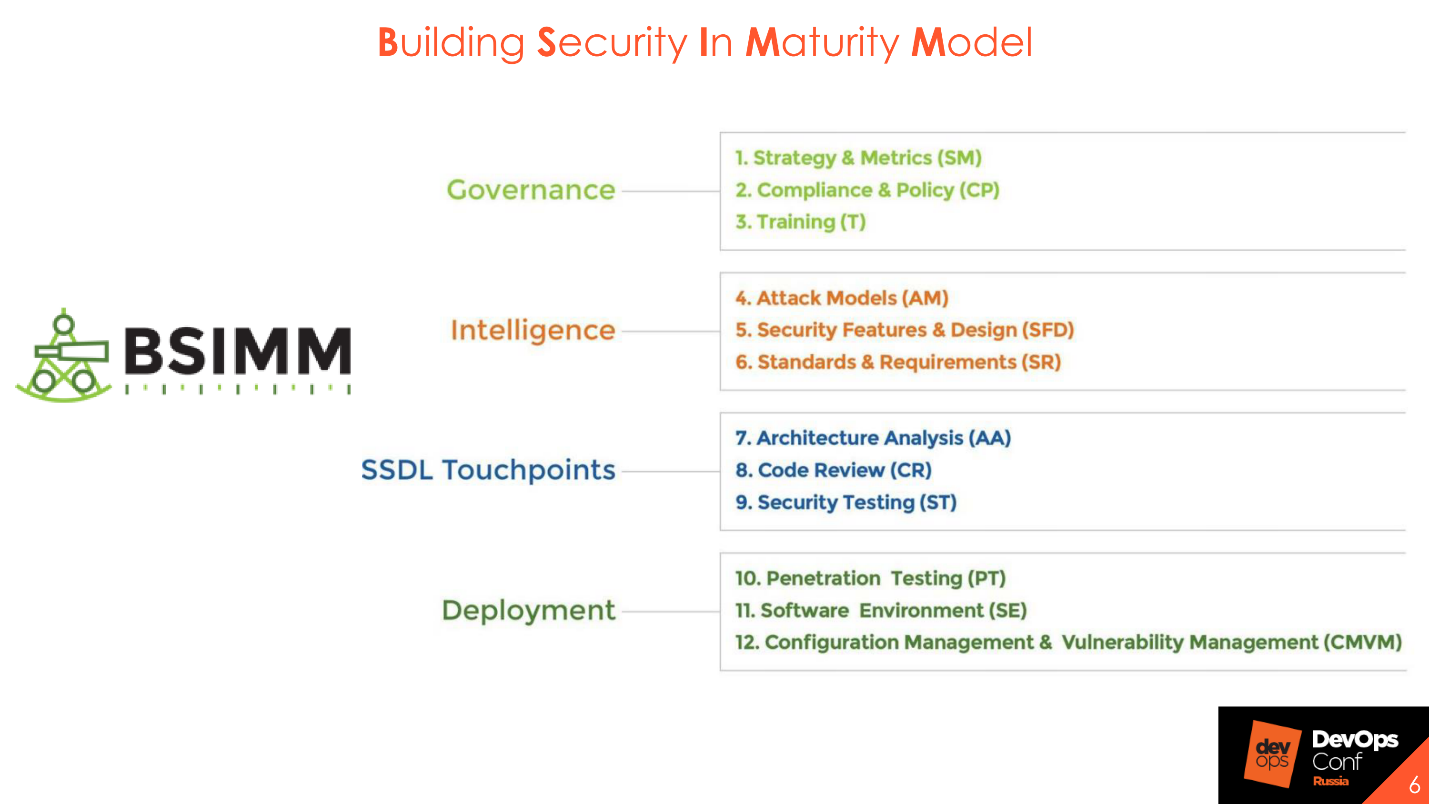

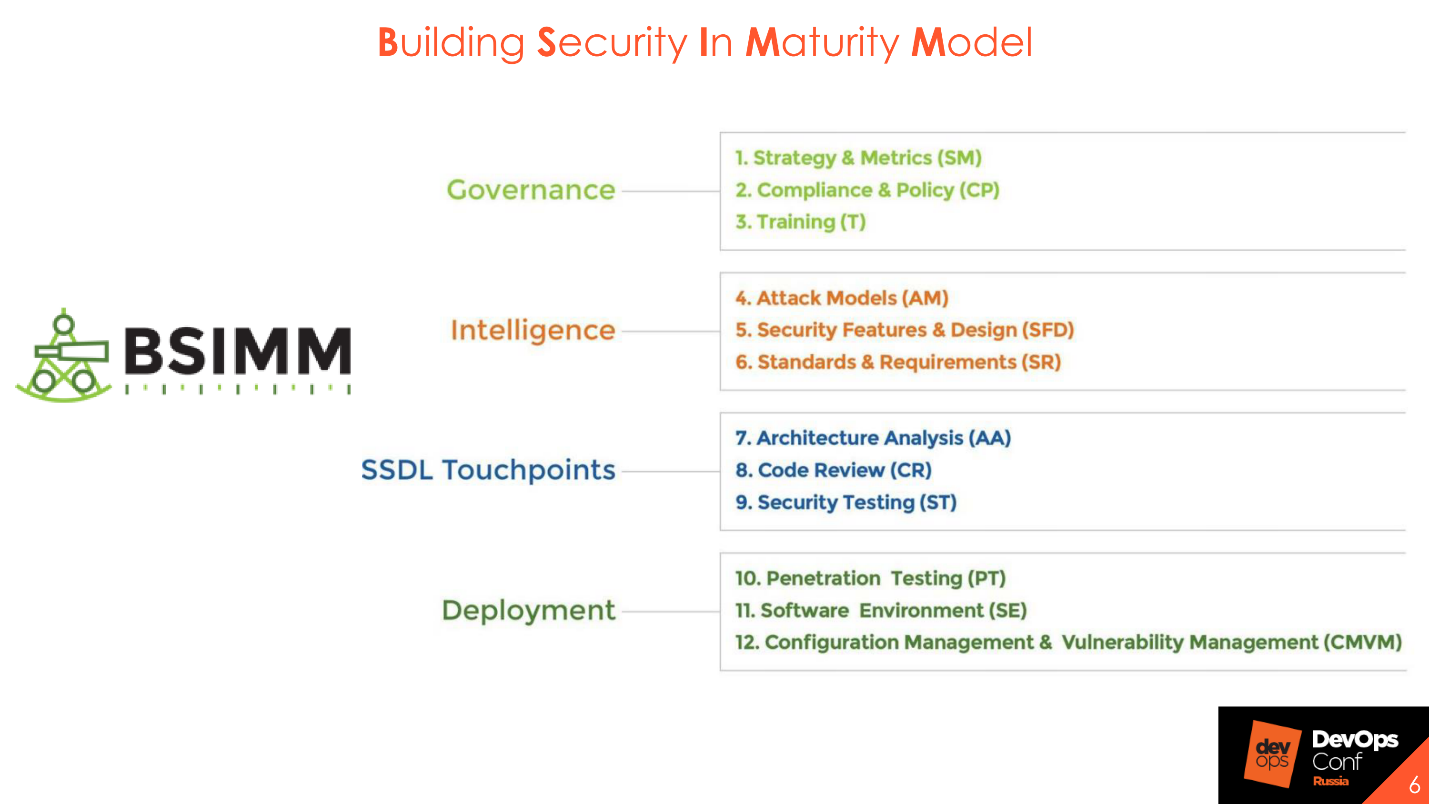

Canonical SDLC is highly detailed in various methodologies - OpenSAMM, BSIMM, OWASP. The methodologies are different, but generally similar.

I like BSIMM (Building Security In Maturity Model) the most. The methodology splits the Application Security process into 4 domains: Governance, Intelligence, SSDL Touchpoints and Deployment. The four domains contain 12 practices in total, which are represented as 112 activities.

Each of the 112 activities has 3 maturity levels: elementary, intermediate and advanced. You can study all 12 practices section by section, select what matters to you, work out how to implement it, and gradually add elements, for example static and dynamic code analysis or code review. You draw up a plan and work through it calmly as you implement the selected activities.

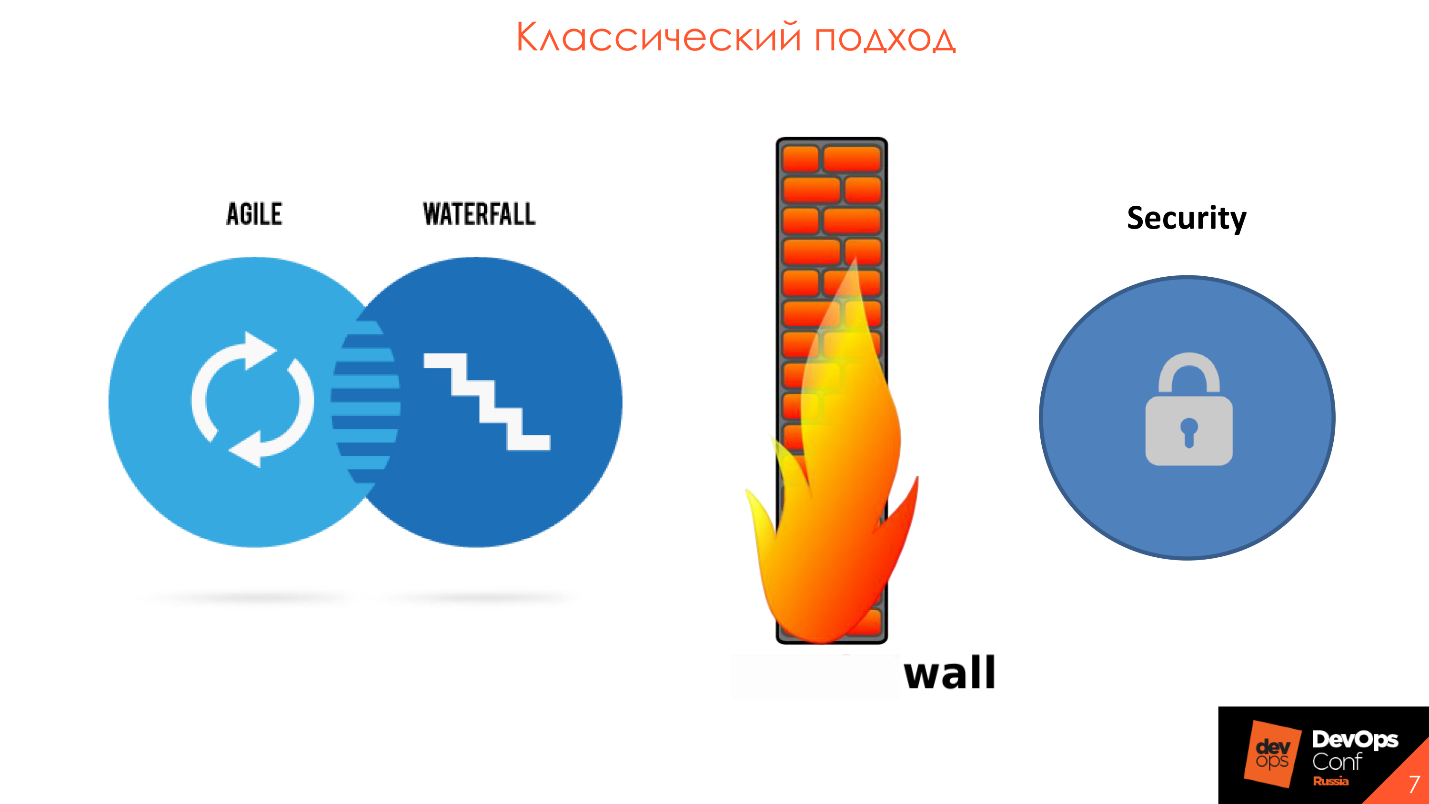

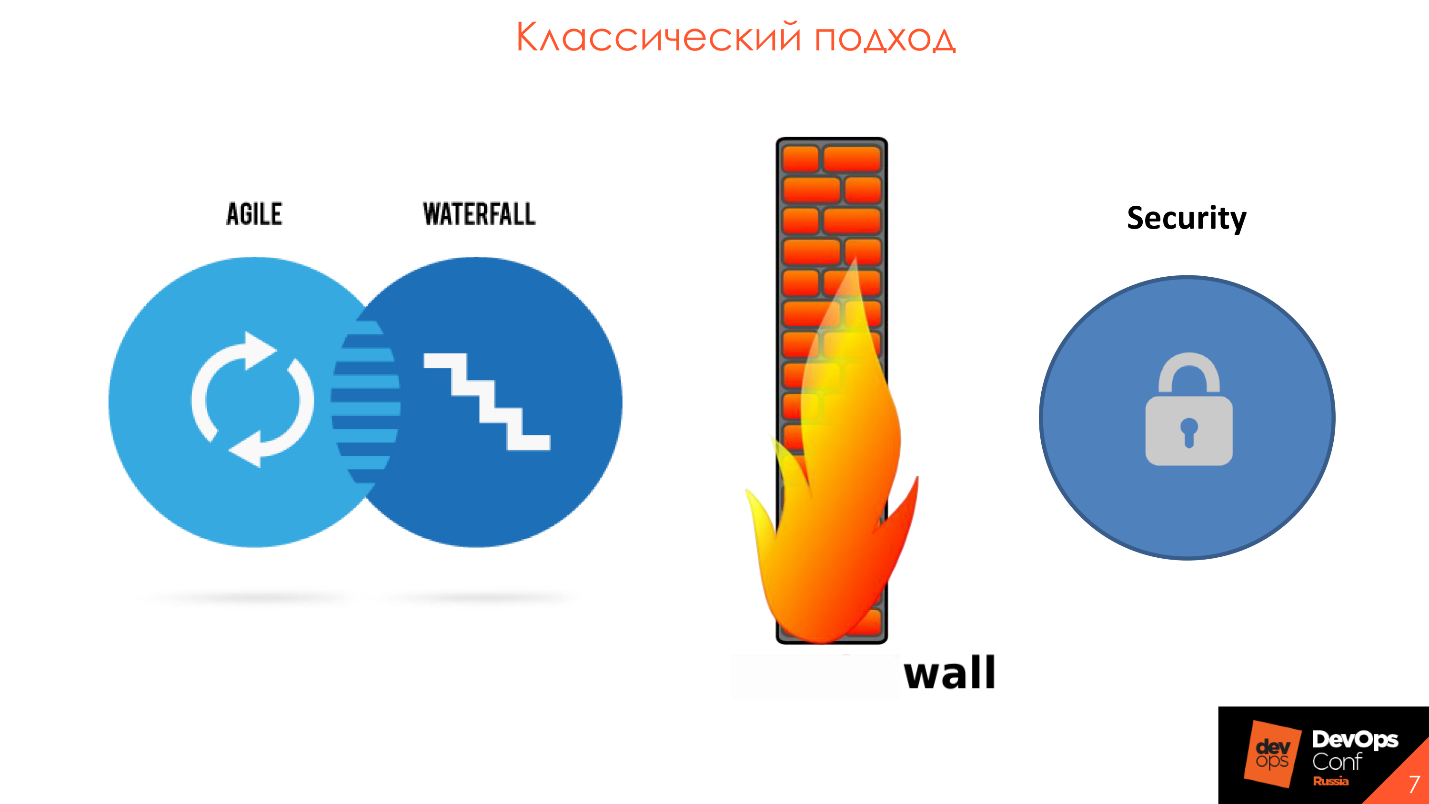

Initially, DevOps included security checks. In practice, security teams were much smaller than they are now, and they acted not as participants in the process but as a control and oversight body that sets requirements for it and checks the quality of the product at the end of the release. This is the classic approach, in which security teams were walled off from development and took no part in the process.

The main problem is that information security is separate from development. Usually it is a kind of isolated information security perimeter containing 2-3 large and expensive tools. Once every six months, source code or an application arrives to be checked, and once a year a pentest is carried out. As a result, production release dates slip, and a huge number of vulnerabilities from automated tools land on the developers. None of this can be triaged and fixed, because the results from the previous six months have not been sorted out yet, and here comes a new batch.

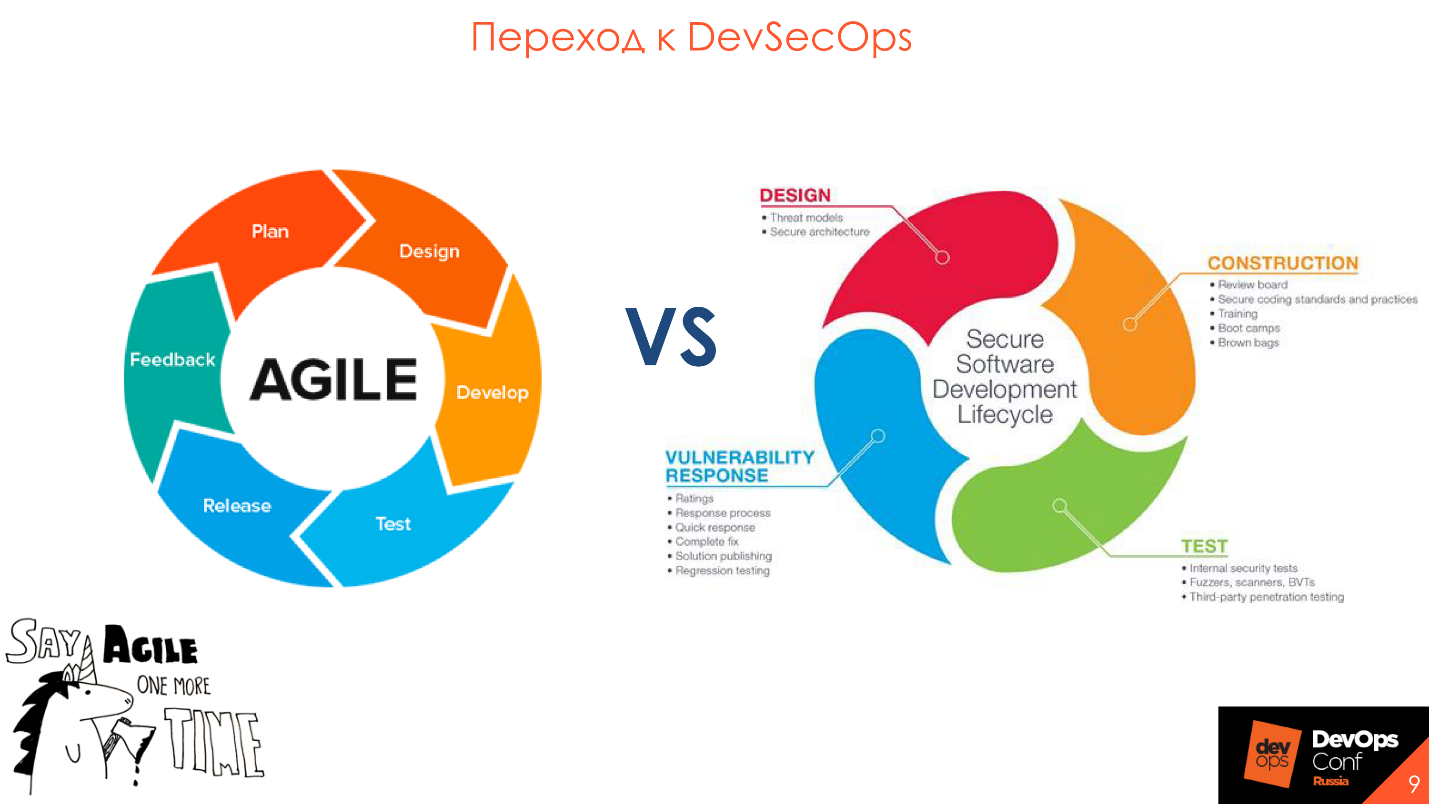

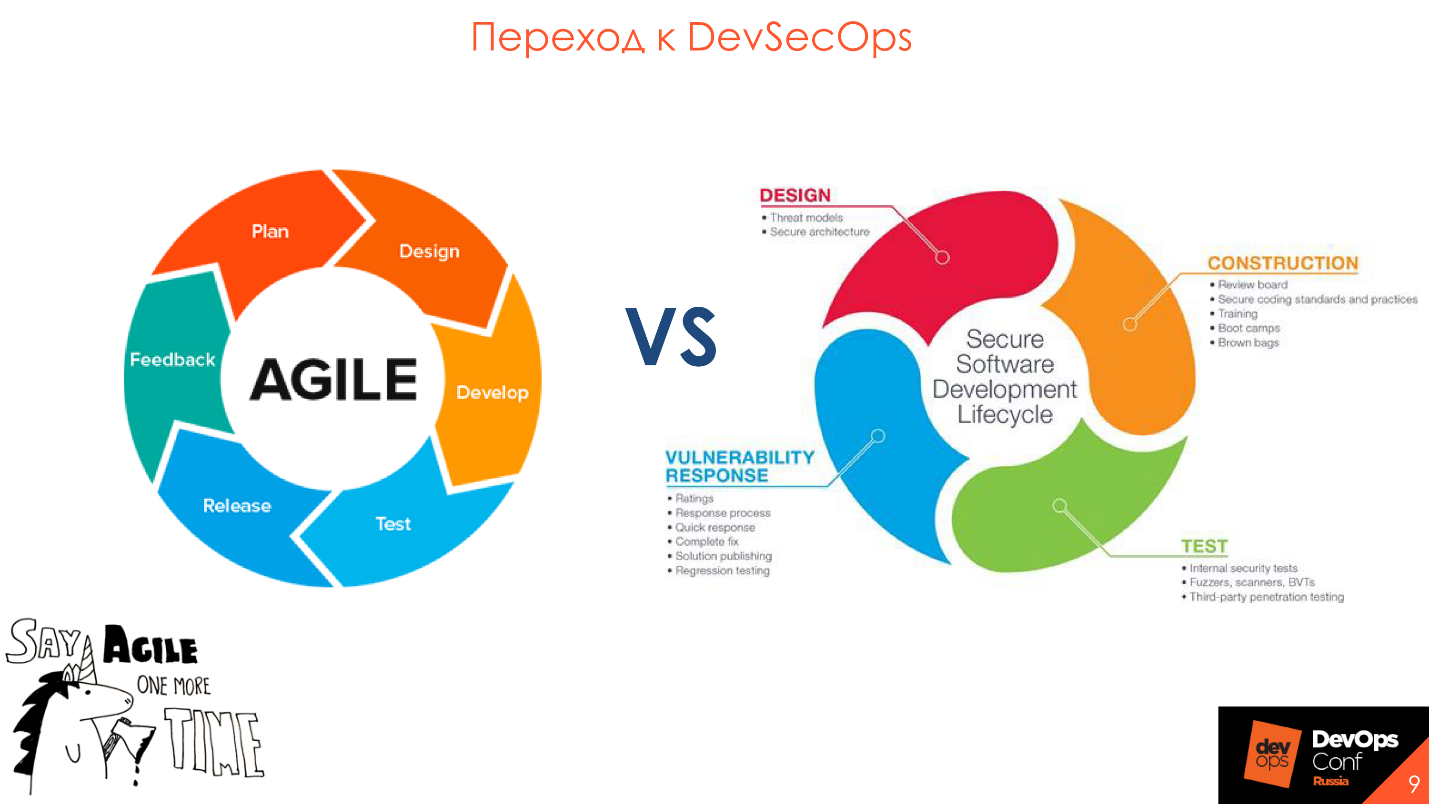

In our company's practice, we see that security teams in all areas and industries understand that it is time to catch up and run in the same wheel as development, in Agile. The DevSecOps paradigm fits agile development methodology well: implementation, support, and participation in every release and iteration.

The most important word in the Security Development Lifecycle is “process”. You must understand this before you think about buying tools.

Often, planning a secure development process begins with selecting and buying a tool and ends with attempts to integrate the tool into the current process, which remain just attempts. The consequences are sad, because every tool has its own characteristics and limitations.

A common case: the security department chooses a good, expensive tool with great features and brings it to the developers to embed in the process. But it does not work out, because the process is structured in such a way that the limitations of the already purchased tool do not fit into the current paradigm.

Before buying expensive tools, look at what you already have. Every company has security requirements for development, and there are checks and pentests. Why not turn all of this into a form that is understandable and convenient for everyone?

Typically, requirements are a paper Talmud that sits on a shelf. Once we came to a company to look at its processes and asked to see the security requirements for the software. The specialist responsible for them searched for a long time:

- Hold on, somewhere in my notes there was a path to where this document lives.

In the end, we received the document a week later.

For requirements, checks and other things, create a page, for example, on Confluence - this is convenient for everyone.

Usually, a medium-sized company has one security officer per 100-200 developers, who performs several functions and physically does not have time to check everything. Even if he tries his best, he alone cannot check all the code that development produces. A concept has been developed for such cases: Security Champions.

Security Champion is the entry point to the development team and the security evangelist all rolled into one.

Usually, when a security engineer comes to the development team and points out an error in the code, he gets a surprised answer:

- And who are you? This is the first time I have seen you. I am doing fine: my senior colleague approved the code review, so we are moving on!

This is a typical situation, because developers place far more trust in seniors or simply in teammates with whom they constantly interact at work and in code review. If the Security Champion, rather than the security engineer, points out the mistake and its consequences, his word carries more weight.

Also, developers know their code better than any security specialist. A person who has at least 5 projects in a static analysis tool usually finds it hard to remember all the nuances. Security Champions know their product: what interacts with what and what to look at first. They are more effective.

So think about introducing Security Champions and expanding the influence of the security team. It is also useful for the champion himself: professional development in a new field, a broader technical outlook, stronger technical, managerial and leadership skills, and a higher market value. There is an element of social engineering in this: they are your “eyes” in the development team.

The 80/20 principle says that 20% of the effort produces 80% of the result. That 20% consists of the application analysis practices that can and should be automated. Examples of such activities are static analysis (SAST), dynamic analysis (DAST) and open source control. I will talk in more detail about these activities and about the tools: what peculiarities we usually run into when introducing them into the process, and how to do it correctly.

I will highlight the problems that are relevant for all tools that require attention. I will analyze them in more detail so as not to repeat further.

Long analysis time. If all tests and the build, from commit to production, take 30 minutes, while information security checks take a day, nobody is going to slow the process down for them. Take this into account and draw conclusions.

High False Negative or False Positive. All products are different, everyone uses different frameworks and their own style of writing code. On different code bases and technologies, tools can show different levels of False Negative and False Positive. Therefore, see what exactly in your company and for your applications will show a good and reliable result.

No integration with existing tools. Evaluate tools by their integrations with what you already use. For example, if you have Jenkins or TeamCity, check that the tools integrate with that software, and not with GitLab CI, which you do not use.

The absence or excessive complexity of customization. If the tool does not have an API, then why is it needed? Everything that can be done in the interface should be accessible through the API. Ideally, the tool should have the ability to customize checks.

No product development roadmap. Development does not stand still: we keep adopting new frameworks and functions and rewriting old code in new languages. We want to be sure that the tool we buy will support new frameworks and technologies. Therefore, it is important to know that the product has a real and sound development roadmap.

In addition to the features of the tools, consider the features of the development process. For example, interfering with development is a typical mistake. Let's see what other features should be considered and what the security team should pay attention to.

So as not to disrupt development and release dates, create different rules and different show stoppers (criteria for stopping the build when vulnerabilities are present) for different environments. For example, we understand that the current branch is going to the development environment or to UAT, so we do not stop it and say:

- You have vulnerabilities here, you will not go anywhere further!

At this point, it is important to tell the developers that there are security issues worth paying attention to.

The presence of vulnerabilities is not an obstacle to further testing, whether manual, integration, or any other kind. On the other hand, we need to raise the security of the product somehow, and to make sure the developers do not forget about what security finds. Therefore, we sometimes do this: when a build is rolled out to the development environment, we simply notify development:

- Guys, you have problems, please pay attention to them.

At the UAT stage, we show warnings about the vulnerabilities again, and at the release to production we say:

- Guys, we warned several times, you did nothing - we won’t let you go with this.
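The escalation described above can be sketched as a small per-environment policy. This is only an illustration: the environment names, the severity scale and the `BLOCKING_THRESHOLD` table are assumptions, not something prescribed by the talk, and each team would agree on its own values with development.

```python
from dataclasses import dataclass

# Assumed severity scale, from least to most severe.
SEVERITY_ORDER = ["low", "medium", "high", "critical"]

# Hypothetical policy: the lowest severity that stops the build in each
# environment. None means "never stop, only notify".
BLOCKING_THRESHOLD = {"dev": None, "uat": None, "prod": "high"}


@dataclass(frozen=True)
class Finding:
    title: str
    severity: str


def evaluate(environment, findings):
    """Return ("pass" | "warn" | "block", findings behind the decision)."""
    if not findings:
        return "pass", []
    threshold = BLOCKING_THRESHOLD[environment]
    if threshold is None:
        # Development and UAT: notify the team, do not stop the build.
        return "warn", list(findings)
    limit = SEVERITY_ORDER.index(threshold)
    blocking = [f for f in findings if SEVERITY_ORDER.index(f.severity) >= limit]
    if blocking:
        return "block", blocking
    return "warn", list(findings)
```

With this table, the same critical finding produces a warning on `dev` and `uat` and stops the release only on `prod`.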

For both static and dynamic analysis, show and warn about vulnerabilities only in the features and code that have just been written. If a developer moved a button by 3 pixels and we tell him that he has an SQL injection there that urgently needs to be fixed, that is wrong. Look only at what is being written now and at the change that is coming into the application.

Suppose we have a functional defect, that is, the application behaves in a way it should not: money is not transferred, clicking a button does not lead to the next page, or the goods do not load. Security defects are the same kind of defects, only in terms of security rather than application behavior.
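A minimal sketch of this filtering, assuming the scanner reports findings with a file path and a line number, and that the set of changed lines per file has already been extracted from the diff. The dictionary shapes are assumptions for illustration only.

```python
def changed_only(findings, changed_lines):
    """Keep only the findings located on lines touched by the current change.

    findings: list of dicts with "file" and "line" keys (assumed format).
    changed_lines: dict mapping file path -> set of changed line numbers.
    """
    return [
        f for f in findings
        if f["line"] in changed_lines.get(f["file"], set())
    ]
```

Findings in untouched code do not disappear; they simply do not block or distract the developer who moved the button.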

Since all vulnerabilities are the same defects, they should be located in the same place as all development defects. So forget about reports and scary PDFs that no one reads.

When I worked for a development company, I received a report from static analysis tools. I opened it, was horrified, made coffee, leafed through 350 pages, closed it and went back to work. Big reports are dead reports. Usually they go nowhere: the emails are deleted, forgotten or lost, or the business says that it accepts the risks.

What to do? We just transform the confirmed defects that we found into a form convenient for development, for example, add them to the backlog in Jira. We prioritize and eliminate defects in order of priority along with functional defects and test defects.
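As an illustration, a confirmed finding can be turned into the JSON body of an issue-creation request to a defect tracker. The sketch below follows the general shape of a Jira REST "create issue" request (a `fields` object with project, issue type, summary and so on), but the project key `APP`, the `security` label, the finding format and the severity-to-priority mapping are all assumptions. Priority names in particular depend on how your Jira instance is configured.

```python
import json

# Hypothetical mapping from scanner severity to tracker priority names.
PRIORITY_BY_SEVERITY = {
    "critical": "Highest",
    "high": "High",
    "medium": "Medium",
    "low": "Low",
}


def to_issue_payload(finding, project_key="APP"):
    """Build a request body for creating a defect from a confirmed finding."""
    return {
        "fields": {
            "project": {"key": project_key},
            "issuetype": {"name": "Bug"},
            "summary": f"[Security] {finding['title']} in {finding['file']}",
            "description": (
                f"Location: {finding['file']}:{finding['line']}\n"
                f"Recommendation: {finding['recommendation']}"
            ),
            "priority": {"name": PRIORITY_BY_SEVERITY[finding["severity"]]},
            "labels": ["security"],
        }
    }


if __name__ == "__main__":
    example = {
        "title": "SQL injection",
        "file": "db/query.py",
        "line": 42,
        "severity": "high",
        "recommendation": "Use parameterized queries.",
    }
    print(json.dumps(to_issue_payload(example), indent=2))
```

The point is not the exact fields but that the defect lands in the same backlog, with the same priorities, as functional defects.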

Static analysis (SAST) is code analysis for vulnerabilities, but it is not the same thing as SonarQube. We check more than patterns or style. The analysis uses a number of approaches: over a vulnerability tree, over DataFlow, and over configuration files. All of this relates directly to the code.

Advantages of the approach: vulnerabilities in the code are identified at an early stage of development, when there are no test environments and no finished product yet, and incremental scanning is possible: you scan only the section of code that has changed and only the feature being worked on now, which reduces scanning time.

The downside is a possible lack of support for the languages you need.

Necessary integrations that tools should have, in my subjective opinion:

The picture shows some of the best representatives of static analysis.

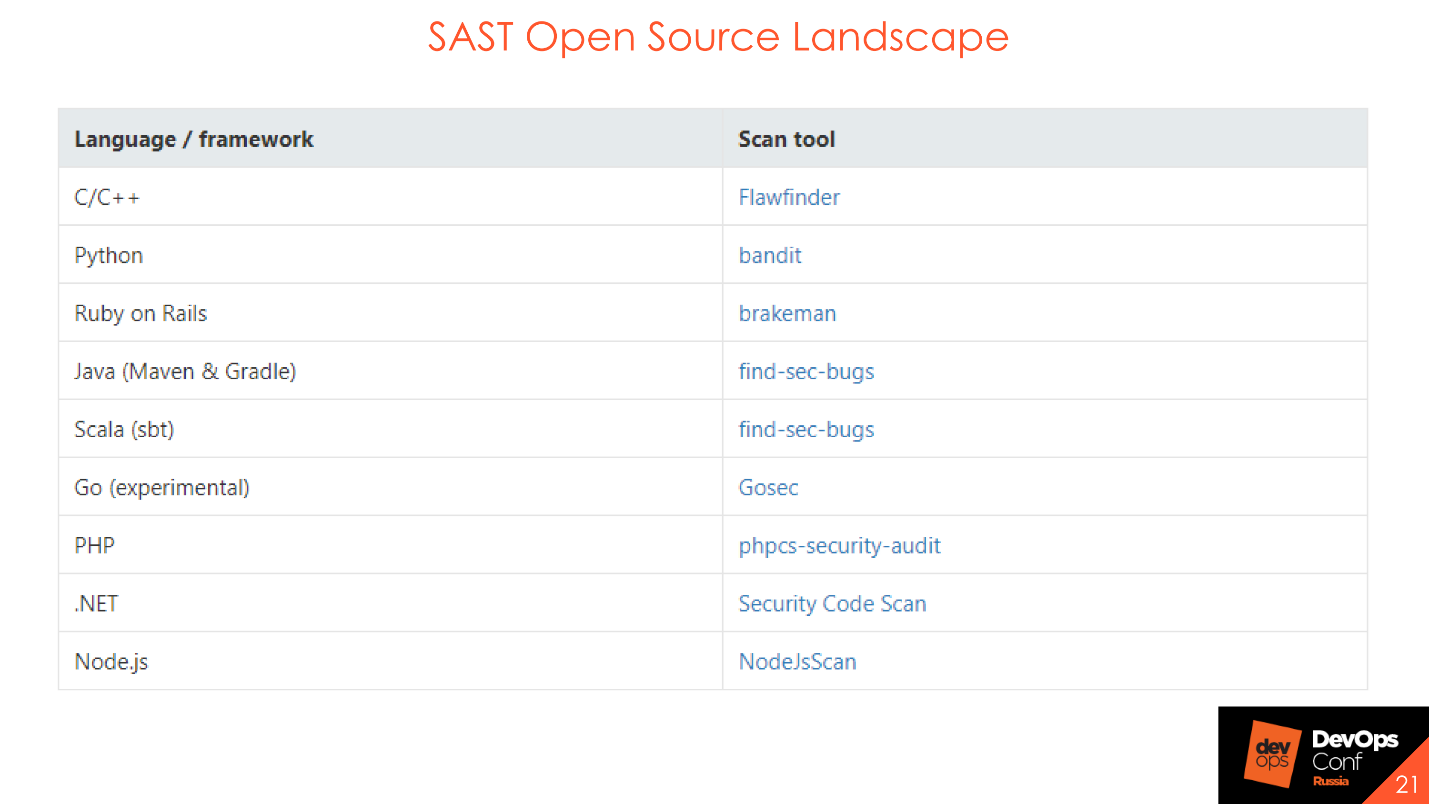

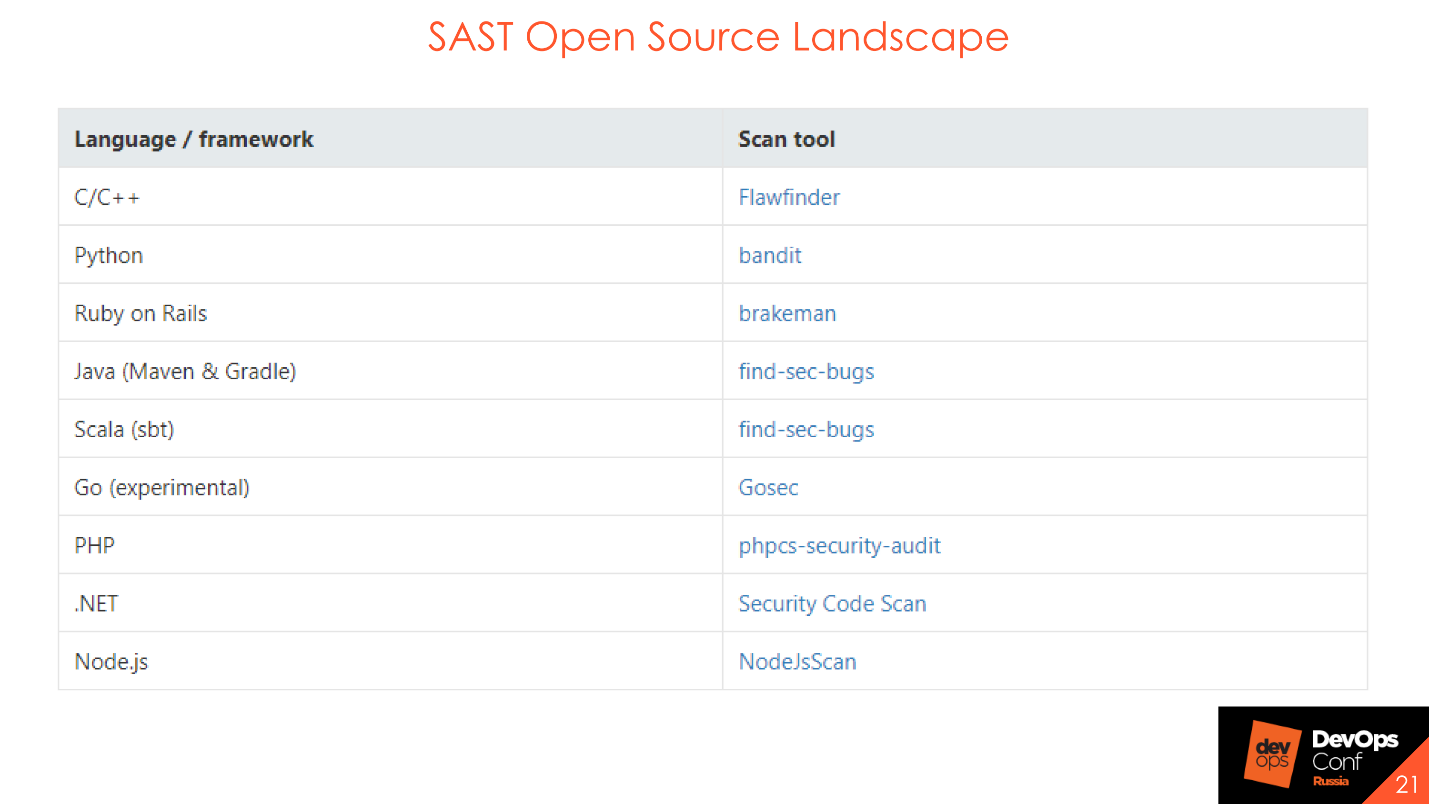

It’s not the tools that are important, but the process, so there are Open Source solutions that are also good for running the process.

Open source SAST will not find a huge number of vulnerabilities or complex DataFlow, but you can and should use it when building the process. It helps you understand how the process will be structured, who will respond to bugs, and whom to report to. If you want to carry out the initial stage of securing your code, use open source solutions.

How can this be integrated if you are at the beginning of the road, you have nothing: neither CI, nor Jenkins, nor TeamCity? Consider integration into the process.

If you have Bitbucket or GitLab, you can integrate at the version control system level.

By event - pull request, commit. You scan the code and show in the build status that the security check has passed or failed.

Feedback. Of course, feedback is always needed. If you ran the checks on the security side, put the results in a box and told no one about them, and then dumped a pile of bugs at the end of the month, that is neither right nor good.

On a number of important projects, we once made an AppSec technical user the default reviewer. Depending on whether errors were found in the new code, the reviewer sets the pull request status to “accept” or “need work”: either everything is OK, or the code needs rework, with links to exactly what has to be fixed. For the version that goes to production, we enabled a merge ban if the information security check did not pass. We made this part of manual code review, so other participants in the process could see the security status of that specific change.

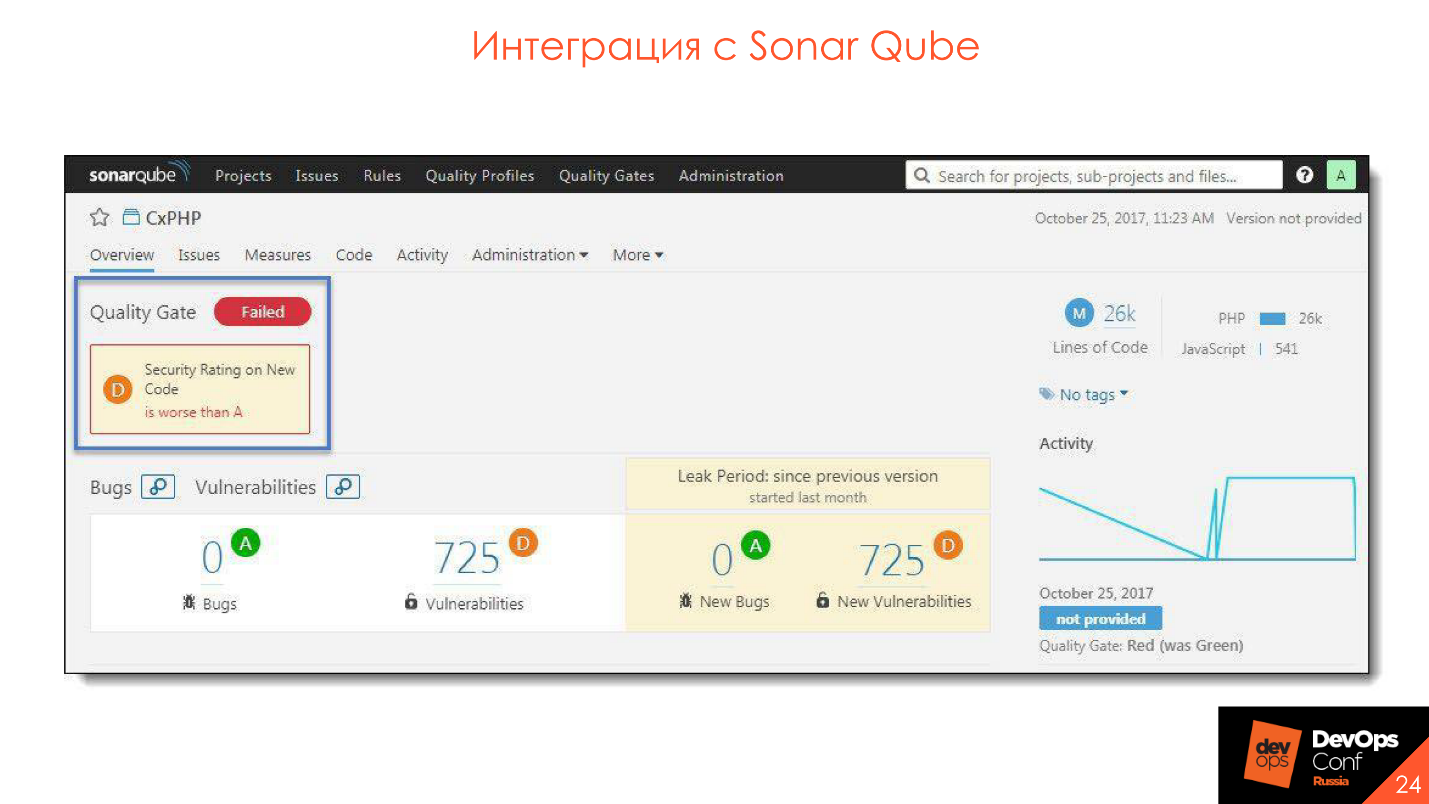

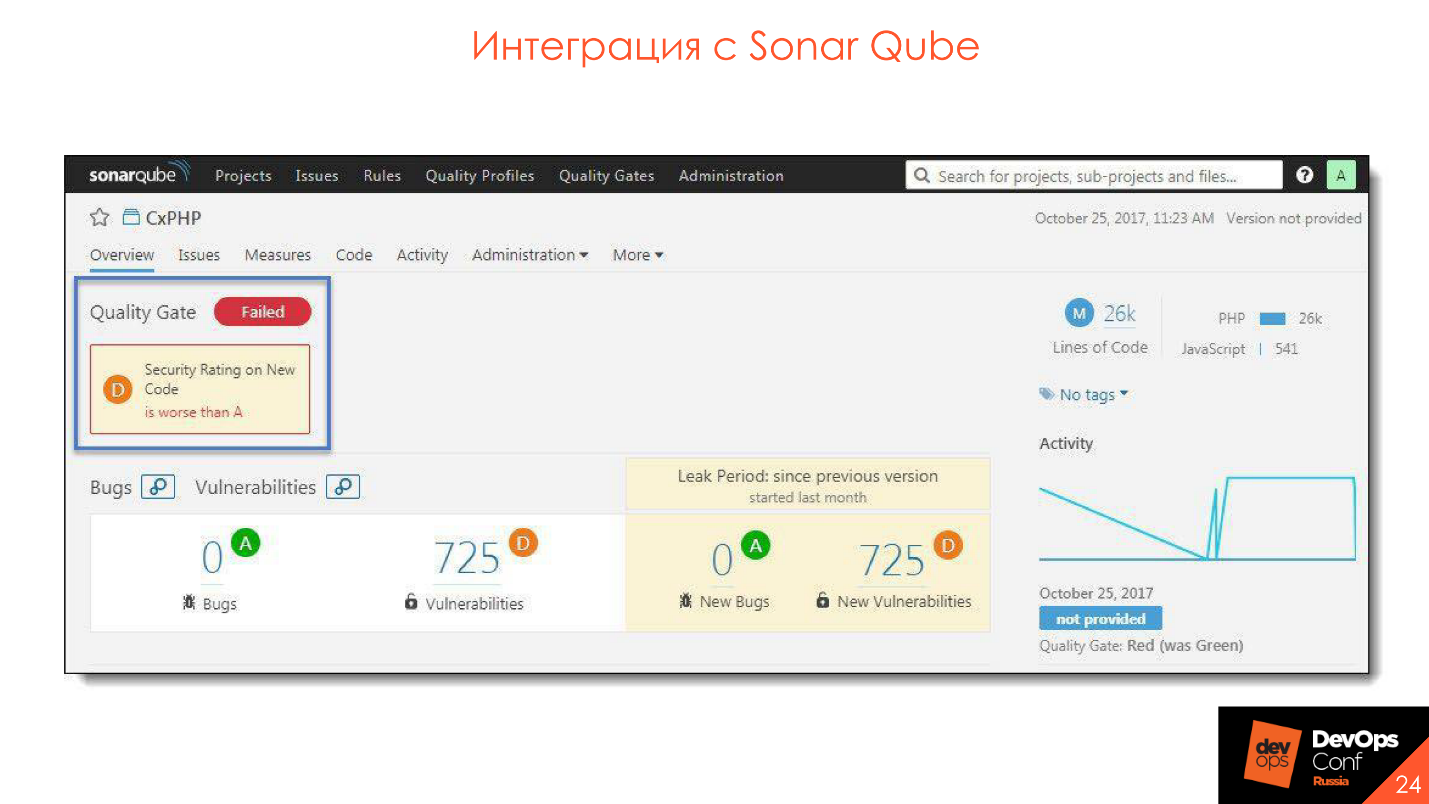

Many have a quality gate for code quality. The same thing here - you can make the same gates only for SAST tools. There will be the same interface, the same quality gate, only it will be called a security gate . And also, if you have a process using SonarQube, you can easily integrate everything there.

Here, too, everything is quite simple:

All of this is in an ideal, rosy world. Real life is not like that, but we strive for it. The result of security checks should be handled like the results of unit tests.

For example, we took a large project and decided that from now on we would scan it with SAST. We pushed the project into SAST, it gave us 20,000 vulnerabilities, and by a firm decision we accepted that everything is fine. The 20,000 vulnerabilities are our technical debt. We put the debt in a box, and we will slowly work through it and file bugs in the defect tracker. We hire a company, do it all ourselves, or get help from Security Champions, and the technical debt shrinks.

All newly appearing vulnerabilities in new code should be fixed in the same way as failures in unit tests or automated tests. Roughly speaking, the build started and ran, and two tests and two security tests failed. Fine: we went and looked at what happened, fixed one thing, fixed the other, ran the build again, and everything was OK: no new vulnerabilities appeared and the tests passed. If the task goes deeper and needs to be properly understood, or fixing the vulnerability affects large layers of what lies under the hood, a bug is filed in the defect tracker, prioritized and fixed. Unfortunately, the world is not perfect and tests sometimes fail.
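One way to separate the accepted debt from newly introduced vulnerabilities is to keep a baseline of finding fingerprints from the first scan and fail the build only on findings that are not in it. This is a sketch under assumptions: the finding format is invented for illustration, and real tools compute their own, more robust identifiers. The fingerprint here deliberately ignores the line number, so that a finding is not reported as new just because code above it shifted.

```python
import hashlib


def fingerprint(finding):
    """Stable identifier for a finding: rule + file + offending code snippet."""
    raw = "|".join([finding["rule"], finding["file"], finding["snippet"].strip()])
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


def make_baseline(findings):
    """Record the accepted technical debt as a set of fingerprints."""
    return {fingerprint(f) for f in findings}


def new_findings(baseline, current):
    """Findings from the current scan that are not part of the baseline."""
    return [f for f in current if fingerprint(f) not in baseline]
```

The build then fails when `new_findings` is non-empty, while the baseline itself is burned down separately through the defect tracker.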

An example of a security gate is an analogue of a quality gate, by the presence and number of vulnerabilities in the code.

We integrate with SonarQube - the plugin is installed, everything is very convenient and cool.

Integration Capabilities:

It looks something like getting results from the server.

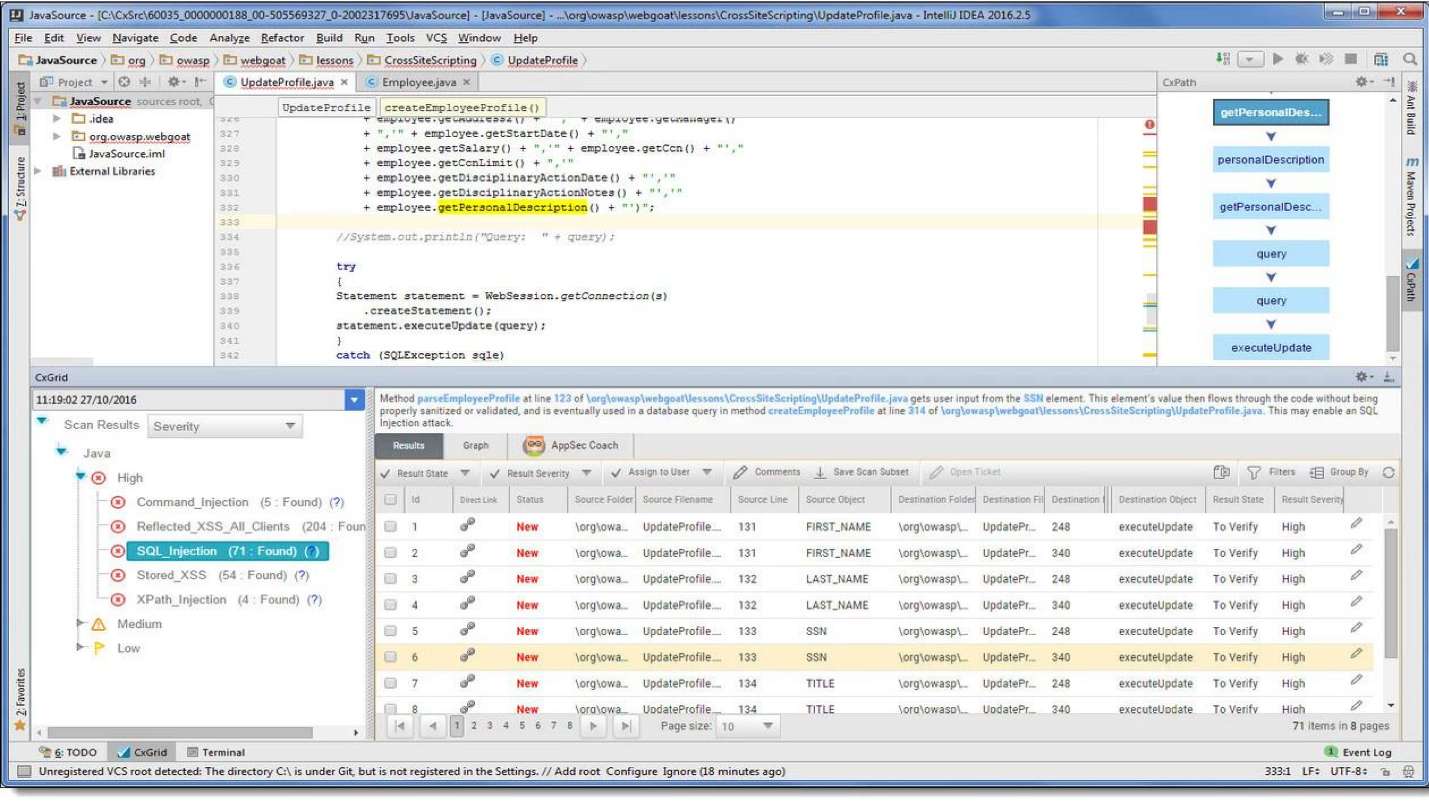

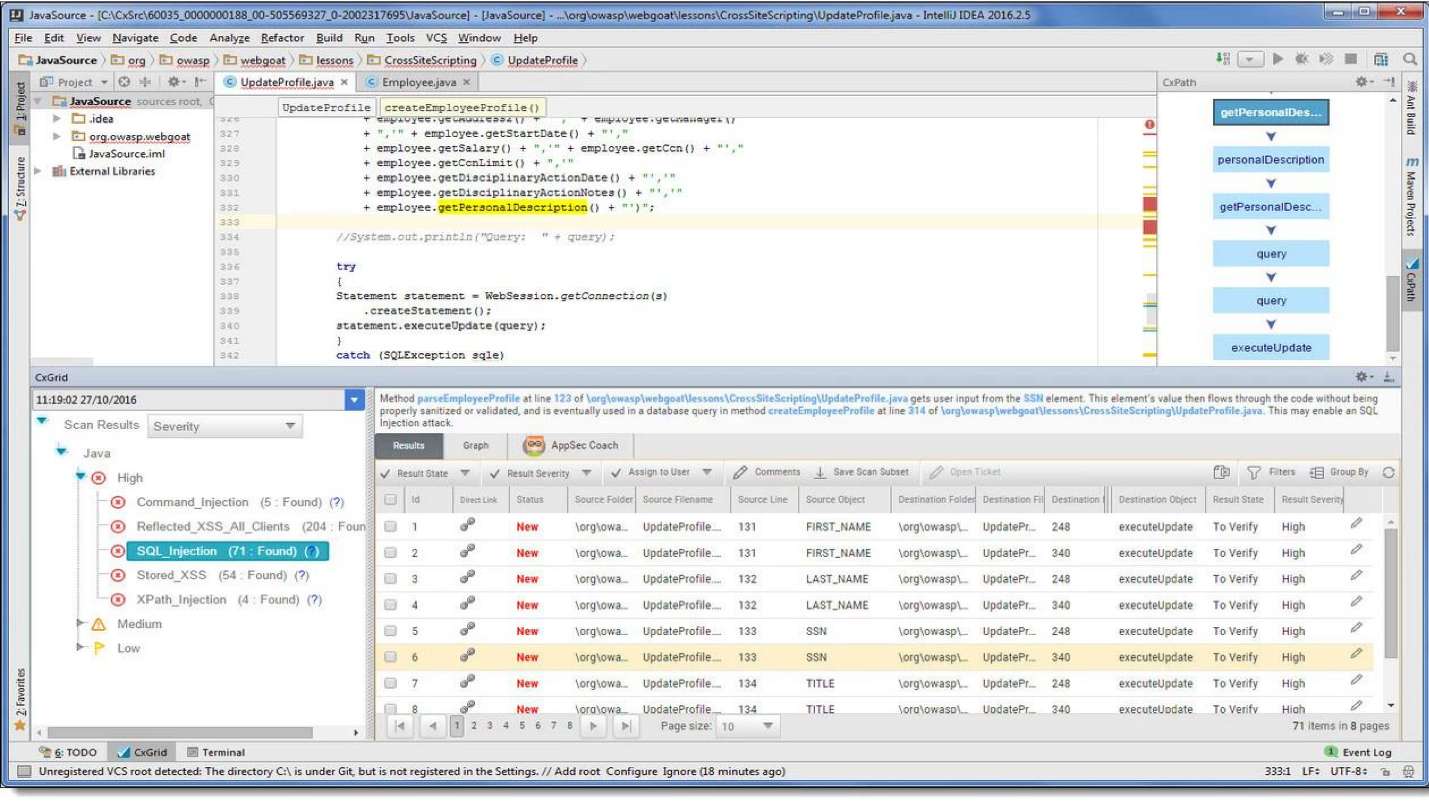

In our IntelliJ IDEA development environment, an additional item simply appears that reports which vulnerabilities were detected during the scan. You can immediately edit the code and see recommendations and the Flow Graph. All of this is available at the developer's workplace, which is very convenient: you do not have to follow other links and look at anything extra.

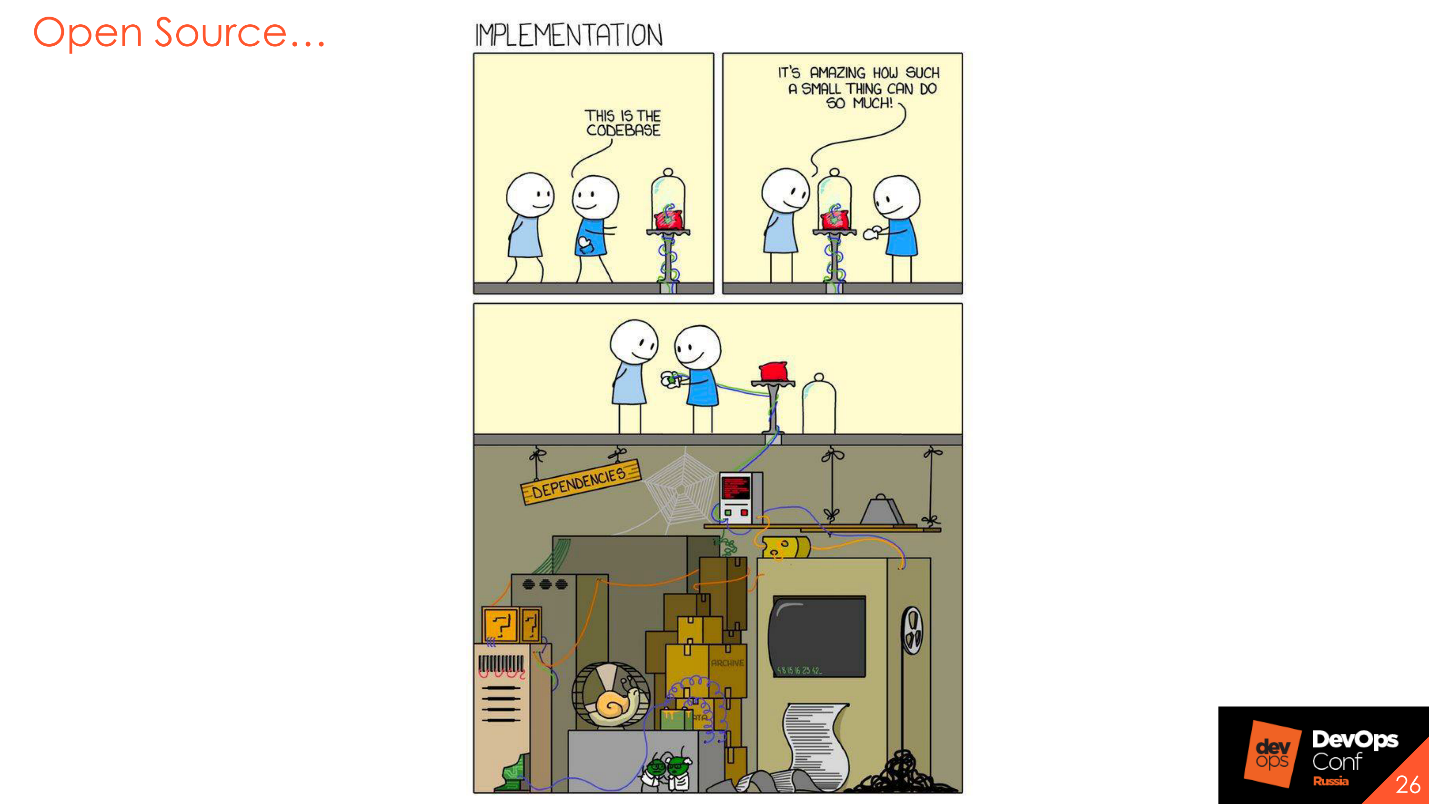

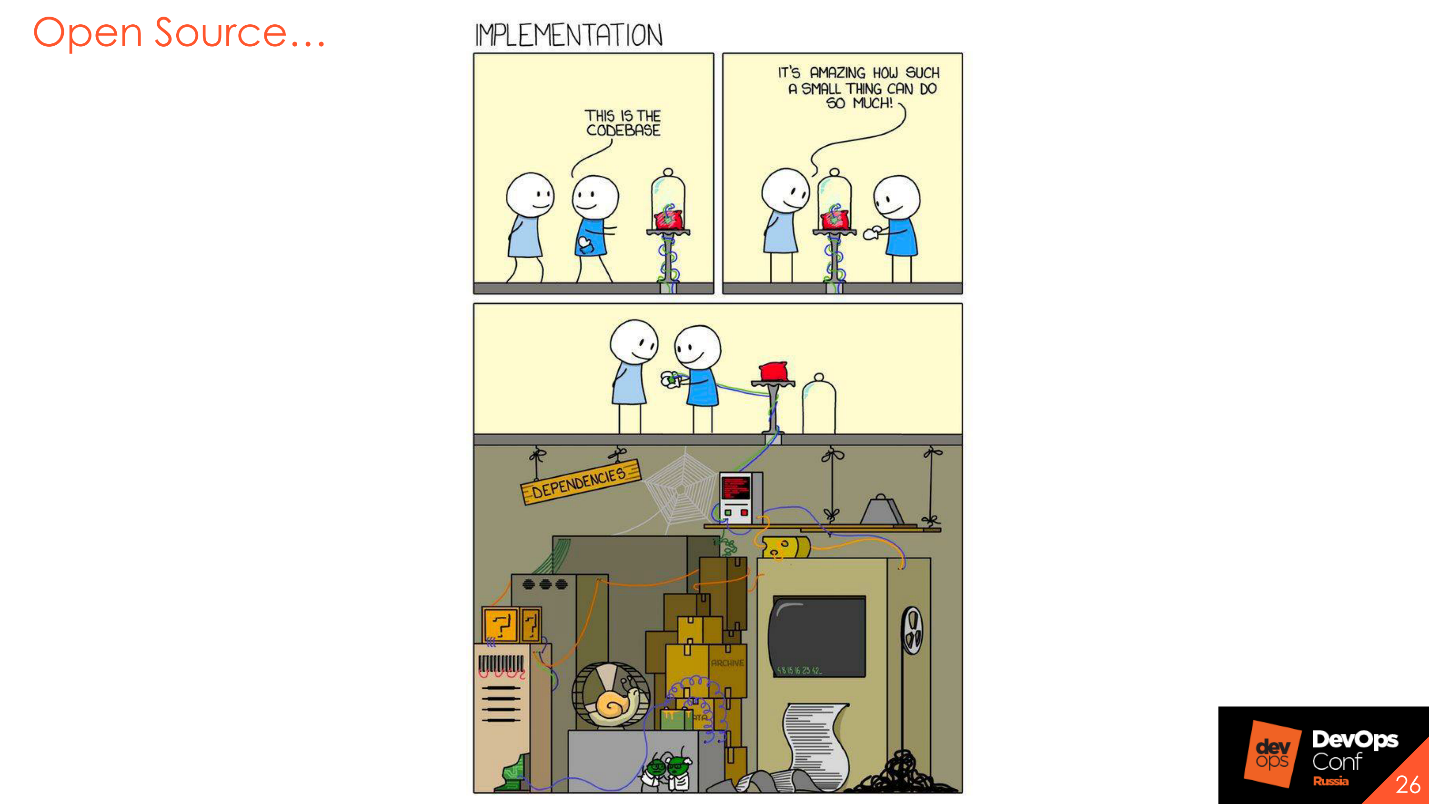

This is my favorite topic. Everyone uses open source libraries: why reinvent the wheel and pile up workarounds when you can take a ready-made library in which everything is already implemented?

This is true, of course, but libraries are also written by people, they carry certain risks, and they also contain vulnerabilities that are reported periodically, or constantly. Therefore, the next step in Application Security is analysis of open source components.

The analysis includes three major steps.

Search for vulnerabilities in libraries. For example, the tool knows that we use a certain library and that CVE or bug trackers list vulnerabilities affecting this version of the library. When you try to use it, the tool warns you that the library is vulnerable and advises you to use a different version that has no vulnerabilities.

License compliance analysis. This is not yet very popular here, but if you work with foreign countries, you can occasionally get into trouble for using an open source component that may not be used or modified. The library's license policy may forbid it. Or, if we have modified the library and use it, we may have to publish our own code. Of course, no one wants to publish the code of their products, but you can protect yourself against this too.

Analysis of components used in the production environment. Imagine a hypothetical situation: we have finally completed development and released the very latest version of our microservice to production. It lives there happily for a week, a month, a year. We do not rebuild it, we do not run security checks, and everything seems fine. But suddenly, two weeks after the release, a critical vulnerability appears in an open source component that we use in this build, in production. If we do not record what we use and where, we simply will not see this vulnerability. Some tools can monitor vulnerabilities in the libraries that are currently used in production. This is very useful.
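The idea of recording what is used where can be sketched as a simple inventory lookup: when a new advisory is published, find the services whose deployed build contains an affected version. The service names, component names, versions and the advisory format below are all made up for illustration; commercial tools maintain this inventory and the vulnerability feed for you.

```python
def affected_services(inventory, advisory):
    """Return the deployed services that contain a vulnerable component version.

    inventory: dict mapping service name -> {component name: deployed version}.
    advisory: dict with "component" and "vulnerable_versions" (a set of versions).
    """
    name = advisory["component"]
    return sorted(
        service
        for service, components in inventory.items()
        if components.get(name) in advisory["vulnerable_versions"]
    )


if __name__ == "__main__":
    # Hypothetical snapshot of what is running in production.
    inventory = {
        "payments": {"example-json-lib": "2.9.1", "example-http-lib": "4.5.0"},
        "catalog": {"example-json-lib": "2.10.0"},
    }
    advisory = {"component": "example-json-lib", "vulnerable_versions": {"2.9.0", "2.9.1"}}
    print(affected_services(inventory, advisory))
```

Without such a record, a service that is never rebuilt is never rescanned, and the new vulnerability goes unnoticed.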

Opportunities:

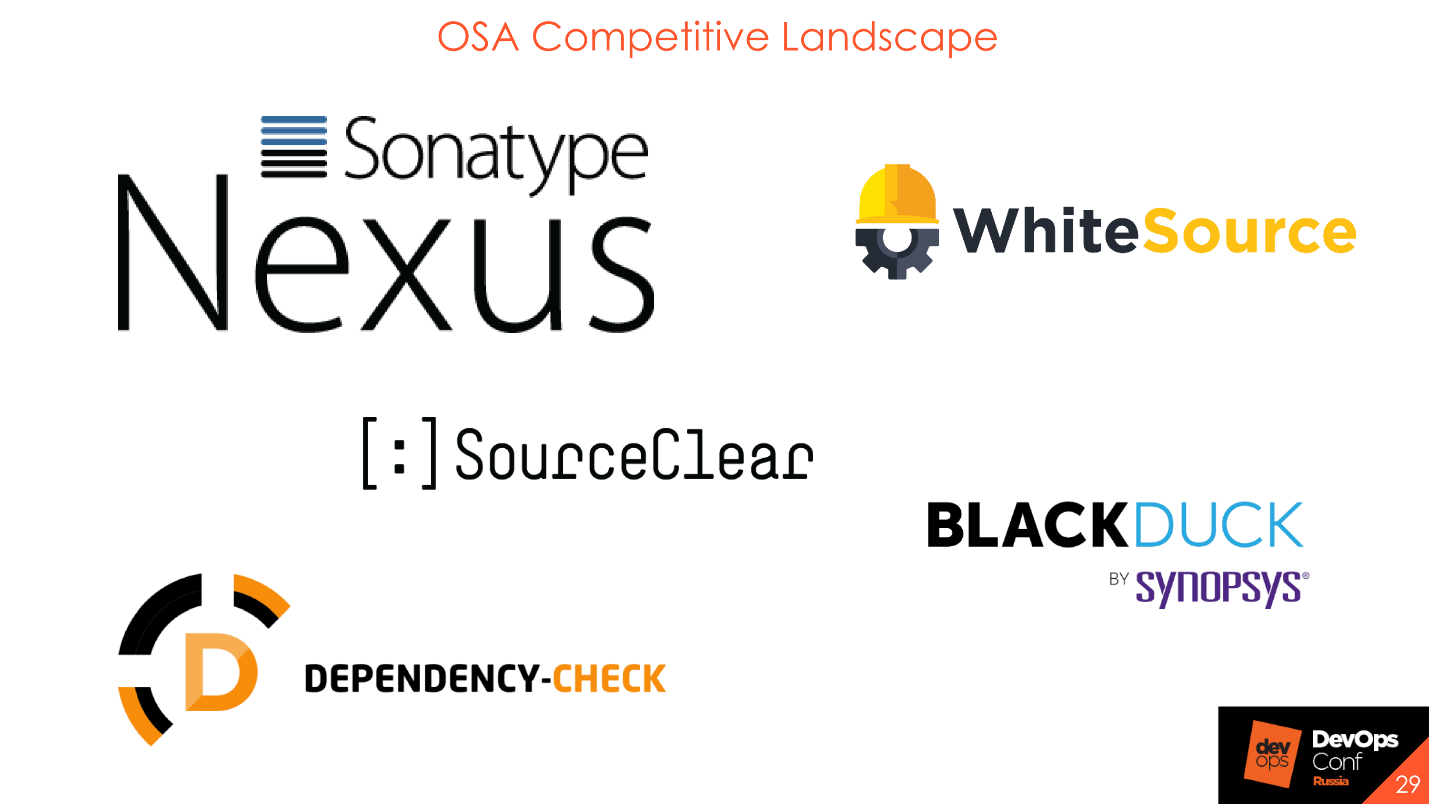

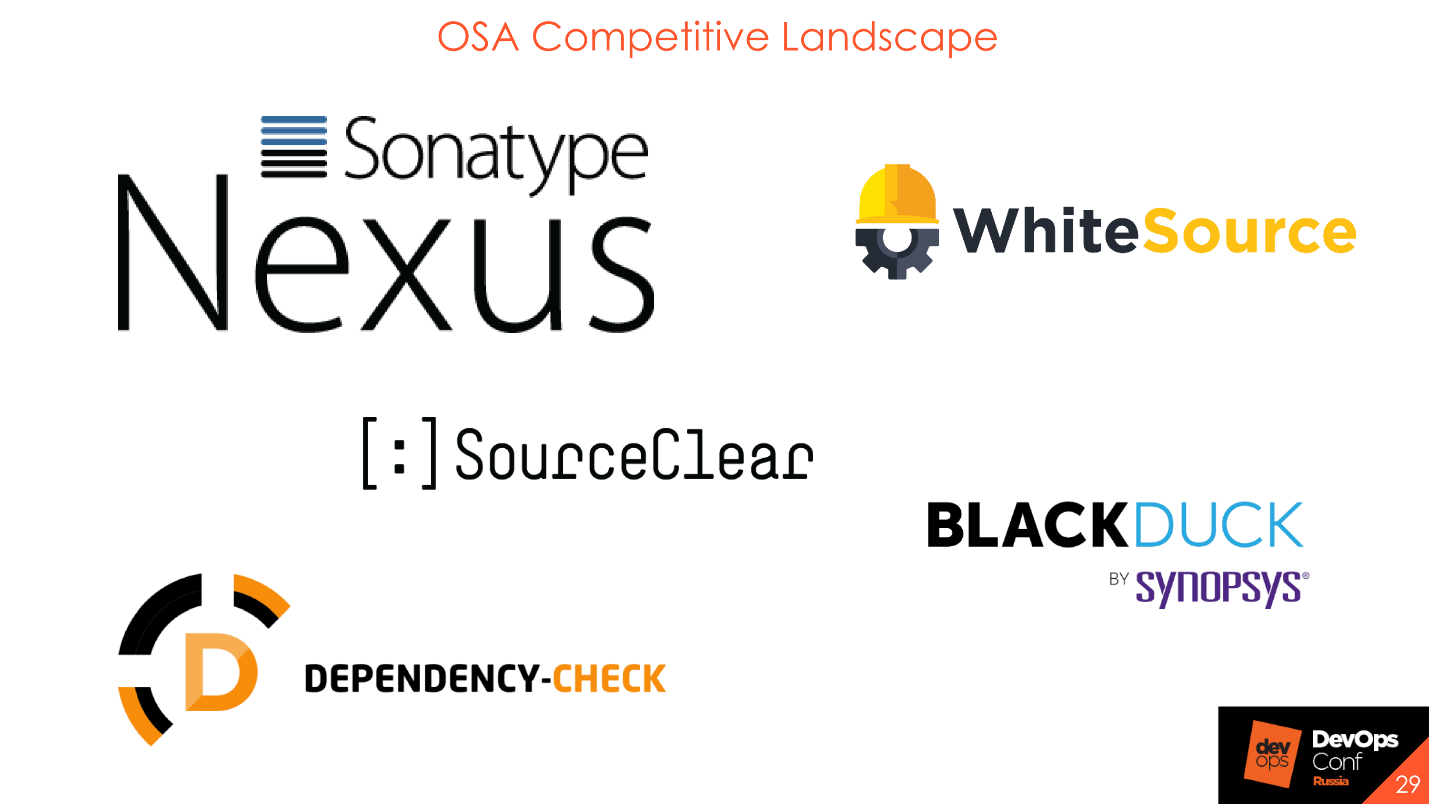

A few examples of the leading tools for open source analysis.

The only free one is OWASP Dependency-Check. You can turn it on in the early stages and see how it works and what it supports. Most of the others are cloud products, or on-premise products that still reach out to the Internet for their vulnerability database. They do not send your libraries themselves, but hashes or values computed from them and fingerprints, which go to their server in order to receive vulnerability information.

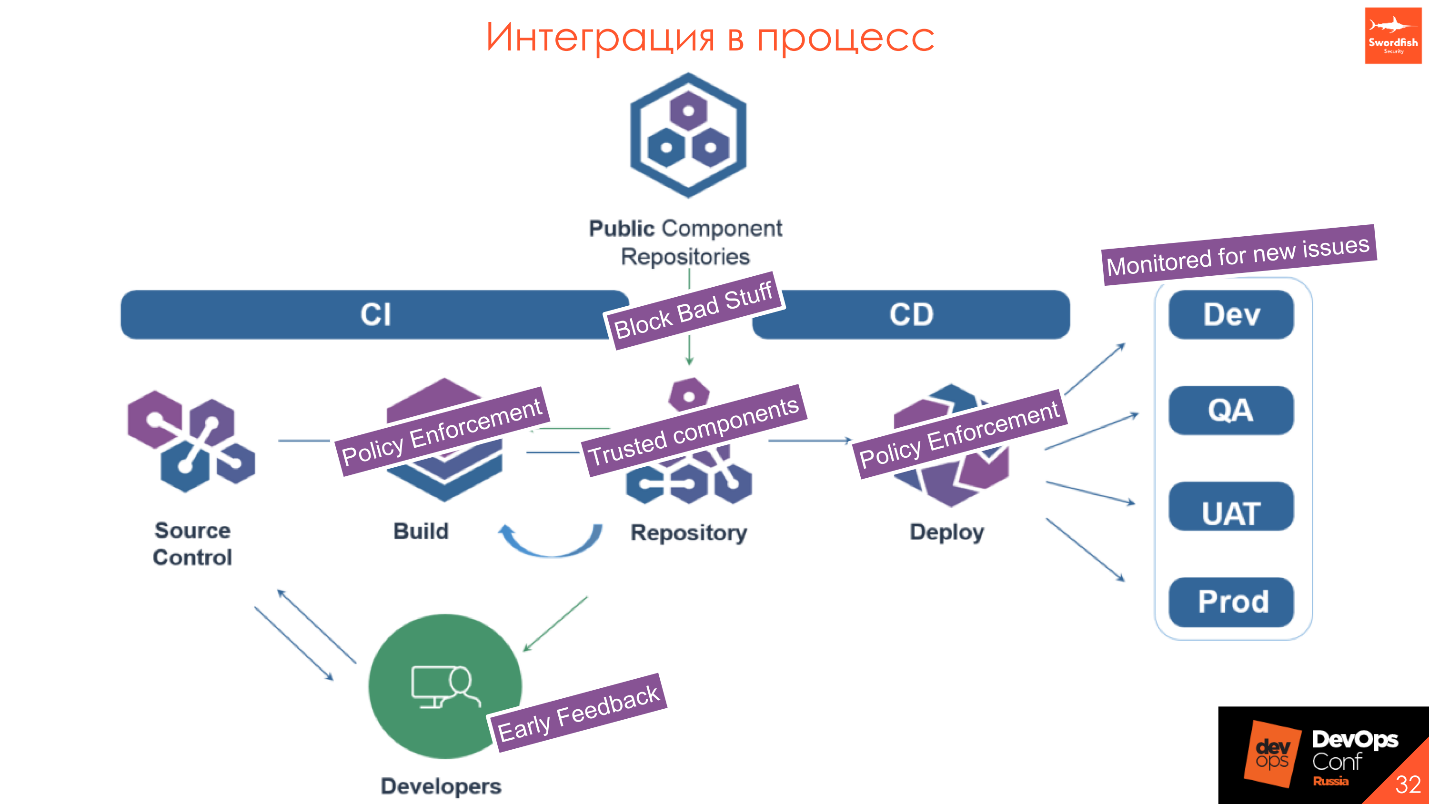

Perimeter control of libraries downloaded from external sources. We have external and internal repositories. For example, Nexus is located inside Event Central, and we want to make sure that nothing with a “critical” or “high” vulnerability gets into our repository. You can configure proxying with the Nexus Firewall Lifecycle tool so that such vulnerable components are cut off and never reach the internal repository.

Integration in CI. At the same level as automated tests and unit tests, and split by development stage: dev, test, prod. At each stage you can download any libraries and use anything you like, but if there is something serious with the status “critical”, it is worth drawing attention to it at the release to production.

Integration with artifact repositories: Nexus and JFrog.

Integration into the development environment. The tools you choose should integrate with development environments. The developer should be able to access scan results from his workplace, or to scan and check the code for vulnerabilities before committing to version control.

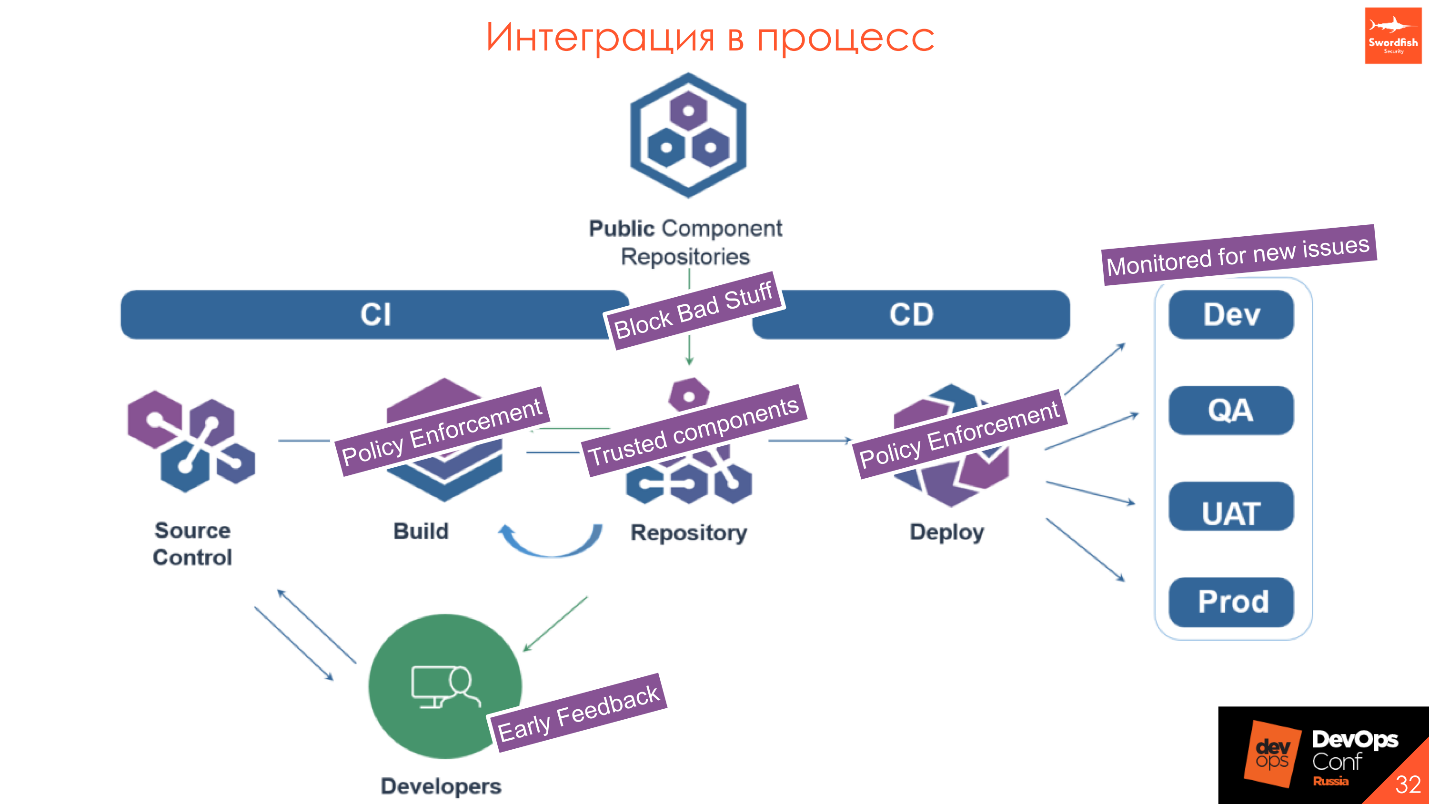

Integration in CD. This is a great feature that I really like and have already mentioned: monitoring for new vulnerabilities in the production environment. It works roughly like this.

We have public component repositories (external sources) and our internal repository. We want only trusted components in it. When proxying a request, we verify that the downloaded library has no vulnerabilities. If it falls under certain policies, which we define and always agree on with development, we do not download it, and the developer is told to use another version. Accordingly, if there is something really critical and bad in the library, the developer will not get it at the install stage and should use a higher or lower version.

Dynamic analysis tools are fundamentally different from everything that was said before. This is a kind of imitation of the user's work with the application. If this is a web application, we send requests, simulating the work of the client, click on the buttons on the front, send artificial data from the form: quotation marks, brackets, characters in different encodings, to see how the application works and processes external data.

The same approach lets you check for typical vulnerabilities in open source components. Since DAST does not know which open source components we use, it simply throws “malicious” patterns at the application and analyzes the server responses:

- Aha, there is a deserialization problem here, but not there.

There are big risks in this, because if you conduct this security test on the same stand with which testers work, unpleasant things can happen.

We had a situation when we finally launched AppScan: we spent a long time obtaining access to the application, got 3 accounts and were delighted that at last we would check everything! We started the scan, and the first thing AppScan did was get into the admin panel, press every button, change half of the data, and then completely kill the server with its mailform requests. Development and testing said:

- Guys, are you kidding?! We gave you accounts, and you took down the test environment!

Consider the possible risks. Ideally, prepare a separate test environment for information security testing, isolated at least to some extent from the rest of the environment, and preferably check the admin panel manually. That is a pentest, part of the remaining share of effort that we are not considering here.

It is worth considering that you can use this as an analogue of load testing. At the first stage, you can turn on a dynamic scanner in 10-15 threads and see what happens, but usually, as practice shows, nothing good.

A few tools that we commonly use.

Burp Suite is worth highlighting: it is a “Swiss army knife” for any security specialist. Everyone uses it, and it is very convenient. A demo version of the Enterprise Edition has now been released. Earlier it was just a standalone utility with plugins, and now the developers are finally building a large server from which several agents can be managed. This is great, and I advise you to try it.

Integration is quite good and simple: launching a scan after successfully installing the application on the stand and scanning after successful integration testing .

If the integration does not work or there are stubs and mock functions there, it is pointless and useless - no matter what pattern we send, the server will still respond the same way.

A few general words about the process as a whole and about how each tool performs in particular. All applications are different: dynamic analysis works better for one, static analysis for another, open source analysis for a third, and pentests or something else entirely, for example WAF events, for yet another.

To understand how the process works and where it can be improved, you need to collect metrics from everything your hands reach, including production metrics, metrics from tools and from defect trackers.

Any data is helpful. It is necessary to look in various sections on where this or that tool is better applied, where the process specifically sags. It might be worth looking at the development response time to see where to improve the process based on time. The more data, the more sections you can build from the top to the details of each process.
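As one example of such a slice, the development response time can be computed per finding source from defect tracker data. This is a sketch with an assumed record format (`source`, `opened`, `fixed`); a real tracker export will look different.

```python
from collections import defaultdict
from datetime import datetime
from statistics import median


def median_days_to_fix(defects):
    """Median number of days from opening to fixing a defect, per source tool.

    defects: iterable of dicts with "source", "opened" and "fixed" keys, where
    "fixed" is None for defects that are still open (assumed record format).
    """
    days_by_source = defaultdict(list)
    for defect in defects:
        if defect["fixed"] is None:
            continue  # still open, no fix time yet
        days_by_source[defect["source"]].append((defect["fixed"] - defect["opened"]).days)
    return {source: median(days) for source, days in days_by_source.items()}


if __name__ == "__main__":
    sample = [
        {"source": "SAST", "opened": datetime(2019, 3, 1), "fixed": datetime(2019, 3, 3)},
        {"source": "SAST", "opened": datetime(2019, 3, 1), "fixed": datetime(2019, 3, 5)},
        {"source": "DAST", "opened": datetime(2019, 3, 2), "fixed": None},
    ]
    print(median_days_to_fix(sample))
```

Comparing this number across tools, teams or severities shows where the process sags.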

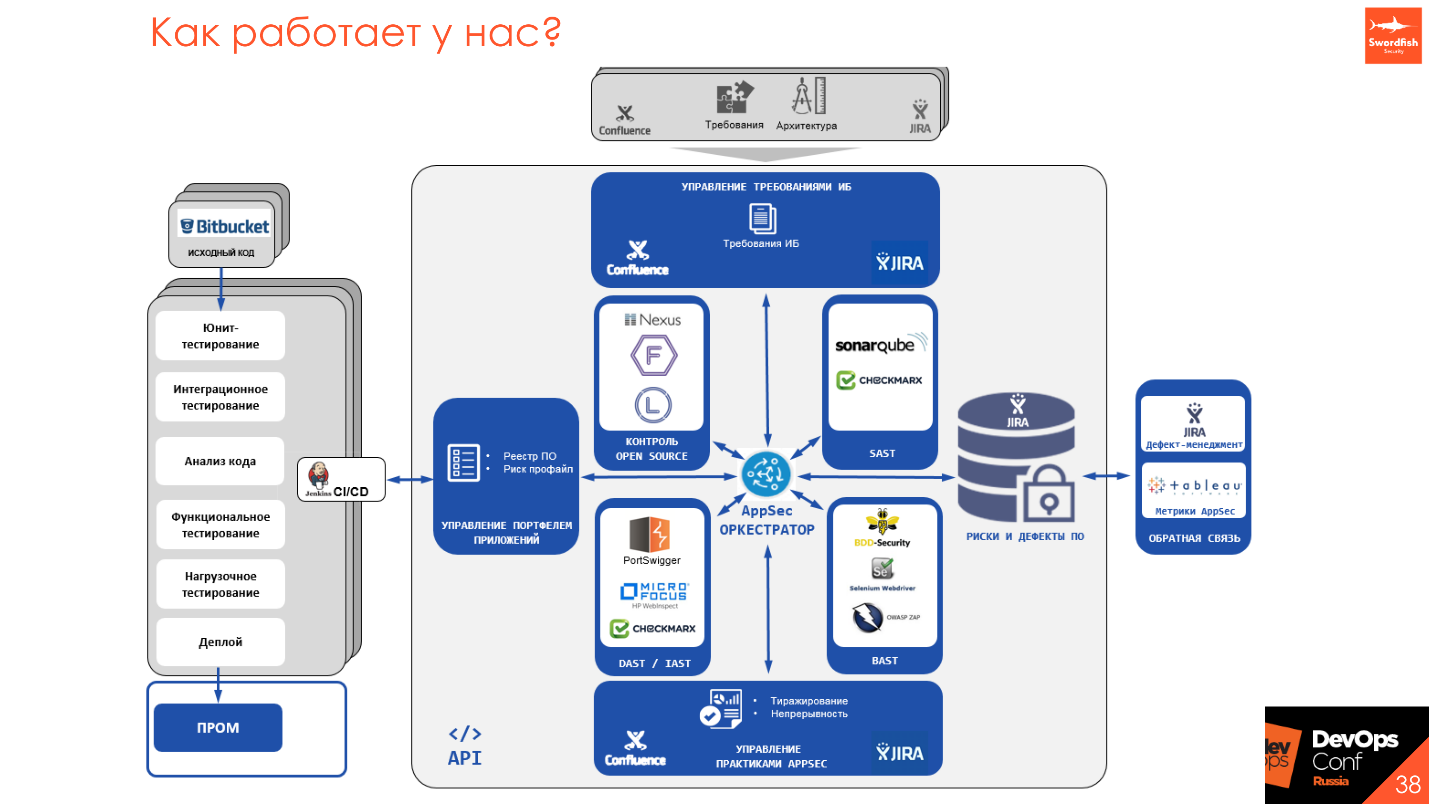

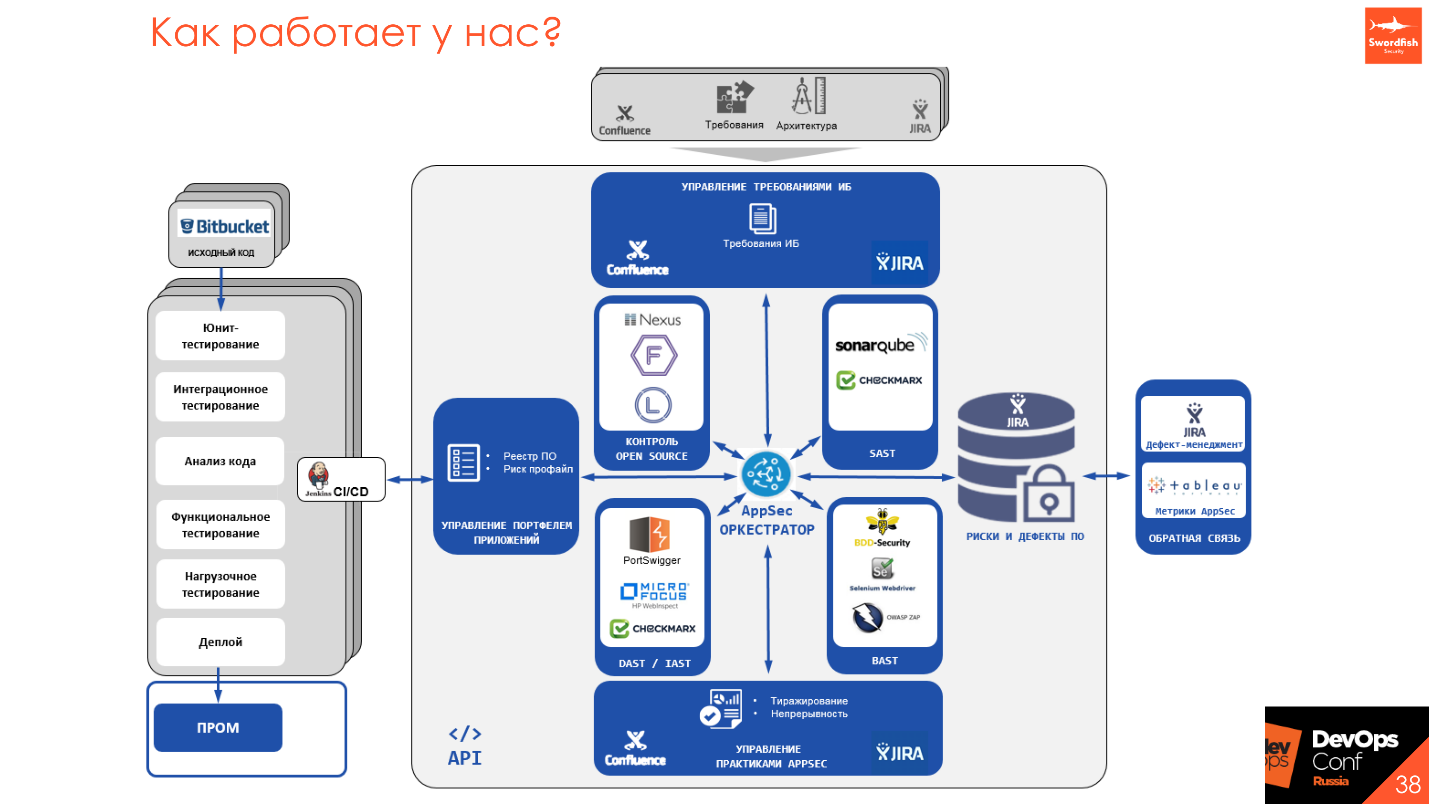

Since all static and dynamic analyzers have their own APIs, launch methods and principles, and some have schedulers while others do not, we are writing a tool, AppSec Orchestrator, which provides a single entry point into the whole process and lets you manage it from one place.

Managers, developers and security engineers get one entry point where they can see what is running, configure and start a scan, get the scan results, and submit requirements. We try to move away from paper and translate everything into the form development actually uses: Confluence pages with statuses and metrics, defects in Jira or other defect trackers, or integration into the synchronous or asynchronous CI/CD process.

Tools are not the main thing. Think through the process first, then introduce the tools. The tools are good but expensive, so you can start with the process and build interaction and understanding between development and security. From the security side: there is no need to “stop” everything. From the development side: if something is truly critical, it must be fixed, rather than closing your eyes to the problem.

Product quality is a common goal of both security and development. We are doing one thing, we are trying to ensure that everything works correctly and there are no reputational risks and financial losses. That is why we are promoting the approach to DevSecOps, SecDevOps in order to establish communication and make the product better.

Start with what you already have: requirements, architecture, partial checks, trainings, guidelines. You do not need to immediately apply all the practices to all projects - move iteratively . There is no single standard - experiment and try different approaches and solutions.

Put an equals sign between information security defects and functional defects.

Automate everything that moves. Whatever does not move, make it move and automate it. If something is done by hand, that is not a good part of the process. It may be worth revising it and automating it as well.

If the information security team is small, use Security Champions.

Perhaps what I was talking about will not suit you and you will come up with something of your own - and that's good. But choose tools based on the requirements of your process . Do not look at what the community says that this tool is bad and this one is good. Perhaps it’s the exact opposite on your product.

Tool Requirements.

Application Security: what is it about?

Building Security In Maturity Model

Why DevSecOps

DevOps is a common big process in which you need to take care of security.

Initially, DevOps included security checks. In practice, the number of security teams was much smaller than now, and they did not act as participants in the process, but as a control and oversight body that sets requirements to it and checks the quality of the product at the end of the release. This is a classic approach in which security teams were behind the wall from development and were not involved in the process.

The main problem is that information security is separate from development. Usually there is a separate security perimeter containing 2-3 large, expensive tools. Once every six months source code or an application arrives to be checked, and once a year a pentest is run. As a result, release dates slip and a huge number of vulnerabilities from automated tools land on the developers. None of this can be triaged and fixed, because the results from the previous six months have not been sorted out yet, and here comes a new batch.

In our company's work we see that security teams in every area and industry understand that it is time to catch up and run in the same wheel as development, in Agile. The DevSecOps paradigm fits agile development methodology well: implementation, support, and participation in every release and iteration.

Transition to DevSecOps

The most important word in the Security Development Lifecycle is “process”. You must understand this before thinking about buying tools.

Just incorporating tools into the DevOps process is not enough - the interaction and understanding between the process participants is important.

People matter more than tools

Planning a secure development process often begins with selecting and buying a tool and ends with attempts to integrate the tool into the current process, and attempts are all they remain. This leads to sad consequences, because every tool has its own characteristics and limitations.

A common case: the security department chooses a good, expensive tool with great features and comes to the developers to embed it in the process. But it does not work out, because the process is structured in such a way that the limitations of the already purchased tool do not fit into it.

First, describe the result you want and what the process will look like. This will help you understand the roles of the tool and of security in the process.

Start with what is already in use

Before buying expensive tools, look at what you already have. Every company has security requirements for development, checks, pentests. Why not turn all of this into a form that is clear and convenient for everyone?

Typically, the requirements are a paper Talmud lying on a shelf. In one case we came to a company to look at its processes and asked to see the security requirements for the software. The specialist responsible spent a long time searching:

- Hold on, somewhere in my notes there was a path to where this document lives.

As a result, we received the document a week later.

For requirements, checks and other things, create a page, for example, on Confluence - this is convenient for everyone.

It is easier to reformat what is already there and use it to start.

Use Security Champions

Usually, a medium-sized company with 100-200 developers has a single security engineer who performs several functions and physically does not have time to check everything. Even if he tries his best, he alone cannot check all the code that development produces. A concept has been developed for such cases: Security Champions.

A Security Champion is a person within the development team who is interested in the security of your product.

A Security Champion is the entry point to the development team and a security evangelist rolled into one.

Usually, when a security engineer comes to a development team and points out an error in the code, he gets a surprised answer:

- And who are you? I'm seeing you for the first time. Everything is fine on my side: my senior teammate put “approve” on the code review, so we are moving on!

This is a typical situation, because developers place much more trust in seniors or simply in teammates they interact with constantly at work and in code review. If the Security Champion, rather than the security engineer, points out the mistake and its consequences, his word carries more weight.

Also, developers know their code better than any security specialist. Someone who has at least 5 projects in a static analysis tool usually struggles to remember all the nuances. Security Champions know their product, what interacts with what and what to look at first, so they are more effective.

So think about introducing Security Champions and extending the security team's influence. It is useful for the champion too: professional development in a new field, broader technical horizons, stronger technical, managerial and leadership skills, and a higher market value. There is an element of social engineering here: these are your “eyes” in the development team.

Testing steps

The 20/80 principle says that 20% of the effort produces 80% of the result. That 20% is the application analysis practices that can and should be automated. Examples of such activities are static analysis (SAST), dynamic analysis (DAST) and Open Source control. I will go into more detail about these activities and the tools, about the quirks we usually run into when introducing them into the process, and about how to do it properly.

The main problems of tools

I will highlight the problems that apply to all tools and deserve attention. I will go through them in detail here so as not to repeat them later.

Long analysis time. If all tests and the build, from commit to production, take 30 minutes, and the information security checks take a day, no one will slow the process down for them. Keep this in mind and draw conclusions.

High False Negative or False Positive rates. All products are different; everyone uses different frameworks and their own coding style. On different code bases and technologies, tools can show different False Negative and False Positive rates. So check what actually gives good, reliable results in your company and for your applications.

No integration with existing tools. Evaluate tools by their integrations with what you already use. For example, if you have Jenkins or TeamCity, check that the tools integrate with that software, not with GitLab CI, which you do not use.

Missing or overly complex customization. If the tool has no API, why do you need it? Everything that can be done in the interface should be available through the API. Ideally, the tool should allow you to customize its checks.

No product development roadmap. Development does not stand still: we constantly adopt new frameworks and features and rewrite old code in new languages. We want to be sure that the tool we buy will support new frameworks and technologies. So it is important to know that the product has a real, proper development roadmap.

Process features

In addition to the features of the tools, consider the features of the development process. For example, interfering with development is a typical mistake. Let's see what other features should be considered and what the security team should pay attention to.

To avoid disrupting development and release dates, create different rules and different show stoppers (criteria for stopping the build when vulnerabilities are present) for different environments. For example, we know that the current branch is going to the development stand or UAT, so we do not stop it and say:

- You have vulnerabilities here, you will not go anywhere further!

At this point, it is important to tell the developers that there are security issues worth paying attention to.

The presence of vulnerabilities is not an obstacle to further testing, whether manual or integration. On the other hand, we need to raise the security of the product somehow, and to make sure developers do not ignore what security finds. So sometimes we do this: when the build rolls out to the development environment, we simply notify development:

- Guys, you have problems, please pay attention to them.

At the UAT stage we show the vulnerability warnings again, and at the stage of release to production we say:

- Guys, we warned several times, you did nothing - we won’t let you go with this.
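
The escalation described above (notify on dev, warn on UAT, block before production) can be sketched as a small policy table. This is a minimal illustration, not the speaker's implementation: the environment names, the severity scale and the `BLOCK_FROM` thresholds are all assumptions.

```python
from dataclasses import dataclass

SEVERITY_ORDER = ["low", "medium", "high", "critical"]

# Hypothetical policy: the lowest severity that stops the build per environment.
# None means "never stop, only warn".
BLOCK_FROM = {"dev": None, "uat": None, "prod": "high"}


@dataclass
class Finding:
    title: str
    severity: str


def evaluate(findings, environment):
    """Return (action, findings that triggered it) for the target environment."""
    threshold = BLOCK_FROM[environment]
    if threshold is not None:
        limit = SEVERITY_ORDER.index(threshold)
        blocking = [f for f in findings if SEVERITY_ORDER.index(f.severity) >= limit]
        if blocking:
            return "block", blocking
    # Nothing blocks here: warn if there is anything to show, otherwise pass.
    return ("warn", list(findings)) if findings else ("pass", [])


findings = [
    Finding("SQL injection in /search", "critical"),
    Finding("Verbose error page", "low"),
]
for env in ("dev", "uat", "prod"):
    action, items = evaluate(findings, env)
    print(env, action, [f.title for f in items])
```

With this policy the same findings only produce a warning on dev and UAT, and the critical one blocks the release to production.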

When it comes to code and dynamic analysis, show and warn only about vulnerabilities in the features and code that were just written. If a developer moved a button 3 pixels and we tell him that he has an SQL injection there that urgently needs fixing, that is wrong. Look only at what is being written now and at the change that is coming into the application.
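
One way to picture "look only at the change" is to filter scanner findings by the lines touched in the current change. The sketch below assumes the changed lines have already been extracted from a diff; the file names, the finding fields and the `in_changed_code` helper are hypothetical.

```python
def in_changed_code(finding, changed_lines):
    """True if the finding sits on a line touched by the current change."""
    return finding["line"] in changed_lines.get(finding["file"], set())


# Hypothetical input: changed line numbers per file, e.g. parsed from a diff.
changed_lines = {"ui/button.css": {12}, "api/search.py": {40, 41, 42}}

# Hypothetical scanner output for the whole code base.
findings = [
    {"file": "api/search.py", "line": 41, "rule": "sql-injection"},
    {"file": "api/legacy.py", "line": 7, "rule": "sql-injection"},
]

# Report only what the developer just wrote; the rest goes to the backlog.
to_report = [f for f in findings if in_changed_code(f, changed_lines)]
backlog = [f for f in findings if not in_changed_code(f, changed_lines)]
print(len(to_report), "to report now,", len(backlog), "for the backlog")
```

The old finding in `api/legacy.py` does not disappear; it simply does not block the person who only moved a button.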

Suppose we have a functional defect, something the application should not do: money is not transferred, clicking a button does not take you to the next page, or the goods do not load. Security defects are the same kind of defects, only in terms of security rather than application behavior.

Not all software quality issues are security issues. But all security issues are related to software quality. Sherif Mansour, Expedia.

Since vulnerabilities are defects like any other, they should live in the same place as all other development defects. So forget about reports and scary PDFs that no one reads.

When I worked for a development company, I got a report from static analysis tools. I opened it, was horrified, made coffee, leafed through 350 pages, closed it and went back to work. Big reports are dead reports. Usually they go nowhere: emails are deleted, forgotten or lost, or the business says that it accepts the risks.

What to do? We just transform the confirmed defects that we found into a form convenient for development, for example, add them to the backlog in Jira. We prioritize and eliminate defects in order of priority along with functional defects and test defects.
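
As a rough sketch of "turn confirmed defects into backlog items", the function below builds the JSON body for creating an issue through Jira's REST API. The project key, the priority mapping and the finding fields are assumptions; priority names in particular depend on how a given Jira instance is configured, and the actual HTTP call is left out.

```python
import json

# Assumed mapping from scanner severity to Jira priority names.
PRIORITY = {"critical": "Highest", "high": "High", "medium": "Medium", "low": "Low"}


def to_issue(finding, project_key="APP"):
    """Build an issue payload from a confirmed finding (hypothetical fields)."""
    return {
        "fields": {
            "project": {"key": project_key},
            "issuetype": {"name": "Bug"},
            "summary": f"[security] {finding['rule']} in {finding['file']}",
            "description": (
                f"{finding['description']}\n\n"
                f"Location: {finding['file']}:{finding['line']}"
            ),
            "priority": {"name": PRIORITY[finding["severity"]]},
            "labels": ["security"],
        }
    }


finding = {
    "rule": "sql-injection",
    "file": "api/search.py",
    "line": 41,
    "severity": "critical",
    "description": "User input is concatenated into an SQL query.",
}
issue = to_issue(finding)
print(json.dumps(issue, indent=2))
```

Filing the defect as an ordinary bug with a `security` label keeps it in the same queue, with the same prioritization, as functional defects.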

Static Analysis - SAST

This is code analysis for vulnerabilities, but it is not the same as SonarQube. We check not only patterns or style. The analysis uses a number of approaches: the vulnerability tree, DataFlow, analysis of configuration files. All of this relates directly to the code.

Advantages of the approach: vulnerabilities in the code are identified at an early stage of development, when there are no stands and no finished tool yet, and incremental scanning is possible: scanning only the section of code that has changed, only the feature we are working on now, which reduces scanning time.

Cons: the languages you need may not be supported.

Necessary integrations that the tools should have, in my subjective opinion:

- CI tools: Jenkins, TeamCity and GitLab CI.

- Development environment: IntelliJ IDEA, Visual Studio. It is more convenient for developers not to dig into an unfamiliar interface that they have to memorize, but to see all the necessary integrations and the vulnerabilities found right at their workplace, in their own development environment.

- Code review: SonarQube and manual review.

- Defect trackers: Jira and Bugzilla.

The picture shows some of the best representatives of static analysis.

It’s not the tools that are important, but the process, so there are Open Source solutions that are also good for running the process.

Open Source SAST tools will not find a huge number of vulnerabilities or complex DataFlow issues, but you can and should use them when building the process. They help you understand how the process will be built, who will respond to bugs, whom to report them to and who is accountable. If you want to go through the initial stage of building security into your code, use Open Source solutions.

How can this be integrated if you are at the very beginning and have nothing: no CI, no Jenkins, no TeamCity? Let's look at integration into the process.

VCS Integration

If you have Bitbucket or GitLab, you can integrate at the version control system level.

By event: pull request, commit. You scan the code and show in the build status whether the security check passed or failed.

Feedback. Of course, feedback is always needed. If you just did your work on the security side, put the results in a box and told no one, and then dumped a pile of bugs at the end of the month, that is neither right nor good.

Integration with code review

At one point, on a number of important projects, we set a technical AppSec user as the default reviewer. Depending on whether errors were found in the new code, the reviewer sets the pull request status to “accept” or “need work”: either everything is OK, or changes are needed, with links to exactly what to fix. For integration with the version that goes to production, we enabled a merge ban if the security check did not pass. We made this part of manual code review, and the other participants could see the security status for this particular process.

SonarQube Integration

Many teams have a quality gate for code quality. It is the same here: you can create the same kind of gates just for SAST tools. The interface is the same, the quality gate is the same, only it is called a security gate. And if you already have a process built around SonarQube, you can easily integrate everything there.

CI Integration

Here, too, everything is quite simple:

- At the same level as autotests and unit tests.

- Separation by development stage: dev, test, prod. Different rule sets or different fail conditions can be applied: stop the build or do not stop it.

- Synchronous / asynchronous start. We either wait for the security tests to finish or we do not. In the latter case we just launch them and move on, and later receive a status saying that everything is good or bad.
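
The difference between a synchronous and an asynchronous start can be shown with a toy pipeline. In this sketch `security_scan` is a stand-in for a real scanner call, and the step names are made up; a real CI system would express the same idea with blocking and non-blocking stages.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def security_scan(target):
    """Stand-in for a real scanner call; returns a status string."""
    time.sleep(0.1)
    return "passed"


def run_pipeline(synchronous):
    """Return the order in which the pipeline steps complete."""
    log = []
    with ThreadPoolExecutor(max_workers=1) as pool:
        scan = pool.submit(security_scan, "build-42")
        if synchronous:
            log.append(f"scan {scan.result()}")  # wait before moving on
            log.append("deploy to test")
        else:
            log.append("deploy to test")  # move on immediately
            log.append(f"scan {scan.result()}")  # pick up the status later
    return log


print(run_pipeline(synchronous=True))
print(run_pipeline(synchronous=False))
```

The synchronous variant suits gates that must stop a release; the asynchronous one suits long scans whose result only needs to reach the team as a notification.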

This is all in a perfect pink world. Real life is not like that, but we strive for it. The result of security checks should look like the results of unit tests.

For example, we took a large project and decided to scan it with SAST. We pushed the project through SAST, it gave us 20,000 vulnerabilities, and by executive decision we accepted that this is fine. The 20,000 vulnerabilities are our technical debt. We put the debt in a box and slowly rake through it, filing bugs in the defect tracker. We hire a company, do it all ourselves, or get help from Security Champions, and the technical debt shrinks.

All newly emerging vulnerabilities in new code should be fixed just like failures in unit tests or autotests. Roughly speaking: the build started and ran, and two tests and two security tests failed. OK: we went and looked at what happened, fixed one thing, fixed the other, ran it again, and everything was fine: no new vulnerabilities, no failed tests. If the task goes deeper and needs careful study, or fixing the vulnerability affects large layers of what lies under the hood, a bug is filed in the defect tracker, prioritized and fixed. Unfortunately, the world is not perfect and tests sometimes fail.
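
Separating the accepted technical debt from newly introduced vulnerabilities is commonly done with a baseline of finding fingerprints. The sketch below is one possible scheme, not what any particular SAST product does: the fingerprint ignores line numbers so that code which merely moved is not reported as new, and the finding fields are assumptions.

```python
import hashlib


def fingerprint(finding):
    """Stable ID that ignores line numbers, so moved code is not 'new'."""
    key = f"{finding['rule']}|{finding['file']}|{finding['snippet'].strip()}"
    return hashlib.sha256(key.encode()).hexdigest()


def split_by_baseline(findings, baseline):
    """Split findings into (new, known technical debt)."""
    new = [f for f in findings if fingerprint(f) not in baseline]
    debt = [f for f in findings if fingerprint(f) in baseline]
    return new, debt


# First scan of the legacy project: everything found becomes the baseline.
first_scan = [
    {"rule": "sql-injection", "file": "legacy.py", "line": 10,
     "snippet": "cur.execute(q + name)"},
]
baseline = {fingerprint(f) for f in first_scan}

# Later scan: the old finding moved down two lines, and one new finding appeared.
later_scan = [
    {"rule": "sql-injection", "file": "legacy.py", "line": 12,
     "snippet": "cur.execute(q + name)"},
    {"rule": "xss", "file": "views.py", "line": 5,
     "snippet": "return '<b>' + user + '</b>'"},
]
new, debt = split_by_baseline(later_scan, baseline)
print(len(new), "new,", len(debt), "known")
```

Only the `new` list should fail the build like a broken unit test; the `debt` list is worked off through the defect tracker.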

An example of a security gate is an analogue of a quality gate, by the presence and number of vulnerabilities in the code.

We integrate with SonarQube - the plugin is installed, everything is very convenient and cool.

Development environment integration

Integration Capabilities:

- Starting a scan from the development environment before commit.

- View results.

- Analysis of the results.

- Synchronization with the server.

It looks something like getting results from the server.

In our IntelliJ IDEA development environment an additional item simply appears, reporting the vulnerabilities detected during the scan. You can edit the code right away and see recommendations and the Flow Graph. All of this is at the developer's workplace, which is very convenient: there is no need to follow other links and look at anything extra.

Open source

This is my favorite topic. Everyone uses Open Source libraries: why write a pile of hacks and reinvent the wheel when you can take a ready-made library in which everything is already implemented?

Of course, this is true, but libraries are also written by people, they carry certain risks too, and vulnerabilities in them are reported periodically, or constantly. So the next step in Application Security is the analysis of Open Source components.

Open Source Analysis - OSA

The tool includes three large steps.

Search for vulnerabilities in libraries. For example, the tool knows that we use a certain library and that CVE or bug trackers list vulnerabilities affecting this version of it. When you try to use it, the tool warns you that the library is vulnerable and advises you to use a different version without those vulnerabilities.

License compliance analysis. This is not very popular with us yet, but if you work with other countries, you can periodically get a slap on the wrist for using an open source component that must not be used or modified. Under the library's license we may not be allowed to do so. Or, if we modified it and use it, we must publish our code. Of course, no one wants to publish the code of their products, but you can protect yourself from this too.

Analysis of components used in the production environment. Imagine a hypothetical situation: we have finally finished development and released the very latest version of our microservice to production. It lives there happily for a week, a month, a year. We do not rebuild it, we do not run security checks, everything seems fine. But suddenly, two weeks after the release, a critical vulnerability is published in an Open Source component that we use in this build, in production. If we do not record what we use and where, we simply will not see this vulnerability. Some tools can monitor vulnerabilities in libraries that are currently used in production. This is very useful.
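
The production scenario above comes down to keeping an inventory of deployed components and re-checking it whenever a new advisory arrives. Here is a minimal sketch with made-up data: the library names, versions, advisory ID and the simple dotted-version comparison are all assumptions, and real tools use much richer version and advisory models.

```python
def parse(version):
    """Turn '2.9.10' into (2, 9, 10) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


# Hypothetical advisory feed: library -> (first fixed version, advisory id).
ADVISORIES = {"example-json": ("2.9.10", "EXAMPLE-2019-0001")}

# Hypothetical inventory of what is deployed in production.
DEPLOYED = {
    "payments-service": {"example-json": "2.9.8", "example-http": "4.5.1"},
    "catalog-service": {"example-json": "2.10.0"},
}


def affected(deployed, advisories):
    """List deployed components older than the first fixed version."""
    hits = []
    for service, libs in deployed.items():
        for name, version in libs.items():
            if name in advisories:
                fixed_in, advisory = advisories[name]
                if parse(version) < parse(fixed_in):
                    hits.append((service, name, version, advisory, fixed_in))
    return hits


for hit in affected(DEPLOYED, ADVISORIES):
    print("%s uses %s %s, affected by %s, fixed in %s" % hit)
```

Without the `DEPLOYED` inventory there is nothing to match a new advisory against, which is exactly the point about recording what is used and where.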

Opportunities:

- Different policies for different stages of development.

- Monitoring of components in the production environment.

- Library control within the organization's perimeter.

- Support for various build systems and languages.

- Analysis of Docker images.

A few examples of leaders in the field of Open Source analysis.

The only free one is OWASP's Dependency-Check. You can turn it on at the early stages and see how it works and what it supports. Most of these are cloud products, or on-premise ones that still go out to the Internet for their database. They do not send your libraries to their server, only hashes or other computed values and fingerprints, to receive information about vulnerabilities.
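
To illustrate why sending a hash is different from sending the library itself, here is a minimal sketch. Which hash function and which extra fingerprints a given product uses is an assumption here; SHA-256 is chosen only for the example.

```python
import hashlib


def fingerprint(artifact_bytes):
    """Digest that identifies an artifact without revealing its content."""
    return hashlib.sha256(artifact_bytes).hexdigest()


# Stand-in for the bytes of a real library file.
artifact = b"pretend this is the content of a .jar file"
lookup_key = fingerprint(artifact)

# Only the fixed-length digest would leave the perimeter, not the file.
print(len(artifact), "bytes stay local;", len(lookup_key), "hex characters are sent")
```

The vendor's service can match the digest against known library versions, but it cannot reconstruct the file from it.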

Process integration

Perimeter control of libraries that are downloaded from external sources. We have external and internal repositories. For example, Nexus is located inside Event Central, and we want to ensure that there are no vulnerabilities with the status “critical” or “high” inside our repository. You can configure proxying using the Nexus Firewall Lifecycle tool so that such vulnerabilities are cut off and do not get into the internal repository.

Integration in CI. At the same level as autotests and unit tests, with separation by development stage: dev, test, prod. At each stage you can download any libraries and use anything, but if there is something serious with “critical” status, it may be worth drawing attention to it at the stage of release to production.

Integration with artifact repositories: Nexus and JFrog.

Integration into the development environment. The tools you choose should integrate with development environments. The developer should have access to the scan results from his workplace, or be able to scan and check the code for vulnerabilities before committing to the VCS.

Integration in CD. This is a cool feature that I really like and have already talked about: monitoring the emergence of new vulnerabilities in the production environment. It works something like this.

We have public component repositories, the tools outside, and our internal repository. We want it to contain only trusted components. When proxying a request, we verify that the downloaded library has no vulnerabilities. If it falls under certain policies, which we define and always agree with development, we do not download it and a rejection comes back asking to use another version. So if there is something really critical and bad in the library, the developer will not get it at the installation stage: let him use a higher or lower version.

- During the build, we verify that no one slipped in anything bad, that all components are safe and that no one brought anything dangerous on a flash drive.

- In the repository we have only trusted components.

- When deploying, we once again check that the package itself (war, jar, DL or Docker image) complies with the policy.

- When we go to production, we monitor what is happening in the production environment: whether critical vulnerabilities appear or not.

Dynamic Analysis - DAST

Dynamic analysis tools are fundamentally different from everything discussed so far. They imitate a user working with the application. If it is a web application, we send requests that simulate the client: click buttons on the front end and submit artificial data through forms (quotation marks, brackets, characters in different encodings) to see how the application works and handles external data.

The same system lets you check for template vulnerabilities in Open Source. Since DAST does not know which Open Source components we use, it simply throws “malicious” patterns and analyzes the server responses:
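
The idea of feeding artificial data into a form and watching the response can be shown on a toy scale. The sketch below does not touch the network: the two handler functions stand in for an application, the probe list is a tiny assumed sample, and checking whether a probe comes back unescaped is only one of many checks a real scanner performs.

```python
import html

# A tiny, assumed sample of probe strings: quotes and markup.
PROBES = ["'", '"', "<script>alert(1)</script>", "<img src=x>"]


def vulnerable_app(query):
    """Toy handler that echoes input without escaping (deliberately unsafe)."""
    return f"<p>Results for {query}</p>"


def safe_app(query):
    """Toy handler that escapes input before echoing it."""
    return f"<p>Results for {html.escape(query)}</p>"


def scan(handler):
    """Return the probes that come back unescaped in the response."""
    return [probe for probe in PROBES if probe in handler(probe)]


print("vulnerable_app reflects:", scan(vulnerable_app))
print("safe_app reflects:", scan(safe_app))
```

The scanner knows nothing about the code behind the handler; it judges the application only by how it responds, which is what makes this approach different from static analysis.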

- Yeah, there is a problem of deserialization, but not here.

This carries big risks, because if you run this security test on the same stand that testers work with, unpleasant things can happen.

- High load on the network / application server.

- No integrations.

- The ability to change the settings of the analyzed application.

- There is no support for the necessary technology.

- The complexity of the settings.

We had a situation when we finally launched AppScan: we had fought for access to the application for a long time, got 3 accounts and were delighted that at last we would check everything! We started the scan, and the first thing AppScan did was get into the admin panel, press all the buttons and change half of the data, and then it killed the server completely with its mailform requests. Development and testing said:

- Guys, are you kidding?! We gave you the accounts, and you took down the stand!

Consider the possible risks. Ideally, prepare a separate stand for security testing, isolated at least to some degree from the rest of the environment, and preferably check the admin panel manually. That is a pentest, part of the remaining share of effort that we are not considering here.

Keep in mind that this can double as load testing. At the first stage you can run the dynamic scanner in 10-15 threads and see what happens, but as practice shows, usually nothing good.

A few resources that we commonly use.

Burp Suite deserves a special mention: it is a “Swiss Army knife” for any security specialist. Everyone uses it, and it is very convenient. A new demo version of the enterprise edition is out now. Where it used to be just a standalone utility with plug-ins, the developers are finally building a large server from which several agents can be managed. This is cool, and I advise you to try it.

Process integration

Integration is quite straightforward: launch a scan after the application is successfully installed on the stand, and scan after successful integration testing.

If the integrations do not work, or there are stubs and mock functions, it is pointless: no matter what pattern we send, the server will still respond the same way.

- Ideally - a separate stand for testing.

- Before testing, record the login sequence.

- Test the administration system manually only.

Process

A few general words about the process as a whole and about how each tool works in particular. All applications are different: dynamic analysis works better for one, static analysis for another, Open Source analysis for a third, or pentests or something else entirely, for example WAF events.

Every process needs to be controlled.

To understand how the process works and where it can be improved, you need to collect metrics from everything you can get your hands on, including production metrics and metrics from tools and defect trackers.

Any data is helpful. You need to look at different breakdowns to see where a given tool works best and where exactly the process sags. It may be worth looking at development response time to see where the process can be improved in terms of time. The more data you have, the more breakdowns you can build, from the top level down to the details of each process.
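
As an illustration of such breakdowns, the sketch below computes two per-tool metrics from a defect tracker export: the share of false positives and the median time to fix. The record format and the numbers are invented for the example.

```python
from collections import defaultdict
from statistics import median

# Hypothetical export from a defect tracker.
defects = [
    {"tool": "SAST", "status": "fixed", "days_to_fix": 3},
    {"tool": "SAST", "status": "false_positive", "days_to_fix": None},
    {"tool": "SAST", "status": "fixed", "days_to_fix": 9},
    {"tool": "DAST", "status": "fixed", "days_to_fix": 14},
    {"tool": "DAST", "status": "false_positive", "days_to_fix": None},
]


def metrics_by_tool(defects):
    """Group defects by tool and compute simple process metrics."""
    grouped = defaultdict(list)
    for defect in defects:
        grouped[defect["tool"]].append(defect)
    report = {}
    for tool, items in grouped.items():
        fix_times = [d["days_to_fix"] for d in items if d["status"] == "fixed"]
        false_positives = sum(d["status"] == "false_positive" for d in items)
        report[tool] = {
            "false_positive_rate": false_positives / len(items),
            "median_days_to_fix": median(fix_times) if fix_times else None,
        }
    return report


report = metrics_by_tool(defects)
for tool, values in report.items():
    print(tool, values)
```

The same grouping can be repeated by team, by product or by development stage to see where the process sags.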

Since all static and dynamic analyzers have their own APIs, launch methods and principles, and some have schedulers while others do not, we are writing a tool called AppSec Orchestrator, which provides a single entry point to the whole process and lets you manage it from one place.

Managers, developers and security engineers have one entry point from which they can see what is running, configure and launch a scan, get the scan results and set requirements. We try to get away from paperwork and translate everything into the human-readable form that development uses: Confluence pages with status and metrics, defects in Jira or other defect trackers, or embedding into the synchronous / asynchronous process in CI / CD.
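
The "single entry point" idea can be pictured as a thin layer that hides each analyzer behind one common interface. This is only a sketch of the pattern, not the design of AppSec Orchestrator: the `Scanner` interface, the fake scanners and the result format are all hypothetical.

```python
from abc import ABC, abstractmethod


class Scanner(ABC):
    """Common interface that hides each tool's own API and launch method."""

    name: str

    @abstractmethod
    def scan(self, target):
        """Return a list of findings for the target."""


class FakeSast(Scanner):
    name = "sast"

    def scan(self, target):
        return [{"tool": self.name, "target": target, "rule": "sql-injection"}]


class FakeDast(Scanner):
    name = "dast"

    def scan(self, target):
        return []


class Orchestrator:
    """Single entry point: register tools once, run any subset by name."""

    def __init__(self):
        self._scanners = {}

    def register(self, scanner):
        self._scanners[scanner.name] = scanner

    def run(self, target, tools=None):
        selected = tools or list(self._scanners)
        return {name: self._scanners[name].scan(target) for name in selected}


orchestrator = Orchestrator()
orchestrator.register(FakeSast())
orchestrator.register(FakeDast())
results = orchestrator.run("payments-service")
print(results)
```

Because every tool returns findings in one format, the same results can feed Confluence pages, defect trackers or a CI step without tool-specific glue in each place.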

Key takeaways

Tools are not the main thing. Think the process through first, then introduce the tools. The tools are good but expensive, so you can start with the process and establish interaction and understanding between development and security. From the security side: there is no need to “stop” everything. From the development side: if there is something truly critical, it must be fixed rather than ignored.

Product quality is a common goal of both security and development. We are doing one thing, we are trying to ensure that everything works correctly and there are no reputational risks and financial losses. That is why we are promoting the approach to DevSecOps, SecDevOps in order to establish communication and make the product better.

Start with what you already have: requirements, architecture, partial checks, trainings, guidelines. You do not need to apply all the practices to all projects at once: move iteratively. There is no single standard, so experiment and try different approaches and solutions.

Put an equals sign between security defects and functional defects.

Automate everything that moves. Whatever does not move, move it and automate it. If something is done by hand, it is not a good part of the process. Perhaps it is worth revising and automating it as well.

If the security team is small, use Security Champions.

Perhaps what I have described will not suit you and you will come up with something of your own, and that is good. But choose tools based on the requirements of your process. Do not go by what the community says about this tool being bad and that one being good. On your product it may be exactly the opposite.

Tool Requirements.

- Low False Positive.

- Adequate analysis time.

- Ease of use.

- Availability of integrations.

- A clear product development roadmap.

- The ability to customize tools.

Yuri's report was chosen as one of the best at DevOpsConf 2018. To get acquainted with even more interesting ideas and practical cases, come to Skolkovo on May 27 and 28 for DevOpsConf, held as part of the RIT++ festival. Better yet, if you are ready to share your experience, apply to give a talk by April 21.