Video analytics harvesters: what the brain and machines do with our faces

The ability to see and quickly recognize faces is a superpower. We don't need to spend time analyzing wrinkles, folds and the oval of a face. Face recognition is instant and effortless. It is so easy that we don't realize how we do it.

Think about how similar all faces are: two eyes, a mouth, a nose, ears sticking out on the sides, almost always in the same arrangement. It is remarkable that we tell them apart with such ease.

We are “programmed” to recognize faces from birth, but now people have gone further and taught machines this skill. How will the widespread deployment of face recognition and identification systems affect society?

Pareidolia: automatic face search

People automatically pick out familiar images on any surface. Just three architectural elements of a building can be perceived as the face of a surprised duck. This is an example of pareidolia.

The word pareidolia comes from the Greek para ("beside", also implying deviation from the norm) and eidolon ("image"). It is the name of an optical illusion: perceiving an image or meaning where there is none. A face on a tree trunk or animal figures in the clouds are examples of pareidolia.

More of these photographs can be found on thingswithfaces.com.

We see the faces of people and animals in all kinds of geometric shapes. The whole emoji culture is built on this principle. :-)

The phenomenon of pareidolia translates easily into the language of algorithms. The artist duo Shinseungback Kimyonghun photographed clouds at the moments when they briefly formed human faces, using a script built on the OpenCV library.

Thatcher's Illusion: a systematic biological error

There is a biological bug that shows how important the face recognition skill is. Most of the objects around you, such as a chair, a table or a computer, are easy to see and identify correctly from any angle. Faces are the exception.

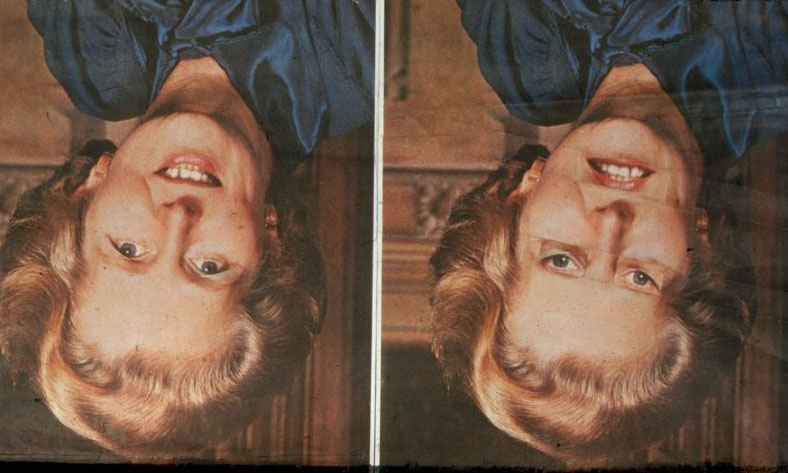

An inverted face triggers a glitch in the brain known as the Thatcher effect (or Thatcher illusion): local changes to a face are hard to detect when the portrait photo is upside down.

Turn the photo of Margaret Thatcher upside down and look at the result.

The first photo seems normal, but once you turn it over, the wrong position of the eyes and mouth immediately stands out. Humans and artificial neural networks perceive images in different ways. It is amazing that the “neural network” between our ears is so easy to fool.

Thatcher's illusion demonstrates some basic mechanisms by which our brain processes information. The brain reads a set of individual elements: a pair of eyes, a nose, a mouth, ears. Besides the characteristics of each feature, it also takes into account how the features are positioned relative to one another. In other words, a face is perceived as a whole system.

Therefore, when we are shown an inverted face, it is harder for the brain to evaluate the image as a whole: the information is "collected" separately for each element (the eyes are in place, the mouth looks like a mouth). But as soon as the face is shown upright, holistic perception switches back on and the problem becomes obvious: the familiar features are arranged in an unusual way.

Why is this important? Thanks to holistic perception, the human brain can recognize the smallest differences in facial features. One area of the cerebral cortex recognizes the face and determines the direction of the gaze, the amygdala and the insula analyze the facial expression, and a region of the prefrontal cortex together with the brain's reward system evaluates its attractiveness.

A bug as a feature: Chernoff faces

This peculiarity of human perception is used to analyze aggregated multidimensional data with the help of faces. In 1973, the American mathematician Herman Chernoff proposed using faces to reveal characteristic patterns and to study complex relationships among several variables.

In this method, the data are displayed as face pictograms: the relative values of the selected variables are represented by the shapes and sizes of individual features, such as nose length, the angle between the eyebrows or face width, up to 36 variables in total. The observer can then identify visual characteristics that are unique to each combination of values.

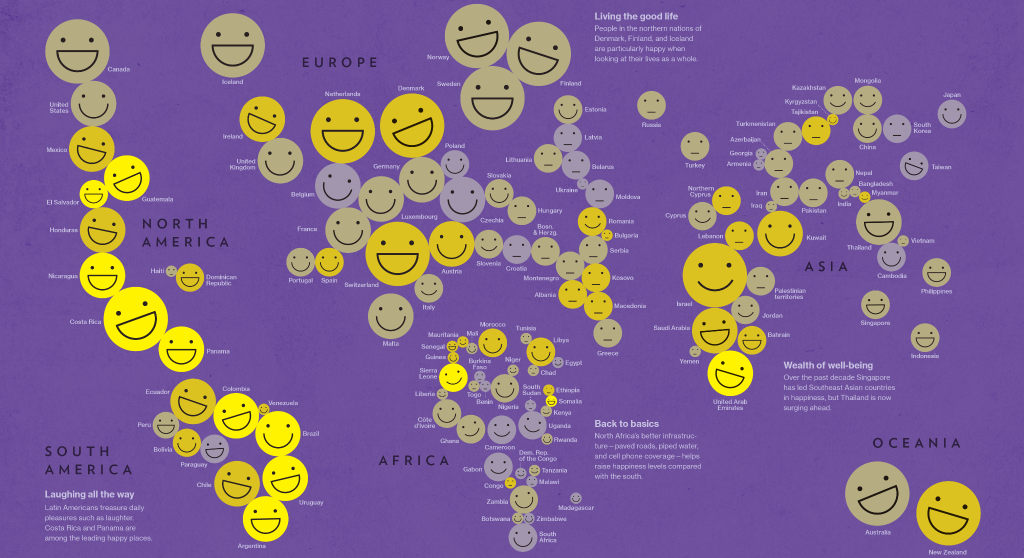

A quick glance at a chart made of faces shows whether the profiles differ significantly or coincide. A closer look at the facial features (each feature corresponds to a separate variable of the original data set) reveals exactly where the similarities and differences lie. For example, in the illustration above, sad and cheerful faces make the differences between countries easy to notice.
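The core of the technique, turning each row of a table into a set of facial-feature parameters, fits in a few lines. This is a minimal sketch under stated assumptions: four variables per row and an arbitrary assignment of columns to features (the `FEATURES` list is hypothetical, not Chernoff's original scheme). Each column is min-max scaled to [0, 1] so that a drawing routine, left out here, could turn the values into face width, mouth curvature and so on.

```python
from typing import Dict, List

# Hypothetical mapping: column i of the data drives FEATURES[i].
FEATURES = ["face_width", "nose_length", "eyebrow_angle", "mouth_curve"]


def to_face_params(rows: List[List[float]]) -> List[Dict[str, float]]:
    """Scale each column to [0, 1] (min-max) and assign it to a facial feature."""
    n_cols = len(rows[0])
    if n_cols > len(FEATURES):
        raise ValueError("more variables than available facial features")
    lows = [min(row[c] for row in rows) for c in range(n_cols)]
    highs = [max(row[c] for row in rows) for c in range(n_cols)]
    faces = []
    for row in rows:
        face = {}
        for c in range(n_cols):
            span = highs[c] - lows[c]
            # A column with no variation maps to the neutral midpoint 0.5.
            face[FEATURES[c]] = (row[c] - lows[c]) / span if span else 0.5
        faces.append(face)
    return faces


# Two hypothetical profiles (e.g. two countries) described by four variables.
profiles = [[10, 2, 5, 0.1], [20, 4, 5, 0.9]]
for face in to_face_params(profiles):
    print(face)
```

If the variable being compared drives `mouth_curve`, profiles with high values get smiling mouths and profiles with low values get sad ones, which is the kind of contrast a chart of countries relies on.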

Why a machine needs your face

Quick face recognition helps us pick up our child from kindergarten, choose a partner, and express emotions correctly and appropriately. But what happens when people transfer this ability to an artificial neural network?

The idea may provoke rejection. Not everyone is ready to accept technology that stores data, tracks movements, and analyzes purchases and emotions. The shift from simple video surveillance to personalized video analytics brings a significant increase in responsibility.

Today, algorithms such as DeepFace compare faces with accuracy close to that of humans. Nvidia's algorithm creates the faces of non-existent people in a few seconds. The faces on the collage above were generated by the StyleGAN neural network, trained on a data set of 70,000 images. They look frighteningly realistic.

Demonstration of the SearchFace algorithm

At first, Facebook's face recognition algorithm put people on alert, but then everyone got used to it (or left the social network). The FindFace service for searching people in VKontakte photos received mixed reviews and was used for bullying, yet the closure of the similar SearchFace project provoked a negative reaction from users: after all, if the data is available, let it be open to everyone.

Retailers install face recognition technology to prevent theft and to collect data on customers' age, gender and even emotions. Ultimately, the goal is to improve customer service and profit from it. Once customers see that the system benefits them personally, many will accept the new technology.

Given the growing number of "identity theft" cases (credit card and personal data fraud), consumers will prefer a system that identifies them correctly at the right moment.

Modern algorithms cope with poor lighting, low resolution and disguises such as glasses, wigs and several days' stubble. The systems work at tremendous speed, matching a face against a database of millions of people in just a second.

Some stores in the United States offer theft suspects a choice: agree to be photographed or face a formal criminal charge. A thief who agrees goes free but is banned from shopping there, and their photo is officially added to the database. The image files are encrypted and accessible only to the owner of the system.

Who profits from recognition

Most stores already have CCTV cameras. Video analytics does not require a hardware upgrade: you only need to connect a cloud service. With the Ivideon video analytics service, the barrier to entry is practically nonexistent. The solution costs from 1,700 rubles per camera, which makes the software accessible to any entrepreneur.

The main motive for retailers to use face recognition technology is to prevent theft. According to the National Retail Federation, in the United States alone about 1.33% of all goods were lost in 2017, largely to theft, which amounts to as much as $46.8 billion.

Face recognition technology reduces the number of store thefts by more than 30%.

The amount of damage is often influenced by secondary factors: employee negligence, a poorly trained security service, attempts to cut costs. Cameras and cloud technology are meant to solve these and other problems.

A face recognition system speeds up work with blacklists: it compares a customer's photo against a database of untrustworthy persons and, if there is a match, sends a warning to the security guards.
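Blacklist matching is commonly implemented as a similarity search over face embeddings. The sketch below is a minimal, hypothetical version: it assumes some recognition model has already converted each face into a fixed-length vector, compares the probe vector against the stored vectors with cosine similarity, and raises an alert above an arbitrary threshold of 0.8. The IDs, vectors, threshold and the `notify_guards` stand-in are made up for illustration.

```python
import math
from typing import Dict, List, Optional, Tuple

MATCH_THRESHOLD = 0.8  # hypothetical cutoff; real systems tune it on their own data


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def check_blacklist(
    probe: List[float], blacklist: Dict[str, List[float]]
) -> Optional[Tuple[str, float]]:
    """Return (person_id, score) of the best match at or above the threshold, else None."""
    best_id, best_score = None, -1.0
    for person_id, stored in blacklist.items():  # linear scan over all entries
        score = cosine_similarity(probe, stored)
        if score > best_score:
            best_id, best_score = person_id, score
    if best_id is not None and best_score >= MATCH_THRESHOLD:
        return best_id, best_score
    return None


def notify_guards(match: Optional[Tuple[str, float]]) -> str:
    """Hypothetical stand-in for sending an alert to the security team."""
    if match is None:
        return "no match"
    return f"ALERT: possible match with {match[0]} (score {match[1]:.2f})"


# Hypothetical 3-dimensional embeddings; real models use hundreds of dimensions.
blacklist = {
    "suspect-17": [0.9, 0.1, 0.4],
    "suspect-42": [0.1, 0.9, 0.2],
}
print(notify_guards(check_blacklist([0.88, 0.12, 0.41], blacklist)))
print(notify_guards(check_blacklist([0.0, 0.0, 1.0], blacklist)))
```

A linear scan like this is fine for a short store list; systems that match against millions of faces in about a second typically rely on approximate nearest-neighbour indexes instead.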

Analytical software greatly enhances store security. An experienced thief can spot the cameras' "blind spots". In that case, a guard can photograph the suspect with a phone and then check whether that person is in the database.

Brands have long used mobile marketing: they send SMS messages and push notifications and show targeted ads. For brick-and-mortar retail, recognition systems provide the same capabilities that cookies gave online sellers.

The same platform that identifies thieves helps sellers figure out which store displays attract customers best. The recognition system can also identify a VIP client right at the store entrance, and with data from the CRM the salesperson can quickly make that customer an attractive offer.

In Seoul’s International Financial Center, cameras on information boards determine a person's age and gender in real time and show advertising matched to those parameters.

Customer information becomes a powerful tool for increasing sales and assessing the needs of the audience. Cameras can tailor the video ads shown to a particular visitor to their gender, age and emotional state, and can also supply the data for measuring advertising effectiveness.
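Tailoring ads to a detected audience segment and measuring their effect could look roughly like the following sketch. It assumes the camera pipeline already produces an (age group, gender, emotion) estimate for each viewer; the ad catalogue `AD_RULES`, the segment labels and the notion of a "conversion" are hypothetical, and effectiveness is reduced to a simple conversion rate.

```python
from collections import defaultdict
from typing import Dict, Tuple

Segment = Tuple[str, str, str]  # (age_group, gender, emotion) from the camera

# Hypothetical catalogue: which clip to show for a detected segment.
AD_RULES: Dict[Segment, str] = {
    ("18-30", "female", "happy"): "summer_collection",
    ("18-30", "male", "neutral"): "sneakers_promo",
    ("50+", "female", "neutral"): "loyalty_card_offer",
}
DEFAULT_AD = "brand_video"  # shown when no rule matches the segment

impressions: Dict[str, int] = defaultdict(int)
conversions: Dict[str, int] = defaultdict(int)


def choose_ad(segment: Segment) -> str:
    """Pick the clip for this viewer and count one showing of it."""
    ad = AD_RULES.get(segment, DEFAULT_AD)
    impressions[ad] += 1
    return ad


def record_conversion(ad: str) -> None:
    """Count a conversion, e.g. the viewer later bought the promoted item."""
    conversions[ad] += 1


def conversion_rate(ad: str) -> float:
    """Share of showings that led to a conversion (0.0 if never shown)."""
    shown = impressions.get(ad, 0)
    return conversions.get(ad, 0) / shown if shown else 0.0
```

A rule table keeps the targeting logic transparent; the same impression and conversion counters work unchanged if the rules are later replaced by a learned model.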

The opportunities described above often sound like annoying advertising hype. Claims about “profit growth” and “audience needs” accompany every IT tool on the market, from ERP systems to electronic price tags. Is there anything more to face recognition systems than marketing talk about artificial intelligence and future technologies? We will answer this question with examples of real systems in operating stores.

“Work in the field”: who recognizes faces in real conditions

7-Eleven is the largest retail chain in the world, with Seven-Eleven Japan managing more than 36,000 small stores in 18 countries. The company recently installed the software in 11,000 of its stores. The chain uses face recognition and behavior analysis technology to identify loyalty card holders, monitor customer traffic and track stock levels in warehouses.

Saks is a century-old chain of premium stores currently owned by Hudson's Bay Company, one of the oldest companies in the world (founded in 1670). Saks uses video analytics mainly to prevent theft: the software checks photos of theft suspects against a database of known shoplifters. The cameras are networked, so the results can be viewed at Saks headquarters in New York.

According to The Guardian, premium stores and hotels in Europe regularly use face recognition technology to spot VIPs and celebrities so they can provide them with the most comfortable conditions.

In the US, the CaliBurger chain uses face recognition technology in its loyalty program. Its interactive kiosk “recognizes” customers, remembers their orders, suggests favorite dishes and accepts payment with face identification.

The system lowers the barrier to joining the bonus program for older people, who may find mobile apps, bonus points and credit cards difficult to use.

Face recognition systems are widely used in Asia, especially in China, where people use them to pay for food, withdraw cash from ATMs, or even take out loans. In China, face recognition accuracy exceeds that of the human eye, partly because of the country's large-scale transition from 2D to 3D recognition.

2D recognition algorithms analyze two-dimensional images stored in databases, while 3D recognition analyzes reconstructed three-dimensional images and achieves much higher accuracy. In China, face scans let you make purchases (for example, pay for orders at KFC), make payments and enter buildings.

To pay with Alipay, you need to smile so that the recognition system can tell it is dealing with a living person rather than a photograph. Alipay is claimed to be impossible to fool: changing hair color, wearing makeup or putting on a wig makes no difference. The system relies on a set of distinctive features that capture the geometry of the face and the positions of certain points on it.
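The idea of a signature built from face geometry can be illustrated with a small sketch. It assumes a landmark detector has already returned (x, y) coordinates for five hypothetical points, and it uses all pairwise distances divided by the distance between the eyes. This illustrates the general principle only; it is not Alipay's algorithm. Requires Python 3.8+ for `math.dist`.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# Hypothetical landmark set; real detectors return dozens of points.
LANDMARKS = ["left_eye", "right_eye", "nose_tip", "mouth_left", "mouth_right"]


def geometry_signature(points: Dict[str, Point]) -> List[float]:
    """Pairwise landmark distances divided by the inter-eye distance.

    Distances do not change when the face is shifted in the frame, and dividing
    by the eye distance removes the effect of image scale. The signature ignores
    texture entirely, so in this simplified model hair colour or make-up
    cannot change it.
    """
    eye_dist = math.dist(points["left_eye"], points["right_eye"])
    return [
        math.dist(points[a], points[b]) / eye_dist
        for a, b in combinations(LANDMARKS, 2)
    ]


def signature_distance(s1: List[float], s2: List[float]) -> float:
    """Euclidean distance between two signatures; smaller means more similar."""
    return math.dist(s1, s2)


face = {
    "left_eye": (30, 40), "right_eye": (70, 40), "nose_tip": (50, 60),
    "mouth_left": (35, 80), "mouth_right": (65, 80),
}
# The same face photographed twice as close and shifted within the frame.
closer = {name: (2 * x + 5, 2 * y + 7) for name, (x, y) in face.items()}
print(signature_distance(geometry_signature(face), geometry_signature(closer)))
```

Because every distance is normalized by the inter-eye distance, the same face photographed closer or farther away yields essentially the same signature, while a face with different proportions yields a different one.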

Conclusions

Western companies and China are investing heavily in face recognition technology, and implementing such projects in Russia is only a matter of time. Large commercial companies already understand the benefits, including the economic ones. If we consider face recognition as a product, it is important to understand that each business segment has its own specifics, including price. The larger the enterprise, the more cameras and analytics modules it may need. Solutions for large businesses are always complex custom projects, and customization costs extra. Medium and small businesses can easily make do with one camera and a connected face recognition module. In that case, the cost of the solution is comparable to that of cloud video surveillance.