Modular data centers in the service of the Large Hadron Collider

Over the last twenty years, data centers have been springing up like mushrooms after rain, which is understandable. Alongside the growth in numbers, the industry is also changing qualitatively: new forms and new approaches to building the infrastructure keep appearing. One of these innovations is the modular data center. The military were the first to appreciate solutions based on a standardized container, and they pioneered the use of data centers built on a modular basis. The ability to deploy computing power rapidly and to store data in the most remote corners of the Earth became a panacea for the military in a world where the amount of information generated grows every day. Thanks to Sun Microsystems, modular data centers became available to civilian customers in 2006. But who, one might ask, would buy them? In the fierce competition among IT market players for raw hardware performance, the advantages of modularity are, in most civilian use cases, mercilessly cancelled out by a whole range of drawbacks that this very modularity brings. Yet, as time has shown, things are not so simple, and the product has found its customers. One of them, surprising as it may be, is CERN. The organization's brainchild, the Large Hadron Collider, will acquire a pair of new modular data centers. A strange decision? This and more is discussed below.

Container: everything ingenious is simple!

As far as we know, the military were the first to implement the container idea: stuff a metal frame with server racks, integrate a cooling system, and you have a ready-made solution. But for all the usefulness of such a data container, there are questions about its flexibility and about how efficiently it is filled. How well does the standard installed hardware match the tasks at hand, given that there is very little room to customize the server racks? On the one hand, the container form factor leaves the network administrator no room to maneuver, literally or figuratively. On the other hand, swapping the contents for more powerful hardware can easily overload the container's auxiliary systems: power supply and cooling. Even with the ability to easily increase server capacity by delivering more and more containers,

CERN selects a modular data center

“By the end of 2019, we plan to install two new data centers, each of which will serve only its own LHC detector. The detector of the LHCb experiment will get six modules, and the detector of the ALICE experiment will be served by a data center of four containers. The need to expand the existing infrastructure was driven by the upgrade of these detectors. After the upgrade, the amount of data generated by the detectors will increase significantly,” said Niko Neufeld, deputy head of one of the CERN projects.

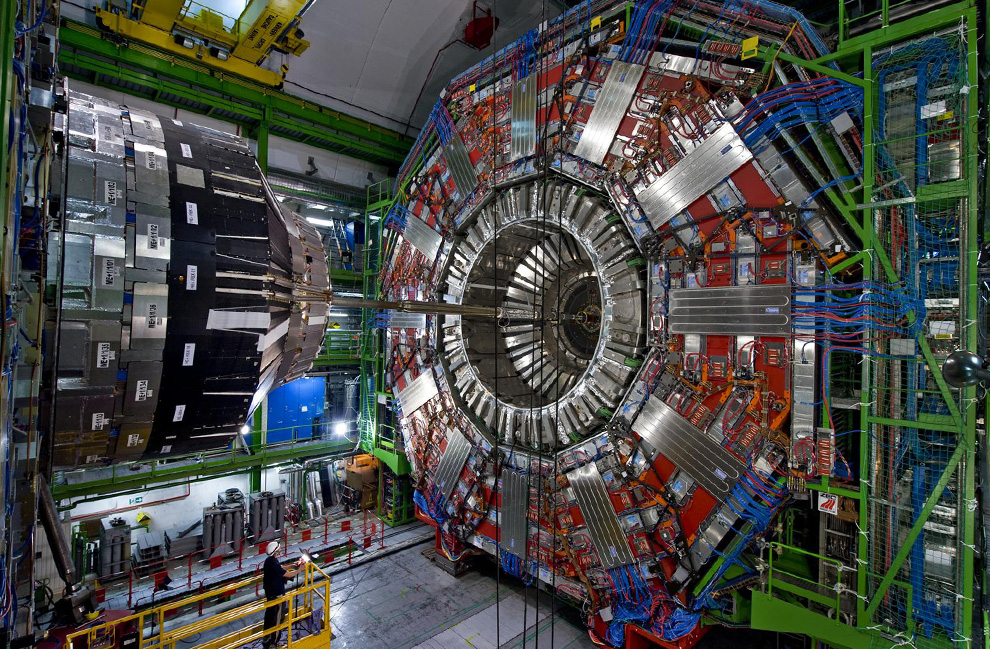

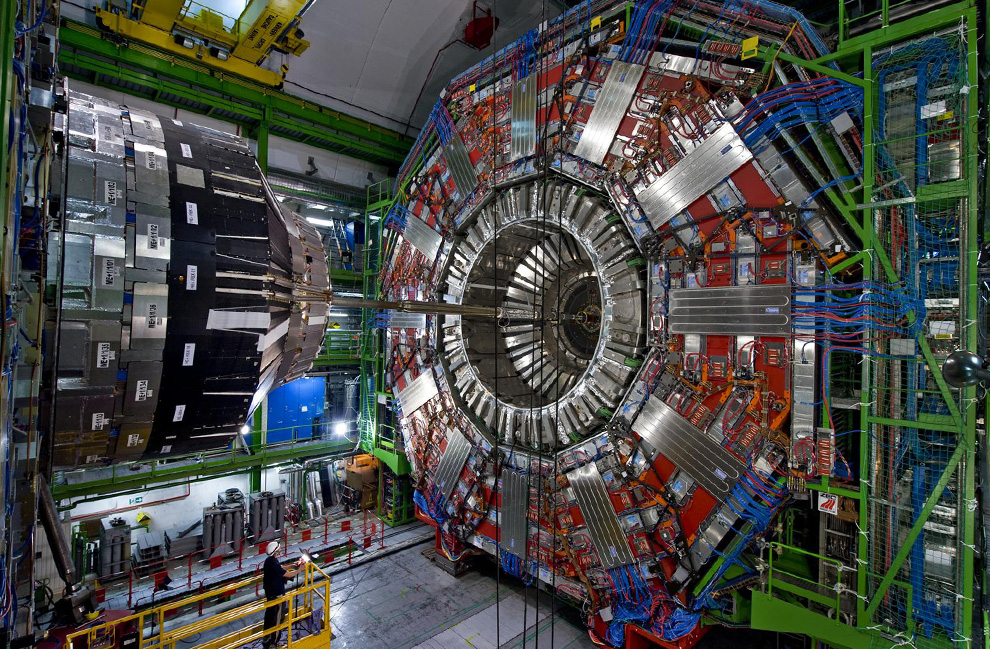

The LHC (Large Hadron Collider), a project under the auspices of CERN, is a colossal structure located at a depth of about 100 meters in the heart of Western Europe, on the territory of two states, France and Switzerland. With a diameter of 8.5 km and a circumference of about 27 km, the LHC can hardly be accused of being remote from civilization. The project is, in essence, the core of modern science, the engine of its fundamental branch. Moreover, the existing IT infrastructure serving the LHC meets all modern standards, including data centers and fiber-optic backbones that connect it to the whole world. So why did CERN's European nuclear physicists decide to expand the IT infrastructure with modular data centers?

At the moment, the collider's needs are served directly by as many as five data centers. Four of them are assigned to the detectors of the four main experiments: ATLAS, CMS, ALICE and LHCb. The fifth and largest is the central hub for processing and storing the data collected from the entire LHC complex.

“At the moment, the main detector of the LHCb experiment records events at a rate of 1 MHz; after the upgrade this rate will increase 40-fold, so imagine 40,000,000 events per second,” Niko continued.
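A quick sanity check of the figures in the quote: a 40-fold increase over 1 MHz gives 40 MHz, i.e. 40 million events per second, and the average spacing between consecutive events shrinks from 1 µs to 25 ns. The snippet is only an arithmetic illustration; the function name `upgraded_rate` is our own and not part of any CERN software.

```python
def upgraded_rate(rate_hz: float, factor: float) -> tuple[float, float]:
    """Return the new event rate (Hz) and the mean spacing between events (ns)."""
    new_rate = rate_hz * factor
    spacing_ns = 1e9 / new_rate  # 1 s = 1e9 ns
    return new_rate, spacing_ns


# 1 MHz today, 40x after the upgrade (figures from the quote above)
rate, spacing = upgraded_rate(1e6, 40)
print(f"{rate:,.0f} events/s, one every {spacing:.0f} ns")
```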

New times call for new equipment, so none of this is surprising. Growing data flows are a widespread trend, and upgrading the network infrastructure is nothing unusual, but why modular data centers? The answer was not long in coming.

“It's pure savings. What is specific about the detectors is that they generate data in short bursts. The amount of data produced in these bursts is known with very high accuracy, so we know exactly how much storage we need, and that volume will remain sufficient right up to the final phase of the experiment. Moreover, by placing the server modules as close as possible to the detectors, we reduced the length of the fiber-optic links needed to transmit 30 PB of data in a short period of time, and laying such links is quite expensive,” Niko explained.
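To get a feel for why link length and capacity matter, one can compute the average bandwidth needed to move 30 PB within a given window. The article only says "a short period of time", so the one-day and one-week windows below are hypothetical, as is the helper name `required_throughput_bps`; decimal petabytes are assumed.

```python
def required_throughput_bps(volume_bytes: float, window_s: float) -> float:
    """Average bit rate needed to move `volume_bytes` within `window_s` seconds."""
    if window_s <= 0:
        raise ValueError("window must be positive")
    return volume_bytes * 8 / window_s  # bytes -> bits, spread evenly over the window


PB = 10**15  # decimal petabyte, in bytes (assumption)

# Hypothetical transfer windows; the source gives no exact duration.
for label, seconds in [("1 day", 86_400), ("1 week", 7 * 86_400)]:
    tbps = required_throughput_bps(30 * PB, seconds) / 1e12
    print(f"30 PB in {label}: about {tbps:.2f} Tbit/s sustained")
```

Even the one-day case calls for a sustained rate of roughly 2.8 Tbit/s, which is why every meter of fiber saved between the detector and the servers adds up.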

With the scheme outlined by the CERN employee, the choice of infrastructure becomes clearer. The huge data flow generated by the LHC detectors will reach the primary processing and storage centers in the shortest possible time; then, while waiting for the next detector run, the collected data will gradually flow into the central data center without overloading the existing network infrastructure.
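The buffering idea can be illustrated with a toy model: during a burst, data lands in the local modules faster than the link to the central data center can carry it, and the backlog drains once the detector goes quiet. This is a minimal sketch under our own assumptions (hourly steps, a constant link capacity, made-up rates); `BurstBuffer` and `simulate` are hypothetical names, not CERN code.

```python
from dataclasses import dataclass


@dataclass
class BurstBuffer:
    """Toy model of a modular data center next to a detector: it absorbs a
    data burst locally and forwards it to the central data center over a
    link with a fixed capacity. All figures used with it are hypothetical."""

    link_rate: float  # TB the link may carry per hour
    stored: float = 0.0  # TB waiting in local storage
    peak: float = 0.0  # largest amount ever held locally

    def step(self, incoming: float, hours: float = 1.0) -> None:
        """Accept `incoming` TB, record the peak, then forward what the link allows."""
        self.stored += incoming
        self.peak = max(self.peak, self.stored)
        self.stored -= min(self.stored, self.link_rate * hours)


def simulate(burst_rate: float, burst_hours: int, link_rate: float) -> tuple[float, int]:
    """Return (peak local storage in TB, hours until the buffer is empty)."""
    if link_rate <= 0:
        raise ValueError("link_rate must be positive, or the buffer never drains")
    buf = BurstBuffer(link_rate)
    hour = 0
    while hour < burst_hours or buf.stored > 0:
        incoming = burst_rate if hour < burst_hours else 0.0  # detector idle after the burst
        buf.step(incoming)
        hour += 1
    return buf.peak, hour


# Hypothetical run: 10 h at 100 TB/h, drained over a 20 TB/h link.
peak, hours = simulate(burst_rate=100.0, burst_hours=10, link_rate=20.0)
print(f"local storage needed: {peak:.0f} TB, link busy for {hours} h")
```

Because the burst volume is known in advance, local storage can be sized to the peak, while the link to the central data center only has to finish draining before the next run rather than keep up with the detector in real time.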

Life after the Higgs

In fact, this whole megalithic structure, the LHC, was created to confirm or refute the existence of the Higgs boson. In 2013, its existence was confirmed and the original problem was solved. The collider then stood idle for a year and a half while CERN staff upgraded the existing detectors so they could tackle new problems. However, no more than three years have passed since the relaunch, and the LHC is already awaiting its next upgrade: the shutdown is scheduled to last from 2019 to 2021.

As we can see, it makes no sense here to burden the project with heavy, stationary infrastructure: after the short stage of collecting statistics, there is a good chance that the need for it will simply disappear. As practice shows, new projects will still require new infrastructure and different equipment. The modules installed now can always be easily moved elsewhere, where they will be put to better use.

“Today the LHCb detector is served by servers located right next to it, underground. Two factors kept us from upgrading that site: the limited space of the underground cavern and the difficulty of cooling the server room effectively,” Niko said.

The existing server room Niko mentioned is located at a depth of 100 meters. At peak load, the heat given off by the servers has to be carried by the coolant up to the surface, where it is released into the atmosphere before the coolant returns underground.

Cooling the servers in the modules is not expected to be expensive. Given the cool climate of the Alpine foothills, the PUE (power usage effectiveness, the ratio of the total energy consumed by the facility to the energy consumed by its IT equipment) will be below 1.1, meaning that cooling and other overhead will add less than 10% on top of what the servers themselves consume.
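The PUE figure translates into overhead as follows: PUE = total facility energy / IT equipment energy, so the non-IT share relative to the IT load is PUE − 1. A minimal sketch; the function names and the 1,000 kWh / 100 kWh example are illustrative assumptions, not measurements from CERN.

```python
def pue(it_kwh: float, overhead_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return (it_kwh + overhead_kwh) / it_kwh


def overhead_share(pue_value: float) -> float:
    """Non-IT energy (mostly cooling) as a fraction of the IT energy."""
    return pue_value - 1.0


# Hypothetical example: 1,000 kWh drawn by servers, 100 kWh by cooling and power losses.
value = pue(1000.0, 100.0)
print(f"PUE = {value:.2f}, overhead = {overhead_share(value):.0%} of IT load")
```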

By March 2019, all ten modules are to be installed at their designated sites. Toward the end of the year, fiber-optic lines will be connected to the data centers, and only then will the infrastructure become operational. However, it will not be able to prove itself for another three years: the first launch of the Large Hadron Collider after the upgrade is planned for 2021.

“But the most unusual thing about our new data centers is that we will not provide them with a backup power source. Over six years of work at the research center, we have never had a power outage,” concluded Niko Neufeld.

Thank you for staying with us. Do you like our articles? Want to see more interesting content? Support us by placing an order or recommending us to your friends: a 30% discount for Habr users on a unique analogue of entry-level servers that we invented for you. The whole truth about VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps from $20, or how to share a server correctly? (Options are available with RAID1 and RAID10, up to 24 cores and up to 40GB DDR4.)

VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps free until January if you pay for a period of six months; you can order it here.

Dell R730xd 2 times cheaper? Only we have 2 x Intel Dodeca-Core Xeon E5-2650v4 128GB DDR4 6x480GB SSD 1Gbps 100 TB from $249 in the Netherlands and the USA! Read about How to build corporate-class infrastructure using Dell R730xd E5-2650 v4 servers worth 9,000 euros for pennies?
