Spotting fake reviews? Easy: a new algorithm does it with 95% efficiency

Researchers from the University of São Paulo have created an algorithm that, according to them, recognizes purchased reviews on various sites (Amazon, TripAdvisor, etc.) with 95% efficiency. The reviews can be either positive or negative; the algorithm recognizes both types.

The system, which carries the complex name Online-Recommendation Fraud ExcLuder (ORFEL), solves a problem that matters a great deal today: separating the wheat from the chaff. Millions of users leave hundreds of thousands of reviews, and recognizing a fake, purchased review is not an easy task. Nevertheless, the problem has to be solved, since many fake reviews are written to damage a company's reputation. The problem is not new: in 2011, authorities in some countries investigated the appearance of huge numbers of hired commentators leaving reviews of products and services on Amazon, TripAdvisor, and other sites.

The fake review identification system uses a special algorithm that tracks the coordinated actions of many users. Hired commentators usually start leaving reviews of a product or service (their own or another company's) at around the same time. In that case it is rather difficult, but possible, to single out a common pattern in the actions of the “mercenaries”. ORFEL uses a vertex-centric algorithm to highlight specific patterns of user behavior.
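To make the idea of tracking coordinated actions more concrete, here is a minimal Python sketch written in a vertex-centric style, where user and product vertices only exchange messages with their neighbors. It is not ORFEL itself: the article does not describe the researchers' actual method in detail, so the function name `find_coordinated_users`, the parameters `window`, `min_burst`, and `min_products`, and the burst heuristic are all assumptions made purely for illustration.

```python
from collections import defaultdict


def find_coordinated_users(reviews, window=3600, min_burst=3, min_products=2):
    """Flag users who repeatedly take part in review bursts.

    Hypothetical illustration, not the ORFEL algorithm.
    reviews: iterable of (user, product, timestamp_in_seconds).
    """
    # Superstep 1: every user vertex sends (timestamp, user)
    # to each product vertex it has reviewed.
    product_inbox = defaultdict(list)
    for user, product, ts in reviews:
        product_inbox[product].append((ts, user))

    # Superstep 2: every product vertex scans its own inbox with a
    # sliding time window and notifies the users found in a burst.
    user_inbox = defaultdict(set)
    for product, messages in product_inbox.items():
        messages.sort()
        start = 0
        for end in range(len(messages)):
            # Shrink the window until it spans at most `window` seconds.
            while messages[end][0] - messages[start][0] > window:
                start += 1
            users_in_window = {u for _, u in messages[start:end + 1]}
            if len(users_in_window) >= min_burst:
                for u in users_in_window:
                    user_inbox[u].add(product)

    # Superstep 3: every user vertex counts how many distinct products
    # reported it as part of a burst and flags itself if there are enough.
    return {u for u, products in user_inbox.items()
            if len(products) >= min_products}


reviews = [
    ("a", "p1", 0), ("b", "p1", 10), ("c", "p1", 20),
    ("a", "p2", 1000), ("b", "p2", 1010), ("c", "p2", 1020),
    ("d", "p1", 100000), ("e", "p3", 5),
]
suspects = find_coordinated_users(reviews)
```

In this toy data set, users `a`, `b`, and `c` review two products within seconds of each other, while `d` and `e` act alone, so only the first three are flagged. Each step touches only a vertex and its direct neighbors, which is what makes this style of computation straightforward to spread over large graphs.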

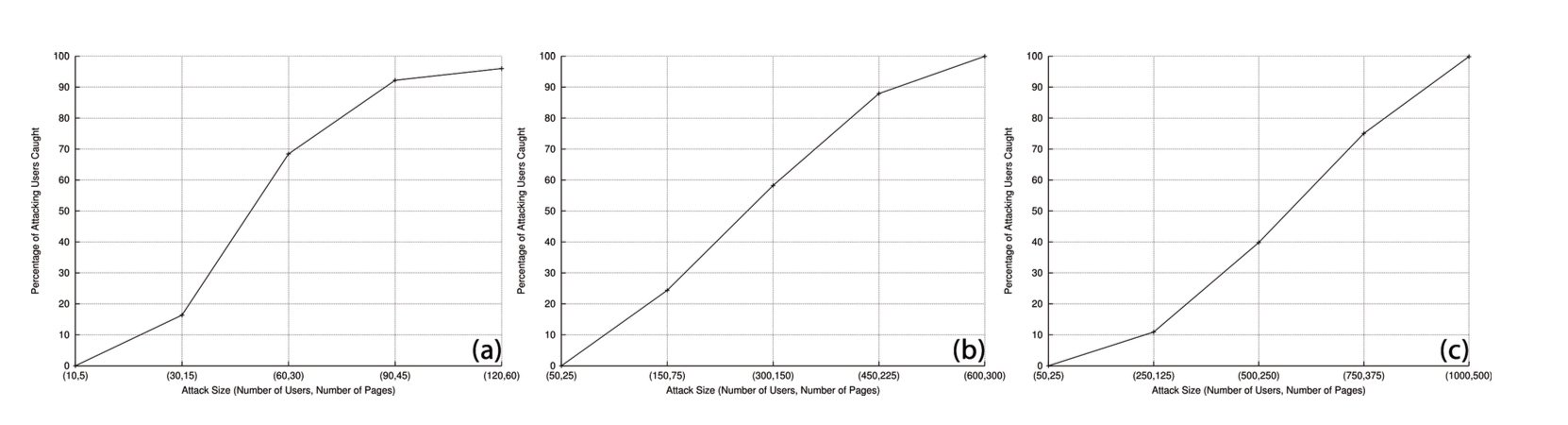

The more users are involved, the easier it is to detect a “feedback attack”

Moreover, the system can detect both reviews directed against a product or service and reviews used to promote one.

To test the algorithm, the researchers took two data sets; the results of processing them are shown in the graph above. The system works not only with Amazon and similar sites, but also detects “commentator attacks” on social networks such as Google+, Facebook, and others.

It is worth noting that many companies are now actively fighting “bought commentators”. For example, Amazon has uncovered more than 1,000 of these “reviewers”.

In June, the UK government also published a report on the work of hired commentators, promising to introduce a system of unlimited fines, or even imprisonment, for representatives of companies that compete dishonestly by using fake reviews of their own or other companies' products and services.