Pascal Guide: Understanding NVIDIA 2016 Graphics Cards

2016 is already drawing to a close, but its contribution to the gaming industry will stay with us for a long time. First, the red camp's video cards received an unexpectedly successful update in the mid-range price segment; second, NVIDIA proved once again that it holds 70% of the market for a reason. The Maxwells were good, and the GTX 970 was rightly considered one of the best cards for its money, but Pascal is a completely different matter.

The new generation of hardware, represented by the GTX 1080 and 1070, literally buried last year's systems and the market for used flagship cards, while the "younger" GTX 1060 and 1050 lines secured success in the more affordable segments. Owners of the GTX 980 Ti and the various Titans are shedding crocodile tears: their über-guns, bought for many thousands of rubles, lost 50% of their value and 100% of their bragging rights. NVIDIA itself claims that the 1080 is faster than last year's TITAN X, that the 1070 easily beats the 980 Ti, and that the relatively budget 1060 will hurt the owners of all other cards.

Whether this is true, where the high performance comes from, what to do with it all on the eve of the holidays and sudden financial windfalls, and how to treat yourself: you will find all of that in this long and slightly boring article.

You can love NVIDIA or... not love it, but only a time traveler from an alternate universe would deny that it is currently the leader in graphics. AMD has not yet announced Vega, we still haven't seen flagship Polaris-based RX cards, and the R9 Fury with its 4 GB of experimental HBM memory frankly cannot be considered a promising card (VR and 4K want a bit more than it has), so we have what we have. While the 1080 Ti and the hypothetical RX 490, RX Fury and RX 580 remain rumors and expectations, we have time to sort out NVIDIA's current lineup and see what the company has achieved in recent years.

NVIDIA regularly gives people reasons not to love it: the story of the GTX 970 and its "3.5 GB of memory", Linus Torvalds's "NVIDIA, Fuck you!", the complete mess in the desktop graphics lineup, the refusal to support the free and far more widespread FreeSync in favor of its own proprietary solution... In general, there are plenty of reasons. One of the most annoying to me personally is what happened to the last two generations of video cards. Roughly speaking, "modern" GPUs date back to DX10 support. And if you look for the "grandfather" of today's 10 series, the beginning of the modern architecture lies around the 400 series of video accelerators and the Fermi architecture. It was there that the "block" organization of what NVIDIA calls "CUDA cores" finally took shape.

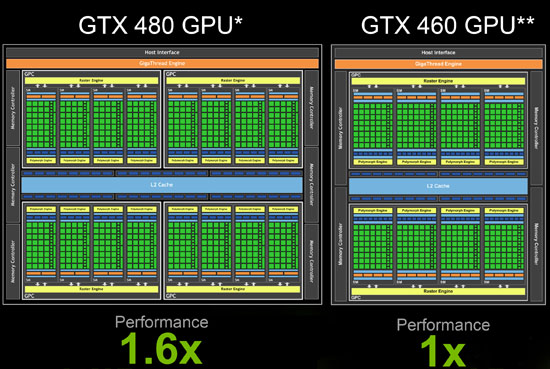

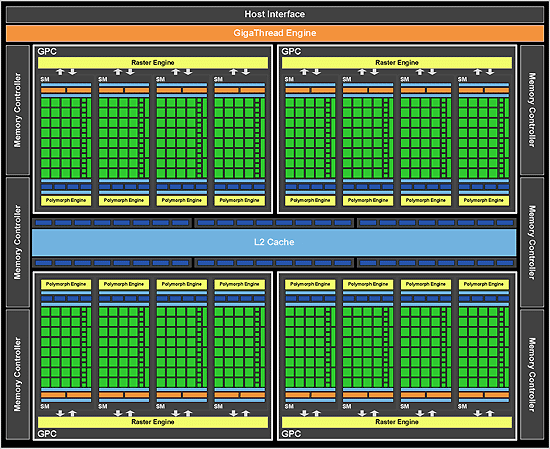

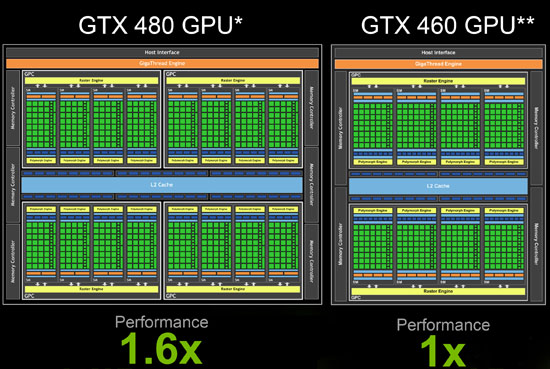

If the 8000, 9000 and 200 series were the first steps in mastering the very concept of a "modern architecture" with unified shader processors (just like AMD, yes), then the 400 series was already as close as it gets to what we see in, say, the 1070. Yes, Fermi still carried a small legacy crutch from previous generations: its shader units ran at twice the core clock. But the overall picture of the GTX 480 is not much different from, say, the GTX 780: SM multiprocessors are grouped into clusters, the clusters communicate with the memory controllers through a shared cache, and each cluster outputs its results to its own rasterization unit:

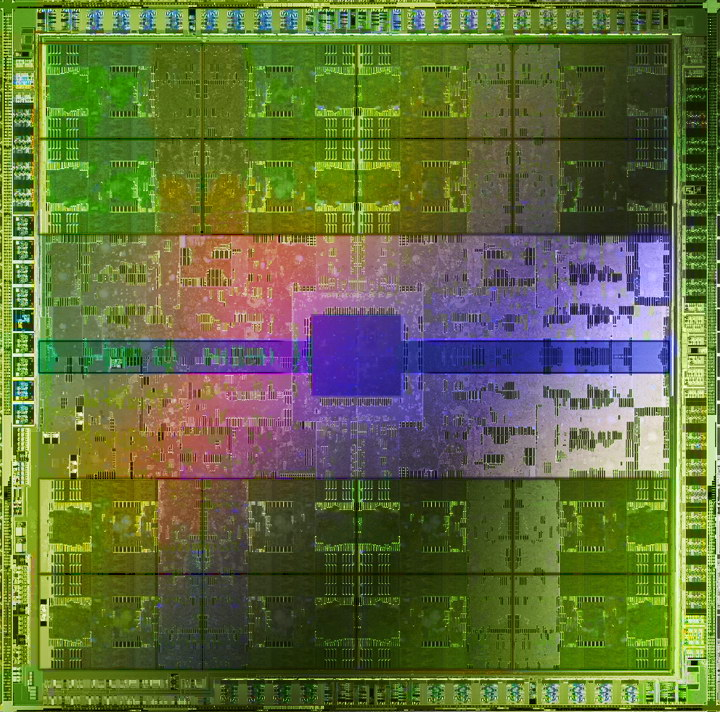

The block diagram of the GF100 processor used in the GTX 480.

The 500 series used the same Fermi, slightly improved inside and with fewer defects, so the top models received 512 CUDA cores instead of the previous generation's 480. Visually, the block diagrams look like twins:

GF110 is the heart of the GTX 580.

In places the clock speeds were raised and the chip design was slightly changed, but there was no revolution: the same 40 nm process and 1.5 GB of video memory on a 384-bit bus.

With the arrival of the Kepler architecture, a lot changed. It is fair to say that this generation set the development vector that led NVIDIA graphics cards to the current models. Not only did the GPU architecture change, but so did NVIDIA's internal process for developing new hardware. Where Fermi was aimed at finding a solution that delivered high performance, Kepler focused on energy efficiency, rational use of resources, high clock speeds and making it easy to optimize game engines for a high-performance architecture.

The GPU design changed seriously: it was based not on the "flagship" GF100 / GF110 but on the "budget" GF104 / GF114, used in one of the most popular cards of its time, the GTX 460.

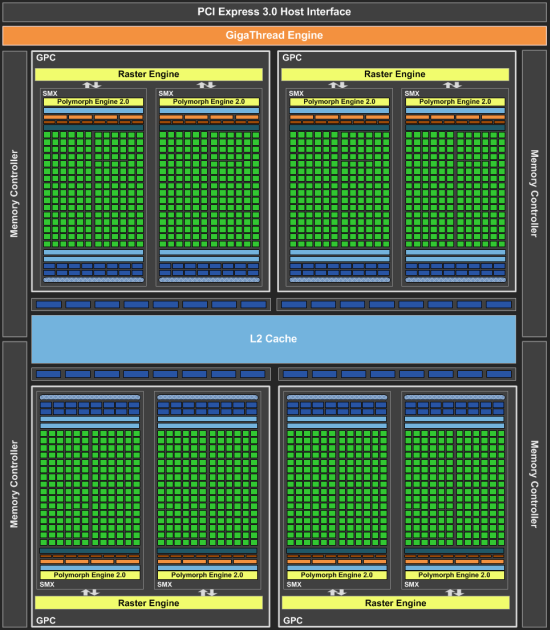

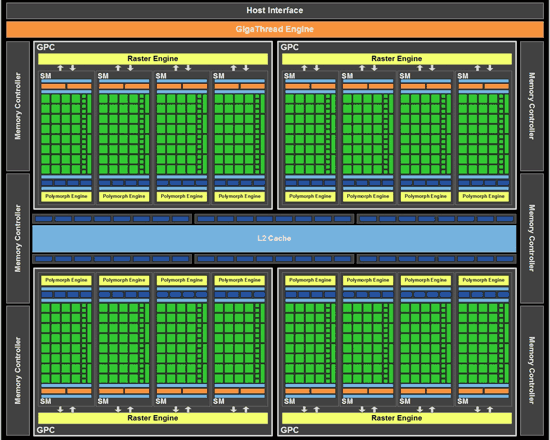

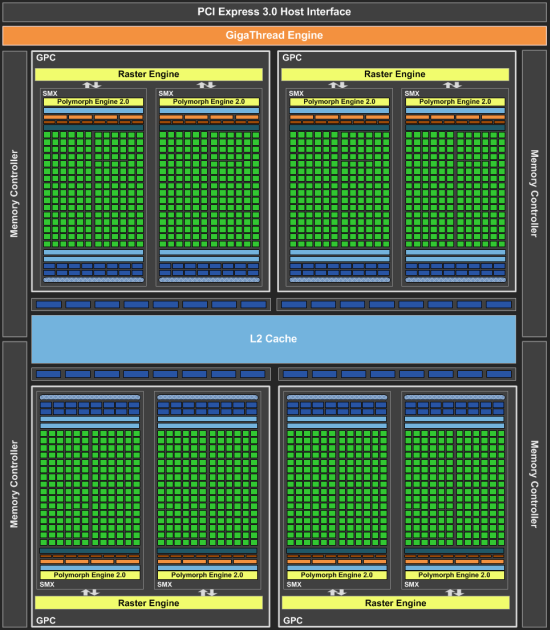

The overall processor layout became simpler: four clusters, each holding just two large unified shader multiprocessor blocks. The layout of the new flagships looked something like this:

The GK104 used in the GTX 680.

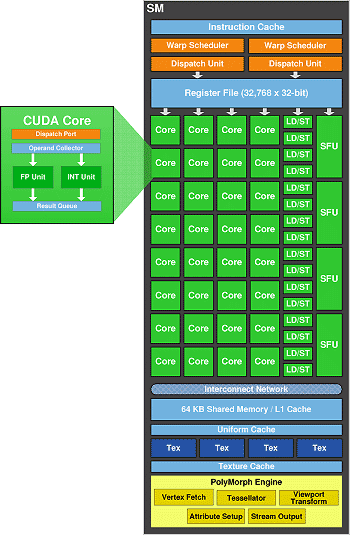

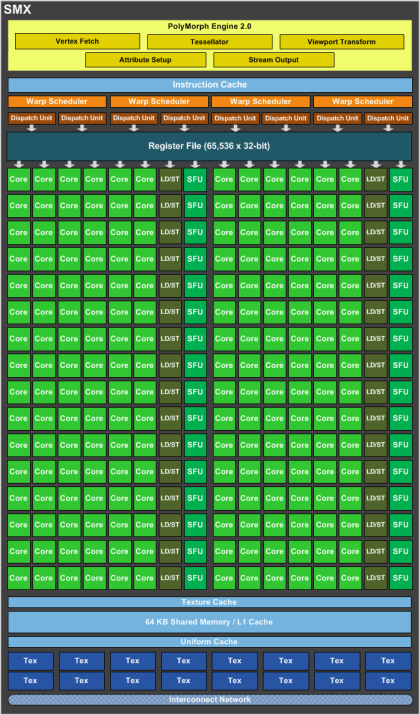

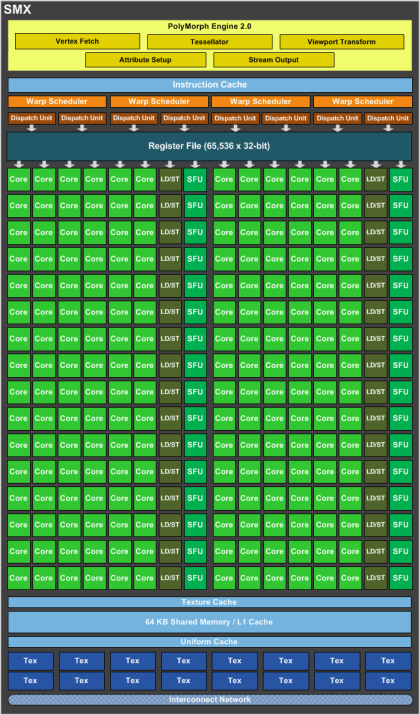

As you can see, each compute unit gained considerable weight compared with the previous architecture and was renamed SMX. Compare the structure of this block with the one shown above in the Fermi section.

GK104 SMX multiprocessor.

The 600 series had no cards based on the full-size Kepler processor: the flagship was the GTX 680 with the GK104, and only the "two-headed" GTX 690 was faster, carrying two such processors with all the necessary circuitry and memory. A year later, the GTX 680 with minor changes became the GTX 770, and the crown of the Kepler architecture's evolution was the cards built on the GK110 chip: the GTX Titan and Titan Z, the 780 Ti and the regular 780. Inside was the same 28 nm process; the only qualitative improvement (which did NOT make it into the consumer GK110 cards) was double-precision performance.

The first Maxwell-based graphics card was... the NVIDIA GTX 750 Ti. A little later came its cut-down versions, the GTX 750 and 745 (the latter shipped only in pre-built systems), and at launch these junior cards really shook up the market for inexpensive video accelerators. The new architecture was tested on the GM107 chip: a tiny slice of the future flagships with their huge heatsinks and frightening prices. It looked something like this:

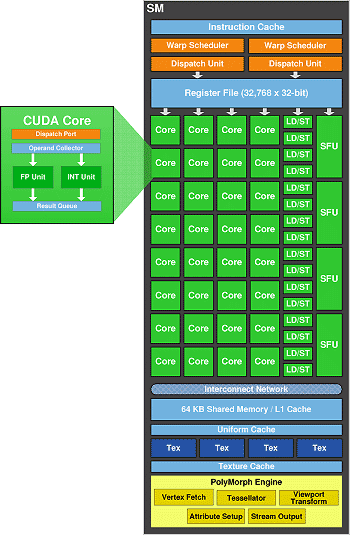

Yes, there is only one compute unit, but see for yourself how much more complex it is than its predecessor:

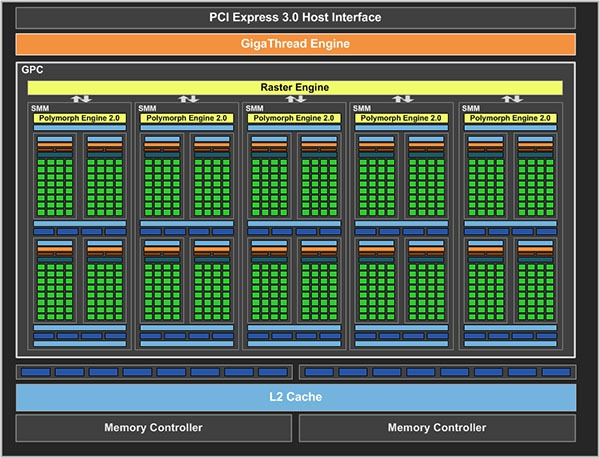

Instead of the large SMX block, the GPU is now built from new, more compact SMM blocks. Kepler's basic compute units were good, but they suffered from poor utilization, a banal starvation for instructions: the scheduling logic could not issue instructions fast enough to keep the large number of execution units busy. The Pentium 4 had roughly the same problems: its power sat idle, and a branch misprediction was very expensive. In Maxwell, each compute module was split into four parts, each with its own instruction buffer and warp scheduler (a warp is a group of 32 threads executing the same instruction). As a result, efficiency increased, the GPUs became more flexible than their predecessors, and, most importantly, NVIDIA proved out the new architecture with little bloodshed on a fairly simple die.

Mobile solutions benefited the most from these innovations: the die area grew by a quarter, while the number of multiprocessor execution units almost doubled. As luck would have it, it was the 700 and 800 series that created the biggest mess in the naming scheme. The 700 series alone contained cards based on Kepler, Maxwell and even Fermi! That is why the desktop Maxwells, to distance themselves from the mishmash of previous generations, received a common 900 series, from which the GTX 9xxM mobile cards later branched off.

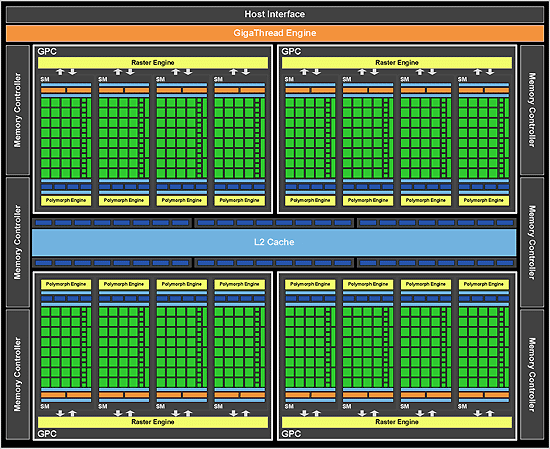

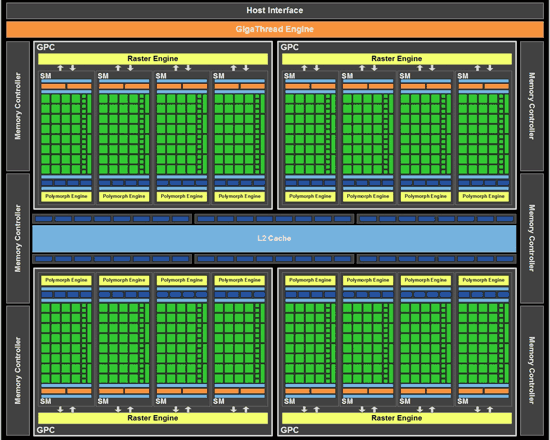

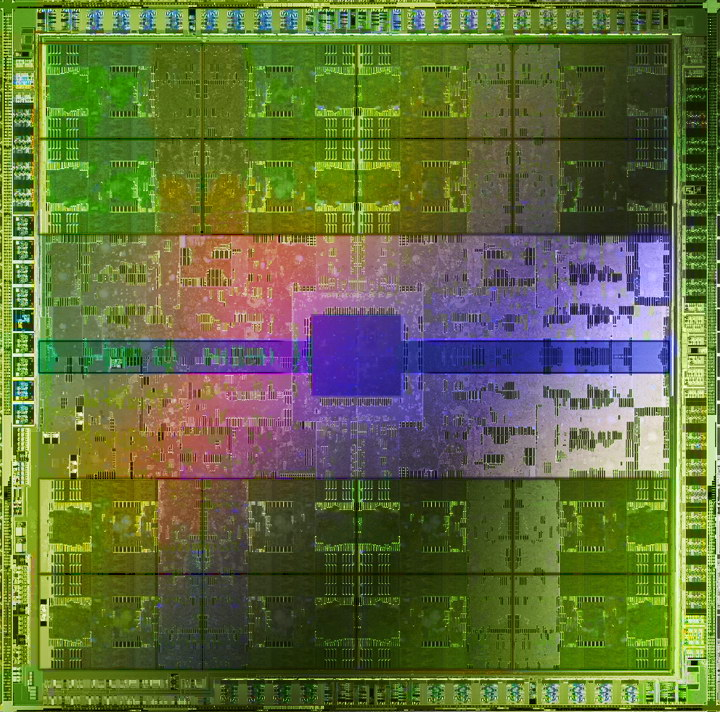

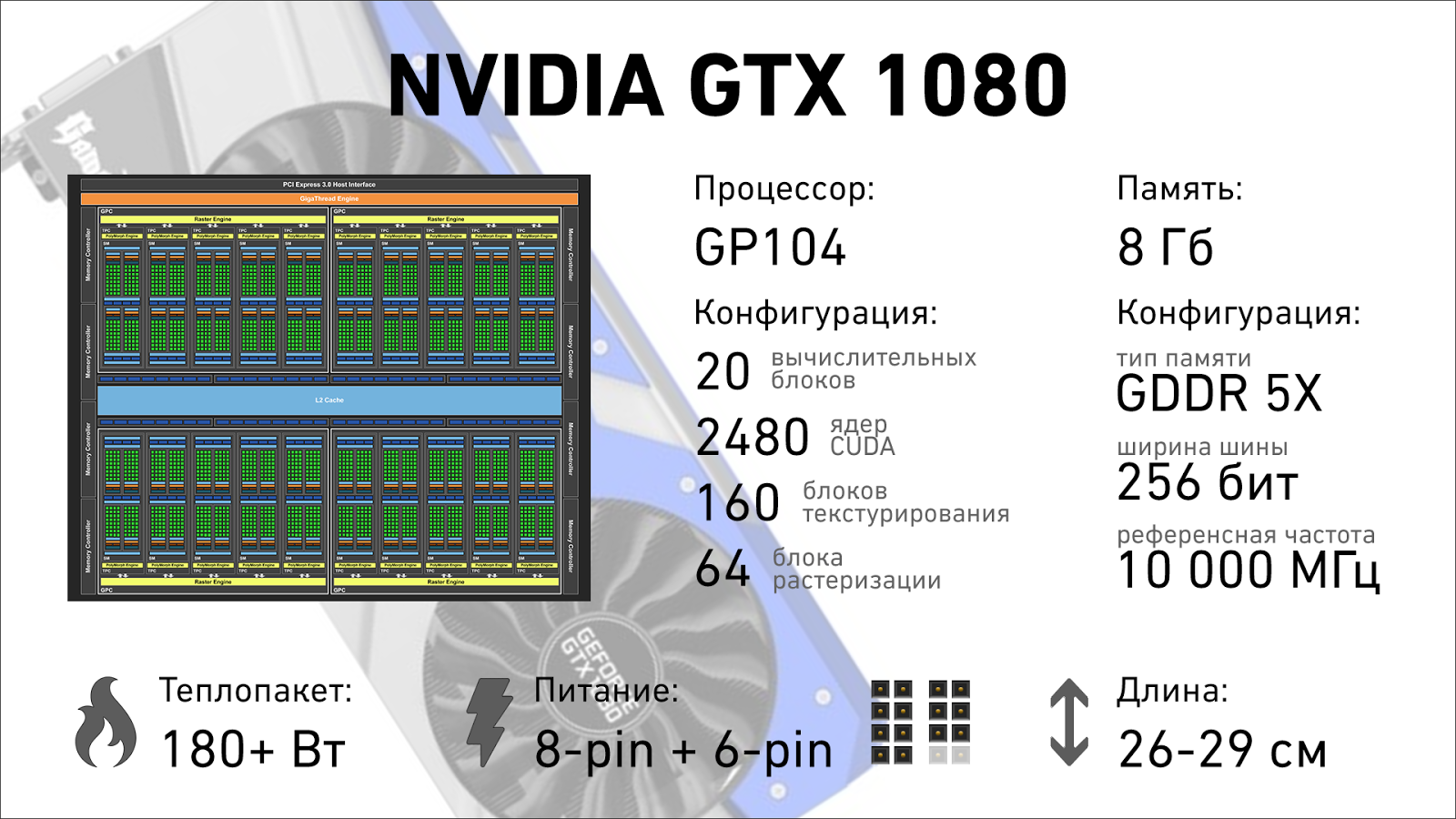

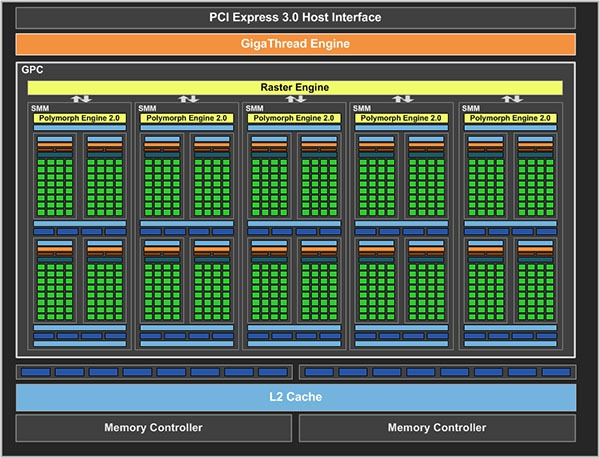

What was laid down in Kepler and continued in Maxwell also remained in Pascal: the first consumer cards were released not on the largest chip but on the GP104, which consists of four graphics processing clusters. The larger six-cluster GP102 went into the expensive semi-professional TITAN X. However, even the "cut-down" 1080 outshines previous generations so thoroughly that it hurts.

Maxwell became the foundation of the new architecture, and the diagrams of comparable processors (GM204 and GP104) look almost identical; the main difference is the number of multiprocessors packed into each cluster. In Kepler, a cluster held two large SMX multiprocessors; Maxwell cut the resources into smaller blocks with the necessary interconnect (renamed SMM), four per cluster in the GM204. Pascal's GP104 has five per cluster, and the abbreviation changed yet again: single multiprocessors are once more simply called SM.
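The cluster hierarchy boils down to simple multiplication: clusters times multiprocessors per cluster times CUDA cores per multiprocessor. Here is a small Python sketch that does this for the full GK104, GM204 and GP104 chips; the configuration numbers are the publicly documented ones rather than figures from this article, so treat them as inputs worth double-checking against NVIDIA's own materials.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuLayout:
    name: str
    clusters: int         # graphics processing clusters (GPCs)
    sms_per_cluster: int  # multiprocessors (SMX / SMM / SM) per cluster
    cores_per_sm: int     # CUDA cores per multiprocessor

    @property
    def sm_count(self) -> int:
        return self.clusters * self.sms_per_cluster

    @property
    def cuda_cores(self) -> int:
        return self.sm_count * self.cores_per_sm


# Publicly documented full-chip configurations (not taken from this article).
LAYOUTS = [
    GpuLayout("GK104 (Kepler, GTX 680)", clusters=4, sms_per_cluster=2, cores_per_sm=192),
    GpuLayout("GM204 (Maxwell, GTX 980)", clusters=4, sms_per_cluster=4, cores_per_sm=128),
    GpuLayout("GP104 (Pascal, GTX 1080)", clusters=4, sms_per_cluster=5, cores_per_sm=128),
]

if __name__ == "__main__":
    for layout in LAYOUTS:
        print(f"{layout.name}: {layout.sm_count} multiprocessors, {layout.cuda_cores} CUDA cores")
```

Running it gives 1536, 2048 and 2560 CUDA cores, which matches the GTX 680, GTX 980 and GTX 1080, all of which use the full chip.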

Otherwise, the visual resemblance is complete. Inside, however, the changes are even bigger.

The changes inside the multiprocessor are substantial. So as not to go into very boring details of what was reworked, how it was optimized and how it used to be, I will describe the changes very briefly; some readers are already yawning.

First, Pascal reworked the part responsible for the geometric component of the image. This matters for multi-monitor setups and VR headsets: with proper support from the game engine (and NVIDIA will make sure that support arrives quickly), the video card can process the geometry once and obtain several projections of it, one for each screen. This significantly reduces the load in VR, not only when working with triangles (here the gain is a straightforward twofold), but also in the pixel part of the work.
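To make the "compute geometry once, project it several times" idea concrete, here is a toy Python model. It is not how NVIDIA's Simultaneous Multi-Projection works in hardware: `transform_geometry` and `project` are hypothetical stand-ins for the expensive geometry stage and the cheap per-view projection, and the eye offsets are assumed values. The point is only the pass count.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def transform_geometry(vertices: List[Vec3], offset: Vec3) -> List[Vec3]:
    """Hypothetical stand-in for the expensive per-vertex geometry work (here just a translation)."""
    ox, oy, oz = offset
    return [(x + ox, y + oy, z + oz) for x, y, z in vertices]


def project(vertices: List[Vec3], eye_x: float, focal: float = 1.0) -> List[Vec2]:
    """Cheap per-view perspective projection for a camera shifted sideways by eye_x."""
    return [((x - eye_x) * focal / z, y * focal / z) for x, y, z in vertices]


def render_per_view(vertices, offset, eyes):
    """Classic approach: the whole geometry stage is repeated for every view."""
    views, geometry_passes = [], 0
    for eye_x in eyes:
        world = transform_geometry(vertices, offset)
        geometry_passes += 1
        views.append(project(world, eye_x))
    return views, geometry_passes


def render_multi_projection(vertices, offset, eyes):
    """Geometry is processed once; only the cheap projection runs per view."""
    world = transform_geometry(vertices, offset)
    return [project(world, eye_x) for eye_x in eyes], 1


if __name__ == "__main__":
    triangle = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    eyes = (-0.032, 0.032)  # assumed left/right eye offsets in meters
    views_a, passes_a = render_per_view(triangle, (0.0, 0.0, 5.0), eyes)
    views_b, passes_b = render_multi_projection(triangle, (0.0, 0.0, 5.0), eyes)
    print(views_a == views_b, passes_a, passes_b)
```

Both versions produce identical views, but for two eyes the per-view approach runs the geometry stage twice and the multi-projection approach once; with more screens the gap grows linearly.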

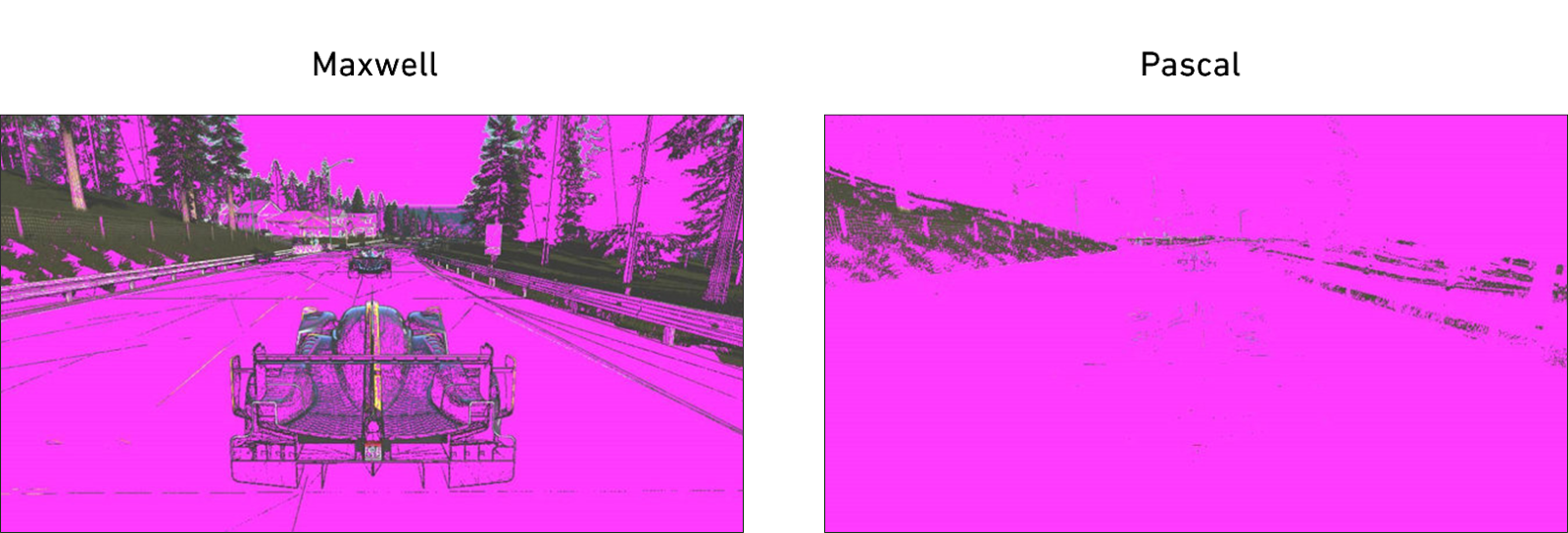

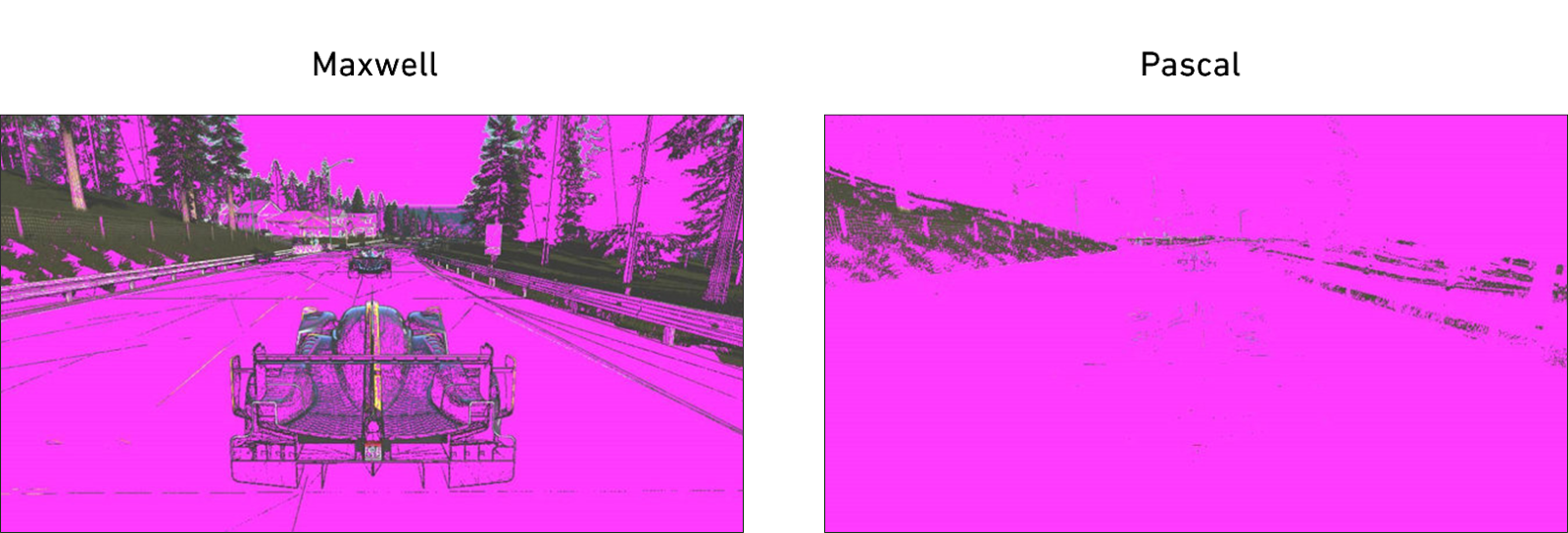

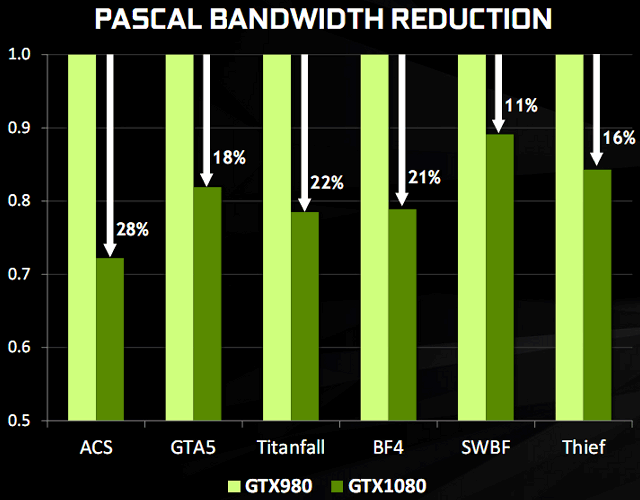

With the pixel component, things are actually even more interesting. Memory bandwidth can be increased in only two ways, raising the clock frequency or transferring more data per cycle, and both cost money. Meanwhile, the GPU's hunger for memory bandwidth grows every year as resolutions rise and VR develops, so the remaining option is to improve the "free" ways of increasing throughput. If you cannot widen the bus and raise the frequency, you have to compress the data. Earlier generations already had hardware compression, but Pascal took it to a new level. Again, let's skip the boring math and take a ready-made example from NVIDIA: on the left is Maxwell, on the right is Pascal; the pixels whose color was compressed without loss of quality are filled with pink.

Instead of transferring 8x8 pixel tiles as-is, the memory stores an "average" color plus a matrix of deviations from it; such data takes from ½ to ⅛ of the original volume. In real tasks, the load on the memory subsystem decreased by 10 to 30%, depending on the number of gradients and the uniformity of fills in complex scenes.
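A simplified Python illustration of the "average color plus deviations" idea for a single 8x8 tile. This is not NVIDIA's actual delta color compression format, which the article does not describe: the one-channel pixels, the bit layout and the set of supported ratios are all assumptions chosen to match the ½ to ⅛ range mentioned above.

```python
from typing import List

TILE_PIXELS = 8 * 8            # 8x8 tile, as described in the article
CHANNEL_BITS = 8               # assumption: one 8-bit color channel per pixel
SUPPORTED_RATIOS = (8, 4, 2)   # assumption: mirrors the 1/2 to 1/8 range in the text


def signed_bits_needed(lo: int, hi: int) -> int:
    """Smallest two's-complement width that can store every value in [lo, hi]."""
    bits = 1
    while not (-(1 << (bits - 1)) <= lo and hi <= (1 << (bits - 1)) - 1):
        bits += 1
    return bits


def packed_bits(tile: List[int]) -> int:
    """Size of the tile stored as one base color plus per-pixel deltas."""
    base = round(sum(tile) / len(tile))      # the "average" color, stored once
    deltas = [p - base for p in tile]        # deviations from the average
    if all(d == 0 for d in deltas):
        return CHANNEL_BITS                  # flat fill: the base color is enough
    return CHANNEL_BITS + signed_bits_needed(min(deltas), max(deltas)) * len(tile)


def compression_ratio(tile: List[int]) -> int:
    """Best supported ratio the tile fits into, or 1 if it must stay uncompressed."""
    if len(tile) != TILE_PIXELS:
        raise ValueError("expected an 8x8 tile")
    raw = CHANNEL_BITS * len(tile)
    size = packed_bits(tile)
    for ratio in SUPPORTED_RATIOS:
        if size <= raw // ratio:
            return ratio
    return 1


if __name__ == "__main__":
    print(compression_ratio([120] * 64))                          # flat fill: 8
    print(compression_ratio([100 + (i % 8) for i in range(64)]))  # gentle gradient: 2
    print(compression_ratio([(i * 97) % 256 for i in range(64)])) # noise: 1
```

A flat fill compresses 8:1, a gentle gradient 2:1, and noisy content stays uncompressed, which is why the real-world savings depend so strongly on gradients and fill uniformity.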

That was not enough for the engineers, so the flagship video card (GTX 1080) uses faster memory: GDDR5X transfers twice as many data bits (not instructions) per clock cycle and reaches 10 Gbit/s per pin. Transferring data at such an insane speed required a completely new memory layout topology on the board, and in total, memory efficiency increased by 60-70% compared with the previous generation's flagships.
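The "two fronts" of bandwidth are easy to put into numbers: peak bandwidth is the bus width in bytes times the per-pin data rate. A short Python sketch; the 256-bit bus and the 7 and 10 Gbit/s data rates for the GTX 980 and GTX 1080 are public specifications rather than figures from this article, and the 20% traffic saving is simply picked from the 10-30% range given above.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bytes per transfer across the bus x per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


def effective_bandwidth_gbs(raw_gbs: float, traffic_saved: float) -> float:
    """Bandwidth the GPU effectively 'sees' if compression removes a share of the traffic."""
    if not 0.0 <= traffic_saved < 1.0:
        raise ValueError("traffic_saved must be in [0, 1)")
    return raw_gbs / (1.0 - traffic_saved)


if __name__ == "__main__":
    gtx980 = peak_bandwidth_gbs(256, 7.0)    # GDDR5 at 7 Gbit/s per pin
    gtx1080 = peak_bandwidth_gbs(256, 10.0)  # GDDR5X at 10 Gbit/s per pin
    print(gtx980, gtx1080)                   # 224.0 320.0
    print(round(effective_bandwidth_gbs(gtx1080, 0.20), 1))  # 400.0
```

The bus width stayed the same between the two cards; the whole raw gain comes from the data rate, and compression stacks on top of it.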

Video cards have long handled not only graphics but related calculations as well. Physics is often tied to animation frames and is highly parallel, which means it is computed much more efficiently on the GPU. But the biggest source of problems in recent years has been the VR industry. Many game engines, development methodologies and other graphics technologies were simply not designed for VR: the case of the camera moving, or the user's head changing position, while a frame is being rendered was simply not handled. If everything is left as it is, the mismatch between the video stream and your movements will cause bouts of motion sickness and break immersion in the game world, which means the "wrong" frames have to be thrown away after rendering and the work started over. That adds new delays before the image is displayed, which does nothing good for performance.

Pascal took this problem into account and implemented dynamic load balancing and asynchronous interrupts (preemption): execution units can now either interrupt the current task (saving the results of their work in the cache) to handle more urgent tasks, or simply discard a half-finished frame and start a new one, significantly reducing delays in producing the image. The main beneficiaries here are, of course, VR and games, but this technology can help with general-purpose computing too: a particle collision simulation gained 10-20% in performance.
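Why dynamic load balancing helps can be shown with an idealized model: with a fixed split of execution units, the frame waits for the slower task while the other part of the chip sits idle; with dynamic balancing, units that finish early pick up the remaining work. The Python sketch below assumes perfectly divisible work and free task switching, which real hardware never gets, and the workload numbers are hypothetical.

```python
def static_split_time(graphics_work: float, compute_work: float,
                      graphics_units: int, compute_units: int) -> float:
    """Each task keeps a fixed share of units; the frame is done when both finish."""
    return max(graphics_work / graphics_units, compute_work / compute_units)


def dynamic_balance_time(graphics_work: float, compute_work: float, total_units: int) -> float:
    """Units that finish early pick up the remaining work, so none sit idle.
    Idealized: work is perfectly divisible and switching costs nothing."""
    return (graphics_work + compute_work) / total_units


if __name__ == "__main__":
    # Hypothetical frame: 80 units of graphics work, 20 of compute, 10 execution units split 5/5.
    print(static_split_time(80, 20, 5, 5))   # 16.0
    print(dynamic_balance_time(80, 20, 10))  # 10.0
```

With a lopsided workload, the static split leaves the compute half idle for most of the frame; balancing removes that idle time, which is where the gains come from.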

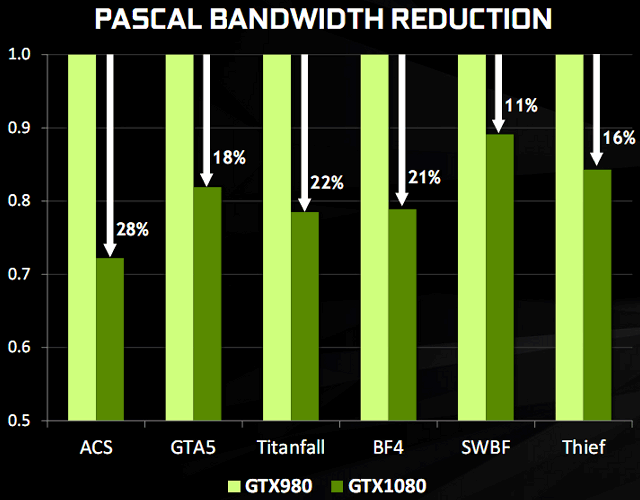

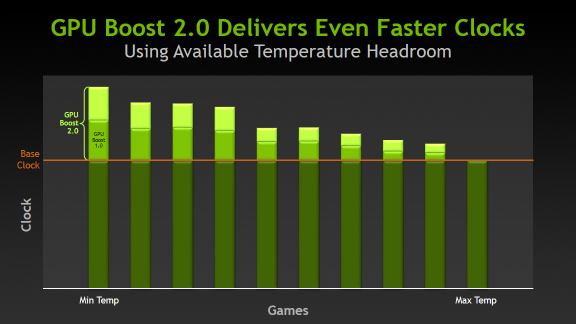

NVIDIA video cards got automatic overclocking long ago, back in the 600 series with the Kepler-based GTX 680. It was improved in Maxwell, but it was still, to put it mildly, so-so: yes, the card ran a little faster while its thermal budget allowed, and the extra 20-30 MHz out of the box plus the 50-100 MHz of vendor factory overclocking gave some gain, but not much. It worked something like this:

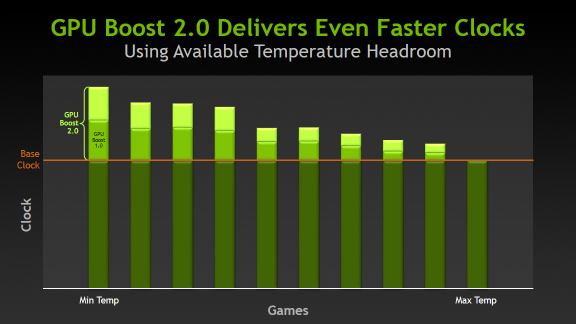

Even when the GPU had temperature headroom, performance did not increase. With Pascal, the engineers stirred up this dusty swamp as well. Boost 3.0 works on three fronts: temperature analysis, raising the clock frequency and raising the chip voltage. Now every last drop is squeezed out of the GPU: the standard NVIDIA drivers do not do this, but vendor software lets you build a profile curve in one click that takes into account the quality of your particular card.

EVGA was one of the first in this field: its Precision XOC utility has an NVIDIA-certified scanner that steps through the entire range of temperatures, frequencies and voltages, reaching maximum performance in every mode.
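Conceptually, such a scanner walks the voltage/frequency curve point by point and raises the clock until the chip stops being stable. The Python sketch below only illustrates that loop and is not EVGA's or NVIDIA's code: the stock curve, the 13 MHz step and `toy_stability_test` (a stand-in for a real stress test) are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stock voltage/frequency curve: (millivolts, MHz). Illustrative numbers only.
STOCK_CURVE: List[Tuple[int, int]] = [(800, 1600), (900, 1750), (1000, 1850), (1050, 1900)]


def scan_curve(curve: List[Tuple[int, int]],
               is_stable: Callable[[int, int], bool],
               step_mhz: int = 13,
               max_offset_mhz: int = 300) -> Dict[int, int]:
    """For each voltage point, raise the clock step by step until the test fails
    and keep the last offset that passed. Returns {millivolts: offset_mhz}."""
    offsets: Dict[int, int] = {}
    for millivolts, mhz in curve:
        best, offset = 0, step_mhz
        while offset <= max_offset_mhz and is_stable(millivolts, mhz + offset):
            best = offset
            offset += step_mhz
        offsets[millivolts] = best
    return offsets


def apply_offsets(curve: List[Tuple[int, int]], offsets: Dict[int, int]) -> List[Tuple[int, int]]:
    """Build the tuned curve from the stock curve and the per-point offsets."""
    return [(mv, mhz + offsets[mv]) for mv, mhz in curve]


def toy_stability_test(millivolts: int, mhz: int) -> bool:
    """Hypothetical stand-in for a real stress test: this imaginary chip is
    stable up to (1000 + millivolts) MHz."""
    return mhz <= 1000 + millivolts


if __name__ == "__main__":
    found = scan_curve(STOCK_CURVE, toy_stability_test)
    print(found)                              # {800: 195, 900: 143, 1000: 143, 1050: 143}
    print(apply_offsets(STOCK_CURVE, found))
```

With these made-up numbers, the scan finds a 195 MHz offset at 800 mV and 143 MHz at the higher points. A real scanner also has to account for temperature, which this sketch ignores.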

Add a new process technology, high-speed memory, all sorts of optimizations and a lower chip thermal envelope, and the result is simply outrageous. From a "base" 1500 MHz on the GTX 1060 you can squeeze out more than 2000 MHz if you get a good sample and the vendor did not skimp on cooling.

Performance went up on all fronts, but there are areas that have not seen qualitative changes for several years, such as image output. This is not about graphical effects, which are up to the game developers, but about what exactly we see on the monitor and how the game looks to the end user.

The most important feature of Pascal here is triple buffering for frame output, which provides both ultra-low rendering latency and vertical synchronization. The image being output is stored in one buffer, the last fully rendered frame in another, and the current frame is drawn in the third. Goodbye, horizontal stripes and tearing; hello, high performance. There are none of the delays that classic V-Sync introduces (since nothing holds the card back and it always renders at the highest possible frame rate), and only fully formed frames are sent to the monitor. I think after the New Year I'll write a separate big post about V-Sync, G-Sync, FreeSync and this new Fast Sync algorithm from NVIDIA; there are too many details.
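The buffer juggling can be modeled in a few lines of Python. This is a toy model of the behavior described above, not driver code: it only tracks which frame number each buffer role holds, and `simulate` assumes the GPU renders a fixed number of frames between two monitor refreshes.

```python
from typing import List, Optional, Tuple


class FastSyncModel:
    """Tracks the roles of the three buffers: front, last complete, and back."""

    def __init__(self) -> None:
        self.front: Optional[int] = None          # frame being scanned out to the monitor
        self.last_complete: Optional[int] = None  # newest fully rendered frame
        self.dropped = 0                          # complete frames that never reached the screen

    def frame_rendered(self, frame_id: int) -> None:
        """The GPU finished drawing a frame in the back buffer. It never waits for
        the monitor, so an older complete frame that was not shown is discarded."""
        if self.last_complete is not None and self.last_complete != self.front:
            self.dropped += 1
        self.last_complete = frame_id

    def vblank(self) -> Optional[int]:
        """Monitor refresh: show the newest complete frame, never a half-drawn one."""
        self.front = self.last_complete
        return self.front


def simulate(frames_per_refresh: int, refreshes: int) -> Tuple[List[Optional[int]], int]:
    model = FastSyncModel()
    shown: List[Optional[int]] = []
    frame_id = 0
    for _ in range(refreshes):
        for _ in range(frames_per_refresh):
            model.frame_rendered(frame_id)
            frame_id += 1
        shown.append(model.vblank())
    return shown, model.dropped


if __name__ == "__main__":
    print(simulate(3, 2))  # ([2, 5], 4)
```

With the GPU three times faster than the monitor, frames 2 and 5 reach the screen and four complete frames are dropped: the card never waits, and the screen only ever gets whole frames.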

Meanwhile, today's screenshots are simply a disgrace. Almost all games use a host of technologies to make the picture in motion breathtaking, and those same technologies turn screenshots into a real nightmare: instead of a stunningly realistic image built from animation and special effects that exploit the features of human vision, you see some angular low-poly mess with strange colors and an utterly lifeless picture.

The new NVIDIA Ansel technology solves the screenshot problem. Yes, it requires game developers to integrate special code, but the actual effort is minimal and the payoff is huge. Ansel can pause the game and hand camera control over to you, and then there is room for creativity. Or you can simply take a shot without the GUI, from your favorite angle.

You can render the current scene at ultra-high resolution, shoot 360-degree panoramas and either stitch them into a flat image or keep them in 3D form for viewing in a VR headset. You can take a shot with 16 bits per channel, save it in a kind of RAW file, and then play with exposure, white balance and other settings so that screenshots become attractive again. Expect tons of cool content from game fans in a year or two.
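A quick bit of arithmetic shows why ultra-high-resolution captures call for a special tool. The Python sketch assumes the capture is stitched from tiles rendered at the game's native resolution (the article does not say how Ansel does it) and uses the 16 bits per channel mentioned above; the 1080p native and 15360x8640 target resolutions are arbitrary examples.

```python
import math
from typing import Tuple


def tile_grid(native: Tuple[int, int], target: Tuple[int, int]) -> Tuple[int, int]:
    """Columns x rows of native-resolution tiles needed to cover the target image."""
    (nw, nh), (tw, th) = native, target
    return math.ceil(tw / nw), math.ceil(th / nh)


def raw_capture_bytes(width: int, height: int, channels: int = 3, bits_per_channel: int = 16) -> int:
    """Uncompressed size of a capture with the given color depth."""
    return width * height * channels * bits_per_channel // 8


if __name__ == "__main__":
    cols, rows = tile_grid((1920, 1080), (15360, 8640))
    print(cols, rows, cols * rows)                 # 8 8 64
    print(raw_capture_bytes(15360, 8640) / 2**20)  # 759.375 (MiB)
```

That example needs an 8x8 grid of 64 tiles and weighs about 759 MiB uncompressed, before any panorama stitching.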

The new NVIDIA GameWorks libraries add many features for developers. They are mainly aimed at VR, accelerating various calculations and improving image quality, but one feature is the most interesting and worth mentioning. VRWorks Audio takes sound to a whole new level: instead of computing sound with commonplace averaged formulas based on distance and obstacle thickness, it performs a full trace of the sound signal, with all the reflections from the surroundings, reverberation and sound absorption by various materials. NVIDIA has a good video showing how this technology works:

It is best watched with headphones.

In theory, nothing prevents you from running such a simulation on Maxwell, but Pascal's optimizations for asynchronous instruction execution and its new interrupt system allow these calculations to run without affecting the frame rate.
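The simplest form of "tracing" sound through a room is the classic image-source method: every wall reflection is treated as sound coming from a mirrored copy of the source. The Python sketch below uses that much simpler technique, not the VRWorks Audio implementation, and assumes a 2-D rectangular room, first-order reflections only and a single hypothetical reflection coefficient.

```python
import math
from typing import List, Tuple

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C

Point = Tuple[float, float]


def first_order_images(src: Point, width: float, depth: float) -> List[Point]:
    """Mirror the source across each wall of a 2-D rectangular room
    with walls at x = 0, x = width, y = 0 and y = depth."""
    x, y = src
    return [(-x, y), (2 * width - x, y), (x, -y), (x, 2 * depth - y)]


def arrivals(src: Point, listener: Point, width: float, depth: float,
             reflection: float = 0.7) -> List[Tuple[float, float]]:
    """(delay in seconds, relative amplitude) for the direct sound and the four
    first-order reflections. Amplitude falls off as 1/distance, and each wall
    bounce multiplies it by a single hypothetical reflection coefficient."""
    paths = [(src, 1.0)] + [(img, reflection) for img in first_order_images(src, width, depth)]
    result = []
    for (px, py), wall_factor in paths:
        distance = math.hypot(px - listener[0], py - listener[1])
        if distance == 0:
            raise ValueError("listener coincides with a sound source")
        result.append((distance / SPEED_OF_SOUND, wall_factor / distance))
    return sorted(result)


if __name__ == "__main__":
    for delay, amplitude in arrivals((2.0, 4.0), (8.0, 4.0), width=10.0, depth=8.0):
        print(f"{delay * 1000:.1f} ms, amplitude {amplitude:.3f}")
```

In a 10x8 m room with the source at (2, 4) and the listener at (8, 4), the direct sound arrives after about 17.5 ms and all four wall reflections after about 29.2 ms; a real engine adds higher-order reflections, per-material absorption and frequency dependence on top.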

There are actually even more changes, and many of them go so deep into the architecture that each deserves a huge article of its own. The key innovations are the improved design of the chips themselves, low-level optimization of geometry processing, asynchronous operation with full interrupt handling, a host of features aimed at high resolutions and VR, and, of course, crazy clock speeds that previous generations of video cards could not have dreamed of. Two years ago the 780 Ti barely crossed the 1 GHz line; today the 1080 runs at two in some cases, and the credit goes not only to the process shrink from 28 nm to 16 or 14 nm: many things have been optimized at the lowest level, from transistor design to their layout and interconnect inside the chip itself.

The NVIDIA 10 series lineup turned out to be truly balanced, and it covers all gaming use cases quite thoroughly, from "a bit of strategy and Diablo" to "I want top games in 4K". The game tests were chosen by one simple principle: cover the widest possible range of scenarios with the smallest possible set of games. BF1 is a great example of good optimization and lets you compare DX11 and DX12 performance under the same conditions. DOOM was chosen for the same reason, except that it lets you compare OpenGL and Vulkan. The third "Witcher" plays the role of a so-so-optimized game whose maximum graphics settings can bring any flagship to its knees simply because of sloppy code. It uses classic DX11, which is time-tested, well worked out in drivers and familiar to game developers. Overwatch stands in for all the tournament games.

A couple of general comments up front: Vulkan is very hungry for video memory; for it, this is one of the key characteristics, and you will see this reflected in the benchmarks. DX12 behaves much better on AMD cards than on NVIDIA: where the "greens" on average show an FPS drop on the new APIs, the "reds", on the contrary, gain.

The junior GTX 1050 (without the Ti) is not as interesting as its beefed-up sister with the Ti suffix. Its calling is MOBA games, strategies, tournament shooters and other games where few people care about detail and image quality, and a stable frame rate for minimal money is just what the doctor ordered.

DOOM 2016 (1080p, ULTRA): OpenGL - 68 FPS, Vulkan - 55 FPS;

The Witcher 3: Wild Hunt (1080p, MAX, HairWorks Off): DX11 - 38 FPS;

Battlefield 1 (1080p, ULTRA): DX11 - 49 FPS, DX12 - 40 FPS;

Overwatch (1080p, ULTRA): DX11 - 93 FPS.

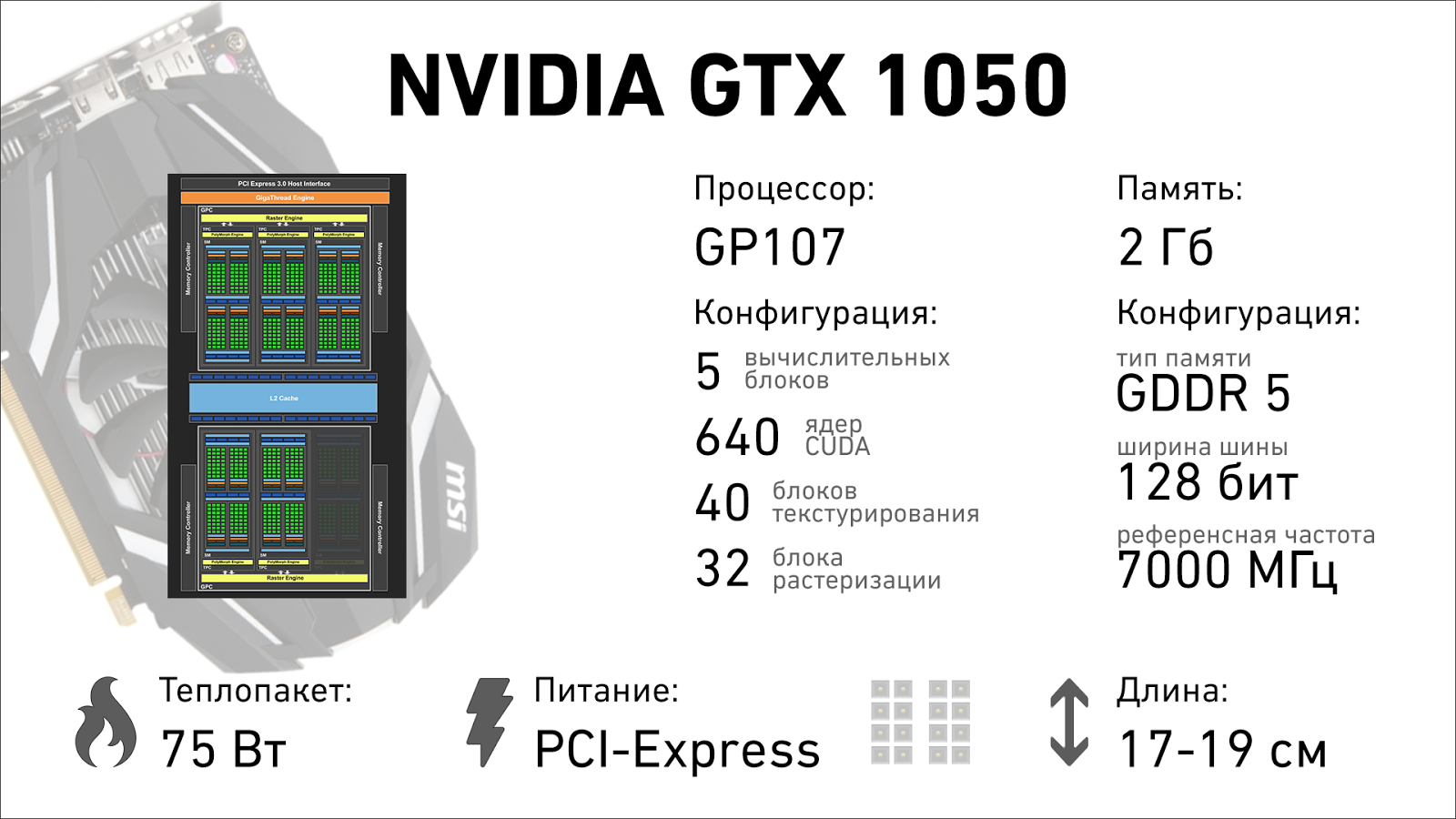

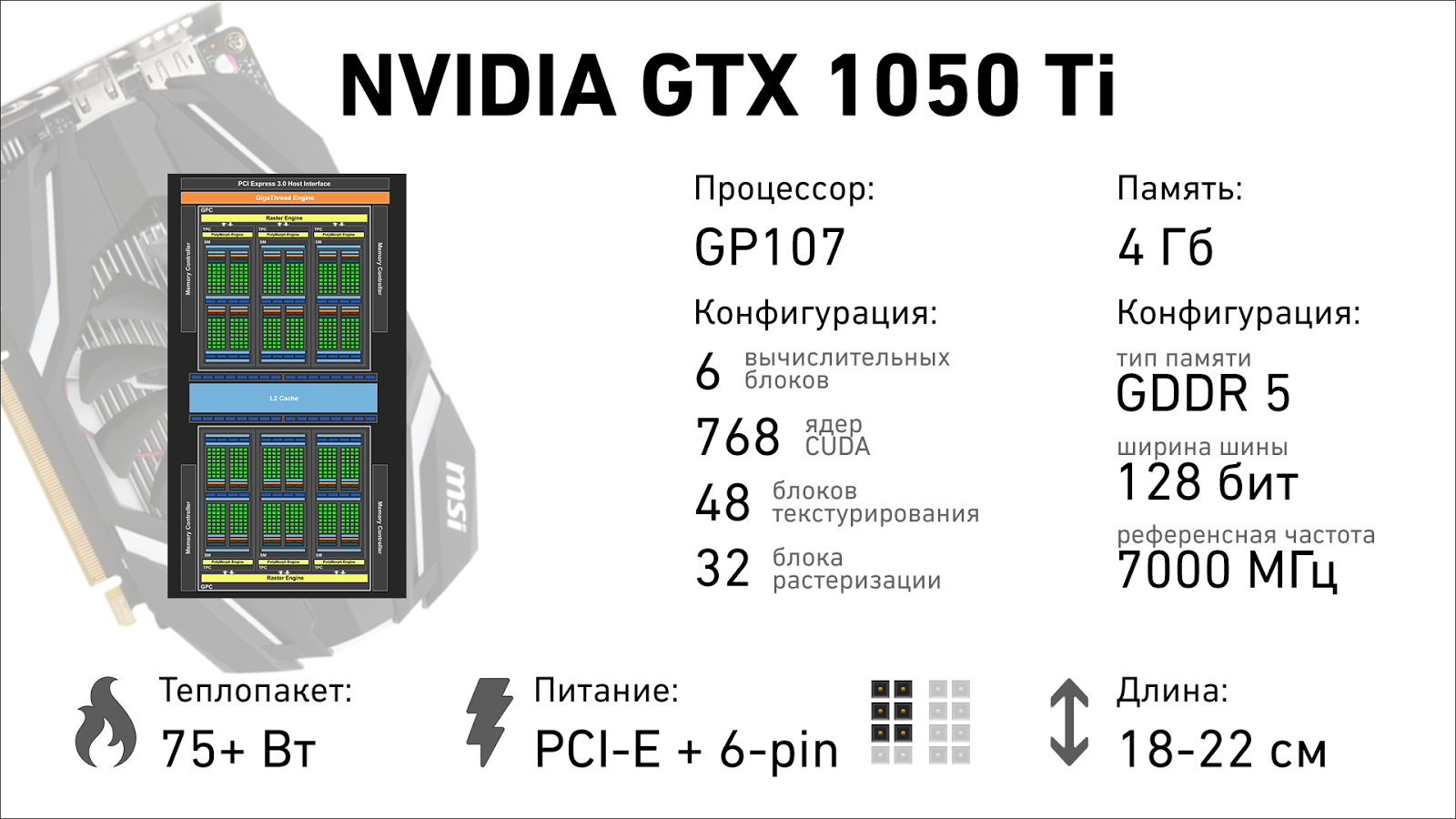

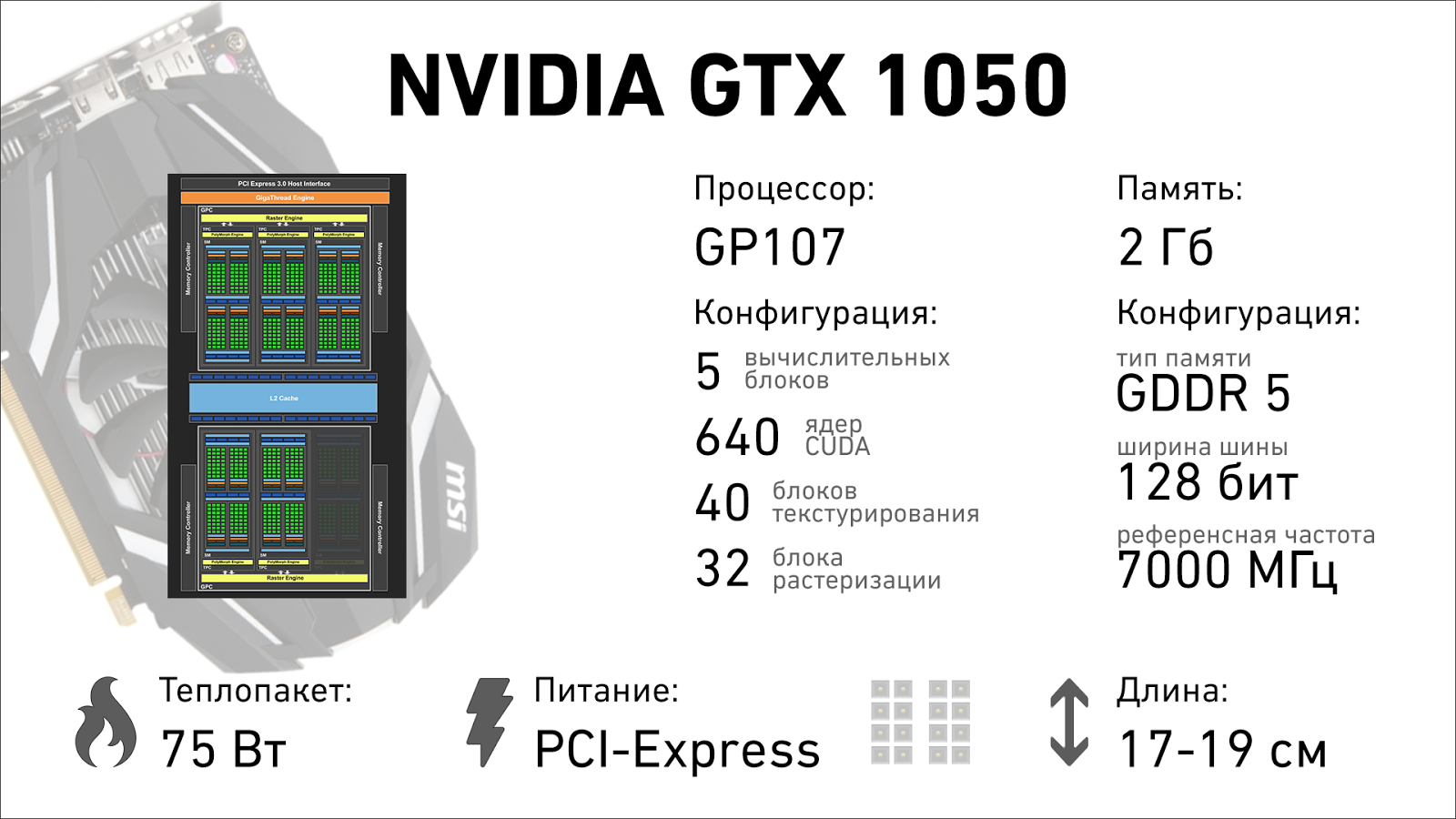

The GTX 1050 uses the GP107 graphics processor, inherited from its older sibling with a slight trimming of functional blocks. 2 GB of video memory won't let you go wild, but for esports titles and a round of some tank game it is perfect, especially since prices for the cheapest cards start at 9.5 thousand rubles. No additional power is required: the 75 watts supplied by the motherboard through the PCI-Express slot are enough. However, this price segment also has the AMD Radeon RX 460, which costs less with the same 2 GB of memory and is almost as good, and for about the same money you can get the 4 GB version of the RX 460. Not that the extra memory helps it much, but a reserve for the future doesn't hurt. The choice of vendor is not that important: take whatever is available and doesn't empty your pocket by an extra thousand rubles.

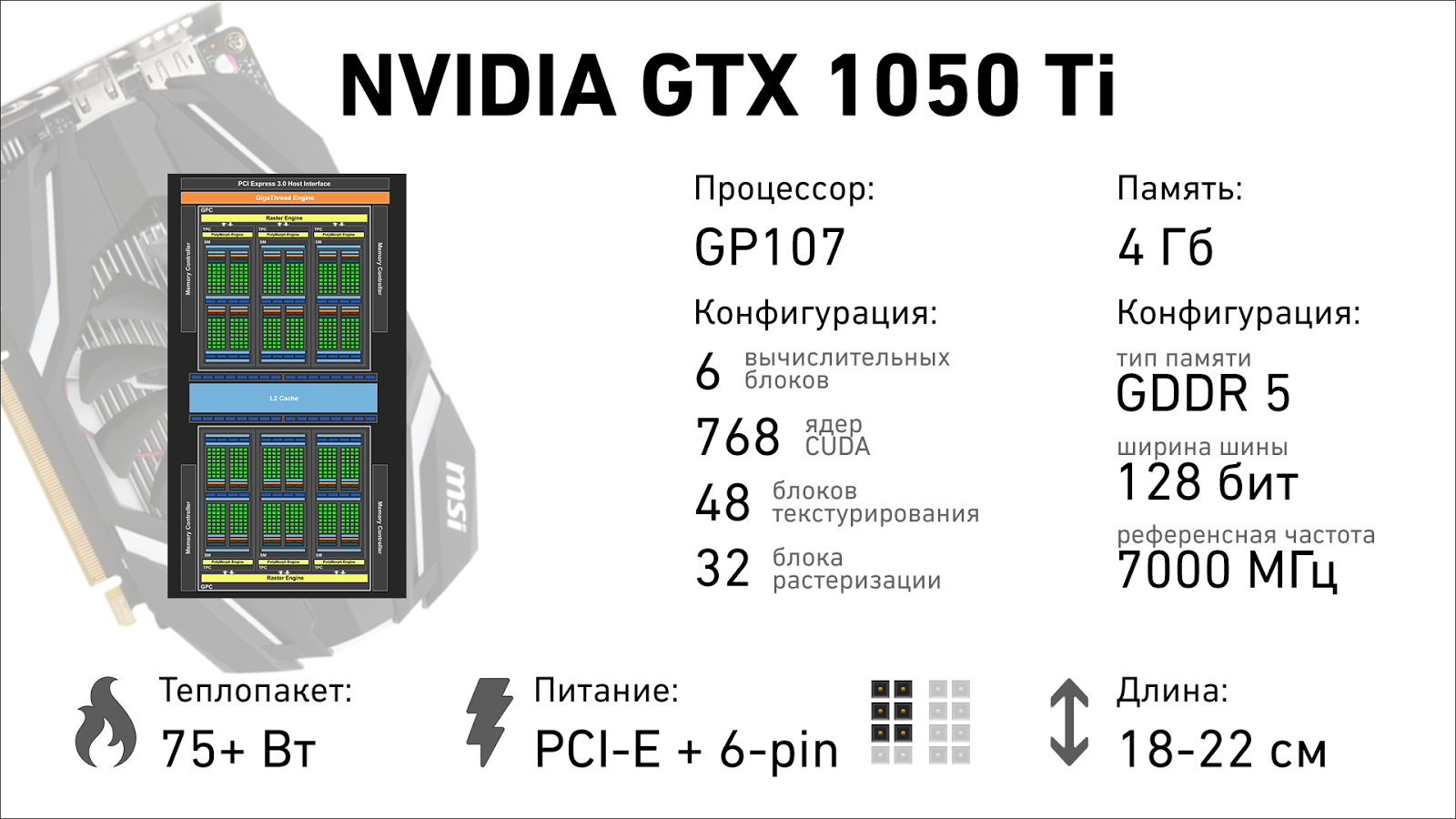

About 10 thousand for the regular 1050 is not bad, but the beefed-up (or full, call it what you like) version costs only a little more (on average 1-1.5 thousand more), and what's inside is much more interesting. By the way, the entire 1050 series is not made from trimmed or rejected "large" chips unfit for the 1060; it is a completely independent product. It uses a finer process (14 nm), a different fab (the dies are made at a Samsung plant), and there are some extremely interesting samples with an external power connector: the thermal envelope and base consumption are the same 75 watts, but the overclocking potential and the ability to go beyond the limits are completely different.

If you plan to keep playing at Full HD (1920x1080), have no plans to upgrade, and the rest of your hardware is 3-5 years old, this is a great way to boost game performance with little bloodshed. It's worth focusing on ASUS and MSI models with an additional 6-pin power connector; Gigabyte's versions are not bad, but their price is less attractive.

DOOM 2016 (1080p, ULTRA): OpenGL - 83 FPS, Vulkan - 78 FPS;

The Witcher 3: Wild Hunt (1080p, MAX, HairWorks Off): DX11 - 44 FPS;

Battlefield 1 (1080p, ULTRA): DX11 - 58 FPS, DX12 - 50 FPS;

Overwatch (1080p, ULTRA): DX11 - 104 FPS.

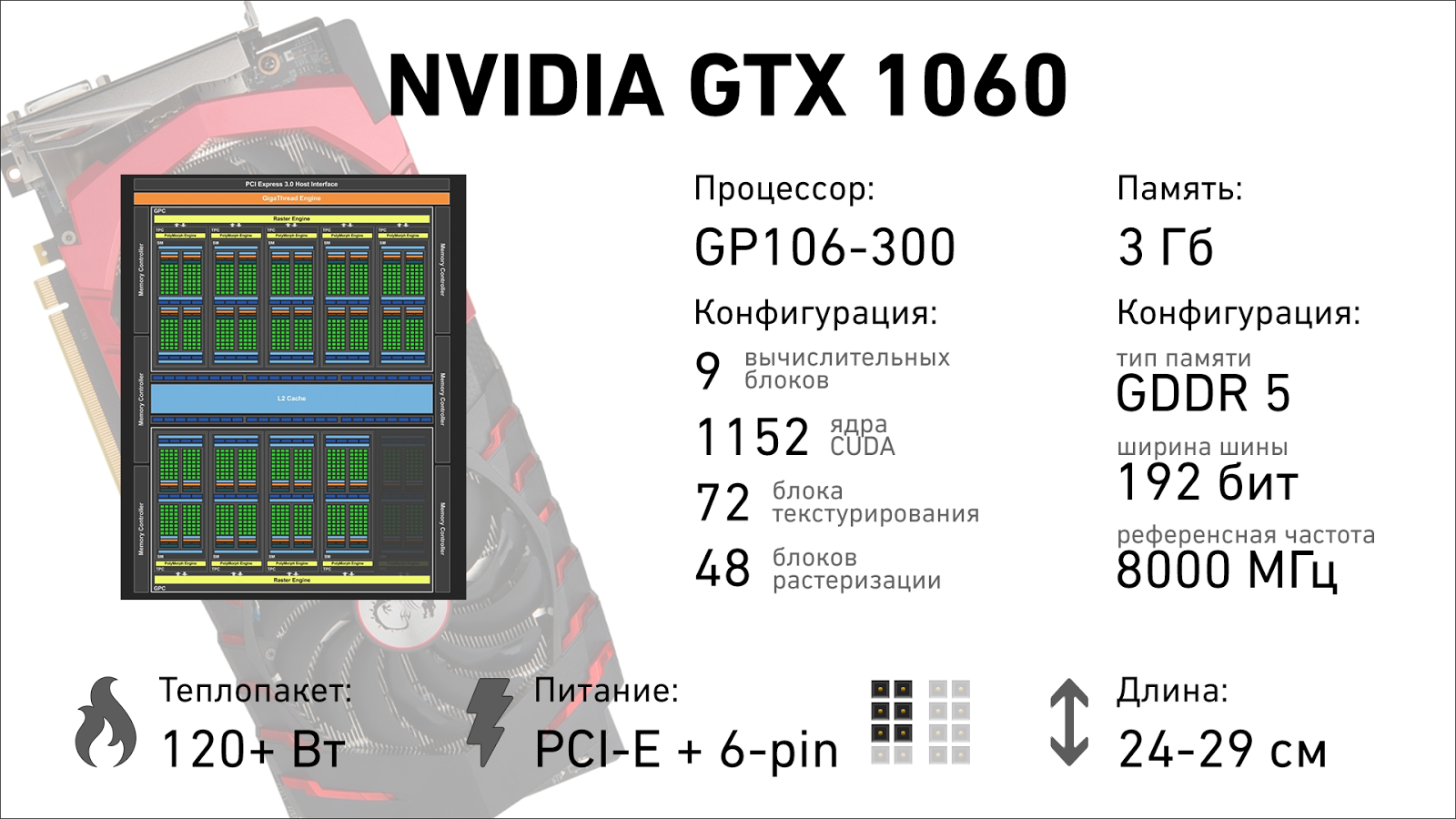

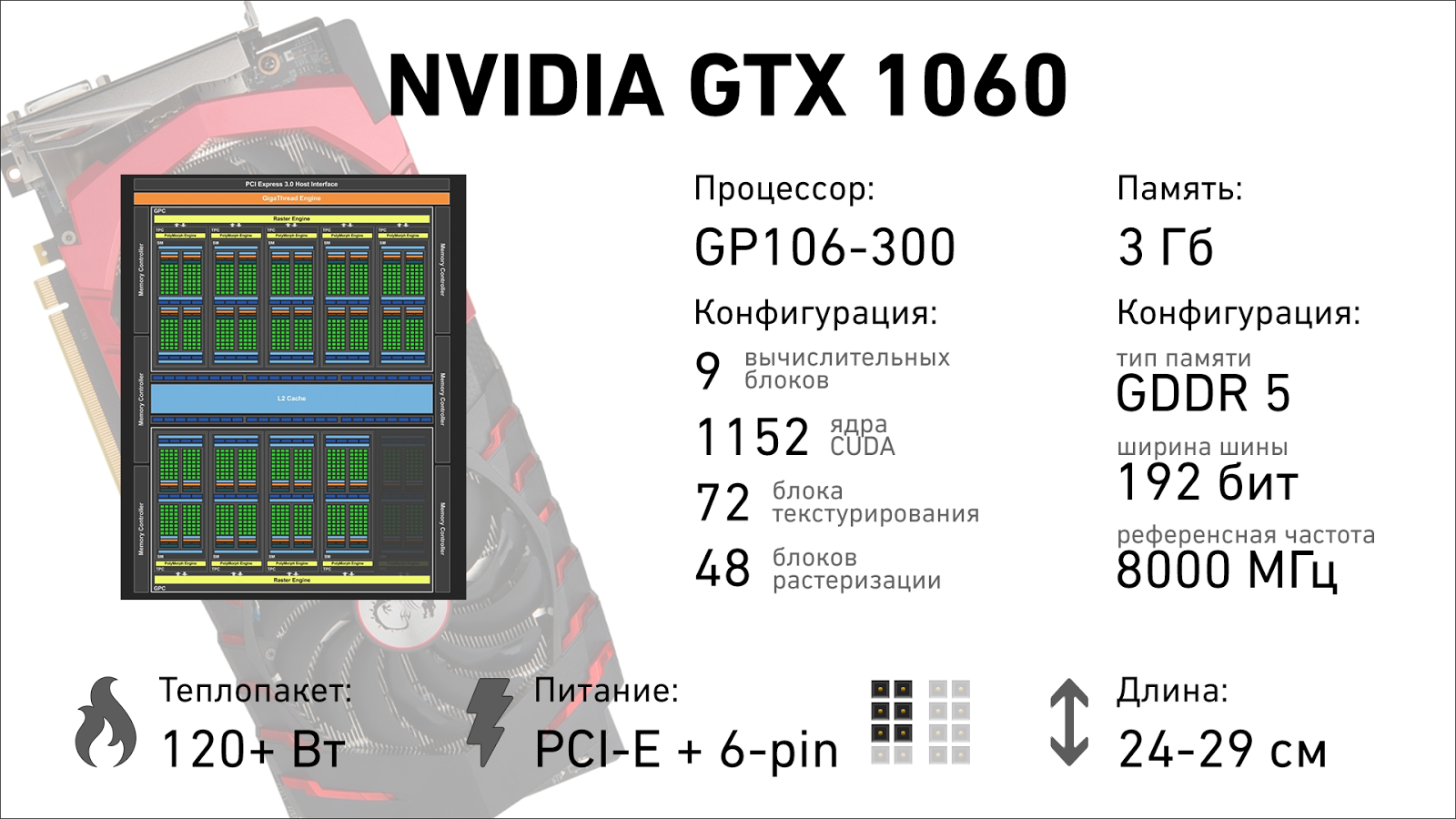

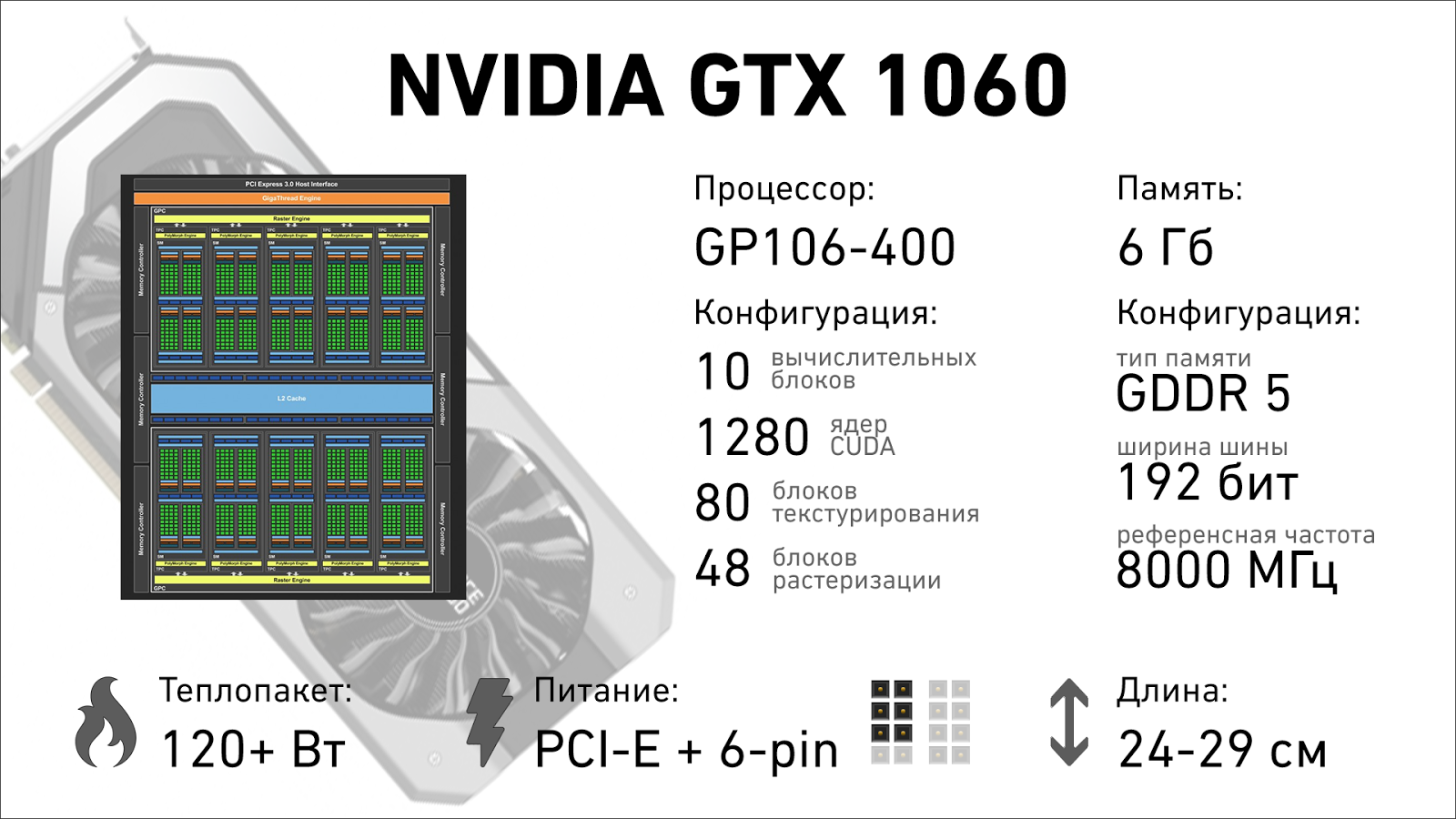

Cards of the x60 line have long been considered the best choice for those who don't want to spend a lot of money but still want to play everything released over the next couple of years at high graphics settings. It started back in the days of the GTX 260, which came in two versions (a simpler one with 192 stream processors and a fatter one with 216), continued through the 400, 500 and 700 generations, and now NVIDIA has once again hit an almost perfect combination of price and quality. Two versions of the "middle" card are again available: the GTX 1060 with 3 and 6 GB of video memory, which differ not only in the amount of memory but also in performance.

The queen of esports. A reasonable price, amazing performance at Full HD (and esports rarely uses a higher resolution: results matter more there than looks), and a reasonable amount of memory (3 GB, mind you, is what the flagship GTX 780 Ti had two years ago, and it cost outrageous money). In terms of performance, the junior 1060 easily beats last year's GTX 970 with its memorable 3.5 GB of memory, and easily outruns the 780 Ti, the super-flagship from the year before last.

DOOM 2016 (1080p, ULTRA): OpenGL - 117 FPS, Vulkan - 87 FPS;

The Witcher 3: Wild Hunt (1080p, MAX, HairWorks Off): DX11 - 70 FPS;

Battlefield 1 (1080p, ULTRA): DX11 - 92 FPS, DX12 - 85 FPS;

Overwatch (1080p, ULTRA): DX11 - 93 FPS.

The unconditional favorite in terms of price/performance is the MSI version: good clocks, a quiet cooler and sane dimensions. And they ask next to nothing for it, around 15 thousand rubles.

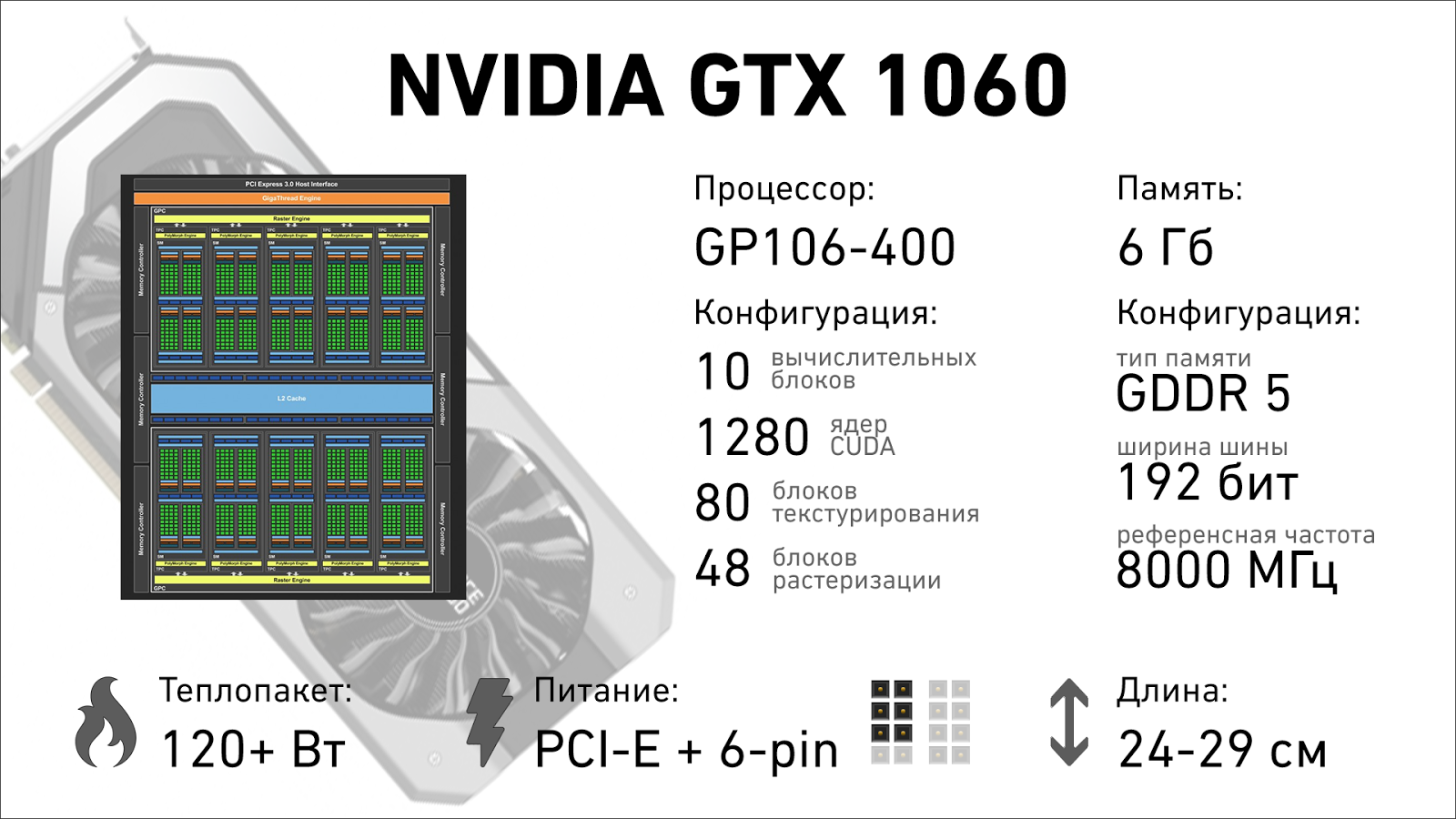

The 6 GB version is a budget ticket to VR and high resolutions. It won't starve for memory, it is a little faster in every test, and it will confidently beat the GTX 980 wherever last year's card runs short of its 4 GB of video memory.

DOOM 2016 (1080p, ULTRA): OpenGL - 117 FPS, Vulkan - 121 FPS;

The Witcher 3: Wild Hunt (1080p, MAX, HairWorks Off): DX11 - 73 FPS;

Battlefield 1 (1080p, ULTRA): DX11 - 94 FPS, DX12 - 90 FPS;

Overwatch (1080p, ULTRA): DX11 - 166 FPS.

I'd like to point out once more how the cards behave with the Vulkan API. The 1050 with 2 GB of memory: an FPS drop. The 1050 Ti with 4 GB: almost on par. The 1060 3 GB: a drop. The 1060 6 GB: higher results. The trend, I think, is clear: Vulkan needs 4+ GB of video memory.
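The same trend expressed as percentages, computed in Python from the DOOM 1080p results listed above. A note of caution: the two GTX 1060 versions differ not only in memory but also in the number of compute units, so these four data points are consistent with the memory explanation rather than proof of it.

```python
# DOOM 2016 at 1080p/Ultra, taken from the results above: (OpenGL FPS, Vulkan FPS).
DOOM_1080P = {
    "GTX 1050 (2 GB)": (68, 55),
    "GTX 1050 Ti (4 GB)": (83, 78),
    "GTX 1060 (3 GB)": (117, 87),
    "GTX 1060 (6 GB)": (117, 121),
}


def vulkan_change_pct(opengl_fps: float, vulkan_fps: float) -> float:
    """Relative change when switching from OpenGL to Vulkan, in percent."""
    return (vulkan_fps - opengl_fps) / opengl_fps * 100


if __name__ == "__main__":
    for card, (gl, vk) in DOOM_1080P.items():
        print(f"{card}: {vulkan_change_pct(gl, vk):+.1f}%")
```

This prints -19.1%, -6.0%, -25.6% and +3.4%: the two cards with less than 4 GB lose the most.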

The trouble is that neither 1060 is a small card. The thermal envelope seems reasonable and the board itself is genuinely small, but many vendors decided to simply share one cooling system across the 1080, 1070 and 1060. Some cards are 2 slots thick but 28+ cm long; others were made shorter but thicker (2.5 slots). Choose carefully.

Unfortunately, the extra 3 GB of video memory and the unlocked compute unit will cost you ~5-6 thousand rubles on top of the price of the 3 GB version. Here, the most interesting options for the money come from Palit. ASUS released a monstrous 28-centimeter cooler that it puts on the 1080, the 1070 and the 1060 alike, and such a card won't fit in many cases; its versions without a factory overclock cost almost the same but deliver less. And for the relatively compact MSI card they ask more than competitors charge for about the same quality and factory overclock.

Going all-in in 2016 is tricky. Yes, the 1080 is insanely cool, but perfectionists and hardware geeks know that NVIDIA is HIDING the super-flagship 1080 Ti, which must be incredibly cool. The first specifications are already leaking online, and it is clear that NVIDIA is waiting for AMD's move: whatever super-card the red camp releases, it can instantly be answered with the new king of 3D graphics, the great and powerful GTX 1080 Ti. In the meantime, we have what we have.

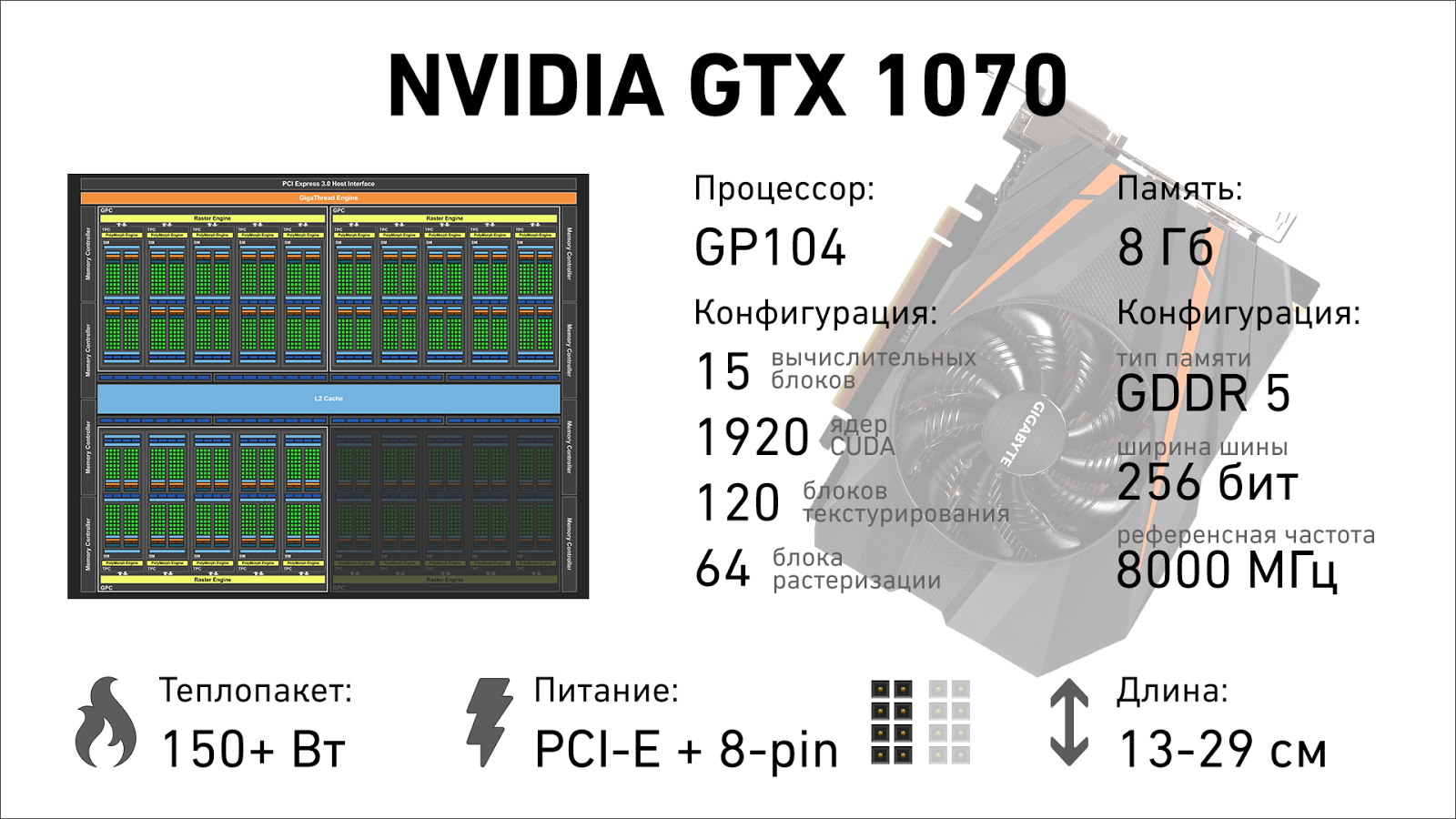

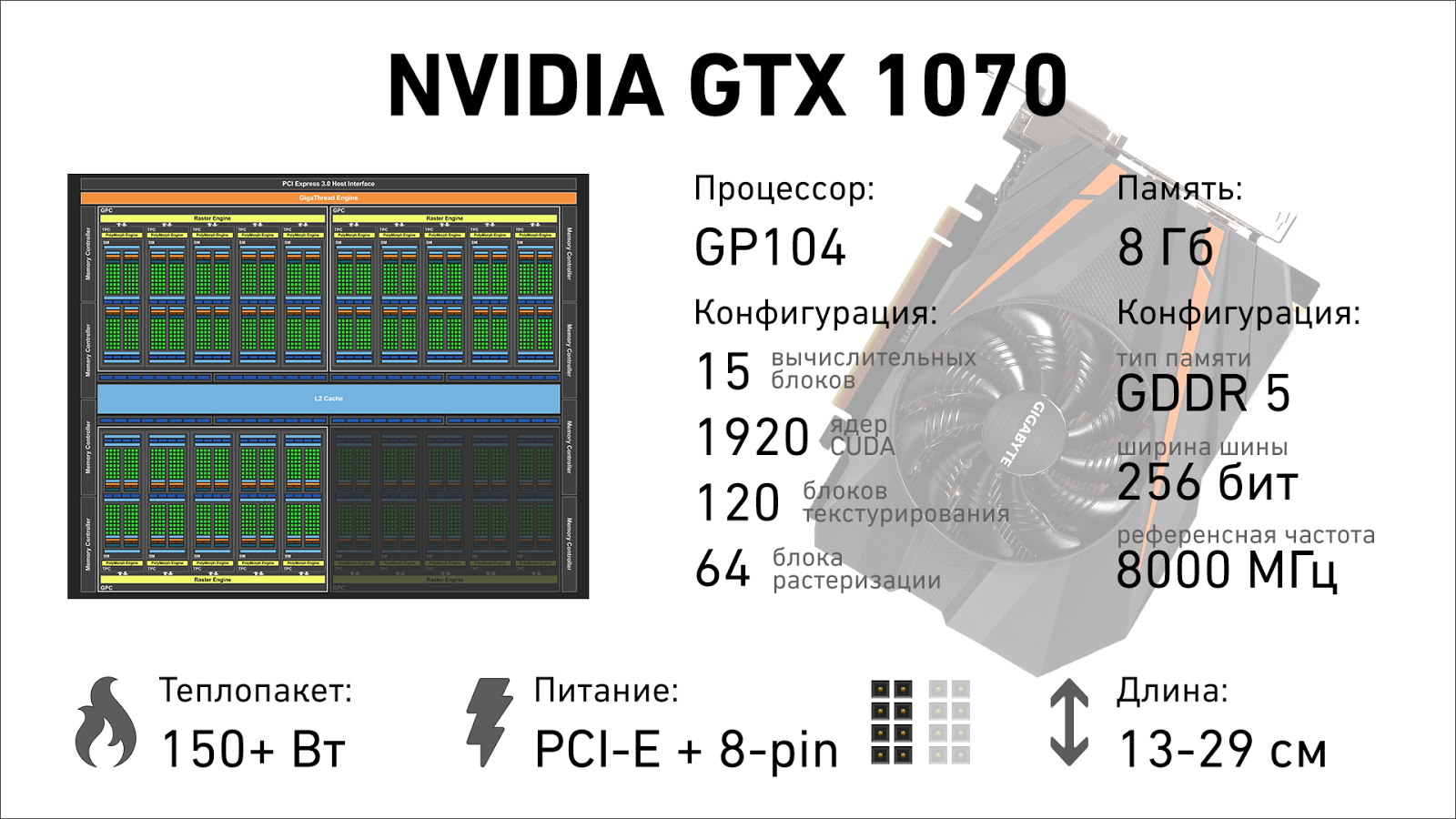

Last year's adventures of the hugely popular GTX 970 and its not-quite-honest 4 gigabytes of memory were dissected and chewed over all across the Internet. That didn't stop it from becoming the most popular gaming graphics card in the world: as the year draws to a close, it holds first place in the Steam Hardware & Software Survey. Understandably so, since its combination of price and performance was simply perfect. And if you skipped last year's upgrade and the 1060 doesn't seem cool enough, the GTX 1070 is your choice.

The card handles 2560x1440 and 3840x2160 with ease. The Boost 3.0 system will throw more fuel on the fire as GPU load increases (that is, in the most demanding scenes, when FPS sags under the onslaught of special effects), pushing the GPU to a stunning 2100+ MHz. The memory easily gains 15-18% of effective frequency above factory specs. A monstrous thing.

Note: all tests were run at 1440p (2560x1440):

DOOM 2016 (1440p, ULTRA): OpenGL - 91 FPS, Vulkan - 78 FPS;

The Witcher 3: Wild Hunt (1440p, MAX, HairWorks Off): DX11 - 73 FPS;

Battlefield 1 (1440p, ULTRA): DX11 - 91 FPS, DX12 - 83 FPS;

Overwatch (1440p, ULTRA): DX11 - 142 FPS.

Of course, maxing out ultra settings in 4K and never dropping below 60 frames per second is beyond both this card and the 1080, but you can play at full resolution on roughly "high" settings by disabling or slightly lowering the most demanding features, and in terms of real performance the card easily outperforms even last year's 980 Ti, which cost almost twice as much. The most interesting option comes from Gigabyte: thanks to the modest thermal envelope and energy-efficient design, they managed to cram a full-fledged 1070 into a mini-ITX-sized card. Prices for the good options start at 29-30 thousand rubles.

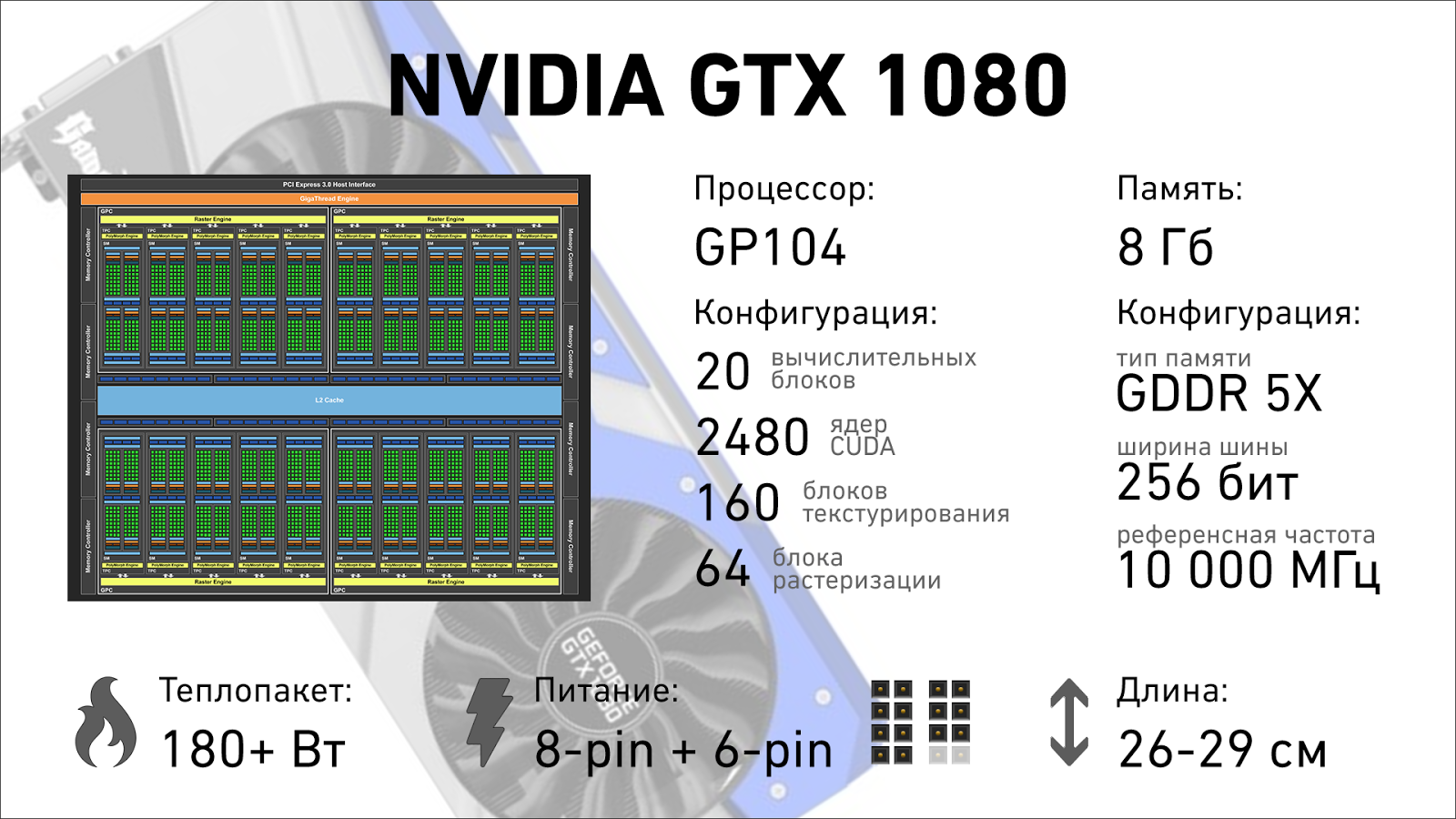

Yes, the flagship doesn't carry the Ti suffix. Yes, it doesn't use the largest GPU NVIDIA has. Yes, it lacks the very coolest HBM2 memory, and the card doesn't look like the Death Star or, at the very least, an Imperial Star Destroyer. And yes, it is the coolest gaming graphics card there is right now. It single-handedly runs DOOM at 5K with 60 frames per second on ultra settings. It handles all the new games, and for the next year or two it won't have any problems: until the new technologies built into Pascal become widespread, until game engines learn to use the available resources efficiently... Yes, in a couple of years we'll say: "Look at the GTX 1260: a couple of years ago you needed a flagship to play at these settings," but for now, the best of the best graphics cards is available before the New Year at a very reasonable price.

Note: all tests were run at 4K (3840x2160):

DOOM 2016 (2160p, ULTRA): OpenGL - 54 FPS, Vulkan - 78 FPS;

The Witcher 3: Wild Hunt (2160p, MAX, HairWorks Off): DX11 - 55 FPS;

Battlefield 1 (2160p, ULTRA): DX11 - 65 FPS, DX12 - 59 FPS;

Overwatch (2160p, ULTRA): DX11 - 93 FPS.

It only remains to decide whether you need it or can save money and take the 1070. There isn't a big difference between playing on "ultra" and "high", since modern engines render a fine picture at high resolution even on medium settings: after all, we're not playing on blurry consoles that can't deliver enough performance for true 4K and a stable 60 frames per second.

If you skip the cheapest options, Palit's GameRock version again offers the best combination of price and quality (about 43-45 thousand rubles): yes, the cooler is "thick", 2.5 slots, but the card is shorter than its competitors, and few people install a pair of 1080s anyway. SLI is slowly dying, and even the life-giving injection of high-speed bridges doesn't really help it. The ASUS ROG version is a good choice if you have many expansion cards installed and don't want to block extra slots: it is exactly 2 slots thick, but it needs 29 centimeters of free space from the rear panel to the drive cage. I wonder whether Gigabyte will manage to release this monster in ITX format too?

The new NVIDIA cards simply buried the used hardware market. Only the GTX 970 survives there, and it can be snatched up for 10-12 thousand rubles. Potential buyers of a used 7970 or R9 280 often simply have nowhere to fit it and nothing to power it with, and many options on the secondary market are unpromising: as a cheap upgrade for a couple of years they are worthless, with too little memory and no support for new technologies. The charm of the new generation is that even games not optimized for it run much more briskly than on the veterans of past years' GPU charts, and what will happen in a year, when game engines learn to use the full power of the new technologies, is hard to imagine.

Alas, I cannot recommend buying the cheapest Pascal. The RX 460 usually sells for a thousand or two less, and if your budget is so tight that you are buying a graphics card with your last money, the Radeon is objectively the more interesting investment. On the other hand, the 1050 is a little faster, so if prices for these two cards in your city are almost the same, take it.

The 1050 Ti, in turn, is an excellent option for those who care more about story and gameplay than about bells and whistles and realistic nose hair. It has no bottleneck in the form of 2 GB of video memory, so it will not “dry out” within a year. If you can add the money for it, do it. The Witcher at high settings, GTA V, DOOM, BF 1: no problem. Yes, you will have to give up a number of extras, such as extra-long shadows, complex tessellation or “expensive” self-shadowing of models computed with limited ray tracing, but in the heat of battle you will forget about these pretty things after ten minutes of play, and a stable 50-60 frames per second gives far more immersion than nervous jumps between 25 and 40 with the settings at “maximum”.

If you have a Radeon 7850, a GTX 760 or anything weaker, or any graphics card with 2 GB of video memory or less, you can safely upgrade.

The junior 1060 will please those who care more about a frame rate of 100 FPS than about graphical bells and whistles. Still, it lets you comfortably play every released game in FullHD at high or maximum settings and a stable 60 frames per second, and it costs much less than anything above it. The senior 1060 with 6 gigabytes of memory is an uncompromising FullHD solution with performance headroom for a year or two, an introduction to VR and a perfectly acceptable candidate for gaming at high resolutions on medium settings.

There is no point in trading your GTX 970 for a GTX 1060; it will hold out for another year. But a tired 960, 770, 780, R9 280X or anything older can safely be upgraded to a 1060.

The 1070 is unlikely to become as popular as the GTX 970 (after all, most users upgrade their hardware every two years), but in terms of price and quality it is certainly a worthy successor in the 70 line. It simply grinds through games at the mainstream 1080p resolution, copes easily with 2560x1440, withstands the ordeal of poorly optimized 21:9 and is quite capable of 4K, albeit not at maximum settings.

Yes, SLI is an option too.

We say goodbye to every 780 Ti, R9 390X and last year's 980, especially if we want to play at high resolution. And yes, this is the best option for fans of building an infernal Mini-ITX box and scaring guests with 4K games on a 60-70 inch TV driven by a computer the size of a coffee maker.

The GTX 1080 easily replaces any combination of previous graphics cards, except a pair of 980 Ti, Fury X or some furious Titans. True, the power consumption of such monstrous configurations cannot be compared with a single 1080, and dual-card setups come with their own complaints about quality.

That's all from me. My New Year's gift to you is this guide, and what to treat yourself to is your call :) Happy New Year!

Pascal's Mess and Origin

Fermi

If the 8000, 9000 and 200 series were the first steps toward the very concept of a “modern architecture” with unified shader processors (just like AMD, yes), then the 400 series was already very close to what we see in, say, the 1070. Yes, Fermi kept a small legacy crutch from previous generations: the shader units ran at twice the frequency of the core, which handled the geometry calculations. But the overall picture of a GTX 480 is not much different from that of a 780: SM multiprocessors are grouped into clusters, the clusters communicate with the memory controllers through a shared cache, and results go to the rasterization unit shared by each cluster:

The block diagram of the GF100 processor used in the GTX 480.

The 500 series used the same Fermi, slightly improved “inside” and with fewer defects, so the top models received 512 CUDA cores instead of the previous generation's 480. Visually, the block diagrams look like twins:

GF110 is the heart of the GTX 580.

Some frequencies were raised and the chip design was tweaked slightly, but there was no revolution: the same 40 nm process and 1.5 GB of video memory on a 384-bit bus.

Kepler

With the arrival of the Kepler architecture, a lot changed. It was arguably this generation that set the development vector leading to today's NVIDIA graphics cards. Not only did the GPU architecture change, but so did NVIDIA's internal process for developing new hardware. Where Fermi focused on finding a solution that delivered high performance, Kepler bet on energy efficiency, rational use of resources, high frequencies and making it easy to optimize game engines for a high-performance architecture.

The GPU design changed seriously: it was based not on the “flagship” GF100 / GF110 but on the “budget” GF104 / GF114, used in one of the most popular cards of its time, the GTX 460.

The overall layout became simpler: four graphics processing clusters, each holding two large unified shader multiprocessors. The new flagship looked roughly like this:

GK104 installed in the GTX 680.

As you can see, each compute unit became much heavier than in the previous architecture and was renamed SMX. Compare the structure of the block with the Fermi one shown above.

The GK104 SMX multiprocessor.

The 600 series had no cards on a full-fledged processor containing six blocks of compute modules: the flagship was the GTX 680 with the GK104, and only the dual-GPU GTX 690, which simply carried two of these processors with all the necessary circuitry and memory, was faster. A year later, the GTX 680 with minor changes became the GTX 770, and the crown of Kepler's evolution was the cards on the GK110 chip: the GTX Titan and Titan Z, the 780 Ti and the regular 780. Inside, the same 28 nanometers; the only qualitative improvement (which did NOT make it into the consumer cards based on GK110) was double-precision performance.

Maxwell

The first Maxwell-based graphics card was... the NVIDIA GTX 750 Ti. A little later, cut-down versions followed in the form of the GTX 750 and 745 (the latter shipped only to OEM system builders), and at launch these junior cards really shook up the market for inexpensive graphics accelerators. The new architecture was tested on the GM107 chip, a tiny piece of the future flagships with their huge heatsinks and frightening prices. It looked roughly like this:

Yes, there is only one processing cluster, but see for yourself how much more complex it is than its predecessor:

Instead of the large SMX block, the GPU is now built from new, more compact SMM blocks. Kepler's basic compute units were good, but they suffered from poor utilization, a banal starvation for instructions: the system could not issue enough instructions to keep a large number of execution units busy. The Pentium 4 had roughly the same problems: its power sat idle, and branch mispredictions were very expensive. In Maxwell, each compute module was split into four parts, each with its own instruction buffer and warp scheduler (a warp is a group of threads executing the same operation). As a result, efficiency rose, the GPUs became more flexible than their predecessors, and, most importantly, NVIDIA proved out the new architecture with little blood and a fairly simple chip.
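A back-of-the-envelope model helps explain the “instruction hunger”. It uses NVIDIA's published layouts (a Kepler SMX has 192 CUDA cores and four warp schedulers; a Maxwell SMM has 128 cores split into four blocks of 32 with one scheduler each) and assumes, as a deliberate simplification, that each scheduler issues one instruction per clock for a full 32-thread warp and that memory stalls do not exist. The function name is just for illustration:

```python
WARP_SIZE = 32  # threads per warp on NVIDIA GPUs


def peak_core_utilization(cuda_cores, schedulers, instructions_per_clock=1):
    """Best-case fraction of CUDA cores kept busy in one clock.

    Simplified model: every scheduler issues `instructions_per_clock`
    independent instructions for a full warp; memory stalls are ignored.
    """
    lanes_fed = schedulers * instructions_per_clock * WARP_SIZE
    return min(1.0, lanes_fed / cuda_cores)


kepler_smx = peak_core_utilization(cuda_cores=192, schedulers=4)
maxwell_smm = peak_core_utilization(cuda_cores=128, schedulers=4)
kepler_dual = peak_core_utilization(cuda_cores=192, schedulers=4, instructions_per_clock=2)

print(f"Kepler SMX, single issue: {kepler_smx:.0%}")   # 67%
print(f"Maxwell SMM, single issue: {maxwell_smm:.0%}")  # 100%
print(f"Kepler SMX, dual issue: {kepler_dual:.0%}")     # 100%
```

In this toy model Kepler only fills all of its cores when the code offers enough independent instructions for dual issue, while Maxwell's one-scheduler-per-32-cores layout does not depend on that.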

Mobile solutions benefited the most from these innovations: the die area grew by a quarter, while the number of execution units in the multiprocessors almost doubled. As luck would have it, it was the 700 and 800 series that created the main mess in the naming. The 700 series alone included cards based on Kepler, Maxwell and even Fermi! That is why the desktop Maxwells, to distance themselves from the hodgepodge of previous generations, got the common 900 series, from which the GTX 9xxM mobile cards later branched off.

Pascal - the logical evolution of Maxwell architecture

What was laid down in Kepler and continued in Maxwell carried over to Pascal as well: the first consumer cards were released not on the largest chip but on the GP104, which consists of four graphics processing clusters. The full-size six-cluster GP102 went into the expensive semi-professional card branded TITAN X. Still, even the “cut-down” 1080 shines so brightly that past generations feel sick.

Performance improvement

The foundation

Maxwell became the foundation of the new architecture: the block diagrams of comparable processors (GM204 and GP104) look almost identical, and the main difference is the number of multiprocessors packed into the clusters. In Kepler (the 700 generation) there were two large SMX multiprocessors, which Maxwell split into four parts each, adding the necessary glue logic (and renaming them SMM). Pascal added two more to the existing eight in the block, so there are now ten, and the abbreviation was changed yet again: single multiprocessors are once more called SM.
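The cluster arithmetic can be checked against the published specifications of the fully enabled chips. Counted per cluster, Kepler's GK104 has two SMX, Maxwell's GM204 four SMM and Pascal's GP104 five SM. The dictionary layout and the function name below are only for illustration:

```python
# (graphics clusters, multiprocessors per cluster, CUDA cores per multiprocessor)
CHIPS = {
    "GK104 (Kepler, GTX 680)": (4, 2, 192),
    "GM204 (Maxwell, GTX 980)": (4, 4, 128),
    "GP104 (Pascal, GTX 1080)": (4, 5, 128),
}


def cuda_cores(clusters, sms_per_cluster, cores_per_sm):
    """Total CUDA cores of a fully enabled chip."""
    return clusters * sms_per_cluster * cores_per_sm


for name, layout in CHIPS.items():
    print(f"{name}: {cuda_cores(*layout)} CUDA cores")
```

This prints 1536, 2048 and 2560 cores respectively: Maxwell gained by making its multiprocessors smaller and more numerous, and Pascal by adding one more of them to every cluster.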

Otherwise, the resemblance is complete. Inside, however, the changes are even bigger.

Engine of progress

The changes inside the multiprocessor are indecent. So as not to dive into very boring details of what was redone, how it was optimized and how things were before, I will describe the changes very briefly; some of you are already yawning.

First, Pascal reworked the part responsible for the geometric side of the picture. This matters for multi-monitor setups and VR headsets: with proper support from the game engine (and NVIDIA will be quick to help with that), the graphics card can compute the geometry once and produce several projections of it, one for each screen. This substantially reduces the load in VR, not only in triangle processing (where the gain is a straight twofold) but also in pixel work.

A hypothetical 980 Ti processes the geometry twice (once per eye), then textures each image and runs post-processing on it, handling about 4.2 million pixels in total, of which only about 70% are actually used; the rest are clipped or fall into regions that are simply not displayed to either eye.

The 1080 processes the geometry once, and pixels that do not end up in the final image are simply not computed.
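The comparison above reduces to simple arithmetic. This sketch plugs in the figures from the text (about 4.2 million pixels per stereo frame, about 70% of them visible) and assumes, as the text does, that Pascal skips every invisible pixel; real savings depend on the headset and the engine, and the function is hypothetical:

```python
def vr_frame_work(total_pixels, useful_percent, single_pass):
    """Return (geometry passes, pixels shaded) for one stereo VR frame.

    Simplified model: without multi-projection the geometry is processed
    once per eye and every pixel of both render targets is shaded; with it,
    geometry is processed once and pixels that never reach the eyes are skipped.
    """
    if single_pass:
        return 1, total_pixels * useful_percent // 100
    return 2, total_pixels


maxwell = vr_frame_work(4_200_000, 70, single_pass=False)
pascal = vr_frame_work(4_200_000, 70, single_pass=True)
print(maxwell)  # (2, 4200000)
print(pascal)   # (1, 2940000)
```

Under these assumptions, Pascal does half the geometry work and shades roughly 1.26 million fewer pixels per frame.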

With pixels, things are actually even better. Memory bandwidth can only be increased on two fronts (higher frequency or more data per clock), and both cost money, while the GPU's “appetite” for memory grows every year with higher resolutions and the development of VR, so the remaining option is to improve the “free” ways of raising throughput. If you cannot widen the bus or raise the frequency, you have to compress the data. Hardware compression already existed in previous generations, but Pascal took it to a new level. Again, we can skip the boring math and take a ready-made example from NVIDIA: on the left is Maxwell, on the right is Pascal; pixels whose color data were compressed without loss of quality are filled with pink.

Instead of transferring 8x8 pixel tiles as they are, memory stores an “average” color plus a matrix of deviations from it; such data take from ½ to ⅛ of the original volume. In real workloads, the load on the memory subsystem dropped by 10 to 30%, depending on how many gradients and uniform fills there are in complex scenes.
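The “reference plus deviations” idea can be illustrated with a toy version. This is not NVIDIA's actual (unpublished) format. As assumptions, it uses the tile minimum rather than the average as the reference so that deltas stay non-negative, works on a single 8-bit channel, and snaps the result to fixed 2:1, 4:1 and 8:1 modes to mirror the ½ to ⅛ range quoted above. The function name is hypothetical:

```python
def compress_tile(tile, bits_per_value=8):
    """Toy delta compression of one tile of single-channel pixel values.

    Stores one reference value (here the tile minimum) plus a per-pixel
    delta, then picks the most aggressive fixed mode (8:1, 4:1, 2:1) the
    packed tile fits into. Returns the stored size as a fraction of the raw
    size, or 1 if the tile has to stay uncompressed.
    """
    pixels = [v for row in tile for v in row]
    reference = min(pixels)
    delta_bits = max(v - reference for v in pixels).bit_length()  # 0 for a flat tile
    raw_bits = len(pixels) * bits_per_value
    packed_bits = bits_per_value + len(pixels) * delta_bits
    for ratio in (8, 4, 2):  # try the strongest compression first
        if packed_bits <= raw_bits // ratio:
            return 1 / ratio
    return 1


flat = [[128] * 8 for _ in range(8)]                        # uniform fill
gradient = [[100 + x for x in range(8)] for _ in range(8)]  # gentle gradient
noise = [[(x * 37 + y * 91) % 256 for x in range(8)] for y in range(8)]

print(compress_tile(flat))      # 0.125
print(compress_tile(gradient))  # 0.5
print(compress_tile(noise))     # 1
```

Uniform fills and gentle gradients compress well, while noisy tiles stay at full size, which matches the text's point that the savings depend on the gradients and fills in a scene.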

That was not enough for the engineers, so the flagship (GTX 1080) got memory with higher bandwidth: GDDR5X transfers twice as many data bits (not instructions) per clock and reaches 10 Gbit/s per pin and more. Transferring data at such an insane rate required a completely new memory layout on the board, and overall, memory efficiency rose by 60-70% compared with the previous generation's flagships.
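To put the numbers in context, peak bandwidth is the per-pin data rate times the bus width, divided by eight bits per byte. The sketch uses the reference specifications of the GTX 980 and the GTX 1080 (both with a 256-bit bus); the 20% traffic saving in the last line is an assumed value picked from the 10-30% range mentioned earlier, and the function names are illustrative:

```python
def peak_bandwidth_gb_s(gbit_per_pin, bus_width_bits):
    """Theoretical peak memory bandwidth in GB/s."""
    return gbit_per_pin * bus_width_bits / 8


def effective_bandwidth_gb_s(peak, traffic_saved):
    """Bandwidth the GPU effectively gets if compression removes a share of traffic.

    traffic_saved is an assumed fraction, e.g. somewhere between 0.1 and 0.3.
    """
    return peak / (1 - traffic_saved)


gtx_980 = peak_bandwidth_gb_s(7, 256)    # GDDR5, 7 Gbit/s per pin
gtx_1080 = peak_bandwidth_gb_s(10, 256)  # GDDR5X, 10 Gbit/s per pin
print(gtx_980, gtx_1080)                               # 224.0 320.0
print(round(effective_bandwidth_gb_s(gtx_1080, 0.2)))  # 400
```

The model deliberately ignores that Maxwell also compressed data, so it shows the direction of the gain rather than reproducing the 60-70% figure.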

Reduced latency and downtime

Graphics cards have long handled not only graphics but related computations as well. Physics is often tied to animation frames and is highly parallel, which makes it far more efficient to compute on the GPU. But the biggest source of problems in recent years has been VR. Many game engines, development methodologies and a host of other graphics technologies were simply not designed for VR: the case of the camera moving or the user's head changing position while a frame is being rendered was simply not handled. If everything is left as is, the desynchronization between the video stream and your movements will cause bouts of motion sickness and break immersion in the game world, which means “wrong” frames have to be thrown away after rendering and the work started over. That means new delays in displaying the image, which does nothing good for performance.

Pascal took this problem into account and implemented dynamic load balancing and asynchronous preemption: execution units can now either interrupt the current task (saving its progress to cache) to handle more urgent work, or simply discard a half-finished frame and start a new one, significantly reducing image latency. The main beneficiaries are, of course, VR and games, but the technology helps general-purpose computing too: a particle collision simulation gained 10-20% in performance.
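The two options described above can be pictured with a toy scheduler. This is a conceptual sketch, not how the hardware or the driver works. A frame is a fixed number of work steps. An urgent job interrupts the frame but its progress is kept. A stale-frame event (say, the head moved) throws the partial frame away and starts a new one. The event format and the function name are hypothetical choices for illustration:

```python
def run_frame(frame_steps, events):
    """Simulate rendering one frame tick by tick.

    events maps a tick number to ("urgent", job_name) or ("stale", None).
    Returns a log of what the GPU did, in order.
    """
    log, done, tick = [], 0, 0
    while done < frame_steps:
        kind, job = events.get(tick, (None, None))
        if kind == "urgent":
            log.append(f"urgent:{job}")  # urgent job runs first; frame progress in `done` is kept
        elif kind == "stale":
            log.append("drop frame")     # head pose changed: restart the frame from scratch
            done = 0
        log.append(f"frame:{done}")
        done += 1
        tick += 1
    return log


print(run_frame(3, {1: ("urgent", "physics")}))
# ['frame:0', 'urgent:physics', 'frame:1', 'frame:2']
print(run_frame(3, {2: ("stale", None)}))
# ['frame:0', 'frame:1', 'drop frame', 'frame:0', 'frame:1', 'frame:2']
```

With preemption the frame resumes where it stopped. Dropping it costs the work already done, but avoids showing an image that no longer matches the player's head position.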

Boost 3.0

NVIDIA graphics cards got automatic overclocking long ago, back in the Kepler generation (GPU Boost debuted on the GTX 680). Maxwell improved it, but it was still, to put it mildly, so-so: yes, the card ran a little faster while the thermal budget allowed, and the extra 20-30 MHz out of the box on top of the 50-100 MHz factory overclock baked into the firmware did give a gain, but not much. It worked roughly like this:

Even when the GPU had temperature headroom, performance did not increase. With Pascal, the engineers stirred up this stagnant swamp too. Boost 3.0 works on three fronts: temperature analysis, raising the clock frequency and raising the chip voltage. Now every last drop is squeezed out of the GPU: NVIDIA's standard drivers do not do this, but vendors' software lets you build a profile curve in one click that takes into account the quality of your particular card.

EVGA was one of the first here: its Precision XOC utility includes an NVIDIA-certified scanner that steps through the entire range of temperatures, frequencies and voltages, reaching maximum performance in every mode.
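The difference between a single global offset and a per-point curve is easy to sketch. Everything here is hypothetical. The voltage points, the stock clocks and `demo_is_stable` (which stands in for the stress test a real tool would run) are made up. The code illustrates the idea, not EVGA's or NVIDIA's actual algorithm:

```python
def scan_vf_curve(base_curve, is_stable, step_mhz=25, max_offset_mhz=300):
    """Per-voltage-point frequency scan, in the spirit of Boost 3.0 tuning.

    base_curve maps voltage (mV) -> stock frequency (MHz). For every point the
    frequency is raised in steps while is_stable() still passes, and the last
    stable value is kept. is_stable is a stand-in for a real stress test.
    """
    tuned = {}
    for mv, mhz in base_curve.items():
        offset = 0
        while offset + step_mhz <= max_offset_mhz and is_stable(mv, mhz + offset + step_mhz):
            offset += step_mhz
        tuned[mv] = mhz + offset
    return tuned


def demo_is_stable(mv, mhz):
    # Hypothetical chip: the highest stable clock rises 2 MHz per extra mV.
    return mhz <= 1600 + (mv - 800) * 2


stock = {800: 1500, 900: 1700, 1000: 1850}
per_point = scan_vf_curve(stock, demo_is_stable)
print(per_point)  # {800: 1600, 900: 1800, 1000: 2000}

# Classic single-offset approach: one offset for the whole curve, limited by the weakest point.
single = min(per_point[mv] - stock[mv] for mv in stock)
print({mv: mhz + single for mv, mhz in stock.items()})  # {800: 1600, 900: 1800, 1000: 1950}
```

On this made-up chip the per-point curve gains an extra 50 MHz at the top voltage, where a single offset would be held back by the weakest point.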

Add a new process technology, high-speed memory, all sorts of optimizations and lower chip heat output, and the result is simply indecent. From the 1500 MHz “base” clock of the GTX 1060 you can squeeze more than 2000 MHz if you get a good sample and the vendor did not skimp on cooling.

Improving the quality of the picture and perception of the game world

Performance was improved on all fronts, but there are some areas where there had been no qualitative changes for years, such as image output. This is not about graphical effects, which game developers provide, but about what exactly we see on the monitor and how the game looks to the end user.

Fast vertical sync

The key feature of Pascal here is triple buffering for frame output, which provides both ultra-low rendering latency and vertical synchronization. The image being output is stored in one buffer, the last completed frame in another, and the current frame is drawn in the third. Goodbye, horizontal stripes and tearing; hello, high performance. There are none of the delays that classic V-Sync introduces (since nothing holds the card back and it always renders at the highest possible frame rate), and only fully formed frames are sent to the monitor. I think that after the New Year I will write a separate big post about V-Sync, G-Sync, FreeSync and this new fast synchronization algorithm from NVIDIA; there are too many details.
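The buffer rotation described above can be sketched in a few lines. This is a simplified model under stated assumptions: frames are plain integer IDs standing in for buffers, rendering is just bookkeeping, and the class and method names are hypothetical, not a driver API. It shows the key property. The renderer never waits, and each refresh shows the newest complete frame:

```python
class FastSyncBuffers:
    """Toy model of the three buffers: front, last completed, and back."""

    def __init__(self):
        self.front = None      # being scanned out to the monitor
        self.last_done = None  # newest fully rendered frame, not yet shown
        self.back = None       # frame the GPU is drawing right now

    def render(self, frame_id):
        # The GPU never blocks: it draws into the back buffer, and the finished
        # frame replaces any older completed frame that was never displayed.
        self.back = frame_id
        self.last_done, self.back = self.back, None

    def vblank(self):
        # At each refresh, only a complete frame is flipped to the front, so no tearing.
        if self.last_done is not None:
            self.front, self.last_done = self.last_done, None
        return self.front


def simulate(frames_per_refresh, refreshes):
    """Return the frame shown at each refresh."""
    bufs = FastSyncBuffers()
    shown, frame_id = [], 0
    for _ in range(refreshes):
        for _ in range(frames_per_refresh):
            frame_id += 1
            bufs.render(frame_id)
        shown.append(bufs.vblank())
    return shown


print(simulate(3, 3))  # [3, 6, 9]
```

With three frames rendered per refresh (for example, 180 FPS on a 60 Hz monitor), the screen shows frames 3, 6 and 9, and the rest are silently dropped instead of stalling the GPU.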

Normal screenshots

No, the screenshots we have now are just a shame. Almost every game uses a pile of technologies to make the picture in motion breathtaking, while screenshots become a real nightmare: instead of a stunningly realistic image built from animations and special effects that exploit the features of human vision, you see some angular, half-finished mess with strange colors and an utterly lifeless picture.

The new NVIDIA Ansel technology solves the screenshot problem. Yes, it requires game developers to integrate special code, but the actual work is minimal and the payoff is huge. Ansel can pause the game and hand camera control over to you, and then there is room for creativity. You can also simply take a shot without the GUI from your favorite angle.

You can render the existing scene at ultra-high resolution, shoot 360-degree panoramas and either stitch them into a flat image or keep them in 3D form for viewing in a VR headset. You can take a shot with 16 bits per channel, save it as a kind of RAW file and then play with exposure, white balance and other settings so that screenshots become attractive again. Expect tons of cool content from game fans in a year or two.

Sound processing on the video card

The new NVIDIA GameWorks libraries add many features for developers. They are mainly aimed at VR, at accelerating various computations and at improving image quality, but one feature is the most interesting and worth mentioning. VRWorks Audio takes sound to a whole new level: instead of computing sound with commonplace averaged formulas based on distance and obstacle thickness, it fully traces the sound signal, with all reflections from the surroundings, reverberation and absorption in various materials. NVIDIA has a good video showing how this technology works:

Best watched with headphones.

In theory, nothing prevents you from running such a simulation on Maxwell, but Pascal's optimizations for asynchronous instruction execution and its new interrupt system let these calculations run without hurting the frame rate.

Pascal in summary

In fact, there are even more changes, and many of them go so deep into the architecture that each could fill a huge article. The key innovations are the improved design of the chips themselves, low-level optimizations for geometry and asynchronous operation with full interrupt handling, plenty of features aimed at high resolutions and VR, and, of course, crazy frequencies that previous generations could not even dream of. Two years ago the 780 Ti barely crossed the 1 GHz mark; today the 1080 in some cases runs at two. The credit goes not only to the process shrink from 28 nm to 16 or 14 nm: many things have been optimized at the lowest level, from the design of the transistors to their layout and interconnect inside the chip.

For each individual case

The NVIDIA 10 series line turned out really balanced, covering all gaming use cases quite thoroughly, from “play strategy games and Diablo” to “I want top games in 4K”. The game tests were chosen by one simple principle: cover the widest possible range of scenarios with the smallest possible set of tests. BF1 is a great example of good optimization and lets you compare DX11 and DX12 performance under the same conditions. DOOM was chosen for the same reason, except that it lets you compare OpenGL and Vulkan. The third Witcher plays the role of a so-so optimized game whose maximum graphics settings can bring any flagship to its knees through sheer sloppiness. It uses classic DX11, which is time-tested, well developed in drivers and familiar to game developers. Overwatch stands in for all the tournament games.

A few general comments right away: Vulkan is very hungry for video memory; for it, this is one of the key characteristics, and you will see this reflected in the benchmarks. DX12 behaves much better on AMD cards than on NVIDIA: where the “greens” on average show an FPS drop on the new APIs, the “reds”, on the contrary, gain.

Junior Division

GTX 1050

The junior NVIDIA card (without the Ti suffix) is not as interesting as its souped-up Ti sister. Its destiny is to be a gaming solution for MOBAs, strategy games, tournament shooters and other games where few people care about detail and picture quality, and where a stable frame rate for minimal money is just what the doctor ordered.

None of the spec images list a core frequency, because it varies from card to card: a 1050 without auxiliary power cannot be pushed far, while its sibling with a 6-pin connector can easily reach a nominal 1.9 GHz. For power and length, the most popular variants are shown; you can always find a card with a different board design or cooling that does not fit these “standards”.

DOOM 2016 (1080p, ULTRA): OpenGL - 68 FPS, Vulkan - 55 FPS;

The Witcher 3: Wild Hunt (1080p, MAX, HairWorks Off): DX11 - 38 FPS;

Battlefield 1 (1080p, ULTRA): DX11 - 49 FPS, DX12 - 40 FPS;

Overwatch (1080p, ULTRA): DX11 - 93 FPS.

The GTX 1050 uses the GP107 graphics processor shared with its older sibling, with a few functional blocks disabled. 2 GB of video memory will not let you go wild, but for esports titles and playing something like World of Tanks it is perfect, especially since the cheapest cards start at 9.5 thousand rubles. No auxiliary power is required: the 75 watts supplied by the motherboard through the PCI-Express slot are enough. However, this price segment also has the AMD Radeon RX 460, which costs less with the same 2 GB of memory and is almost as good, and for about the same money you can get the RX 460 in its 4 GB version. Not that the extra memory helps it much, but it is some reserve for the future. The choice of vendor is not that important: take whatever is available and does not cost an extra thousand rubles.

GTX 1050 Ti

About 10 thousand for the regular 1050 is not bad, but the souped-up (or full, call it what you like) version costs only a little more (on average 1-1.5 thousand rubles more), and its internals are far more interesting. By the way, the whole 1050 series is not made from trimmed or rejected “large” chips unfit for the 1060, but is a fully independent product. It has a finer process (14 nm), a different fab (the chips are made at a Samsung plant), and there are extremely interesting variants with auxiliary power: the thermal package and baseline consumption are the same 75 watts, but the overclocking potential and the ability to exceed the limits are completely different.

If you keep playing at FullHD (1920x1080), do not plan to upgrade, and the rest of your hardware is 3-5 years old, this is a great way to boost game performance at little cost. It is worth looking at ASUS and MSI models with an extra 6-pin power connector; Gigabyte's versions are not bad, but their prices are less attractive.

DOOM 2016 (1080p, ULTRA): OpenGL - 83 FPS, Vulkan - 78 FPS;

The Witcher 3: Wild Hunt (1080p, MAX, HairWorks Off): DX11 - 44 FPS;

Battlefield 1 (1080p, ULTRA): DX11 - 58 FPS, DX12 - 50 FPS;

Overwatch (1080p, ULTRA): DX11 - 104 FPS.

Middle division

Cards of the 60 line have long been considered the best choice for those who do not want to spend much money but still want to play everything released over the next couple of years at high graphics settings. It started back with the GTX 260, which came in two versions (a simpler one with 192 stream processors and a fatter one with 216 “stones”), continued through the 400, 500 and 700 generations, and now NVIDIA has again hit an almost perfect combination of price and quality. Two versions of the “middle” card are available again: the 3 GB and 6 GB GTX 1060 differ not only in the amount of available memory but also in performance.

GTX 1060 3GB

Queen of esports. A reasonable price, amazing FullHD performance (and esports rarely uses higher resolutions, since results matter more there than looks), and a reasonable amount of memory (3 GB, mind you, is what the flagship GTX 780 Ti had two years ago, and it cost indecent money). In terms of performance, the junior 1060 easily beats last year's GTX 970 with its memorable 3.5 GB of memory and comfortably outruns the super-flagship 780 Ti from the year before.

DOOM 2016 (1080p, ULTRA): OpenGL - 117 FPS, Vulkan - 87 FPS;

The Witcher 3: Wild Hunt (1080p, MAX, HairWorks Off): DX11 - 70 FPS;

Battlefield 1 (1080p, ULTRA): DX11 - 92 FPS, DX12 - 85 FPS;

Overwatch (1080p, ULTRA): DX11 - 93 FPS.

There is an undisputed favorite in terms of price-to-performance: the MSI version. Good frequencies, a quiet cooling system and sane dimensions. And they ask next to nothing for it, around 15 thousand rubles.

GTX 1060 6GB

The six-gigabyte version is a budget ticket to VR and high resolutions. It will not run short of memory, is a little faster in every test, and will confidently beat the GTX 980 wherever last year's card runs short of its 4 GB of video memory.

DOOM 2016 (1080p, ULTRA): OpenGL - 117 FPS, Vulkan - 121 FPS;

The Witcher 3: Wild Hunt (1080p, MAX, HairWorks Off): DX11 - 73 FPS;

Battlefield 1 (1080p, ULTRA): DX11 - 94 FPS, DX12 - 90 FPS;

Overwatch (1080p, ULTRA): DX11 - 166 FPS.

I would like to point out once again how the cards behave with the Vulkan API. The 1050 with 2 GB of memory: FPS drop. The 1050 Ti with 4 GB: roughly on par. The 1060 3 GB: drop. The 1060 6 GB: higher results. The trend, I think, is clear: Vulkan needs 4+ GB of video memory.
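The trend is easier to see as percentages. The sketch below only re-uses the DOOM figures published in this article (1080p, ULTRA) and adds no new measurements; the dictionary and function names are illustrative:

```python
# DOOM 2016, 1080p ULTRA results quoted above: (video memory in GB, OpenGL FPS, Vulkan FPS)
RESULTS = {
    "GTX 1050": (2, 68, 55),
    "GTX 1050 Ti": (4, 83, 78),
    "GTX 1060 3GB": (3, 117, 87),
    "GTX 1060 6GB": (6, 117, 121),
}


def vulkan_change_percent(opengl_fps, vulkan_fps):
    """Relative FPS change when switching from OpenGL to Vulkan, in percent."""
    return (vulkan_fps - opengl_fps) / opengl_fps * 100


for card, (vram, ogl, vk) in RESULTS.items():
    print(f"{card} ({vram} GB): {vulkan_change_percent(ogl, vk):+.1f}%")
```

This prints -19.1%, -6.0%, -25.6% and +3.4%. The 1050 Ti's small loss sits between the clear losses of the 2 GB and 3 GB cards and the gain of the 6 GB card.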

The trouble is that neither 1060 is a small card. The thermal package seems reasonable and the board really is small, but many vendors decided to simply reuse one cooling system across the 1080, 1070 and 1060. Some cards are 2 slots tall but 28+ centimeters long, others are shorter but thicker (2.5 slots). Choose carefully.

Unfortunately, the extra 3 GB of video memory and the unlocked compute unit will cost you about 5-6 thousand rubles on top of the price of the 3 GB version. Here, the most interesting options in price and quality come from Palit. ASUS released a monstrous 28-centimeter cooling system that it puts on the 1080, the 1070 and the 1060 alike, and such a card does not fit in many cases; its versions without a factory overclock cost almost the same but perform worse. As for the relatively compact MSI, they ask more for it than competitors charge for about the same build quality and factory overclock.

Major League

Playing for all the money in 2016 is difficult. Yes, the 1080 is insanely cool, but perfectionists and hardware enthusiasts know that NVIDIA is HIDING the super-flagship 1080 Ti, which must be incredibly cool. The first specifications are already leaking online, and it is clear that the “greens” are waiting for a move from the “reds”: some uber-gun that they can immediately answer with the new king of 3D graphics, the great and powerful GTX 1080 Ti. Meanwhile, we have what we have.

GTX 1070

Last year's saga of the mega-popular GTX 970 and its not-quite-honest 4 GB of memory was picked apart and chewed over all across the Internet. That did not stop it from becoming the most popular gaming graphics card in the world: as the year draws to a close, it holds first place in the Steam Hardware & Software Survey. That is understandable: its combination of price and performance was simply perfect. And if you missed last year's upgrade and the 1060 does not seem cool enough, the GTX 1070 is your choice.

The card handles 2560x1440 and 3840x2160 with ease. The Boost 3.0 overclocking system will try to add fuel when the GPU load increases (that is, in the most demanding scenes, when FPS sags under the onslaught of special effects), overclocking the GPU to a stunning 2100+ MHz. The memory easily gains 15-18% of effective frequency above the factory settings. A monstrous thing.

Attention, all tests were conducted in 2.5k (2560x1440):

DOOM 2016 (1440p, ULTRA): OpenGL - 91 FPS, Vulkan - 78 FPS;

The Witcher 3: Wild Hunt (1440p, MAX, HairWorks Off): DX11 - 73 FPS;

Battlefield 1 (1440p, ULTRA): DX11 - 91 FPS, DX12 - 83 FPS;

Overwatch (1440p, ULTRA): DX11 - 142 FPS.

Clearly, running ultra settings in 4K without ever dipping below 60 frames per second is beyond either this card or the 1080, but you can play at nominal “high” settings at full resolution by disabling or slightly lowering the most demanding features, and in real performance the card easily beats even last year's 980 Ti, which cost almost twice as much. The most interesting option comes from Gigabyte: they managed to fit a full-fledged 1070 into an ITX form factor, thanks to the modest thermal package and energy-efficient design. Prices for the tasty variants start at 29-30 thousand rubles.

GTX 1080

Yes, the flagship does not carry the Ti suffix. Yes, it does not use the largest GPU NVIDIA has. Yes, there is no cutting-edge HBM2 memory, and the card does not look like the Death Star or, at the very least, an Imperial Star Destroyer. And yes, it is the most powerful gaming graphics card available right now. A single card runs DOOM at 5k3k resolution at 60 frames per second on ultra settings. It handles every new game thrown at it and should have no trouble for the next year or two: until the new technologies built into Pascal become widespread, until game engines learn to use the available resources efficiently... Yes, in a couple of years we will say: “Look at the GTX 1260: a couple of years ago, you needed a flagship to play at these settings,” but for now the best of the best graphics cards is available before the New Year at a very reasonable price.

Note: all tests were run at 4K (3840x2160):

DOOM 2016 (2160p, ULTRA): OpenGL - 54 FPS, Vulkan - 78 FPS;

The Witcher 3: Wild Hunt (2160p, MAX, HairWorks Off): DX11 - 55 FPS;

Battlefield 1 (2160p, ULTRA): DX11 - 65 FPS, DX12 - 59 FPS;

Overwatch (2160p, ULTRA): DX11 - 93 FPS.

It only remains to decide whether you need it or whether you can save money and take the 1070. There is no big difference between playing at “ultra” and “high” settings, since modern engines render a great picture at high resolution even at medium settings: after all, we are not on blurry consoles that cannot deliver enough performance for native 4K and a stable 60 frames per second.

If we set aside the cheapest options, Palit’s GameRock version again offers the best combination of price and quality (about 43-45 thousand rubles): yes, the cooler is “thick” at 2.5 slots, but the card is shorter than its competitors, and people rarely run a pair of 1080s anyway. SLI is slowly dying, and even the life-giving injection of high-bandwidth bridges does not really help it. The ASUS ROG version is a good choice if you have many expansion cards installed and don’t want to block extra slots: their card is exactly 2 slots thick, but it needs 29 centimeters of free space between the rear wall of the case and the hard drive cage. I wonder whether Gigabyte will manage to release this monster in ITX format too?

Summary

The new NVIDIA graphics cards simply buried the used hardware market. Only the GTX 970 survives there, and it can be snatched up for 10-12 thousand rubles. Potential buyers of a used 7970 or R9 280 often lack the room or the power supply for it, and many options on the secondary market are simply unpromising: as a cheap upgrade for a couple of years they are worthless, with too little memory and no support for new technologies. The charm of the new generation is that even games that are not optimized for it run much faster than on the veterans of past years’ GPU charts, and it is hard to imagine what will happen in a year, when game engines learn to use the full power of the new technologies.

GTX 1050 and 1050Ti

Alas, I can’t recommend buying the cheapest Pascal. The RX 460 usually sells for a thousand or two rubles less, and if your budget is so tight that you are buying a graphics card with your last money, the Radeon is objectively the better investment. On the other hand, the 1050 is a little faster, so if prices for these two cards in your city are almost the same, take it.

The 1050Ti, in turn, is an excellent option for those who care more about story and gameplay than about bells and whistles and realistic nose hair. It does not have the bottleneck of 2 GB of video memory, so it will not “run dry” within a year. If you can add the money for it, do so. The Witcher at high settings, GTA V, DOOM, BF 1: no problem. Yes, you will have to give up a number of enhancements, such as extra-long shadow distances, complex tessellation, or “expensive” self-shadowing of models computed with limited ray tracing, but in the heat of battle you will forget about these niceties after 10 minutes of play, and a stable 50-60 frames per second gives a much stronger sense of immersion than nervous jumps between 25 and 40 with settings at “maximum”.
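The argument for a stable 50-60 FPS over a jumpy 25-40 FPS is easier to see in frame times. This Python sketch only converts the two frame-rate ranges from the paragraph above into milliseconds per frame; it ignores frame pacing and input lag, which also affect how smooth a game feels.

```python
def frame_time_ms(fps: float) -> float:
    """Time spent on each frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def swing_ms(low_fps: float, high_fps: float) -> float:
    """How much the frame time varies when FPS moves between two values."""
    return frame_time_ms(low_fps) - frame_time_ms(high_fps)


if __name__ == "__main__":
    for label, low, high in (("lowered settings", 50, 60), ("maximum settings", 25, 40)):
        print(
            f"{label}: {frame_time_ms(high):.1f}-{frame_time_ms(low):.1f} ms per frame, "
            f"swing {swing_ms(low, high):.1f} ms"
        )
```

With these inputs, the stable range varies by only about 3.3 ms per frame, while the 25-40 FPS range swings by 15 ms, which the eye reads as stutter.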

If you have a Radeon 7850, a GTX 760 or anything weaker, or any graphics card with 2 GB of video memory or less, you can safely upgrade.

GTX 1060

The younger 1060 (3 GB) will please those who care more about a frame rate of 100 FPS than about graphical bells and whistles. Still, it lets you comfortably play every released game at Full HD with high or maximum settings and a stable 60 frames per second, and its price is very different from everything above it. The older 1060 with 6 gigabytes of memory is an uncompromising Full HD solution with performance headroom for a year or two, an introduction to VR, and a perfectly acceptable candidate for playing at high resolutions with medium settings.

It makes no sense to swap your GTX 970 for a GTX 1060; it will hold out for another year. But a tired 960, 770, 780, R9 280X or anything older can safely be upgraded to a 1060.

Top Segment: GTX 1070 and 1080

The 1070 is unlikely to become as popular as the GTX 970 (after all, most users upgrade their hardware every two years), but in terms of price and quality it is certainly a worthy continuation of the 70 line. It simply grinds through games at the mainstream 1080p resolution, easily copes with 2560x1440, withstands the ordeal of poorly optimized 21:9, and is quite capable of driving 4K, albeit not at maximum settings.

Yes, SLI is an option too.

We wave goodbye to every 780 Ti, R9 390X and last year’s 980, especially if we want to play at high resolution. And yes, this is the best option for enthusiasts who want to build a hellish Mini-ITX box and scare guests with 4K games on a 60-70 inch TV driven by a computer the size of a coffee maker.

The GTX 1080 easily replaces any combination of previous graphics cards, except a pair of 980 Tis, Fury Xs or some furious Titans. True, the power consumption of such monstrous configs cannot be compared with a single 1080, and there are complaints about how well such pairs work together.

That's all from me. My New Year's gift to you is this guide, and as for what to treat yourself to, that's your choice :) Happy New Year!