How Amazon's new face recognition system is being used, and why some people hate it

Amazon has developed a powerful new system for real-time face recognition. Neural networks are fed photos and videos and work out what (or who) is shown in them. Any AWS user can use it. In the United States, sheriffs are already using it to catch criminals, and television networks use it to identify celebrities in live broadcasts. But the technology also has ardent opponents, who are writing letters to Bezos urging Amazon to stop developing the system immediately and warning that the consequences could be unpredictable.

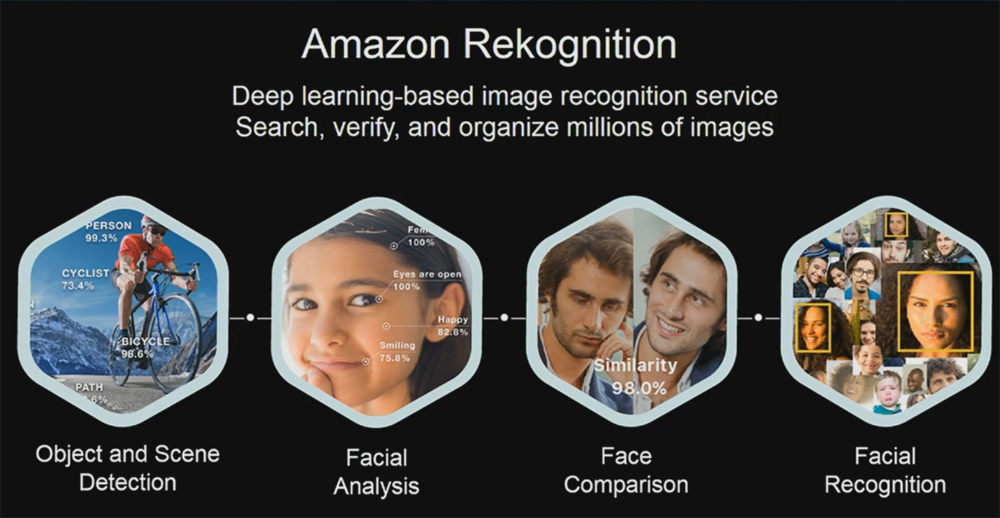

Amazon Rekognition lets you embed deep-learning image and video analysis into your applications. You hand the neural networks a video clip or a series of pictures, and they work out what is shown. The system can recognize people's faces, their actions, objects, and the surroundings. It can even detect pornography or a murder scene.

Image analysis was introduced in 2016, and video analysis in 2017. As is typical for neural networks, the product has matured considerably since then, getting smarter and learning new tricks. For example, early on Rekognition could not determine the context of an image: what the person is doing and what their facial expression shows (pleasure, fear, anger?). Back then it was often compared with Microsoft Cognitive Services, and reviewers said Microsoft did better.

Still, the AWS system ended up far better known than its competitor. Perhaps this is because Amazon's cloud services held 33% of the market, versus only 13% for Microsoft. Amazon itself says its service is more powerful because it has accumulated more data to train its neural networks on. Be that as it may, in the United States Rekognition is now the public face of deep-learning photo and video recognition, for ordinary people and the authorities alike. And that is now causing the company a lot of trouble.

How it works

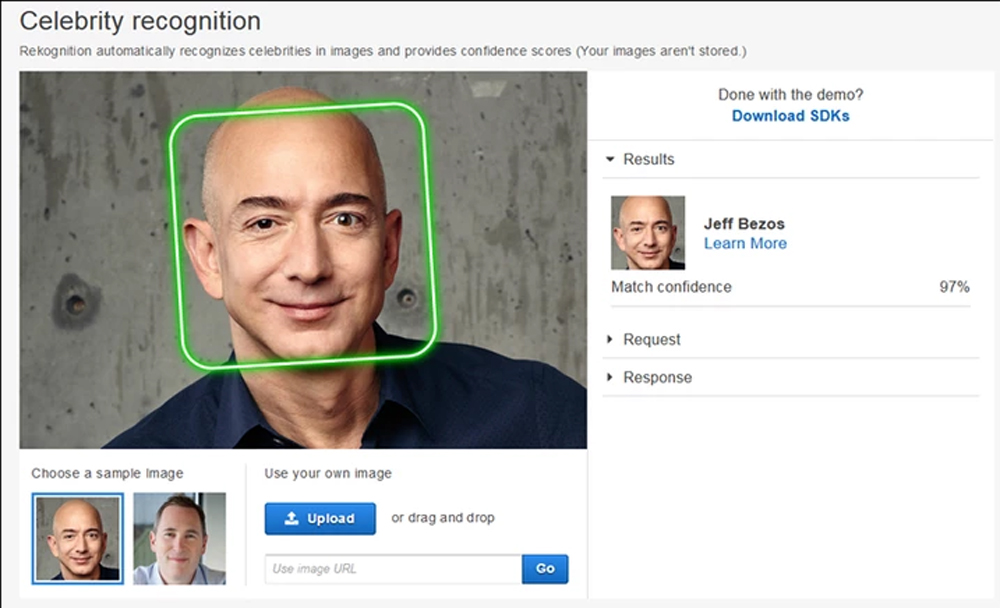

Rekognition can search collections of tens of millions of faces in real time. You first create such a collection (or use the ready-made option Amazon provides). The service then quickly searches it for faces that are visually similar to the faces in a photo or video.
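The collection-then-search workflow maps onto a handful of calls in the AWS SDK. Below is a minimal sketch in Python, assuming a boto3 client created with `boto3.client("rekognition")` and configured credentials; the collection ID, person IDs and the 80% similarity threshold are illustrative choices, not values from the article. The client is passed in as a parameter so the functions can be exercised without an AWS account.

```python
from typing import Any


def build_collection(client: Any, collection_id: str, photos: dict[str, bytes]) -> None:
    """Create a face collection and index one photo per person.

    `client` is assumed to be a boto3 Rekognition client; `photos` maps our own
    person IDs to raw JPEG/PNG bytes.
    """
    client.create_collection(CollectionId=collection_id)
    for person_id, image_bytes in photos.items():
        client.index_faces(
            CollectionId=collection_id,
            Image={"Bytes": image_bytes},
            ExternalImageId=person_id,  # our label, echoed back in search results
        )


def find_similar(
    client: Any,
    collection_id: str,
    query_image: bytes,
    threshold: float = 80.0,
    max_faces: int = 5,
) -> list[tuple[str, float]]:
    """Search the collection for faces similar to the largest face in `query_image`.

    Returns (person_id, similarity_percent) pairs, best match first.
    """
    response = client.search_faces_by_image(
        CollectionId=collection_id,
        Image={"Bytes": query_image},
        FaceMatchThreshold=threshold,  # drop candidates below this similarity
        MaxFaces=max_faces,            # cap on how many candidates come back
    )
    pairs = [
        # Fall back to Rekognition's own FaceId if a face was indexed without a label.
        (match["Face"].get("ExternalImageId", match["Face"]["FaceId"]), match["Similarity"])
        for match in response["FaceMatches"]
    ]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)
```

The threshold and `MaxFaces` together decide how long the list of "similar" candidates is, which is the list a detective then has to check by hand.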

From Amazon's promotional materials:

Amazon's computer vision experts built this system to analyze billions of images and videos every day. According to rumors (so far unconfirmed), it is what powers the first cashier-free Amazon Go stores. In video, it can even keep track of where a given person went while their face is not visible. The technology holds great promise, for example in searching for missing people or automatically detecting suspected criminals.

Rekognition can also be used to filter inappropriate content. Today that requires a team of moderators, or relying on users to flag photos and videos containing violence or sex in apps and social networks. Amazon now lets you specify which kinds of content should be removed automatically. Such content won't even appear in the results: the system filters it out as early as the upload stage.
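Upload-time filtering of this kind can be sketched with Rekognition's `detect_moderation_labels` call, which returns labels such as nudity or violence together with a confidence score. In the sketch below the blocked category names and the 80% confidence cut-off are hypothetical policy choices (Amazon's label taxonomy has changed over time, so the exact names should be checked against the current documentation), and `client` is assumed to be a boto3 Rekognition client.

```python
from typing import Any

# Hypothetical moderation policy: reject anything in these categories.
# Check the names against the current Rekognition moderation taxonomy.
BLOCKED_CATEGORIES = {"Explicit Nudity", "Violence"}


def should_reject(client: Any, image_bytes: bytes, min_confidence: float = 80.0) -> bool:
    """Return True if any moderation label (or its parent) is in BLOCKED_CATEGORIES."""
    response = client.detect_moderation_labels(
        Image={"Bytes": image_bytes},
        MinConfidence=min_confidence,  # ignore labels Rekognition is unsure about
    )
    for label in response["ModerationLabels"]:
        # Top-level labels have an empty ParentName; sub-labels name their parent.
        if label["Name"] in BLOCKED_CATEGORIES or label.get("ParentName") in BLOCKED_CATEGORIES:
            return True
    return False
```

An upload handler would call `should_reject` before storing the file and discard it when the result is `True`, so the content never reaches other users.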

The service keeps learning from new data, expanding its capabilities and improving its accuracy. And thanks to the enormous capacity of AWS, it stays stable under any load: its latency remains the same even as the number of requests grows to tens of millions.

Individuals and companies can rent the service for 10 cents per minute of archived or streaming video analyzed (plus 1,000 free minutes of video analysis per month during the first year). But Amazon itself is aiming it at government agencies. Under a special program, US police can get Rekognition for just $6 a month and use it to scan hundreds of thousands of potential criminals. The first few sheriffs have already deployed the technology in their departments.
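To put the per-minute price in perspective, here is the arithmetic as a small Python helper. It uses only the figures quoted above (10 cents per minute, 1,000 free minutes per month in the first year); actual AWS pricing varies by region and has changed since, so treat the numbers as illustrative.

```python
def monthly_video_cost(
    minutes: float,
    first_year: bool = True,
    price_per_minute: float = 0.10,
    free_minutes: float = 1000.0,
) -> float:
    """Estimate one month's bill in US dollars under the pricing quoted above."""
    # The free allowance only applies during the first year of use.
    allowance = free_minutes if first_year else 0.0
    billable = max(0.0, minutes - allowance)
    return round(billable * price_per_minute, 2)


# One camera streaming around the clock for a 30-day month:
minutes_per_month = 30 * 24 * 60                   # 43,200 minutes
print(monthly_video_cost(minutes_per_month))         # 4220.0
print(monthly_video_cost(minutes_per_month, False))  # 4320.0
```

Continuous analysis of even a single live camera adds up quickly at the retail video price, while occasional analysis of short clips stays inside the free allowance.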

For example, in July of last year a man walked into a store in Oregon, filled a basket with the most expensive goods, and walked out without going through the self-checkout. A store camera took a couple of photos of the thief, but normally he would probably never have been found: the police have other priorities. Unfortunately for the culprit, the sheriff's office handling the case had recently connected to Amazon's technology. The shoplifter's face was run through a database of 300,000 photos of criminals from the county, and the system returned four people with similar faces. The detective looked them up on Facebook and found a man with the same facial features wearing the same sweatshirt. Case closed.

Police say that if a person has committed a crime once, there is a good chance they will do it again. This is where their old photos come in handy. Oregon has a database of photos of everyone arrested over the past 10 years. Until now it was searched mainly by first name, last name, and place of residence. Finding someone in it by face is almost impossible, especially since people grow beards, change hairstyles, and so on over time. But neural networks handle the task in seconds. The county police say they use Rekognition about 20 times a day.

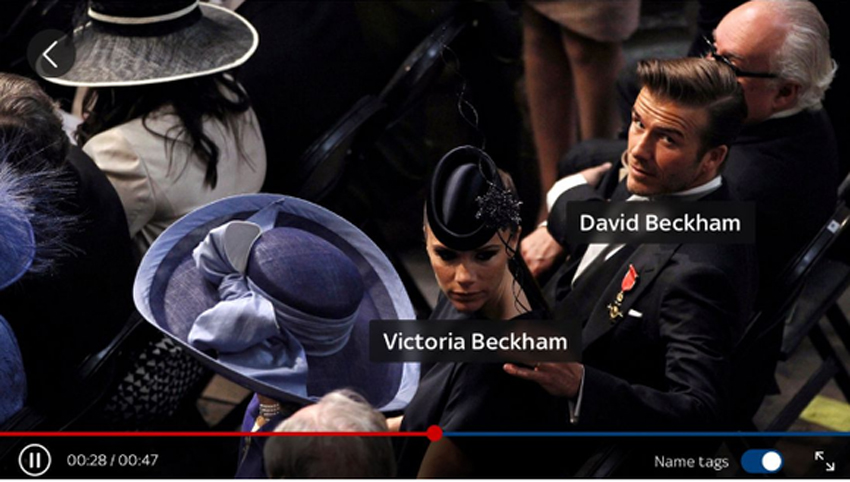

Royal wedding

Another way Rekognition can be put to good use was demonstrated last Saturday. Millions of people were glued to their TV screens watching Prince Harry marry American actress Meghan Markle. The wedding drew three times as many viewers as the most popular episodes of Game of Thrones. Frankly, for many it was a rather dull spectacle: lords, ladies, and British celebrities of various calibers arriving one after another and entering the chapel, a crowd in which you would be lucky to recognize a dozen people. Brr.

But the British broadcaster Sky News found a way out. Viewers of its livestream didn't have to wonder who was under that hat. The video went through the AWS cloud, where Rekognition recognized the celebrities live and displayed a caption under each one with their name and title. A panel alongside showed how each person was connected to Harry and Meghan and offered more information about them. You can see how it worked in real time here. On the right you can choose which celebrity you want to see, and you will jump to the moment when Rekognition detected their arrival. Cool.
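The per-frame step of such an overlay can be approximated with Rekognition's `recognize_celebrities` call, which returns each recognized celebrity's name, a match confidence, and a face bounding box expressed as fractions of the frame size. This is a sketch, not Sky News' actual pipeline, which the article does not describe: frame extraction from the live stream is left out, the 90% confidence cut-off is an assumption, titles would have to come from the broadcaster's own data because the API returns names rather than titles, and `client` is assumed to be a boto3 Rekognition client.

```python
from typing import Any


def caption_positions(
    client: Any, frame_bytes: bytes, min_confidence: float = 90.0
) -> list[tuple[str, float, float]]:
    """Return (name, x, y) for each confidently recognized celebrity in a frame.

    x and y are fractions of the frame's width and height marking the point just
    below the face, where an on-screen caption could be drawn.
    """
    response = client.recognize_celebrities(Image={"Bytes": frame_bytes})
    captions = []
    for celeb in response["CelebrityFaces"]:
        if celeb["MatchConfidence"] < min_confidence:
            continue  # skip uncertain matches rather than mislabel a guest
        box = celeb["Face"]["BoundingBox"]
        x = box["Left"] + box["Width"] / 2  # horizontal centre of the face
        y = box["Top"] + box["Height"]      # bottom edge of the face
        captions.append((celeb["Name"], x, y))
    return captions
```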

The path to authoritarianism

Not everyone shares this rosy view of the new technology. This Tuesday, the American Civil Liberties Union (ACLU) and 40 allied organizations published a statement outlining the hidden dangers of Rekognition. They also sent an open letter to Jeff Bezos urging him to stop providing the technology to third parties and to stop developing new "surveillance systems that harm civil society." Face recognition is, of course, nothing revolutionary, but according to the letter's authors, the way Amazon is going about it is extremely dangerous and could set a precedent.

The point is this. After recent protests by Black Americans who believe the police are biased against them, police officers in the United States were required to wear body cameras, so that if something happened it would be possible to determine whether the officer was at fault. Amazon is actively promoting Rekognition for use with exactly these cameras. The system could, say, whisper in an officer's ear in real time: "To your right, in the black jacket and white cap, is a suspect in three murders." But Malkia Cyril, a leader of the Black Lives Matter movement, says the new technology cannot be trusted and will, on the contrary, make life worse for minorities:

This is a recipe for authoritarianism and disaster. Amazon shouldn't be anywhere near this, and if we can do something about it, they won't be.

Police body cameras don't watch the police. They watch whoever the police are looking at: us, our communities, people like me.

Technology is a tool. Offering such a tool in a context of extreme racism and brutality can only amplify that racism and brutality. Given Amazon's computing power, you are essentially unplugging this discrimination machine from a battery and hooking it up to a nuclear power plant. You increase not only the speed but also the scale at which the state can intrude on our lives.

Rekognition could let police identify in real time who has joined a protest, or who is an undocumented immigrant. "This poses a grave threat to communities," the organizations write in their letter to Amazon. "People should be free to walk down the street without being watched by the government."

Malkia Cyril led the protest

In an appendix to the letter, the ACLU published a set of internal emails between Amazon and law enforcement in Oregon, obtained through a public records request. There is nothing illegal in them, of course, but it is interesting that even the sheriffs, in their conversations with Amazon representatives, were a little nervous about "how the public might perceive it," and asked to slow the rollout of new systems to give people time to adjust.

There is also correspondence with police in California and Arizona, who asked the Oregon office how effective its new system was and how quickly it could be deployed. It turned out that setting up Rekognition cost the sheriff's office only $400, to upload 305,000 photos along with their records, plus $6 a month to keep using the service. That's cheaper than two Big Macs.
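The sheriff's figures are easy to check. Here is a back-of-the-envelope calculation in Python using only the numbers from the emails ($400 to upload about 305,000 photos, then $6 a month); the variable names are just illustrative.

```python
SETUP_COST_USD = 400.0    # one-time cost of uploading the photo database
MONTHLY_COST_USD = 6.0    # ongoing fee to keep using the service
PHOTOS_UPLOADED = 305_000


def total_cost(months: int) -> float:
    """Cumulative spend in US dollars after the given number of months."""
    return SETUP_COST_USD + MONTHLY_COST_USD * months


cents_per_photo = SETUP_COST_USD / PHOTOS_UPLOADED * 100
print(total_cost(12))             # 472.0
print(round(cents_per_photo, 2))  # 0.13
```

So the whole first year comes to under $500, and uploading each photo cost a little over a tenth of a cent.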

AWS responded to the groups' concerns with a statement that customers who break the law will be cut off from the service, and promised to keep a close eye on how its technology is used.

From Amazon's statement:

Our quality of life would be much worse today if we outlawed new technology because some people could choose to abuse the technology. Imagine if customers couldn't buy a computer because it was possible to use that computer for illegal purposes?

But reassuring people is not so easy, and public fears about deep-learning surveillance systems keep growing in the US. A few weeks ago, about a dozen Google employees resigned in protest over the company's decision to work with the US Department of Defense on Project Maven. The project is building AI to analyze and identify objects in a drone's field of view (for example, when searching for ISIS fighters). The Google employees objected to their technology being used to kill people.

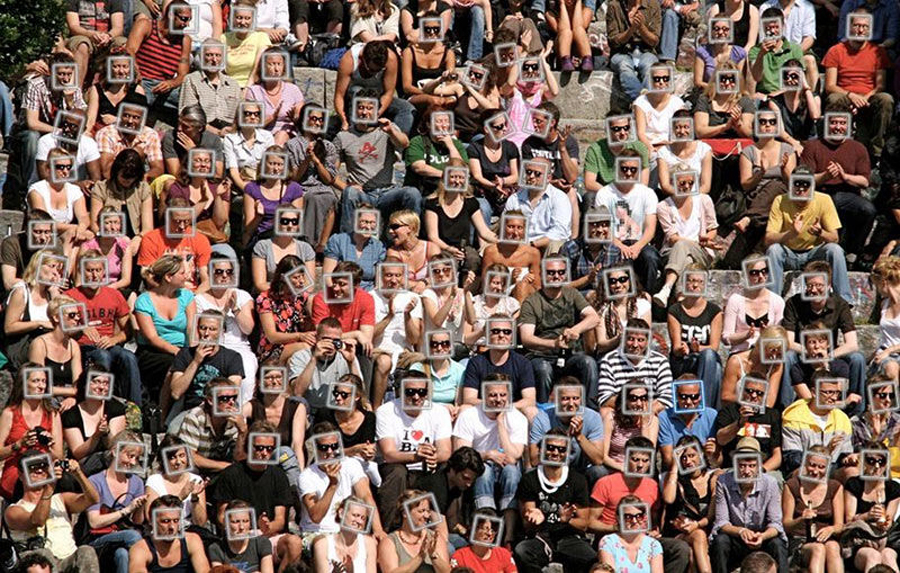

Rekognition, meanwhile, already exists, and in many respects it turns out to be far more powerful. The system doesn't care what it is fed: drone footage, a TV broadcast, a store security camera recording. It can detect up to 100 faces in a crowd photo in a fraction of a second and determine who they belong to. All it needs is a large enough database to compare those faces against. Fortunately (for Amazon, though not for human rights advocates), the police have such a database. In 2016, researchers at Georgetown University found that one in two American adults is in it: 117 million people. Moreover, most of these records are not publicized and are not regulated by anyone.
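Handling a crowd shot takes one extra step in practice: `search_faces_by_image` searches using only the largest face in the image, while `detect_faces` returns a bounding box for each face it finds (AWS documents a limit of the 100 largest faces). One plausible approach, sketched here under that assumption, is to convert each box from the fractional coordinates Rekognition uses into pixels, crop the face (for example with Pillow's `Image.crop`, which takes a `(left, upper, right, lower)` tuple), and search each crop separately. Only the coordinate conversion is shown, and the helper name is hypothetical.

```python
from typing import Any


def face_boxes_in_pixels(
    detect_faces_response: dict[str, Any], width: int, height: int
) -> list[tuple[int, int, int, int]]:
    """Turn DetectFaces bounding boxes into pixel (left, top, right, bottom) tuples.

    Rekognition reports Left/Top/Width/Height as fractions of the image size;
    boxes can extend slightly past the image edge, so they are clamped.
    """
    boxes = []
    for face in detect_faces_response["FaceDetails"]:
        box = face["BoundingBox"]
        left = max(0, round(box["Left"] * width))
        top = max(0, round(box["Top"] * height))
        right = min(width, round((box["Left"] + box["Width"]) * width))
        bottom = min(height, round((box["Top"] + box["Height"]) * height))
        boxes.append((left, top, right, bottom))
    return boxes
```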

Racial bias

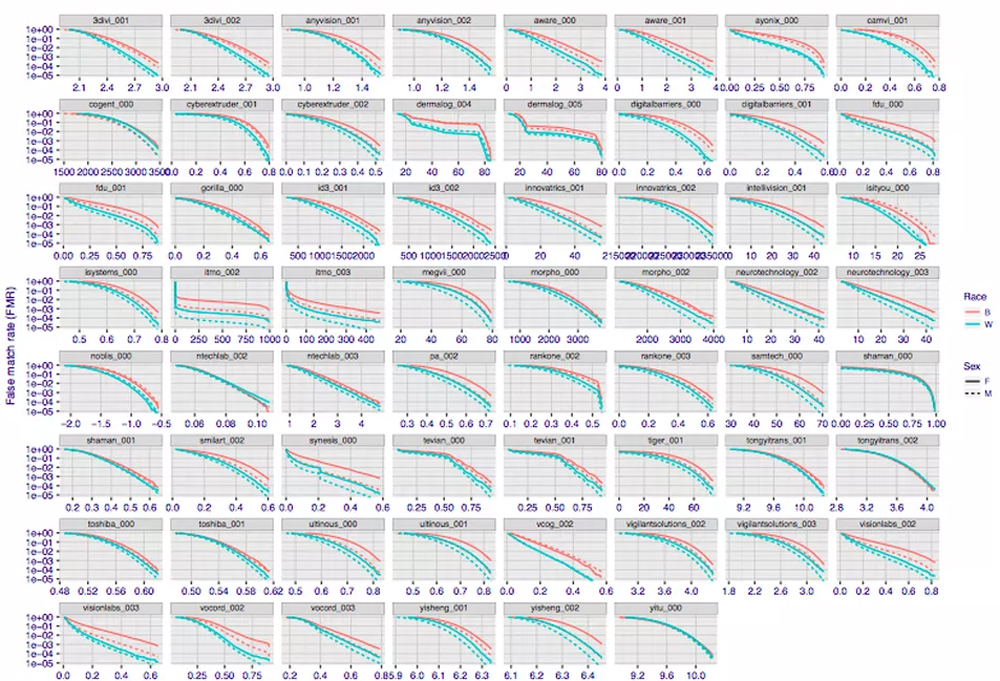

Another major criticism of American face recognition systems in general, and Rekognition in particular, concerns their bias against women, Black people, and Muslims. In short, neural networks are trained mostly on photos of white men, simply because there are more of them. As a result, the technology learns to tell such men apart very well and works almost flawlessly for them.

But for women and Black people these systems generally perform worse. An MIT study, for example, found that one such deep-learning system classified about 35% of Black women as men, while making almost no mistakes on white men.

Rekognition is, of course, much smarter. Still, if a photo of a white suspect turns up three or four "similar" faces in the database on average, a photo of a Black suspect may produce twenty or thirty. Among that many candidates it is easier for the police to find "the one," even if in reality that person is not the criminal. As a result, experts fear, such a system can only increase the share of searches and arrests among racial minorities. And that is the last thing America needs right now.

FRVT data. The higher the line, the higher the error rate. Green is white subjects, red is Black subjects.

The successful use of similar technology in China, where police track down criminals in real time and identify "people with low social capital" using their photos and fingerprints, does not impress Rekognition's critics. They argue that such systems are easier to run in China, with its homogeneous population, than in America, with its cultural and racial diversity. Besides, perhaps Chinese civil society dislikes such government intrusion into people's private lives just as much; it simply isn't organized enough to protest.

The final argument of Rekognition's opponents is FRVT. For more than a year, the US National Institute of Standards and Technology (NIST) has been testing face recognition systems from dozens of companies to see which ones are more accurate and what weaknesses each has compared with the others. The project is called the Face Recognition Vendor Test. It is the main source of the finding that these systems recognize anyone who is not a white man less accurately (of the 62 algorithms tested last year, only 4 showed almost no variation by race). Amazon has not submitted its system to the institute for testing, which gives skeptics yet another reason to say that "something is wrong here."
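Evaluations like FRVT report accuracy as two separate error rates measured at a fixed similarity threshold: the false match rate (FMR, pairs of different people wrongly declared the same person) and the false non-match rate (FNMR, photos of the same person that fail to match). A higher FMR for one group is what produces the longer lists of look-alike candidates described above. The sketch below computes both rates per demographic group from labeled comparison trials; the `Trial` fields and group labels are hypothetical, and NIST's actual methodology is considerably more detailed.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Trial:
    group: str          # demographic label for the comparison (hypothetical)
    same_person: bool   # ground truth: do both photos show the same person?
    score: float        # similarity score produced by the system under test


def error_rates_by_group(trials: Iterable[Trial], threshold: float) -> dict[str, dict[str, float]]:
    """Return {group: {"FMR": ..., "FNMR": ...}} at the given match threshold."""
    counts = defaultdict(
        lambda: {"impostor": 0, "false_match": 0, "genuine": 0, "false_non_match": 0}
    )
    for t in trials:
        c = counts[t.group]
        matched = t.score >= threshold
        if t.same_person:
            c["genuine"] += 1
            if not matched:
                c["false_non_match"] += 1  # the same person was missed
        else:
            c["impostor"] += 1
            if matched:
                c["false_match"] += 1      # two different people were "matched"
    return {
        group: {
            "FMR": c["false_match"] / c["impostor"] if c["impostor"] else math.nan,
            "FNMR": c["false_non_match"] / c["genuine"] if c["genuine"] else math.nan,
        }
        for group, c in counts.items()
    }
```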

Which side are you on, the ACLU's or Amazon's? Would you let the government put your face in its database?

P.S. You can ship purchases from Amazon and other US stores with Pochtoy.com. We have the lowest prices, from $8.99 per pound, and all readers who register with the code Geektimes get $7 credited to their account.