DIY Face Averaging

Did you recognize anyone? None of the people above exist: these are not photographs but the output of a simple algorithm that averages thousands of different faces. This article shows how easy it is to sketch such an algorithm quickly and get interesting results.

Preamble

So, one spring Saturday evening, I wanted to isolate a basic set of facial features for later identification. It had nothing to do with my job; it was just a hobby. I decided to start by building an averaged face, against which it would be easier to pick out deviations and track how individual features affect the overall structure of the face. This problem has been solved fairly successfully before, usually with image warping or other non-linear coordinate transformations, which strictly speaking cannot always be called averaging. We will take a different route.

I hasten to assure you that this article contains no:

- Mind-bending mathematics

- Finished pieces of code

- Optimal solutions

Because this started as a side project while I was solving a narrow task, most of what follows was done just for fun, with quick and easy development in mind rather than best practices. What you do get is honest averaging that does not change the proportions or shape of the face. I use MATLAB, but if you decide to repeat the experiment you can pick almost any language and environment: nothing here is so complex that it could not be written in a day in whatever you prefer.

Image database

The image set is critical to everything that follows. We need a fairly large set of source images.

What we will pay attention to when choosing images for our database:

- Database size. I have often seen similar problems tackled with on the order of hundreds of images. That is clearly not enough once you start filtering the database by various attributes.

- Image quality. For a detailed result you want high-quality images (keeping in mind what your hardware can process).

- Lighting. There is only one strict rule: lighting should not vary significantly from image to image. It is also best to avoid shaded areas as far as possible, although the algorithm will work even with them.

- Position of the face in the picture. Position markers for the main facial features would be ideal, but you can do without them.

- Emotions, hats, body modifications. All of these complicate the work considerably, so it is advisable to remove the most blatantly unsuitable pictures from the database in advance.

By these criteria I really liked the Humanae database, which was presented at TED, where I first saw it. It contains about 3,000 high-quality images with completely uniform lighting, a uniform background, and a fairly stable face position and expression.

Before starting, download the database and bring all the images to the same size. Images of different sizes would work too, but from a programming point of view a single size was more convenient for me. Then all 3,000 images need to be divided into classes, which is what lets us filter the source images and get averaged faces for different groups. This is the longest and most tedious stage of the whole job.

I used the following parameters for classification:

- Gender: Male, Female, Not Obvious.

- Skin color: 0-255. It can be taken from the background color (in Humanae each background is tinted to match the subject's skin tone).

- Age: Children, Adults, Elderly.

- Subjective beauty: 0, 1 (normal, no obvious defects), 2 (attractive).

We will use these as filters to get different results. You can skip selection entirely, in which case you get a kind of average person whose features depend on how many people with each trait are in the database. We will always split by gender, though, because that is where facial features differ the most.
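
To make the filtering step concrete, here is a minimal sketch of how the labels could be stored and queried. It is written in Python rather than the MATLAB used for the article, and the record layout, the field names and the `select` helper are all hypothetical illustrations, not the original code.

```python
from dataclasses import dataclass


@dataclass
class Portrait:
    """One labelled image; every field name here is an assumption."""
    path: str    # file name of the resized image
    gender: str  # "male", "female" or "unclear"
    skin: int    # 0-255, e.g. derived from the background color
    age: str     # "child", "adult" or "elderly"
    beauty: int  # 0, 1 or 2, as in the list above


def select(portraits, gender, ages=("adult",), min_beauty=1,
           skin_range=(0, 255)):
    """Return the portraits that pass every filter."""
    lo, hi = skin_range
    return [p for p in portraits
            if p.gender == gender
            and p.age in ages
            and p.beauty >= min_beauty
            and lo <= p.skin <= hi]
```

Inverting a filter, as discussed for the smaller groups, then only means passing different arguments, for example `select(db, "male", ages=("child",), min_beauty=0)`.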

If you chose the same database as I did, screening out children (several dozen), Black people (a few hundred) and people with obvious physical defects can help produce a less blurred result. This has nothing to do with chauvinism or political statements: these groups are simply too small in the database, and their features differ too much from the average. In any case, you can always invert the filter and build averaged faces specifically for these groups, although they will be rather inaccurate.

Figure 1. Example of an averaged face over a very limited set of shots (Black adults)

Algorithms and Methods

Now that the database is ready, it is time to think about how to get the desired result. First, I will rule out ready-made pattern matching and image warping libraries, as well as any complex mathematics. We just want to throw together working code quickly and have fun, right?

The imperfection of the naive method

Life would be simple and pleasant if you could just add up the whole set of photos and get the result. Alas, cruel reality answers that fantasy with a rather mediocre average male face. The naive result improves considerably if, for each new photo, you look for the best-fitting coordinate transformation (position, scale and so on). How? There are several approaches:

- The ready-made pattern matching algorithms mentioned above. We look for a set of common points and build a transformation from them. MATLAB has ready-made algorithms for this, but I never managed to get them to work well on averaged faces, so we will not use this method.

- Cross-correlation. A fairly easy way to find a suitable position, and one that also runs very well on a GPU. In theory it is somewhat more primitive, because the transformation ignores image rotation and scale, but it is quite sufficient for our purposes. In MATLAB you can use the normxcorr2 function.
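
The cross-correlation search can be sketched in a few lines. The version below is plain Python rather than MATLAB, works on small grayscale images stored as lists of rows, and only tries positions where the template fits entirely inside the image (normxcorr2 also scores partial overlaps). The names `ncc` and `best_offset` are made up for this illustration; a real run over thousands of photos would need a vectorized or GPU implementation.

```python
import math


def ncc(patch, template):
    """Zero-mean normalized cross-correlation of two equal-size 2-D lists."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a)
                    * sum((y - mean_b) ** 2 for y in b))
    # A flat patch or template has no structure to match, so score it 0.
    return num / den if den else 0.0


def best_offset(image, template):
    """Slide the template over the image; return (row, col, score) of the best fit."""
    th, tw = len(template), len(template[0])
    best = (0, 0, float("-inf"))
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(patch, template)
            if score > best[2]:
                best = (r, c, score)
    return best
```

The returned position says where the template sits in the new photo, so the difference between it and the template's position in the current average is the shift to apply before adding the photo. The score is the correlation coefficient, which ranges from -1 to 1.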

For comparison, the first pass with the naive and correlation methods:

Figure 2. Comparison of naive (left) and correlation (right) methods
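
Both halves of Figure 2 boil down to the same per-pixel mean; the only difference is whether each photo is shifted first. Here is a minimal Python sketch under that assumption. The function name `average_images` and the `offsets` convention (one integer `(dr, dc)` shift per image) are hypothetical, not taken from the original MATLAB code.

```python
def average_images(images, offsets=None):
    """Per-pixel mean of equally sized grayscale images (lists of rows).

    offsets, if given, holds one (dr, dc) integer shift per image; each
    image is moved by that amount before being accumulated. Pixels that
    no image covers are left at 0.
    """
    h, w = len(images[0]), len(images[0][0])
    offsets = offsets or [(0, 0)] * len(images)
    total = [[0.0] * w for _ in range(h)]
    count = [[0] * w for _ in range(h)]
    for img, (dr, dc) in zip(images, offsets):
        for r in range(h):
            for c in range(w):
                rr, cc = r + dr, c + dc  # where pixel (r, c) lands
                if 0 <= rr < h and 0 <= cc < w:
                    total[rr][cc] += img[r][c]
                    count[rr][cc] += 1
    return [[total[r][c] / count[r][c] if count[r][c] else 0.0
             for c in range(w)]
            for r in range(h)]
```

For two one-row images `[[0, 9, 0]]` and `[[0, 0, 9]]`, the naive call gives `[[0.0, 4.5, 4.5]]` (a smeared feature), while passing `offsets=[(0, 0), (0, -1)]` gives `[[0.0, 9.0, 0.0]]`.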

Focus and refinement

The image above still looks rather blurry, so the next step is to bring the main facial features into focus. To do that, we go through the images again and center not the whole face but individual details taken from the result of the previous stage:

Figure 3. Regions used for correlation

Naturally, the images should be selected by their correlation coefficient so that completely unsuitable ones do not get through. I implemented this as a simple self-tuning threshold. Say the threshold starts at t = 0.5 and we go through the pictures one by one in random order. A new image is rejected if its correlation, either in the chosen region or with the current result, is below t. If the image is accepted, t rises by 0.003; otherwise it drops by 0.001. This regulates the share of images that make it into the result, and the system settles on a suitable value of t by itself. It could be done differently; the choice of selection algorithm is up to you.
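
The self-tuning threshold described above fits in a dozen lines. This is a Python sketch, not the original MATLAB code: the function name and the `score` callable (which is assumed to return the correlation of an image with the current result) are placeholders for whatever correlation routine you use.

```python
import random


def select_with_adaptive_threshold(images, score, t=0.5,
                                   up=0.003, down=0.001, rng=None):
    """Visit images in random order and keep those scoring at least t.

    t rises by `up` after every accepted image and falls by `down`
    after every rejected one. Returns (accepted_images, final_t).
    """
    rng = rng or random.Random()
    order = list(images)
    rng.shuffle(order)
    accepted = []
    for img in order:
        if score(img) >= t:
            accepted.append(img)
            t += up    # be stricter after a success
        else:
            t -= down  # relax after a rejection
    return accepted, t
```

Assuming the scores do not drift much over the run, the threshold stops moving once the gains and losses cancel, that is when the accepted share p satisfies 0.003 · p = 0.001 · (1 − p), or p = 0.25. With the constants from the text, roughly one image in four ends up in the result; changing the two step sizes changes that share.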

As a result, we get a set of images, each focused on a different region. It remains to combine them, manually or automatically, into a sufficiently sharp image.

Figure 4. Gradual refinement and focusing of the main facial features

Additional features

By now we have fairly distinct basic features and face shape, but averaging has “eaten” all the fine detail: hair, skin texture and eyebrows, which makes the face look somewhat synthetic. This can be corrected by setting the threshold so high that only one or a few of the most similar faces pass the selection. The additional detail obtained this way can be blended with the main features automatically, but I did it by hand. Naturally, it is best to transfer only the small details that were lost.

Figure 5. Averaged male face, more than 500 shots.

Results

Before publishing this article I planned to run the first face I obtained through FindFace, but the service had been switched off, and in the end it was not needed: I already knew the person the figure above so clearly reminded me of. When the averaging finished, the face looking at me from the screen was ... my own. I understand that an average person should combine everyone's features and so look a little like absolutely everyone, but the resemblance was too strong to ignore. All that remained was to take a photo with roughly the same angle and lighting and overlay it on the result for clarity:

Minute of deanonymization

For reasons of basic privacy and relevance, I decided against embedding my photo in the article itself. Thank you for understanding; please use this text link instead: drive.google.com/file/d/0B3QDURLCBJrBOGpONmJMbTZPanc/view?usp=sharing

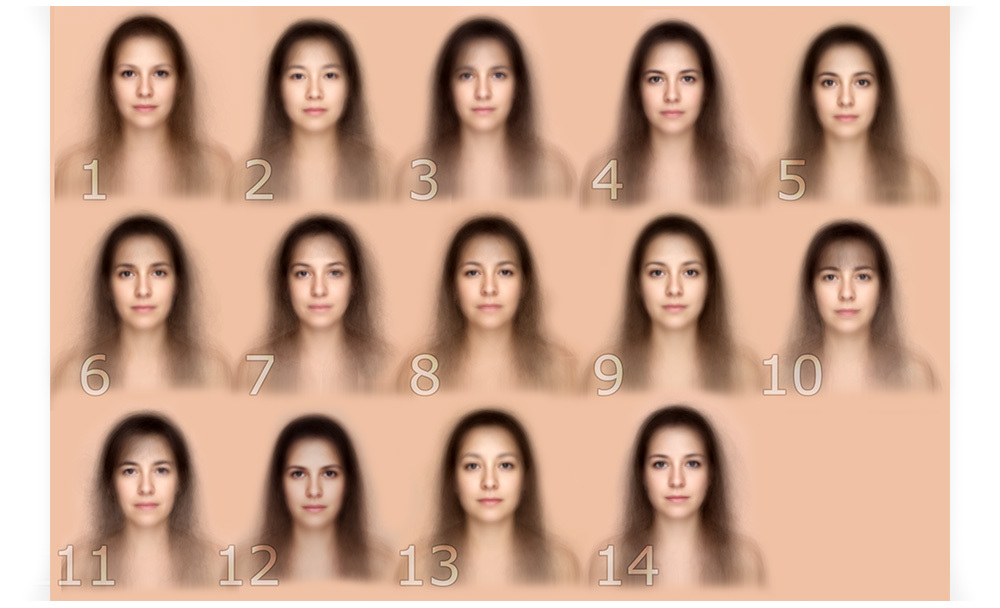

With the averaged face in hand, it was easy to find typical classes of eyes, noses and mouths and to assess visually how they affect the shape of the face. For the eyes I identified 35 different classes, of which fourteen are predominantly female:

Figure 6. Fourteen types of female eyes and their influence on the shape of the face

A brief illustration of the operation of the first stage of the algorithm:

It may also be interesting to:

- Select the images that deviate most from the average and average those. Most likely there are other popular combinations of traits that the algorithm missed during mass averaging. In fact, the right-hand face in the lead image was obtained this way, and I am sure it is not the only one.

- Find and average images that resemble a particular real person.

- Take the facial feature classes obtained above, find the most popular combination and average it. The face obtained this way may differ slightly from the one above.

Which of the girls in Figure 6 seem most attractive to you?

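- 1: 40.2% (594 votes)

- 2: 4.8% (72 votes)

- 3: 8.2% (122 votes)

- 4: 43.7% (645 votes)

- 5: 23.8% (351 votes)

- 6: 14.5% (215 votes)

- 7: 3.3% (49 votes)

- 8: 1.2% (19 votes)

- 9: 28.1% (415 votes)

- 10: 2.5% (38 votes)

- 11: 1.5% (23 votes)

- 12: 34.4% (508 votes)

- 13: 1.6% (24 votes)

- 14: 43.2% (638 votes)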