Problems with the cache and how to solve them

Hello, Habr!

My name is Victor Pryazhnikov, I work in the Badoo SRV team. Our team is developing and supporting the internal API for our clients on the server side, and data caching is what we encounter every day.

There is an opinion that in programming there are only two truly complex tasks: inventing names and invalidating the cache. I won’t argue that disability is difficult, but it seems to me that caching is a pretty tricky thing even without disability. There are many things to think about before you start using the cache. In this article I will try to formulate some problems that you may encounter when working with the cache in a large system.

I’ll talk about the problems of sharing cached data between servers, parallel data updates, “cold start” and the system’s malfunctioning. I will also describe possible solutions to these problems and provide links to materials where these topics are covered in more detail. I will not talk about what a cache is in principle and about the implementation details of specific systems.

When working, I assume that the system in question consists of an application, a database, and a cache for data. Instead of a database, any other source can be used (for example, some kind of microservice or external API).

If you want to use caching in a sufficiently large system, you need to make sure that you can share the cached data between available servers. This is necessary for several reasons:

The most obvious way to break the data is to calculate the server number in a pseudo-random manner depending on the caching key.

There are different algorithms for implementing this. The simplest is to calculate the server number as the remainder of the integer division of the numerical key representation (for example, CRC32) by the number of caching servers:

Such an algorithm is called modulo hashing. CRC32 is used here as an example. Instead, you can take any other hash function, from the results of which you can get a number greater than or equal to the number of servers, with a more or less evenly distributed result.

This method is easy to understand and implement, it evenly distributes data between servers, but it has a serious drawback: when changing the number of servers (due to technical problems or adding new ones), a significant part of the cache is lost, since the remainder of the division changes for the keys.

I wrote a small script that will demonstrate this problem.

It generates 1 million unique keys distributed across five servers using modular hashing and CRC32. I emulate the failure of one of the servers and the redistribution of data on the four remaining.

As a result of this “failure”, approximately 80% of the keys will change their location, that is, they will be inaccessible for further reading:

Total keys count: 1000000

Shards count range: 4, 5

The most unpleasant thing here is that 80% is far from the limit. With the increase in the number of servers, the percentage of cache loss will continue to grow. The only exception is multiple changes (from two to four, from nine to three, etc.), in which the losses will be less than usual, but in any case at least half of the existing cache:

I posted a script on GitHub with which I collected data, as well as an ipynb file that draws this table, and data files.

To solve this problem there is another breakdown of the algorithm - a consistent hashing (Eng. Consistent hashing) The basic idea of this mechanism is very simple: an additional mapping of keys to slots is added here, the number of which significantly exceeds the number of servers (there can be thousands or even more). The slots themselves, in turn, are somehow distributed across the servers.

When changing the number of servers, the number of slots does not change, but the distribution of slots between these servers changes:

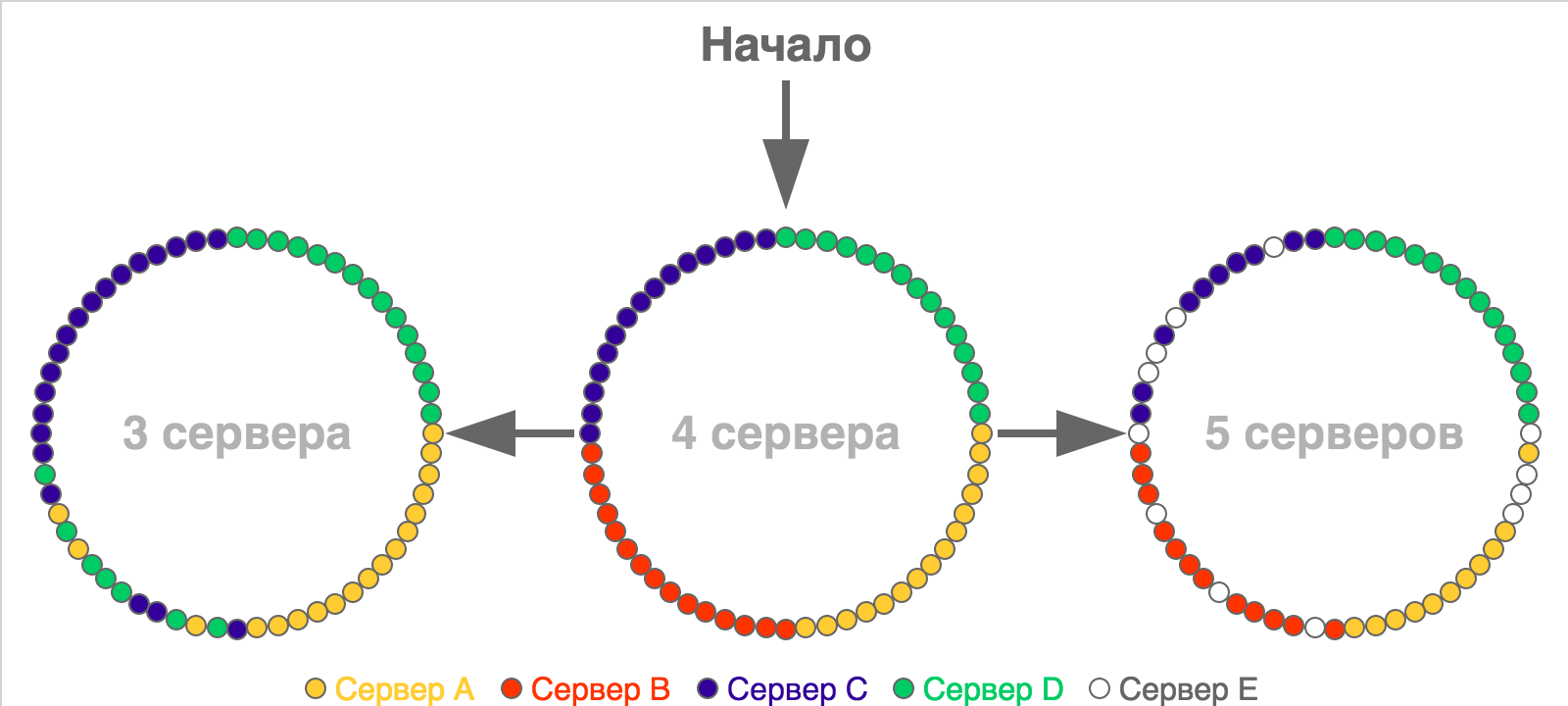

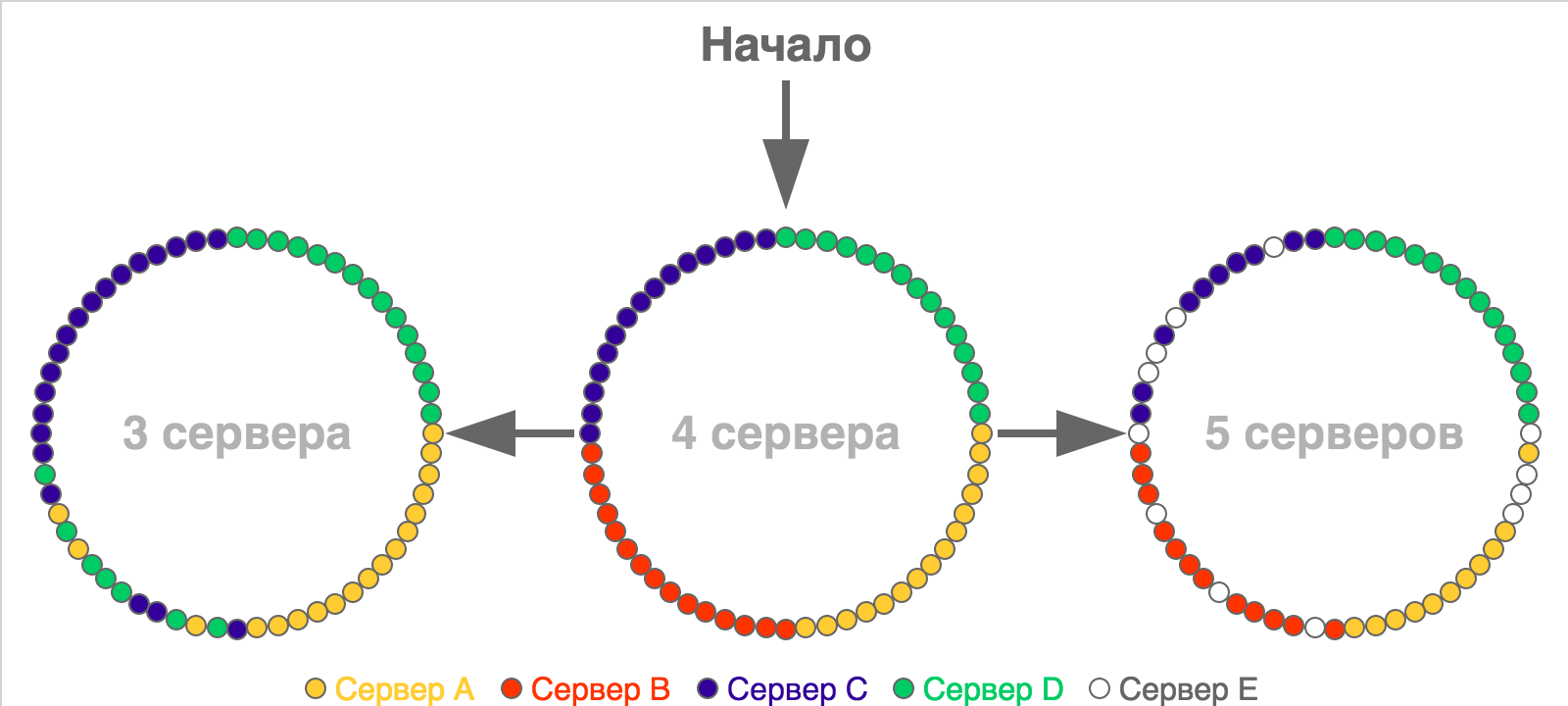

Usually, the idea of consistent hashing is visualized using rings, the points on the circles of which show slots or the boundaries of the ranges of slots (if there are a lot of these slots). Here is a simple redistribution example for a situation with a small number of slots (60), which are initially distributed across four servers:

In the picture of the initial partition, all the slots of one server are arranged in a row, but in reality this is not a prerequisite - they can be located as you like.

The main advantage of this method over the previous one is that here each server corresponds not to one value, but to a whole range, and when the number of servers changes, a much smaller part of the keys is redistributed between them (

If we go back to the scenario that I used to demonstrate the lack of hashing modulo, then in the same situation with the fall of one of the five servers (with the same weight) and the redistribution of keys from it between the remaining losses, we do not have 80% of the cache, but only 20%. If we assume that initially all the data is in the cache and all of them will be requested, then this difference means that with a coordinated hashing we will receive four times less requests to the database.

The code that implements this algorithm will be more complicated than the code of the previous one, so I will not give it in the article. If you wish, you can easily find it - there are a lot of implementations in various languages on GitHub .

Along with consistent hashing, there are other ways to solve this problem (for example, rendezvous hashing ), but they are much less common.

Regardless of the chosen algorithm, choosing a server based on a key hash may not work well. Usually the cache does not contain a set of the same type of data, but a large amount of heterogeneous data: cached values take up different places in memory, are requested at different frequencies, have different generation times, different update rates and different lifetimes. When using hashing, you cannot control exactly where the key will go, and the result may be a “skew” both in the amount of stored data and in the number of requests to it, which will cause the behavior of different caching servers to vary greatly.

To solve this problem, it is necessary to “smear” the keys so that heterogeneous data is distributed more or less uniformly between the servers. To do this, to select a server, you need to use not a key, but some other parameter, to which you will need to apply one of the described approaches. This is not to say what kind of parameter it will be, because it depends on your data model.

In our case, almost all cached data refers to the same user, so we use the User ID as a parameter for sharding data in the cache. Thanks to this, we are able to distribute data more or less evenly. In addition, we get a bonus - the ability to use

See also:

Look at this simple piece of code:

What happens if there is no requested data in the cache? Judging by the code, a mechanism should start that will get this data. If the code is executed in only one thread, then everything will be fine: the data will be downloaded, cached and taken from there on the next request. But when working in several parallel threads, everything will be different: the data will be downloaded not once, but several.

It will look something like this:

At the time of the beginning of the processing of the request in process No. 2, there is no data in the cache yet, but they are already read from the database in process No. 1. In this example, the problem is not so significant, because there are only two requests, but there can be many more.

The number of parallel downloads depends on the number of parallel users and the time it takes to download the necessary data.

Suppose you have some kind of functionality that uses a cache with a load of 200 requests per second. If you need 50 ms to download data, then during this time you will receive

That is, in the absence of a cache, one process will begin to load data, and during the download, nine more requests will come that will not see the data in the cache and will also load it.

This problem is called cache stampede.(I did not find a Russian analogue of this term, literally it can be translated as “stampede of the cache”, and the picture at the beginning of the article shows an example of this action in the wild), hit miss storm (“storm of misses to the cache”) or dog-pile effect ("The effect of a dog pack"). There are several ways to solve it:

The essence of this method is that if there is no data in the cache, the process that wants to load it must capture a lock that will prevent other processes from running in parallel with the same. In the case of memcached, the easiest way to block is to add a key to the same caching server where the cached data itself should be stored.

With this option, the data is updated in only one process, but you need to decide what to do with processes that fall into a situation with a missing cache but could not get a lock. They may give an error or some default value, wait for a while, and then try to get the data again.

In addition, you need to carefully choose the time of the lock itself - it should be guaranteed to be able to load data from the source and put it in the cache. If not enough, then another parallel process may start reloading the data. On the other hand, if this time period is too large and the process that received the lock dies without writing data to the cache and releasing the lock, then other processes will also not be able to receive this data before the lock time expires.

The main idea of this method is the separation between different processes of reading data from the cache and writing to it. Online processes only read data from the cache, but not download them, which only occurs in a separate background process. This option makes parallel data updates impossible.

This method requires additional “expenses” for the creation and monitoring of a separate script that writes data to the cache, and synchronization of the lifetime of the recorded cache and the time of the next start of the script updating it.

We use this option in Badoo, for example, for a counter of the total number of users, which will be discussed further.

The essence of these methods is that the data in the cache is updated not only in the absence, but also with some probability if they exist. This will allow them to be updated before the cached data is “rotten” and will be required by all processes at once.

For the correct operation of such a mechanism, it is necessary that at the beginning of the life time of the cached data the recalculation probability be small, but gradually increase. This can be achieved using the XFetch algorithm , which uses exponential distribution. Its implementation looks something like this:

In this example

You need to understand that when using such probabilistic mechanisms you do not exclude parallel updates, but only reduce their likelihood. To exclude them, you can “cross” several methods at once (for example, by adding a lock before updating).

See also:

It should be noted that the problem of mass data updates due to their absence in the cache can be caused not only by a large number of updates of the same key, but also by a large number of simultaneous updates of different keys. For example, this can happen when you roll out a new “popular” functionality using caching and a fixed cache lifetime.

In this case, immediately after rolling out, the data will begin to load (the first manifestation of the problem), after which it will go to the cache and everything will be fine for a while, and after the cache expires, all the data will begin to load again and create an increased load on the database.

You cannot completely get rid of such a problem, but you can “smear” the data load in time, thereby eliminating the sharp number of parallel queries to the database. There are several ways to achieve this:

If for some reason you do not want to use a random number, you can replace it with a pseudo-random value obtained using a hash function based on some data (for example, User ID).

I wrote a small script that emulates a “unheated” cache situation.

In it, I reproduce a situation in which the user, when requested, downloads data about himself (if they are not in the cache). Of course, the example is synthetic, but even on it you can see the difference in the behavior of the system.

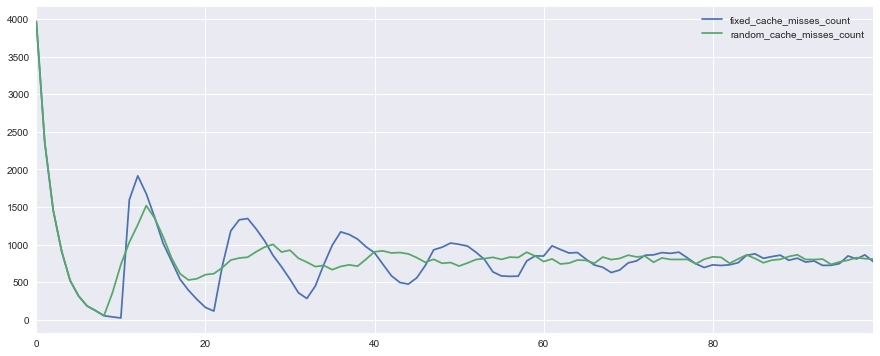

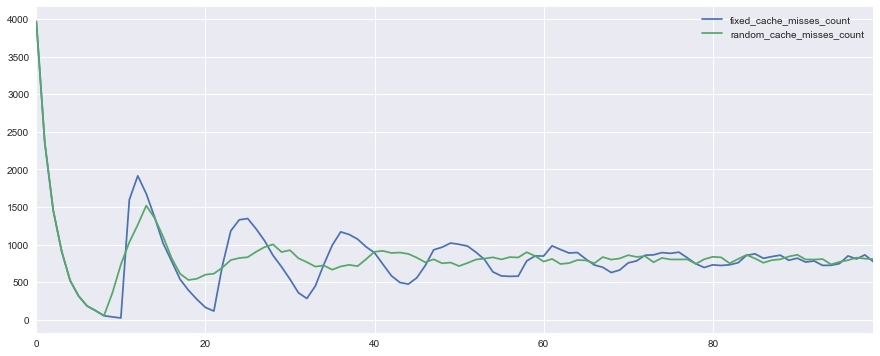

Here is a graph of the number of hit misss in a situation with fixed (fixed_cache_misses_count) and different (random_cache_misses_count) cache lifetimes:

It can be seen that load peaks are very noticeable in both cases at the beginning of work, but when using pseudo-random lifetimes, they smooth out much faster.

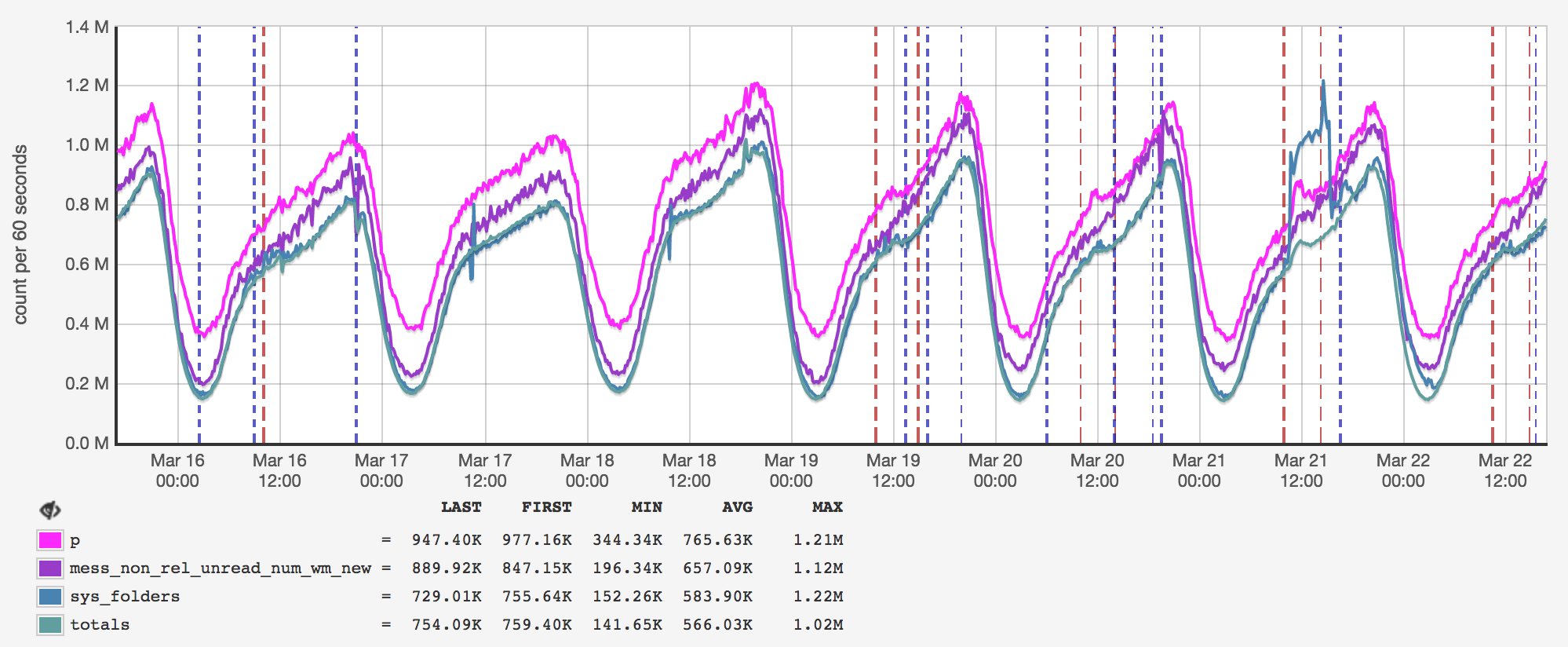

The data in the cache is heterogeneous, some of which can be requested very often. In this case, the problems may not even be created by parallel updates, but by the number of readings. An example of such a key with us is a counter of the total number of users:

This counter is one of the most popular keys, and using the usual approach, all requests to it will go to one server (since this is only one key, and not many of the same type), the behavior of which can Change and slow down work with other keys stored in the same place.

To solve this problem, you need to write data not to one caching server, but to several at once. In this case, we will reduce the number of readings of this key by several times, but complicate its updates and the server selection code - after all, we will need to use a separate mechanism.

We at Badoo solve this problem by writing data to all caching servers at once. Due to this, when reading, we can use the general server selection mechanism - in the code, you can use the usual user ID sharding mechanism, and when reading you do not need to know anything about the specifics of this hot key. In our case, this works, since we have relatively few servers (about ten per site).

If there were much more caching servers, then this method might not be the most convenient - it just does not make sense to duplicate the same data hundreds of times. In this case, it would be possible to duplicate the key not on all servers, but only on their part, but this option requires a little more effort.

If you use the server definition by the cache key, then you can add a limited number of pseudo-random values to it (by making

If you use explicit instructions for the sharding parameter, then simply pass different pseudo-random values there.

The main problem with both methods is to make sure that the different values actually go to the different caching servers.

See also:

The system cannot be 100% reliable, so you need to consider how it will behave in the event of a failure. Failures can occur both in the cache itself and in the database.

About the failures in the cache I said in the previous sections. The only thing that can be added is that it would be good to foresee the possibility of disabling part of the functional on a working system. This is useful when the system is unable to cope with peak loads.

When a database crashesand in the absence of a cache, we can get into a cache stampede situation, which I also talked about before. You can exit it by the methods already described, or you can write to the cache a deliberately incorrect value with a short lifespan. In this case, the system will be able to determine that the source is unavailable, and for some time will stop trying to request data.

In the article, I touched on the main problems when working with the cache, but I’m sure that, besides them, there are many others, and you can continue this conversation for a very long time. I hope that after reading my article your cache will become more efficient.

My name is Victor Pryazhnikov, I work in the Badoo SRV team. Our team is developing and supporting the internal API for our clients on the server side, and data caching is what we encounter every day.

There is an opinion that in programming there are only two truly complex tasks: inventing names and invalidating the cache. I won’t argue that disability is difficult, but it seems to me that caching is a pretty tricky thing even without disability. There are many things to think about before you start using the cache. In this article I will try to formulate some problems that you may encounter when working with the cache in a large system.

I’ll talk about the problems of sharing cached data between servers, parallel data updates, “cold start” and the system’s malfunctioning. I will also describe possible solutions to these problems and provide links to materials where these topics are covered in more detail. I will not talk about what a cache is in principle and about the implementation details of specific systems.

When working, I assume that the system in question consists of an application, a database, and a cache for data. Instead of a database, any other source can be used (for example, some kind of microservice or external API).

Sharing data between caching servers

If you want to use caching in a sufficiently large system, you need to make sure that you can share the cached data between available servers. This is necessary for several reasons:

- there can be a lot of data, and they will not physically fit in the memory of one server;

- data can be requested very often, and one server is not able to process all these requests;

- you want to make caching more reliable. If you have only one caching server, then when it crashes, the entire system will be left without a cache, which can dramatically increase the load on the database.

The most obvious way to break the data is to calculate the server number in a pseudo-random manner depending on the caching key.

There are different algorithms for implementing this. The simplest is to calculate the server number as the remainder of the integer division of the numerical key representation (for example, CRC32) by the number of caching servers:

$cache_server_index = crc32($cache_key) % count($cache_servers_list);Such an algorithm is called modulo hashing. CRC32 is used here as an example. Instead, you can take any other hash function, from the results of which you can get a number greater than or equal to the number of servers, with a more or less evenly distributed result.

This method is easy to understand and implement, it evenly distributes data between servers, but it has a serious drawback: when changing the number of servers (due to technical problems or adding new ones), a significant part of the cache is lost, since the remainder of the division changes for the keys.

I wrote a small script that will demonstrate this problem.

It generates 1 million unique keys distributed across five servers using modular hashing and CRC32. I emulate the failure of one of the servers and the redistribution of data on the four remaining.

As a result of this “failure”, approximately 80% of the keys will change their location, that is, they will be inaccessible for further reading:

Total keys count: 1000000

Shards count range: 4, 5

| Shardsbefore | ShardsAfter | LostKeysPercent | Lostkeys |

|---|---|---|---|

| 5 | 4 | 80.03% | 800345 |

The most unpleasant thing here is that 80% is far from the limit. With the increase in the number of servers, the percentage of cache loss will continue to grow. The only exception is multiple changes (from two to four, from nine to three, etc.), in which the losses will be less than usual, but in any case at least half of the existing cache:

I posted a script on GitHub with which I collected data, as well as an ipynb file that draws this table, and data files.

To solve this problem there is another breakdown of the algorithm - a consistent hashing (Eng. Consistent hashing) The basic idea of this mechanism is very simple: an additional mapping of keys to slots is added here, the number of which significantly exceeds the number of servers (there can be thousands or even more). The slots themselves, in turn, are somehow distributed across the servers.

When changing the number of servers, the number of slots does not change, but the distribution of slots between these servers changes:

- if one of the servers fails, then all the slots that belonged to it are distributed among the remaining;

- if a new server is added, then part of the slots from existing servers are transferred to it.

Usually, the idea of consistent hashing is visualized using rings, the points on the circles of which show slots or the boundaries of the ranges of slots (if there are a lot of these slots). Here is a simple redistribution example for a situation with a small number of slots (60), which are initially distributed across four servers:

In the picture of the initial partition, all the slots of one server are arranged in a row, but in reality this is not a prerequisite - they can be located as you like.

The main advantage of this method over the previous one is that here each server corresponds not to one value, but to a whole range, and when the number of servers changes, a much smaller part of the keys is redistributed between them (

k / N, where k is the total number of keys, andN- number of servers). If we go back to the scenario that I used to demonstrate the lack of hashing modulo, then in the same situation with the fall of one of the five servers (with the same weight) and the redistribution of keys from it between the remaining losses, we do not have 80% of the cache, but only 20%. If we assume that initially all the data is in the cache and all of them will be requested, then this difference means that with a coordinated hashing we will receive four times less requests to the database.

The code that implements this algorithm will be more complicated than the code of the previous one, so I will not give it in the article. If you wish, you can easily find it - there are a lot of implementations in various languages on GitHub .

Along with consistent hashing, there are other ways to solve this problem (for example, rendezvous hashing ), but they are much less common.

Regardless of the chosen algorithm, choosing a server based on a key hash may not work well. Usually the cache does not contain a set of the same type of data, but a large amount of heterogeneous data: cached values take up different places in memory, are requested at different frequencies, have different generation times, different update rates and different lifetimes. When using hashing, you cannot control exactly where the key will go, and the result may be a “skew” both in the amount of stored data and in the number of requests to it, which will cause the behavior of different caching servers to vary greatly.

To solve this problem, it is necessary to “smear” the keys so that heterogeneous data is distributed more or less uniformly between the servers. To do this, to select a server, you need to use not a key, but some other parameter, to which you will need to apply one of the described approaches. This is not to say what kind of parameter it will be, because it depends on your data model.

In our case, almost all cached data refers to the same user, so we use the User ID as a parameter for sharding data in the cache. Thanks to this, we are able to distribute data more or less evenly. In addition, we get a bonus - the ability to use

multi_getto load several different keys at once with information about the user (which we use in preloading frequently used data for the current user). If the position of each key was determined dynamically, it would be impossible to use multi_getin such a scenario, since there would be no guarantee that all the requested keys belong to the same server. See also:

- Distributed hash table article on Wikipedia

- Article "Consistent hashing" on Wikipedia

- A Guide to Consistent Hashing

- Consistent hashing and random trees: distributed caching protocols for relieving hot spots on the World Wide Web

- Building a Consistent Hashing Ring

Parallel data update requests

Look at this simple piece of code:

public function getContactsCountCached(int $user_id) : ?int

{

$contacts_count = \Contacts\Cache::getContactsCount($user_id);

if ($contacts_count !== false) {

return $contacts_count;

}

$contacts_count = $this->getContactsCount($user_id);

if (is_null($contacts_count)) {

return null;

}

\Contacts\Cache::setContactsCount($user_id, $contacts_count);

return $contacts_count;

}

What happens if there is no requested data in the cache? Judging by the code, a mechanism should start that will get this data. If the code is executed in only one thread, then everything will be fine: the data will be downloaded, cached and taken from there on the next request. But when working in several parallel threads, everything will be different: the data will be downloaded not once, but several.

It will look something like this:

At the time of the beginning of the processing of the request in process No. 2, there is no data in the cache yet, but they are already read from the database in process No. 1. In this example, the problem is not so significant, because there are only two requests, but there can be many more.

The number of parallel downloads depends on the number of parallel users and the time it takes to download the necessary data.

Suppose you have some kind of functionality that uses a cache with a load of 200 requests per second. If you need 50 ms to download data, then during this time you will receive

50 / (1000 / 200) = 10requests. That is, in the absence of a cache, one process will begin to load data, and during the download, nine more requests will come that will not see the data in the cache and will also load it.

This problem is called cache stampede.(I did not find a Russian analogue of this term, literally it can be translated as “stampede of the cache”, and the picture at the beginning of the article shows an example of this action in the wild), hit miss storm (“storm of misses to the cache”) or dog-pile effect ("The effect of a dog pack"). There are several ways to solve it:

Lock before starting the recount / load operation

The essence of this method is that if there is no data in the cache, the process that wants to load it must capture a lock that will prevent other processes from running in parallel with the same. In the case of memcached, the easiest way to block is to add a key to the same caching server where the cached data itself should be stored.

With this option, the data is updated in only one process, but you need to decide what to do with processes that fall into a situation with a missing cache but could not get a lock. They may give an error or some default value, wait for a while, and then try to get the data again.

In addition, you need to carefully choose the time of the lock itself - it should be guaranteed to be able to load data from the source and put it in the cache. If not enough, then another parallel process may start reloading the data. On the other hand, if this time period is too large and the process that received the lock dies without writing data to the cache and releasing the lock, then other processes will also not be able to receive this data before the lock time expires.

Removing updates to the background

The main idea of this method is the separation between different processes of reading data from the cache and writing to it. Online processes only read data from the cache, but not download them, which only occurs in a separate background process. This option makes parallel data updates impossible.

This method requires additional “expenses” for the creation and monitoring of a separate script that writes data to the cache, and synchronization of the lifetime of the recorded cache and the time of the next start of the script updating it.

We use this option in Badoo, for example, for a counter of the total number of users, which will be discussed further.

Probabilistic Update Methods

The essence of these methods is that the data in the cache is updated not only in the absence, but also with some probability if they exist. This will allow them to be updated before the cached data is “rotten” and will be required by all processes at once.

For the correct operation of such a mechanism, it is necessary that at the beginning of the life time of the cached data the recalculation probability be small, but gradually increase. This can be achieved using the XFetch algorithm , which uses exponential distribution. Its implementation looks something like this:

function xFetch($key, $ttl, $beta = 1)

{

[$value, $delta, $expiry] = cacheRead($key);

if (!$value || (time() − $delta * $beta * log(rand())) > $expiry) {

$start = time();

$value = recomputeValue($key);

$delta = time() – $start;

$expiry = time() + $ttl;

cacheWrite(key, [$value, $delta, $expiry], $ttl);

}

return $value;

}

In this example

$ttl, this is the lifetime of the value in the cache, $delta- the time it took to generate the cached value, $expiry- the time until which the value in the cache is valid, $beta- the algorithm setting parameter, changing which can affect the probability of recalculation (the higher it is, all the more likely recount at each request). A detailed description of this algorithm can be found in the white paper “Optimal Probabilistic Cache Stampede Prevention”, a link to which you will find at the end of this section. You need to understand that when using such probabilistic mechanisms you do not exclude parallel updates, but only reduce their likelihood. To exclude them, you can “cross” several methods at once (for example, by adding a lock before updating).

See also:

- The Cache stampede article on Wikipedia

- Optimal Probabilistic Cache Stampede Prevention

- GitHub repository with description and tests of different ways

- The article "Caches for" dummies ""

Cold start and cache warm-up

It should be noted that the problem of mass data updates due to their absence in the cache can be caused not only by a large number of updates of the same key, but also by a large number of simultaneous updates of different keys. For example, this can happen when you roll out a new “popular” functionality using caching and a fixed cache lifetime.

In this case, immediately after rolling out, the data will begin to load (the first manifestation of the problem), after which it will go to the cache and everything will be fine for a while, and after the cache expires, all the data will begin to load again and create an increased load on the database.

You cannot completely get rid of such a problem, but you can “smear” the data load in time, thereby eliminating the sharp number of parallel queries to the database. There are several ways to achieve this:

- smooth inclusion of new functionality. For this, a mechanism is needed that will allow this to be done. The simplest implementation option is to roll out new functionality included with a small part of users and gradually increase it. In this scenario, there should not be a large roll of updates at once, since at first the functionality will be available only to some users, and as it increases, the cache will already be “warmed up”.

- different lifetimes of different elements of the data set. This mechanism can only be used if the system is able to withstand the peak that occurs when all the functionality is rolled out. Its peculiarity lies in the fact that when writing data to the cache, each element will have its own lifetime, and thanks to this, the update shaft will be smoothed much faster due to the distribution of subsequent updates in time. The simplest way to implement such a mechanism is to multiply the cache lifetime by some random factor:

public function getNewSnapshotTTL()

{

$random_factor = rand(950, 1050) / 1000;

return intval($this->getSnapshotTTL() * $random_factor);

}

If for some reason you do not want to use a random number, you can replace it with a pseudo-random value obtained using a hash function based on some data (for example, User ID).

Example

I wrote a small script that emulates a “unheated” cache situation.

In it, I reproduce a situation in which the user, when requested, downloads data about himself (if they are not in the cache). Of course, the example is synthetic, but even on it you can see the difference in the behavior of the system.

Here is a graph of the number of hit misss in a situation with fixed (fixed_cache_misses_count) and different (random_cache_misses_count) cache lifetimes:

It can be seen that load peaks are very noticeable in both cases at the beginning of work, but when using pseudo-random lifetimes, they smooth out much faster.

Hot keys

The data in the cache is heterogeneous, some of which can be requested very often. In this case, the problems may not even be created by parallel updates, but by the number of readings. An example of such a key with us is a counter of the total number of users:

This counter is one of the most popular keys, and using the usual approach, all requests to it will go to one server (since this is only one key, and not many of the same type), the behavior of which can Change and slow down work with other keys stored in the same place.

To solve this problem, you need to write data not to one caching server, but to several at once. In this case, we will reduce the number of readings of this key by several times, but complicate its updates and the server selection code - after all, we will need to use a separate mechanism.

We at Badoo solve this problem by writing data to all caching servers at once. Due to this, when reading, we can use the general server selection mechanism - in the code, you can use the usual user ID sharding mechanism, and when reading you do not need to know anything about the specifics of this hot key. In our case, this works, since we have relatively few servers (about ten per site).

If there were much more caching servers, then this method might not be the most convenient - it just does not make sense to duplicate the same data hundreds of times. In this case, it would be possible to duplicate the key not on all servers, but only on their part, but this option requires a little more effort.

If you use the server definition by the cache key, then you can add a limited number of pseudo-random values to it (by making

total_users_countsomething like t otal_users_count_1, total_users_count_2etc.). A similar approach is used, for example, in Etsy. If you use explicit instructions for the sharding parameter, then simply pass different pseudo-random values there.

The main problem with both methods is to make sure that the different values actually go to the different caching servers.

See also:

Malfunctioning

The system cannot be 100% reliable, so you need to consider how it will behave in the event of a failure. Failures can occur both in the cache itself and in the database.

About the failures in the cache I said in the previous sections. The only thing that can be added is that it would be good to foresee the possibility of disabling part of the functional on a working system. This is useful when the system is unable to cope with peak loads.

When a database crashesand in the absence of a cache, we can get into a cache stampede situation, which I also talked about before. You can exit it by the methods already described, or you can write to the cache a deliberately incorrect value with a short lifespan. In this case, the system will be able to determine that the source is unavailable, and for some time will stop trying to request data.

Conclusion

In the article, I touched on the main problems when working with the cache, but I’m sure that, besides them, there are many others, and you can continue this conversation for a very long time. I hope that after reading my article your cache will become more efficient.