Facebook Moderator's Guide: Over 1,400 pages of controversial slides

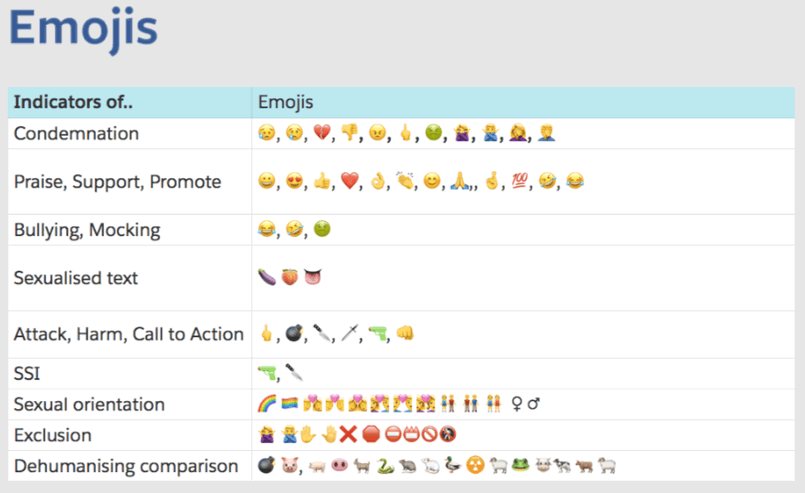

The guide explains which emoticons can be read as threats or, in the context of specific groups, as incitement to hatred.

Under fire from critics, Facebook has to take measures to moderate content. But the task is not as simple as it seems. The press has obtained an internal manual for Facebook moderators: more than 1,400 (!) pages of instructions, some of which are very difficult to make sense of, writes the NY Times.

Today, both politicians and public organizations accuse the social network of spreading hatred and misinformation. For Facebook, this is primarily a financial problem: such accusations create negative publicity and can hurt the stock price, and with it the wealth of the company's owners and employees.

Facebook, which earns about $5 billion per quarter, has to show everyone that it is serious about combating malicious content. But how can it track billions of messages per day in more than 100 languages while continuing to expand the user base that its business is built on? The company's solution: a network of moderators guided by a maze of PowerPoint slides that explain what content is prohibited.

“Every Tuesday, a few dozen Facebook employees gather at breakfast to come up with new rules and discuss what two billion users of the site are allowed to say,” the NY Times writes. “Following the meetings, relevant rules are sent to more than 7,500 moderators around the world.” More than 1,400 pages of rulebooks were made available to the newspaper. They were provided by an employee who is concerned about excessive moderation and about the many mistakes the company makes when moderating content.

An examination of the documents did reveal numerous gaps, biases, and outright mistakes. The rules evidently differ between languages and countries, so hate speech can flourish in some regions and be suppressed too aggressively in others. For example, moderators were once told to remove appeals to raise funds for victims of a volcano in Indonesia, because one of the fundraiser's organizers was on Facebook's blacklist of banned groups. In India, moderators were mistakenly told to delete comments criticizing religion. In Myanmar, an extremist group remained active for several months because of an error in Facebook's documentation.

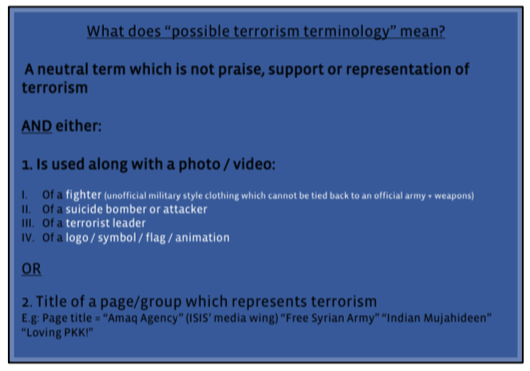

Screenshot from the Facebook manual for moderators

Judging by the leaked documentation, Facebook is trying to distill its principles for moderators into simple yes-or-no rules. The company then hands the actual moderation over to third-party firms. These firms mostly hire unskilled workers, many of them from call centers (it is the employees of the notorious “Indian support” who end up doing the moderation).

Moderators often rely on Google Translate. Their performance targets are such that they have only a few seconds to recall countless rules and apply them to the hundreds of messages that appear on their work computers every day. For example, they have to recall quickly when a message that mentions jihad alongside a smiley is prohibited.

Screenshot from the Facebook manual for moderators explaining the signs of statements that incite hatred (hate speech). Moderators must distinguish three levels of "seriousness", taking into account six "dehumanizing comparisons", including a comparison of Jews with rats. The general guide to defining hate speech runs to 200 pages.

Some moderators say that the rules are not very effective and sometimes make no sense. However, the company's management believes that there is no other way: “We have billions of messages per day, and we are discovering more and more potential violations using our technical systems,” said Monika Bickert, Facebook global policy director. “On such a scale, even 99% accuracy leaves a lot of mistakes.”

The Facebook manual that reached the press does not look like a clear guide. It consists of dozens of disconnected PowerPoint presentations and Excel spreadsheets with bureaucratic headings such as “The Western Balkans Hate Organizations and Individuals” or “Explicit Violence: Implementation Standards”. It is a kind of "patchwork" of rules drawn up by different departments of the company. Facebook confirmed the authenticity of the documents, although it said that some of them have been updated since the version obtained by the NY Times. Facebook claims that the files are intended for training only, but moderators say that they actually use them as reference materials in their daily work.