Modify Python in 6 minutes

- Translation

Greetings, everyone, and best wishes for the inexorably approaching new year!

This extremely busy year is coming to an end, and we have one last course to launch this year: “Full-stack Python Developer”. This note is dedicated to it. Although the topic falls outside the main program, it seemed interesting in general.

This week I made my first pull request to the main CPython project. It was rejected :-( But so as not to waste my time completely, I will share what I learned about how CPython works and show you how easy it is to change the Python syntax.

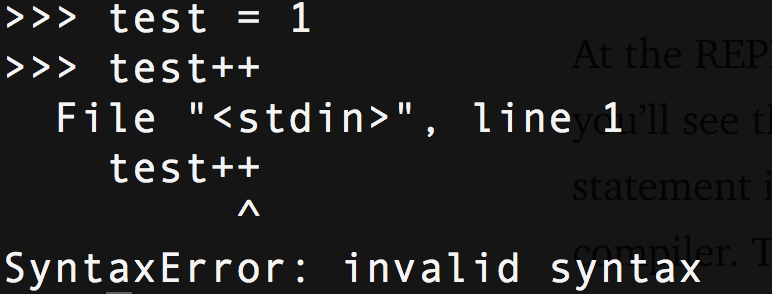

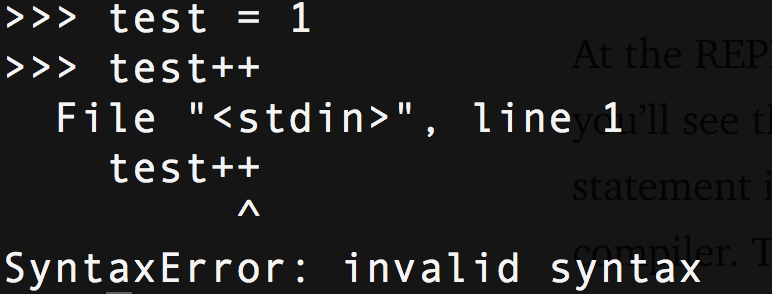

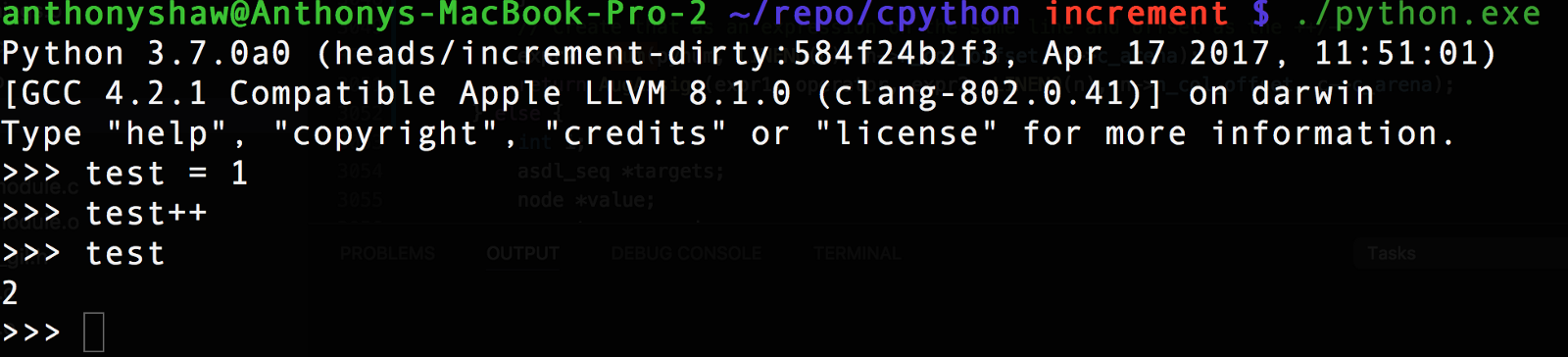

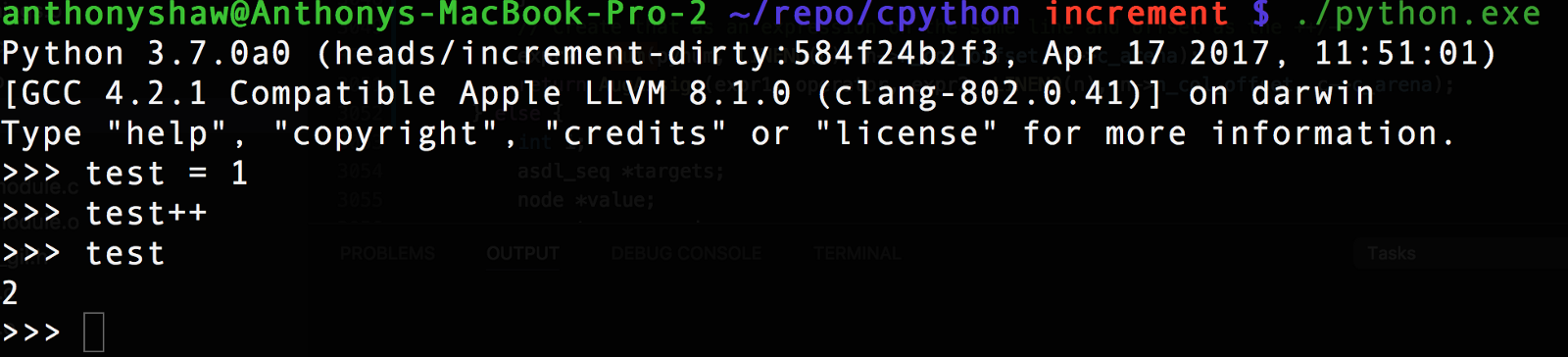

Let's go.

I am going to show you how to add a new feature to the Python syntax: increment and decrement operators, which are standard in most languages. To confirm that Python lacks them, open a REPL and try a statement such as `test++`.
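The same check can be run from a script instead of the REPL. The snippet below is a minimal sketch, not the author's session: it asks an unmodified interpreter to compile both spellings, and the helper name `is_valid_syntax` is made up for illustration.

```python
def is_valid_syntax(source):
    """Return True if this interpreter can compile `source` (hypothetical helper)."""
    try:
        compile(source, "<demo>", "exec")
    except SyntaxError:
        return False
    return True


# On an unpatched interpreter the augmented assignment compiles,
# while the C-style postfix increment is rejected by the parser.
plus_equal_ok = is_valid_syntax("test = 1\ntest += 1\n")
increment_ok = is_valid_syntax("test = 1\ntest++\n")
print(plus_equal_ok, increment_ok)
```

Note that the prefix form `++test` does compile on stock Python, because it is read as two unary plus operators. Only the postfix form is a syntax error.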

Level 1: PEP

Any change to the Python syntax is preceded by a PEP (Python Enhancement Proposal), a document describing the rationale, design, and behavior of the proposed change. All language changes are discussed by the Python core team and approved by the BDFL. Increment operators have not been approved (and probably never will be), which gives us a good opportunity to practice.

Level 2: Grammar

The Grammar file is a simple text file that describes all the elements of the Python language. It is used not only by CPython but also by other implementations, such as PyPy, to keep the language semantics consistent.

Inside, these keys form the tokens that are parsed by the lexer. When you run `make -j`, the build converts them into a set of enumerations and constants in C headers, which lets you refer to them later.

```
stmt: simple_stmt | compound_stmt
simple_stmt: small_stmt (';' small_stmt)* [';'] NEWLINE
# ...
pass_stmt: 'pass'
flow_stmt: break_stmt | continue_stmt | return_stmt | raise_stmt | yield_stmt
break_stmt: 'break'
continue_stmt: 'continue'
# ..
import_as_name: NAME ['as' NAME]
```

So, `simple_stmt` is a simple statement. It may or may not contain a semicolon (for example, when you type `import pdb; pdb.set_trace()`), and it ends with a `NEWLINE`. `pass_stmt` matches the `pass` keyword, and `break_stmt` matches `break`. Simple, right?

Let's add increment and decrement statements, something that does not exist in the language. This would be another form of the expression statement, alongside yield statements and augmented and plain assignments such as `foo = 1`.
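The `simple_stmt` rule can be observed from Python itself. The following sketch, which uses only the standard `ast` module on a stock interpreter, parses the one-line example from the text and shows that a single physical line yields two separate small statements.

```python
import ast

# One physical line, two small statements separated by ';'
tree = ast.parse("import pdb; pdb.set_trace()\n")

statement_types = [type(node).__name__ for node in tree.body]
line_numbers = [node.lineno for node in tree.body]
print(statement_types, line_numbers)
```

Both statements report the same line number, which matches the `small_stmt (';' small_stmt)*` part of the rule.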

```
# Add increment and decrement statements
expr_stmt: testlist_star_expr (annassign | augassign (yield_expr|testlist) |
                     ('=' (yield_expr|testlist_star_expr))* | incr_stmt | decr_stmt)
annassign: ':' test ['=' test]
testlist_star_expr: (test|star_expr) (',' (test|star_expr))* [',']
augassign: ('+=' | '-=' | '*=' | '@=' | '/=' | '%=' | '&=' | '|=' | '^=' |
            '<<=' | '>>=' | '**=' | '//=')
# For normal and annotated assignments, additional restrictions enforced by the interpreter
del_stmt: 'del' exprlist
# New statements
incr_stmt: '++'
decr_stmt: '--'
```

We add them to the list of possible small statements (this will become apparent in the AST).

`incr_stmt` will be our increment statement and `decr_stmt` will be our decrement. Both follow a `NAME` (the variable name) and form a small stand-alone statement. When we build Python, it will generate the components for us (but not yet). If you run Python with the `-d` option and try this, you should get:

```
Token /’++’ … Illegal token
```

What is a token? Let's find out ...

Level 3: Lexer

There are four steps Python takes when you press return: lexical analysis, parsing, compilation, and interpretation. Lexical analysis breaks the line of code you just entered into tokens. The CPython lexer is called `tokenizer.c`. It has functions that read from a file (e.g. `python file.py`) or from a string (e.g. the REPL). It also handles the special encoding comment at the top of files, parses your file as UTF-8, and so on. It handles nesting and the async and yield keywords, and it detects sets and tuple assignments, but only grammatically. It does not know what these things are or what to do with them. It only cares about the text.

For example, the code that allows you to use o-notation for octal values is in the tokenizer. The code that actually creates the octal values is in the compiler.

Let's add two things to `Parser/tokenizer.c`: the new tokens `INCREMENT` and `DECREMENT`. These are the keys that the tokenizer returns for each piece of code.

```c
/* Token names */
const char *_PyParser_TokenNames[] = {
    "ENDMARKER",
    "NAME",
    "NUMBER",
    ...
    "INCREMENT",
    "DECREMENT",
    ...
};
```
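To see which token names an unmodified interpreter assigns today, the standard `tokenize` and `token` modules can be used from Python. This is a sketch for a stock build, not for the patched one described here, and `token_names` is an illustrative helper rather than part of CPython.

```python
import io
import token
import tokenize


def token_names(source):
    """Return the exact token name for each token in `source` (illustrative helper)."""
    readline = io.StringIO(source).readline
    return [token.tok_name[tok.exact_type]
            for tok in tokenize.generate_tokens(readline)]


# '+=' is already a single two-character token ...
plus_equal = token_names("test += 1\n")
# ... while '++' is currently read as two separate PLUS tokens.
increment = token_names("test++\n")
# The o-notation for octal values is recognized as one NUMBER token.
octal = token_names("0o17\n")

print(plus_equal)
print(increment)
print(octal)
```

The tokenizer is happy to split `test++` into tokens. It is the parser that later rejects the sequence, which is why the change needs both a new token and a new grammar rule.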

Then we add a check that returns an `INCREMENT` or `DECREMENT` token each time we see `++` or `--`. There is already a function for two-character operators, so we extend it to cover our case.

```diff
@@ -1175,11 +1177,13 @@ PyToken_TwoChars(int c1, int c2)
         break;
     case '+':
         switch (c2) {
+        case '+': return INCREMENT;
         case '=': return PLUSEQUAL;
         }
         break;
     case '-':
         switch (c2) {
+        case '-': return DECREMENT;
         case '=': return MINEQUAL;
         case '>': return RARROW;
         }
```

They are defined in `token.h`:

```c
#define INCREMENT 58
#define DECREMENT 59
```

Now, when we run Python with `-d` and try to execute our statement, we see:

```
It’s a token we know
```

Success!

Level 4: Parser

The parser takes these tokens and generates a structure that shows their relationship to one another. For Python and many other languages, this is an abstract syntax tree (AST). The compiler then takes the AST and turns it into one or more code objects. Finally, the interpreter takes each code object and executes the code it represents.

Picture your code as a tree. The top level is the root. A function can be a branch, a class is also a branch, and the class's methods branch off from it. Expressions are leaves on a branch.
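The tree picture can be made concrete with the standard `ast` module. The sketch below parses a small made-up module (the class and function names are invented for the example) and prints its statement nodes indented by depth.

```python
import ast

source = """
class Greeter:
    def greet(self):
        return "hello"

def main():
    pass
"""


def outline(node, depth=0):
    """Yield (depth, node type name) for every statement node under `node`."""
    for child in ast.iter_child_nodes(node):
        if isinstance(child, ast.stmt):
            yield depth, type(child).__name__
            yield from outline(child, depth + 1)


tree = ast.parse(source)
shape = list(outline(tree))
for depth, name in shape:
    print("    " * depth + name)
```

The class sits directly under the root, its method branches off from it, and the `return` statement hangs off the method.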

The AST is defined in `ast.py` and `ast.c`. `ast.c` is the file we need to modify. The AST code is broken down into functions that handle types of tokens: `ast_for_stmt` handles statements, and `ast_for_expr` handles expressions. We put `incr_stmt` and `decr_stmt` in as possible expression statements. They are almost identical to augmented assignments such as `test += 1`, except that there is no right-hand expression (the 1). It is implicit. This is the code we need to add for increment and decrement.

```c
static stmt_ty
ast_for_expr_stmt(struct compiling *c, const node *n)
{
    ...
    else if ((TYPE(CHILD(n, 1)) == incr_stmt) || (TYPE(CHILD(n, 1)) == decr_stmt)) {
        expr_ty expr1, expr2;
        node *ch = CHILD(n, 0);
        operator_ty operator;

        /* Pick the binary operator from the statement type */
        switch (TYPE(CHILD(n, 1))) {
            case incr_stmt:
                operator = Add;
                break;
            case decr_stmt:
                operator = Sub;  /* the operator_ty member is Sub, not Subtract */
                break;
        }

        expr1 = ast_for_testlist(c, ch);
        if (!expr1) {
            return NULL;
        }
        /* Only a plain name is a valid target */
        switch (expr1->kind) {
            case Name_kind:
                if (forbidden_name(c, expr1->v.Name.id, n, 0)) {
                    return NULL;
                }
                expr1->v.Name.ctx = Store;
                break;
            default:
                ast_error(c, ch,
                          "illegal target for increment/decrement");
                return NULL;
        }

        // Create a PyObject for the number 1
        PyObject *pynum = parsenumber(c, "1");
        if (!pynum) {
            return NULL;  /* parsenumber failed; avoid Py_DECREF(NULL) below */
        }
        if (PyArena_AddPyObject(c->c_arena, pynum) < 0) {
            Py_DECREF(pynum);
            return NULL;
        }
        // Create it as an expression at the same line and offset as the ++/--
        expr2 = Num(pynum, LINENO(n), n->n_col_offset, c->c_arena);
        return AugAssign(expr1, operator, expr2, LINENO(n), n->n_col_offset, c->c_arena);
    }
    ...
}
```

This returns an augmented assignment with a constant value of 1, rather than a new statement type. The operator is either `Add` or `Sub` (subtract), depending on whether the statement type is `incr_stmt` or `decr_stmt`. Back in the Python REPL after compiling, we can see our new operator!
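For comparison, a stock interpreter already produces this kind of node for the long-hand spelling. The sketch below parses `test += 1` on an unmodified Python (3.8 or later is assumed for `ast.Constant`) and inspects the fields that the patched `ast_for_expr_stmt` fills in for `test++`.

```python
import ast

# The unpatched spelling of what `test++` is meant to become.
node = ast.parse("test = 1; test += 1").body[1]

node_type = type(node).__name__
op_type = type(node.op).__name__
target_ctx = type(node.target.ctx).__name__
increment = node.value.value  # node.value is an ast.Constant on Python 3.8+
print(node_type, op_type, target_ctx, increment)
```

The target name carries a `Store` context, the operator is `Add`, and the right-hand side is the constant 1, which mirrors the three pieces the C code assembles by hand.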

In the REPL, you can try `ast.parse("test=1; test++").body[1]` and you will see that the returned type is `AugAssign`. The AST has just converted the statement into a form the compiler can process. The `AugAssign` function sets the `kind` field that is used by the compiler.

Level 5: Compiler

Then the compiler takes the syntax tree and “visits” each branch. The CPython compiler has a function for visiting a statement called `compiler_visit_stmt`. This is just a big switch statement on the statement type. Ours is an `AugAssign`, so it calls `compiler_augassign` to handle the details. This function then converts our statement into a sequence of bytecodes, an intermediate language between machine code (01010101) and the syntax tree. The bytecode sequence is what is cached in .pyc files.

```c
static int
compiler_augassign(struct compiler *c, stmt_ty s)
{
    expr_ty e = s->v.AugAssign.target;
    expr_ty auge;

    assert(s->kind == AugAssign_kind);

    switch (e->kind) {
    ...
    case Name_kind:
        /* Load the current value of the name */
        if (!compiler_nameop(c, e->v.Name.id, Load))
            return 0;
        /* Emit code for the right-hand side (the constant 1 for ++/--) */
        VISIT(c, expr, s->v.AugAssign.value);
        /* Emit the in-place binary opcode for the operator */
        ADDOP(c, inplace_binop(c, s->v.AugAssign.op));
        /* Store the result back under the same name */
        return compiler_nameop(c, e->v.Name.id, Store);
    ...
    }
}
```

The result is a `VISIT` (load the value, 1 in our case), an `ADDOP` (add the binary-operation opcode that matches the operator, add or subtract), and a `STORE_NAME` (store the `ADDOP` result under the name). These calls emit more specific bytecodes.

If you import the `dis` module, you can inspect the bytecode.
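On an unmodified interpreter the same sequence can be inspected for the long-hand `test += 1`. This is a sketch using the standard `dis` module. The exact opcode names differ between CPython versions (for example, the in-place add is `INPLACE_ADD` on older releases and `BINARY_OP` on newer ones), so no fixed listing is shown here.

```python
import dis

# Compile the long-hand form at module level and list its opcode names.
code = compile("test += 1", "<demo>", "exec")
opnames = [instruction.opname for instruction in dis.get_instructions(code)]
print(opnames)

# The in-place add is spelled differently depending on the CPython version.
has_inplace_add = any(name in ("INPLACE_ADD", "BINARY_OP") for name in opnames)
```

Whatever the version, the shape is the same as in `compiler_augassign`: load the name, load the constant, apply the in-place operation, store the name.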

Level 6: Interpreter

The final level is the interpreter. It takes the sequence of bytecodes and converts it into machine operations. This is why python.exe on Windows and the Python builds for Mac and Linux are all separate executables. Some bytecodes need OS-specific handling and checks. The threading API, for example, has to work with the GNU/Linux API, which is very different from Windows threads.
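The idea of walking a bytecode sequence and acting on each instruction can be sketched with a toy stack machine. This is an illustration only and not how CPython's evaluation loop is written. The `run` function and the tuple-based instruction format are invented for the example, and the opcode names are borrowed from CPython's older opcode set purely as labels.

```python
def run(instructions, namespace):
    """Execute a tiny, made-up instruction set on a value stack."""
    stack = []
    for opname, arg in instructions:
        if opname == "LOAD_NAME":
            stack.append(namespace[arg])
        elif opname == "LOAD_CONST":
            stack.append(arg)
        elif opname == "INPLACE_ADD":
            right = stack.pop()
            left = stack.pop()
            stack.append(left + right)
        elif opname == "INPLACE_SUBTRACT":
            right = stack.pop()
            left = stack.pop()
            stack.append(left - right)
        elif opname == "STORE_NAME":
            namespace[arg] = stack.pop()
        else:
            raise ValueError(f"unknown instruction: {opname}")
    return namespace


# Roughly what `test++` and `test--` boil down to after compilation.
increment = [("LOAD_NAME", "test"), ("LOAD_CONST", 1),
             ("INPLACE_ADD", None), ("STORE_NAME", "test")]
decrement = [("LOAD_NAME", "test"), ("LOAD_CONST", 1),
             ("INPLACE_SUBTRACT", None), ("STORE_NAME", "test")]

after_increment = run(increment, {"test": 1})
after_decrement = run(decrement, {"test": 1})
print(after_increment, after_decrement)
```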

That's all!

For further reading:

If you're interested in interpreters, I gave a talk on Pyjion, a plugin architecture for CPython that became PEP 523.

If you still want to play, I have put the code on GitHub, along with my changes to the wait tokenizer.

THE END

As always, we look forward to your questions and comments.