We build a website for virtual reality. We embed a monitor inside a monitor and think about the future

Although the concept of “virtual reality” has been around for many years, it remains a mystery to most people, and the prices of the accessories for this kind of entertainment can be sky-high. But there is a budget option. An average person interested in new technology can afford a smartphone with a gyroscope, inserted into a Google Cardboard or any analogue of this simple device, plus a simple joystick with a couple of buttons. Today this is the most common way to get acquainted with the technology. But, as with many other technological novelties such as quadcopters, the interesting toy quickly turns into a dusty one on a shelf. Practical use is very limited. Adults who buy virtual reality glasses play games for the first few days, watch various videos

Introduction

Over time, new I/O devices appear in our world. The monitor, the mechanical keyboard and the ball mouse were joined by touchpads, the mouse gained a wheel, devices with flat screens appeared that everyone poked with old ballpoint pens instead of a stylus, and then phones with touch screens became something everyday and completely unremarkable. We stopped saying “don't poke your finger at the monitor” and began to think about adapting interfaces to the new realities. Buttons became larger so that a finger would not miss them, and interactive elements gained support for swipes and other gestures with various numbers of fingers. With the arrival of virtual reality glasses (a phone with a pair of lenses counts as such here), we should think about how to adapt what we currently have on the Internet to this turn of events.

We get something, but we also lose something

Unlike a conventional monitor, VR glasses let us arrange information in three dimensions (or at least create that effect). We can control what is happening by turning our head, which is quite interesting. Some joysticks, if you can call them that, let us control what we see with hand movements in space. But at the same time we lose the ability to use the keyboard: you can use it only if you really can touch-type. Moreover, for those who type a lot, losing the familiar tactile feel and the clatter of the keys can be a serious ordeal. A similar effect, though to a lesser degree, occurs when switching from an old keyboard from the early 2000s to a new one with short key travel and almost no sound.

It turns out that we get a completely new set of input and output devices whose behavior is new to us. It is not that one of them evolves: the entire stack is replaced. In such a situation, questions inevitably arise about how well all these devices are suited to solving existing tasks.

Content consumption. Still planes?

Since we started by looking at the technology from the consumer's point of view rather than the developer's, we will keep looking from that side. The way information is consumed has not changed significantly over the past decades. Content can be presented as text, sound, a graphic image or video. Mechanical buttons are replaced by virtual ones, page turning is replaced by clicking on links, but the content itself does not change much. Smells and tactile sensations from films or games are not yet available to the mass consumer.

With audio, everything is more or less clear. Headphones or several speakers can give a certain sense of space, although two headphones do not let you “move” the sound source forward or backward. We can listen to music as before, and most interfaces work silently, so sound is the last question that will concern us. Players for 3D video already exist in various forms. The question of “full screen” video consumption can be considered more or less solved.

Text is more interesting. Once you are in virtual space, the first thought is to abandon the flat monitor and place text on a curved surface. A sphere, with the user at its center? Why not. But it is better for the surface to keep a vertical axis, otherwise such an interface can make the user feel sick. We never had to think about this before, and now we do. Some games carry warnings for people with epilepsy, and now they will probably carry them for people with vestibular problems too. A cylindrical surface seems like a good choice. The same “distance” to the text at every point should reduce eye strain (compared to a huge monitor), the space is used efficiently ...

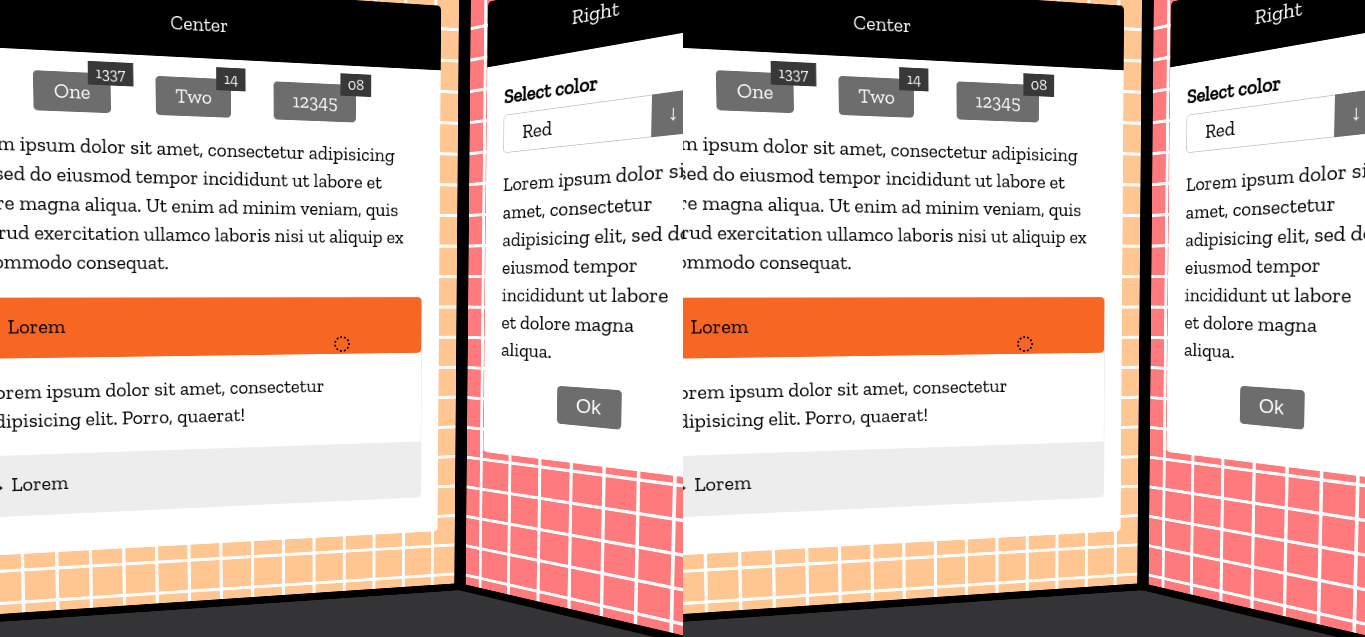

But reality bites. Because of distortion, such text is very hard to read, and the poor quality of most smartphone displays makes it worse: letters at the edges of the visible area turn into a blurry, pixelated mush. And turning your head along every line of text is not an option. It seems that in the near future we will be limited to planes in 3D. We will have to lean toward a prism: we can build three vertical monitors into our space and still keep the text more or less readable. Ideally they are placed so that when you turn your head toward a “monitor”, your gaze lands exactly at its center and the line of sight is perpendicular to its plane. That keeps distortion to a minimum. If you have ever sat at an angle to a monitor, you will understand what I mean. Why three? One could add a couple more monitors behind, but for long work sessions you would need the health of an astronaut to spin on a chair all day. For some people, even without glasses, a couple of turns is enough to bring on an unpleasant bout of dizziness and blurred vision. So it makes sense to limit the viewing angle. It might be worth experimenting with part of a dodecahedron rather than a prism, but for now I find it hard to imagine an interface built from pentagons.
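
The placement rule described here (each panel faces the viewer head-on at its center) can be sketched as a small helper. This is only an illustration, not code from the demo: the name `layoutPanels`, a viewer at the origin looking along the negative z axis, and the y-up coordinate convention familiar from Three.js are all assumptions.

```javascript
// Hypothetical helper (not part of the original demo): place `count` flat
// panels on the side faces of a prism around a viewer who sits at the origin
// and looks along -z, with y pointing up.
// `distance`  - distance from the viewer to the center of every panel
// `stepAngle` - angle in radians between neighbouring panels
function layoutPanels(count, distance, stepAngle) {
  const panels = [];
  for (let i = 0; i < count; i++) {
    // Yaw of this panel relative to "straight ahead"; positive means left.
    const yaw = ((count - 1) / 2 - i) * stepAngle;
    panels.push({
      // Panel center lies on the viewing direction turned by `yaw`.
      position: [-distance * Math.sin(yaw), 0, -distance * Math.cos(yaw)],
      // Turning the panel by the same yaw makes its normal point at the viewer.
      rotation: [0, yaw, 0],
    });
  }
  return panels;
}
```

Calling `layoutPanels(3, 1000, Math.PI / 4)` puts the middle panel straight ahead and turns the two outer panels by 45 degrees toward the viewer, so the line of sight to each panel center stays perpendicular to that panel.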

What about the input?

A three-dimensional controller is an interesting thing. But ... have you ever watched an ordinary artist painting at an easel? Formally he does no physical work: a brush weighs a few grams (well, maybe a couple of dozen), yet after a full day of painting his arm is ready to fall off. And the back gets tired too, whatever anyone says. Three-dimensional controllers have the same problem. Hands weakened by years of resting on a desk and typing on a keyboard cannot be held in the air all day. So such a controller can be great for games and for certain stages of 3D modeling, but it is poorly suited to continuous work. There is also the option where the hand rests and presses a few buttons. That is already better. Controllers usually have more buttons than a standard mouse (for users of top models from Razer, I’ll remind you

The keyboard is trouble. You can make something like an on-screen keyboard, but typing speed on such a marvel will be abysmal. Perhaps in time see-through glasses will become widespread, so that the image sits at eye level while a real keyboard is visible below, but a budget version of that is out of the question for now. On top of that, the devices currently on the market cause eye fatigue: you can read translucent text for a long time only if the background is more or less uniform and motionless. Glancing at a notification works, reading continuously does not. Voice input is also far from perfect. It seems that in the near future entering large volumes of text in virtual reality will not be practical.

At the moment, the smartphone option does not let us combine glasses with things like WebGazer in order to use gaze direction as a substitute for the mouse. If there is interest, I will separately look at the idea of building an interface for a web application that can be controlled by gaze alone (this may well have practical applications in medicine). With glasses, though, all we have is head rotation. The most effective way to use it in our situation seems to be this: take the center of the field of view, cast a ray from the user through it, and use its intersection with the monitor planes as a mouse pointer in those planes.
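
The geometry behind this ray idea fits in a few lines. The sketch below is not taken from the demo; the function name `intersectRayWithPlane` and the use of plain `[x, y, z]` arrays for vectors are assumptions made for illustration. It intersects a view ray with a plane given by a point on it and its normal.

```javascript
// Hypothetical helper (not part of the original demo): intersect a view ray
// with a plane. All vectors are [x, y, z] arrays.
// Returns the hit point, or null when the ray runs parallel to the plane
// or the plane lies behind the ray origin.
function intersectRayWithPlane(origin, direction, planePoint, planeNormal) {
  const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];

  const denom = dot(direction, planeNormal);
  if (Math.abs(denom) < 1e-9) {
    return null; // ray is parallel to the plane
  }

  const toPlane = [
    planePoint[0] - origin[0],
    planePoint[1] - origin[1],
    planePoint[2] - origin[2],
  ];
  // Distance along the ray (in units of `direction`) to the plane.
  const t = dot(toPlane, planeNormal) / denom;
  if (t < 0) {
    return null; // the plane is behind the viewer
  }

  return [
    origin[0] + direction[0] * t,
    origin[1] + direction[1] * t,
    origin[2] + direction[2] * t,
  ];
}
```

For a viewer at `[0, 0, 1000]` looking along `[0, 0, -1]` at the plane `z = 0`, the function returns the point `[0, 0, 0]`; turning the head changes `direction` and moves the hit point across the plane.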

Let's try to do something.

Since we are making several monitor planes, we will create some markup for them (a link to GitHub with the full sources is at the end of the article). This is ordinary markup; in the full source code I used buttons and an accordion from one of my projects.

....

....

....

There are not many ways to use ordinary HTML + CSS markup in three-dimensional space. But a couple of renderers for Three.js immediately stand out. One of them, CSS3DRenderer, lets us take our markup and render it on a plane, which suits our task well. The second, CSS3DStereoRenderer, which we will use, does the same but splits the screen into two halves and creates two images, one for each eye.

Initializing Three.js has been covered more than once in various articles, so we will not dwell on it.

    // Shared objects used by all of the functions below.
    var scene = null,
        camera = null,
        renderer = null,
        controls = null;

    init();
    render();
    animate();

    function init() {
        initScene();
        initRenderer();
        initCamera();
        initControls();
        initAreas();
        initCursor();
        initEvents();
    }

    function initScene() {
        scene = new THREE.Scene();
    }

    function initRenderer() {
        // Stereo variant of the CSS renderer: one image per eye.
        renderer = new THREE.CSS3DStereoRenderer();
        renderer.setSize(window.innerWidth, window.innerHeight);
        renderer.domElement.style.position = 'absolute';
        renderer.domElement.style.top = '0';
        renderer.domElement.style.background = '#343335';
        document.body.appendChild(renderer.domElement);
    }

    function initCamera() {
        camera = new THREE.PerspectiveCamera(45,
            window.innerWidth / window.innerHeight, 1, 1000);
        // Step back from the origin, where the central "monitor" is placed.
        camera.position.z = 1000;
        scene.add(camera);
    }

The second component we add right away is DeviceOrientationController, which lets us use the rotation of the device for orientation in 3D space. The technology for determining device orientation is experimental and, to put it mildly, works so-so today. All three devices it was tested on behaved differently. Matters are made worse by the fact that, in addition to the gyroscope (and possibly instead of it), the browser uses the compass, which behaves very erratically when surrounded by iron objects and wires. We also use this controller to keep the ability to look around with an ordinary mouse when evaluating the results of the experiment.

    function initControls() {
        controls = new DeviceOrientationController(camera, renderer.domElement);
        controls.connect();

        controls.addEventListener('userinteractionstart', function () {
            renderer.domElement.style.cursor = 'move';
        });

        controls.addEventListener('userinteractionend', function () {
            renderer.domElement.style.cursor = 'default';
        });
    }

As already mentioned, CSS3DRenderer lets us use ordinary markup in our 3D example. Creating CSS objects is similar to creating regular planes.

    function initAreas() {
        var width = window.innerWidth / 2;

        // Side areas are shifted sideways and toward the camera
        // and turned by 45 degrees to face the viewer.
        initArea('.vr-area.-left', [-width/2 - width/5.64, 0, width/5.64], [0, Math.PI/4, 0]);
        initArea('.vr-area.-center', [0, 0, 0], [0, 0, 0]);
        initArea('.vr-area.-right', [width/2 + width/5.64, 0, width/5.64], [0, -Math.PI/4, 0]);
    }

    // Wrap an existing DOM element in a CSS3DObject and place it in the scene.
    function initArea(contentSelector, position, rotation) {
        var element = document.querySelector(contentSelector),
            area = new THREE.CSS3DObject(element);

        area.position.x = position[0];
        area.position.y = position[1];
        area.position.z = position[2];

        area.rotation.x = rotation[0];
        area.rotation.y = rotation[1];
        area.rotation.z = rotation[2];

        scene.add(area);
    }

Next come the functions that are standard for almost every Three.js demo: animation and handling of browser window resizing.

    function initEvents() {
        window.addEventListener('resize', onWindowResize);
    }

    function onWindowResize() {
        camera.aspect = window.innerWidth / window.innerHeight;
        camera.updateProjectionMatrix();
        renderer.setSize(window.innerWidth, window.innerHeight);
        render();
    }

    function animate() {
        controls.update();
        render();
        requestAnimationFrame(animate);
    }

    function render() {
        renderer.render(scene, camera);
    }

And then the fun begins. CSS3DStereoRenderer essentially creates two identical element trees, which are positioned in the right place with CSS transforms. In other words, there is no magic in this renderer. What there is instead is a problem. How do you interact with interactive elements if they are all duplicated? We cannot focus two elements at the same time, and we cannot trigger :hover on both, so we are in a bit of a trap. The second problem is element ids, each of which may appear only once on a page. And if ids cannot be used, interactions have to be thought through carefully.

The only way to control the appearance of two elements at once is to add and remove CSS classes on them. We can also simulate a click with click(). Clicking several elements in a row does not cause any strange browser behavior. So we can make a second cursor in the center of the field of view (not of the whole screen, but of each of its halves, one per eye). To find the element under the cursor, we can use document.elementFromPoint(x, y). This is where it proves very useful that the renderer relies on transforms while the elements themselves remain standard DOM elements. We do not need to work out the geometry of intersecting a ray with the plane on which the content is rendered, find the intersection coordinates, and then locate the element on the plane from those coordinates. Everything is much simpler: the standard function handles the task.

The question remains which element to add the classes to and remove them from. For now I settled on simply walking up through the parent elements until a focusable one is found. There is probably a more elegant solution, and this could be moved into a separate function, but I really wanted to see quickly what would come of it.

    function initCursor() {
        // Centers of the left and right halves of the screen (one per eye).
        var x1 = window.innerWidth * 0.25,
            x2 = window.innerWidth * 0.75,
            y = window.innerHeight * 0.50,
            element1 = document.body,
            element2 = document.body,
            cursor = document.querySelector('.fake-cursor');

        setInterval(function() {
            // Drop the highlight from the previously focused pair.
            if (element1 && element1.classList) {
                element1.classList.remove('-focused');
            }
            if (element2 && element2.classList) {
                element2.classList.remove('-focused');
            }

            element1 = document.elementFromPoint(x1, y);
            element2 = document.elementFromPoint(x2, y);

            if (element1 && element2) {
                // Walk up to the nearest focusable ancestor (tabIndex >= 0).
                while (element1.tabIndex < 0 && element1.parentNode) {
                    element1 = element1.parentNode;
                }
                while (element2.tabIndex < 0 && element2.parentNode) {
                    element2 = element2.parentNode;
                }

                if (element1.tabIndex >= 0) {
                    element1.classList.add('-focused');
                }
                if (element2.tabIndex >= 0) {
                    element2.classList.add('-focused');
                }
            }
        }, 100);
    }
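
The walk up through the parents could be moved into a separate function, as suggested above. A minimal sketch follows; the name `findFocusable` is hypothetical and the helper is not part of the original sources. It only relies on the `tabIndex` and `parentNode` properties, so it works on any node-like object.

```javascript
// Hypothetical helper (not part of the original demo): starting from
// `element`, climb the parent chain and return the first node that can
// receive focus (tabIndex >= 0), or null if there is none.
function findFocusable(element) {
  let node = element;
  while (node) {
    // The document itself has no numeric tabIndex, so it is skipped here.
    if (typeof node.tabIndex === 'number' && node.tabIndex >= 0) {
      return node;
    }
    node = node.parentNode;
  }
  return null;
}
```

Inside the timer callback this would replace both `while` loops, for example `element1 = findFocusable(document.elementFromPoint(x1, y));`, with a plain null check before adding the `-focused` class.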

We will also hastily add a simple imitation of a click on both elements under our fake cursor. With glasses, this would be handled by a button on the controller, but lacking one, we settle for the ordinary Enter key on the keyboard.

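    // Note: this listener has to be registered inside initCursor(), after the
    // setInterval call, so that element1, element2 and cursor are in scope.
    document.addEventListener('keydown', function(event) {
        if (event.key === 'Enter') {
            // Skip nodes that cannot be clicked, such as the document itself.
            if (element1 && element2 &&
                typeof element1.click === 'function' &&
                typeof element2.click === 'function') {
                element1.click();
                element2.click();

                // Briefly flash the fake cursor to confirm the click.
                cursor.classList.add('-active');
                setTimeout(function() {
                    cursor.classList.remove('-active');
                }, 100);
            }
        }
    });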

What happened there?

Since much of what is used in this article is experimental, it is hard to say anything definite about cross-browser compatibility. In Chrome on Linux with an ordinary mouse everything works. In Chrome on Android, on one device everything rotates slightly depending on the position in the room (I suspect because of the compass being affected by surrounding interference), and on another everything goes completely haywire. For this reason I recorded a short screen capture that shows the result in the desktop browser:

Thoughts instead of conclusion.

The experiment is interesting. Yes, it is still very far from real-world use. Browsers still have very poor support for determining device orientation, and there is no way to calibrate the compass (formally there is a compassneedscalibration event, but in practice it is of little use). But on the whole, the idea of building an interface in three-dimensional space is quite feasible. On the desktop, at least, we can definitely use it already. If we replace the stereo renderer with the regular one and rotate the camera simply by moving the mouse, we get something similar to an ordinary three-dimensional game, only with the ability to use

Full sample sources are available on GitHub.