Bayesian Assessment of Event Connectedness

In his book, Nate Silver gives an example: suppose you want to invest in several enterprises, each of which may go bankrupt with probability $P = 0.05$. You need to assess the risks. The higher the probability of bankruptcy, the less we invest. Conversely, if the probability of bankruptcy tends to zero, we can invest without restriction.

If there are 2 enterprises, then the probability that both go bankrupt and we lose all our investments is $P = 0.05 \cdot 0.05 = 0.0025$. This is what standard probability theory teaches for independent events. But what happens if the enterprises are connected and the bankruptcy of one leads to the bankruptcy of the other?

The extreme case is when the enterprises are completely dependent. The probability of a double bankruptcy is $P(\text{bankrupt1} \,\&\, \text{bankrupt2}) = P(\text{bankrupt1})$, so the probability of losing all investments is $P = 0.05$. The risk estimate therefore has a wide spread, $P$ from 0.05 to 0.0025, and the real value depends on how correctly we estimated the connectedness of the two events.

When evaluating investments in we have enterprises

we have enterprises  from

from  before

before  . That is, the maximum possible probability remains large

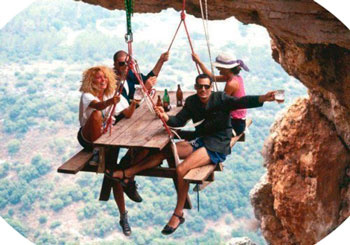

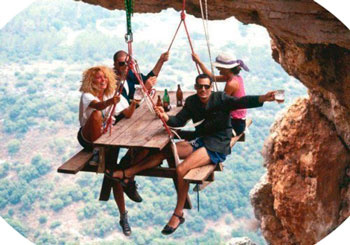

. That is, the maximum possible probability remains large , and the old saying “don't put your eggs in one basket” will not work if the counter with all the baskets falls at once.

, and the old saying “don't put your eggs in one basket” will not work if the counter with all the baskets falls at once.
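
The spread described above can be sketched in a few lines of Python. This is only an illustration: the function name is made up for this example, and it considers just the two cases discussed in the text (fully independent and fully dependent bankruptcies), each enterprise failing with the same probability `p`.

```python
def total_loss_probability_range(p: float, n: int) -> tuple[float, float]:
    """Return (lower, upper) bounds on the probability that all n enterprises fail.

    lower: bankruptcies are independent, so the probabilities multiply.
    upper: bankruptcies are fully dependent, so one failure implies all of them.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if n < 1:
        raise ValueError("n must be at least 1")
    return p ** n, p


if __name__ == "__main__":
    # The lower bound shrinks quickly with n, the upper bound does not move.
    for n in (1, 2, 5, 10):
        low, high = total_loss_probability_range(0.05, n)
        print(f"N={n}: from {low:.3e} to {high:.2f}")
```

However many enterprises we add, the upper bound stays at `p`, which is exactly the "counter with all the baskets" problem.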

Thus, our estimates have a huge spread, and how much to invest remains an open question. Yet you need to weigh things carefully before investing. Nate Silver says that analysts' ignorance of these simple laws led to the stock market crash of 2008, when US rating agencies assessed risks but did not assess how those risks were connected. This ultimately led to a domino effect: the first big player toppled and dragged the others down with it.

Let's try to sort this out by solving a simple math problem. We will solve a simplified problem in order to learn how to evaluate the connectedness of two events with the Bayesian method, using a simple example of two coins. More math follows... I will try to chew it over thoroughly, so that it becomes clear to me as well.

Let there be 2 coins, $A$ and $B$, each of which shows 0 or 1 when tossed, so that one of 4 combinations is possible on a single toss:

combination 1: 00

combination 2: 01

combination 3: 10

combination 4: 11

Here the first digit refers to the first coin, and the second to the second. I introduced such notation for ease of presentation.

By the conditions of the problem, let the first coin be independent, that is, $P(A=0) = 0.5$ and $P(A=1) = 0.5$. The second coin may be dependent, but we do not know how strongly. That is, $P(B)$ depends on $A$.

Maybe there is some kind of magnet that attracts the coins, or the thrower is a card sharp and a swindler, of whom there are a dime a dozen. We will express our ignorance in the form of a probability.

To assess the connectedness, we need factual data and a model whose parameters we will estimate. Let's use the simplest assumptions to get a feel for the topic and build the model.

If the coins are unrelated, then all four combinations are equally probable. Here standard probability theory adds a caveat: this result is reached only with an infinite number of tosses. Since in practice the number of tosses is finite, we may observe a deviation from the average. For example, you can get a run of three or five heads by chance, although on average, with endless tossing, there will be exactly 50% heads and exactly 50% tails. A deviation can be interpreted as a sign of connectedness, but it can also be interpreted as an ordinary statistical fluctuation around the average. The smaller the sample, the larger the possible deviation, and so the two explanations are easily confused.

Here Bayesian theory comes to the rescue: it makes it possible to estimate the probability of a particular hypothesis from a finite data set. Bayes runs the process in the opposite direction from the one we are used to in probability theory. What is estimated is the probability that our conjectures match the real state of affairs, not the probability of outcomes.

Now we turn to the creative process of building a model. One requirement is imposed on the connectedness model: it should at least cover the possible options. In our case, the extreme options are complete dependence or complete independence of the coins. That is, the model must have at least one parameter $k$ describing the connectedness.

We describe it as a coefficient $k \in [0,1]$. If the second coin always coincides with the first, then $k = 1$. If the second coin always shows the opposite value, then $k = 0$. If the coins are unrelated, then $k = 0.5$. This works out nicely: a single number describes many options. Moreover, this variable has a clear meaning: it is the probability that the two coins coincide.
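
To get a feel for what the coefficient means, the model can be simulated. The sketch below is an illustration under the assumptions of this post (a fair first coin, and a second coin that copies the first with probability `k`); the function names are invented for the example.

```python
import random


def toss_pair(k: float, rng: random.Random) -> str:
    """Toss both coins once and return the outcome as a two-digit string."""
    first = rng.randint(0, 1)      # first coin: fair and independent
    matches = rng.random() < k     # second coin copies the first with probability k
    second = first if matches else 1 - first
    return f"{first}{second}"


def simulate(k: float, n: int, seed: int = 0) -> list[str]:
    """Generate n outcomes for a given connectedness coefficient k."""
    rng = random.Random(seed)
    return [toss_pair(k, rng) for _ in range(n)]


if __name__ == "__main__":
    for k in (0.0, 0.5, 1.0):
        print(k, simulate(k, 10))
```

With `k = 1.0` only "00" and "11" appear, with `k = 0.0` only "01" and "10", and with `k = 0.5` all four combinations show up.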

Let's try to estimate this number based on actual data.

Let there be a specific data set $D$ consisting of $N = 5$ outcomes:

outcome 1: 00

outcome 2: 01

outcome 3: 11

outcome 4: 00

outcome 5: 11

At first glance, this tells us nothing. Number of occurrences of each combination:

00: 2

01: 1

10: 0

11: 2
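
Counting the combinations by hand is easy for five outcomes, but a small helper makes it repeatable. This is a minimal sketch; `DATA` holds the five outcomes listed above, and the function names are illustrative.

```python
from collections import Counter

# The five outcomes from the data set above.
DATA = ["00", "01", "11", "00", "11"]


def count_combinations(outcomes):
    """Count how many times each of the four combinations occurs."""
    counts = Counter(outcomes)
    return {combo: counts.get(combo, 0) for combo in ("00", "01", "10", "11")}


def count_matches(outcomes):
    """Return (matches, mismatches): how often the two coins agree or differ."""
    matches = sum(1 for outcome in outcomes if outcome[0] == outcome[1])
    return matches, len(outcomes) - matches


if __name__ == "__main__":
    print(count_combinations(DATA))
    print(count_matches(DATA))
```

Only the number of matches and mismatches will matter for the estimate of the coefficient later on.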

Let us slowly work through what Bayes' formula means:

$$P(k \mid D) = \frac{P(D \mid k) \, P(k)}{P(D)}$$

We use the standard notation, where the sign $\mid$ denotes the probability of an event given that another event is already known to have occurred.

In this case, we have a combination of continuous and discrete distributions: $P(k \mid D)$ and $P(k)$ are continuous distributions, while $P(D \mid k)$ and $P(D)$ are discrete. Bayes' formula allows such a combination. To save time, I will not spell out all the subtleties.

If we know the probability $P(k \mid D)$, then we can find the value $k \in [0,1]$ at which the probability of our hypothesis is maximal. That is, we find the most likely coefficient $k$.

On the right-hand side we have 3 terms that need to be evaluated. Let's analyze them.

1) We need to know or calculate $P(D \mid k)$, the probability of obtaining such data under a particular hypothesis. After all, even if the coins are unrelated ($k = 0.5$), getting a series made up of nothing but matches is possible, although unlikely. Such a series is much more likely if the coins are connected ($k = 1$). $P(D \mid k)$ is the most important term, and below we will analyze how to calculate it.

2) We need to know $P(k)$. Here we come to a delicate point in the modeling. We do not know this function and have to make assumptions. If there is no additional knowledge, we assume that all values of $k$ in the range from 0 to 1 are equally likely. If we had insider information, we would know more about the connectedness and make a more accurate forecast. Since no such information is available, we put $k \sim \text{uniform}[0,1]$. Since $P(k)$ then does not depend on $k$, it plays no role when we search for the maximum of $P(k \mid D)$.

3) $P(D)$ is the probability of having such a data set at all, whatever the value of $k$. We can get this set with different $k$, with different probabilities. Therefore, all possible ways of obtaining the set $D$ are taken into account. Since at this stage the value of $k$ is still unknown, it is necessary to integrate over it: $P(D) = \int_0^1 P(D \mid k) \, P(k) \, dk$. To understand this better, it helps to solve elementary problems in which a Bayesian graph is constructed, and then pass from the sum to the integral. The result is an expression (evaluated in wolframalpha) that does not affect the search for the maximum, since it does not depend on $k$.

Let's figure out how to calculate $P(D \mid k)$. Remember that the first coin is independent and the second is dependent. Therefore, the probability of a value of the first coin looks like $P(A)$, and for the second coin like $P(B \mid A)$. The probability that the second coin coincides with the first is $k$, and the probability of a mismatch is $1 - k$.

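Let's analyze the possible cases for one outcome:

$$P(00 \mid k) = P(A=0) \, P(B=0 \mid A=0) = 0.5 \, k$$

$$P(01 \mid k) = P(A=0) \, P(B=1 \mid A=0) = 0.5 \, (1-k)$$

$$P(10 \mid k) = P(A=1) \, P(B=0 \mid A=1) = 0.5 \, (1-k)$$

$$P(11 \mid k) = P(A=1) \, P(B=1 \mid A=1) = 0.5 \, k$$

To check, add up the probabilities; the sum should be one: $0.5k + 0.5(1-k) + 0.5(1-k) + 0.5k = 1$. This makes me happy.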
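
The single-outcome probabilities can be written as one small function. This is a sketch of the model described above (fair first coin, second coin matching with probability `k`); the function name is illustrative.

```python
def outcome_probability(outcome: str, k: float) -> float:
    """Probability of one two-digit outcome such as "01" for a given k."""
    first, second = outcome
    p_first = 0.5                                 # the first coin is fair
    p_second = k if first == second else 1.0 - k  # match with probability k
    return p_first * p_second


if __name__ == "__main__":
    k = 0.8
    total = sum(outcome_probability(o, k) for o in ("00", "01", "10", "11"))
    print(total)  # the four probabilities add up to one (up to rounding)
```

The same sanity check as in the text applies: for any `k` the four probabilities sum to one.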

Now we can move on to finding the most likely value of $k$ for the fictitious data set $D$ already given above.

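The probability of obtaining such a set is

$$P(D \mid k) = P(00 \mid k) \cdot P(01 \mid k) \cdot P(11 \mid k) \cdot P(00 \mid k) \cdot P(11 \mid k),$$

expand:

$$P(D \mid k) = 0.5k \cdot 0.5(1-k) \cdot 0.5k \cdot 0.5k \cdot 0.5k,$$

simplify:

$$P(D \mid k) = 0.5^{5} \, k^{4} (1-k),$$

generalize to an arbitrary data set of $N$ outcomes $d_1, \dots, d_N$:

$$P(D \mid k) = \prod_{i=1}^{N} P(d_i \mid k).$$

Denote the number of matches by $m$ and the number of mismatches by $n$, so that $m + n = N$.

We get the generalized formula

$$P(D \mid k) = 0.5^{N} \, k^{m} (1-k)^{n}.$$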
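
As a quick check of the generalized formula, the likelihood can be computed in two ways: as a product over the individual outcomes, and in closed form from the number of matches and mismatches. This is a sketch with illustrative function names, using the same model as above (first coin fair, second coin matching with probability `k`).

```python
def likelihood_product(outcomes, k):
    """P(D | k) as a product of the single-outcome probabilities."""
    result = 1.0
    for first, second in outcomes:
        result *= 0.5 * (k if first == second else 1.0 - k)
    return result


def likelihood_closed_form(outcomes, k):
    """P(D | k) = 0.5^N * k^matches * (1 - k)^mismatches."""
    matches = sum(1 for first, second in outcomes if first == second)
    mismatches = len(outcomes) - matches
    return 0.5 ** len(outcomes) * k ** matches * (1.0 - k) ** mismatches


if __name__ == "__main__":
    data = ["00", "01", "11", "00", "11"]
    for k in (0.5, 0.8, 1.0):
        print(k, likelihood_product(data, k), likelihood_closed_form(data, k))
```

For the five outcomes above, the likelihood at `k = 0.8` is larger than at `k = 0.5`, and it drops to zero at `k = 1.0` because the data contain one mismatch.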

Those interested can play around with the graph by entering different exponents: link to wolframalpha.

Since in this example $P(k)$ is constant, $P(k \mid D)$ is proportional to $P(D \mid k)$, and we work directly with $P(D \mid k)$.

To find the maximum, we differentiate with respect to $k$ and set the derivative to zero. With $m$ matches and $n$ mismatches, and dropping the constant factor:

$$\frac{d}{dk}\left[k^{m}(1-k)^{n}\right] = k^{m-1}(1-k)^{n-1}\left(m(1-k) - nk\right) = 0.$$

For a product to equal zero, one of its factors must equal zero.

We are not interested in $k = 0$ and $k = 1$, since there is no local maximum at these points, whereas the third factor does indicate a local maximum, therefore

$$k = \frac{m}{m+n}.$$
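
The result of the differentiation can be cross-checked numerically. The sketch below compares the share of matches among all outcomes with a brute-force search over a grid of `k` values; the function names and the grid size are arbitrary choices for this illustration.

```python
def most_likely_k(matches: int, mismatches: int) -> float:
    """Closed-form maximum: the share of matches among all outcomes."""
    if matches + mismatches == 0:
        raise ValueError("at least one outcome is required")
    return matches / (matches + mismatches)


def grid_argmax(matches: int, mismatches: int, steps: int = 1000) -> float:
    """Brute-force search for the k in [0, 1] maximizing k^matches * (1-k)^mismatches."""
    best_k, best_value = 0.0, -1.0
    for i in range(steps + 1):
        k = i / steps
        value = k ** matches * (1.0 - k) ** mismatches
        if value > best_value:
            best_k, best_value = k, value
    return best_k


if __name__ == "__main__":
    print(most_likely_k(4, 1), grid_argmax(4, 1))
```

Both approaches agree for the data set with 4 matches and 1 mismatch, which is a useful sanity check before trusting the formula on other data.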

We get a formula that can be used for forecasts. That is, after an additional toss of the first coin, we try to predict the behavior of the second one through $k$.

outcome 6: 1?

As new data arrive, we correct and refine the formula. If insider data arrive, we will have a more accurate $P(k)$ and can further refine the entire chain of calculations.

Since what we compute is a probability distribution, it is advisable to analyze the mean and variance of $k$. The mean can be calculated with the standard formula. As for the variance, we can say that as the amount of data grows, the peak on the graph (link above) becomes sharper, which means a less ambiguous estimate of the value of $k$.
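
The mean and variance can be made concrete. With a uniform prior, a posterior proportional to $k^{\text{matches}}(1-k)^{\text{mismatches}}$ is a Beta distribution with parameters matches + 1 and mismatches + 1. This is a standard result that the post does not state explicitly, so treat the sketch below as an addition under that assumption; the function name is illustrative.

```python
from fractions import Fraction


def posterior_mean_variance(matches: int, mismatches: int):
    """Mean and variance of k under a uniform prior (Beta posterior), as exact fractions."""
    a = matches + 1      # first Beta parameter
    b = mismatches + 1   # second Beta parameter
    mean = Fraction(a, a + b)
    variance = Fraction(a * b, (a + b) ** 2 * (a + b + 1))
    return mean, variance


if __name__ == "__main__":
    # Same 4:1 ratio of matches to mismatches, but ten times more data.
    for matches, mismatches in ((4, 1), (40, 10)):
        mean, variance = posterior_mean_variance(matches, mismatches)
        print(matches, mismatches, float(mean), float(variance))
```

Note that the posterior mean (5/7 for 4 matches and 1 mismatch) is not the same as the position of the peak, and that the variance shrinks as more outcomes are collected, which is the sharpening of the peak mentioned above.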

In the data set above, we have 4 matches and 1 mismatch, therefore $k = 4/5 = 0.8$. On an additional sixth toss, the second coin will coincide with the first with a probability of 80%. Suppose we got 1 on the first coin; then there is an 80% chance that “outcome 6” will be “11” and a 20% chance that it will be “10”. After each toss, we adjust the formula and predict the probability of matches that have not yet happened, one step ahead.
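
The predict-then-update loop described above might look like this in code. It is a minimal sketch: the estimate of the coefficient is simply the share of matches seen so far, and both function names are invented for the example.

```python
def predict_second_coin(first: int, matches: int, mismatches: int) -> dict:
    """Forecast the full outcome given the value of the first coin."""
    k = matches / (matches + mismatches)  # current estimate of the coefficient
    return {
        f"{first}{first}": k,             # second coin matches the first
        f"{first}{1 - first}": 1.0 - k,   # second coin differs from the first
    }


def update_counts(matches: int, mismatches: int, outcome: str):
    """Fold a newly observed outcome into the running counts."""
    if outcome[0] == outcome[1]:
        return matches + 1, mismatches
    return matches, mismatches + 1


if __name__ == "__main__":
    matches, mismatches = 4, 1
    print(predict_second_coin(1, matches, mismatches))
    # Suppose outcome 6 turned out to be "11": refine the estimate.
    matches, mismatches = update_counts(matches, mismatches, "11")
    print(matches / (matches + mismatches))
```

After every observed outcome the counts change, and with them the forecast for the next toss.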

With this, I would like to finish my post. I will be glad to read your comments.

PS

This example is presented to demonstrate the algorithm. Much of what happens in reality is not taken into account here. For example, when analyzing real-world events, it will be necessary to analyze time intervals, conduct factor analysis, and much more. That is already a job for professionals. It should also be noted, philosophically, that everything in this world is interconnected, only these connections sometimes show themselves and sometimes do not. Therefore, it is impossible to take everything into account completely, because we would have to include all the objects of this world in the formula, even those we do not know about, and process a very large amount of factual data.

Sources of information:

1. https://ru.wikipedia.org/wiki/Bayes theorem

2. https://ru.wikipedia.org/wiki/Bayes output

3. Nate Silver, “The Signal and the Noise”

Rybakov D.A. 2017

- my thanks to those who responded: Arastas, AC130, koldyr
