Machine Learning for an Insurance Company: Realistic Ideas

Friday is a great day to start something, such as a new series of machine learning articles. In the first part, the WaveAccess team talks about why machine learning is needed at an insurance company and how they tested the feasibility of predicting cost peaks.


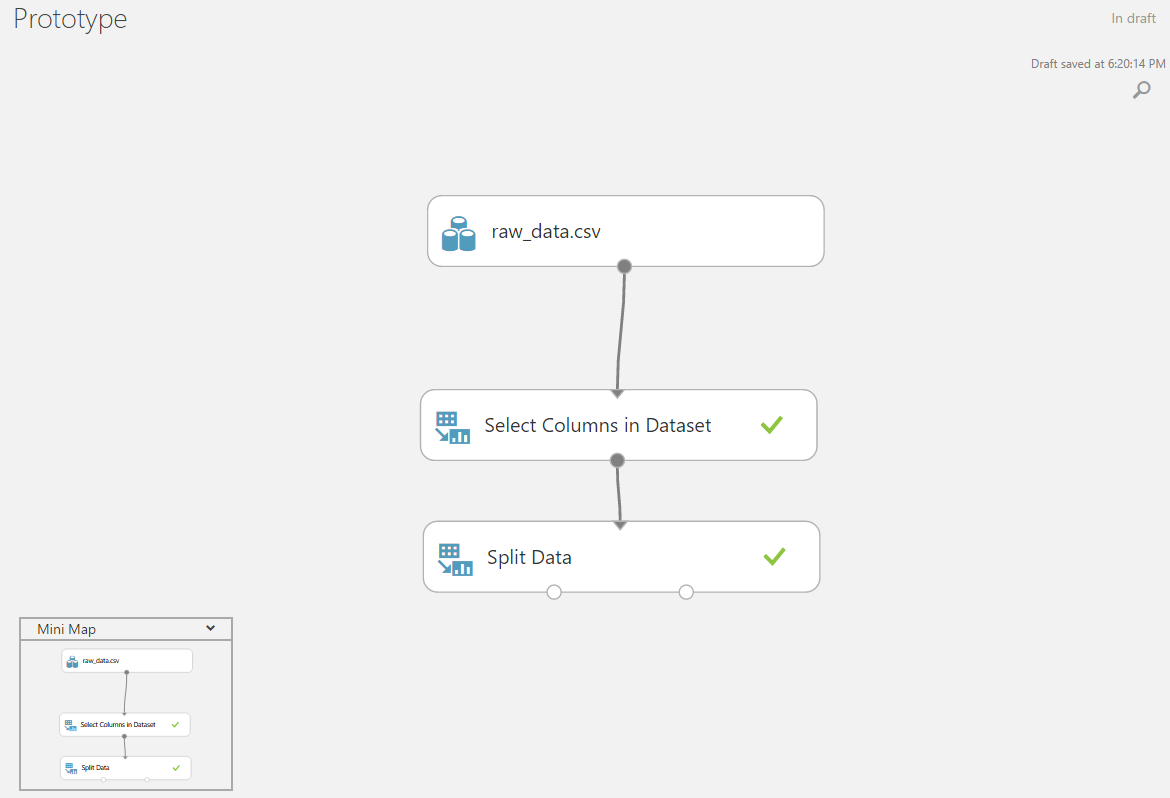

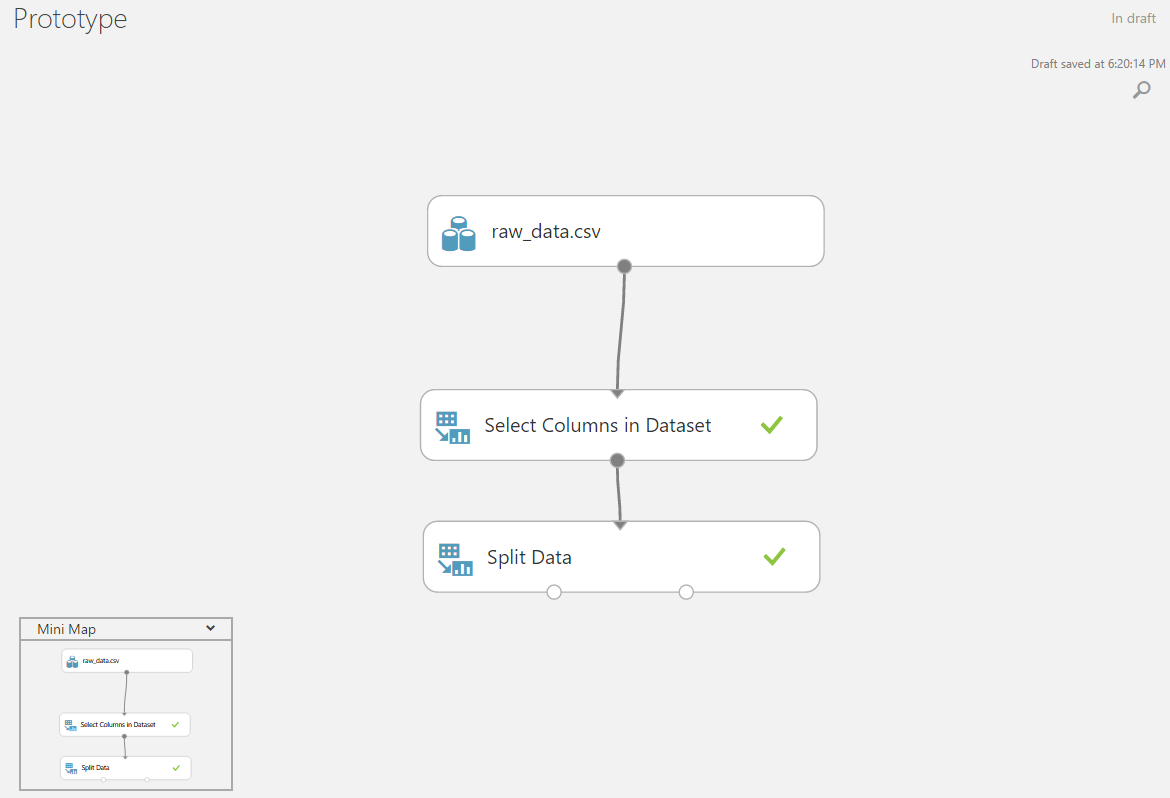

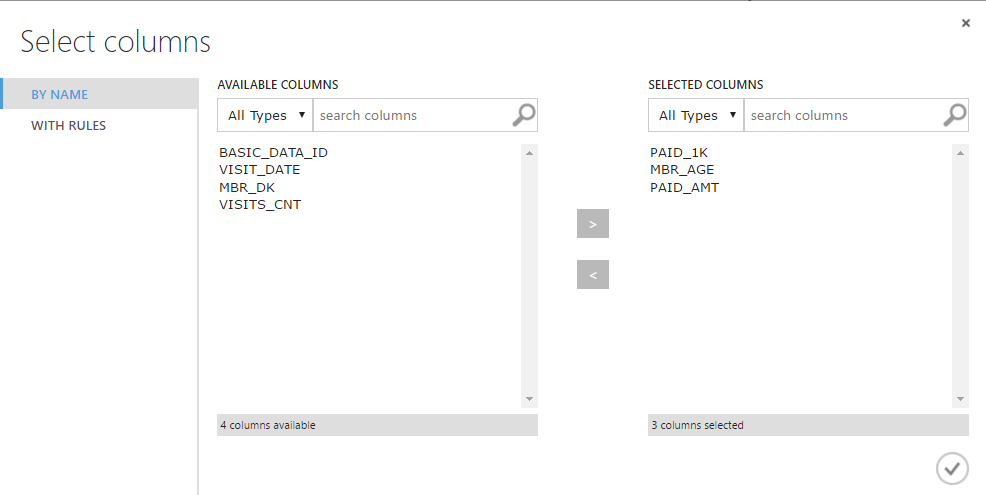


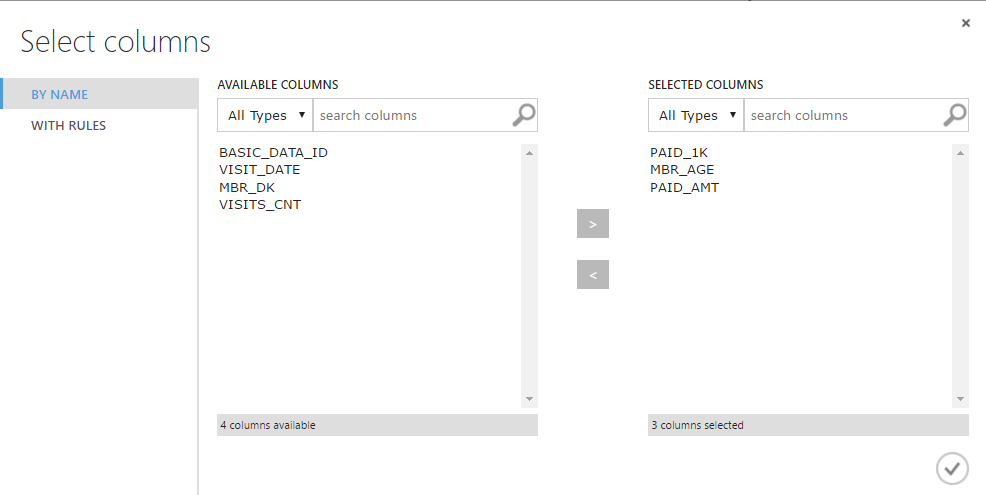


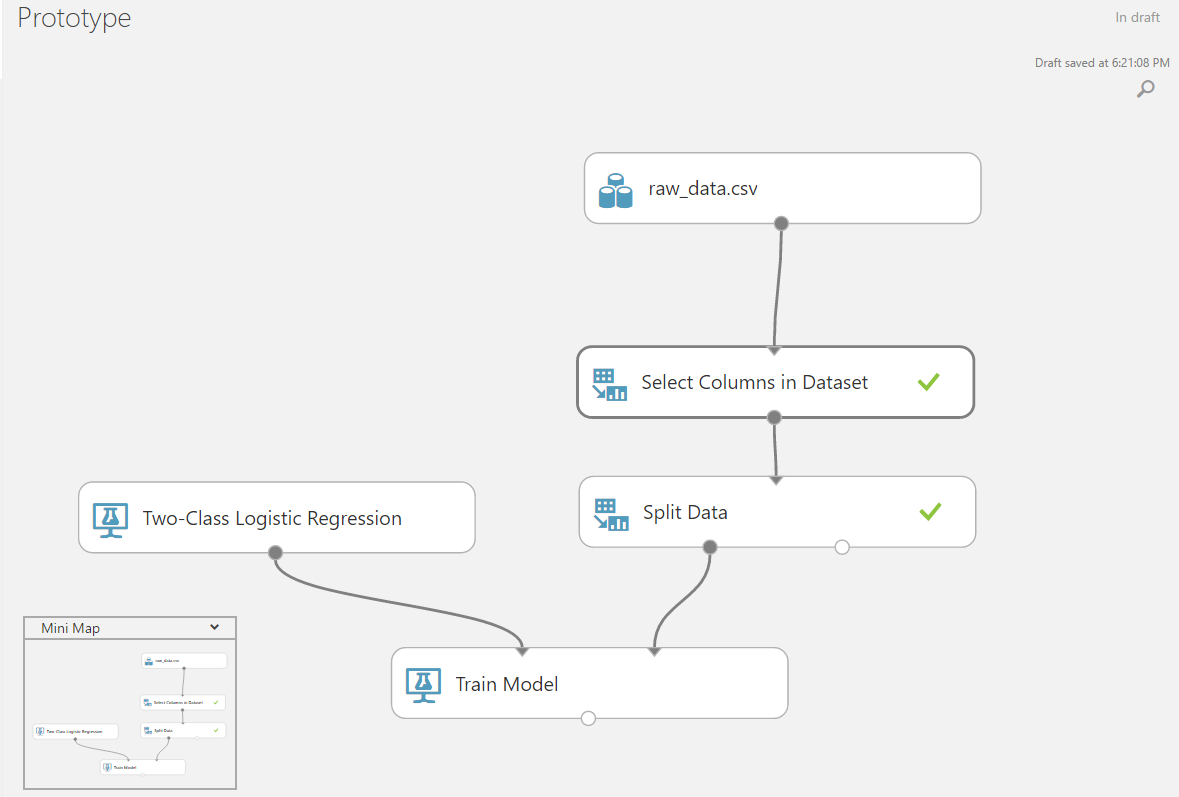

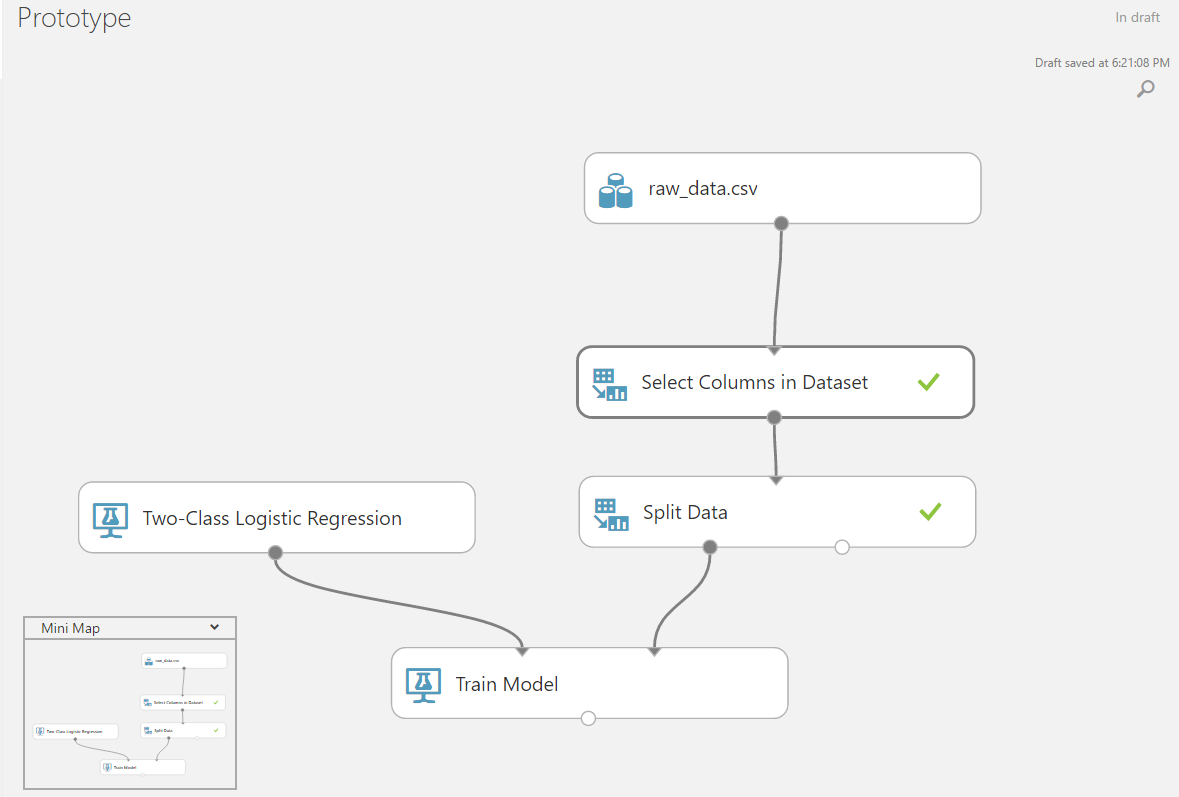


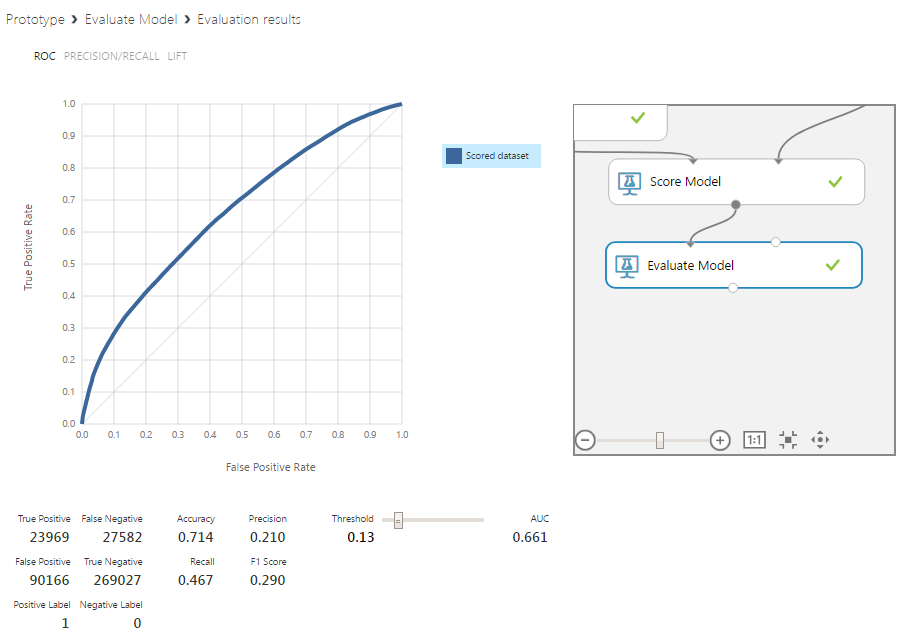

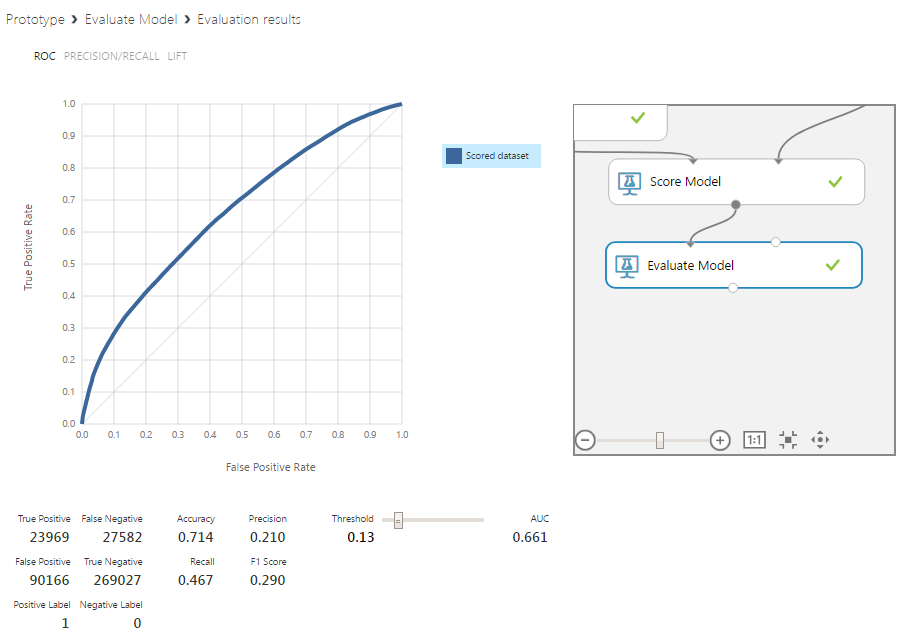


Series of articles “Machine Learning for an Insurance Company”

Introduction

Today, machine learning is used effectively to automate tasks that require a lot of routine manual labor and are hard to program in the traditional way. Typical examples are tasks with a large number of influencing variables, such as identifying spam emails or searching for information in text. In such situations, machine learning is especially in demand.

A large number of successful machine learning projects have been implemented in the USA, mainly in the field of intelligent applications. The companies that developed them changed the market and the rules of the game in their industries. For example, Amazon introduced a purchase recommendation system on its website, which shaped the look of today's online stores. Google used machine learning algorithms to build a targeted advertising system that offers users individually selected products based on what is known about them. Netflix, Pandora (Internet radio), and Uber became key players in their markets and set the direction for their further development, with machine learning at the core of their solutions.

Why does an insurance company need machine learning?

An important financial indicator for an insurance company is the difference between the price of the insurance sold and the cost of reimbursing insured events.

Because cost reduction is critical to this business, companies rely on many proven methods but are always looking for new opportunities.

For a medical insurance company (our client), forecasting the cost of treating insured people is a good way to reduce costs. If it is known that a client's treatment will require a large sum in the next month or two, that client deserves closer attention: for example, they can be transferred to more qualified curators, offered diagnostic examinations in advance, monitored for compliance with the doctors' recommendations, and so on. In some cases this reduces the cost of future treatment.

But how do you predict the cost of treating each client when there are more than a million of them?

One option is to analyze the information known about each patient individually. This makes it possible to predict, for example, a sharp increase in costs (in relative terms) or simply a cost peak (in absolute terms). The data in this problem is very noisy, so you cannot count on anything close to 100% correct predictions. However, because the classes are imbalanced, correctly predicting even 50% of the peaks together with 80-90% of the cases without a cost increase can give the company important information. A problem like this can be solved effectively only with machine learning. Of course, you can manually select a set of rules based on the existing data, but such rules will be very crude and, in the long run, inefficient compared with machine learning algorithms.
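To see why such modest rates can still be useful on imbalanced classes, a rough calculation helps. The sketch below assumes a hypothetical portfolio of 1,000,000 clients in which 5% have a cost peak; both numbers, and the function name `flagged_summary`, are illustrative assumptions and not figures from the project.

```python
def flagged_summary(n_clients, peak_rate, sensitivity, specificity):
    """Estimate how many clients a classifier flags and how many flags are real peaks.

    sensitivity: share of real peaks that are predicted as peaks
    specificity: share of non-peaks that are predicted as non-peaks
    """
    peaks = n_clients * peak_rate
    non_peaks = n_clients - peaks
    true_pos = peaks * sensitivity              # peaks that were caught
    false_pos = non_peaks * (1 - specificity)   # non-peaks flagged by mistake
    flagged = true_pos + false_pos
    return {
        "flagged": round(flagged),
        "true_peaks_caught": round(true_pos),
        "precision": true_pos / flagged,        # share of flagged clients with a real peak
        "baseline_rate": peak_rate,             # share of peaks among all clients
    }


# Hypothetical inputs: 1,000,000 clients, 5% peaks, 50% of peaks caught,
# 85% of non-peaks correctly left alone.
summary = flagged_summary(1_000_000, 0.05, 0.50, 0.85)
print(summary)
```

Under these assumed numbers, roughly 15% of the flagged clients have a real peak, compared with 5% in the whole portfolio, so the specialists' attention is concentrated on a much smaller and richer group.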

Implementing Machine Learning

In such projects, it often makes sense to implement the software as a web service. To implement machine learning, the client considered two of the best-known solutions in this area: MS Azure ML and Amazon ML.

Amazon ML supports only one algorithm: linear regression (and its adaptation for classification problems, logistic regression). This limits the range of solutions that can be implemented.

Microsoft Azure ML is a more flexible service:

- a large number of built-in algorithms, plus support for embedding your own R and Python code;

- the built-in tools cover everything needed for basic work in classification, clustering, regression, computer vision, text processing, etc.;

- there is functionality for preprocessing, manipulating, and organizing data;

- modules in Azure ML are organized as flowcharts, which keeps the entry threshold low and makes the work intuitive. As a result, Azure ML has become a convenient prototyping tool for quickly implementing basic solutions to test the viability of hypotheses, ideas, and projects.

Because the project required a complex combination of algorithms, Azure was chosen. However, the advantages of a combined approach cannot be discussed without a baseline for comparison, so in this article we restrict ourselves to simple algorithms and leave more complex variations for the following articles.

Prototyping

Let us verify the feasibility of predicting cost peaks. To obtain a baseline, we take raw data as input: the patient's age, the number of doctor's appointments, and the amount of money spent on the client over the past few months. The threshold for a cost peak in the next month is set at $1000. We upload the data to Azure and split it into training and test sets in a ratio of 4 to 1 (we do not perform validation at this stage, so no validation set is set aside).
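Outside Azure, the same preparation can be sketched in a few lines of plain Python. This is only an illustration of the labeling and the 4-to-1 split: the record fields, the synthetic values, and the choice of `>=` at the $1000 threshold are assumptions, not details from the project.

```python
import random

PEAK_THRESHOLD = 1000.0  # dollars; the peak threshold named in the article


def make_label(next_month_cost):
    """Return 1 for a cost peak, 0 otherwise (>= is an assumption)."""
    return 1 if next_month_cost >= PEAK_THRESHOLD else 0


def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of the rows and split them 4 to 1 by default."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical, synthetic records standing in for the real client data.
records = [
    {"age": 30 + i, "visits": i % 4, "spent_last_months": 100.0 * i,
     "next_month_cost": 150.0 * i}
    for i in range(10)
]

# Each example is (features, label); the label is derived from next month's cost.
examples = [
    ([r["age"], r["visits"], r["spent_last_months"]], make_label(r["next_month_cost"]))
    for r in records
]
labels = [label for _, label in examples]

train, test = train_test_split(examples)
print(len(train), len(test), sum(labels))
```

A fixed seed keeps the split reproducible between runs, which makes later comparisons between algorithms fair.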

If the loaded data contains more columns than necessary or, conversely, some of the data resides in a different source, columns can easily be removed from or joined to the input data matrix.
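As a rough equivalent of those column operations, the sketch below joins two sources on a shared key and then keeps only the needed columns. The key name `client_id`, the column names, and the helper functions are hypothetical; in Azure ML the same effect is achieved with its built-in data manipulation modules.

```python
def join_on_key(left, right, key):
    """Inner-join two lists of dict rows on a shared key column."""
    right_by_key = {row[key]: row for row in right}
    joined = []
    for row in left:
        match = right_by_key.get(row[key])
        if match is not None:
            joined.append({**row, **match})
    return joined


def select_columns(rows, columns):
    """Keep only the listed columns in every row."""
    return [{col: row[col] for col in columns} for row in rows]


# Hypothetical sources: demographics in one table, visit counts in another.
demographics = [
    {"client_id": 1, "age": 42, "city": "A"},
    {"client_id": 2, "age": 57, "city": "B"},
]
visits = [
    {"client_id": 1, "visits": 3},
    {"client_id": 2, "visits": 0},
]

combined = join_on_key(demographics, visits, "client_id")
features = select_columns(combined, ["client_id", "age", "visits"])
print(features)
```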

For training, let's choose the simplest algorithm: logistic regression. This conservative method is often used first to obtain a baseline for further comparison; in some tasks it may turn out to be the most suitable and give the best result.

We add blocks for training and testing the algorithm and connect the input data to them.

To make it easier to check the results, you can add a block that evaluates the algorithm's output, where you can experiment with the class separation threshold.

In our experiment, the baseline algorithm on raw data, with the class separation threshold set to 0.13, predicted about 45% of the peaks and 75% of their absence. This indicates that the concept is worth developing further with more complex schemes, which will be done in the future.
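For readers who want to see what the training and scoring blocks do conceptually, here is a minimal logistic regression trained by batch gradient descent in plain Python. It is a stand-in sketch, not Azure ML's implementation: the learning rate, the number of epochs, and the toy single-feature data are assumptions, and the features are assumed to be scaled to a small range beforehand.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(X, y, lr=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n, n_features = len(X), len(X[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(w * v for w, v in zip(weights, xi)) + bias)
            err = p - yi  # derivative of the log loss w.r.t. the linear score
            for j, v in enumerate(xi):
                grad_w[j] += err * v
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict_proba(X, weights, bias):
    """Return the predicted probability of the positive class for each row."""
    return [sigmoid(sum(w * v for w, v in zip(weights, xi)) + bias) for xi in X]


# Toy data: one scaled feature, low values -> no peak, high values -> peak.
X_train = [[0.1], [0.2], [0.3], [0.7], [0.8], [0.9]]
y_train = [0, 0, 0, 1, 1, 1]

weights, bias = train_logistic_regression(X_train, y_train)
probs = predict_proba(X_train, weights, bias)
print([round(p, 2) for p in probs])
```

The model outputs a probability for each client rather than a hard class, which is what makes it possible to move the class separation threshold afterwards.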
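The two percentages reported here correspond to the share of real peaks predicted as peaks and the share of non-peaks predicted as non-peaks. A minimal sketch of that evaluation at a given threshold follows; the labels and scores are made-up illustrative values, not the project's data.

```python
def evaluate_at_threshold(y_true, scores, threshold):
    """Return (share of peaks caught, share of non-peaks correctly rejected)."""
    tp = fn = tn = fp = 0
    for label, score in zip(y_true, scores):
        predicted = 1 if score >= threshold else 0
        if label == 1 and predicted == 1:
            tp += 1
        elif label == 1:
            fn += 1
        elif predicted == 0:
            tn += 1
        else:
            fp += 1
    peaks_caught = tp / (tp + fn) if (tp + fn) else 0.0
    absences_caught = tn / (tn + fp) if (tn + fp) else 0.0
    return peaks_caught, absences_caught


# Hypothetical labels (1 = peak) and model scores for six clients.
y_true = [1, 1, 0, 0, 0, 0]
scores = [0.30, 0.10, 0.05, 0.20, 0.08, 0.02]

peaks_caught, absences_caught = evaluate_at_threshold(y_true, scores, threshold=0.13)
print(peaks_caught, absences_caught)
```

Lowering the threshold catches more peaks at the price of more false alarms, so the value is a business trade-off rather than a property of the model alone.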

In the following articles, we will use examples to look at data analysis, how other algorithms and their combinations work, and how to deal with overfitting and incorrect data.

About the Authors

The WaveAccess team creates technically sophisticated, high-load, fault-tolerant software for companies from different countries. Comment by Alexander Azarov, head of machine learning at WaveAccess:

Machine learning allows you to automate areas where expert opinions currently dominate. This makes it possible to reduce the impact of the human factor and increase the scalability of the business.