Analyzing the relationships between skills using graphs in R

Curiously, professional development has so far stayed somewhat apart from the buzz around data science. HRtech startups are only beginning to gain momentum and market share, displacing the traditional approach to working with professionals and with those who want to become one.

HRtech is a very diverse field: it covers hiring automation, employee development and coaching, automation of internal HR procedures, tracking of market salaries, tracking of candidates and employees, and much more. This study uses data analysis methods to answer how skills are interconnected, what specializations exist, which skills are most in demand, and which skills should be learned next.

Problem statement and input data

From the start, we did not want to divide skills according to some well-known classification. For example, the average salary could be used to distinguish “expensive” and “cheap” skills. Instead, we wanted to identify “specializations” using mathematics and statistics, based on market requirements, that is, on what employers ask for. The study therefore became an unsupervised learning task: combining skills into groups. The first profession we chose was programmer.

For the analysis, we took data from the Work in Russia portal, available on data.gov.ru. It contains all the vacancies published on the portal, with a description, salary, region and other details. We then parsed the descriptions and extracted skills from them. That is a separate study and is not covered in this article. Already tagged data can also be obtained from the hh.ru API.

Thus, the input data is a 0/1 matrix in which the features (X) are skills and the objects are vacancies: 164 features and 841 objects in total.
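To make the layout concrete, here is a minimal sketch of such a matrix in R. The vacancies, skill names and values are invented for illustration and are not taken from the study's data; `skills_toy` and `popularity` are hypothetical names.

```r
# Toy 0/1 matrix: rows are vacancies (objects), columns are skills (features).
# All names and values are made up for illustration.
skills_toy <- data.frame(
  SQL        = c(1, 1, 0, 1),
  JavaScript = c(0, 1, 1, 0),
  HTML       = c(0, 1, 1, 0),
  row.names  = paste0("vacancy_", 1:4)
)

dim(skills_toy)   # number of objects, number of features

# Skill popularity: in how many vacancies each skill is mentioned
popularity <- sort(colSums(skills_toy), decreasing = TRUE)
popularity
```

Column sums of such a matrix already answer the simplest question of the study, namely which skills are mentioned most often.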

Choosing a method for finding skill groups

When choosing a method, we assumed that one vacancy can belong to several specializations. We also assumed that a particular skill can belong to only one specialization. To lay our cards on the table: this second assumption was required by the algorithms that consume the results of this study.

Tackling the problem head-on, one can assume that if one group of skills appears in one group of vacancies and another group of skills appears in another group of vacancies, then each such group of skills is a specialization. Skills could then be separated with metric methods (k-means and its modifications). The problem, however, was precisely that one vacancy can have several specializations. As a result, however we tuned the algorithm, it assigned 90% of the skills to one cluster and produced another dozen clusters of 1-2 skills each. After some thought, we began rewriting k-means so that, instead of the classical Euclidean distance, it used a measure of skill adjacency, that is, how often two skills occur together:

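library(data.table)

# skills_clust: the 0/1 table of vacancies (rows) by skills (columns)
grid <- as.data.table(expand.grid(skill_1 = names(skills_clust), skill_2 = names(skills_clust), stringsAsFactors = FALSE))

grid <- grid[skill_1 != skill_2] # drop pairs of a skill with itself

grid[, value := 0] # co-occurrence counts are filled in below

for (i in seq_len(nrow(grid))) {

  # number of vacancies that mention both skills of the pair
  set(grid, i, "value", as.numeric(sum(skills_clust[[grid$skill_1[i]]] * skills_clust[[grid$skill_2[i]]])))

}

Fortunately, the idea came in time to restate the problem as one of finding communities in a graph. It was time to recall graph theory, safely forgotten after the second year of university.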
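As a side note on the co-occurrence measure used in the loop above: for a 0/1 matrix, the same pairwise counts can be obtained with a single matrix product, which is usually much faster than looping over all pairs. The sketch below runs on an invented toy matrix; `skills_toy` and `cooc` are illustrative names, not objects from the study.

```r
# Toy 0/1 matrix: rows are vacancies, columns are skills (invented values)
skills_toy <- matrix(
  c(1, 1, 0,
    1, 1, 1,
    0, 1, 1,
    1, 0, 0),
  ncol = 3, byrow = TRUE,
  dimnames = list(NULL, c("SQL", "PHP", "HTML"))
)

# crossprod(X) is t(X) %*% X: entry [i, j] counts the vacancies
# that mention both skill i and skill j
cooc <- crossprod(skills_toy)
diag(cooc) <- 0   # a skill paired with itself is of no interest here
cooc
```

The result is already a symmetric skill-by-skill matrix, so it could be passed on without the long-to-wide reshaping step.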

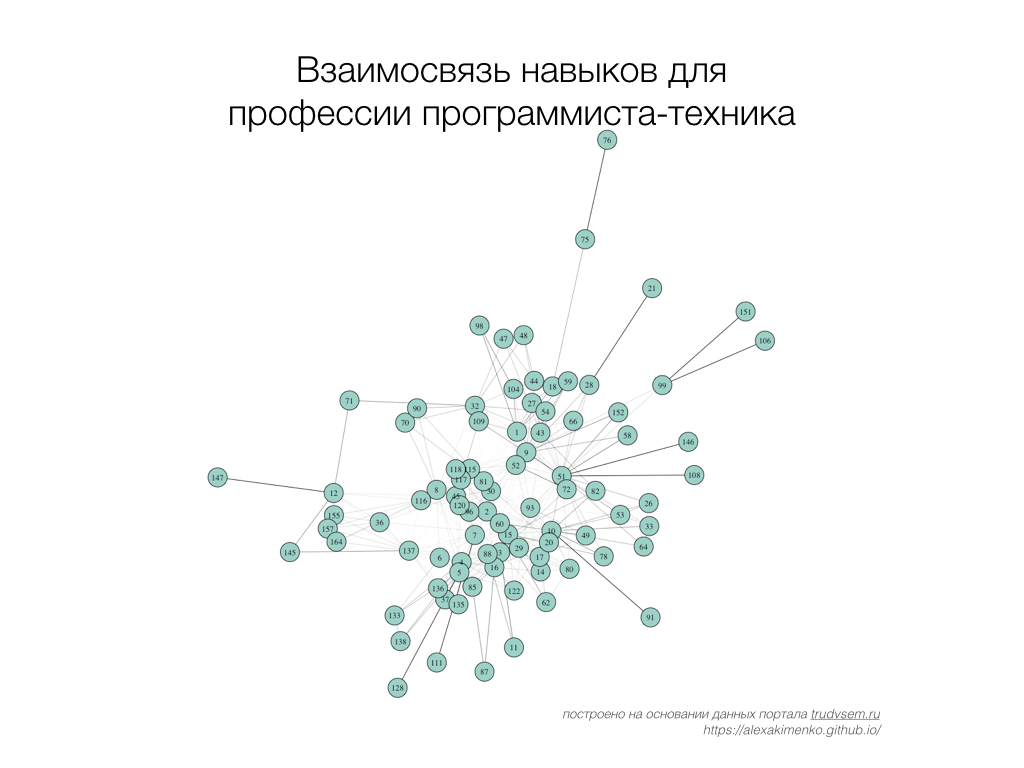

Building and analyzing a skill graph

To build the graph, we use the igraph package (it is also available for Python and C/C++). First, we create an adjacency matrix from the table that we had started computing for k-means (grid). Then we normalize the skill adjacency to the range from 0 to 1:

grid_clean <- grid[grid$value > 1, ] # skill pairs that co-occur at most once are excluded

grid_cast <- dcast(grid_clean, skill_1 ~ skill_2, value.var = "value")

grid_cast[, skill_1 := NULL]

# Divide row i by the total co-occurrence of skill i (the vector is recycled
# down the columns, and the matrix is symmetric, so column sums equal row sums)
grid_cast_norm <- grid_cast / colSums(grid_cast, na.rm = TRUE)

grid_cast_norm[is.na(grid_cast_norm)] <- 0

grid_cast_norm <- as.matrix(grid_cast_norm)

grid_cast_norm[grid_cast_norm <= 0.02] <- 0 # skill pairs with a share of 2% or less are excluded

We normalize the adjacency of skill i and skill j as a fraction of the total occurrence of skill i. Initially, we normalized the matrix by the maximum occurrence across all skills, but later switched to this formula. The idea is as follows: suppose skill i co-occurs with skill j 10 times and with no other skill. Such a link should be treated as more significant (100%) than if we measured it against the maximum in the matrix (for example, 10 out of 100, or 10%).

Also, to clean the adjacency matrix of random links, we removed pairs that co-occur fewer than 2 times or account for 2% or less of the skill's total occurrence. Unfortunately, this reduced the skill set from 164 to 87, but it made the segments more logical and understandable.
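The effect of the normalization and of the two thresholds can be traced on a small invented co-occurrence matrix. This is only a sketch under the assumptions stated in the text (row i is divided by the total occurrence of skill i, then weak links are zeroed); the skill labels and counts are hypothetical.

```r
# Hypothetical symmetric co-occurrence counts for four skills
cooc <- matrix(
  c( 0,  10,   0, 0,
    10,   0, 100, 1,
     0, 100,   0, 0,
     0,   1,   0, 0),
  ncol = 4, byrow = TRUE,
  dimnames = list(c("i", "j", "k", "l"), c("i", "j", "k", "l"))
)

cooc[cooc <= 1] <- 0                 # drop pairs seen fewer than 2 times
by_skill <- cooc / rowSums(cooc)     # share of each skill's own total
by_skill[is.nan(by_skill)] <- 0      # skills left without any links (0 / 0)
by_skill[by_skill <= 0.02] <- 0      # drop links at or below 2%

by_max <- cooc / max(cooc)           # the earlier variant: share of the global maximum

by_skill["i", "j"]   # 1: skill i occurs only together with skill j
by_max["i", "j"]     # 0.1: the same link looks weak against the maximum
```

Note that the normalized matrix is no longer symmetric: the link from i to j has weight 1, while the link from j to i is only 10 / 110.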

Then we create an undirected weighted graph from the adjacency matrix:

library(igraph)

library(RColorBrewer)

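# mode = "max" builds an undirected graph and keeps the larger of the two normalized weights of each pair
skills_graph <- graph_from_adjacency_matrix(grid_cast_norm, mode = "max", weighted = TRUE)

E(skills_graph)$width <- E(skills_graph)$weight # edge width reflects the strength of the link

# A single colour is used here because the vertices have no group attribute yet
plot(skills_graph, vertex.size = 7, vertex.label.cex = 0.8, layout = layout_nicely,

vertex.label.color = "black", vertex.color = brewer.pal(3, "Set3")[1])

igraph also lets us compute the main statistics for the vertices: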

closeness(skills_graph) # vertex centrality based on the distance to the other vertices

betweenness(skills_graph) # number of shortest paths that pass through the vertex

degree(skills_graph) # number of vertices connected to the given vertex

Next, we can print the adjacency list for each skill. This list can later serve as the basis of a recommendation system for choosing new skills:

as_adj_list(skills_graph)

Multilevel community detection

On the topic of community detection in graphs, there is an excellent work by Konstantin Slavnov. It lays out the main quality metrics for community detection, the detection methods themselves, and ways of aggregating their results.

When the true community structure is unknown, the value of the modularity functional is used to assess quality. This is the most popular and widely recognized quality measure for this task. The functional was proposed by Newman and Girvan while developing an algorithm for clustering the vertices of a graph. In simple terms, this metric estimates the difference between the density of edges within communities and between them. Its main disadvantage is that it does not see small communities. For the task of identifying specializations, where a combination of 2-3 vertices can form a community, this can become critical; however, it can be addressed by adding a scale parameter to the optimized functional.

To optimize the modularity functional, the Multilevel algorithm proposed in the article is most often used. First, because of the good quality of optimization; second, because of its speed (not required in this task, but still); and third, because the algorithm is quite intuitive.

On our data, this algorithm also showed one of the best results:
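For intuition, modularity can be computed by hand on a tiny graph. The sketch below assumes the standard Newman-Girvan definition, Q = (1 / 2m) * sum over i, j of [A_ij - gamma * k_i * k_j / 2m] * delta(c_i, c_j), where gamma = 1 gives the classical functional and other values of gamma play the role of the scale parameter mentioned above. The function name `modularity_q` and the toy graph are illustrative, not part of the study.

```r
# Modularity of a partition of an undirected graph given by adjacency matrix A.
# gamma = 1 is the classical functional; other values act as a scale parameter.
modularity_q <- function(A, membership, gamma = 1) {
  k <- rowSums(A)                               # vertex degrees (strengths, if weighted)
  two_m <- sum(A)                               # twice the number of edges (total weight)
  same <- outer(membership, membership, "==")   # TRUE where i and j share a community
  sum((A - gamma * outer(k, k) / two_m) * same) / two_m
}

# Toy graph: two triangles (1-2-3 and 4-5-6) joined by the edge 3-4
edges <- rbind(c(1, 2), c(2, 3), c(1, 3), c(4, 5), c(5, 6), c(4, 6), c(3, 4))
A <- matrix(0, 6, 6)
A[edges] <- 1
A[edges[, 2:1]] <- 1                            # make the matrix symmetric

q_split <- modularity_q(A, c(1, 1, 1, 2, 2, 2)) # one community per triangle
q_whole <- modularity_q(A, rep(1, 6))           # everything in one community
c(split = q_split, whole = q_whole)
```

Splitting the graph into its two triangles gives Q = 5/14, while putting all vertices into one community gives Q = 0, which matches the intuition that the functional rewards dense groups with few links between them.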

| N | Algorithm | Modularity | Number of communities |
|---|---|---|---|
| 1 | Betweenness | 0.223 | 6 |
| 2 | Fastgreedy | 0.314 | 8 |
| 3 | Multilevel | 0.331 | 8 |
| 4 | LabelPropagation | 0.257 | 15 |
| 5 | Walktrap | 0.316 | 10 |
| 6 | Infomap | 0.315 | 13 |
| 7 | Eigenvector | 0.348 | 8 |
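A comparison of this kind can be sketched with the community detection functions that igraph provides. The example runs on igraph's built-in Zachary karate club graph rather than on the skills graph, so the numbers will not match the table; the mapping of the table's row names to these functions is an assumption.

```r
library(igraph)

set.seed(42)                  # some of the algorithms are randomized
g <- make_graph("Zachary")    # small built-in example graph, not the skills graph

# Assumed correspondence between the table rows and igraph functions
algorithms <- list(
  Betweenness      = cluster_edge_betweenness,
  Fastgreedy       = cluster_fast_greedy,
  Multilevel       = cluster_louvain,
  LabelPropagation = cluster_label_prop,
  Walktrap         = cluster_walktrap,
  Infomap          = cluster_infomap,
  Eigenvector      = cluster_leading_eigen
)

# Run every algorithm and collect modularity and the number of communities
comparison <- do.call(rbind, lapply(names(algorithms), function(name) {
  fit <- algorithms[[name]](g)
  data.frame(
    algorithm   = name,
    modularity  = round(modularity(fit), 3),
    communities = length(fit)
  )
}))
comparison
```

On a weighted graph such as the skills graph, the results also depend on how each algorithm interprets the edge weights, so the functions' documentation is worth checking before comparing the numbers.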

In the igraph package, the Multilevel algorithm is implemented by the function cluster_louvain():

fit_cluster <- cluster_louvain(skills_graph)

V(skills_graph)$group <- fit_cluster$membership # community index of each skill

Results

As we can see, 8 specializations were identified (the names were assigned subjectively by the author of the article):

- Server and network maintenance - includes knowledge of Microsoft Hyper-V and VMware vSphere, as well as equipment repair and setup. Knowledge of English is also required;

- Developer of industrial systems / controllers - a whole range of skills, from C/C++ to Java, including Assembler and SCADA;

- Developer of ERP systems (1C, SAP) - in addition to 1C, SAP and ABAP, this requires knowledge of the basics of accounting and management accounting, as well as user technical support skills;

- Machine programming - this specialization requires CNC machine programming skills, programming in Unigraphics NX and knowledge of machine control systems;

- General programming skills - includes knowledge of GOST standards, structured programming, the ability to write technical specifications and, for some reason, knowledge of Chinese (someone probably really needs it);

- Microsoft developer with database knowledge - C#, .NET Framework, MS SQL Server, FoxPro, experience working with other people's code, and the ability to refactor;

- Web developer - JavaScript, HTML, CSS, PL/pgSQL (PostgreSQL), jQuery, PHP, etc. Interestingly, it is in this specialization that knowledge of OOP concepts, Agile and version control systems is most often required;

- Mobile application developer - knowledge of Swift, experience in developing Android / iOS applications.

The specializations are quite closely interconnected. This follows from our basic assumption that one vacancy can have several specializations. For example, the “Mobile application developer” specialization requires “Knowledge of network protocols” (71), which in turn is linked to “Administration of local networks” (32) from the “Server and network maintenance” specialization.

It should also be kept in mind that the data source is the “Work in Russia” portal, whose selection of vacancies differs from that of hh.ru or superjob.ru: it is skewed towards lower-qualification positions. In addition, the sample is limited to 841 vacancies (of which only 585 had any skills tagged), so a large number of skills were not analyzed and did not end up in any specialization.

Nevertheless, on the whole the proposed algorithm gives fairly logical results. For professions whose skills can be quantified (naturally, a top manager's skills cannot be split into specializations this way), it makes it possible to answer automatically how skills are related, what specializations exist, which skills are most in demand and which skills should be learned next.

A bonus for everyone who has read to the end: you can follow the link to play with an interactive visualization of the graph and download the final table with the results of the study.
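Links of this kind, where an edge joins skills from different specializations, can be listed directly from the graph and the membership vector. The sketch uses a hypothetical six-skill graph with hand-assigned groups; in the article's code the groups would come from `fit_cluster$membership`, and the vertex names here are shortened, invented labels.

```r
library(igraph)

# Hypothetical graph: two tightly knit groups of skills joined by one link
g <- graph_from_literal(Swift - Android, Android - Protocols, Swift - Protocols,
                        Protocols - LAN,
                        LAN - HyperV, HyperV - VMware, LAN - VMware)

# Hand-assigned specializations: 1 = mobile development, 2 = server maintenance
group <- c(Swift = 1, Android = 1, Protocols = 1, LAN = 2, HyperV = 2, VMware = 2)
V(g)$group <- unname(group[V(g)$name])

# Keep only the edges whose endpoints belong to different groups
el <- as_edgelist(g)                       # two-column matrix of vertex names
crossing_edges <- el[group[el[, 1]] != group[el[, 2]], , drop = FALSE]
crossing_edges
```

In this toy graph the only edge between the two groups is the one joining Protocols and LAN, mirroring the example from the text.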