Huawei HyperMetro - tested and implemented

The main capabilities of the third-generation Huawei OceanStor storage systems have already been described on Habr, but there is little information about access to a volume "stretched" between sites. We will try to fill this gap. This article is based on the results of an implementation for one of our customers in the banking sector.

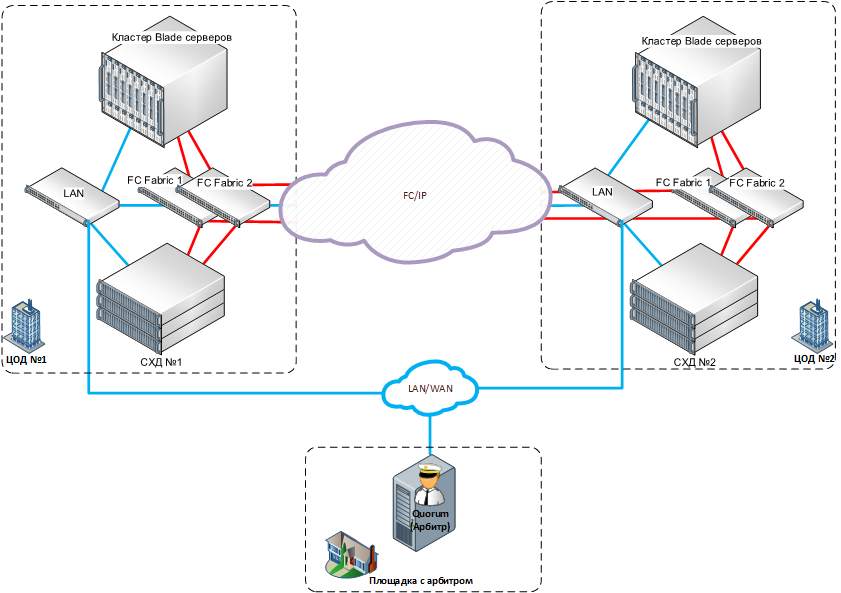

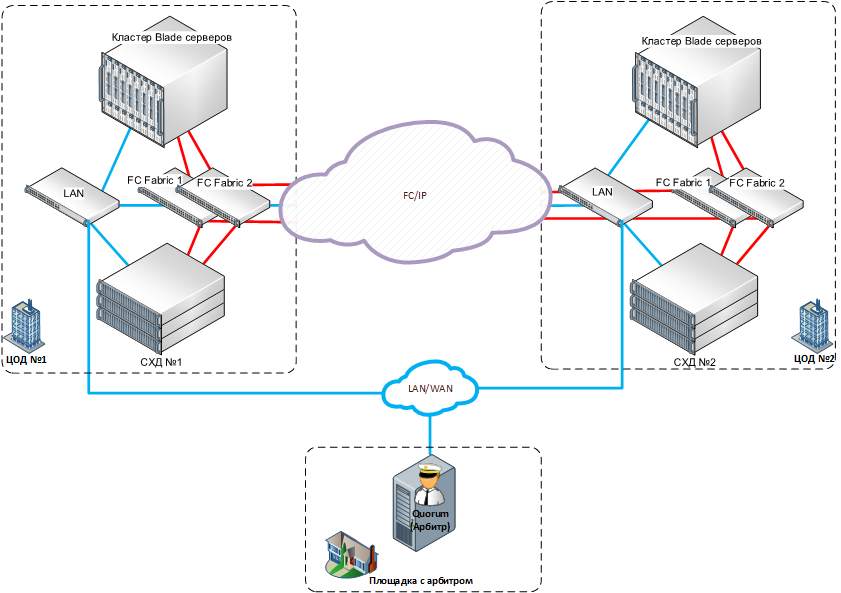

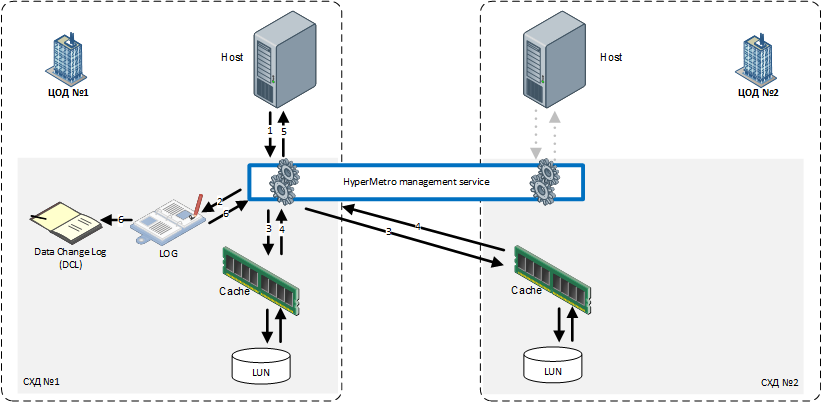

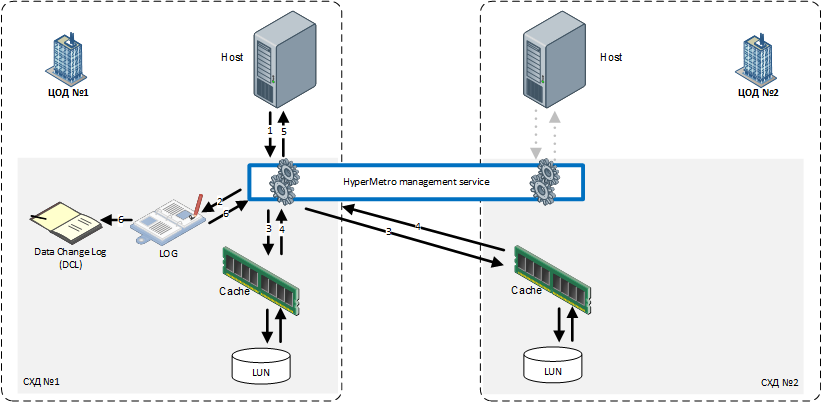


Detailed description

The main purpose of HyperMetro is to protect against the failure of an entire data center, and only secondarily against the failure of a storage system, disk shelf, and so on. The function became available with firmware V300R003C00SPC100 in December 2015.

In essence, HyperMetro provides simultaneous read and write access to a logical volume that has two independent copies on storage systems located at different sites. The HyperMetro solution topology is shown below.

Figure 1. HyperMetro Topology

Arbitration in case of failures is handled by a quorum server, which is installed at a third site, reaches both arrays over Ethernet, and is a Linux application (SUSE / RHEL / Ubuntu). Hosts at either site can read and write the distributed volume through either of the two storage systems. This works because the arrays exchange locks by writing to the transaction log (data change log, DCL), which prevents different hosts from writing to the same block at the same time. Note also that writes to the caches of the two storage systems are performed in parallel, not sequentially. The write process in HyperMetro is shown below.

Figure 2. The order of operations when writing to a distributed volume
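The write order described above can be illustrated with a small sketch. It is a toy model and not Huawei's implementation: the `Array` and `MirroredVolume` classes are hypothetical, and a single in-process lock per block stands in for the lock exchange that the real arrays perform between sites.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class Array:
    """Hypothetical stand-in for the write cache of one storage system."""

    def __init__(self, name):
        self.name = name
        self.cache = {}

    def write_cache(self, lba, data):
        self.cache[lba] = data
        return True


class MirroredVolume:
    """Toy model of a volume with one copy on each of two arrays."""

    def __init__(self, first, second):
        self.arrays = (first, second)
        self._locks = {}                      # one lock per block address
        self._locks_guard = threading.Lock()  # protects the lock table

    def _lock_for(self, lba):
        with self._locks_guard:
            return self._locks.setdefault(lba, threading.Lock())

    def write(self, lba, data):
        # 1. Lock the block so that two hosts cannot write it at once.
        with self._lock_for(lba):
            # 2. Write to both caches in parallel, not one after the other.
            with ThreadPoolExecutor(max_workers=2) as pool:
                futures = [pool.submit(a.write_cache, lba, data)
                           for a in self.arrays]
                results = [f.result() for f in futures]
        # 3. Acknowledge the host only if both copies accepted the write.
        return all(results)


volume = MirroredVolume(Array("site-1"), Array("site-2"))
acknowledged = volume.write(42, b"payload")
```

The point of the sketch is the ordering: the host sees the acknowledgement only after both cache writes finish, so the write latency is bounded by the slower of the two copies rather than by their sum.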

The Huawei UltraPath MPIO driver (supplied with OceanStor V3) prioritizes local reads and writes to avoid cross-site traffic. Load balancing between sites can also be enabled. Both FC and iSCSI can be used, for host access as well as for replication.
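The local-first path policy can be sketched as follows. This is only an illustration of the idea and does not use any UltraPath API: the `Path` type, the site names, and the `balance_across_sites` flag are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    """Hypothetical description of one host-to-array path."""
    name: str
    site: str
    healthy: bool = True


def usable_paths(paths, host_site, balance_across_sites=False):
    """Return the paths a host should send I/O to.

    By default only healthy paths to the array at the host's own site are
    used; remote paths are used when no local path is left or when
    balancing across sites is explicitly enabled.
    """
    healthy = [p for p in paths if p.healthy]
    if balance_across_sites:
        return healthy
    local = [p for p in healthy if p.site == host_site]
    return local or healthy


paths = [Path("array1-fc0", "site-1"), Path("array2-fc0", "site-2")]
preferred = usable_paths(paths, host_site="site-1")
```

With both paths healthy, a host at `site-1` uses only `array1-fc0`; if that path is marked unhealthy, the same call falls back to the remote path.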

After a HyperMetro relationship is created, both volumes are considered symmetrical and equivalent. However, a priority side is set for each HyperMetro relationship to avoid split brain if both the quorum and the link between the storage systems fail. Consistency groups can be used to combine several volume pairs. If one of the copies fails, access to data is preserved in the vast majority of cases.

If there is no third site, HyperMetro can be used in Static Priority Mode. In this mode, failures are resolved automatically in favor of the storage system with the higher priority (the preferred site). If the preferred storage system fails, the non-preferred array has to be started manually by changing the priority of the HyperMetro relationship for each volume pair or consistency group. Arrays also switch to this quorum-free mode when the quorum, or all access paths to it, fail. If the quorum is placed at one of the two main sites, then when that site goes down, access to data at the other site is not provided automatically, and manual switching is required.
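The arbitration rules above can be summarized in a short sketch. It covers only the case where both arrays are alive but the link between them is lost, and it is a simplified reading of the text, not the vendor's algorithm; the function name and its parameters are hypothetical.

```python
def surviving_array(priority, other, reaches_quorum, quorum_mode=True):
    """Pick the array that keeps serving I/O after the inter-array link fails.

    priority, other  -- names of the priority and non-priority arrays
    reaches_quorum   -- set of array names that can still reach the quorum
    quorum_mode      -- False models Static Priority Mode (no third site)
    """
    if quorum_mode and reaches_quorum:
        # The quorum arbitrates; the priority side wins if it can be heard.
        if priority in reaches_quorum:
            return priority
        if other in reaches_quorum:
            return other
    # No quorum available: fall back to the statically preferred array.
    return priority


winner = surviving_array("array1", "array2", {"array1", "array2"})
```

In this model the non-priority array takes over only when it alone can still reach the quorum. A failure of the priority array itself in Static Priority Mode is outside the sketch, because the text states that it requires a manual priority change.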

Pros:

- HyperMetro is licensed per storage system, not per mirrored volume;

- because third-generation OceanStor systems share a common firmware code base, the function is available across the range, from the 2600 V3 to the high-end 18800 V3;

- it works not only over FC but also over IP between the two main sites;

- replication, tiered storage, virtualization, snapshots, and other storage functions can be used on volumes protected with HyperMetro.

Cons:

- requires a separate Ethernet card for the connection to the arbiter (the dedicated storage management ports cannot be used for this);

- requires the UltraPath MPIO driver to be installed on hosts.

Competitive solutions

Because a volume distributed between sites is technologically based on conventional synchronous replication, most major vendors already have similar solutions: HPE 3PAR Peer Persistence, IBM HyperSwap, HDS Global-Active Device, Fujitsu Storage Cluster, Dell Compellent Live Volume. A separate group consists of cross-site data protection solutions that appeared much earlier and are based on their own distinct architectures: IBM SVC Stretched Cluster, EMC VPLEX Metro, and NetApp MetroCluster.

In this article, we did not set out to compare products from different vendors. However, in the comments we will try to answer your questions about how specific aspects of the solution architectures compare.

Implementation

Since HyperMetro was a new and unproven function, we decided to test it first together with the customer, using equipment from the demo pool. For testing, we used two of the customer's server sites connected via DWDM, with a unified SAN built on two Brocade fabrics. Each site had one Huawei OceanStor 5500 V3 storage system with 10k rpm SAS disks and SSDs, and an x86 blade server farm running VMware.

On the two storage systems, LUNs and consistency groups are created in turn, and the HyperMetro relationship is configured. The volumes are then presented from both storage systems to the required hosts. The servers ran test virtual machines with IOmeter. When the failure of one storage system was simulated, no significant performance drop (> 10%) was observed, as long as controller CPU load stayed below 50%.

Once everything was fully configured, even a hard shutdown of an array (pulling the power cables out of the controllers) did not lead to loss of access. After the connection between the arrays is restored, the volumes are synchronized manually (automatic mode can be enabled in the Recovery Policy setting of each HyperMetro relationship). If necessary, synchronization can be paused, reversed, or restarted as a full resynchronization of the volumes. Note that access is not switched over to the other storage system if the array is rebooted manually through the graphical interface or the command line.

During testing, we simulated a range of failures (see the table below). The system behaved exactly as described in the table and in the vendor's documentation.

Columns 2 to 4 show equipment state (storage system 1, storage system 2, quorum); columns 5 to 7 show link health.

| # | Storage 1 | Storage 2 | Quorum | Link 1 <-> Q | Link 2 <-> Q | Link 1 <-> 2 | Result |
|---|---|---|---|---|---|---|---|
| 1. | + | + | + | + | + | + | Everything works as usual |
| 2. | - | + | + | + | + | + | Access to the volume goes through the working storage system |
| 3. | + | - | + | + | + | + | |
| 4. | -* | + | + | - | + | - | |
| 5. | + | -* | +* | + | - | - | |
| 6. | + | + | - | - | - | + | Access to the volume goes through both storage systems. Within 20 seconds they switch from quorum mode to priority mode |
| 7. | + | + | - | + | + | + | |
| 8.1. | + | -* | - | + | + | + | Access to the volume goes through the priority storage system. If the priority storage system fails, access must be enabled manually on the non-priority one |
| 8.2. | + | -* | - | + | + | + | Access to the volume is stopped; access must be enabled manually |
| 9. | + | + | + | - | + | + | Access to the volume goes through both storage systems |
| 10. | + | + | + | + | - | + | |
| 11. | + | + | + | + | + | - | Arbitration occurs. Access to the volume goes through the priority storage system |
| 12. | + | + | + | - | + | - | Access to the volume goes through storage system 2 |
| 13. | - | + | + | + | - | + | Access is denied. Access must be enabled manually on storage system 2 |

* 4: failure of the site that hosts a storage system but not the quorum

* 5: failure of the site that hosts both a storage system and the quorum

* 8.1: the storage system failed more than 20 seconds after the event in row 7

* 8.2: the storage system failed less than 20 seconds after the event in row 7
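The difference between rows 8.1 and 8.2 comes down to whether the arrays had time to switch to priority mode after losing the quorum. A minimal sketch of that rule, assuming the 20-second window from the table; the function and constant names are hypothetical, and the behavior at exactly 20 seconds is not specified in the source.

```python
PRIORITY_SWITCH_SECONDS = 20  # window taken from rows 6, 8.1 and 8.2


def outcome_after_array_failure(seconds_since_quorum_loss,
                                failed_array_is_priority):
    """Describe volume access when one array fails after the quorum is lost."""
    if seconds_since_quorum_loss < PRIORITY_SWITCH_SECONDS:
        # Row 8.2: the arrays have not yet switched to priority mode.
        return "stopped: enable access manually"
    if failed_array_is_priority:
        # Row 8.1, second sentence: the priority array itself went down.
        return "stopped: enable access manually on the non-priority array"
    # Row 8.1: the priority array keeps serving the volume.
    return "served by the priority array"


result = outcome_after_array_failure(45, failed_array_is_priority=False)
```

For an operator, the practical reading is that a quorum outage followed almost immediately by an array failure is the case that needs manual intervention.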

After successful testing, the customer approved the target configuration. Following such testing, the deployment was quick and trouble-free. The system has now been running like clockwork for 5 months. 24x7 technical support is provided by our Service Center (Jet is a Huawei service partner of the highest level, Certified Service Partner 5-stars). Without false modesty, we note that the customer is completely satisfied with the capabilities of HyperMetro and the performance of the OceanStor-based solution, and plans to further increase the amount of protected data.

This article was prepared by Dmitry Kostryukov, senior storage systems design engineer at Jet Infosystems.