Data processing and storage: from antiquity to data centers

Our time is often called the information age. However, information has been critical to the human race throughout its existence. Humans have never been the fastest, strongest, or hardiest animals. We owe our position in the food chain to two things: sociality and the ability to pass information across more than one generation.

The way information is stored and disseminated has been, through the centuries, and remains literally a matter of life and death: from the survival of the tribe and the preservation of traditional medicine recipes to the survival of the species and the processing of complex climate models.

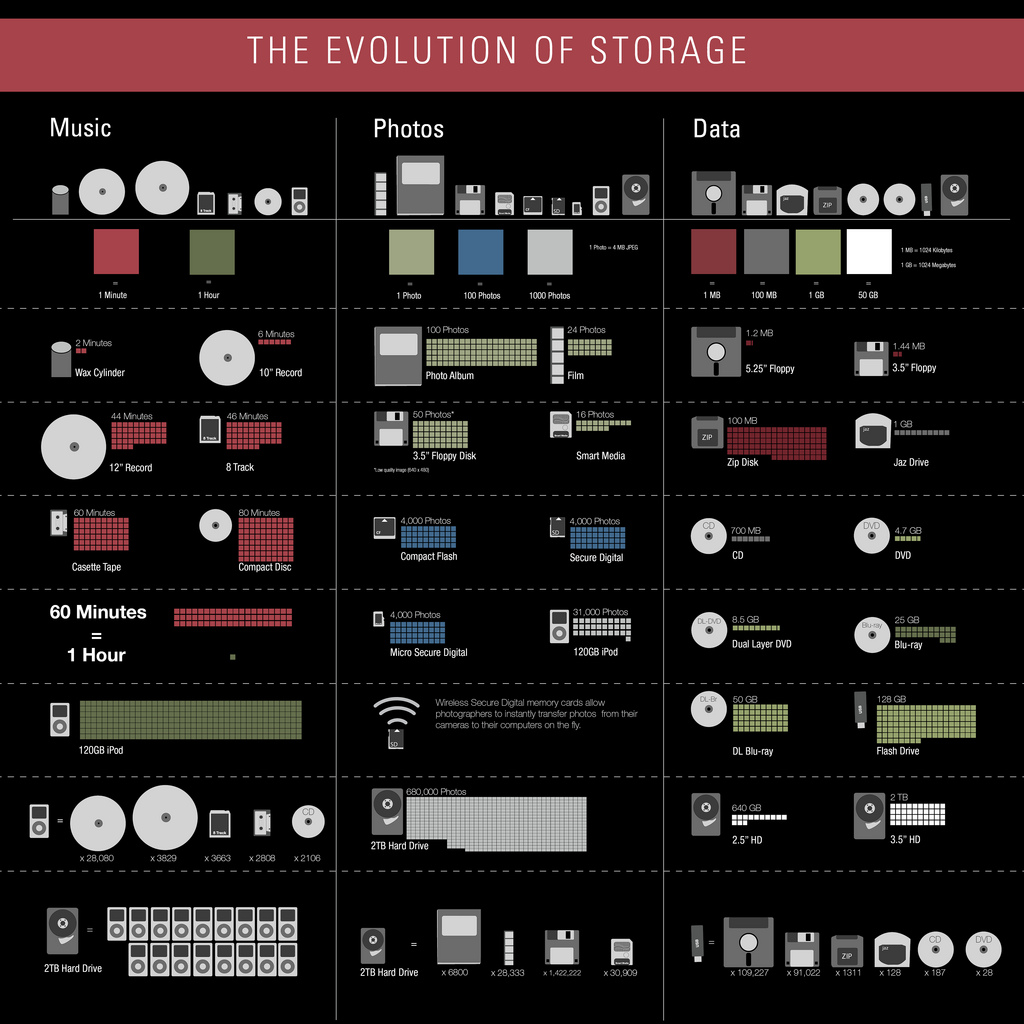

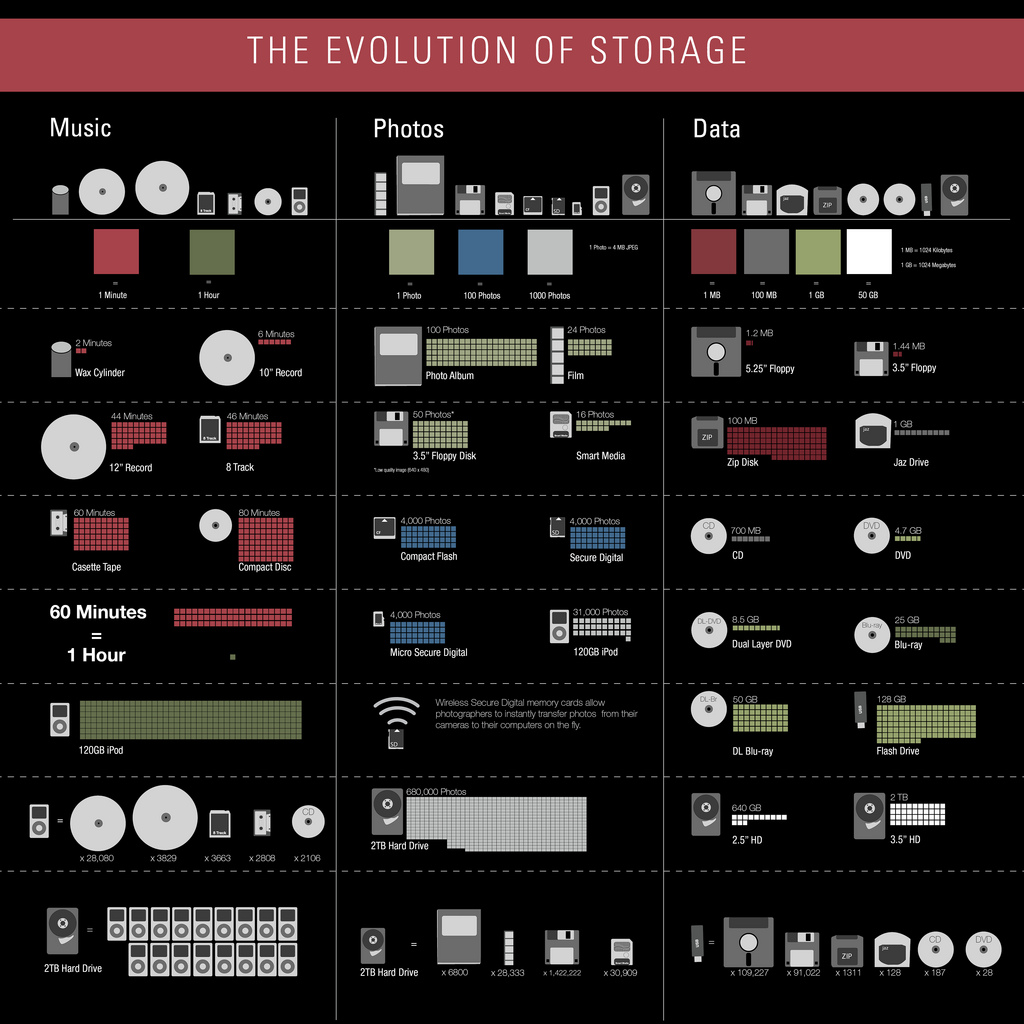

Look at the infographic (click to view the full version). It shows the evolution of storage devices, and the scale is truly impressive. However, the picture is far from complete: it covers only a few decades of human history, when we were already living in the information society. Meanwhile, data has been accumulated, transmitted and stored for as long as human history is known to us. At first it was ordinary human memory, and in the near future we expect data to be stored in holographic layers and quantum systems. Habr has repeatedly covered the history of magnetic drives, punched cards and disks the size of a house. But there has never been a journey to the very beginning, when there was no hardware and no concept of data, but there were biological and social systems that had learned to accumulate, preserve and transmit information. Today, let's try to scroll through the whole story in one post.

Image Source: Flickr

Before the invention of writing

Before the appearance of what can undoubtedly be called writing, the main way to preserve important facts was oral tradition. In this form people passed on social customs, important historical events, personal experience and the narrator's own creative work. It is difficult to overestimate this form: it continued to flourish until the Middle Ages, long after the advent of writing. Despite its undeniable cultural value, the oral form is a model of inaccuracy and distortion. Imagine a game of telephone that people have been playing for centuries. Lizards turn into dragons, people acquire dogs' heads, and reliable information about the life and customs of entire peoples cannot be distinguished from myths and legends.

Boyan

From cuneiform to printing press

For most historians, the birth of civilization with a capital C is inextricably linked with the advent of writing. According to popular theories, civilization in its modern sense arises from surplus food, the division of labor and the emergence of trade. This is exactly what happened in the valley of the Tigris and Euphrates: fertile fields gave rise to commerce, and commerce, unlike epic poetry, requires accuracy. This was around 2700 BC, that is, about 4,700 years ago. The lion's share of Sumerian cuneiform tablets is filled with an endless series of trade transactions. Not everything is so mundane, of course: the decipherment of Sumerian cuneiform has preserved for us the oldest literary work known so far, “The Epic of Gilgamesh”.

Clay cuneiform tablet

Cuneiform writing was definitely a great invention. Clay tablets survive well, not to mention cuneiform carved in stone. But cuneiform has clear drawbacks: the slow speed of writing and the physical weight (not in megabytes) of the resulting "documents". Imagine that you urgently need to write out several invoices and deliver them to a neighboring city. With clay tablets, this kind of work can literally become a back-breaking burden.

In many countries, from Egypt to Greece, people looked for ways to record information quickly, conveniently and reliably. More and more of them arrived at one variation or another of thin sheets of organic origin and contrasting "ink". This solved the problem of speed and, so to speak, of "capacity" per kilogram of weight. Thanks to parchment, papyrus and, ultimately, paper, humanity received its first information network: mail.

However, the new advantages brought new problems: everything written on materials of organic origin tends to decompose, fade, or simply burn. From the Dark Ages until the invention of the printing press, copying books was a large and important undertaking: a complete, literal rewriting, letter by letter. If you imagine how complex and laborious this process was, it is easy to understand why reading and writing remained the privilege of a very narrow circle of monks and nobles. However, in the mid-fifteenth century, what could be called the First Information Revolution took place.

From Gutenberg to the lamp

Attempts to simplify and speed up printing by using sets of pre-cast word forms or characters and a hand press were made in China as early as the 11th century. Why do we know so little about this and habitually consider Europe the birthplace of the printing press? The spread of typesetting in China was hindered by the complexity of the Chinese writing system itself. Producing the type needed for full-fledged printing in Chinese was too laborious.

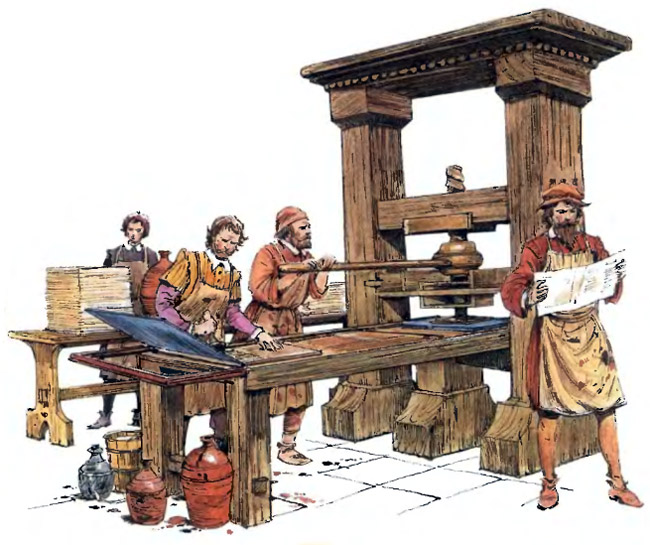

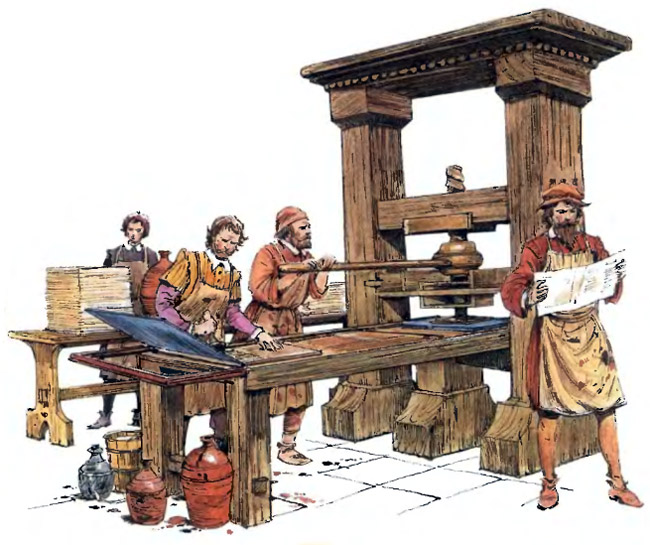

Thanks to Gutenberg, books acquired the concept of a copy. The Gutenberg Bible was printed in 180 copies. That is 180 copies of the same text, and each additional copy increases the likelihood that fires, floods, lazy copyists and hungry rodents will not keep it from future generations of readers.
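
The effect of having many copies can be illustrated with a little probability. The sketch below assumes, purely for illustration, that every copy is lost independently and with the same probability; the function name and the 99% loss rate are hypothetical and are not historical figures.

```python
def survival_probability(copies: int, loss_probability: float) -> float:
    """Chance that at least one of `copies` independent copies survives."""
    if copies < 0 or not 0.0 <= loss_probability <= 1.0:
        raise ValueError("copies must be >= 0 and loss_probability within [0, 1]")
    # The text is lost only if every single copy is lost.
    return 1.0 - loss_probability ** copies


# Hypothetical assumption: any single copy has a 99% chance of being lost.
single_copy = survival_probability(1, 0.99)
print_run = survival_probability(180, 0.99)
print(f"1 copy: {single_copy:.1%}, 180 copies: {print_run:.1%}")
```

Under these assumed numbers, a lone manuscript survives about 1% of the time, while a run of 180 copies leaves at least one survivor in roughly 84% of cases.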

Gutenberg Printing Press

The hand press and manual typesetting are, of course, far from optimal in terms of speed and labor. With each century, human society sought not only to preserve information but also to disseminate it to the widest possible audience. As technology developed, both printing and copying evolved.

The rotary printing press was invented in the middle of the nineteenth century, and its variations are still in use today. These machines, with continuously rotating cylinders on which the printing plates are mounted, were the quintessence of the industrial approach and marked a very important stage in the informational development of mankind: information became a mass commodity thanks to newspapers, leaflets and cheaper books.

Mass circulation, however, does not always benefit a particular piece of information. The main media, paper and ink, are still subject to wear, decay and loss. Libraries, full of books on every area of human knowledge, grew more and more voluminous, occupying vast spaces and requiring ever more resources for maintenance, cataloging and search.

The next paradigm shift in information storage came after the invention of photography. Several engineers had the bright idea that miniature photocopies of technical documents, articles and even books could extend the life of the original and reduce the space needed to store it. The resulting microfilm (miniature photographs plus the equipment for viewing them) came into use in financial, technical and scientific circles in the 1920s. Microfilm has many advantages: it combines ease of copying with durability. It seemed that information storage methods had reached their zenith.

Microfilm still in use

From punch cards and magnetic tapes to modern data centers

Engineering minds have been trying to devise a universal method for processing and storing information since the 17th century. Gottfried Leibniz, in particular, noted that if calculations are carried out in the binary number system, the laws of mathematics make it possible to express the solutions to problems in a form suitable for a universal computing machine. His dream of such a machine remained only a beautiful theory. Centuries later, however, in the middle of the 20th century, these ideas were embodied in hardware and gave rise to a new information revolution. Some believe that it is still going on.

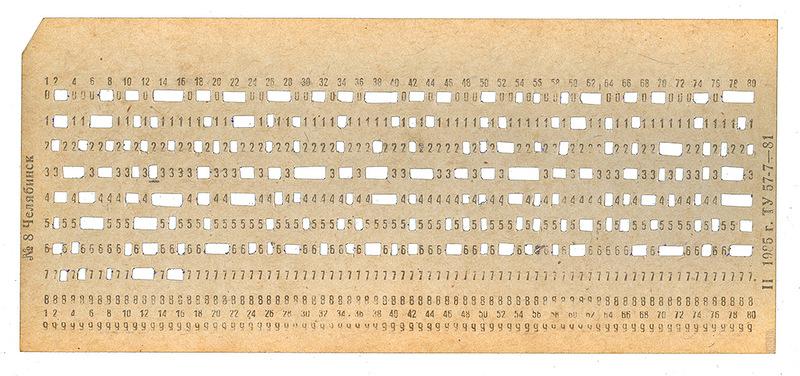

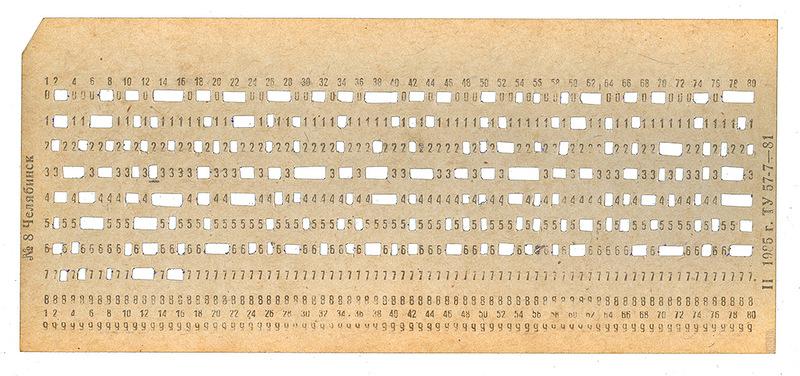

What are now called “analog” methods of information storage imply that sound, text, images and video each had their own recording and playback technology. Computer memory is universal: everything that can be recorded is expressed in zeros and ones and reproduced by specialized algorithms. The very first method of storing digital information was neither convenient, nor compact, nor reliable. It was the punched card, a simple piece of cardboard with holes in specially designated places. A gigabyte of such "memory" could weigh up to 20 tons. In such a situation, it was difficult to talk about proper systematization or backup.
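
The order of magnitude of that figure can be checked with simple arithmetic. The sketch below is only an estimate under stated assumptions: a card with 80 columns and 12 rows, weighing about 2.5 g. Neither number comes from this article, and the function name is hypothetical.

```python
def punched_card_archive(total_bytes: int, bytes_per_card: int, grams_per_card: float):
    """Return (number of cards, mass in metric tons) needed to hold total_bytes."""
    cards = -(-total_bytes // bytes_per_card)  # ceiling division
    tons = cards * grams_per_card / 1_000_000  # grams -> metric tons
    return cards, tons


GIGABYTE = 10**9
ASSUMED_GRAMS_PER_CARD = 2.5  # assumption, not a figure from the article

# One character per column: 80 bytes per card.
text_cards, text_tons = punched_card_archive(GIGABYTE, 80, ASSUMED_GRAMS_PER_CARD)
# Every hole position used as a bit: 80 * 12 = 960 bits = 120 bytes per card.
binary_cards, binary_tons = punched_card_archive(GIGABYTE, 120, ASSUMED_GRAMS_PER_CARD)

print(f"text encoding:   {text_cards:,} cards, {text_tons:.1f} t")
print(f"binary encoding: {binary_cards:,} cards, {binary_tons:.1f} t")
```

With these assumptions the estimate lands between roughly 21 and 31 tons, the same order of magnitude as the figure quoted above.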

Punched card

The computer industry developed rapidly and quickly penetrated every area of human activity. In the 1950s, engineers “borrowed” magnetic tape from analog audio and video recording. Tape drives (streamers) with cartridges of up to 80 MB were used to store and back up data until the 1990s. It was a good method, with a relatively long shelf life (up to 50 years) and small media. In addition, their ease of use and the standardization of storage formats brought the concept of backup into everyday use.

One of the first IBM hard drives, 5 MB

Magnetic tapes and the systems built around them have one major drawback: sequential access to data. That is, the further a record is from the beginning of the tape, the longer it takes to read it.
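
A toy model makes the cost of sequential access visible. The reel length and tape speed below are hypothetical values chosen for illustration, and the model ignores acceleration, rewinding and the time to read the record itself.

```python
def tape_access_seconds(record_position_m: float, tape_speed_m_per_s: float) -> float:
    """Time to reach a record by winding the tape from its beginning."""
    if record_position_m < 0 or tape_speed_m_per_s <= 0:
        raise ValueError("position must be >= 0 and speed must be > 0")
    return record_position_m / tape_speed_m_per_s


# Hypothetical reel: 720 m of tape passing the head at 3 m/s.
TAPE_SPEED = 3.0
access_times = {pos: tape_access_seconds(pos, TAPE_SPEED) for pos in (3, 360, 720)}
for position, seconds in access_times.items():
    print(f"record at {position:>3} m: {seconds:6.1f} s")
```

In this model the waiting time grows linearly with the record's distance from the start of the tape: one second for a record near the beginning, four minutes for one at the very end.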

In the 1970s, the first hard disk drive (HDD) was produced in the form familiar to us today: a stack of several platters coated with magnetic material, with read/write heads. Variations of this technology are still used today, although they are gradually losing ground to solid-state drives (SSDs). From that moment on, throughout the computer boom of the 1980s, the main paradigms of storing, protecting and backing up information took shape. Because home and office computers with little memory and processing power became widespread, the client-server model gained strength. At first the “servers” were mostly local, with each organization, institute or company running its own. There were no systems or rules, and information was duplicated mainly on floppy disks or magnetic tapes.

The advent of the Internet, however, spurred the development of data storage and processing systems. In the 1990s, at the dawn of the “dotcom bubble”, the first data centers began to appear. The requirements for the reliability and availability of digital resources grew, and with them grew the complexity of meeting those requirements. From special rooms at the back of an enterprise or institute, data centers turned into separate buildings with their own intricate infrastructure. At the same time, a kind of anatomy crystallized in data centers: the computers themselves (servers), the communication links to Internet providers, and everything related to engineering systems (cooling, fire suppression and physical access to the premises).

The closer we get to today, the more we depend on data stored somewhere in the “clouds” of data centers. Banking systems, e-mail, online encyclopedias and search engines have all become a new standard of living, one might say a physical extension of our own memory. The way we work, relax and even receive medical care can be disrupted by a simple loss of data or even a temporary disconnection from the network. In the 2000s, reliability standards for data centers were developed, with levels from 1 to 4.

At the same time, redundancy technologies began to make their way in from the space and medical industries. Of course, people have long known how to copy and replicate information to protect it in case the original is destroyed. What distinguishes serious data centers is the duplication not only of storage media but also of the various engineering systems, together with the need to anticipate points of failure and possible human error. For example, a Tier I data center has only limited data storage redundancy. The Tier II requirements already stipulate redundant power supplies and protection against elementary human error, and Tier III provides for redundancy of all engineering systems and protection against unauthorized entry. Finally, the highest reliability level, Tier IV, requires additional duplication of all backup systems and the complete absence of points of failure. The redundancy ratio (how many redundant elements there are for each primary one) is usually denoted by the letter M. Over time, the requirements for the redundancy ratio have only grown.

Building a data center of the Tier III reliability level is a project that only a highly qualified company can handle. This level of reliability and availability means that both the engineering systems and the communication links are duplicated, and the data center is allowed only about 90 minutes of downtime per year.
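
A downtime budget like this follows directly from an availability percentage. The sketch below uses the availability targets commonly quoted for the four tiers; these percentages are an assumption brought in for illustration, not figures taken from this article, and the function name is hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def downtime_minutes_per_year(availability_percent: float) -> float:
    """Convert an availability percentage into allowed downtime per year."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability must be within [0, 100]")
    return (100.0 - availability_percent) / 100.0 * MINUTES_PER_YEAR


# Assumed, commonly quoted availability targets per tier.
ASSUMED_TIER_AVAILABILITY = {
    "Tier I": 99.671,
    "Tier II": 99.741,
    "Tier III": 99.982,
    "Tier IV": 99.995,
}

downtime = {
    tier: downtime_minutes_per_year(percent)
    for tier, percent in ASSUMED_TIER_AVAILABILITY.items()
}
for tier, minutes in downtime.items():
    print(f"{tier}: about {minutes:.0f} minutes of downtime per year")
```

For the assumed Tier III target of 99.982% this gives about 95 minutes per year, in line with the figure of roughly 90 minutes mentioned above.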

We at Safedata have such experience: in January 2014, in cooperation with the Russian Research Center Kurchatov Institute, we commissioned the second SAFEDATA data center, Moscow-II, which also meets the Tier 3 requirements of the TIA-942 standard. Earlier (2007-2010) we built the Moscow-I data center, which meets the Tier 3 requirements of the TIA-942 standard and belongs to the category of data storage and processing centers with a secure network infrastructure.

We see another paradigm shift under way in IT, and it is associated with data science. Processing and storing large amounts of data is becoming more relevant than ever. In a sense, every business should be ready to become a bit of a scientist: you collect a huge amount of data about your customers, process it and gain a new perspective. Implementing such projects means renting a large number of powerful servers, and operating them will not be cheap. Or perhaps your internal IT system is so complex that maintaining it takes up too many of the company's resources.

In any case, whatever you need significant computing power for, we have a “Virtual Data Center” service. Infrastructure as a service is not a new field, but we stand out for our holistic approach, which ranges from specific IT problems, such as migrating corporate resources to the Virtual Data Center, to legal ones, such as advice on current Russian data protection legislation.

The development of information technology is like a train rushing forward: not everyone manages to jump aboard when given the opportunity. In some places paper documents are still in use, hundreds of undigitized microfilms sit in old archives, and government agencies may still rely on floppy disks. Progress is never uniform. No one knows how many important things we have lost forever as a result, or how many hours have been wasted on processes that are still far from optimal. But we at Safedata know how to prevent waste and irreparable losses in your specific case.
