Generation of automatic tests: Excel, XML, XSLT, then everywhere

Problem

There is a certain functional area of the application: a certain expert system that analyzes the state of the data and produces the result - a lot of recommendations based on a set of rules. System components are covered by a specific set of unit tests, but the main “magic” is to follow the rules. The set of rules is determined by the customer at the project stage, the configuration is completed.

Moreover, since after the initial acceptance (it was long and difficult - because “manually") the rules of the expert system are regularly changed at the request of the customer. It is obviously not bad to conduct regression testing of the system to make sure that the rest the rules still work correctly and the last changes have not made any side effects.

The main difficulty is not even in the preparation of scenarios - they are, but in their implementation. When executing scripts “manually”, approximately 99% of the time and effort is spent preparing test data in the application. The time it takes for the expert system to execute the rules and then analyze the result is insignificant compared to the preparatory part. The complexity of the tests is known to be a serious negative factor. generating distrust on the part of the customer, and affecting the development of the system (“Change something, and then you will have to test it again ... Well, it ...”).

An obvious technical solution would be to turn all scripts into automated ones and run them regularly as part of release testing or as needed. However, we will be lazy, and try to find a way in which data for test scenarios is prepared quite simply (ideally by the customer), and automatic tests are generated based on them, also automatically.

Under the cat we will talk about one approach that implements this idea - using MS Excel, XML and XSLT transformations.

A test is primarily data

And where is the easiest way to prepare data, especially for an unprepared user? In the tables. So, first of all - in MS Excel.

Personally, I really don't like spreadsheets. But not as such (as a rule, this is a standard of usability), but because they instill and cultivate the concept of “mixing data and presentation” in the minds of unprofessional users (and now programmers must pick out data from endless multi-level “sheets”, where the value has everything - both cell color and font). But in this case, we know about the problem, and will try to eliminate it.

So, the statement of the problem

- provide data preparation in MS Excel. The format should be reasonable from the point of view of convenience of data preparation, simple for further processing, accessible for transfer to business users (the latter is optional, for a start - we will make a tool for ourselves);

- accept prepared data and convert it to test code.

Decision

A couple of additional introduction:

- The specific format for the presentation of data in Excel is not yet clear and, apparently, will change slightly in search of the optimal presentation;

- The code of the test script may change over time (debugging, fixing defects, optimization).

Both points lead to the idea that the initial data for the test should be extremely divided both from the format in which the input will be carried out and from the process of processing and turning it into an autotest code, since both sides will change.

The well-known technology for turning data into an arbitrary textual representation is template engines, and XSLT transformations, in particular, are flexible, simple, convenient, and extensible. As an added bonus, the use of transformations opens the way to both generating the tests themselves (no matter what programming language) and generating test documentation.

So, the solution architecture:

- Convert data from Excel to XML in a specific format

- Convert XML using XSLT to the final code of a test script in an arbitrary programming language

A specific implementation at both stages may be task specific. But some general principles that I think will be useful in any case are given below:

Stage 1. Data Maintenance in Excel

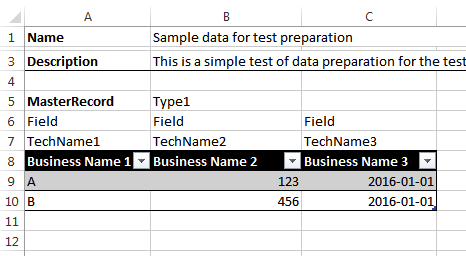

Here, frankly, I limited myself to maintaining data in the form of table blocks. A fragment of the file is in the picture.

- The block begins with a line containing the name of the block (cell “A5"). It will be used as the name of the xml element, so that the content must meet the requirements. An optional “type” (cell “B5") may be present in the same string - it will be used as the attribute value, so it also has limitations.

- Each column of the table contains, in addition to the “official” name representing business terms (line 8), two more fields for “type” (line 6) and “technical name” (line 7). In the process of preparing data, technical fields can be hidden, but they will be used during code generation.

- The columns in the table can be any number. The script finishes processing the columns as soon as it encounters a column with an empty value of “type” (column D).

- Columns with a “type” starting with underscore are skipped.

- The table is processed until a row with an empty value in the first column is encountered (cell “A11”)

- The script stops after 3 empty lines.

Stage 2. Excel -> XML

Converting data from Excel worksheets to XML is a simple task. The conversion is done using VBA code. There may be options, but it seemed to me the easiest and fastest.

Below are just a few considerations - how to make the final tool easier to maintain and use.

- The code is presented in the form of an Excel add-in (.xlam) - to simplify code support when the number of files with test data is more than 1 and these files are created / supported by more than one person. In addition, this corresponds to the approach of separating code and data;

- XSLT templates are placed in the same directory as the add-in file - to simplify support;

- Generated files: intermediate XML and the resulting file with code, it is advisable to put in the same directory as the Excel file with the source data. People who create test scripts will be more convenient and faster to work with the results;

- An Excel file may contain several sheets with data for tests - they are used to organize the variability of data for the test (for example, if you are testing a process in which you need to check the system response at each step): copy the sheet, change some of the input data and expected results - you're done. All in one file;

- Since all sheets in the Excel workbook must have a unique name, this uniqueness can be used as part of the name of the test script. This approach provides guaranteed uniqueness of the names of the various sub-scenarios within the script. And if you include the file name in the name of the test script, then it becomes even easier to achieve the uniqueness of the script names - which is especially important if several people prepare the test data independently. In addition, a standard approach to naming will help later in the analysis of test results - it will be very easy to get from the execution results to the source data;

- Data from all sheets of the book is saved in a single XML file. For us, this seemed appropriate in the case of generating test documentation, and in some cases generating test scripts;

- When generating the data file for the test, it was convenient to be able to not include separate sheets with the source data in the generation (for various reasons; for example, the data for one of the five scenarios are not ready yet - it’s time to run the tests). To do this, we use the agreement: sheets where the name begins with the underscore - are excluded from generation;

- It’s convenient to keep a sheet with the details of the scenario for creating test data (“Documentation”) in the file - you can copy information from the customer there, make comments, keep the basic data and constants referenced by the other data sheets, and so on. Of course, this sheet is not involved in the generation;

- In order to be able to influence some aspects of generating the final code of test scripts, it turned out to be convenient to include additional information “generation options” in the final XML, which are not test data, but can be used by a template to include or exclude sections of code (similar to pragma, define, etc.) To do this, we use named cells located on the non-generated sheet “Options”;

- Each line of test data should have a unique identifier at the XML level - this will greatly help when generating code and when processing cross-links between lines of test data, which must be formulated in terms of just these unique identifiers.

XML fragment that is obtained from data in Excel from the image above

Field TechName1

Business Name 1

Field TechName2

Business Name 2

Field TechName3

Business Name 3

A 123 2016-01-01 |

B 456 2016-01-01 |

Stage 3. XML -> Code

This part is extremely specific to the tasks that are being solved, therefore I will limit myself to general remarks.

- The initial iteration begins on the elements representing the sheets (various test cases). Here you can place the setup / teardown blocks of utilities;

- Iteration over data elements within a script element should begin with elements of the expected results. So you can logically organize the generated tests on the principle of "one test - one test";

- It is advisable to explicitly divide at the template level the areas where data is generated, the action being checked is performed, and the result is controlled. This is possible by using templates with modes. Such a structure of the template will allow us to do other generation options in the future - simply importing this template and overlapping the necessary area in the new template;

- Along with the code, it will be convenient to include help on running tests in the same file;

- It is very convenient to separate the data generation code into a separately called unit (procedure) - so that it can be used both within the test and independently, for debugging or just creating a set of test data.

Final comment

After some time, there will be a lot of files with test data, and debugging and “polishing” of templates for generating test scripts will continue. Therefore, you will have to provide for the possibility of "mass" generation of autotests from a set of source Excel files.

Conclusion

Using the described approach, you can get a very flexible tool for preparing test data or fully functional autotests.

In our project, we were able to quickly create a set of test scripts for integration testing of a complex functional area - there are currently about 60 files generated in about 180 tSQLt test classes (a framework for testing logic on the side of MS SQL Server). The plans are to use the approach to expand testing of this and other functional areas of the project.

The user input format remains as before, and the generation of final autotests can be changed as needed.

VBA code for converting Excel files to XML and starting the conversion (along with an example of Excel and XML) can be taken on GitHubgithub.com/serhit/TestDataGenerator .

XSLT transformation is not included in the repository, because it generates code for a specific task - you will still have your own. I will be glad to comments and pull requests.

Happy testing!