Load testing with Locust


Load testing is not as widespread or in demand as other types of testing, and there are not many tools for it: you can easily count them on the fingers of one hand.


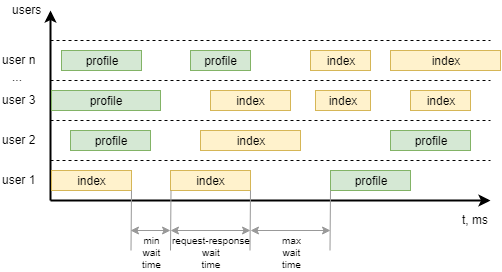

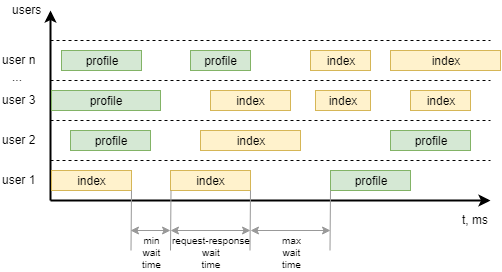


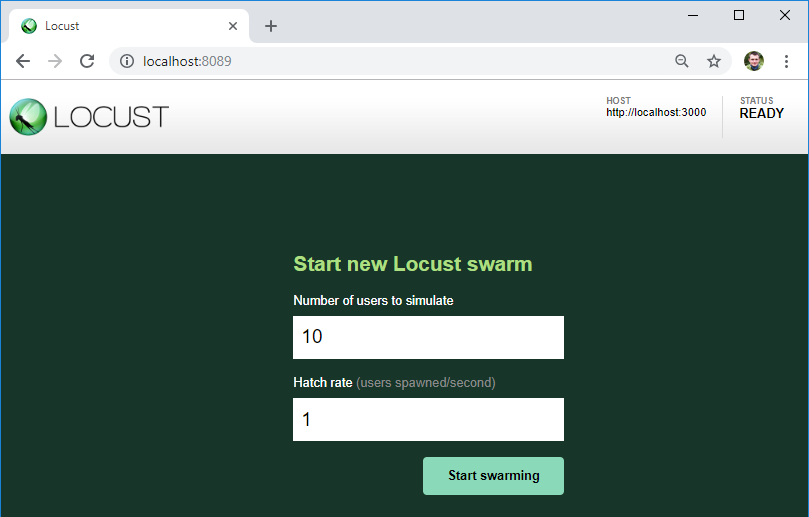

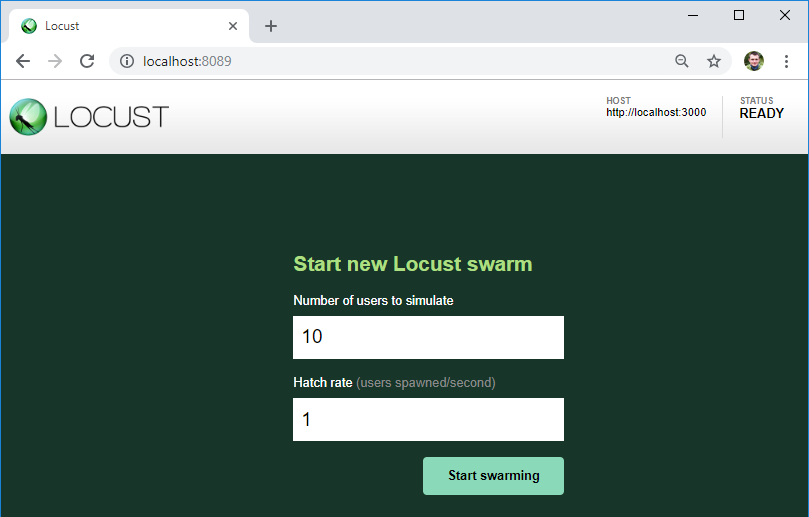


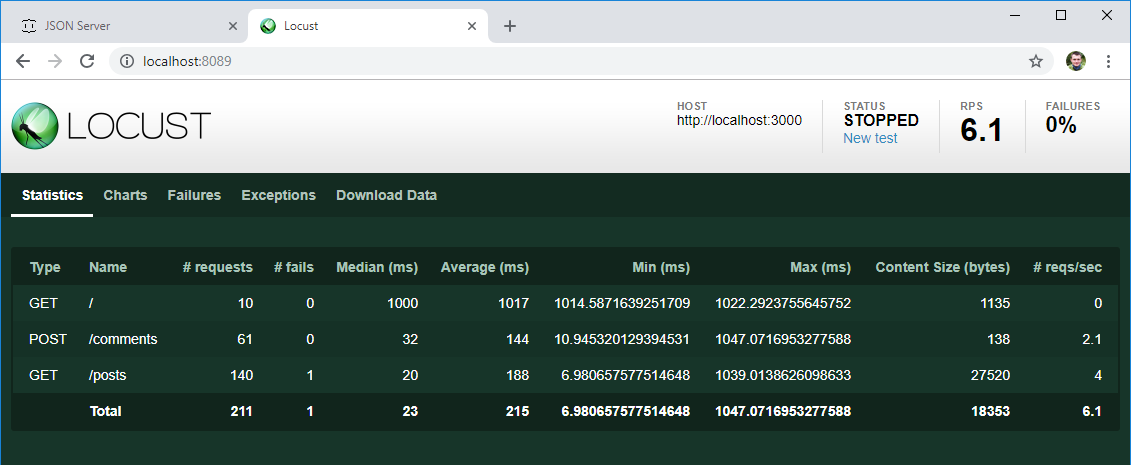

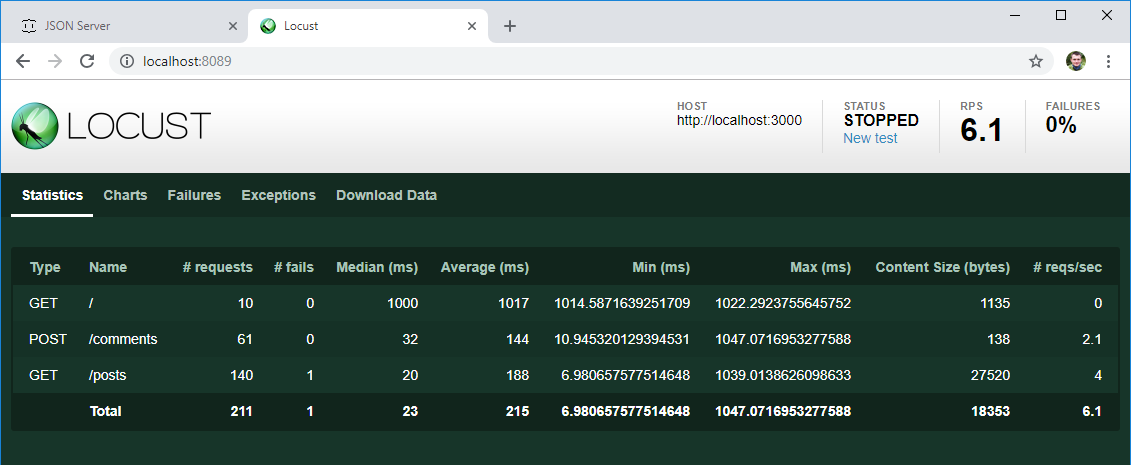


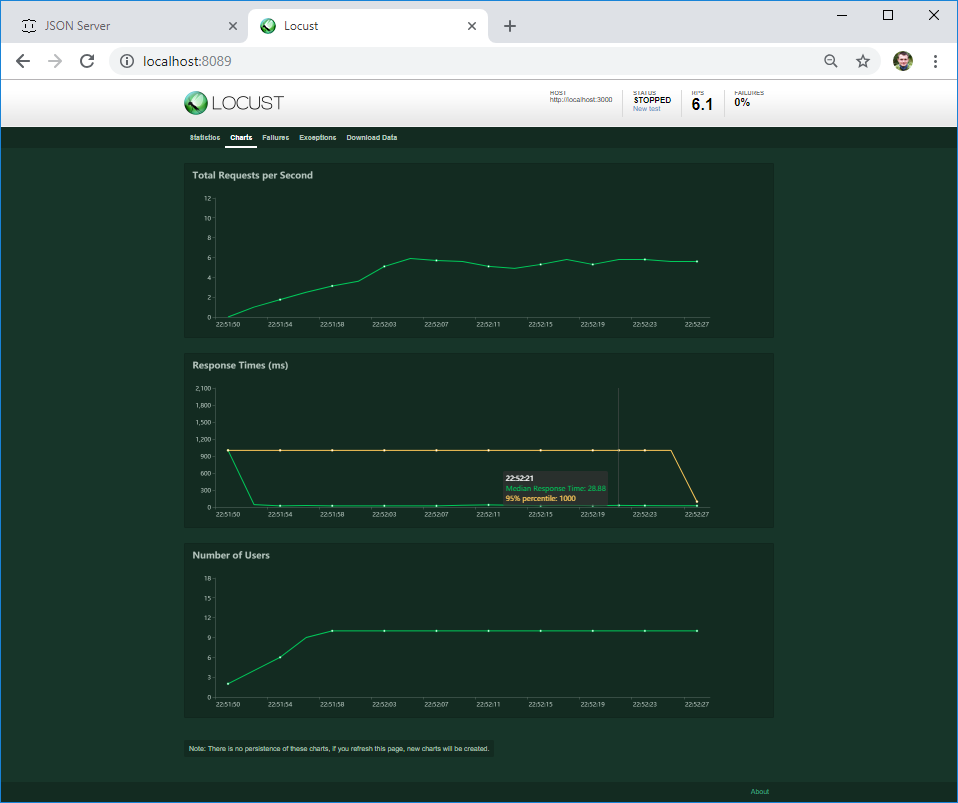

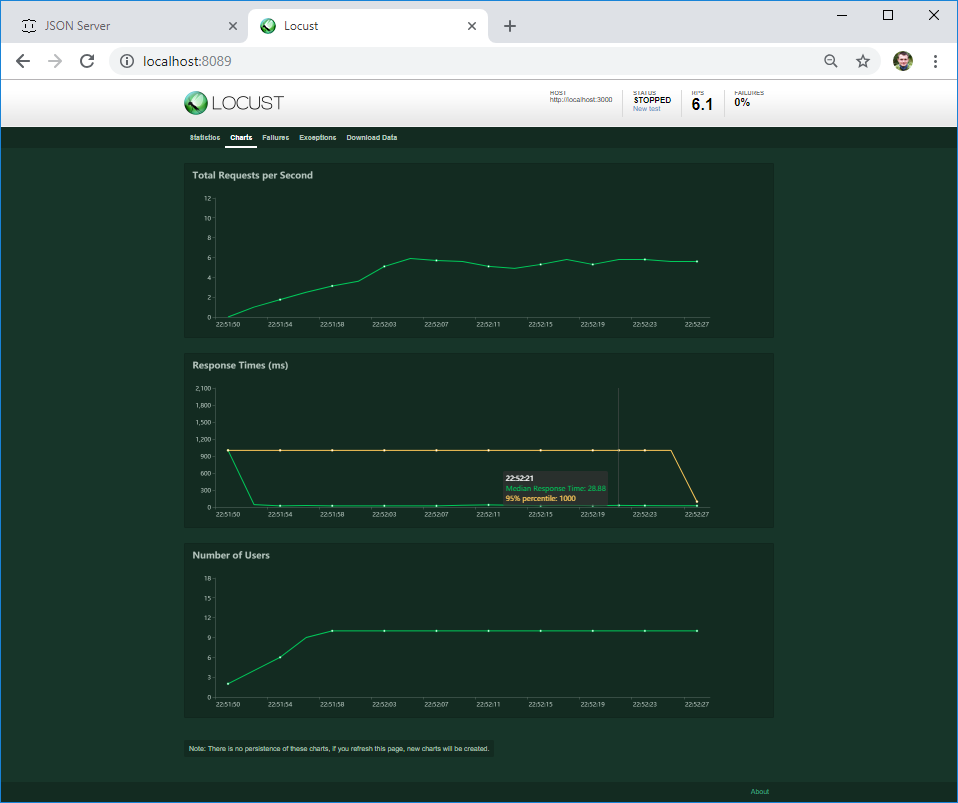


When it comes to performance testing, everyone first thinks of JMeter. It undoubtedly remains the best-known tool, with the largest number of plug-ins. I never liked JMeter because of its non-obvious interface and the steep learning curve that appears as soon as you need to test anything beyond a Hello World application.

So, inspired by successful testing on two different projects, I decided to share some information about a relatively simple and convenient tool: Locust.

For those who are too lazy to read past the cut, I also recorded a video.

What is it?

An open-source tool that lets you write load scenarios as Python code and supports distributed load generation. According to its authors, it was used to load test Battlelog for the Battlefield series of games (which is immediately appealing).

Pros:

- Simple documentation, including a copy-paste example. You can start testing even if you barely know how to program.

- Under the hood it uses the requests library ("HTTP for Humans"). Its documentation can serve as an extended cheat sheet and help with debugging tests.

- Python: I just like the language.

- Following from the previous point, tests run cross-platform.

- Its own Flask-based web server for displaying test results.

Cons:

- No Capture & Replay: everything is written by hand.

- As a consequence of the previous point, you have to think. As with Postman, you need to understand how HTTP works.

- Minimal programming skills are required.

- Linear load model, which will immediately disappoint fans of generating users "according to Gauss".

Testing process

Any testing is a complex task that requires planning, preparation, performance monitoring and analysis of results. In load testing, you can and should collect, wherever possible, all data that may affect the result:

- Server hardware (CPU, RAM, storage)

- Server software (OS, server versions, Java, .NET, etc., the database and the amount of data in it, logs of the server and of the application under test)

- Network bandwidth

- Presence of proxy servers, load balancers and DDoS protection

- Load testing data (number of users, average response time, number of requests per second)

The following examples can be classified as black-box functional load testing. Even without knowing anything about the application under test and without access to its logs, we can measure its performance.

Before you start

To try load tests in practice, I deployed a simple web server locally: https://github.com/typicode/json-server. Almost all of the following examples target it. I took the data for the server from the hosted online example: https://jsonplaceholder.typicode.com/.

Running it requires Node.js.

Obvious spoiler: as with security testing, it is better to run load-testing experiments locally on your own "guinea pigs" rather than load online services, so that you don't get banned.

You will also need Python (all examples use version 3.6) and Locust (version 0.9.0 at the time of writing). It can be installed with the command

```shell
python -m pip install locustio
```

Details of the installation can be found in the official documentation.

Case study

Next, we need a test file. I took the example from the documentation since it is very simple and straightforward:

```python
from locust import HttpLocust, TaskSet

def login(l):
    # l is the TaskSet instance; l.client is its HTTP session
    l.client.post("/login", {"username": "ellen_key", "password": "education"})

def logout(l):
    l.client.post("/logout", {"username": "ellen_key", "password": "education"})

def index(l):
    l.client.get("/")

def profile(l):
    l.client.get("/profile")

class UserBehavior(TaskSet):
    # task -> relative weight: index is picked about twice as often as profile
    tasks = {index: 2, profile: 1}

    def on_start(self):
        # called once when a simulated user starts
        login(self)

    def on_stop(self):
        # called once when a simulated user stops
        logout(self)

class WebsiteUser(HttpLocust):
    task_set = UserBehavior
    # wait between tasks, in milliseconds
    min_wait = 5000
    max_wait = 9000
```

That's it! This really is enough to start a test. Let's go through the example before launching it.

Skipping the imports, at the very beginning we see two almost identical one-line functions, login and logout. l.client is an HTTP session object that we use to generate the load. We call its post method, which is almost identical to the one in the requests library. It is only "almost" identical because in this example we pass not the full URL as the first argument but only part of it: the path of a specific service.
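The following sketch shows how such a short path becomes a full URL. It assumes, as I understand Locust's HTTP client to work, that a relative path is simply appended to the host given on the command line, while an absolute http(s) URL is used as-is. build_url is a hypothetical helper and is not part of Locust.

```python
import re

# Matches URLs that already carry a scheme, e.g. "http://..." or "https://..."
_ABSOLUTE_HTTP_URL = re.compile(r"^https?://", re.IGNORECASE)


def build_url(host: str, path: str) -> str:
    """Hypothetical helper: the URL a request for `path` would be sent to."""
    if _ABSOLUTE_HTTP_URL.match(path):
        return path          # absolute URL: used unchanged
    return host + path       # relative path: appended to the host as-is


print(build_url("http://localhost:3000", "/login"))  # http://localhost:3000/login
```

One consequence of plain concatenation is that a host with a trailing slash would produce a double slash (http://localhost:3000//login). It is therefore safer to pass the host without one.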

The second argument passes the data, and it is very convenient to use plain Python dictionaries here. Note, though, that a dictionary passed this way (the data argument in requests) is sent form-encoded, not as JSON. To send a JSON body, pass the dictionary as json=... instead.

Note also that we don't process the result of the request in any way. If it succeeds, its results (for example, cookies) are kept in this session. If an error occurs, it is recorded and added to the load statistics.

If we want to check that we wrote the request correctly, we can always do so like this:
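You can see the difference without a running server by building, but not sending, both kinds of request with requests itself. Locust's client is based on a requests session, so I assume the same encoding rules apply to l.client.post. The URL below is just a placeholder and nothing is sent.

```python
import json

import requests

credentials = {"username": "ellen_key", "password": "education"}
url = "http://localhost:3000/login"  # placeholder: the request is only built, never sent

# A dict as the second positional argument of post() is `data`: form-encoded body
form = requests.Request("POST", url, data=credentials).prepare()
print(form.headers["Content-Type"])  # application/x-www-form-urlencoded
print(form.body)                     # username=ellen_key&password=education

# The same dict passed as json= becomes a JSON body
as_json = requests.Request("POST", url, json=credentials).prepare()
print(as_json.headers["Content-Type"])  # application/json
print(json.loads(as_json.body))
```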

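```python
import requests as r

base_url = "http://localhost:3000"  # assumption: replace with the full address of the resource under test

response = r.post(base_url + "/login", {"username": "ellen_key", "password": "education"})
print(response.status_code)
```

The only thing I added is the base_url variable, which should contain the full address of the resource under test.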

The next few functions are the requests that generate the load. Again, we don't need to process the server response: the results go straight into the statistics.

Next comes the UserBehavior class (the name can be anything). As the name implies, it describes the behavior of a "spherical user in a vacuum" of the application under test. The tasks attribute is assigned a dictionary that maps the functions the user will call to their relative call frequency (weight). We don't know which function each user will call or in what order, because they are chosen randomly. Even so, we guarantee that index will be called on average twice as often as profile.

Besides the behavior itself, the parent TaskSet class lets you define four functions that run before and after the tests. They are called in the following order:
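A small simulation makes the 2:1 ratio concrete. It assumes the weights work like drawing uniformly from a list in which every task appears as many times as its weight. That is my understanding of how Locust 0.x expands the tasks dictionary. expand_weights is a hypothetical helper, and the stub task functions only return their names.

```python
import random
from collections import Counter


def index():
    return "index"


def profile():
    return "profile"


tasks = {index: 2, profile: 1}


def expand_weights(weighted):
    """Turn {task: weight} into a list where each task appears `weight` times."""
    expanded = []
    for task, weight in weighted.items():
        expanded.extend([task] * weight)
    return expanded


rng = random.Random(42)  # fixed seed so the run is reproducible
pool = expand_weights(tasks)
counts = Counter(rng.choice(pool)() for _ in range(30_000))
ratio = counts["index"] / counts["profile"]
print(counts, round(ratio, 2))
```

Any single user may deviate from 2:1 over a short run. The ratio only settles near 2 across many calls, which is why the text says "on average".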

- setup: called once when UserBehavior (the TaskSet) starts; it is not used in the example

- on_start: called once by each new simulated user when it starts

- tasks: the tasks themselves are executed

- on_stop: called once by each user when the test finishes

- teardown: called once when the TaskSet terminates; it is not used in the example either

It is worth mentioning that there are two ways to declare user behavior. The first is shown in the example above: the functions are declared in advance. The second is to declare methods right inside the UserBehavior class:
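The order of these hooks can be illustrated with a toy model. ToyTaskSet and run are hypothetical stand-ins, not Locust classes. In a real test users run concurrently, so their on_start and task calls interleave. This sketch runs them one after another to keep the output deterministic.

```python
class ToyTaskSet:
    """Hypothetical stand-in for a TaskSet that records every hook call."""

    calls = []  # shared log of hook calls, in order

    @classmethod
    def setup(cls):
        cls.calls.append("setup")

    @classmethod
    def teardown(cls):
        cls.calls.append("teardown")

    def __init__(self, user_id):
        self.user_id = user_id

    def on_start(self):
        self.calls.append("on_start[%d]" % self.user_id)

    def task(self):
        self.calls.append("task[%d]" % self.user_id)

    def on_stop(self):
        self.calls.append("on_stop[%d]" % self.user_id)


def run(task_set_cls, users=2, tasks_per_user=2):
    """Drive the hooks in the order described in the list above."""
    task_set_cls.setup()                      # once, before any user
    instances = [task_set_cls(i) for i in range(users)]
    for ts in instances:
        ts.on_start()                         # once per user
    for _ in range(tasks_per_user):
        for ts in instances:
            ts.task()                         # the tasks themselves
    for ts in instances:
        ts.on_stop()                          # once per user
    task_set_cls.teardown()                   # once, at the very end


run(ToyTaskSet)
print(ToyTaskSet.calls)
```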

```python
from locust import HttpLocust, TaskSet, task

class UserBehavior(TaskSet):
    def on_start(self):
        self.client.post("/login", {"username": "ellen_key", "password": "education"})

    def on_stop(self):
        self.client.post("/logout", {"username": "ellen_key", "password": "education"})

    # @task(weight) marks a method as a task and sets its relative frequency
    @task(2)
    def index(self):
        self.client.get("/")

    @task(1)
    def profile(self):
        self.client.get("/profile")

class WebsiteUser(HttpLocust):
    task_set = UserBehavior
    min_wait = 5000
    max_wait = 9000
```

In this example, the user functions and their call frequency are set with the task decorator. Functionally, nothing has changed.

The last class in the example is WebsiteUser (the name can be anything). In this class we set UserBehavior as the user behavior model. We also set the minimum and maximum wait time, in milliseconds, between consecutive tasks of each individual user. To make it clearer, here is how it can be visualized:
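The timing can also be sketched in code as one user's timeline. The sketch assumes each pause is drawn uniformly between min_wait and max_wait milliseconds and starts after the previous task has finished. As far as I know, that is Locust 0.9's default behavior. user_timeline and task_duration_ms are hypothetical.

```python
import random

MIN_WAIT = 5000  # ms, same values as in WebsiteUser above
MAX_WAIT = 9000  # ms


def user_timeline(n_tasks, rng, task_duration_ms=0):
    """Start times (ms) of n_tasks consecutive tasks of one simulated user."""
    t = 0
    starts = []
    for _ in range(n_tasks):
        starts.append(t)
        t += task_duration_ms                  # time the task itself takes
        t += rng.randint(MIN_WAIT, MAX_WAIT)   # pause before the next task
    return starts


rng = random.Random(1)  # fixed seed for a reproducible picture
for user in range(3):
    print("user", user, user_timeline(4, rng))
```

Each user gets its own random pauses. So even with identical behavior classes, the requests of different users do not arrive in lockstep.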

Getting started

Start the server whose performance we will test:

```shell
json-server --watch sample_server/db.json
```

We also modify the sample file so that it tests this service. We remove login and logout and define the following user behavior:

- Open the main page once when starting

- Get the list of all posts (weight 2)

- Write a comment on the first post (weight 1)

```python
from locust import HttpLocust, TaskSet, task

class UserBehavior(TaskSet):
    def on_start(self):
        # open the main page once when the user starts
        self.client.get("/")

    @task(2)
    def posts(self):
        # get the list of all posts (weight 2)
        self.client.get("/posts")

    @task(1)
    def comment(self):
        # write a comment on the first post (weight 1)
        data = {
            "postId": 1,
            "name": "my comment",
            "email": "test@user.habr",
            "body": "Author is cool. Some text. Hello world!"
        }
        self.client.post("/comments", data)

class WebsiteUser(HttpLocust):
    task_set = UserBehavior
    min_wait = 1000
    max_wait = 2000
```

To run it, execute the following command:

```shell
locust -f my_locust_file.py --host=http://localhost:3000
```

Here host is the address of the resource under test. The service paths specified in the test are appended to it.

If there are no errors in the test, the Locust web server will start and will be available at http://localhost:8089/.

As you can see, the server under test is shown here. The service paths from the test file will be appended to this URL.

Here we can also specify the number of users to simulate and the hatch rate (how many new users are started per second).

Press the button to start the load!
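The linear load model mentioned among the cons comes down to this: users are added at the hatch rate until the target number is reached. The following is a simplified sketch that assumes a perfectly even ramp-up. Locust starts users one by one, so real counts at a given second may be off by one. active_users and ramp_up_seconds are hypothetical helpers.

```python
def active_users(t_seconds, total_users, hatch_rate):
    """Number of running users t_seconds after the start of a linear ramp-up."""
    return min(total_users, int(hatch_rate * t_seconds))


def ramp_up_seconds(total_users, hatch_rate):
    """How long it takes until all users are running."""
    return total_users / hatch_rate


for t in range(7):
    print(t, active_users(t, total_users=10, hatch_rate=2))
print("full load after", ramp_up_seconds(10, 2), "s")  # full load after 5.0 s
```

Measurements taken during the ramp-up describe a smaller load than the one you asked for. It is worth looking mainly at the period after full load is reached.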

Results

After a while, stop the test and take a look at the first results:

- As expected, each of the 10 users created at the start went to the main page

- On average, the list of posts was opened twice as often as a comment was written.

- For each operation there are the average and median response times and the number of operations per second. This is already useful data that you could take right now and compare against the expected results from the requirements.
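For reference, this is what those columns mean when computed exactly from a list of response times. summarize is a hypothetical helper. The sample numbers are made up and only show how one slow request pulls the average up while the median barely moves. Locust's own figures may differ slightly from this exact computation because of how it aggregates response times.

```python
import statistics


def summarize(response_times_ms, duration_s):
    """Average/median response time and requests per second for one operation."""
    return {
        "requests": len(response_times_ms),
        "average_ms": statistics.mean(response_times_ms),
        "median_ms": statistics.median(response_times_ms),
        "rps": len(response_times_ms) / duration_s,
    }


# Made-up sample: four fast responses and one very slow one within 1 second
print(summarize([10, 12, 11, 13, 500], duration_s=1.0))
```

Here the average (about 109 ms) is far above the median (12 ms), so it is worth reading both columns before comparing them with a requirement.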

On the second tab, you can see the load graphs in real time. If the server crashes at a certain load or its behavior changes, it will be immediately visible on the graph.

The third tab shows failures. In my case it is a client error, but if the server returns a 4XX or 5XX error, its text will be shown here.

If the error happens in the code of your test, it goes to the Exceptions tab. So far my most frequent error has been related to using print() in the code, which is not the best way to log :)

On the last tab, you can download all the test results in CSV format.

Are these results meaningful? Let's see. Performance requirements, if they are stated at all, most often read like this: the average page load time (server response time) should be less than N seconds under a load of M users. They don't really specify what the users should be doing. That is what I like about Locust: it generates the activity of a specific number of users who randomly perform the actions expected of real users.

If we need to run a benchmark, that is, measure the system's behavior under different loads, we can create several behavior classes and run several tests at different loads.
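One common alternative to print() is Python's standard logging module. Below is a minimal sketch in which report_status and the logger name are hypothetical. As far as I know, Locust configures logging itself through its --loglevel and --logfile options, so a locustfile usually only needs a named logger.

```python
import logging

# Hypothetical logger name; one named logger per locustfile is enough.
log = logging.getLogger("my_locustfile")


def report_status(task_name, status_code):
    """Log a response status instead of print()-ing it."""
    if status_code >= 400:
        log.warning("%s returned HTTP %s", task_name, status_code)
    else:
        log.info("%s returned HTTP %s", task_name, status_code)


if __name__ == "__main__":
    # Standalone demo only; inside Locust its own logging setup applies.
    logging.basicConfig(level=logging.INFO)
    report_status("posts", 200)
    report_status("comment", 500)
```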
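A requirement of that form can be checked automatically against the downloaded CSV. This is a minimal sketch that assumes the request statistics file has a Name column and an Average response time column in milliseconds. Check the header of your own export, because the column names may differ between Locust versions. slow_operations is a hypothetical helper.

```python
import csv
import io


def slow_operations(csv_text, max_avg_ms,
                    name_column="Name", avg_column="Average response time"):
    """Return (name, average_ms) for every row whose average exceeds max_avg_ms."""
    too_slow = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        average = float(row[avg_column])
        if average > max_avg_ms:
            too_slow.append((row[name_column], average))
    return too_slow
```

For a requirement of N seconds, call it as slow_operations(open(path).read(), max_avg_ms=N * 1000), where path points to the downloaded requests CSV. An empty list means that every row, including the aggregated total row if present, met the requirement.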
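One way to run such a series is to start Locust without the web UI once per load level and keep a CSV per run. The sketch below only builds the command lines. It assumes Locust 0.9's options: --no-web, -c for the number of users, -r for the hatch rate, --run-time and --csv. Later versions renamed several of them (for example -u and --headless in 1.x). benchmark_commands is a hypothetical helper.

```python
import shlex


def benchmark_commands(locustfile, host, loads, hatch_rate=10, run_time="5m"):
    """One headless Locust command per load level (Locust 0.9-style options)."""
    commands = []
    for users in loads:
        commands.append([
            "locust", "-f", locustfile, "--host=" + host,
            "--no-web",                    # run without the web UI
            "-c", str(users),              # number of simulated users
            "-r", str(hatch_rate),         # users started per second
            "--run-time=" + run_time,      # stop automatically after this time
            "--csv=bench_%d" % users,      # CSV file prefix for this run
        ])
    return commands


for cmd in benchmark_commands("my_locust_file.py", "http://localhost:3000", [10, 50, 100]):
    print(" ".join(shlex.quote(part) for part in cmd))
```

Each command can then be started with subprocess.run(cmd, check=True), one load level after another, so the runs don't overlap.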

That's enough for a start. If you liked the article, I plan to write about:

- complex test scenarios in which the results of one step are used in subsequent steps

- processing the server response, since it may be wrong even when HTTP 200 OK is returned

- non-obvious difficulties you may run into and how to get around them

- testing without using UI

- distributed load testing