On the road to QUIC: what lies at the heart of HTTP/3


A new milestone in Internet history is unfolding before our eyes: we can assume that HTTP/3 has already been announced. At the end of October, Mark Nottingham of the IETF proposed settling on a name for the new protocol that the IETF has been working on since 2015. So instead of QUIC-based names, the loud name HTTP/3 appeared. Western publications have already written about this more than once. The history of QUIC began in the depths of Google in 2012, and for a long time only Google servers supported HTTP-over-QUIC connections; but time passes, and now Facebook has started introducing this technology too (on November 7, Facebook and LiteSpeed carried out the first HTTP/3 interaction). At the moment, 1.2% of websites support QUIC. Finally, the WebRTC team is also looking toward QUIC (see also the QUIC API), so in the foreseeable future real-time video and audio will go over QUIC instead of RTP/RTCP. That is why we decided it would be great to go into the details of IETF QUIC: especially for Habr, we have prepared a translation of a longread that dots all the i's. Enjoy!

QUIC (Quick UDP Internet Connections) is a new transport-layer protocol, encrypted by default, with many improvements for HTTP aimed both at speeding up traffic and at increasing security. QUIC also has a long-term goal: to eventually replace TCP and TLS. In this article, we will look at QUIC's key features and why the web will benefit from them, as well as at the challenges of supporting this completely new protocol.

In fact, there are two protocols with this name. Google QUIC (gQUIC) is the original protocol, developed by Google engineers several years ago; after a series of experiments, it was adopted by the Internet Engineering Task Force (IETF) for standardization.

IETF QUIC (hereinafter simply QUIC) has already diverged so much from gQUIC that it can be considered a separate protocol. From the packet format to the handshake and the HTTP mapping, QUIC has improved on the original gQUIC design through collaboration with many organizations and developers who share a common goal: making the Internet faster and more secure.

So what improvements does QUIC offer?

Integrated security (and performance)

One of the most noticeable differences between QUIC and the venerable TCP is QUIC's stated goal of being a transport protocol that is secure by default. QUIC achieves this by providing authentication and encryption, which usually happen at a higher layer (for example, in TLS), in the transport protocol itself.

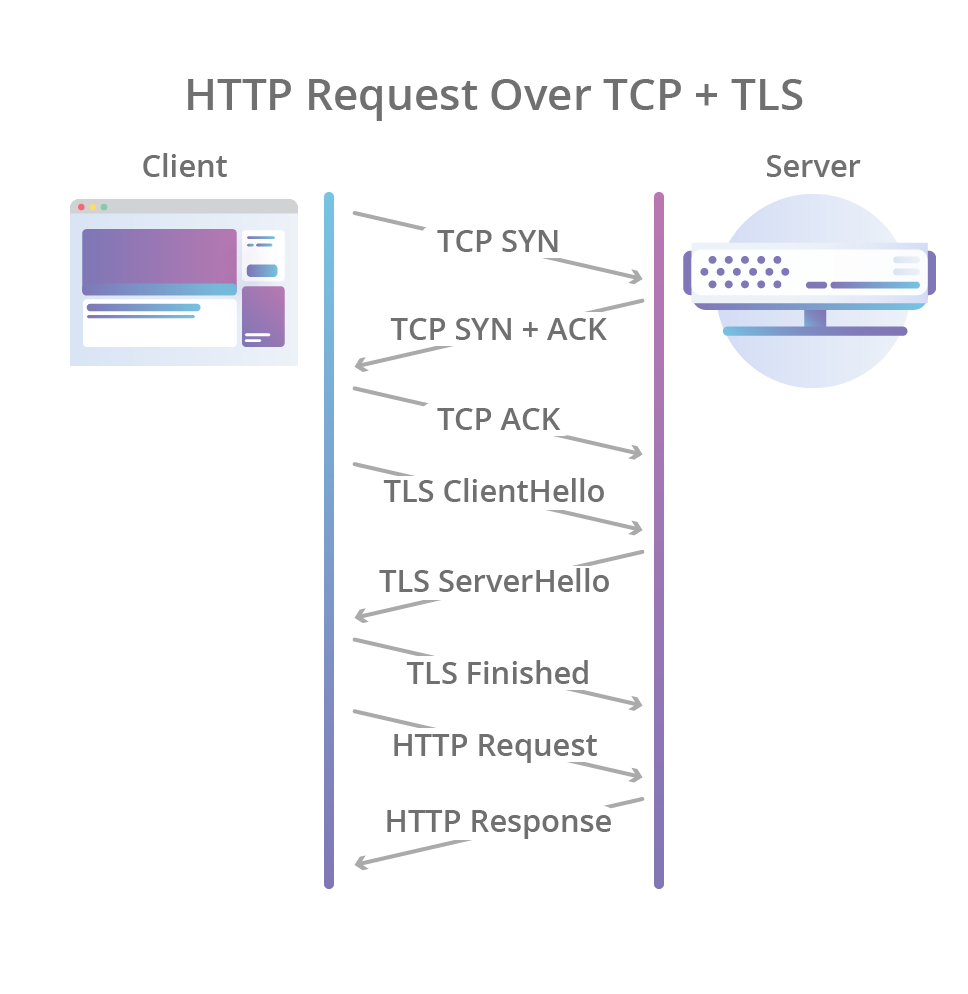

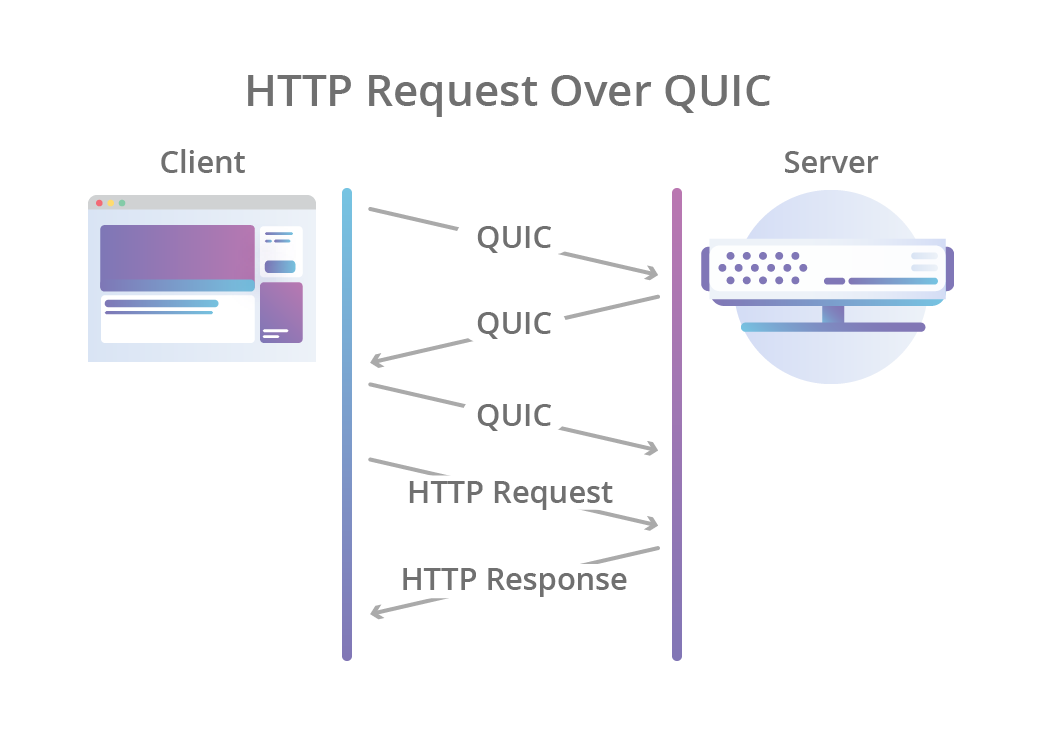

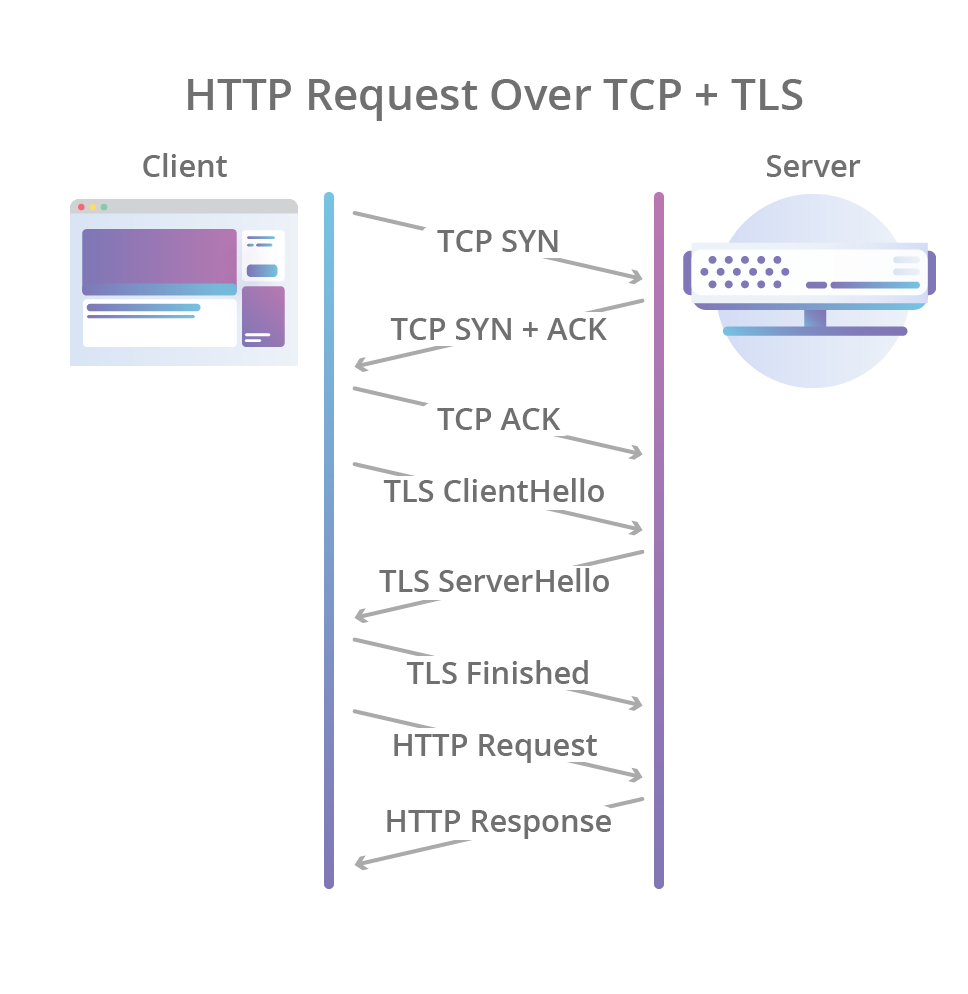

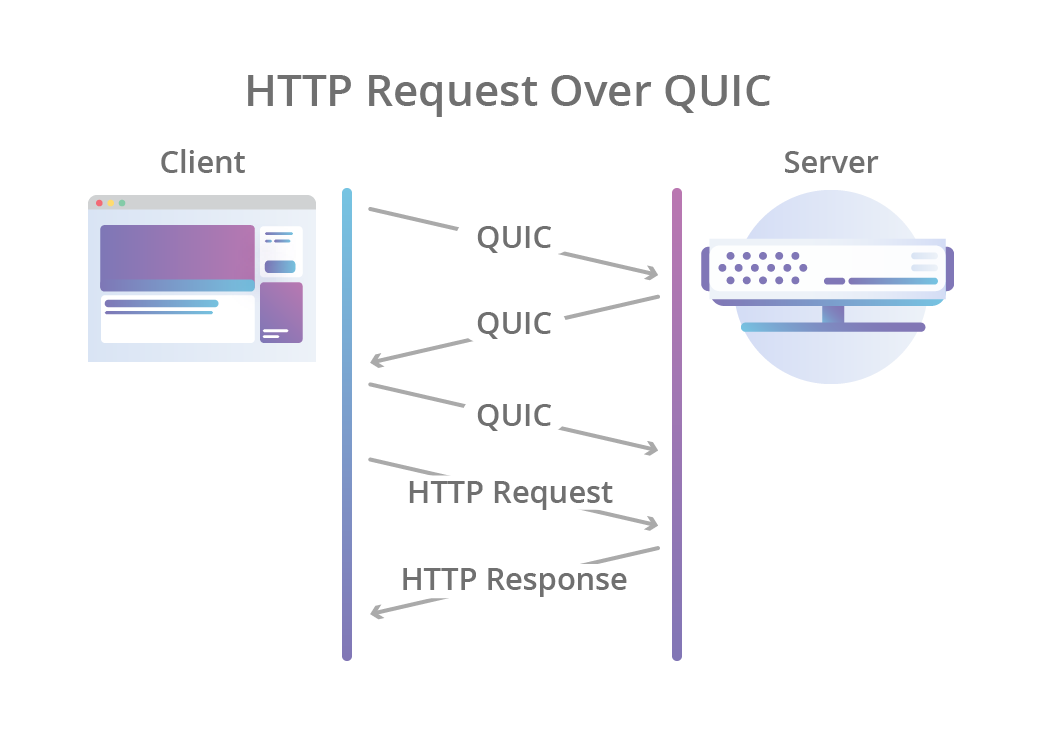

The initial QUIC handshake combines the usual TCP three-way handshake with the TLS 1.3 handshake, which authenticates the endpoints and negotiates cryptographic parameters. For those familiar with TLS: QUIC replaces the TLS record layer with its own framing format but keeps using the TLS handshake.

Not only does this ensure that the connection is always encrypted and authenticated, it also makes establishing the connection faster: a typical QUIC handshake needs only a single round trip between client and server, while TCP + TLS 1.3 needs two.
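To put the difference in numbers, here is a back-of-the-envelope sketch in Python. It assumes an idealized network (a hypothetical fixed 100 ms round-trip time, no loss, no session resumption, no processing delay) and counts only the handshake round trips that must complete before the client can send its first HTTP request.

```python
# Round trips needed before the first HTTP request can be sent, as described
# above: TCP (1) + TLS 1.3 (1) versus a single combined QUIC handshake.
HANDSHAKE_ROUND_TRIPS = {
    "TCP + TLS 1.3": 2,
    "QUIC": 1,
}


def time_to_first_request_ms(rtt_ms: float, round_trips: int) -> float:
    """Idealized setup latency: no loss, no resumption, no processing time."""
    return rtt_ms * round_trips


if __name__ == "__main__":
    rtt_ms = 100.0  # hypothetical round-trip time, e.g. a mobile link
    for name, trips in HANDSHAKE_ROUND_TRIPS.items():
        print(f"{name}: {time_to_first_request_ms(rtt_ms, trips):.0f} ms")
```

Under these assumptions it prints 200 ms for TCP + TLS 1.3 and 100 ms for QUIC; the saving grows linearly with the RTT, so it matters most on high-latency links.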

However, QUIC goes further and also encrypts connection metadata that third parties could otherwise abuse. For example, passive on-path attackers could use packet numbers to correlate a user's activity across multiple network paths when connection migration is used (see below). QUIC encrypts packet numbers, so no one other than the actual endpoints of the connection can use them this way.
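The idea behind encrypting packet numbers can be sketched as follows: the packet number is XORed with a mask derived from a secret key and a sample of the already-encrypted payload, so only a holder of the key can recover it. This is a simplified illustration, not real QUIC header protection: as a stand-in for the AES or ChaCha20 mask function defined by the QUIC-TLS specification it uses HMAC-SHA256 from Python's standard library, and it samples the first 16 bytes of the ciphertext rather than the exact offset the specification prescribes.

```python
import hashlib
import hmac
import os


def header_protection_mask(hp_key: bytes, sample: bytes) -> bytes:
    """Derive a 5-byte mask from a sample of the encrypted payload.

    STAND-IN: real QUIC uses AES-ECB or ChaCha20 keyed with a dedicated
    header-protection key; HMAC-SHA256 is used only because it is in the stdlib.
    """
    return hmac.new(hp_key, sample, hashlib.sha256).digest()[:5]


def mask_packet_number(pn: int, pn_len: int, hp_key: bytes, ciphertext: bytes) -> bytes:
    """XOR the encoded packet number with mask bytes tied to this packet's ciphertext."""
    mask = header_protection_mask(hp_key, ciphertext[:16])  # simplified sample offset
    encoded = pn.to_bytes(pn_len, "big")
    return bytes(b ^ m for b, m in zip(encoded, mask[1 : 1 + pn_len]))


def unmask_packet_number(masked: bytes, hp_key: bytes, ciphertext: bytes) -> int:
    """The receiver, holding the same key, recomputes the mask and undoes the XOR."""
    mask = header_protection_mask(hp_key, ciphertext[:16])
    plain = bytes(b ^ m for b, m in zip(masked, mask[1 : 1 + len(masked)]))
    return int.from_bytes(plain, "big")


if __name__ == "__main__":
    hp_key = os.urandom(16)      # known only to the two endpoints
    ciphertext = os.urandom(32)  # placeholder for the encrypted packet payload
    on_wire = mask_packet_number(42, 2, hp_key, ciphertext)
    assert unmask_packet_number(on_wire, hp_key, ciphertext) == 42
    print(on_wire.hex())  # what an on-path observer sees instead of the number
```

Because the mask changes with every packet's ciphertext, consecutive packet numbers no longer look consecutive on the wire.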

Encryption can also be effective against ossification: a phenomenon in which the flexibility built into a protocol (for example, the ability to negotiate different versions of it) cannot be used in practice because implementations make incorrect assumptions. Ossification is what delayed the deployment of TLS 1.3 for so long; it became possible only after several changes were adopted to prevent ossified middleboxes from wrongly blocking the new TLS revision.

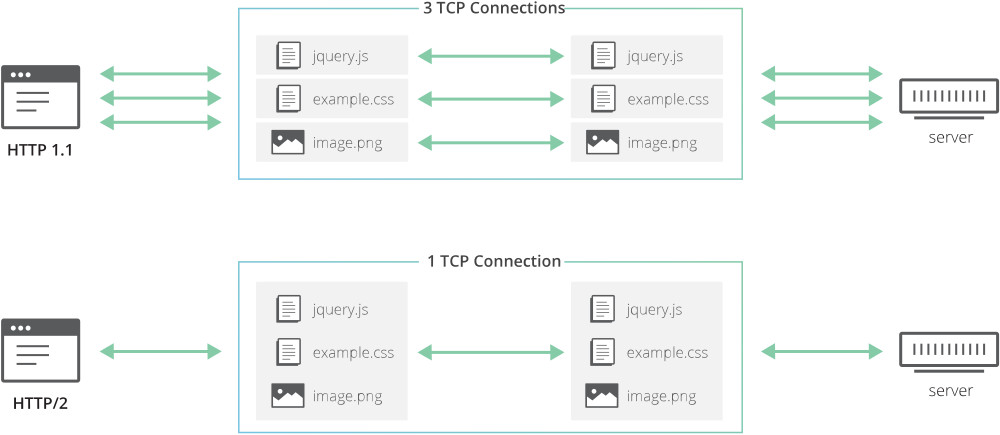

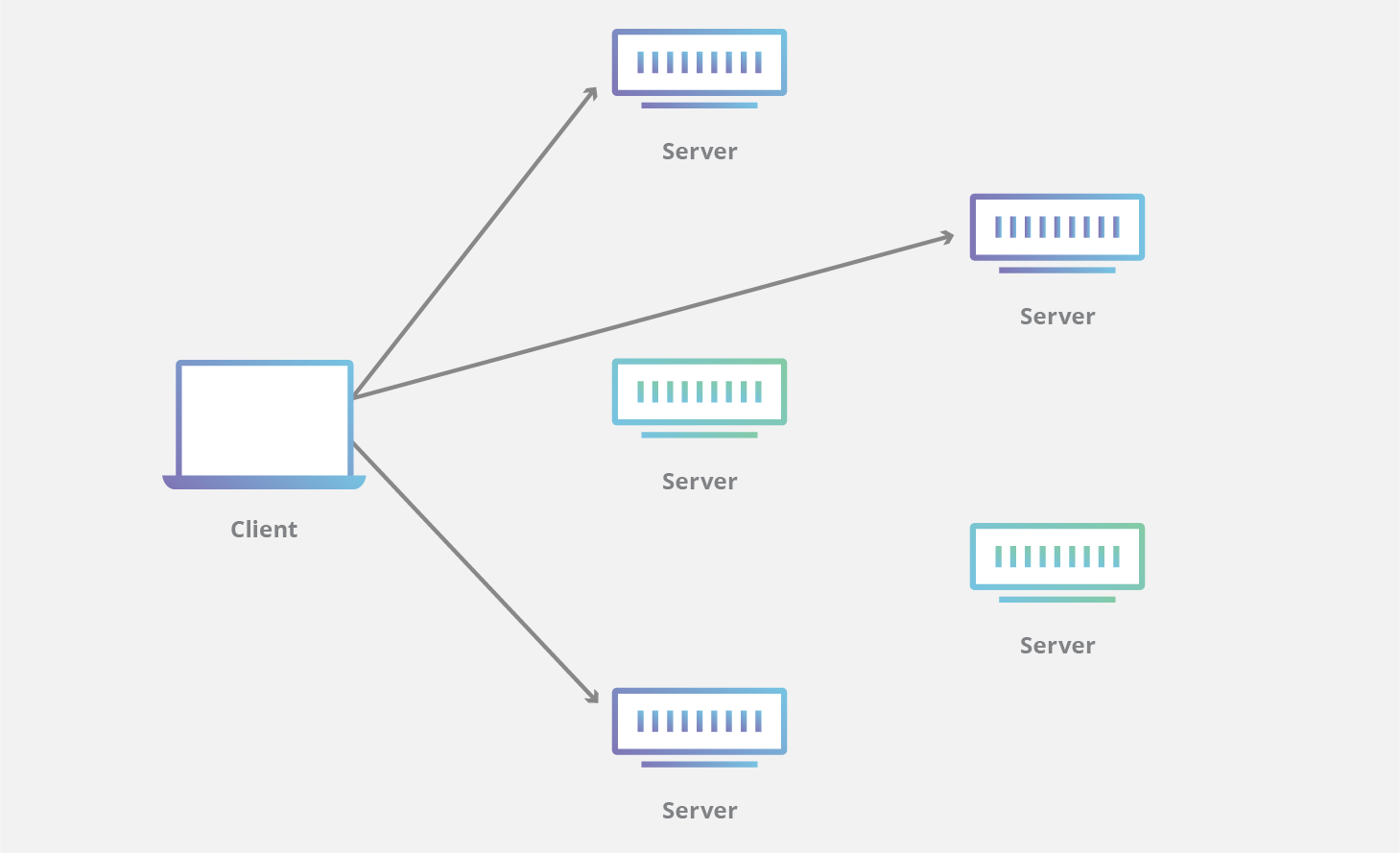

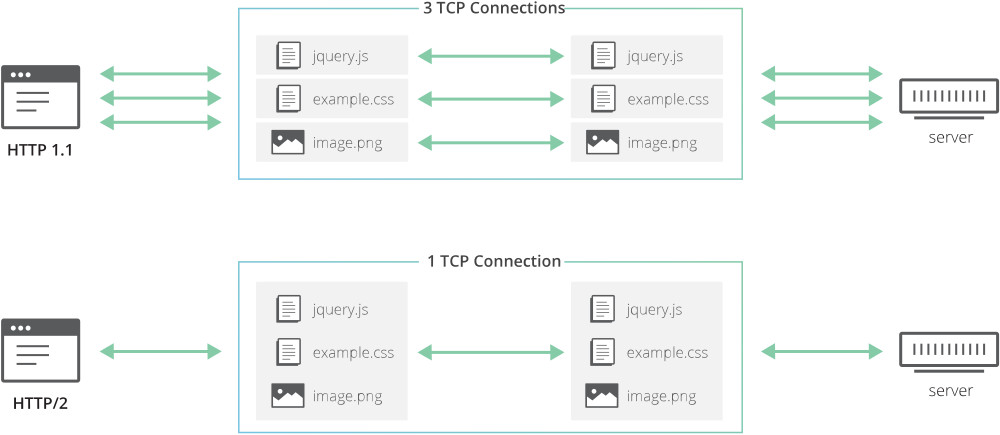

Head-of-line blocking

One of the major improvements HTTP/2 brought us is the ability to multiplex different HTTP requests over a single TCP connection. This allows HTTP/2 applications to process requests concurrently and make better use of the available network bandwidth.

This was, of course, a significant step forward. Previously, applications had to open many TCP + TLS connections if they wanted to process several HTTP requests at the same time (for example, when a browser needs to fetch both CSS and JavaScript to render a page). Creating new connections requires multiple handshakes as well as initializing the congestion window, which slows down page rendering. Multiplexing HTTP requests avoids this.

However, there is a drawback: since multiple requests/responses are transmitted over the same TCP connection, they are all equally affected by packet loss, even if the lost data concerns only one of the requests. This is called head-of-line blocking.

QUIC goes a step further and makes multiplexing a first-class feature: different HTTP requests can be mapped to different QUIC transport streams, all of which share the same QUIC connection. No additional handshakes are needed and there is a single congestion state, yet QUIC streams are delivered independently, so in most cases packet loss affects only one of them.
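A toy simulation makes the difference concrete. Five packets carry chunks of three hypothetical streams (`css`, `js`, `img`); the second packet is lost and arrives last, after a retransmission. The TCP model releases data strictly in connection order, while the QUIC model enforces ordering only within each stream. For every chunk it records the arrival step at which the chunk became available to the application, and it deliberately ignores congestion control, acknowledgments, and timing.

```python
from collections import defaultdict

# (stream, chunk number) carried by each packet, in the order they were sent.
SENT = [("css", 0), ("css", 1), ("js", 0), ("img", 0), ("js", 1)]
# Packet 1 (css chunk 1) is lost and only arrives last, after a retransmission.
ARRIVALS = [0, 2, 3, 4, 1]


def tcp_delivery_steps(sent, arrival_order):
    """One ordered byte stream: data is released only when everything before it arrived."""
    steps, buffered, next_index = {}, set(), 0
    for step, index in enumerate(arrival_order, start=1):
        buffered.add(index)
        while next_index in buffered:
            steps[sent[next_index]] = step
            next_index += 1
    return steps


def quic_delivery_steps(sent, arrival_order):
    """Independent streams: ordering is enforced only within each stream."""
    steps = {}
    buffered = defaultdict(set)
    next_chunk = defaultdict(int)
    for step, index in enumerate(arrival_order, start=1):
        stream, chunk = sent[index]
        buffered[stream].add(chunk)
        while next_chunk[stream] in buffered[stream]:
            steps[(stream, next_chunk[stream])] = step
            next_chunk[stream] += 1
    return steps


if __name__ == "__main__":
    for name, deliver in (("TCP", tcp_delivery_steps), ("QUIC", quic_delivery_steps)):
        steps = deliver(SENT, ARRIVALS)
        print(name, {f"{s}#{c}": steps[(s, c)] for s, c in SENT})
```

In the TCP model, everything queued behind the lost `css` chunk (`js#0`, `img#0`, `js#1`) waits until step 5; in the QUIC model only `css#1` waits, and the other streams are delivered at steps 2 to 4.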

This can significantly reduce the time needed to, for example, fully render a web page (CSS, JavaScript, images, and other resources), especially on a congested network with high packet loss.

So simple, right?

In order to deliver on its promises, the QUIC protocol has to break some assumptions that many network applications take for granted. This can make implementing and deploying QUIC difficult.

QUIC is designed to run on top of UDP datagrams to ease deployment and to avoid problems with network devices that drop packets of unknown protocols (since most devices support UDP). This also lets QUIC live in user space, so that, for example, browsers can ship new protocol features to end users without waiting for OS updates.

However, even though running over UDP is meant to reduce problems with network equipment, it also makes it harder to protect packets and route them correctly.

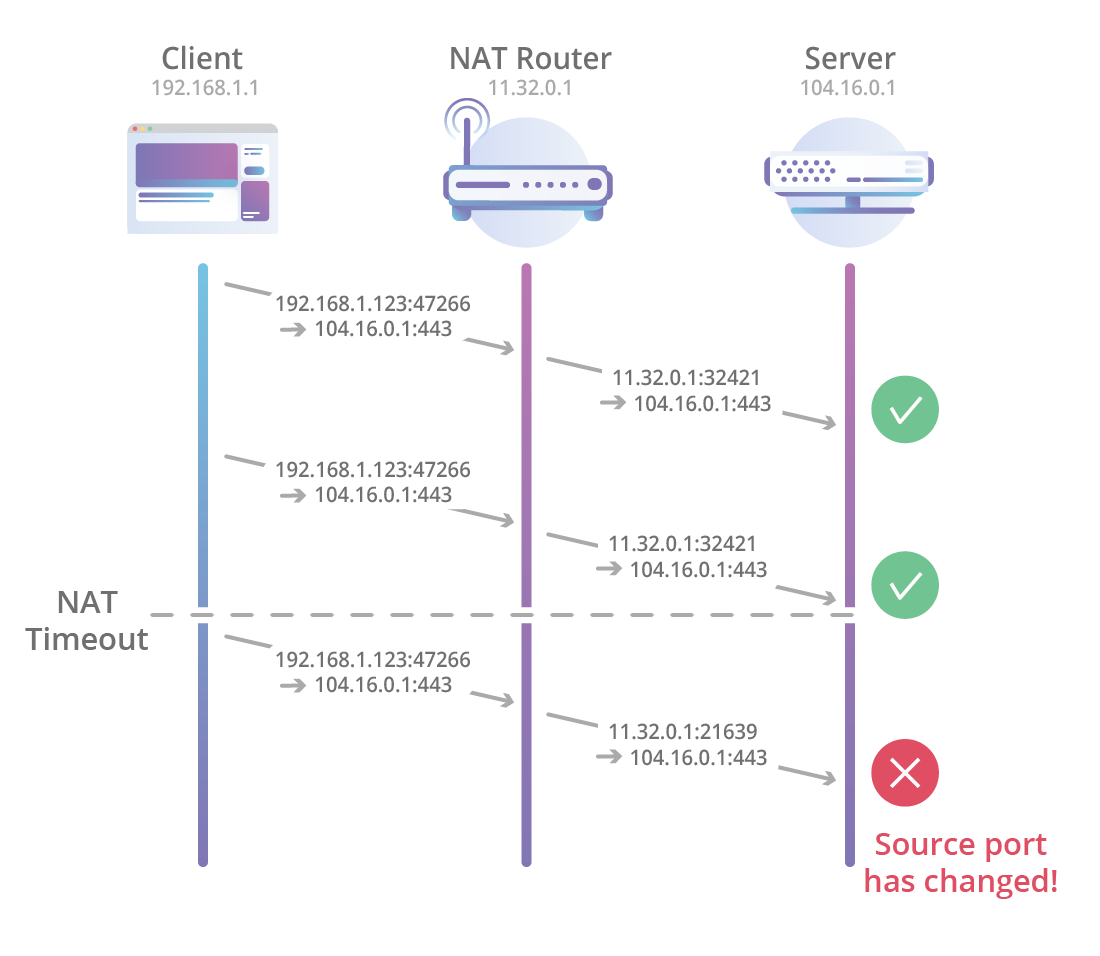

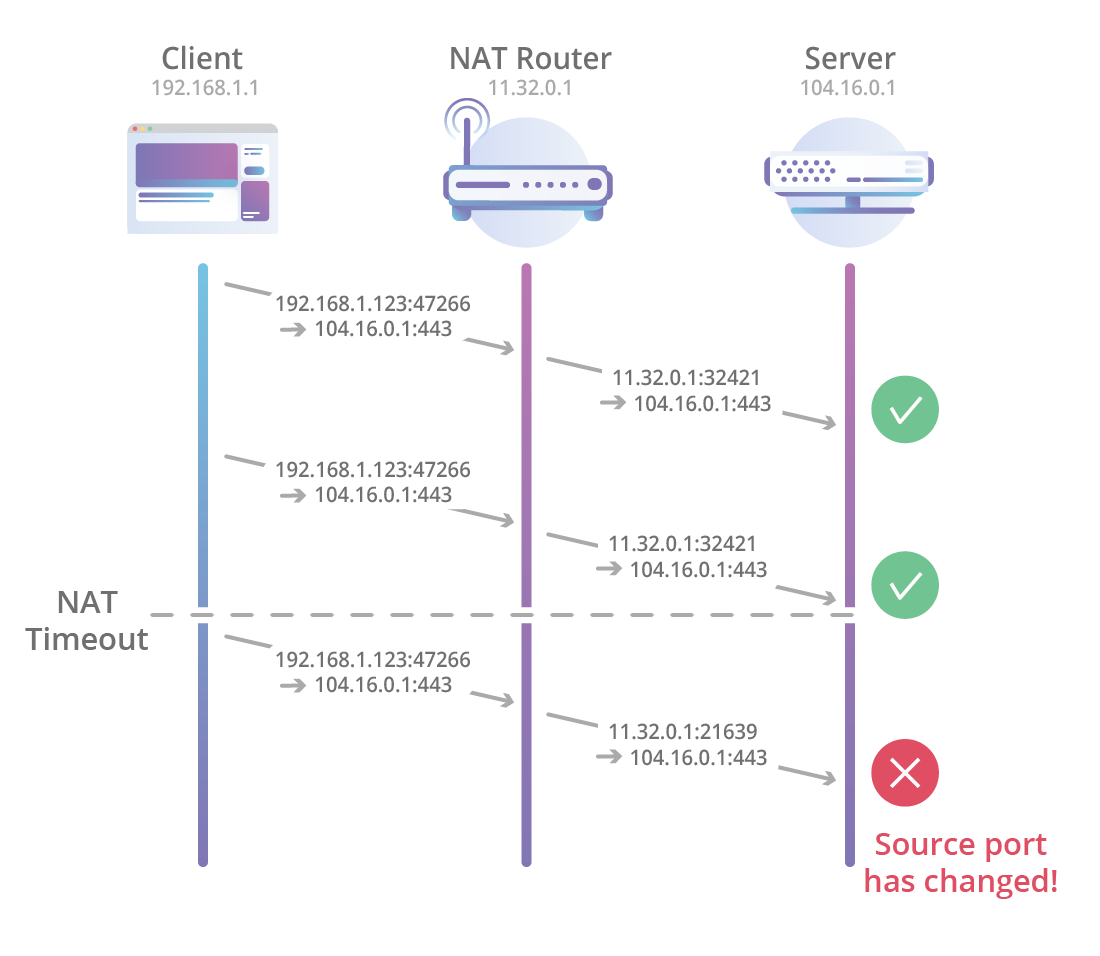

One NAT to rule them all

Typically, NAT routers handle TCP connections using a 4-tuple (source IP address and port, destination IP address and port) and by tracking the TCP SYN, ACK, and FIN packets that cross the network, so they can tell when a new connection has been established and when it has ended. This allows them to manage NAT bindings (mappings between internal and external IP addresses and ports) precisely.

With QUIC, this is not yet possible. Today's NAT routers are not yet QUIC-aware, so they usually fall back to default, less precise UDP handling, which means timeouts of arbitrary (sometimes short) duration that can affect long-lived connections.
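The asymmetry can be sketched with a toy NAT table in Python. TCP bindings are removed as soon as a FIN or RST is seen (a simplification: real NATs wait for the full close), whereas UDP bindings, and therefore QUIC connections, can only be expired by an idle timer. The 30-second timeout and the external port numbers are hypothetical values chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

UDP_IDLE_TIMEOUT_S = 30.0  # hypothetical; real NATs use arbitrary, sometimes short, values


@dataclass
class Binding:
    external_port: int
    last_seen: float


class ToyNat:
    """Toy NAT keyed by (proto, src_ip, src_port, dst_ip, dst_port)."""

    def __init__(self, first_external_port: int = 40000) -> None:
        self.bindings: dict[tuple, Binding] = {}
        self.next_port = first_external_port

    def outbound(self, key: tuple, now: float, tcp_flags: frozenset = frozenset()) -> int:
        """Translate one outbound packet and return the external source port used."""
        self._expire_idle_udp(now)
        binding = self.bindings.get(key)
        if binding is None:
            binding = Binding(self.next_port, now)
            self.bindings[key] = binding
            self.next_port += 1
        binding.last_seen = now
        if key[0] == "tcp" and tcp_flags & {"FIN", "RST"}:
            # TCP teardown is visible on the wire, so the binding can be dropped precisely.
            del self.bindings[key]
        return binding.external_port

    def _expire_idle_udp(self, now: float) -> None:
        # UDP carries no visible connection state: the NAT can only guess with a timer.
        idle = [k for k, b in self.bindings.items()
                if k[0] == "udp" and now - b.last_seen > UDP_IDLE_TIMEOUT_S]
        for k in idle:
            del self.bindings[k]


if __name__ == "__main__":
    nat = ToyNat()
    quic = ("udp", "192.168.1.10", 5000, "203.0.113.7", 443)
    print(nat.outbound(quic, now=0.0))    # new binding
    print(nat.outbound(quic, now=10.0))   # same binding, still within the timeout
    print(nat.outbound(quic, now=100.0))  # idle too long: a new external port
```

When a QUIC connection stays idle longer than the timeout, its binding silently disappears and the next outbound packet leaves through a new external port.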

When a NAT rebinding occurs (for example, after a timeout), the endpoint outside the NAT starts receiving packets from a different source address or port, which makes it impossible to keep track of the connection using only the 4-tuple.

And it's not just NAT! One of QUIC's features, called connection migration, allows endpoints to move connections to other IP addresses/paths at their discretion. For example, a mobile client can move a QUIC connection from a mobile data network to an already known Wi-Fi network (when the user walks into their favorite coffee shop, and so on).

QUIC tries to solve this problem with the concept of a connection ID: an opaque piece of data of variable length that is carried in QUIC packets and identifies the connection. Endpoints can use this ID to track their connections without relying on the 4-tuple. In practice, there can be several IDs pointing to the same connection, for example so that different paths cannot be linked to each other when a connection migrates, since the whole process is controlled only by the endpoints, not by middleboxes.
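Here is a minimal Python sketch of how an endpoint might use connection IDs. The server looks connections up by the destination connection ID carried in each packet instead of by the 4-tuple, and it hands out several random IDs per connection so that a migrating client can switch to a fresh one. The class and method names are hypothetical, and real QUIC also validates a new path before trusting it, which this sketch omits.

```python
from __future__ import annotations

import os
from dataclasses import dataclass


@dataclass
class Connection:
    name: str
    peer_addr: tuple[str, int]
    packets: int = 0


class Server:
    """Toy server that finds connections by connection ID, not by 4-tuple."""

    def __init__(self) -> None:
        self.by_cid: dict[bytes, Connection] = {}

    def accept(self, name: str, peer_addr: tuple[str, int], n_ids: int = 2) -> list[bytes]:
        conn = Connection(name, peer_addr)
        # Several unrelated IDs map to one connection, so a migrating client can
        # switch IDs and observers cannot link its old and new paths.
        cids = [os.urandom(8) for _ in range(n_ids)]
        for cid in cids:
            self.by_cid[cid] = conn
        return cids

    def on_datagram(self, dcid: bytes, src_addr: tuple[str, int]) -> str | None:
        conn = self.by_cid.get(dcid)
        if conn is None:
            return None  # unknown ID: not one of our connections
        conn.peer_addr = src_addr  # follow the new path (real QUIC validates it first)
        conn.packets += 1
        return conn.name


if __name__ == "__main__":
    server = Server()
    cid_a, cid_b = server.accept("conn-1", ("198.51.100.1", 5000))
    print(server.on_datagram(cid_a, ("198.51.100.1", 5000)))   # original path
    print(server.on_datagram(cid_a, ("198.51.100.1", 61234)))  # after a NAT rebinding
    print(server.on_datagram(cid_b, ("203.0.113.99", 4433)))   # migrated, with a fresh ID
```

Packets arriving from a rebound NAT port, or from a completely different address after migration, still resolve to the same connection as long as they carry one of its IDs.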

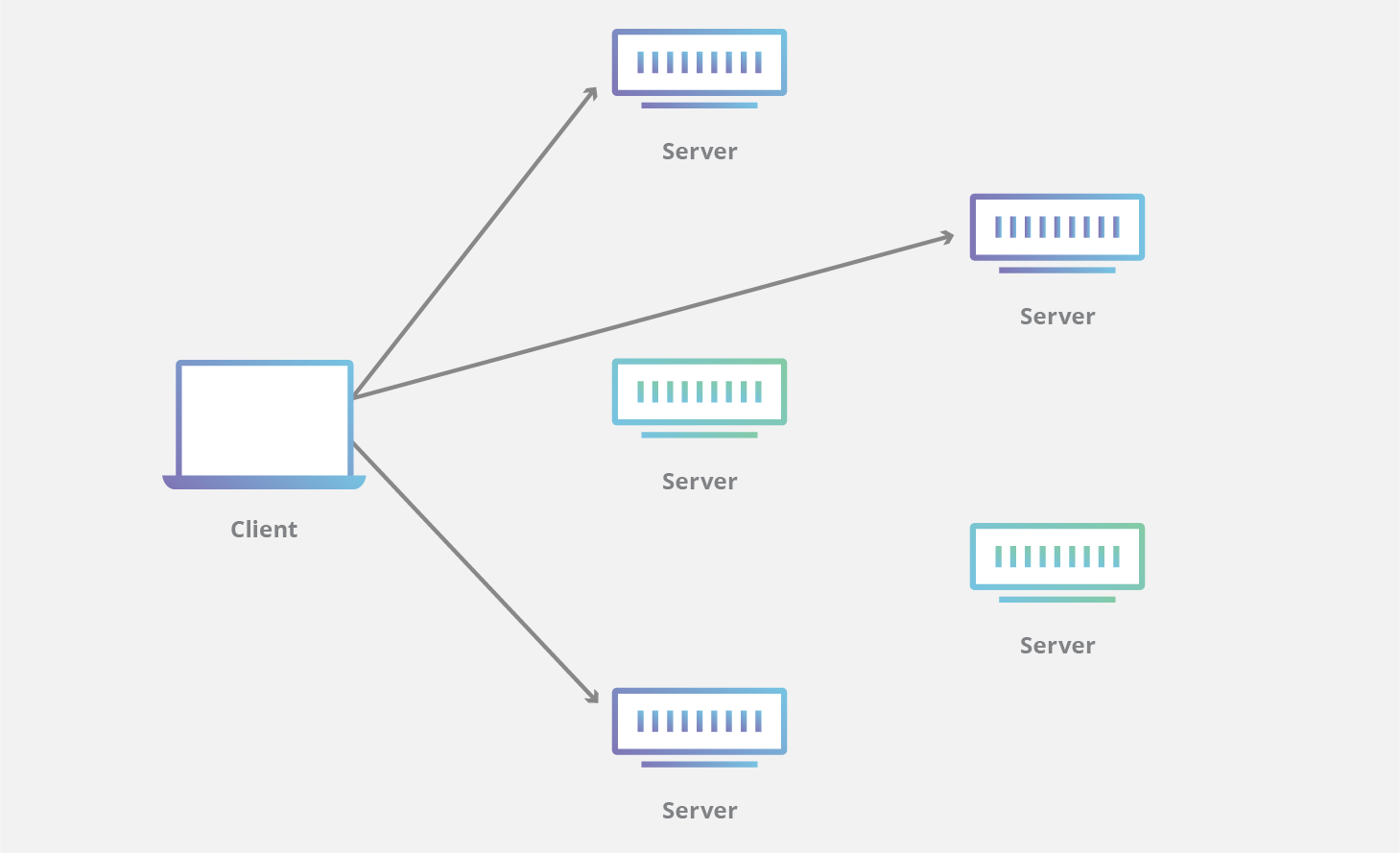

However, this can be a problem for network operators that use anycast and ECMP routing, where a single destination IP address can potentially identify hundreds or even thousands of servers. Since the edge routers in these networks do not yet know how to handle QUIC traffic, UDP packets that belong to the same QUIC connection but carry different 4-tuples may be sent to different servers, which breaks the connection.

To avoid this, operators may need to deploy a smarter layer 4 load balancer. This can be done in software without touching the edge routers themselves (see, for example, Facebook's Katran project).
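The difference between tuple-based and connection-ID-based routing can be sketched in Python. `pick_by_tuple` models ECMP-style hashing of the 4-tuple, so a NAT rebinding that changes the source port may land the same connection on another server. `pick_by_cid` models a QUIC-aware layer 4 balancer that routes on a server identifier embedded in the connection ID. The one-byte server-ID encoding is a hypothetical scheme invented for this illustration; it is not taken from Katran or any other real load balancer.

```python
import hashlib
import os

SERVERS = ["server-0", "server-1", "server-2", "server-3"]


def pick_by_tuple(src_ip: str, src_port: int, dst_ip: str, dst_port: int) -> str:
    """ECMP-style choice: hash the 4-tuple, so a new source port may mean a new server."""
    key = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return SERVERS[int.from_bytes(digest[:4], "big") % len(SERVERS)]


def issue_cid(server_index: int) -> bytes:
    """HYPOTHETICAL encoding: byte 0 names the issuing server, the rest is random."""
    return bytes([server_index]) + os.urandom(7)


def pick_by_cid(dcid: bytes) -> str:
    """QUIC-aware choice: route on the server ID embedded in the connection ID."""
    return SERVERS[dcid[0] % len(SERVERS)]


if __name__ == "__main__":
    cid = issue_cid(2)
    for port in (5000, 61234):  # the same client before and after a NAT rebinding
        print(port, pick_by_tuple("198.51.100.1", port, "192.0.2.10", 443), pick_by_cid(cid))
```

Whatever the tuple hash decides after a rebinding, `pick_by_cid` keeps sending the connection to the server that issued its ID.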

QPACK

Another useful feature of HTTP/2 was header compression (HPACK), which lets endpoints reduce the amount of data they transfer by removing redundancy from requests and responses.

In particular, among other techniques, HPACK uses dynamic tables of headers that were sent/received in previous HTTP requests/responses, so that endpoints can refer to previously seen headers in new requests/responses instead of sending them again.

The HPACK tables must be kept in sync between the encoder (the side that sends the request/response) and the decoder (the receiving side); otherwise the decoder simply cannot decode what it receives.

With HTTP/2 over TCP, this synchronization is transparent because the transport layer (TCP) delivers requests/responses in the same order in which they were sent. That is, instructions for updating the tables can simply be sent to the decoder as part of a request/response. With QUIC, however, things are much more complicated.

QUIC can deliver multiple HTTP requests/responses over different streams independently, which means that while QUIC guarantees in-order delivery within a single stream, there is no such guarantee across multiple streams.

For example, if a client sends HTTP request A on QUIC stream A and request B on stream B, packet reordering or loss in the network may cause the server to receive request B before request A. If request B was encoded with a reference to a header from request A, the server simply cannot decode request B, since it has not yet seen request A.
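A deliberately simplified HPACK-style table shows this failure mode. Here the encoder inserts each new header into an append-only dynamic table and refers to repeats by index; real HPACK and QPACK also use relative indexing, size limits, and eviction, all omitted. The header values are placeholders.

```python
from __future__ import annotations


class Encoder:
    """Simplified HPACK-style encoder with an append-only dynamic table."""

    def __init__(self) -> None:
        self.table: list[tuple[str, str]] = []

    def encode(self, headers: list[tuple[str, str]]) -> list[tuple]:
        block = []
        for h in headers:
            if h in self.table:
                block.append(("index", self.table.index(h)))  # refer back to an earlier request
            else:
                self.table.append(h)
                block.append(("insert", h))  # send in full and add to the table
        return block


class Decoder:
    """Mirror of Encoder: it must see inserts in the same order to stay in sync."""

    def __init__(self) -> None:
        self.table: list[tuple[str, str]] = []

    def decode(self, block: list[tuple]) -> list[tuple[str, str]]:
        headers = []
        for kind, value in block:
            if kind == "insert":
                self.table.append(value)
                headers.append(value)
            elif value < len(self.table):
                headers.append(self.table[value])
            else:
                raise LookupError(f"entry {value} is not in the dynamic table yet")
        return headers


if __name__ == "__main__":
    enc = Encoder()
    request_a = enc.encode([(":authority", "example.com"), ("user-agent", "demo")])
    request_b = enc.encode([(":authority", "example.com"), (":path", "/style.css")])

    in_order = Decoder()
    in_order.decode(request_a)
    print(in_order.decode(request_b))  # B's reference to entry 0 resolves

    reordered = Decoder()
    try:
        reordered.decode(request_b)  # B arrives first on its own stream
    except LookupError as err:
        print("cannot decode B:", err)
```

Decoded in order, request B resolves its reference to entry 0; decoded first, it fails because that entry is only created by request A.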

The gQUIC protocol solved this problem simply by sending all HTTP request/response headers (but not bodies) sequentially on a single gQUIC stream. This ensured that all headers arrive in the right order no matter what. It is a very simple scheme that lets existing implementations keep reusing their HTTP/2 code; on the other hand, it increases the chance of head-of-line blocking, which QUIC is meant to reduce. Therefore, the IETF QUIC working group developed a new mapping between HTTP and QUIC (HTTP/QUIC), as well as a new header compression scheme, QPACK.

In the latest drafts of the HTTP/QUIC and QPACK specifications, each HTTP request/response exchange uses its own bidirectional QUIC stream, so there is no head-of-line blocking. In addition, to support QPACK, each peer creates two additional unidirectional QUIC streams: one to send table updates and the other to acknowledge updates received from the other side. This way, a QPACK encoder can reference an entry in the dynamic table only after the decoder has acknowledged receiving it.
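The acknowledgment rule can be sketched as an encoder that puts new entries on its encoder stream but only references entries the decoder has confirmed; anything unconfirmed is sent as a literal, so no request stream ever waits on the table. This follows the strict rule described above; the published QPACK specification (RFC 9204) additionally lets an encoder risk a limited number of blocked streams, which this sketch does not model. The class and method names are hypothetical.

```python
from __future__ import annotations


class QpackStyleEncoder:
    """Hypothetical encoder that references only decoder-acknowledged entries."""

    def __init__(self) -> None:
        self.table: list[tuple[str, str]] = []  # entries announced on the encoder stream
        self.encoder_stream: list[tuple] = []   # table-update instructions, sent in order
        self.acknowledged = 0                   # entries the decoder has confirmed

    def encode(self, headers: list[tuple[str, str]]) -> list[tuple]:
        block = []
        for h in headers:
            if h in self.table[: self.acknowledged]:
                block.append(("index", self.table.index(h)))
                continue
            if h not in self.table:
                self.table.append(h)
                self.encoder_stream.append(("insert", h))
            # Not confirmed yet: send it literally so this request stream never blocks.
            block.append(("literal", h))
        return block

    def on_decoder_ack(self, count: int) -> None:
        """The decoder stream reports that `count` inserts have been received."""
        self.acknowledged = max(self.acknowledged, count)


if __name__ == "__main__":
    enc = QpackStyleEncoder()
    authority = (":authority", "example.com")
    print(enc.encode([authority]))  # literal: the entry was only just inserted
    enc.on_decoder_ack(1)
    print(enc.encode([authority]))  # now a compact reference to entry 0
```

The cost of this safety is that the first few requests after an insert still carry the full header; the benefit is that reordering between streams can never leave a request undecodable.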

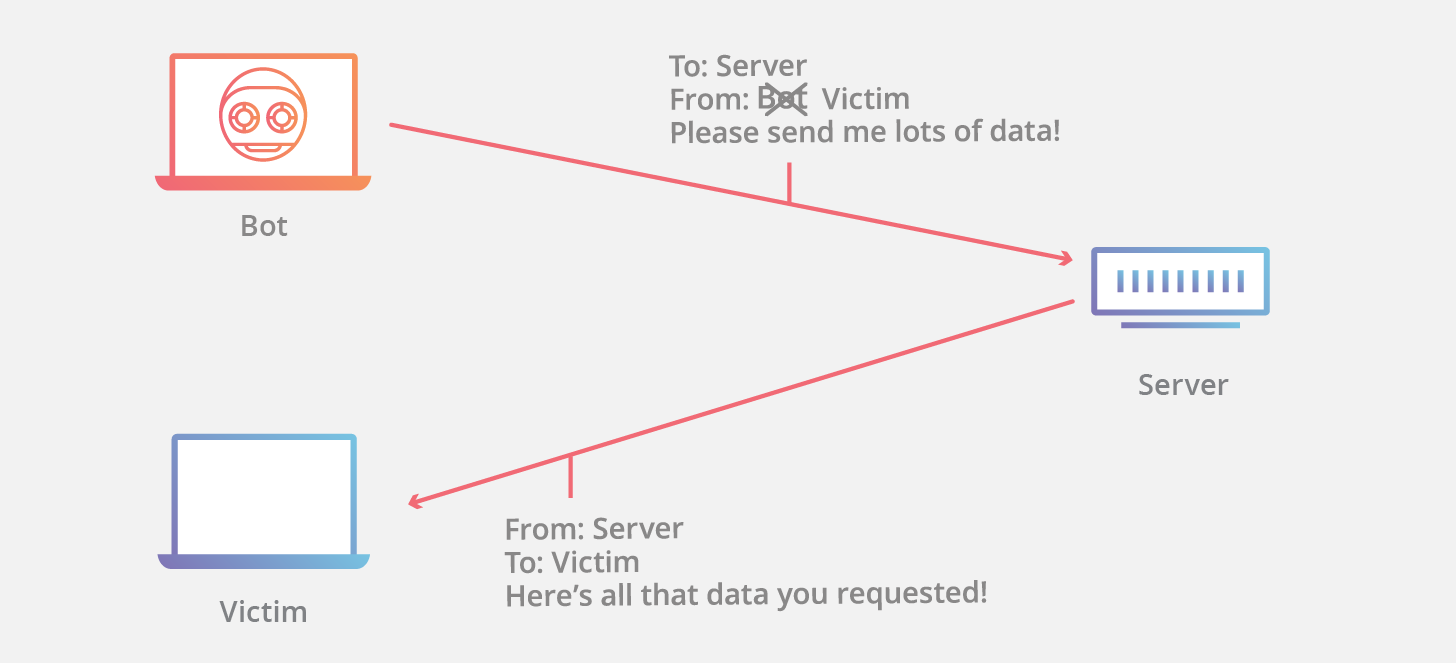

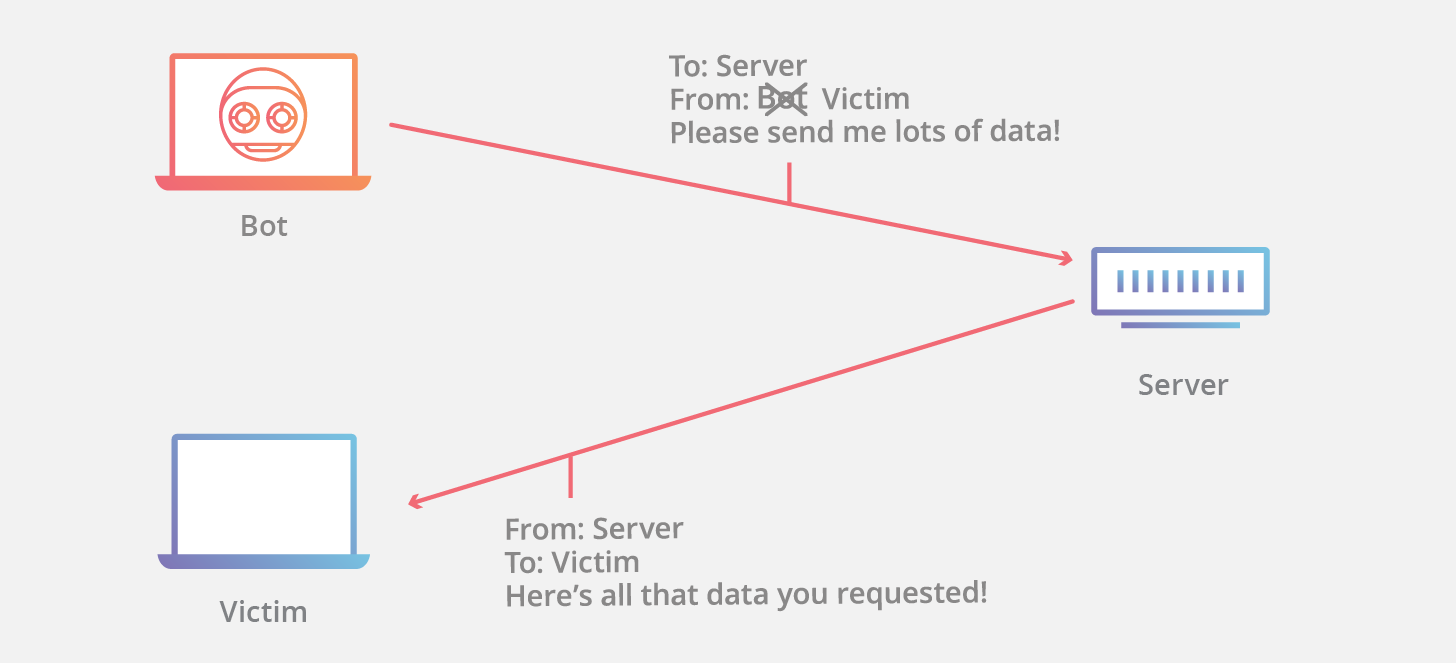

Reflection attack mitigation

A common problem with UDP-based protocols is their susceptibility to reflection attacks, in which an attacker tricks a server into sending a large amount of data to a victim. The attacker spoofs the source IP address of its packets so that the server believes the request came from the victim's address.

This type of attack can be very effective when the server's response is much larger than the request. This is called amplification.

TCP is usually not used for such attacks because the packets in its initial handshake (SYN, SYN+ACK, ...) are the same length, so they offer no potential for amplification.

The QUIC handshake, on the other hand, is very asymmetric: just as in TLS, the QUIC server starts by sending its own certificate chain, which can be quite large, while the client only has to send a few bytes (the TLS ClientHello message). For this reason, the client's initial QUIC packet must be padded to a certain minimum length, even if its actual contents are much smaller. However, this measure is still not very effective, since a typical server response spans several packets and can therefore still be larger than the padded client packet.
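The effect of padding can be put into numbers with a short Python sketch. The 1200-byte floor is the minimum that RFC 9000 specifies for datagrams carrying the client's Initial packets; the 300-byte ClientHello and the 4,000-byte server response are hypothetical sizes chosen only for illustration.

```python
def amplification_factor(request_bytes: int, response_bytes: int) -> float:
    """How many bytes reach the spoofed victim per byte the attacker sends."""
    return response_bytes / request_bytes


def padded(client_initial_bytes: int, minimum: int = 1200) -> int:
    """Pad the client's first packet up to the minimum datagram size."""
    return max(client_initial_bytes, minimum)


if __name__ == "__main__":
    client_hello = 300     # hypothetical unpadded ClientHello size, in bytes
    server_flight = 4_000  # hypothetical server response spread over several packets
    print(round(amplification_factor(client_hello, server_flight), 1))
    print(round(amplification_factor(padded(client_hello), server_flight), 1))
```

With these numbers the unpadded exchange amplifies traffic roughly 13 times and the padded one roughly 3.3 times: better, but still above 1, which is why padding alone is not enough.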

The QUIC protocol also defines an explicit source-address validation mechanism: instead of sending a large response, the server sends only a Retry packet with a unique token, which the client then echoes back to the server in a new packet. This gives the server greater confidence that the client's IP address is not spoofed, so it can complete the handshake. The downside of this solution is that it makes the handshake longer: two round trips are required instead of one.
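A stateless Retry token can be sketched in Python as a timestamp plus an HMAC over the client's IP address and that timestamp, so the server needs no per-client state until the client proves it can receive packets at that address. QUIC leaves the token format to the server; the layout, the 10-second lifetime, and the function names here are hypothetical.

```python
from __future__ import annotations

import hashlib
import hmac
import os
import time

SECRET = os.urandom(32)  # known only to the server (or shared across its cluster)
TOKEN_LIFETIME_S = 10    # hypothetical validity window


def _mac(client_ip: str, ts: bytes) -> bytes:
    return hmac.new(SECRET, client_ip.encode() + b"|" + ts, hashlib.sha256).digest()


def make_retry_token(client_ip: str, now: float | None = None) -> bytes:
    """Token sent in the Retry packet: 8-byte issue time followed by a 32-byte MAC."""
    ts = int(time.time() if now is None else now).to_bytes(8, "big")
    return ts + _mac(client_ip, ts)


def validate_retry_token(token: bytes, client_ip: str, now: float | None = None) -> bool:
    """Accept the echoed token only from the same address and within its lifetime."""
    if len(token) != 40:
        return False
    ts, mac = token[:8], token[8:]
    if not hmac.compare_digest(mac, _mac(client_ip, ts)):
        return False  # issued to another address, or forged
    age = (time.time() if now is None else now) - int.from_bytes(ts, "big")
    return 0 <= age <= TOKEN_LIFETIME_S


if __name__ == "__main__":
    token = make_retry_token("198.51.100.7")
    print(validate_retry_token(token, "198.51.100.7"))  # genuine client echoes it back
    print(validate_retry_token(token, "203.0.113.1"))   # spoofed source address
```

A server would call `make_retry_token` when an Initial packet arrives without a token and `validate_retry_token` on the client's second Initial; only after that check succeeds would it send its large, certificate-bearing response.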

An alternative solution is to shrink the server's response to a size at which a reflection attack becomes less effective, for example by using ECDSA certificates (which are usually much smaller than RSA ones). We have also experimented with a mechanism for compressing TLS certificates using off-the-shelf compression algorithms such as zlib and brotli; this feature first appeared in gQUIC but is not currently supported in TLS.
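The idea behind certificate compression can be sketched with Python's built-in `zlib` (brotli is not in the standard library). The chain is framed with 3-byte length prefixes and compressed as one blob, so that data repeated across certificates, such as issuer names, is shared. The framing is hypothetical, and the byte strings in the example are placeholders rather than real DER certificates, so the resulting ratio says nothing about real-world savings.

```python
from __future__ import annotations

import zlib


def compress_chain(certs: list[bytes]) -> bytes:
    """Length-prefix each certificate, concatenate, and zlib-compress the whole chain."""
    blob = b"".join(len(c).to_bytes(3, "big") + c for c in certs)
    return zlib.compress(blob, 9)


def decompress_chain(data: bytes) -> list[bytes]:
    """Inverse of compress_chain: inflate, then split on the 3-byte length prefixes."""
    blob = zlib.decompress(data)
    certs, i = [], 0
    while i < len(blob):
        n = int.from_bytes(blob[i : i + 3], "big")
        certs.append(blob[i + 3 : i + 3 + n])
        i += 3 + n
    return certs


if __name__ == "__main__":
    # Placeholder bytes, NOT real certificates.
    chain = [b"subject=leaf.example issuer=Example CA " * 20,
             b"subject=Example CA issuer=Example Root " * 20]
    packed = compress_chain(chain)
    assert decompress_chain(packed) == chain
    print(sum(len(c) for c in chain), "->", len(packed), "bytes")
```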

UDP performance

One recurring problem for QUIC is existing hardware and software that cannot handle it. We have already looked at how QUIC tries to cope with network middleboxes such as routers, but another potentially problematic area is the performance of sending and receiving data over UDP between QUIC endpoints. Over the years, a lot of effort has gone into optimizing TCP implementations as much as possible, including built-in offloading capabilities in software (for example, operating systems) and hardware (network interfaces), but none of this applies to UDP.

However, it is only a matter of time before QUIC implementations catch up and benefit from these improvements. Take, for example, recent efforts to implement UDP offloading on Linux, which would allow applications to batch multiple UDP segments and pass them from user space to the kernel networking stack at roughly the cost of a single segment. Another example is zerocopy socket support in Linux, which would let applications avoid the cost of copying user-space memory into kernel space.
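What UDP segmentation offload buys can be modeled in a few lines of Python. The sketch does not call the kernel; it only mimics the split the kernel performs when an application hands it one large buffer plus a segment size (on Linux this is requested via the `UDP_SEGMENT` socket option), and compares the number of send calls with and without batching. The 64-segments-per-call cap is an assumed parameter, not a quoted kernel constant.

```python
from __future__ import annotations

import math


def gso_segments(buffer: bytes, gso_size: int) -> list[bytes]:
    """Mimic the kernel-side split: one buffer becomes datagrams of gso_size bytes,
    with only the last one allowed to be shorter."""
    return [buffer[i : i + gso_size] for i in range(0, len(buffer), gso_size)]


def send_calls(n_datagrams: int, use_gso: bool, max_segments_per_call: int = 64) -> int:
    """Without GSO: one send call per datagram. With GSO: one per batch of segments.
    max_segments_per_call is an ASSUMED cap for illustration."""
    if not use_gso:
        return n_datagrams
    return math.ceil(n_datagrams / max_segments_per_call)


if __name__ == "__main__":
    datagrams = gso_segments(bytes(12_500), 1200)  # 12.5 kB of QUIC packets
    print(len(datagrams), "datagrams:",
          send_calls(len(datagrams), use_gso=False), "calls without GSO,",
          send_calls(len(datagrams), use_gso=True), "with GSO")
```

For 12,500 bytes cut into 1,200-byte segments this gives 11 datagrams: 11 send calls without batching versus 1 with it, and each saved call is a saved user/kernel transition.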

Conclusion

Like HTTP/2 and TLS 1.3, QUIC should bring plenty of new features that will improve the performance and security of both websites and other participants in the Internet infrastructure. The IETF working group plans to release the first version of the QUIC specifications by the end of the year, so it is time to think about how we can get the most out of QUIC.
