Software-defined data center: why a system administrator needs it in practice

The concept of the software-defined data center appeared a long time ago. In practice, however, little of it was actually implemented and working outside of IaaS providers. In most cases what people had was ordinary virtualization. Now you can go further with the VMware stack, or you can build everything on OpenStack; here you have to think it through and weigh a lot of factors.

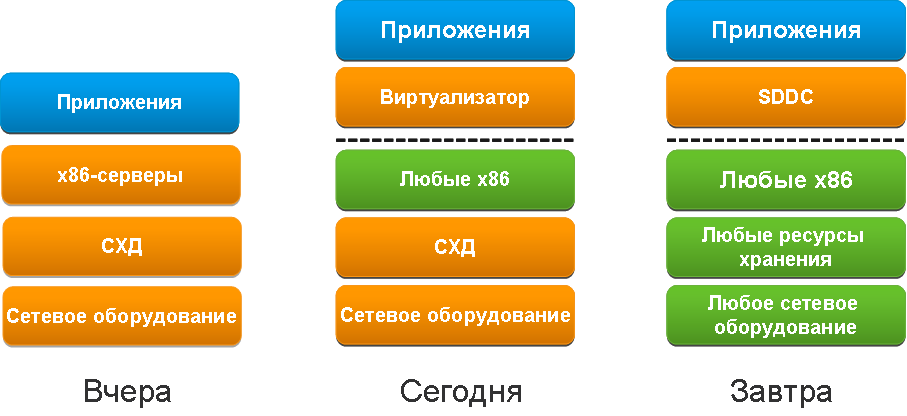

Over the past year we have seen a very significant technological leap in bringing SDDC into everyday sysadmin practice. Network virtualization and storage virtualization are now available as proven, ordinary tools, and the business can get real benefits from them.

Why is this needed? Simple: to automate routine work and break the dependence on physical hardware; to know exactly how much of each resource is consumed; and to know, down to the penny, where and how the money in the IT budget is spent. The last two reasons lie slightly beyond the usual admin goals, but they are very useful for CIOs and for sysadmins of medium and large businesses who want full mutual understanding with the commercial department. And the payoff is already available.

Let's start with the history

In the 60s, IBM took the right path by betting that mainframes would rule, and virtualization as such emerged. In the 80s, the arrival of the IBM PC standard created a situation where you could buy a dozen machines without investing heavily in infrastructure. By 1990 a massive transition from centralized to decentralized architecture was under way. Only in the late 90s did more or less successful virtualization appear on that same x86 architecture. In the 2000s, large companies began major commercial deployments of virtualization. About five years later, new technologies began to appear: VDI, application virtualization, and SDN. In 2010, serious support for the SDN approach began to appear in data center equipment. Now we have SDN and SDS: software-defined networks and software-defined storage.

Today, the main thing is the consolidation of computing resources, where all the hardware is seen through the virtualization platform as a common pool of capacity for computing, storing, and transmitting data. Next in priority come disaster-tolerant solutions, from ordinary standby data centers to “stretched” data centers. Our experience here is this: in most cases large retailers need backup solutions; state-owned enterprises need to store a lot of data, so virtual storage systems for large volumes are their priority; banks need everything that optimizes their work and allows for cost accounting.

I should add that globally a new round of SDN development is beginning. Some foreign operators have started to treat SDN and NFV as a real replacement for current hardware solutions and have grown in-house SDDC teams, but for our operators this is still ahead.

I already wrote about SDS in the last post; here I’ll say a few conceptual words about SDN. I think a more detailed article on this part will follow a little later.

The essence of the SDN approach

The main feature of the stack is abstraction from the hardware and, as a result, abstraction of all service policies and features, which avoids compatibility and scaling problems. Horizontal scaling is easier than ever.

Below is the familiar time-tested approach to building a network.

The next picture shows the SDN approach. In essence, we simply take the control module, or control plane, out of all the network devices and put it in one place, with redundancy of course. This turns the entire network infrastructure into one “large switch”, in which physical switches from various vendors, physical servers with various hypervisors and the virtual switches on them, and so on act as interface modules.

That's basically the whole approach.
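To make the idea more tangible, here is a minimal sketch of a centralized control plane. The `Switch` and `Controller` classes, the port names, and the addresses are all hypothetical and do not correspond to any real SDN product; the only point is that the devices keep nothing but a forwarding table, while every decision is made and pushed from one place.

```python
from dataclasses import dataclass, field


@dataclass
class Switch:
    """A forwarding element: it only applies rules pushed to it (data plane)."""
    name: str
    flow_table: dict[str, str] = field(default_factory=dict)  # destination -> output port

    def forward(self, destination: str) -> str:
        # No local decision logic: destinations without a rule are dropped.
        return self.flow_table.get(destination, "drop")


class Controller:
    """Hypothetical central control plane that programs every switch."""

    def __init__(self) -> None:
        self.switches: dict[str, Switch] = {}

    def register(self, switch: Switch) -> None:
        self.switches[switch.name] = switch

    def install_route(self, destination: str, ports: dict[str, str]) -> None:
        # One policy decision, pushed to every affected device from one place.
        for switch_name, port in ports.items():
            self.switches[switch_name].flow_table[destination] = port


controller = Controller()
edge = Switch("edge-1")        # could be a physical switch from any vendor
vswitch = Switch("vswitch-1")  # or a virtual switch on a hypervisor
controller.register(edge)
controller.register(vswitch)

controller.install_route("10.0.0.5", {"edge-1": "port-3", "vswitch-1": "port-1"})
print(edge.forward("10.0.0.5"))     # port-3
print(vswitch.forward("10.0.0.9"))  # drop
```

In a real deployment the controller itself would of course be redundant, as noted above; the sketch leaves that out.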

Next, why is SDDC needed? You have probably heard all of this before from various angles; in that case, take it that we simply agree, and that we have heard the same from our customers.

Routine Automation

Everyone solves this differently now. Some build management systems, some write scripts. But I think any admin would like not to have to create a virtual machine 100 times a day, carve out LUNs, or install an OS whenever one is urgently needed.

Suppose the IT department works on requests from service consumers. Today you need to create one server, which is simple and fast. Tomorrow it is 20. Each of them needs addresses assigned, networks created, switching and routing configured, and so on, plus storage allocated. It is good that we have virtual machines now and often only need to click a few buttons. But even that is not always efficient. We know this, for example, from our testing laboratory, which usually has about 300-500 virtual machines running simultaneously. And to consumers, slow provisioning often looks like downtime and service failure, so there are constant complaints.

According to VMware, automation accounts for about 40% of the reasons customers buy its proprietary SDDC stack.
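As an illustration of the "tomorrow it is 20" case, here is a small sketch that plans a batch of servers with names, addresses, and storage in one go. The `VmRequest` type, the `plan_batch` function, the subnet, and the assumption that the first usable address is reserved for the gateway are all made up for the example; a real cloud platform would hand such a plan to its own provisioning API.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class VmRequest:
    name: str
    address: str
    storage_gb: int


def plan_batch(prefix: str, count: int, subnet: str, storage_gb: int) -> list[VmRequest]:
    """Build provisioning requests for `count` servers, one address each."""
    hosts = iter(ipaddress.ip_network(subnet).hosts())
    next(hosts, None)  # assumption: the first usable address belongs to the gateway
    plan = []
    for index in range(1, count + 1):
        address = next(hosts, None)
        if address is None:
            raise ValueError(f"{subnet} has no free address for server {index}")
        plan.append(VmRequest(f"{prefix}-{index:02d}", str(address), storage_gb))
    return plan


batch = plan_batch("app", 20, "10.20.30.0/24", storage_gb=40)
print(batch[0])   # VmRequest(name='app-01', address='10.20.30.2', storage_gb=40)
print(batch[-1])  # VmRequest(name='app-20', address='10.20.30.21', storage_gb=40)
```

The value is not in the twenty lines of code but in the fact that the request becomes data: it can be reviewed, repeated, and billed.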

Resource management

This means being able, at any time, to build a report on what is running, who owns it, how intensively each resource is used, and so on. It covers hardware and software inventory and access control to resources. Imagine how many “dead” VMs are already scattered around the corners of your infrastructure. How do you find them and work out whether they are still needed?

Let’s say the director comes to you and asks: “Why did you spend 3 million rubles on hardware and software this year?” What do you do in this situation? How do you show management how many resources there are and who uses them, by department or by system? And how much is free? How do you answer questions like “How much does it cost IT to open a new branch?” or “How does outsourcing this service compare with running it in-house?”

Each year the IT department has to get its development budget approved. You understand that this 1-million-dollar storage system is badly needed and there is no way to do without it. How do you show that to the business? You have to argue in terms of consumption, and consumption must not only be measured but also broken down by specific departments and services.
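A rough sketch of what "finding dead VMs" might look like once the inventory is available as data. The `VmRecord` fields, the 90-day idle period, and the 2% CPU threshold are assumptions chosen for illustration, not values from any particular product.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class VmRecord:
    name: str
    owner: Optional[str]      # None means nobody claims the machine
    avg_cpu_percent: float    # average load over the reporting period
    last_login: date


def find_dead_candidates(inventory, today, idle_days=90, cpu_threshold=2.0):
    """Return VMs with no owner, or that are both idle and long untouched."""
    cutoff = today - timedelta(days=idle_days)
    return [
        vm for vm in inventory
        if vm.owner is None
        or (vm.avg_cpu_percent < cpu_threshold and vm.last_login < cutoff)
    ]


inventory = [
    VmRecord("crm-prod", "sales", 35.0, date(2016, 3, 20)),
    VmRecord("test-old", "qa", 0.4, date(2015, 9, 1)),
    VmRecord("tmp-17", None, 12.0, date(2016, 3, 1)),
]
candidates = find_dead_candidates(inventory, today=date(2016, 4, 1))
print([vm.name for vm in candidates])  # ['test-old', 'tmp-17']
```

The result is a list of candidates only: whether a machine is really unneeded is still a question for its owner.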

Cost management

This is probably the most important and interesting thing that SDDC can now offer business users. It lets management know how much of which resources goes into delivering a particular IT function, be it ordinary email, CRM, or an ERP system. In addition, you can integrate the cloud directly with these systems so that, for example, the cost of storage is distributed between departments according to use. What exactly does the money go to, ERP or CRM, and in what shares? This understanding also matters when you need to buy some piece of infrastructure that, at first glance, does not belong to anyone in particular.

The main objective is to turn the IT infrastructure into a business unit that provides services to the rest of the company. Imagine that you provide resources and are paid “money” for them, and that you then spend this “income” on upgrading and maintaining IT, which raises fewer questions from management, because at any moment you can show who actually needs this IT and how much of it. Commercial departments understand this model best: in fact, this is how they treat all departments, evaluating them in terms of profit generation.

Consider how hard it is today to find out how many resources (and therefore how much money) were spent on testing and implementing a specific function. How much does it cost to run each business system, taking into account all the resources it uses?
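Distributing a cost "as it is used" comes down to simple proportional arithmetic. Below is a minimal sketch; the `allocate_cost` function, the department names, and the usage figures are hypothetical, and putting the rounding remainder on the largest consumer is just one possible convention.

```python
from decimal import Decimal, ROUND_HALF_UP


def allocate_cost(total_cost: Decimal, usage_gb: dict[str, int]) -> dict[str, Decimal]:
    """Split a storage bill between consumers in proportion to usage."""
    total_usage = sum(usage_gb.values())
    if total_usage == 0:
        raise ValueError("no usage recorded, nothing to allocate against")
    cent = Decimal("0.01")
    shares = {
        consumer: (total_cost * used / total_usage).quantize(cent, rounding=ROUND_HALF_UP)
        for consumer, used in usage_gb.items()
    }
    # Put any rounding remainder on the largest consumer so the shares
    # always add up exactly to the original bill.
    largest = max(usage_gb, key=usage_gb.get)
    shares[largest] += total_cost - sum(shares.values())
    return shares


bill = allocate_cost(Decimal("3000000.00"), {"ERP": 6000, "CRM": 3000, "mail": 1000})
for system, amount in bill.items():
    print(system, amount)
```

With these illustrative numbers ERP carries 60% of the bill, CRM 30%, and mail 10%, which is exactly the kind of breakdown management tends to ask for.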

Full Cloud Ecosystem

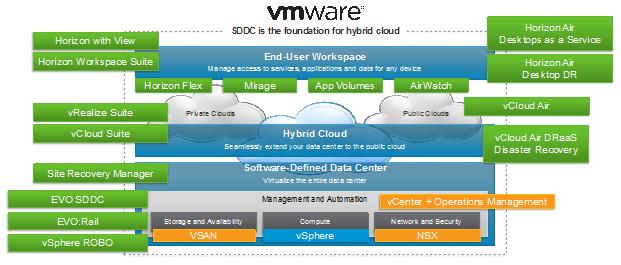

As should be clear by now, the cloud is not only, and not so much, the virtualization of computing (which is the simplest part of IaaS) but also a software-defined network and software-defined storage. You can read about SDS in a detailed primer here (https://habrahabr.ru/company/croc/blog/272795/). In short, we take all the types of storage on the network, including servers with disks, “classic” centralized storage systems, and other kinds of storage hardware, and combine them into one virtual storage that can balance data properly, place “cold” and “hot” databases more sensibly, and squeeze everything possible out of the hardware, up to using server RAM as a storage cache. This is the essence and the strategy: it seems that pretty soon only ultra-homogeneous, single-vendor data centers will be able to do without such solutions.
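The "hot" versus "cold" placement can be pictured with a deliberately simplified sketch. The `Volume` type, the tier names, and the read thresholds are assumptions for illustration only; real SDS products make this decision continuously and at a much finer granularity.

```python
from dataclasses import dataclass


@dataclass
class Volume:
    name: str
    reads_per_day: int


def choose_tier(volume: Volume, hot_reads: int = 10_000, warm_reads: int = 100) -> str:
    """Pick a storage tier from access frequency (thresholds are illustrative)."""
    if volume.reads_per_day >= hot_reads:
        return "ram-cache"  # hottest data is served from server RAM
    if volume.reads_per_day >= warm_reads:
        return "ssd"
    return "hdd"            # cold data goes to the cheapest capacity


volumes = [
    Volume("billing-db", 250_000),
    Volume("file-share", 900),
    Volume("archive-2012", 3),
]
placement = {volume.name: choose_tier(volume) for volume in volumes}
print(placement)
```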

SDN is a bit more complicated. Here, the entire network as such is controlled by software. Any node can be redefined at any time.

Open source vs. the proprietary approach

There are 2 ways to build a cloud:

- Proprietary. Suppose significant investments have already been made in a VMware-based platform. You then keep developing the cloud with what the vendor offers or recommends.

- Hybrid. You keep the current infrastructure as it is and build a cloud solution based on OpenStack on top of it. Most often this path starts from an existing, solid virtualization layer (usually VMware or KVM), around which a stack of open source software is built. As a rule, this is the path large players take.

Everyone understands that VMware has gathered a huge number of technologies under its umbrella and confidently continues to develop them. But, as practice shows, open solutions are not far behind. It says a lot that VMware itself has released and is developing its own version of OpenStack, with features aimed specifically at VMware environments, to serve simply as a cloud management system.

In other words, the second path has effectively been legitimized.

At the same time, you are not required to use the VMware version, especially if you plan to move away from VMware altogether and cut costs significantly.

Below, however, are a couple of features that may play a role in the choice.

Proprietary vendors, using VMware as an example

VMware has assembled a set of products under a single brand from which you can build a full-fledged software-defined data center, i.e. SDDC. In addition, VMware has a whole ecosystem of technology partners that extend the capabilities of its software.

- The vSphere virtualization platform is today the de facto standard for building virtual infrastructures in the enterprise segment. With the release of version 6 (a little less than a year ago) it became possible to move running virtual machines even across a continent (the round-trip time must not exceed 100 ms).

- VMware NSX, the network virtualization product, is a perfect fit for organizing a single network space. During installation it now becomes part of the platform almost imperceptibly for the admin.

- For storing virtual machines there is a wide choice. VMware has “native” solutions: Virtual Volumes (https://www.vmware.com/products/vsphere/features/virtual-volumes) and Virtual SAN (https://www.vmware.com/products/virtual-san/).

- For SDS, check out Atlantis, PernixData, and DataCore.

- For the cloud management platform itself there is the vRealize line: the products that handle automation, the self-service portal, and resource billing.

OpenStack

OpenStack is open source; everybody knows that. For a private cloud this can be extremely useful, simply because you are unlikely to ever see two identical cloud implementations: organizations all differ in their internal processes and IT culture.

As a result, a very high degree of customization is possible. It is important to understand that this customization is achieved by rewriting or extending the program code.

The OpenStack components, as you surely know, are listed here (http://openstack.ru/about/components/). From a bird's-eye view, not much different from VMware, is it?

The components are ideologically the same. Of course, the maturity of each of them may vary. But now even telecom operators say: we will use this, and add anything specific later.

At first glance it may seem that, since this is open source, it will be hard to bolt the usual enterprise products onto it. That is not the case.

There are also SDN solutions, for example, OpenDaylight or OpenContrail, which, by the way, is developed with the support of Juniper, one of the main players in the market of “traditional” network equipment.

There is SDS as well: DataCore, for example, can work with OpenStack, pooling all existing storage systems and presenting them to OpenStack in a form it understands.

If, say, you need a good load balancer, you can safely take F5, which has an integration with Neutron. There is also an interesting startup, Avi Networks, which makes SDN, NFV, and load balancers, for OpenStack as well. In general, integration with OpenStack has become good form for enterprise software.

At this point the reader may well ask: this is open source, so why are we talking about paid software again? Because it is best to think of OpenStack as a cloud platform, a unifying layer that automates and connects functions rather than only providing them itself. Automation is its main task.

Summary

There is an old programming principle: “if it works, don't touch it”. Perhaps this is what keeps IT specialists at many enterprises from starting to experiment with open source. But exchange-rate swings are now working against company IT budgets, even though the prices of Western vendors' software have not risen in foreign currency. So even maintaining the infrastructure at its existing level requires more money than before. Simply put, staying fully proprietary has become so expensive that even the commercial departments have noticed.

That is why the share of open source solutions in our customers' infrastructures is growing significantly right now. And that is why I think many will start considering the hybrid path of cloud development.

So the plan of action is roughly as follows:

- Work out whether the company can benefit from SDDC technology, and how. To do this you need to understand, for example, how much you need a private or hybrid cloud. You cannot settle this head-on, and the paradox is that neither the IT department nor the commercial department can do it alone: you need to work together and understand what matters to you. For example, would speeding up the development cycle by 30-40% be significant for a bank? Certainly! And that can generally be achieved with proper test environments and by removing resource allocation bottlenecks. For an industry where IT makes up a large share of development, this matters even more. Faster rollout of infrastructure services into production sounds great, but, again, putting a direct money figure on it can be difficult at the level of the IT department.

- Decide on the architecture. If you already use some piece of a proprietary stack, that is no reason to build everything on it. I will note, though, that the repeatedly mentioned VMware, for example, comes with a whole set of commercial third-party add-ons that turn the system into a “closed space” but in return let you do everything from a single panel. For money. On the other hand, if you have the capacity to deal with OpenStack, be ready to do some hand-finishing, but it will probably cost a lot less. As a rule, the proprietary path is the choice of financial organizations, because of vendor guarantees, or of companies with high staff turnover in the IT department.

- Run the numbers on a small scale. Alas, SDDC components are very hard to implement as a quick hack “for project N”, because they affect the entire architecture.

- Look at these documents. They describe foreign experience that is quite successful and well applicable in Russian conditions. The implementations range from universities to large financial organizations, well-known application software, and so on.

In general, see:

- University - www.mirantis.com/blog/case-study-university-of-hawaii-openstack-private-cloud

- content.mirantis.com/rs/451-RBY-185/images/mirantis-banking-case-study.pdf - a “stretched” solution.

- Cisco Webex as a critical workload - content.mirantis.com/rs/451-RBY-185/images/Mirantis_CiscoWebex-case-study.pdf

- Of course, changing the architecture is not a quick process. It is a long road, but the results can greatly exceed expectations. This type of infrastructure saves a great deal of money and resources, and, most importantly, you can start rebuilding parts of the infrastructure (for example, the least critical ones that still consume resources) right now. To that end, start collecting data from colleagues, Western peers, and professional forums.

- If you are ready and need a rough estimate with a range, I can help with advice and a first approximation: my email is albelyaev@croc.ru. By the way, tentatively in May we will hold our first admin training on DataCore; there are no free places left, but if you are interested, send me a private message or an email and I will let you know about the seminar: as soon as a new group fills up, we will schedule another one. And on April 14 we will hold an extended workshop with an online broadcast on infrastructure optimization using network and storage virtualization, VDI, and so on. The announcement will appear here soon.