Pomp: a metaframework for parsing sites

With asyncio support, inspired by Scrapy.

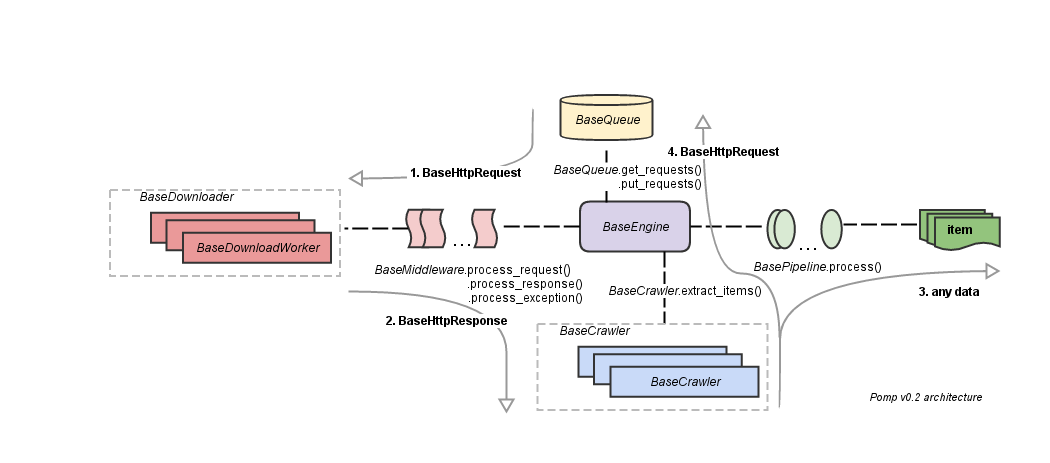

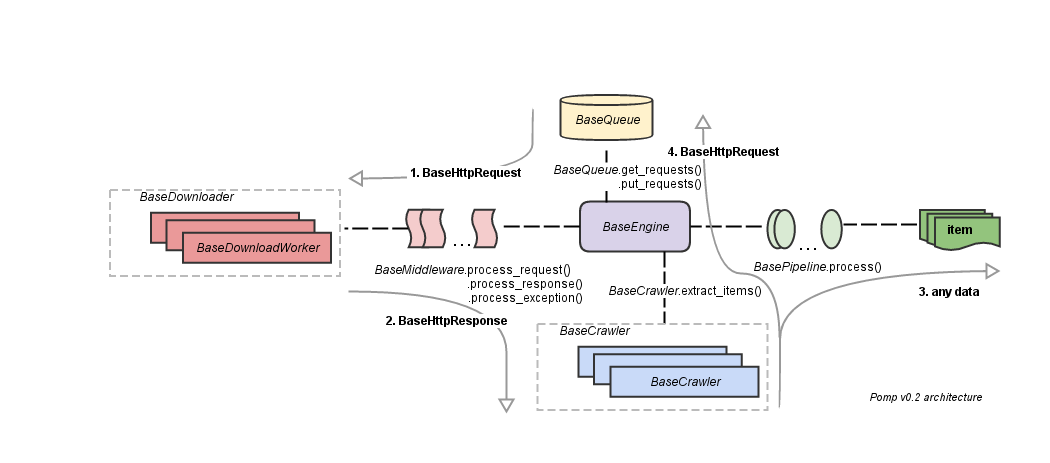

Why another one?

First of all, I needed a data-collection tool for my hobby project, one that would not overwhelm me with its power, complexity and legacy. And yes, who would knowingly start something new on python2.x?

As a result, the idea arose to create a simple framework for the modern python3.x ecosystem that would be as elegant as Scrapy.

Below is an overview of Pomp in FAQ style.

Why do we need frameworks for parsing sites?

Indeed, a lot can be done with a simple combination of requests + lxml. In practice, though, frameworks establish the rules and the necessary abstractions, and take over a lot of the routine work.

Why is Pomp positioned as a "metaframework"?

Out of the box, Pomp does not provide anything that covers the wide range of requirements that come up in website parsing: content parsing, proxies, caching, redirect handling, cookies, authorization, filling out forms, etc.

This is both the weakness and the strength of Pomp. It is positioned as a "framework for frameworks": it gives you everything you need to build your own framework and start churning out web spiders productively.

Pomp gives the developer:

- the necessary abstractions (interfaces) and an architecture similar to Scrapy's;

- no imposed choice of how to work with the network or how to parse the extracted content;

- the ability to work both synchronously and asynchronously;

- concurrent extraction and parsing of content (concurrent.futures);

- no need for a "project", settings or other restrictions.
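As a rough illustration of the concurrent.futures point, here is a stdlib-only sketch that parses several already-downloaded page bodies in a thread pool. The names `PAGES`, `extract_sentences` and `extract_all` are hypothetical. This is not how Pomp wires concurrency internally; it only shows the general pattern.

```python
import re
from concurrent.futures import ThreadPoolExecutor

# Hypothetical pre-downloaded page bodies (bytes), standing in for responses.
PAGES = [
    b"Python is great. Other news here.",
    b"Nothing relevant. The python community grows.",
]

# Word characters and whitespace around the word "python", case-insensitive.
SENTENCE_RE = re.compile(r"[\w\s]*python[\w\s]*", re.I | re.M)


def extract_sentences(body):
    """Parse one page body and return the matched, stripped sentences."""
    return [m.strip() for m in SENTENCE_RE.findall(body.decode("utf-8"))]


def extract_all(pages, max_workers=4):
    """Run extraction for all pages concurrently; results keep input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        return list(executor.map(extract_sentences, pages))


if __name__ == "__main__":
    for sentences in extract_all(PAGES):
        print(sentences)
```

A `ThreadPoolExecutor` keeps the sketch simple. For CPU-heavy parsing, `ProcessPoolExecutor` exposes the same `map` interface and sidesteps the GIL.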

Benefits:

- runs on python2.x, python3.x and pypy (you can even run it on Google App Engine);

- you can use your favorite libraries for networking and content parsing;

- you can plug in your own task queue;

- you can build your own cluster of spiders;

- simpler, more transparent integration with headless browsers (see the example of integration with phantomjs).
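Plugging in a task queue amounts to supplying an object that stores requests and hands them back out. Here is a minimal in-memory sketch of that idea. The class `DedupQueue` and its `put_requests`/`get_requests` methods are assumptions for illustration, not a claim about Pomp's actual queue interface (check the Pomp documentation for that). Plain URL strings stand in for request objects, and a shared backend such as Redis could sit behind the same two methods.

```python
from collections import deque


class DedupQueue:
    """Hypothetical FIFO task queue that ignores URLs it has already seen.

    The method names put_requests/get_requests are assumptions for this
    sketch, not Pomp's documented API.
    """

    def __init__(self):
        self._pending = deque()  # URLs waiting to be crawled
        self._seen = set()       # every URL ever enqueued

    def put_requests(self, urls):
        """Enqueue URLs, skipping any that were enqueued before."""
        for url in urls:
            if url not in self._seen:
                self._seen.add(url)
                self._pending.append(url)

    def get_requests(self, count=1):
        """Dequeue up to `count` URLs in FIFO order (empty list when drained)."""
        batch = []
        while self._pending and len(batch) < count:
            batch.append(self._pending.popleft())
        return batch


if __name__ == "__main__":
    queue = DedupQueue()
    queue.put_requests(["http://example.com/a", "http://example.com/b"])
    queue.put_requests(["http://example.com/a"])  # duplicate, ignored
    print(queue.get_requests(count=10))
```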

In other words, you could build Scrapy out of Pomp by using Twisted for networking and lxml for parsing content, and so on.

When should you use Pomp and when not?

When you need to process N sources that share a common data model and refresh the data periodically, that is the ideal use case for Pomp.

If you need to process one or two sources once and then forget about them, it is faster and clearer to do everything with requests + lxml and not use a dedicated framework at all.

Pomp vs Scrapy / Grab / etc?

A comparison only makes sense in the context of a specific task.

It is hard to say which is better, but for me the question is settled, since I can use Pomp to build a system of any complexity. With other frameworks you often have to fight their “frames” and even hammer in nails with a microscope. For example, using Scrapy to drive headless browsers leaves all the power of Twisted unused.

Architecture

Main blocks:

- queue of requests (tasks);

- "transport" (shown in the diagram as BaseDownloader);

- middlewares for pre- and post-processing of requests;

- pipelines for sequential processing / filtering / storage of extracted data;

- crawler for parsing content and generating follow-up requests;

- an engine that links all parts.
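The way these blocks hand data to each other can be sketched as a toy, synchronous loop. Everything here is hypothetical and written for illustration only:

- `ToyEngine`;
- the hook names `process_request`, `process_response`, `next_requests` and `process`;
- a dict-based fake downloader in place of real network I/O.

It mirrors the roles in the list above, not Pomp's actual engine code.

```python
from collections import deque

# Hypothetical fake "web": URL -> parsed page. A real downloader does network I/O.
FAKE_WEB = {
    "/start": {"items": ["a", "b"], "links": ["/next"]},
    "/next": {"items": ["c"], "links": []},
}


class FakeDownloader:
    """Stand-in for the "transport" block."""

    def get(self, url):
        return FAKE_WEB[url]


class LoggingMiddleware:
    """Pre- and post-processing hooks around each request (hypothetical names)."""

    def __init__(self):
        self.log = []

    def process_request(self, url):
        self.log.append("request " + url)
        return url

    def process_response(self, response):
        self.log.append("response")
        return response


class ToyCrawler:
    """Extracts items and generates follow-up requests."""

    ENTRY_REQUESTS = ["/start"]

    def extract_items(self, response):
        return list(response["items"])

    def next_requests(self, response):
        return list(response["links"])


class UppercasePipeline:
    """One step of sequential item processing."""

    def process(self, item):
        return item.upper()


class ToyEngine:
    """Links the queue, middlewares, downloader, crawler and pipelines."""

    def __init__(self, downloader, middlewares, pipelines):
        self.downloader = downloader
        self.middlewares = middlewares
        self.pipelines = pipelines

    def pump(self, crawler):
        queue = deque(crawler.ENTRY_REQUESTS)  # queue of requests (tasks)
        results = []
        while queue:
            url = queue.popleft()
            for mw in self.middlewares:  # pre-processing, in order
                url = mw.process_request(url)
            response = self.downloader.get(url)
            for mw in reversed(self.middlewares):  # post-processing, reversed
                response = mw.process_response(response)
            for item in crawler.extract_items(response):
                for pipeline in self.pipelines:  # pipelines run sequentially
                    item = pipeline.process(item)
                results.append(item)
            queue.extend(crawler.next_requests(response))  # follow-up requests
        return results


if __name__ == "__main__":
    engine = ToyEngine(FakeDownloader(), [LoggingMiddleware()], [UppercasePipeline()])
    print(engine.pump(ToyCrawler()))
```

Running the response hooks in reverse order gives the usual onion-style nesting of middlewares. This is a design choice of the sketch, not a statement about Pomp's ordering.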

Simplest example

Search http://python.org/news for sentences containing the word python, using the simplest possible regexp.

```python
import re

from pomp.core.base import BaseCrawler
from pomp.contrib.item import Item, Field
from pomp.contrib.urllibtools import UrllibHttpRequest

# Raw string, so that \w and \s reach the regex engine unchanged.
python_sentence_re = re.compile(r'[\w\s]{0,}python[\s\w]{0,}', re.I | re.M)


class MyItem(Item):
    sentence = Field()


class MyCrawler(BaseCrawler):
    """Extract all sentences with `python` word"""
    ENTRY_REQUESTS = UrllibHttpRequest('http://python.org/news')  # entry point

    def extract_items(self, response):
        # Every regex match on the decoded page body becomes one item.
        for i in python_sentence_re.findall(response.body.decode('utf-8')):
            item = MyItem(sentence=i.strip())
            print(item)
            yield item


if __name__ == '__main__':
    from pomp.core.engine import Pomp
    from pomp.contrib.urllibtools import UrllibDownloader

    # The engine uses a urllib-based downloader as its "transport".
    pomp = Pomp(
        downloader=UrllibDownloader(),
    )

    pomp.pump(MyCrawler())
```

An example of creating a "cluster" to parse craigslist.org

The example uses:

- Redis to organize a centralized task queue;

- Apache Kafka for aggregation of extracted data;

- Django on PostgreSQL for storing and displaying data;

- Grafana with Kamon dashboards (kamon-io/docker-grafana-graphite) to display the cluster's metrics;

- docker-compose to run this entire zoo on one machine.
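On the data side, forwarding extracted items to an aggregator such as Kafka mostly means serializing each item and handing it to a producer. In the following sketch, `send(topic, payload)` is a hypothetical callable standing in for a real Kafka producer; no particular client library's API is assumed. `KafkaLikePipeline` and its `start`/`process`/`stop` methods are likewise an illustrative assumption about a pipeline's lifecycle, not the example project's code.

```python
import json


class KafkaLikePipeline:
    """Hypothetical pipeline that serializes items and forwards them.

    `send(topic, payload)` stands in for a real producer call; replace it
    with your Kafka client's own method when wiring this up.
    """

    def __init__(self, send, topic="items"):
        self.send = send
        self.topic = topic
        self.sent = 0

    def start(self):
        self.sent = 0  # reset the counter at the beginning of a run

    def process(self, item):
        # Sorted keys give an identical byte payload for identical items.
        payload = json.dumps(item, sort_keys=True).encode("utf-8")
        self.send(self.topic, payload)
        self.sent += 1
        return item  # pass the item on unchanged to any next pipeline

    def stop(self):
        return self.sent  # number of items forwarded during the run


if __name__ == "__main__":
    outbox = []
    pipeline = KafkaLikePipeline(lambda topic, payload: outbox.append((topic, payload)))
    pipeline.start()
    pipeline.process({"title": "bike", "price": 100})
    print(pipeline.stop(), outbox)
```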

Source code and launch instructions: estin/pomp-craigslist-example.

There is also a video without sound; most of it is spent deploying the environment. In the video you may notice some errors in the collected metrics for the task queue size.

Note: in the example, parsing errors on some pages were deliberately left unfixed, so that exceptions would show up while the cluster runs.

Future plans

For the most part, Pomp has already taken shape and achieved its goals.

Further development will most likely consist of tighter integration with asyncio.

References

- project on Bitbucket: https://bitbucket.org/estin/pomp

- mirror of the project on GitHub: https://github.com/estin/pomp

- documentation: http://pomp.readthedocs.org/en/latest/