Vision-based SLAM: Monocular SLAM

- Tutorial

We continue our series of tutorials on visual SLAM with a lesson on its monocular variants. We have already covered installing and setting up the environment, and we gave a general overview in the article on quadcopter navigation. Today we will look at how different SLAM algorithms that use a single camera work, discuss how they differ from the user's point of view, and give recommendations for their use.

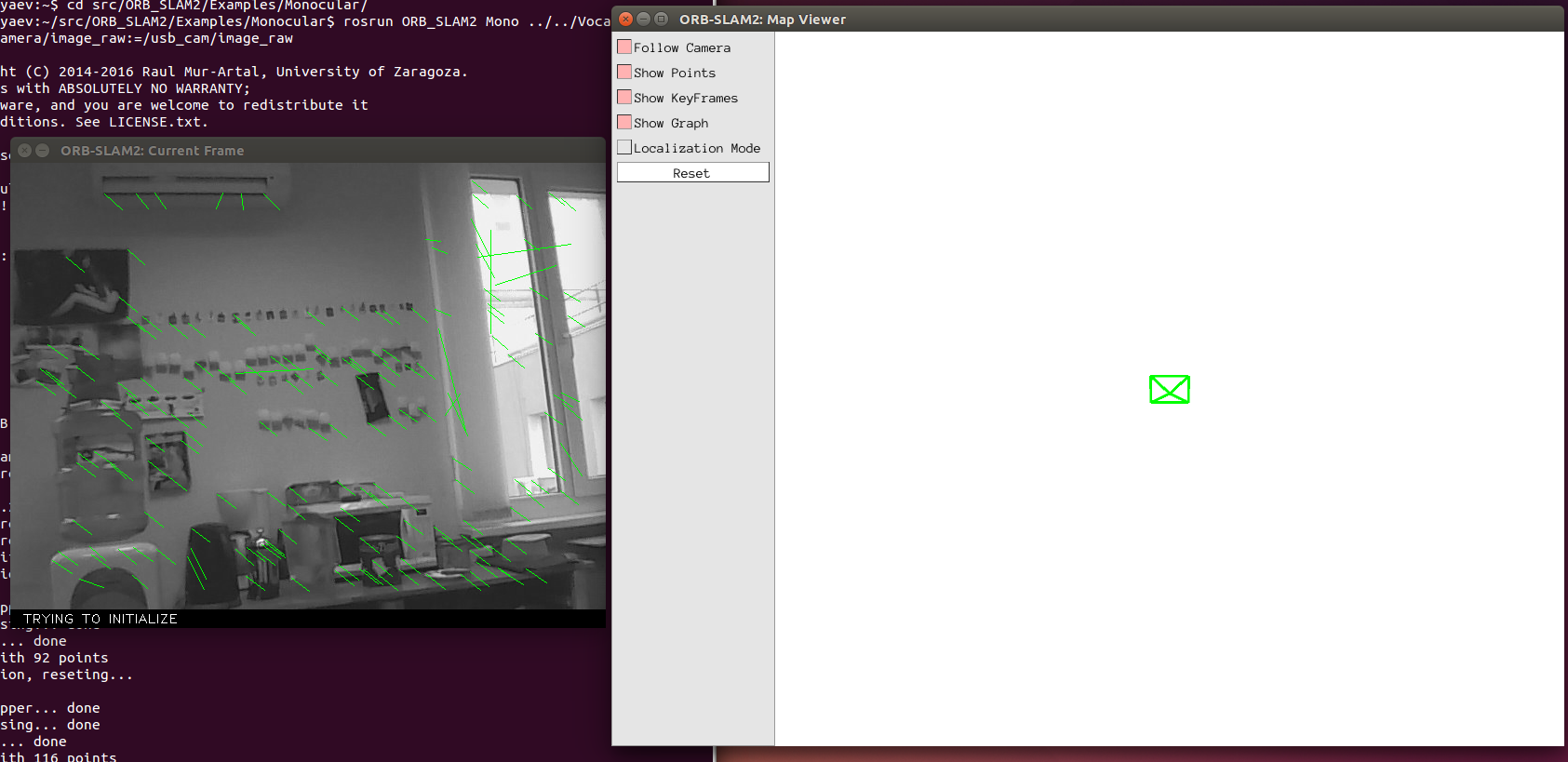

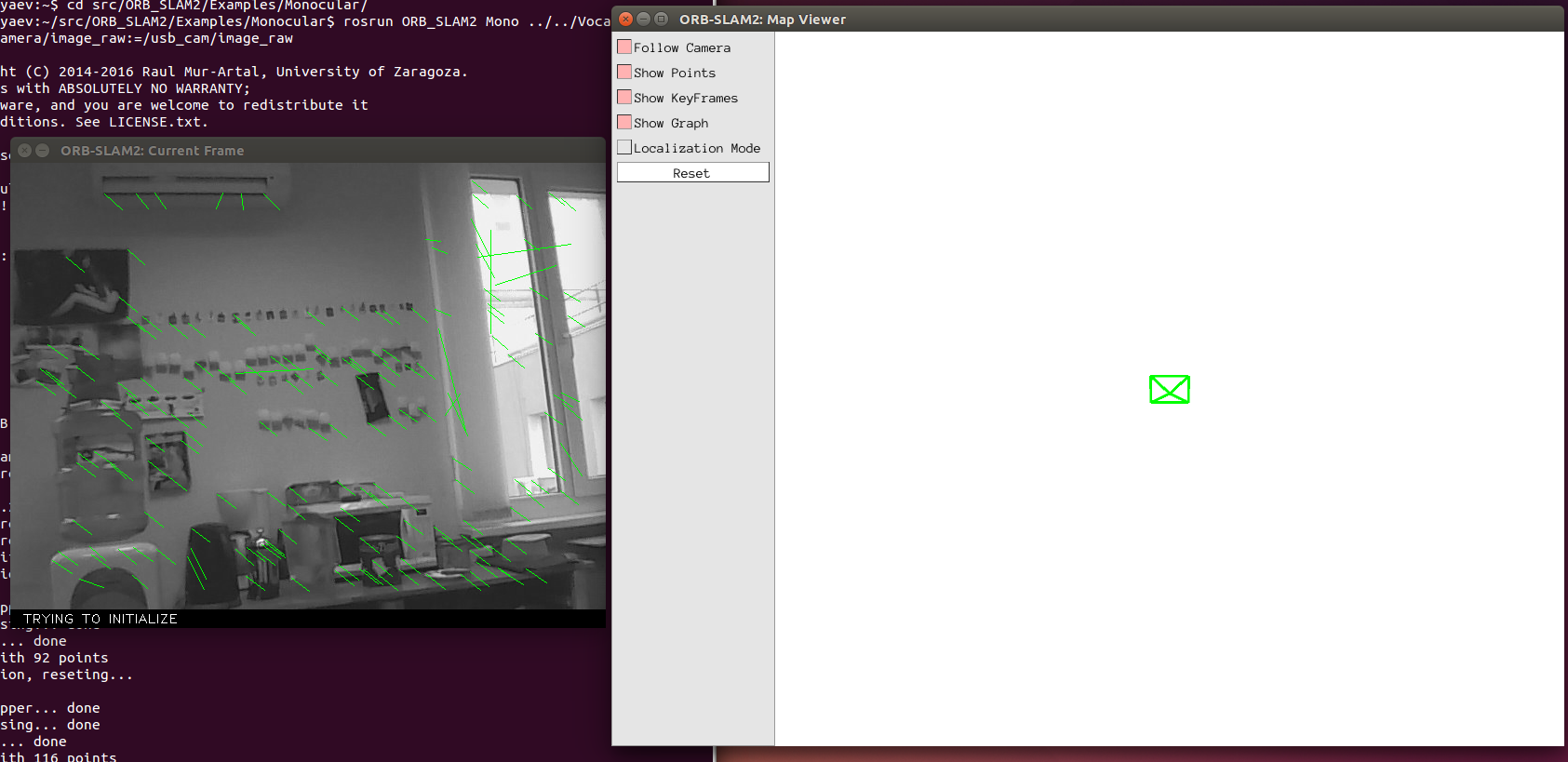

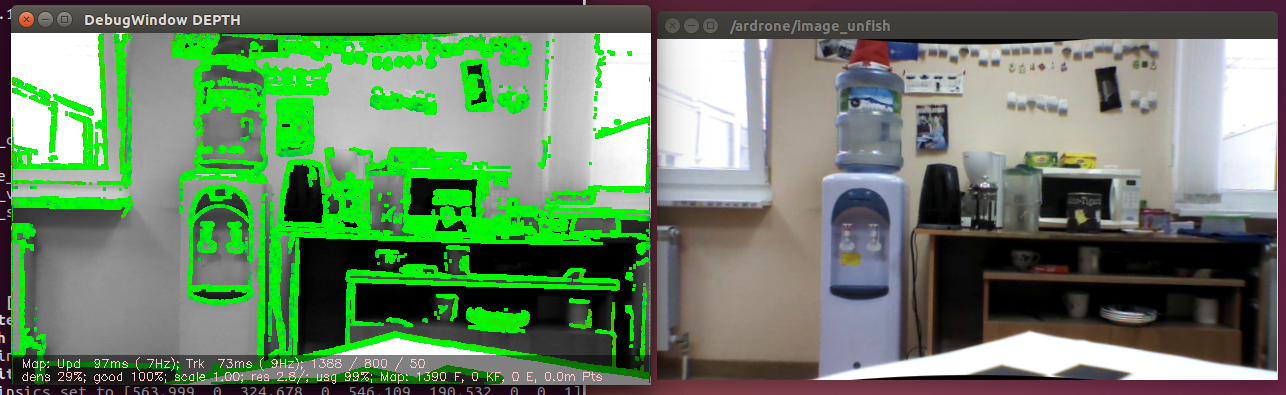

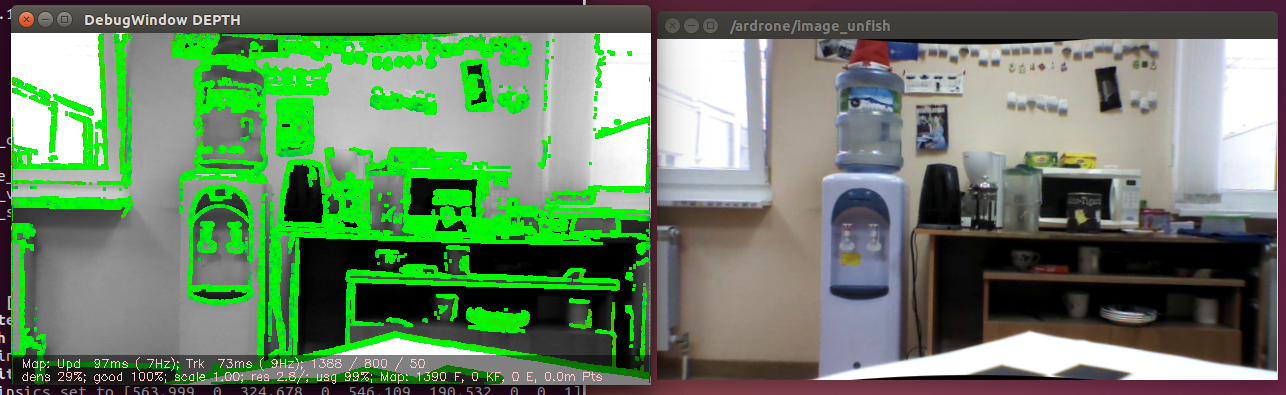

To analyze them in more detail, today we will limit ourselves to two implementations of monocular SLAM: ORB SLAM and LSD SLAM. Among open-source projects, these algorithms are the most advanced in their class. PTAM is also very common, but it is not as good as, for example, ORB SLAM.

Retrieving Calibration Parameters

All monocular SLAM algorithms require accurate camera calibration. We did the calibration in the last lesson; now we will extract the camera parameters. For the camera model we use, we need the camera matrix (fx, fy, cx, cy) and the 5 parameters of the distortion function (k1, k2, p1, p2, k3). Go to the ~/.ros/camera_info directory and open the YAML file with the camera settings. Its contents will look something like this (with a different name instead of ardrone_front):

Calibration file

    image_width: 640
    image_height: 360
    camera_name: ardrone_front
    camera_matrix:
      rows: 3
      cols: 3
      data: [569.883158064802, 0, 331.403348466206, 0, 568.007065238522, 135.879365106014, 0, 0, 1]
    distortion_model: plumb_bob
    distortion_coefficients:
      rows: 1
      cols: 5
      data: [-0.526629354780687, 0.274357114262035, 0.0211426202132638, -0.0063942451330052, 0]
    rectification_matrix:
      rows: 3
      cols: 3
      data: [1, 0, 0, 0, 1, 0, 0, 0, 1]
    projection_matrix:
      rows: 3
      cols: 4
      data: [463.275726318359, 0, 328.456687172518, 0, 0, 535.977355957031, 134.693732992726, 0, 0, 0, 1, 0]

We are interested in the camera_matrix and distortion_coefficients fields. They contain the values we need in the following format (the camera matrix is stored row by row):

    camera_matrix:
      rows: 3
      cols: 3
      data: [fx, 0, cx, 0, fy, cy, 0, 0, 1]
    distortion_coefficients:
      rows: 1
      cols: 5
      data: [k1, k2, p1, p2, k3]

Save these values; you will need them later.

ORB SLAM

Principle of operation

In principle, ORB SLAM as a whole works much like other visual SLAM systems. Features are extracted from the images. The Bundle Adjustment algorithm then places features from different images in 3D space while simultaneously estimating the camera pose at the moment each image was taken. However, ORB SLAM has its own peculiarities. It uses the same feature detector throughout: ORB (Oriented FAST and Rotated BRIEF). This detector is very fast, which allows real-time operation without a GPU. The resulting ORB descriptors are also highly invariant to viewpoint, camera rotation and illumination. As a result, the algorithm detects loop closures accurately and reliably, and relocalization is also very reliable. The algorithm thus belongs to the so-called feature-based class. ORB SLAM builds a sparse map of the area, but a dense map can be built from the keyframe images. You can learn more about the algorithm in the developers' article.
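ORB descriptors are 256-bit binary strings. Two features are therefore compared with the Hamming distance, the number of differing bits, which reduces to an XOR and a bit count. This is a large part of why ORB matching is cheap enough for real time on a CPU.

The sketch below is a minimal brute-force matcher in Python under these assumptions:
- descriptors are stored as plain integers, a representation chosen for illustration;
- `hamming` and `match` are hypothetical helpers;
- the distance threshold and the nearest-neighbour ratio test are a common filtering technique with illustrative values, not ORB SLAM's own settings.

In OpenCV the same job is done by `cv2.BFMatcher` with `cv2.NORM_HAMMING`.

```python
import random


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two binary descriptors differ."""
    return bin(a ^ b).count("1")


def match(query, train, max_dist=50, ratio=0.8):
    """Brute-force matching with a distance threshold and a ratio test.

    query, train: lists of descriptors as Python ints (256 bits for ORB).
    Returns a list of (query_index, train_index, distance) tuples.
    """
    matches = []
    for qi, q in enumerate(query):
        dists = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        if not dists:
            continue
        best, ti = dists[0]
        second = dists[1][0] if len(dists) > 1 else None
        # Accept only close matches that are clearly better than the runner-up.
        if best <= max_dist and (second is None or best < ratio * second):
            matches.append((qi, ti, best))
    return matches


if __name__ == "__main__":
    random.seed(1)
    # Five random 256-bit "descriptors" stand in for the features of one image.
    train = [random.getrandbits(256) for _ in range(5)]
    # The same feature observed again, with two bits flipped by noise.
    query = [train[3] ^ 0b101]
    print(match(query, train))
```

Unrelated random descriptors differ in about half of their bits. A re-observed feature differs in only a few bits, so even a simple threshold separates them well.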

Launch

We did not cover installing ORB SLAM in the previous lesson, so let's go over it here. In addition to the environment we have already installed, we need Pangolin (do not clone the repository into the ROS workspace):

    git clone https://github.com/stevenlovegrove/Pangolin.git
    cd Pangolin
    mkdir build
    cd build
    cmake -DCPP11_NO_BOOST=1 ..
    make -j

Next, install ORB SLAM itself (again, do not clone the sources into the workspace):

    git clone https://github.com/raulmur/ORB_SLAM2.git ORB_SLAM2
    cd ORB_SLAM2
    chmod +x build.sh
    ./build.sh

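To use the package in ROS, add the path to its ROS example to ROS_PACKAGE_PATH (replace PATH with the directory where you installed ORB SLAM). Then build the ROS nodes with the build_ros.sh script from the ORB_SLAM2 repository. The single quotes make ${ROS_PACKAGE_PATH} expand when the shell starts rather than when the line is written:

    echo 'export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:PATH/ORB_SLAM2/Examples/ROS' >> ~/.bashrc
    source ~/.bashrc
    chmod +x build_ros.sh
    ./build_ros.sh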

Now enter the camera calibration data and the ORB SLAM settings into the settings file. Go to the Examples/Monocular directory and copy the file TUM1.yaml:

    cd Examples/Monocular
    cp TUM1.yaml our.yaml

Open the copied file our.yaml and replace the camera calibration parameters with the ones obtained above, and also set the FPS:

Configuration file

    %YAML:1.0

    #--------------------------------------------------------------------------------------------
    # Camera Parameters. Adjust them!
    #--------------------------------------------------------------------------------------------

    # Camera calibration and distortion parameters (OpenCV)
    Camera.fx: 563.719912
    Camera.fy: 569.033809
    Camera.cx: 331.711374
    Camera.cy: 175.619211

    Camera.k1: -0.523746
    Camera.k2: 0.306187
    Camera.p1: 0.011280
    Camera.p2: 0.003937
    Camera.k3: 0

    # Camera frames per second
    Camera.fps: 30.0

    # Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)
    Camera.RGB: 1

    #--------------------------------------------------------------------------------------------
    # ORB Parameters
    #--------------------------------------------------------------------------------------------

    # ORB Extractor: Number of features per image
    ORBextractor.nFeatures: 1000

    # ORB Extractor: Scale factor between levels in the scale pyramid
    ORBextractor.scaleFactor: 1.2

    # ORB Extractor: Number of levels in the scale pyramid
    ORBextractor.nLevels: 8

    # ORB Extractor: Fast threshold
    # Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.
    # Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST
    # You can lower these values if your images have low contrast
    ORBextractor.iniThFAST: 20
    ORBextractor.minThFAST: 7

    #--------------------------------------------------------------------------------------------
    # Viewer Parameters
    #--------------------------------------------------------------------------------------------
    Viewer.KeyFrameSize: 0.05
    Viewer.KeyFrameLineWidth: 1
    Viewer.GraphLineWidth: 0.9
    Viewer.PointSize: 2
    Viewer.CameraSize: 0.08
    Viewer.CameraLineWidth: 3
    Viewer.ViewpointX: 0
    Viewer.ViewpointY: -0.7
    Viewer.ViewpointZ: -1.8
    Viewer.ViewpointF: 500
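Only the Camera.* entries depend on your camera, so they can be generated from the saved calibration values instead of being retyped by hand. The sketch below makes a few assumptions: the values are already in a Python dict, and the function name `orb_slam_camera_block` is hypothetical. Its output uses the same key names and comments as the file above and can be pasted over the camera section of our.yaml.

```python
def orb_slam_camera_block(p, fps=30.0, rgb=1):
    """Render the 'Camera.*' lines of an ORB SLAM2 settings file.

    p: dict with keys fx, fy, cx, cy, k1, k2, p1, p2, k3 (hypothetical layout).
    """
    keys = ["fx", "fy", "cx", "cy", "k1", "k2", "p1", "p2", "k3"]
    lines = ["# Camera calibration and distortion parameters (OpenCV)"]
    lines += [f"Camera.{k}: {p[k]:.6f}" for k in keys]
    lines.append("# Camera frames per second")
    lines.append(f"Camera.fps: {fps:.1f}")
    lines.append("# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)")
    lines.append(f"Camera.RGB: {rgb}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Values from the calibration file shown earlier.
    calib = {"fx": 569.883158064802, "fy": 568.007065238522,
             "cx": 331.403348466206, "cy": 135.879365106014,
             "k1": -0.526629354780687, "k2": 0.274357114262035,
             "p1": 0.0211426202132638, "p2": -0.0063942451330052, "k3": 0.0}
    print(orb_slam_camera_block(calib, fps=30.0))
```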

Save the file. Now we can run ORB SLAM (run each of the three commands in a separate terminal tab):

    roscore
    rosrun usb_cam usb_cam_node _video_device:=/dev/video0    # your device may differ
    rosrun ORB_SLAM2 Mono ../../Vocabulary/ORBvoc.txt our.yaml /camera/image_raw:=/usb_cam/image_raw

If everything went well, then you should see two windows:

Move the camera a bit in the image plane to initialize SLAM:

All this is great, but ORB SLAM was designed as a ROS-independent package. The binary we launched is really just an example of using the algorithm in ROS. For unclear reasons, the developers did not make this example publish the camera trajectory. It is only saved to the text file KeyFrameTrajectory.txt when the program exits, even though publishing it would take just a few lines of code.
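KeyFrameTrajectory.txt is written in the TUM trajectory format: one keyframe per line, `timestamp tx ty tz qx qy qz qw`. The sketch below loads the file and, as an example, computes the length of the keyframe path; the helper names are hypothetical. For monocular SLAM this length is in arbitrary map units, not meters. Publishing the same poses in ROS would start from the same parsing step, which is all this sketch shows.

```python
import math
import os
from typing import List, NamedTuple


class Pose(NamedTuple):
    t: float   # timestamp, seconds
    x: float   # position
    y: float
    z: float
    qx: float  # orientation quaternion
    qy: float
    qz: float
    qw: float


def load_tum_trajectory(text: str) -> List[Pose]:
    """Parse 'timestamp tx ty tz qx qy qz qw' lines; blank and '#' lines are skipped."""
    poses = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        poses.append(Pose(*map(float, line.split()[:8])))
    return poses


def path_length(poses: List[Pose]) -> float:
    """Sum of straight-line distances between consecutive keyframe positions."""
    return sum(
        math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))
        for a, b in zip(poses, poses[1:])
    )


if __name__ == "__main__":
    if os.path.exists("KeyFrameTrajectory.txt"):
        with open("KeyFrameTrajectory.txt") as f:
            poses = load_tum_trajectory(f.read())
        print(len(poses), "keyframes, path length", path_length(poses), "map units")
```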

Settings

The algorithm exposes very few tuning parameters, and they are well described in the comments of the configuration file given above.

When to use ORB SLAM?

If you need a fast algorithm that can run, for example, onboard, and the environment does not contain large, flat, uniformly colored surfaces, ORB SLAM is a perfect fit.

LSD SLAM

Principle of operation

We already briefly covered the principle of LSD SLAM in the article about AR.Drone navigation experiments. A detailed analysis of the algorithm is beyond the scope of this lesson; you can read about it in the developers' article.

Launch

After installing LSD SLAM (following the previous lesson), prepare the following before launching it:

- Camera calibration file camera.cfg. Create a camera.cfg file in the ~/ros_workspace/rosbuild/package/lsd_slam/lsd_slam_core/calib directory. Copy the calibration parameters into its first line using the pattern fx fy cx cy k1 k2 p1 p2 (note that the fifth distortion parameter, k3, is not used). In the next line, set the width and height of the original image, and leave the last two lines unchanged:

      fx fy cx cy k1 k2 p1 p2
      640 360
      crop
      640 480

- Startup file lsd_slam.launch
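The camera.cfg layout described above can be produced from the same saved calibration values. The sketch below follows the layout from the text: it drops k3, writes the input image size, then `crop`, then the output size 640 480. The function name `lsd_camera_cfg` is hypothetical. The returned text would be saved as camera.cfg in the calib directory mentioned above.

```python
def lsd_camera_cfg(p, width, height, out_w=640, out_h=480):
    """Return the text of an LSD SLAM camera.cfg for the OpenCV camera model.

    p: dict with fx, fy, cx, cy, k1, k2, p1, p2 (k3 is not used by this format).
    width, height: size of the original camera image.
    """
    names = ("fx", "fy", "cx", "cy", "k1", "k2", "p1", "p2")
    first = " ".join(f"{p[k]:.6f}" for k in names)
    return "\n".join([first, f"{width} {height}", "crop", f"{out_w} {out_h}"]) + "\n"


if __name__ == "__main__":
    # Values from the calibration file shown earlier; the image is 640x360.
    calib = {"fx": 569.883158064802, "fy": 568.007065238522,
             "cx": 331.403348466206, "cy": 135.879365106014,
             "k1": -0.526629354780687, "k2": 0.274357114262035,
             "p1": 0.0211426202132638, "p2": -0.0063942451330052}
    print(lsd_camera_cfg(calib, 640, 360), end="")
```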

Launch LSD SLAM (from the folder with the startup file):

    roslaunch lsd_slam.launch

If everything worked out, you should see two windows:

Also start the point cloud viewer that ships with LSD SLAM (in another terminal window):

    rosrun lsd_slam_viewer viewer

The viewer should look something like this:

Settings

The algorithm has several tuning parameters; the most important are:

- minUseGrad: the minimum intensity gradient required to create a new 3D point. The lower the value, the better the algorithm handles uniformly colored (weakly textured) objects and the denser the map. However, the lower this value, the more strongly camera rectification errors affect SLAM quality. Lower values also significantly reduce the algorithm's performance.

- cameraPixelNoise: the noise of pixel intensity values. Set it higher than the actual sensor noise to account for discretization and interpolation errors.

- useAffineLightningEstimation: you can try enabling it to fix problems with auto-exposure.

- useFabMap: enables openFabMap for loop detection.
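To get a feel for minUseGrad, the sketch below counts how many pixels of a grayscale image pass a gradient-magnitude threshold, using central differences. It is a simplified illustration of the idea that only pixels with enough gradient become candidates for new 3D points. It is not LSD SLAM's actual pixel-selection code, and the function name `candidate_pixels` is hypothetical. Lowering the threshold admits pixels from weakly textured regions, which matches the behavior described above: a denser map and more computation.

```python
import math
from typing import List


def candidate_pixels(img: List[List[float]], min_grad: float) -> int:
    """Count interior pixels whose intensity-gradient magnitude is >= min_grad.

    Gradients use central differences:
    gx = (I[y][x+1] - I[y][x-1]) / 2, gy = (I[y+1][x] - I[y-1][x]) / 2.
    """
    h, w = len(img), len(img[0])
    count = 0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y][x + 1] - img[y][x - 1]) / 2.0
            gy = (img[y + 1][x] - img[y - 1][x]) / 2.0
            if math.hypot(gx, gy) >= min_grad:
                count += 1
    return count


if __name__ == "__main__":
    # A soft intensity ramp (weak texture) followed by a sharp edge.
    row = [0, 2, 4, 6, 106, 108]
    img = [row[:] for _ in range(5)]
    for threshold in (10, 1):
        print(threshold, candidate_pixels(img, threshold))
```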

Recommendations

- Run the algorithm on a powerful CPU. Unlike ORB SLAM, LSD SLAM has significant hardware requirements. In addition, the algorithm must run in real time; otherwise acceptable SLAM quality is out of the question.

- Calibrate the camera as accurately as possible. Direct methods, which include LSD SLAM, are very sensitive to calibration quality.

- Use a global-shutter camera if possible. A rolling-shutter camera can be used (in fact, we only used this type), but the results will be worse.

When to use LSD SLAM?

LSD SLAM is a good fit if your platform has enough computing power and one of the following holds:
- you need a dense map of the environment (for example, to build an obstacle map);
- the environment lacks features, that is, it contains large, poorly textured objects.

Feature-based vs. Direct

Comparing feature-based monocular algorithms with so-called direct algorithms, which use the whole image, Jakob Engel, the creator of LSD SLAM, showed the following table in one of his presentations (our translation):

| Feature-based | Direct |
|---|---|
| Use only features (e.g. corners) | Use the full image |
| Faster | Slower (but parallelize well) |
| Easy to remove noise (outliers) | Not so easy to remove noise |
| Robust to rolling shutter | Sensitive to rolling shutter |
| Use a small portion of the image information | Use more complete information |
| No complicated initialization required | Require good initialization |
| Over 20 years of intensive development | About 4 years of research |

There is little to add.
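The first rows of the table come down to which error each class of methods minimizes. The formulas below are schematic, in standard notation rather than taken from the presentation. A feature-based method such as ORB SLAM minimizes the geometric reprojection error of matched keypoints in Bundle Adjustment. A direct method such as LSD SLAM aligns images by minimizing the photometric error over the pixels it uses. The notation is:
- $\pi$ is the pinhole projection with the calibrated $f_x, f_y, c_x, c_y$ (distortion already removed);
- $T_i$ is the pose of frame $i$, $X_j$ a 3D point and $u_{ij}$ its detected keypoint;
- $I$ are image intensities and $d_p$ is the (inverse) depth of pixel $p$;
- $\pi^{-1}$ back-projects a pixel using that depth.

Robust weighting is omitted in both.

$$
E_{\text{geom}} = \sum_{i,j} \left\lVert u_{ij} - \pi\!\left(T_i X_j\right) \right\rVert^2,
\qquad
\pi(X, Y, Z) = \begin{pmatrix} f_x X / Z + c_x \\ f_y Y / Z + c_y \end{pmatrix}
$$

$$
E_{\text{photo}} = \sum_{p \in \Omega} \left( I_{\text{ref}}(p) - I\!\left(\pi\!\left(T\, \pi^{-1}(p, d_p)\right)\right) \right)^2
$$

The photometric form compares raw intensities, which explains several other rows of the table. It needs a good initial guess, it suffers from rolling shutter and exposure changes, and it depends directly on calibration quality.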

General recommendations for use

All monocular algorithms share a set of similar requirements and limitations:

- Accurate camera calibration is required (this is less critical for feature-based algorithms).

- The scale cannot be determined without help from external sensors or the user.

- Camera requirements: a high frame rate and a wide field of view. These parameters are related both to each other and to the maximum speed at which the camera can move.
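Two of these limitations can be checked with a few lines of arithmetic, assuming an ideal pinhole camera without distortion and with identity rotation. The helper names in the sketch are hypothetical. It makes two points:
- Scaling the scene and the camera translation by the same factor produces exactly the same pixel, so a single camera cannot recover scale.
- It estimates how far a point at depth Z moves in the image between frames when the camera translates sideways at speed v. The small-motion approximation is Δu ≈ fx·v / (Z·fps), with fx = (W/2) / tan(HFOV/2).

A higher frame rate and a wider field of view (a smaller fx at the same resolution) both reduce this per-frame displacement. That reduction is what lets the camera move faster without losing tracking.

```python
import math


def project(point, cam_pos, fx, fy, cx, cy):
    """Pinhole projection of a world point for a camera at cam_pos (identity rotation)."""
    X, Y, Z = (p - c for p, c in zip(point, cam_pos))
    return (fx * X / Z + cx, fy * Y / Z + cy)


def focal_from_fov(width_px, hfov_deg):
    """Focal length in pixels for a given image width and horizontal field of view."""
    return (width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def pixel_shift_per_frame(fx, speed, depth, fps):
    """Approximate image motion (pixels per frame) for sideways translation at `speed`."""
    return fx * speed / (depth * fps)


if __name__ == "__main__":
    K = dict(fx=570.0, fy=568.0, cx=331.0, cy=136.0)
    point, cam = (0.5, -0.2, 3.0), (0.1, 0.0, 0.0)
    s = 4.0  # scale factor applied to the whole scene and trajectory
    a = project(point, cam, **K)
    b = project(tuple(s * v for v in point), tuple(s * v for v in cam), **K)
    print("same pixel after scaling:", a, b)

    for hfov in (60.0, 90.0):
        fx = focal_from_fov(640, hfov)
        for fps in (30.0, 60.0):
            shift = pixel_shift_per_frame(fx, speed=1.0, depth=2.0, fps=fps)
            print(f"HFOV {hfov:.0f} deg, {fps:.0f} FPS: {shift:.1f} px/frame at 1 m/s, Z = 2 m")
```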

Based on these characteristics and our experience with such algorithms, we conclude that monocular SLAM should be used when:

- you are strictly limited to one camera;

- you can estimate the scale of the localization and the map from external sources, or the scale does not matter for your task;

- the camera's specifications meet the requirements above and allow precise calibration and image rectification.

This concludes today's lesson. Next time we will look at SLAM algorithms that use stereo cameras and depth cameras.

Sources

- Previous lesson: installing and setting up the environment

- LSD SLAM developers' site

- ORB SLAM developers' site