How to make the test environment as close as possible to production

One way to improve product quality is to keep the environment on the production servers and in the test environment consistent. We tried to minimize the number of errors caused by configuration differences by moving from our old test environment, where service settings differed greatly from production, to a new one where the configuration almost matches production. We did this with docker and ansible and gained a lot, though not without running into various problems. In this article I will describe the transition and some of its interesting details.

At HeadHunter, about 20 engineers are involved in testing: manual testers, test automation engineers, and a small QA team that develops the testing process and infrastructure. The infrastructure is fairly extensive: about 100 test benches with our own deployment tool, and more than 5000 autotests that any employee of the technical department can run.

On the other side is production, where there are even more servers and the cost of an error is much higher.

What do our testers want? To release the product without bugs or downtime in production. And they achieve this with the current test environment. Still, some problems slip through that cannot be caught in this environment, such as a configuration change.

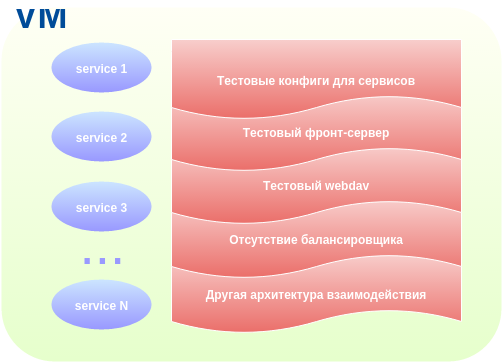

The old test environment had a number of features:

- Its own application configuration files, which differed from production and required constant maintenance.

- No testing of application deployment.

- Some applications that exist and run in production were missing.

- Web server and webdav configs written entirely for the test environment.

All of this led to various bugs, the most unpleasant of which was almost an hour of site downtime caused by an incorrect nginx config.

What we wanted from the new environment:

- a configuration as close as possible to production (the same nginx locations, balancers, etc.);

- the ability to do everything we could do in the old environment and a little more: to test the deployment of applications and their interaction;

- a test environment configuration that stays up to date with production;

- easy maintenance of changes.

The beginning of the journey

The idea of using the production configuration in the test environment appeared long ago (about 3 years ago), but there were other, more important tasks, and we did not get around to implementing it.

We took it up almost by chance, when we decided to try the new technology trend, docker, around the time version 0.8 was released.

To understand what is special about our test environment, you need a rough idea of what hh.ru production consists of.

hh.ru production is a fleet of servers running separate parts of the site: applications, each responsible for its own function. The applications communicate with each other over HTTP.

The idea was to recreate the same structure by replacing the server fleet with lxc containers, and to use a layer on top of lxc called docker to make all of this convenient to manage.

Not quite the docker way

We did not follow the true docker way, where each container runs a single service. The goal was to make test benches resemble the infrastructure used in production and by the operations team.

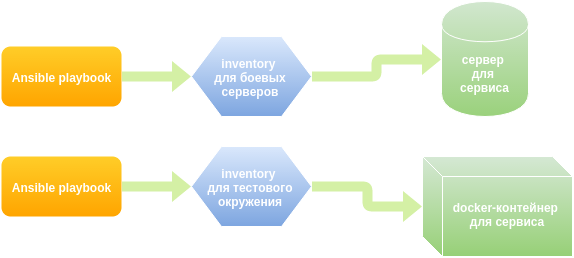

The operations team uses ansible to deploy applications and configure servers. The rollout procedure and configuration templates for each service are described in a yaml file, called a playbook in ansible terminology. We decided to use the same playbooks in the test environment. The flexibility comes from being able to override values and keep a separate, test-specific set of variable values.

As a result, we built several docker images: a base image (ubuntu with init and ssh), and images with a test database, with cassandra, with a deb repository and a maven repository. They are available in our internal docker registry. From these images we create as many containers as we need. Ansible then applies the required playbooks to the container hosts listed in the inventory (the list of server groups that ansible works with).

All running containers sit on a single network (internal to each host machine), and each container has a fixed IP address and DNS name, which again is not the docker way. To recreate the production infrastructure fully, some containers even have the IP addresses of production servers.

You can reach the containers over ssh directly from the host machine. Inside a container, the file layout is the same as on production servers, as are the versions of applications and dependencies.

To make it easier to search for errors, the log directories of every container are gathered in one place on the host machine using symbolic links. In production, each request has a unique id, and the same scheme now works in the test environment, so given a request id you can find every mention of it in the logs of all services.
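A search like this could be sketched roughly as follows. This is only an illustration, not our actual tooling: the function name `find_request`, the layout of one subdirectory (or symlink) per container, and the `*.log` file pattern are all assumptions.

```python
from pathlib import Path


def find_request(logs_root: Path, request_id: str) -> dict[str, list[str]]:
    """Return {service_name: [matching log lines]} for one request id."""
    hits: dict[str, list[str]] = {}
    # Assumption: each child of logs_root is a directory or a symlink to one,
    # named after a container and holding that container's *.log files.
    # Path.is_dir() follows symlinks, so linked directories are included.
    for service_dir in sorted(p for p in logs_root.iterdir() if p.is_dir()):
        for log_file in sorted(service_dir.glob("*.log")):
            with log_file.open(errors="replace") as fh:
                for line in fh:
                    if request_id in line:
                        hits.setdefault(service_dir.name, []).append(line.rstrip("\n"))
    return hits
```

Calling `find_request(Path("/path/to/aggregated/logs"), "some-request-id")` would return the matching lines grouped by container name, which makes it easy to see how one request travelled through the services.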

We create and configure the host machine for the containers from our test bench management system using vmbuilder and ansible. The new machines needed more RAM because of the additional services that the old test environment did not have.

To make the work easier, we wrote many scripts (python, shell): for launching playbooks (so that testers need not remember the various tags and ansible launch parameters), for working with containers, for creating the whole environment from scratch and updating it, for updating and restoring test databases, and so on.

Building services from source for testing is also automated, and the new environment uses exactly the same build program as production releases do. The build runs in a special container with all the necessary build dependencies installed.

What problems we ran into and how we solved them

Container IP addresses and their fixed list.

By default, docker hands out IP addresses from the subnet in arbitrary order, so a container can end up with any address. To be able to register containers in the DNS zone, we decided to give each container its own unique address, specified in a config file.
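As a rough illustration of the idea, a fixed name-to-address mapping can be validated and turned into DNS-style records. The function `build_hosts_entries`, the mapping format, and the hosts-file output are assumptions made for this sketch; our real config file and DNS zone format are not shown here.

```python
import ipaddress


def build_hosts_entries(containers: dict[str, str], domain: str) -> list[str]:
    """Check that every container has a valid, unique IP and render records."""
    seen = {}
    lines = []
    for name, raw_ip in containers.items():
        ip = ipaddress.ip_address(raw_ip)  # raises ValueError on a malformed address
        if ip in seen:
            raise ValueError(f"{name}: {ip} is already assigned to {seen[ip]}")
        seen[ip] = name
        # hosts-file style line: address, fully qualified name, short name
        lines.append(f"{ip}\t{name}.{domain}\t{name}")
    return lines
```

Rejecting duplicates at generation time catches a copy-paste mistake in the config before two containers fight over one address.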

Running ansible in parallel.

The operations team never has to create production from scratch, so they never run all the ansible playbooks at once. We tried: running the playbooks sequentially to deploy all services took about 3 hours. That was far too long for deploying a test environment, so we decided to run the playbooks in parallel. Here another ambush awaited us: if everything runs in parallel, some services fail to start because they try to connect to other services that are not up yet.

After drawing up a rough map of these dependencies, we split the services into groups and run the playbooks in parallel within each group.
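One way to sketch this grouping is with a topological sort. The sketch below assumes that groups are processed one after another and that each group only depends on earlier ones; the service names, the `deploy` and `run_ansible_playbook` helpers, and the playbook file naming are hypothetical, and in our case the dependency map was drawn up by hand rather than computed.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter
from typing import Callable


def dependency_groups(deps: dict[str, set[str]]) -> list[list[str]]:
    """Split services into groups; each group depends only on earlier groups."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    groups = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        groups.append(ready)
        sorter.done(*ready)
    return groups


def deploy(deps: dict[str, set[str]], run_playbook: Callable[[str], None],
           workers: int = 8) -> None:
    """Run groups one after another, playbooks inside a group in parallel."""
    for group in dependency_groups(deps):
        with ThreadPoolExecutor(max_workers=workers) as pool:
            # list() waits for the whole group and re-raises the first failure
            list(pool.map(run_playbook, group))


def run_ansible_playbook(service: str) -> None:
    # Hypothetical layout: one playbook file per service next to the inventory.
    subprocess.run(["ansible-playbook", "-i", "inventory", f"{service}.yml"], check=True)
```

With a map such as `{"frontend": {"backend"}, "backend": {"postgres", "cassandra"}}`, the databases would be deployed first and in parallel, then the backend, then the frontend.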

Tasks that the operations team does not face when releasing applications.

The operations team deploys applications already built into deb packages, whereas a tester, like a developer, has to build applications from source, and a build requires other dependencies than an installation does. To solve the build problem, we added another container with all the necessary dependencies. A separate script builds a package inside this container from the sources of whichever application is needed at the moment. The package is then uploaded to the local repository and rolled out to the containers that need it.

Increased hardware requirements.

Because there are more services (balancers) and different application settings than in the old test environment, the new benches needed more RAM.

Problems with timeouts.

In production, each service runs on its own, fairly powerful server. In the test environment, the services in containers share resources. The timeouts set for production proved unworkable in the test environment, so we moved them into ansible variables and changed them for the test environment.

Problems with retries and container aliases.

Some errors that occur in services are worked around in production by repeating the request against another instance of the service (retries). The old environment did not use retries at all. In the new environment, although we run a single container per service, we created DNS aliases for some services so that retries work. Retries make the system a little more stable.
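The retry behaviour can be pictured with a small client-side sketch. It is not how our services implement retries; the function `fetch_with_retries`, the host names, and the injected `fetch` callable are assumptions for illustration only.

```python
from typing import Callable, Sequence


def fetch_with_retries(hosts: Sequence[str], path: str,
                       fetch: Callable[[str], str]) -> str:
    """Try the same request against each host name in turn."""
    last_error = None
    for host in hosts:
        try:
            return fetch(f"http://{host}{path}")
        except OSError as exc:  # urllib.error.URLError is a subclass of OSError
            last_error = exc
    raise RuntimeError(f"all {len(hosts)} upstreams failed") from last_error
```

In production the host names would resolve to different servers. On a test bench, hypothetical aliases such as `service-1` and `service-2` can point at the same container, so a transient failure on the first attempt still gets a second chance.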

pgbouncer and the number of connections.

In the old test environment, all services connected to postgres directly, whereas in production every postgres sits behind pgbouncer, a database connection pooler. Without a pooler, each database connection is a separate postgres process that consumes additional memory. After we installed pgbouncer, the number of postgres processes on a working test bench dropped from 300 to 35.

Service monitoring.

For convenience and for launching autotests, we built lightweight monitoring that shows the status of services inside containers with a delay of less than a minute. It is based on haproxy, which runs HTTP checks against the services listed in its config. The haproxy configuration file is generated automatically from the ansible inventory and from the address and port data in the ansible playbooks. The monitoring has a human-friendly view and a JSON view for autotests, which check the state of the bench before they run.
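Generating such a config might look roughly like this. The input format, the function name `render_haproxy_checks`, the `/status` check path, and the 30 second check interval are assumptions for the sketch; only the haproxy directives themselves (`option httpchk`, `server ... check inter`) are standard.

```python
def render_haproxy_checks(services: dict[str, tuple[str, int, str]]) -> str:
    """Render one haproxy backend per service.

    services maps a service name to (address, port, health check path),
    data that would come from the inventory and playbook variables.
    """
    sections = []
    for name, (address, port, check_path) in sorted(services.items()):
        sections.append(
            f"backend {name}\n"
            f"    mode http\n"
            f"    option httpchk GET {check_path}\n"
            f"    server {name} {address}:{port} check inter 30s\n"
        )
    return "\n".join(sections)
```

Because the file is rendered from the same data ansible uses, a newly added service appears in monitoring without anyone editing the haproxy config by hand.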

There were many other problems: with containers, with ansible, with our applications, with their settings, and so on. We have solved most of them and are still working on the rest.

How to use all this for testing?

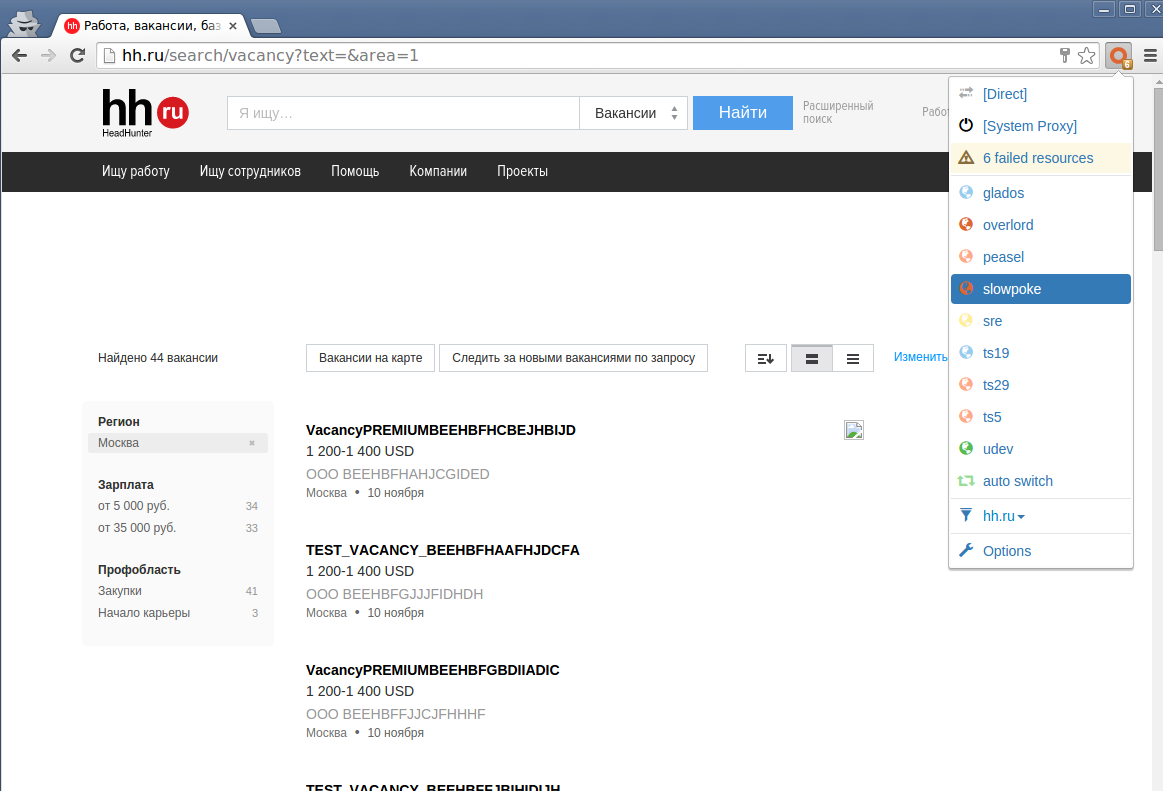

Each test bench has its own DNS name on the network. Previously, to access a test bench, a tester entered hh.ru.standname and got a copy of the hh.ru site. This was simple, but it made many things impossible to test and complicated the testing of mobile applications.

Now that we use the production nginx configs, which refuse to accept URIs ending in standname, the tester sets a proxy in the browser (squid runs on each host machine) and opens hh.ru or any other site from our pool (career.ru, jobs.tut.by, etc.), as well as any third-level domain, for example nn.hh.ru.

Using a proxy lets you test mobile applications without trouble: just enable the proxy in the Wi-Fi settings.

If a tester or developer wants to reach a service and its port directly from outside, for example for debugging, port forwarding is set up with haproxy.

Benefits

We are still in the process of moving to the new environment and implementing the various wishes that came up along the way. Manual testing has already been moved to the new benches, as has the main release bench. In the near future we plan to move the autotest infrastructure to the new environment.

We are already seeing benefits in the following cases:

- to release a new service, a developer can write and test the ansible playbook and only then hand it over to the operations team. This makes it possible to test the configs and the rollout process in conditions as close to production as possible;

- you can easily bring up several instances of the same service in different containers and check version compatibility, how the balancer behaves when one of them is switched off, or how the site works if two out of three instances of a service suddenly stop responding;

- you can test complex changes to nginx config files or a change to the webdav setup;

- for debugging, you can easily connect a test server to our monitoring and investigate problems conveniently;

- while working on the new environment, we discovered and fixed many bugs in the production configuration and in the ansible playbooks.

As with any innovation, some people are happy and some meet the new with hostility. The main stumbling block is the proxy: some find it inconvenient, which is understandable. We will try to solve this problem together.

Our further plans include fully automating the creation of benches for each release and removing people from the process of building and automatically testing releases.