Neural networks for image processing. Tells Alexander Savsunenko from Skylum Software

For six years, Alexander Savsunenko has been developing intelligent systems, two of which as a senior research fellow at the University of New York at Stony Brook. He developed intelligent systems for DNA analysis, imaging, marketing.

Now Alexander is in charge of AI Lab at Skylum Software, where he is engaged in graphic editors based on neural networks. We asked which of the created services he is particularly proud of and why use neural networks in A / B testing.

Alexander, tell us about your scientific work at the University of New York at Stony Brook. What projects did you do there and were they related to artificial intelligence or machine learning?

No, they were not directly related to AI and machine learning. I was researching new materials based on graphene. We developed a new material for 3D printing, which would conduct electricity. Then, using a two-nozzle printer, it would be possible to print both the case and the electronic board layout at one time. The material we eventually created, and now it is sold.

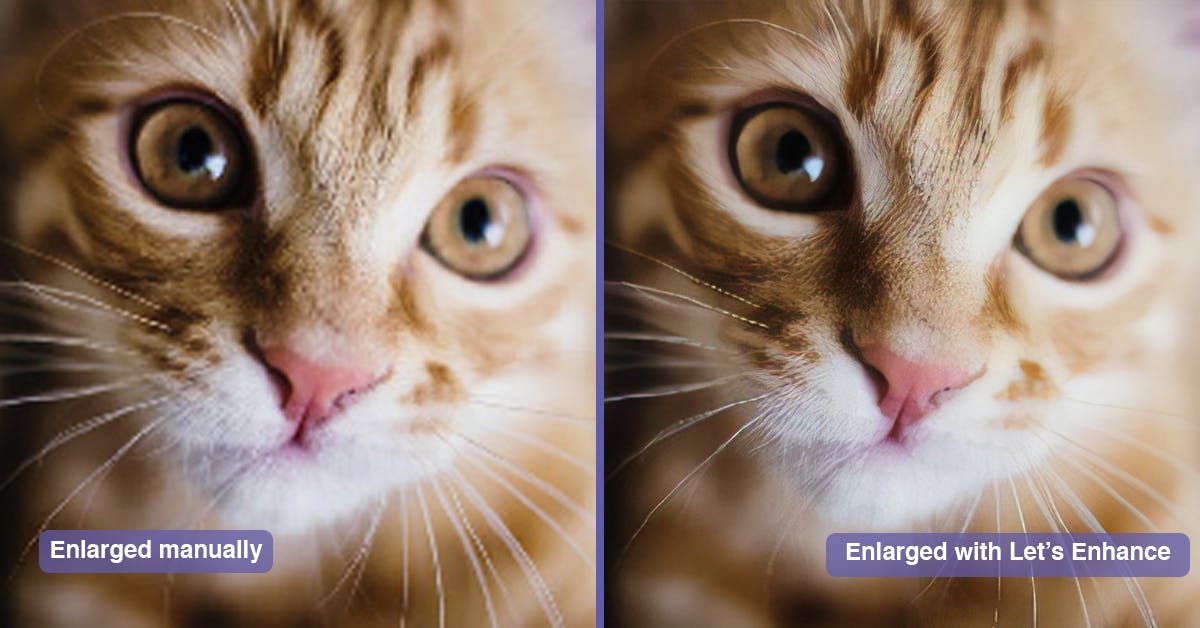

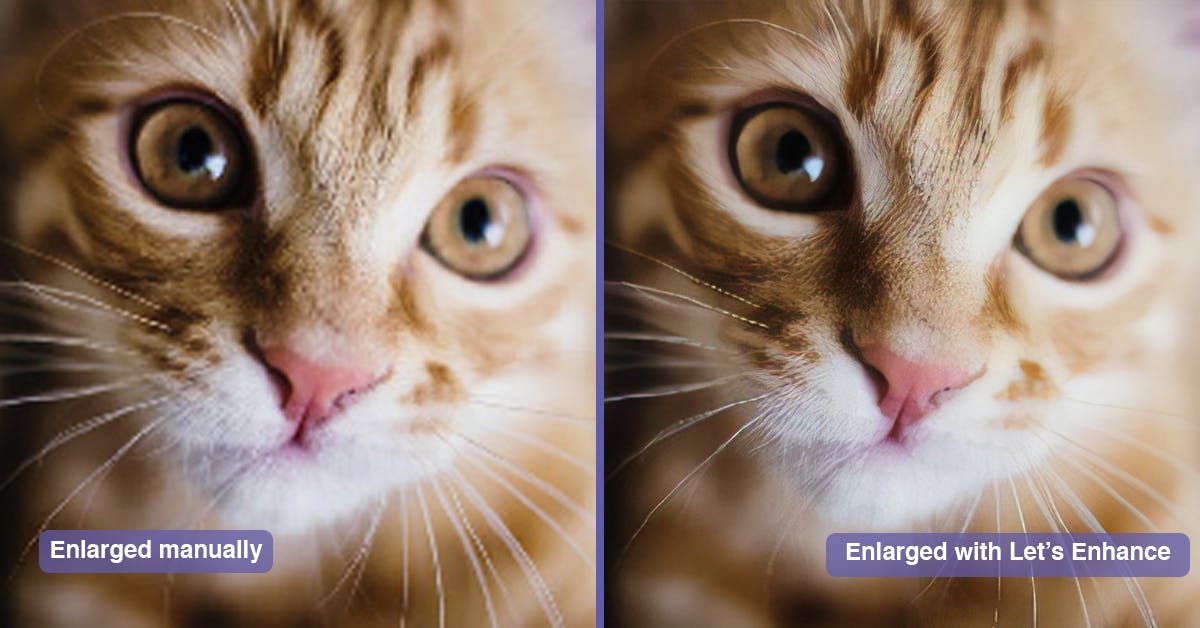

After you had an interesting project in the field of machine learning - Let's Enhance, a service for improving the quality of photos. Tell us how you managed to teach the machine to restore compressed images?

We took images in good quality, got compressed and noisy of them, and then trained the neural network so that it learned how to restore the image in good quality. After training on such pairs, the neural network was able to independently improve the quality of images: remove pixelation, compression artifacts and other defects.

Photo Source

What was the most difficult thing about this project?

I think to support this system in produstion. When TechCrunch articles came out, Mashable was about our service, a lot of traffic came to us, and we processed about 200 thousand images a day. We had to work on ensuring that our servers withstood all this.

Last year released Let's Enhance 2.0. What was new in it?

We changed the training method, the loss function, the network architecture. If you strive to improve the quality of the product, these aspects can be changed infinitely.

What is the service audience today? Did you manage to monetize it?

I left Let's Enhance.io almost a year ago. After that, in July 2018, the startup went into the Techstars London program and received investments from the accelerator. The project monetized almost immediately and went into profit.

What AI developments did you take part in? Which of them are particularly proud?

My colleagues and I had a Titanovo Nutrigenetic project that analyzed DNA. Using machine learning, we learned to predict physiological markers and predispositions on the basis of full-genome analysis and chip genotyping. Collected data from scientific articles, statistics, formed datasets, taught models, based on them formulated recommendations for people and predictions about their health in the future - all this was built on fuzzy logic, different classifiers. Now there are many projects with the use of AI and ML in nutrigenetics, in sportgenetics. But we were among the first. Materials on these developments can be found in my blog on Medium.

I experimented with dynamic optimization of landing pages for marketing teams, shifting the theory of multi-armed gangsters to a neural network. Made machine learning scripts to optimize traffic purchases. And the work with images that I am doing now is also related to artificial intelligence. And I, too, am proud of her.

You are currently working on image editing services. What are the functions of a neural network here?

First of all, pattern recognition. The largest role of AI plays in the program Photolemur from Skylum Software: thanks to machine vision, this program can improve photos in one click.

How does this happen?

We upload a photo, and the service automatically improves it - you just need to save it. No popup windows, sliders and modes.

To do this, first of all, the program must recognize the type of image: portrait, landscape, cityscape. And the people in the picture, buildings and other objects, time of day, time of year (if the photo was taken on the street). Then you need to produce a segmentation of the image, select the appropriate zones. In the portrait, for example, separate parts of the face stand out: eyes, ears, nostrils, and others.

Then all this needs to be improved, and here artificial intelligence is no longer applied. The snapshot is improved by the wired algorithms, following the way photographers process such snapshots. For example, smooth the skin, increase the contrast for the sky, make the whites of the eyes lighter. But this is all secondary. And first of all you need to segment the image.

What databases and algorithms were used to train the system?

Speaking about the framework for developing neural networks, I prefer MXNet - quite an exotic choice for today, but gradually gaining popularity. The main advantage is the speed of calculations and the hybrid mode of switching between the imperative and symbolic modes of programming neural networks, this is convenient. But the names of datasets and algorithms I can not tell you, this is a commercial secret of the project.

What difficulties did you encounter when creating an intelligent graphic editor?

The technology has not yet matured; neural networks often make mistakes: in pattern recognition, and especially in segmentation, when it comes to complex images. Therefore, it was necessary to analyze the results and edit using traditional methods and standard algorithms. While it is impossible to build a system that would do everything from beginning to end exclusively with the help of a neural network. And, of course, when working on the end user device, the complexity of the network must be taken into account - computing on the CPU is rather slow, not everyone has CUDA-enabled GPUs, and OpenCL is not well supported.

What image is taken for the perfect option?

Our Quality Assurance team is working on this, which pays special attention to the final image quality. Since both our photo editors and cameras are constantly changing, it is impossible to fix some ideal variant, because it is constantly changing.

What is the audience for these products? Are you able to "poach" Adobe users?

Our flagship product, Luminar, is a new alternative to Adobe Lightroom. Due to the small and cohesive team, it is possible to introduce new technologies into the product much faster and constantly attract new users. Luminar is great for both beginners and professional photographers, as it combines one-click editing tools and a full range of functions for more detailed photo work.

Luminar interface. In the articlethere is a comparison of work in Luminar and Photoshop

But Photolemur is a unique and rather young product, it is just over a year old. His target audience is people who do not want to understand all the sliders and Photoshop buttons, but simply want their vacation photos to become beautiful. We managed to find our audience: sales go and the product is actively used.

You are also involved in projects that develop neural networks to optimize landing pages. Tell us more about this work.

This is a classic task when you need to conduct A / B testing of a landing page. If you generate separate pages for all possible elementary variants, there may be millions of versions. And to get a statistically significant result with the classical approach, you need to perform a pair of A / B testing of all these options. This requires an incredible amount of traffic. Such large-scale testing can afford companies only with very large resources, Amazon, for example.

And if a small company wants to test a lot of options, then you can do A / B testing using neural networks that work on reinforcement training. Then, in essence, filling the page with elements is handed over to the neural network and assigning the page conversion increase to it as a task. In this version of the work, the neural network is spinning on the server and is trained in parallel with the traffic flow. And in the end, he finds the optimal landing variant much faster.

If you make it a little more difficult, the AI will learn to show versions of landing pages that are personalized for a particular user. Because we also provide additional information: browser, time of day, operating system. Accordingly, the user sees the page that the neural network demonstrates to him, and the traffic with this method needs to be attracted much less. Of course, the perfect hit is not guaranteed, but the page will give better results much faster.

Now Alexander is in charge of AI Lab at Skylum Software, where he is engaged in graphic editors based on neural networks. We asked which of the created services he is particularly proud of and why use neural networks in A / B testing.

Alexander, tell us about your scientific work at the University of New York at Stony Brook. What projects did you do there and were they related to artificial intelligence or machine learning?

No, they were not directly related to AI and machine learning. I was researching new materials based on graphene. We developed a new material for 3D printing, which would conduct electricity. Then, using a two-nozzle printer, it would be possible to print both the case and the electronic board layout at one time. The material we eventually created, and now it is sold.

After you had an interesting project in the field of machine learning - Let's Enhance, a service for improving the quality of photos. Tell us how you managed to teach the machine to restore compressed images?

We took images in good quality, got compressed and noisy of them, and then trained the neural network so that it learned how to restore the image in good quality. After training on such pairs, the neural network was able to independently improve the quality of images: remove pixelation, compression artifacts and other defects.

Photo Source

What was the most difficult thing about this project?

I think to support this system in produstion. When TechCrunch articles came out, Mashable was about our service, a lot of traffic came to us, and we processed about 200 thousand images a day. We had to work on ensuring that our servers withstood all this.

Last year released Let's Enhance 2.0. What was new in it?

We changed the training method, the loss function, the network architecture. If you strive to improve the quality of the product, these aspects can be changed infinitely.

What is the service audience today? Did you manage to monetize it?

I left Let's Enhance.io almost a year ago. After that, in July 2018, the startup went into the Techstars London program and received investments from the accelerator. The project monetized almost immediately and went into profit.

What AI developments did you take part in? Which of them are particularly proud?

My colleagues and I had a Titanovo Nutrigenetic project that analyzed DNA. Using machine learning, we learned to predict physiological markers and predispositions on the basis of full-genome analysis and chip genotyping. Collected data from scientific articles, statistics, formed datasets, taught models, based on them formulated recommendations for people and predictions about their health in the future - all this was built on fuzzy logic, different classifiers. Now there are many projects with the use of AI and ML in nutrigenetics, in sportgenetics. But we were among the first. Materials on these developments can be found in my blog on Medium.

I experimented with dynamic optimization of landing pages for marketing teams, shifting the theory of multi-armed gangsters to a neural network. Made machine learning scripts to optimize traffic purchases. And the work with images that I am doing now is also related to artificial intelligence. And I, too, am proud of her.

You are currently working on image editing services. What are the functions of a neural network here?

First of all, pattern recognition. The largest role of AI plays in the program Photolemur from Skylum Software: thanks to machine vision, this program can improve photos in one click.

How does this happen?

We upload a photo, and the service automatically improves it - you just need to save it. No popup windows, sliders and modes.

To do this, first of all, the program must recognize the type of image: portrait, landscape, cityscape. And the people in the picture, buildings and other objects, time of day, time of year (if the photo was taken on the street). Then you need to produce a segmentation of the image, select the appropriate zones. In the portrait, for example, separate parts of the face stand out: eyes, ears, nostrils, and others.

Then all this needs to be improved, and here artificial intelligence is no longer applied. The snapshot is improved by the wired algorithms, following the way photographers process such snapshots. For example, smooth the skin, increase the contrast for the sky, make the whites of the eyes lighter. But this is all secondary. And first of all you need to segment the image.

What databases and algorithms were used to train the system?

Speaking about the framework for developing neural networks, I prefer MXNet - quite an exotic choice for today, but gradually gaining popularity. The main advantage is the speed of calculations and the hybrid mode of switching between the imperative and symbolic modes of programming neural networks, this is convenient. But the names of datasets and algorithms I can not tell you, this is a commercial secret of the project.

What difficulties did you encounter when creating an intelligent graphic editor?

The technology has not yet matured; neural networks often make mistakes: in pattern recognition, and especially in segmentation, when it comes to complex images. Therefore, it was necessary to analyze the results and edit using traditional methods and standard algorithms. While it is impossible to build a system that would do everything from beginning to end exclusively with the help of a neural network. And, of course, when working on the end user device, the complexity of the network must be taken into account - computing on the CPU is rather slow, not everyone has CUDA-enabled GPUs, and OpenCL is not well supported.

What image is taken for the perfect option?

Our Quality Assurance team is working on this, which pays special attention to the final image quality. Since both our photo editors and cameras are constantly changing, it is impossible to fix some ideal variant, because it is constantly changing.

What is the audience for these products? Are you able to "poach" Adobe users?

Our flagship product, Luminar, is a new alternative to Adobe Lightroom. Due to the small and cohesive team, it is possible to introduce new technologies into the product much faster and constantly attract new users. Luminar is great for both beginners and professional photographers, as it combines one-click editing tools and a full range of functions for more detailed photo work.

Luminar interface. In the articlethere is a comparison of work in Luminar and Photoshop

But Photolemur is a unique and rather young product, it is just over a year old. His target audience is people who do not want to understand all the sliders and Photoshop buttons, but simply want their vacation photos to become beautiful. We managed to find our audience: sales go and the product is actively used.

You are also involved in projects that develop neural networks to optimize landing pages. Tell us more about this work.

This is a classic task when you need to conduct A / B testing of a landing page. If you generate separate pages for all possible elementary variants, there may be millions of versions. And to get a statistically significant result with the classical approach, you need to perform a pair of A / B testing of all these options. This requires an incredible amount of traffic. Such large-scale testing can afford companies only with very large resources, Amazon, for example.

And if a small company wants to test a lot of options, then you can do A / B testing using neural networks that work on reinforcement training. Then, in essence, filling the page with elements is handed over to the neural network and assigning the page conversion increase to it as a task. In this version of the work, the neural network is spinning on the server and is trained in parallel with the traffic flow. And in the end, he finds the optimal landing variant much faster.

If you make it a little more difficult, the AI will learn to show versions of landing pages that are personalized for a particular user. Because we also provide additional information: browser, time of day, operating system. Accordingly, the user sees the page that the neural network demonstrates to him, and the traffic with this method needs to be attracted much less. Of course, the perfect hit is not guaranteed, but the page will give better results much faster.

Alexander will talk about using neural networks for visual content and optimizing landing pages on November 14 at AI Conference Kyiv . The list of other speakers and the program of the event, see the official site .