Hands-on with Chinese hardware: just how cheap and cheerful is it?

There is no need to explain how sanctions have changed the Russian IT market, because they have not changed it yet. What has changed, at least, is the mindset: the sanctions have sparked interest in alternative brands, above all Chinese ones.

We decided to take a closer look at what Chinese industry has achieved, test its products on typical computing workloads, and at the same time see how they behave under man-made failures and other acts of vandalism. Below is the story of how we tested Inspur and Huawei products.

Everyone is talking about vendor replacement. In words it is quick and easy. In practice, however, replacing the familiar, much-loved equipment of Western manufacturers with little-known alternatives is a difficult and risky step. Until recently, the label "Chinese" meant second-rate. And although many prestigious products are assembled in China today (where, for example, was your iPhone built?), stereotypes are still hard to shake.

The Huawei brand has been on the market for a long time, primarily in telecommunications equipment. Since the late 1980s the company has grown by successfully producing inexpensive competitors to Western equipment, above all Cisco's, and more than once it has been involved in scandals over unscrupulous copying of technology. Huawei entered the server market about 8 years ago and has since built up a rich portfolio of hardware and software solutions.

Inspur, in turn, has only recently become known outside China, but at home this partly state-owned company has long been developing the local IT market. In the 1960s and 70s it produced transistors, which were also used in Chinese satellites; in the 1980s and 90s it was the first in China to assemble a PC and a server. Inspur in its current form, as a maker of server hardware and related solutions, was founded in 2000; today the company ranks 5th in the world by server sales (mainly thanks to the Chinese market).

We tested the Chinese equipment partly in our own laboratory and partly at Huawei's site. But before describing the hardware and what we did with it, I want to mention another goal we were pursuing at the same time. It is a larger task: gradually migrating information systems from proprietary RISC/UNIX platforms to the mature, well-established x86 platform.

The share of commercial UNIX in the server market is declining worldwide. For over 40 years, RISC/UNIX systems dominated the enterprise segment because they work reliably even under massive load and scale almost linearly as processors and memory are added. But progress does not stand still: the x86 architecture has begun to catch up with mainframe-class machines in capability, and Linux keeps getting better at managing "big hardware" efficiently. Of course, there are still tasks where RISC has no equal, for example when 1,500 database users all hammer the same data at once, which is typical of billing or bank card processing systems. Nevertheless, many tasks, including databases, can already be moved successfully to the cheaper and simpler x86 architecture.

So, about the testing. Of course, we made no discovery by putting Linux on Intel hardware and running a database on it, and I am not angling for a MUZ-TV award here. Still, it was interesting to see how Chinese hardware, and the software installed on it, would cope with typical tasks: how a red dragon behaves in a chicken coop, so to speak. For the hardware, we set out to evaluate:

- reliability of the component base;

- stability of the built-in software;

- ease of setup and use.

Inspur

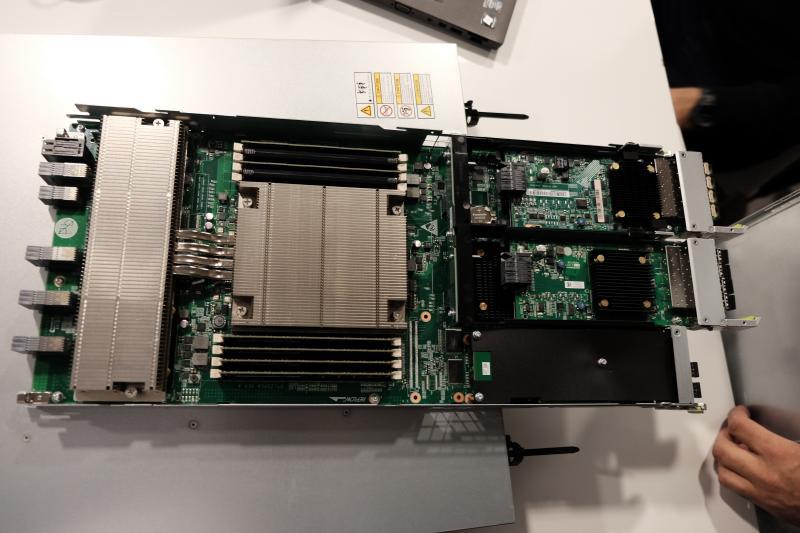

The first product of the great Chinese corporation that we got our hands on was the NX5440 blade server. It is the entry-level model of the blade line, which also includes the NX5840 and NX8840. The NX5440 occupies one half-height slot in the enclosure, while the NX5840 and NX8840 take two slots each. All three fit into the same I8000 chassis, which is what we had. Our chassis was equipped with a management module and an Ethernet module.

Inspur's x86 line includes NF-series rack servers, NP tower servers, NX blade servers and a storage server with high disk density. All of them are built on the same Intel C600 chipset and use Xeon E5 v2 processors. Inspur also has China's first, and so far only, large UNIX server, based on Itanium processors and a CC-NUMA architecture: the Inspur Tiansuo TSK1, scalable to 128 physical cores and running K-UX, a UNIX-like OS of Inspur's own design.

The manufacturer claims unprecedented scalability and adaptability, but viewed without bias, these products do not differ much in packaging and design from their counterparts from other vendors. Power supplies and fans are redundant, memory has error correction, and the network interfaces can be aggregated for fault tolerance. The servers are flexible in configuration: they really can be assembled from a large selection of components.

Our experience with the units that passed through our laboratory, however, showed that the stereotypes I mentioned are not so outdated after all. Our ordeal began almost as soon as we powered the thing on. First, a word about the vendor's documentation: it is mostly descriptive (such-and-such buttons on such-and-such screen), and the pictures in the user manual are screenshots of the Chinese-language interface. All in all, it turned out to be easier to figure things out ourselves without the manual.

During the week we tested this unit, we lost contact with the chassis management module (SMC) and the blade management module (BMC) three times, with no apparent pattern. The first time it happened, we called an Inspur engineer for help. Inspur's support is good in this respect, except that all the engineers in the Russian office are Chinese, probably because the office opened recently. They come on site straight away, bringing an interpreter.

The Chinese engineers quickly realized we were good guys and cheerfully repaired the blade enclosure by reseating the management module. We fixed all later losses of connectivity ourselves in the same way, by reseating or restarting something. That is the "floating" nature of the problem: it appears spontaneously and goes away after random actions.

The SMC interface is short on features. The most useful things it can do are power servers on and off and reboot their management modules. You cannot change a blade's BMC network settings from it: that can only be done from the web interface of the blade's own BMC, and if you do not know its current IP address, you have to look it up in the BIOS.

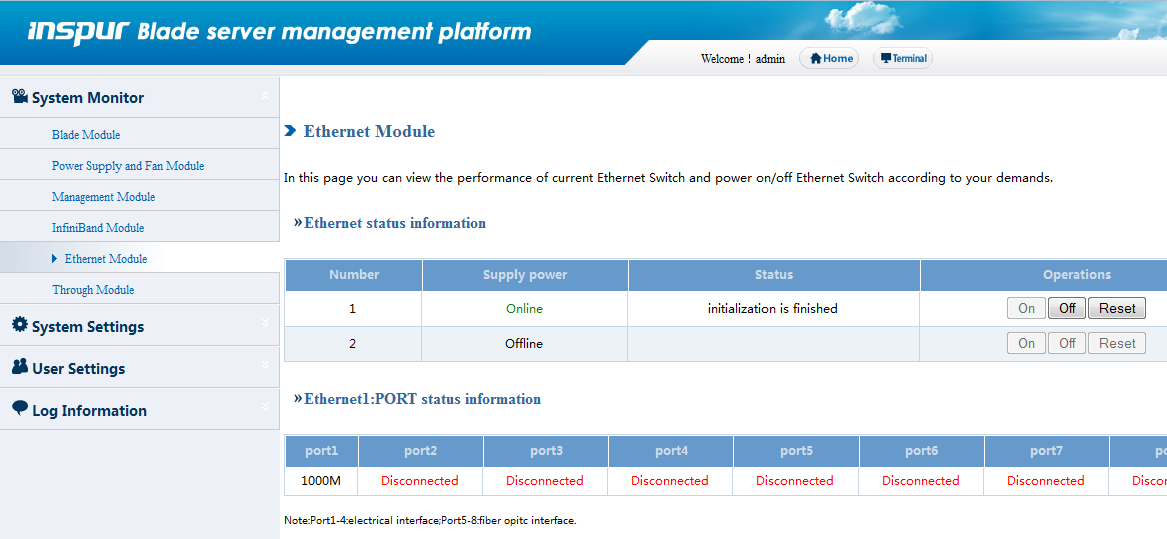

The Ethernet Module menu shows whether the module is installed and powered on, and lets you turn it off if desired. To top it all off, this module turned out to be a hub, not a switch. To be fair, this model is now outdated, and Inspur already ships more advanced modules.

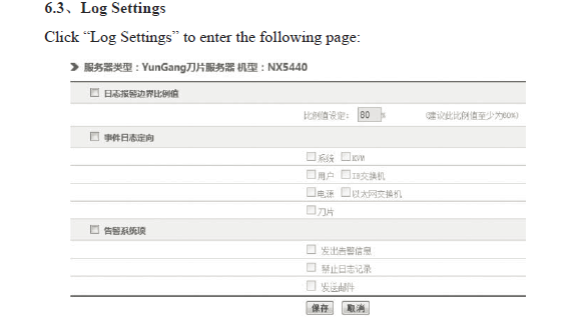

All options were enabled in the logging settings menu, but whenever we tried to view the logs, all we got was the message "Empty logs". In the user management menu, it turned out to be impossible to create an additional user with administrator rights, and a "Common User" can only navigate the menus and view settings, not change anything. The "Power Supply and Fan Module" menu shows voltages and fan speeds and lets you set the speed of each fan module manually. When controlling a blade remotely via KVM over IP, we often ran into various errors, which were resolved by reconnecting or, less often, by rebooting the BMC. When I tried to install Windows, I needed a driver for the RAID controller, which is supposed to ship on the Inspur driver CD.

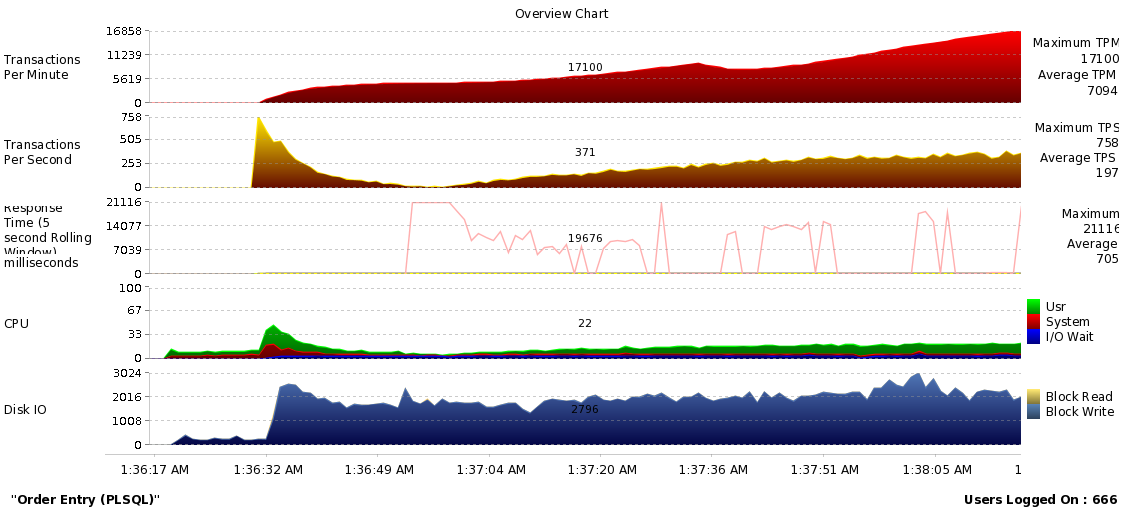

Once we had learned to handle this product and repair it when necessary, we installed RHEL 6.6 x86-64 on it and the Oracle 11.2.0.4 DBMS on top. For testing we used Swingbench 2.4, plus a Perl procedure computing square roots to heat up the CPU. Swingbench (http://dominicgiles.com/swingbench.html) is a small free application and a standard way to generate a synthetic database load and chart the results.
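The Perl heating procedure itself is not shown. As a rough illustration of the same idea, here is a minimal Python sketch (not the script that was actually used) that keeps CPU cores busy computing square roots for a fixed time. It uses separate processes because Python threads would be serialized by the GIL; the names `burn`, `heat_all_cores` and `BATCH` are hypothetical.

```python
import math
import multiprocessing as mp
import time
from typing import List, Optional

BATCH = 10_000  # square roots per batch, so the clock is checked only occasionally


def burn(seconds: float) -> int:
    """Compute square roots in a tight loop for `seconds`; return how many were computed."""
    deadline = time.monotonic() + seconds
    done = 0
    acc = 0.0
    while time.monotonic() < deadline:
        for i in range(BATCH):
            acc += math.sqrt(i)
        done += BATCH
    return done


def heat_all_cores(seconds: float, workers: Optional[int] = None) -> List[int]:
    """Run one burn() per worker process; returns the per-process counts."""
    workers = workers or mp.cpu_count()
    with mp.Pool(workers) as pool:
        return pool.map(burn, [seconds] * workers)
```

To heat a server for an hour, one would call `heat_all_cores(3600)` from inside an `if __name__ == "__main__":` guard (multiprocessing requires it on platforms that spawn rather than fork workers) and watch core temperatures with the platform's sensor tooling, such as lm-sensors on Linux.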

The server passed a day-long test. Processor core temperatures stayed at 55-60 °C with cooling air at about 20 °C. I deliberately won't dwell on performance figures (IOPS, TPS, MB/s), since they all depend on the input data and configuration. We were not trying to squeeze record numbers out of the server; the point of load testing was to check that it could cope with real-world workloads.

Overall, we concluded that the equipment we received for testing felt half-baked. In fairness, the loss of contact with the management modules may well have been peculiar to the specific unit we had. Otherwise, it is a familiar x86 server with two Intel Xeon E5-2690 v2 processors and 128 GB of DDR3. And Chinese manufacturers are improving their products so fast that in a few months we may see a new model free of all these teething problems. That is actually why we recently became an official Inspur partner: we are watching how the vendor develops its line and works to sell it in Russia. Given the sanctions, one more alternative supplier in our portfolio won't hurt.

Huawei

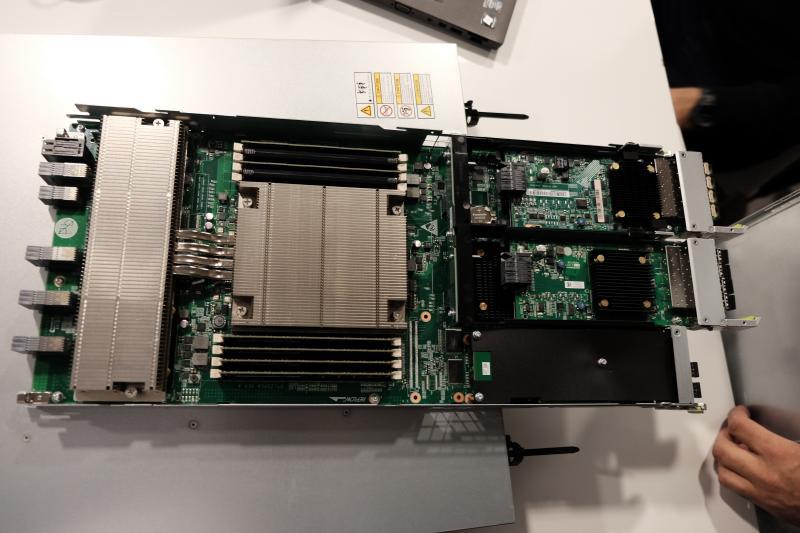

We had at our disposal an E6000H chassis with four BH620 V2 blades installed (configuration: 2x Intel Xeon E5-2407 2.20 GHz, 16 GB RAM, 2x Gigabit Ethernet, 2x Emulex FC3532 HBA).

Photo of the Huawei chassis in our laboratory. Rear view: the shot simply came out better from that angle.

This hardware belongs to the previous generation; the current line is v3. However, it was our own lab equipment, so we had no qualms about abusing it. We installed RHEL and Oracle Linux on it, with the same Oracle 11g DBMS on top, and generated load with Swingbench and with our own scripts emulating various load profiles: large write volumes, large read volumes, and heavy queries with heavy sorting. For those interested, the sequence of our stress-testing steps is listed at the end of the article.

While the systems were busy processing transactions, we would jump out from around the corner and apply various violent methods to them: pulling drives and network cables on the fly and cutting the power with a knife switch. We were mainly interested in whether the OS would boot correctly and whether the DBMS could recover and access its data once work resumed. On classic enterprise systems this happens in the vast majority of cases. But how would the Chinese goods behave?

Frankly, everything worked well, and in the end we had nothing to complain about. The hardware was stable and pulled no surprising stunts. The OS and the database behaved normally, going through all the necessary steps to recover from the abuse. We did not manage to corrupt the database.
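The check behind "the DBMS recovered" can be made concrete: every transaction acknowledged as committed before the failure must still be there afterwards, and the database must pass its own consistency check. Below is a minimal Python sketch of that bookkeeping. It is an illustration under loud assumptions, not the procedure used in these tests: SQLite stands in for Oracle, and the writer process is killed instead of the power being cut. A process kill leaves the OS page cache intact, so it is a far weaker test than the knife switch. The function `crash_and_verify` and the `ledger` table are hypothetical.

```python
import sqlite3
import subprocess
import sys

# Child process (hypothetical writer): commits numbered rows one by one and
# acknowledges each id on stdout only after its commit has returned.
WRITER = r"""
import sqlite3, sys
con = sqlite3.connect(sys.argv[1], isolation_level=None)  # autocommit: one txn per INSERT
con.execute("PRAGMA synchronous=FULL")
con.execute("CREATE TABLE IF NOT EXISTS ledger (id INTEGER PRIMARY KEY, payload TEXT)")
i = 0
while True:
    i += 1
    con.execute("INSERT INTO ledger VALUES (?, ?)", (i, "x" * 100))
    print(i, flush=True)
"""


def crash_and_verify(path: str, acks_before_kill: int = 100):
    """Kill the writer mid-stream, reopen the database, and report what survived."""
    proc = subprocess.Popen([sys.executable, "-c", WRITER, path],
                            stdout=subprocess.PIPE, text=True)
    last_ack = 0
    for line in proc.stdout:
        last_ack = int(line)
        if last_ack >= acks_before_kill:
            break
    proc.kill()  # abrupt termination: the child gets no chance to clean up
    proc.wait()
    proc.stdout.close()

    con = sqlite3.connect(path)
    # The first read rolls back any hot journal left behind by the killed writer.
    status = con.execute("PRAGMA integrity_check").fetchone()[0]
    count, max_id = con.execute("SELECT COUNT(*), MAX(id) FROM ledger").fetchone()
    con.close()
    return status, last_ack, count, max_id
```

A healthy run reports `"ok"` from the integrity check, no gaps in the ids (`count == max_id`), and `max_id >= last_ack`: rows committed after the last acknowledgement may survive, but no acknowledged row may be lost.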

We also got hands-on with the new generation of hardware. Huawei kindly gave us that opportunity at its demo site, which even looks futuristic in places.

Here it is: the Huawei demo platform.

I must say that Huawei's portfolio covers a whole range of solutions, from servers and storage to virtualization software. However, despite our ardent desire to get acquainted with FusionCompute, a proprietary virtualization environment built on Xen, Huawei's staff were unable to launch it during our stay. It is positioned as a replacement for VMware products, but, as you can see, it politely declined to work. Hardware, on the other hand, is something Huawei has definitely learned to build, so I will spend the rest of this section on our impressions of it.

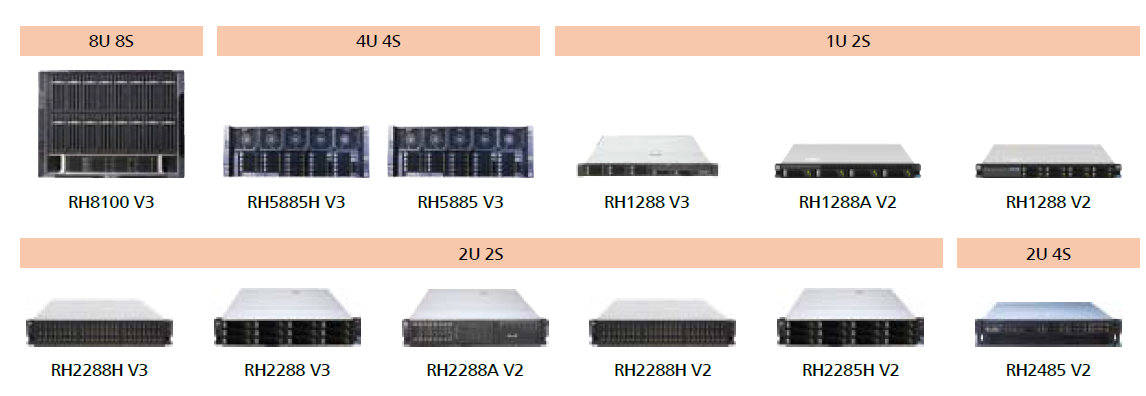

The third-generation server lines include rack, blade and high-density options, offering plenty of competitive alternatives for medium and large businesses used to building infrastructure on machines such as the HP DL360e and DL360p, HP DL380 Gen9, IBM x3650 and x3850, Dell R730 and R930, etc. They use proven technologies and conventional modern hardware.

Huawei Rack Server Line

There are no entry-level Intel Xeon E3 processors, nor any AMD processors. The new v3 generation supports DDR4, and support for 64 GB memory modules has been announced for when they become available. The servers' network interfaces are replaceable, and the LOM card also includes a management port. SAS RAID controllers run at 6 Gb/s in the V2 generation and 12 Gb/s in V3. In both cases these are LSI controllers with the manufacturer's stock firmware rather than a Huawei-modified one. A supercapacitor backs the cache instead of a battery.

In general, the servers use standard components, duplicated for fault tolerance. Power supplies and fans are hot-swappable, just like the grown-ups'.

Huawei servers can operate over a wide temperature range, up to 45 °C, while competitors rarely go above 35 °C. This was achieved through a more thoughtful motherboard design and placement of the memory modules. We have no complaints about build quality: the whole design and the mounting of the components is solid, with no play, and replacing components is straightforward.

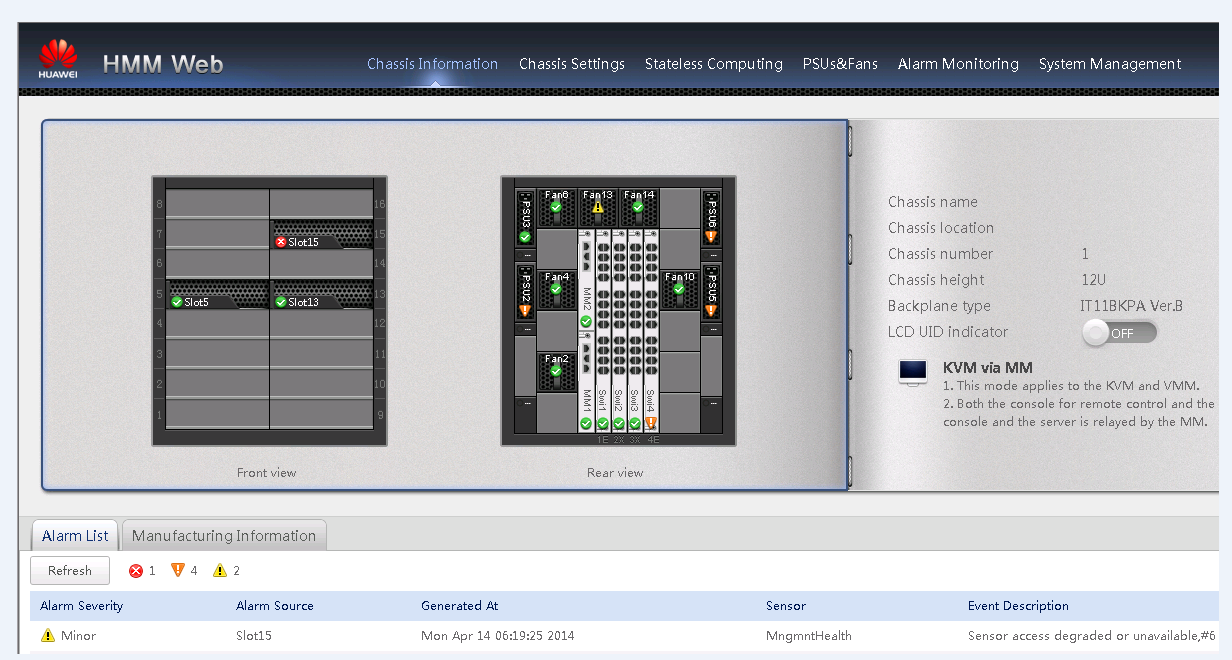

Huawei blade servers go into the new 12U E9000 enclosure, so a standard 42U rack holds only three of them. Each enclosure takes 16 blades, all installed horizontally. Apart from the form factor, the blades are no different from the rack servers.

Huawei Blade Server Line

There are 4-socket configurations in a full-size blade that occupies two enclosure slots, as well as standard 2-socket configurations whose size depends on the number of disks. Blades connect to the LAN and SAN through the many switch modules available in the line. Huawei's general idea is to make everything converged.

All Huawei servers from V2 onward officially support the following operating systems: Windows Server, RHEL, SLES, Solaris, CentOS, Citrix XenServer, VMware ESXi and FusionSphere. To avoid driver issues, the OS must be installed using the FusionServer Tools Service CD, a bootable image that first copies all the necessary drivers to disk. The Service CD also lets you configure a RAID array on the server's disks and create a logical volume of the required size. Overall, everything worked smoothly and without complaint. Drivers for all officially supported operating systems are updated regularly and available for download from the portal. The E9000 enclosure manager is called HMM. Since the first version of HMM, only the graphical interface has changed; the rest of the functionality is, of course, still far from ideal.

iMana and iBMC are the web management interfaces of the second- and third-generation servers respectively. iBMC is a redesigned continuation of iMana, and the remaining flaws came along with it.

We spent several days at the demo center and, besides servers, also studied storage systems and software. We pulled cables and disks on the fly there too, but of course nobody let us repeat the whole set of inhumane experiments on someone else's equipment. On the fourth day, for example, we wanted to test the equipment's moisture resistance, but for some reason the vendor's staff would not let us unroll the fire hose.

Joking aside, on the whole Huawei's products left a favorable impression despite some rough edges. The management interfaces still have shortcomings, the English documentation leaves much to be desired, and there is obviously not much information to be found on forums yet. Otherwise, these are workable products, built according to all the rules of modern server hardware.

Laboratory Chronicles

Here are the torture methods we applied to the servers running Oracle 11g, in the order of our stress-testing steps:

- Swingbench with "heavy" settings. The goal was to test the system under a varied load loosely resembling users working in a spherical application in a vacuum. We installed and configured Swingbench, made the parameters more demanding and started it. If nothing fell over, we gradually increased the load, and so on until performance or resources were exhausted.

- Large write volumes. The goal was to make sure that the server-OS-DB stack stays healthy under overwhelming write volumes. To do this, we wrote a script that inserts a large amount of data into a table (something like insert into test_table01 select * from dba_objects;). We ran this script simultaneously in a large number of threads, each of them frantically writing to its own table.

- Large disk read volumes. The goal was to make sure that the server-OS-DB stack stays healthy when disk reads go off the charts (bypassing the cache). To do this, we set a small buffer cache size (the db_cache_size parameter) and created a huge table without indexes. Then we selected from it by random key values, over and over, in many threads.

- Heavy queries with heavy sorts. We wrote the most convoluted queries we could against huge tables (using full joins, for example, or just complex non-indexed queries) and ran many of them in parallel. That alone is a serious load, and we were curious how the system would cope.

- Concurrency, latches, mutexes. Our goal was to see how the database behaves under heavy contention for shared resources. To do this, we had many threads modify (a) a single row of data and (b) a large, but always the same, set of rows.

Instead of conclusions

Of all the things an engineer can be tasked with at work, testing new equipment is probably one of the most exciting. But here we were dealing not just with a new model from a well-known brand, but with fundamentally new players on the market, which may soon take their place in our computing fleets. The x86 platform looks familiar, but, figuratively speaking, the Chinese version has to be eaten with hot sauce and chopsticks, and that takes skill. We have taken the first step in getting to know Inspur and Huawei servers, but we are not going to stop there. With a number of companies that have already approached us about vendor substitution, we are starting a series of pilot projects in which real workloads will be moved to Chinese hardware. In general, if you are interested,